Abstract

The determination of the fundamental parameters of the Standard Model (and its extensions) is often limited by the presence of statistical and theoretical uncertainties. We present several models for the latter uncertainties (random, nuisance, external) in the frequentist framework, and we derive the corresponding p values. In the case of the nuisance approach where theoretical uncertainties are modeled as biases, we highlight the important, but arbitrary, issue of the range of variation chosen for the bias parameters. We introduce the concept of adaptive p value, which is obtained by adjusting the range of variation for the bias according to the significance considered, and which allows us to tackle metrology and exclusion tests with a single and well-defined unified tool, which exhibits interesting frequentist properties. We discuss how the determination of fundamental parameters is impacted by the model chosen for theoretical uncertainties, illustrating several issues with examples from quark flavor physics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In particle physics, an important part of the data analysis is devoted to the interpretation of the data with respect to the Standard Model (SM) or some of its extensions, with the aim of comparing different alternative models or determining the fundamental parameters of a given underlying theory [1,2,3]. In this activity, the role played by uncertainties is essential, since they constitute the limit for the accurate determination of these parameters, and they can prevent from reaching a definite conclusion when comparing several alternative models. In some cases, these uncertainties are from a statistical origin: they are related to the intrinsic variability of the phenomena observed, they decrease as the sample size increases and they can be modeled using random variables. A large part of the experimental uncertainties belong to this first category. However, another kind of uncertainties occurs when one wants to describe inherent limitations of the analysis process, for instance, uncertainties in the calibration or limits of the models used in the analysis. These uncertainties are very often encountered in theoretical computations, for instance when assessing the size of higher orders in perturbation theory or the validity of extrapolation formulas. Such uncertainties are often called “systematics”, but they should be distinguished from less dangerous sources of systematic uncertainties, usually of experimental origin, that roughly scale with the size of the statistical sample and may be reasonably modeled by random variables [4]. In the following we will thus call them “theoretical” uncertainties: by construction, they lack both an unambiguous definition (leading to various recipes to determine these uncertainties) and a clear interpretation (beyond the fact that they are not from a statistical origin). It is thus a complicated issue to incorporate their effect properly, even in simple situations often encountered in particle physics [5,6,7].Footnote 1

The relative importance of statistical and theoretical uncertainties might be different depending on the problem considered, and the progress made both by experimentalists and theorists. For instance, statistical uncertainties are the main issue in the analysis of electroweak precision observables [11, 12]. On the other hand, in the field of quark flavor physics, theoretical uncertainties play a very important role. Thanks to the B-factories and LHCb, many hadronic processes have been very accurately measured [13, 14], which can provide stringent constraints on the Cabibbo–Kobayashi–Maskawa matrix (in the Standard Model) [15,16,17], and on the scale and structure of New Physics (in SM extensions) [18,19,20,21]. However, the translation between hadronic processes and quark-level transitions requires information on hadronization from strong interaction, encoded in decay constants, form factors, bag parameters... The latter are determined through lattice QCD simulations. The remarkable progress in computing power and in algorithms over the last 20 years has led to a decrease of statistical uncertainties and a dominance of purely theoretical uncertainties (chiral and heavy-quark extrapolations, scale chosen to set the lattice spacing, finite-volume effects, continuum limit...). As an illustration, the determination of the Wolfenstein parameters of the CKM matrix involves many constraints which are now limited by theoretical uncertainties (neutral-meson mixing, leptonic and semileptonic decays, ...) [22].

The purpose of this note is to discuss theoretical uncertainties in more detail in the context of particle physics phenomenology, comparing different models not only from a statistical point of view, but also in relation with the problems encountered in phenomenological analyses where they play a significant role. In Sect. 2, we summarize fundamental notions of statistics used in particle physics, in particular p values and test statistics. In Sect. 3, we list properties that we seek in a good approach for theoretical uncertainties. In Sect. 4, we propose several approaches and in Sect. 5, we compare their properties in the most simple one-dimensional case. In Sect. 6, we consider multi-dimensional cases (propagation of theoretical uncertainties, average of several measurements, fits and pulls), which we illustrate using flavor physics examples related to the determination of the CKM matrix in Sect. 7, before concluding. An appendix is devoted to several issues connected with the treatment of correlations.

2 Statistics concepts for particle physics

We start by briefly recalling frequentist concepts used in particle physics, highlighting the role played by p values in hypothesis testing and how they can be used to define confidence intervals.

2.1 p values

2.1.1 Data fitting and data reduction

First, we would like to illustrate the concepts of data fitting and data reduction in particle physics, starting with a specific example, namely the observation of the time-dependent CP asymmetry in the decay channel \(B^0(t)\) \(\rightarrow J/\psi K_S\) by the BaBar, Belle and LHCb experiments [23,24,25]. Each experiment collects a sample of observed decay times \({t_i}\) corresponding to the B-meson events, where this sample is theoretically known to follow a PDF f. The PDF is parameterized in terms of a few physics parameters, among which we assume the ones of interest are the direct and mixing-induced C and S CP asymmetries. The functional form of this PDF is dictated on very general grounds by the CPT invariance and the formalism of two-state mixing (see, e.g., [26]), and is independent of the particular underlying phenomenological model (e.g. the Standard Model of particle physics). In practice, however, detector effects are required to be modeled by additional parameters that modify the shape of the PDF. We denote by \(\theta \) the set of parameters \(\theta =(C,S,\ldots )\) that are needed to specify the PDF completely. The likelihood for the sample \(\{t_i\}\) is defined by

and can be used as a test statistic to infer constraints on the parameters \(\theta \), and/or construct estimators for them, as will be discussed in more detail below. The combination of different samples/experiments can be done simply by multiplication of the corresponding likelihoods. On the other hand one can choose to work directly in the framework of a specific phenomenological model, by replacing in \(\theta \) the quantities that are predicted by the model in terms of more fundamental parameters: for example in the Standard Model, and neglecting the “penguin” contributions, one has the famous relations \(C=0\), \(S=\sin 2\beta \) where \(\beta \) is one of the angles of the Unitarity Triangle and can be further expressed in terms of the Cabibbo–Kobayashi–Maskawa couplings.

The latter choice of expressing the experimental likelihood in terms of model-dependent parameters such as \(\beta \) has, however, one technical drawback: the full statistical analysis has to be performed for each model one wants to investigate, e.g., the Standard Model, the Minimal Supersymmetric Standard Model, GUT models, ... In addition, building a statistical analysis directly on the initial likelihood requires one to deal with a very large parameter space, depending on the parameters in \(\theta \) that are needed to describe the detector response. One common solution to these technical difficulties is a two-step approach. In the first step, the data are reduced to a set of model- and detector-independentFootnote 2 random variables that contains the same information as the original likelihood (to a good approximation): in our example the likelihood-based estimators \(\hat{C}\) and \(\hat{S}\) of the parameters C and S can play the role of such variables (estimators are functions of the data and thus are random variables). In a second step, one can work in a particular model, e.g., in the Standard Model, to use \(\hat{C}\) and \(\hat{S}\) as inputs to a statistical analysis of the parameter \(\beta \). This two-step procedure gives the same result as if the analysis were done in a single step through the expression of the original likelihood in terms of \(\beta \). This technique is usually chosen if the PDF g of the estimators \(\hat{C}\) and \(\hat{S}\) can be parameterized in a simple way: for example, if the sample size is sufficiently large, then the PDF can often be modeled by a multivariate normal distribution, where the covariance matrix is approximately independent of the mean vector.

Let us now extend the above discussion to a more general case. A sample of random events is \(\{E_i,i=1\ldots n\}\), where each event corresponds to a set of directly measurable quantities (particle energies and momenta, interaction vertices, decay times...). The distribution of these events is described by a PDF, the functional form f of which is supposed to be known. In addition to the event value E, the PDF value depends on some fixed parameters \({\theta }\), hence the notation \(f(E;\theta )\). The likelihood for the sample \(\{E_i\}\) is defined by \( \mathcal L_{\{E_i\}}(\theta ) = \prod _{i=1}^n f(E_i;\theta ). \) We want to interpret the event observation in a given phenomenological scenario that predicts at least some of the parameters \(\theta \) describing the PDF in terms of a set of more fundamental parameters \(\chi \).

To this aim we first reduce the event observation to a set of model- and detector-independent random variables X together with a PDF \(g(X;\chi )\), in such a way that the information that one can get on \(\chi \) from g is equivalent to the information one can get from f, once \(\theta \) is expressed in terms of \(\chi \) consistently with the phenomenological model of interest. Technically, it amounts to identifying a minimal set of variables x depending on \(\theta \) that are independent of both the experimental context and the phenomenological model. One performs an analysis on the sample of events \({E_i}\) to derive estimators \(\hat{x}\) for x. The distribution of these estimators can be described in terms of a PDF that is written in the \(\chi \) parametrization as \(g(X;\chi )\), where we have replaced \(\hat{x}\) by the notation X, to stress that in the following X will be considered as a new random variable, setting aside how it has been constructed from the original data \(\{E_i\}\). Obviously, in our previous example for \(B^0(t)\) \(\rightarrow J/\psi K_S\), \(\{t_i\}\) correspond to \(\{E_i\}\), C ans S to x, and \(\beta \) to \(\chi \).

2.1.2 Model fitting

From now on we work with one or more observable(s) x, with associated random variable X, and an associated PDF \(g(X;\chi )\) depending on purely theoretical parameters \(\chi \). With a slight abuse of notation we include in the symbol g not only the functional form, but also all the needed parameters that are kept fixed and independent of \(\chi \). In particular for a one-dimensional Gaussian PDF we have

where X is a potential value of the observable x and \(x(\chi )\) corresponds to the theoretical prediction of x given \(\chi \). This PDF is obtained from the outcome of an experimental analysis yielding both a central value \(X_0\) and an uncertainty \(\sigma \), where \(\sigma \) is assumed to be independent of the realization \(X_0\) of the observable x and is thus included in the definition of g.

Our aim is to derive constraints on the parameters \(\chi \), from the measurement \(X_0\pm \sigma \) of the observable x. One very general way to perform this task is hypothesis testing, where one wants to quantify how much the data are compatible with the null hypothesis that the true value of \(\chi \), \(\chi _t\), is equal to some fixed value \(\chi \):

In order to interpret the observed data \(X_0\) measured in a given experiment in light of the distribution of the observables X under the null hypothesis \({\mathcal H}_\chi \), one defines a test statistic \(T(X;\chi )\), that is, a scalar function of the data X that measures whether the data are in favor or not of the null hypothesis. We indicated the dependence of T on \(\chi \) explicitly, i.e., the dependence on the null hypothesis \({\mathcal H}_\chi \). The test statistic is generally a definite positive function chosen in a way that large values indicate that the data present evidence against the null hypothesis. By comparing the actual data value \(t=T(X_0;\chi )\) with the sampling distribution of \(T=T(X;\chi )\) under the null hypothesis, one is able to quantify the degree of agreement of the data with the null hypothesis.

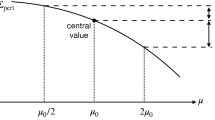

Illustration in the simple case where X is predicted as \(x(\mu )=\mu \). Under the hypothesis \(\mu _t=\mu \), and having measured \(X=0\pm 1\), one can determine the associated p value \(p(0;\mu )\) by examining the distribution of the quadratic test statistic \(T(X;\mu )=(X-\mu )^2\) assuming X is distributed as a Gaussian random variable with central value 0 and width 1. The blue dashed line corresponds to the value of T associated with the hypothesis \(\mu =-1.4\), with a p value obtained by considering the gray area. The red dotted line corresponds to the hypothesis \(\mu =2.5\)

Mathematically it amounts to defining a p value. One calculates the probability to obtain a value for the test statistic at least as large as the one that was actually observed, assuming that the null hypothesis is true. This tail probability is used to define the p value of the test for this particular observation

where the PDF h of the test statistic is obtained from the \(\mathrm{PDF}\) g of the data as

which can be obtained easily from comparing the convolution of \(\frac{\mathrm{d}T}{\mathrm{d}X}h(T)=g(X)\) with a test function of T with the convolution of the r.h.s. of (5) with the same test function. A small value of the p value means that \(T(X_0;\chi )\) belongs to the “large” region, and thus provides evidence against the null hypothesis. This is illustrated for a simple example in Figs. 1 and 2.

On the left for a given observation \(X=0\pm 1\), p value \(p(0;\mu )\) as a function of the value of \(\mu \) being tested. Blue dashed and red dotted lines correspond to \(\mu =-1.4\) and \(\mu =2.5\). A confidence interval for \(\mu \) at 68% CL is obtained by considering the region of \(\mu \) with a p value larger than 0.32, as indicated by the green dotted dashed line and arrows. On the right the same information is expressed in Gaussian units of \(\sigma \), where the 68% CL interval corresponds to the region below the horizontal line of significance 1

From its definition, one sees that \(1-p(X_0;\chi )\) is nothing else but the cumulative distribution function of the PDF h

where \(\theta \) is the Heaviside function. This expression corresponds to the probability for the test statistic to be smaller than a given value \(T(X_0;\chi )\). The p value in Eq. (4) is defined as a function of \(X_0\) and as such, is a random variable.

Through the simple change of variable \(\frac{\mathrm{d}p}{\mathrm{d}T}\frac{\mathrm{d}\mathcal P}{\mathrm{d}p}=\frac{\mathrm{d}\mathcal P}{\mathrm{d}T}\), one obtains that the null distribution (that is, the distribution when the null hypothesis is true) of a p value is uniform, i.e., the distribution of values of the p value is flat between 0 and 1. This uniformity is a fundamental property of p values that is at the core of their various interpretations (hypothesis comparison, determination of confidence intervals...) [1, 2].

In the frequentist approach, one wants to design a procedure to decide whether to accept or reject the null hypothesis \({\mathcal H}_\chi \), by avoiding as much as possible either incorrectly rejecting the null hypothesis (Type-I error) or incorrectly accepting it (Type-II error). The standard frequentist procedure consists in selecting a Type-I error \(\alpha \) and determining a region of sample space that has the probability \(\alpha \) of containing the data under the null hypothesis. If the data fall in this critical region, the hypothesis is rejected. This must be performed before data are known (in contrast to other interpretations, e.g, Fischer’s approach of significance testing [1]). In the simplest case, the critical region is defined by a condition of the form \(T\ge t_\alpha \), where \(t_\alpha \) is a function of \(\alpha \) only, which can be rephrased in terms of p value as \(p\le \alpha \). The interest of the frequentist approach depends therefore on the ability to design p values assessing the rate of Type-I error correctly (its understatement is clearly not desirable, but its overstatement yields often a reduction in the ability to determine the truth of an alternative hypothesis), as well as avoiding too large a Type-II error rate.

A major difficulty arises when the hypothesis to be tested is composite. In the case of numerical hypotheses like (3), one gets compositeness when one is only interested in a subset \(\mu \) of the parameters \(\chi \). The remaining parameters are called nuisance parameters Footnote 3 and will be denoted by \(\nu \), thus \(\chi =(\mu ,\nu )\). In this case the hypothesis \({\mathcal H}_\mu : \mu _t=\mu \) is composite, because determining the distribution of the observables requires the knowledge of the true value \(\nu _t\) in addition to \(\mu \). In this situation, one has to devise a procedure to infer a “p value” for \({\mathcal H}_\mu \) out of p values built for the simple hypotheses where both \(\mu \) and \(\nu \) are fixed. Therefore, in contrast to a simple hypothesis, a composite hypothesis does not allow one to compute the distribution of the data.Footnote 4

At this stage, it is not necessarily guaranteed that the distribution of the p value for \({\mathcal H}_\mu \) is uniform, and one may get different situations:

which may depend on the value of \(\alpha \) considered. Naturally, one would like to design as much as possible an exact p value (exact coverage), or if this is not possible, a (reasonably) conservative one (overcoverage). Such p values will be called “valid” p values. In the case of composite hypotheses, the conservative or liberal nature of a p value may depend not only on \(\alpha \), but also on the structure of the problem and of the procedure used to construct the p value, and it has to be checked explicitly [1, 2].

A \(\alpha \)-CL interval built from a p value with exact coverage has a probability of \(\alpha \) of containing the true value. This is illustrated in the simple case of a quantity X which has a true value \(\mu _t=0\) but is measured with an uncertainty \(\sigma =1\). Each time a measurement is performed, it will yield a different value for \(X_0\) and thus a different p value curve as a function of the hypothesis tested \(\mu _t=\mu \). From each measurement, a 68% CL interval can be determined by considering the part of the curve above the line \(p=0.32\), but this interval may or may not contain the true value \(\mu _t=0\). The curves corresponding to the first case (second case) are indicated with 6 green solid lines (4 blue dotted lines). Asymptotically, if the p value has exact coverage, 68% of these confidence intervals will contain the true value

Once p values are defined, one can build confidence intervals out of them by using the correspondence between acceptance regions of tests and confidence sets. Indeed, if we have an exact p value, and the critical region \(C_\alpha (X)\) is defined as the region where \(p(X;\mu )<\alpha \), the complement of this region turns out to be a confidence set of level \(1-\alpha \), i.e., \(P[\mu \notin C_\alpha (X)]= 1-\alpha \). This justifies the general use of plotting the p value as a function of \(\mu \), and reading the 68 or 95% CL intervals by looking at the ranges where the p value curve is above 0.32 or 0.05. This is illustrated for a simple example in Figs. 2 and 3. Once again, this discussion is affected by issues of compositeness and nuisance parameters, as well as the requirement of checking the coverage of the p value used to define these confidence intervals: an overcovering p value will yield too large confidence intervals, which will prove indeed conservative.

A few words about the notation and the vocabulary are in order at this stage. A p value necessarily refers to a null hypothesis, and when the null hypothesis is purely numerical such as (3) we can consider the p value as a mathematical function of the fundamental parameter \(\mu \). This of course does not imply that \(\mu \) is a random variable (in frequentist statistics, it is always a fixed, but unknown, number). When the p value as a function of \(\mu \) can be described in a simple way by a few parameters, we will often use the notation \(\mu =\mu _0\pm \sigma _\mu \). In this case, one can easily build the p value and derive any desired confidence interval. Even though this notation is similar to the measurement of an observable, we stress that this does not mean that the fundamental parameter \(\mu \) is a random variable, and it should not be seen as the definition of a PDF. In line with this discussion, we will call uncertainties the parameters like \(\sigma \) that can be given a frequentist meaning, e.g., they can be used to define the PDF of a random variable. On the other hand, we will call errors the intermediate quantities such as \(\sigma _\mu \) that can be used to describe the p value of a fundamental parameter, but cannot be given a statistical meaning for this parameter.

2.2 Likelihood-ratio test statistic

Here we consider test statistics that are constructed from the logarithm of the likelihoodFootnote 5

More precisely, one uses tests based on the likelihood ratio in many instances. Its use is justified by the Neyman–Pearson lemma [1, 2, 27] showing that this test has appealing features in a binary model with only two alternatives for \(\chi _t\), corresponding to the two simple hypotheses \({\mathcal H}_{\chi _1}\) and \({\mathcal H}_{\chi _2}\). Indeed one can introduce the likelihood ratio \({\mathcal L}_X(\chi _1)/{\mathcal L}_X(\chi _2)\), define the critical region where this likelihood ratio is smaller than a given \(\alpha \), and decide that one rejects \({\mathcal H}_{\chi _1}\) whenever the observation falls in this critical region. This test is the most powerful test that can be built [1, 2], in the sense that among all the tests with a given Type-I error \(\alpha \) (probability of rejecting \({\mathcal H}_{\chi _1}\) when \({\mathcal H}_{\chi _1}\) is true), the likelihood ratio test has the smallest Type-II error (probability of accepting \({\mathcal H}_{\chi _1}\) when \({\mathcal H}_{\chi _2}\) is true). These two conditions are the two main criteria to determine the performance of a test.

In the case of a composite hypothesis, there is no such clear-cut approach to choose the most powerful test. The maximum likelihood ratio (MLR) is inspired by the Neynman–Pearson lemma, comparing the most plausible configuration under \({\mathcal H}_\mu \) with the most plausible one in general:

Let us emphasize that even though T is constructed not to depend on the nuisance parameters \(\nu \) explicitly, its distribution Eq. (5) a priori depends on them (through the PDF g). Even though the Neyman–Pearson lemma does not apply here, there is empirical evidence that this test is powerful, and in some cases it exhibits good asymptotic properties (easy computation and distribution independent of nuisance parameters) [1, 2].

For the problems considered here, the MLR choice features alluring properties, and in the following we will use test statistics that are derived from this choice. First, if \(g(X;\chi _t)\) is a multi-dimensional Gaussian function, then the quantity \(-2\ln {\mathcal L}_X(\chi _t)\) is the sum of the squares of standard normal random variables, i.e., is distributed as a \(\chi ^2\) with a number of degrees of freedom (\(N_\mathrm{dof}\)) that is given by \(\mathrm{dim}(X)\). Secondly, for linear models, in which the observables X depend linearly on the parameters \(\chi _t\), the MLR Eq. (11) is again a sum of standard normal random variables, and is distributed as a \(\chi ^2\) with \(N_\mathrm{dof}=\mathrm{dimension}(\mu )\). Wilks’ theorem [28] states that this property can be extended to non-Gaussian cases in the asymptotic limit: under regularity conditions and when the sample size tends to infinity, the distribution of Eq. (11) will converge to the same \(\chi ^2\) distribution depending only on the number of parameters tested.

The great virtue of the \(\chi ^2\)-distribution is that it only depends on the number of degrees of freedom, which means in particular that the null-distribution of Eq. (11) is independent of the nuisance parameters \(\nu \), whenever the conditions of the Wilks’ theorem apply. Furthermore the integral (4) can be computed straightforwardly in terms of complete and incomplete \(\varGamma \) functions:

In practice the models we want to analyze, such as the Standard Model, predict nonlinear relations between the observables and the parameters. In this case one has to check whether Wilks’ theorem applies, by considering whether the theoretical equations can be approximately linearized.Footnote 6

3 Comparing approaches to theoretical uncertainties

We have argued before that an appealing test statistic is provided by the likelihood ratio Eq. (11) due to its properties in limit cases (linearized theory, asymptotic limit). These properties rely on the fact that the likelihood ratio can be built as a function of random variables described by measurements involving only statistical uncertainties. However, in flavor physics (as in many other fields in particle physics), there are not only statistical but also theoretical uncertainties. Indeed, as already indicated in the introduction, these phenomenological analyses combine experimental information and theoretical estimates. In the case of flavor physics, the latter come mainly from QCD-based calculations, which are dominated by theoretical uncertainties.

Unfortunately, the very notion of theoretical uncertainty is ill-defined as “anything that is not due to the intrinsic variability of data”. Theoretical uncertainties (model uncertainty) are thus of a different nature with respect to statistical uncertainties (stochastic uncertainty, i.e. variability in the data), but they can only be modeled (except in the somewhat academic case where a bound on the difference between the exact value and the approximately computed one can be proven). The choice of a model for theoretical uncertainties involves not only the study of its mathematical properties and its physical implications in specific cases, but also some personal taste. One can indeed imagine several ways of modeling/treating theoretical uncertainties:

-

one can (contrarily to what has just been said) treat the theoretical uncertainty on the same footing as a statistical uncertainty; in this case, in order to follow a meaningful frequentist procedure, one has to assume that one lives in a world where the repeated calculation of a given quantity leads to a distribution of values around the exact one, with some variability that can be modeled as a PDF (“random-\(\delta \) approach”),

-

one can consider that theoretical uncertainties can be modeled as external parameters, and perform a purely statistical analysis for each point in the theoretical uncertainty parameter space; this leads to an infinite collection of p values that will have to be combined in some arbitrary way, following a model averaging procedure (“external-\(\delta \) approach”),

-

one can take the theoretical uncertainties as fixed asymptotic biases,Footnote 7 treating them as nuisance parameters that have to be varied in a reasonable region (“nuisance-\(\delta \) approach”).

There are some desirable properties for a convincing treatment of theoretical uncertainties:

-

as general as possible, i.e., apply to as many “kinds” of theoretical uncertainties as possible (lattice uncertainties, scale uncertainties) and as many types of physical models as possible,

-

leading to meaningful confidence intervals, in reasonable limit cases: obviously, in the absence of theoretical uncertainties, one must recover the standard result; one may also consider the type of constraint obtained in the absence of statistical uncertainties,

-

exhibiting good coverage properties, as it benchmarks the quality of the statistical approach: the comparison of different models provides interesting information but does not shed light on their respective coverage,

-

associated with a statistically meaningful goodness-of-fit,

-

featuring reasonable asymptotic properties (large samples),

-

yielding the errors as a function of the estimates easily (error propagation), in particular by disentangling the impact of theoretical and statistical contributions,

-

leading to a reasonable procedure to average independent estimates – if possible, it should be equivalent for any analysis to include the independent estimates separately or the average alone (associativity). In addition, one may wonder whether the averaging procedure should be conservative or aggressive (i.e., the average of similar theoretical uncertainties should have a smaller uncertainty or not), and if the procedure should be stationary (the uncertainty of an average should be independent of the central values or not),

-

leading to reasonable results in the case of averages of inconsistent measurements.

Finally a technical requirement is the computing power needed to calculate the best-fit point and confidence intervals for a large parameter space with a large number of constraints. Even though it should not be the sole argument in favor of a model, it should be kept in mind (a very complicated model for theoretical uncertainties would not be particularly interesting if it yields very close results to a much simpler one).

We summarize some of the points mentioned above in Table 1. As it will be seen, it will, however, prove challenging to fulfill all these criteria at the same time, and we will have to make compromises along the way.

4 Illustration of the approaches in the one-dimensional case

4.1 Situation of the problem

We will now discuss the three different approaches and some of their properties in the simplest case, i.e. with a single measurement (for an experimental quantity) or a single theoretical determination (for a theoretical quantity). Following a fairly conventional abuse of language, we will always refer to this piece of information as a “measurement” even though some modeling may be involved in its extraction through data reduction, as discussed in Sect. 2. The main, yet not alone, aim is to model/interpret/exploit a measurement likeFootnote 8

to extract information on the value of the associated fundamental parameter \(\mu \). Without theoretical uncertainty (\(\varDelta =0\)), one would use this measurement to build a PDF

yielding the MLR test statistic

and one can build a p value easily from Eq. (4)

In the presence of a theoretical uncertainty \(\varDelta \), the situation is more complicated, as there is no clear definition of what \(\varDelta \) corresponds to. A possible first step is to introduce a theoretical uncertainty parameter \(\delta \) that describes the shift of the approximate theoretical computation from the exact value, and that is taken to vary in a region that is defined by the value of \(\varDelta \). This leads to the PDF

in such a way that in the limit of an infinite sample size (\(\sigma \rightarrow 0\)), the measured value of X reduces to \(\mu +\delta \). The challenge is to extract some information on \(\mu \), given the fact that the value of \(\delta \) remains unknown.

The steps (to be spelt out below) to achieve this goal are:

-

Take a model corresponding to the interpretation of \(\delta \): random variable, external parameter, fixed bias as a nuisance parameter...

-

Choose a test statistic \(T(X;\mu )\) that is consistent with the model and that discriminates the null hypothesis: Rfit, quadratic, other...

-

Compute, consistently with the model, the p value, which is in general a function of \(\mu \) and \(\delta \).

-

Eliminate the dependence with respect to \(\delta \) by some well-defined procedure.

-

Exploit the resulting p value (coverage, confidence intervals, goodness-of-fit).

Since we focus on Gaussian experimental uncertainties (the generalization to other shapes is formally straightforward but may be technically more complicated), for all approaches that we discuss in this note we take the following PDF:

where, in the limit of an infinite sample size (\(\sigma \rightarrow 0\)), \(\mu \) can be interpreted as the exact value of the parameter of interest, and \(\mu +\delta \) the approximately theoretically computed one. The interpretation of \(\delta \) will differ depending on the approach considered, which we will discuss now.

4.2 The random-\(\delta \) approach

In the random-\(\delta \) approach, \(\delta \) would be related to the variability of theoretical computations, which one can model with some PDF for \(\delta \), such as \({\mathcal N}_{(0,\varDelta )}\) (normal) or \({\mathcal U}_{(-\varDelta ,+\varDelta )}\) (uniform). The natural candidate for the test statistic \(T(X;\mu )\) is the MLR built from the PDF. One considers a model where \(X=s+\delta \) is the sum of two random variables, s being distributed as a Gaussian of mean \(\mu \) and width \(\sigma \), and \(\delta \) as an additional random variable with a distribution depending on \(\varDelta \).

One may often consider for \(\delta \) a variable normally distributed with a mean zero and a width \(\varDelta \) (denoted naive Gaussian or “nG” in the following, corresponding to the most common procedure in the literature of particle physics phenomenology). The resulting PDF for X is then the convolution of two Gaussian PDFs, leading to

to which corresponds the usual quadratic test statistic (obtained from MLR)

recovering the p value that would be obtained when the two uncertainties are added in quadrature

We should stress that considering \(\delta \) as a random variable corresponds to a rather strange frequentist world,Footnote 9 and there is no strong argument that would help to choose the associated PDF (for instance, \(\delta \) could be a variable uniformly distributed over \([-\varDelta ,\varDelta ]\)). However, for a general PDF, the p value has no simple analytic formula and it must be computed numerically from Eq. (4). In the following, we will only consider the case of a Gaussian PDF when we discuss the random-\(\delta \) approach.

4.3 The nuisance-\(\delta \) approach

In the nuisance approach, \(\delta \) is not interpreted as a random variable but as a fixed parameter so that in the limit of an infinite sample size, the estimator does not converge to the true value \(\mu _t\), but to \(\mu _t+\delta \). The distinction between statistical and theoretical uncertainties is thus related to their effect as the sample size increases, statistical uncertainties decreasing while theoretical uncertainties remaining of the same size (see Refs. [29,30,31] for other illustrations in the context of particle physics). One works with the null hypothesis \(\mathcal {H}_{\mu }: \mu _t=\mu \), and one has then to determine which test statistic is to be built.

In the frequentist approach, the choice of the test statistic is arbitrary as long as it models the null hypothesis correctly, i.e., the smaller the value of the test statistic, the better the agreement of the data with the hypothesis. A particularly simple possibility consists in the quadratic statistic already introduced earlier:

where the minimum is not taken over a fixed range, but on the whole space. The great virtue of the quadratic shape is that in linear models it remains quadratic after minimization over any subset of parameters, in contrast with alternative, non-quadratic, test statistics.

The PDF for X is normal, with mean \(\mu +\delta \) and variance \(\sigma ^2\)

Although we choose test statistics for the random-\(\delta \) and nuisance-\(\delta \) of the same form, Eqs. (20) and (22), the different PDFs Eqs. (19) and (23) imply very different constructions for the p values and the resulting statistical outcomes. Indeed, with this PDF for the nuisance-\(\delta \) approach, T is distributed as a rescaled, non-central \(\chi ^2\) distribution with a non-centrality parameter \((\delta /\sigma )^2\) (this non-centrality parameter illustrates that the test statistic is centered around \(\mu \) whereas the distribution of X is centered around \(\mu +\delta \)). \(\delta \) is then a genuine asymptotic bias, implying inconsistency: in the limit of an infinite sample size, the estimator constructed from T is \(\mu \), whereas the true value is \(\mu +\delta \). Using the previous expressions, one can easily compute the cumulative distribution function of this test statistic,

which depends explicitly on \(\delta \) but not on \(\varDelta \) (as indicated before, even if T is built to be independent of nuisance parameters, its PDF depends on them a priori).

To infer the p value one can take the supremum value for \(\delta \) over some interval \(\varOmega \)

The interpretation is the following: if the (unknown) true value of \(\delta \) belongs to \(\varOmega \), then \(p_{\varOmega }\) is a valid p value for \(\mu \), from which one can infer confidence intervals for \(\mu \). This space cannot be the whole space (as one would get \(p=1\) trivially for all values of \(\mu \)), but there is no natural candidate (i.e., coming from the derivation of the test statistic). More specifically, should the interval \(\varOmega \) be kept fixed or should it be rescaled when investigating confidence intervals at different levels (e.g. 68 vs. 95%)?

-

If one wants to keep it fixed, \(\varOmega _r=r[-\varDelta ,\varDelta ]\):

$$\begin{aligned} p_\mathrm{fixed\ \varOmega _r}=\mathrm{Max}_{\delta \in \varOmega _r}[1- \mathrm {CDF}_\delta (\mu )]. \end{aligned}$$(26)One may wonder what the best choice is for r, as the p value gets very large if one works with the reasonable \(r=3\), while the choice \(r=1\) may appear as non-conservative. We will call this treatment the fixed r-nuisance approach.

-

One can then wonder whether one would like to let \(\varOmega \) depend on the value considered for p. In other words, if we are looking at a \(k\,\sigma \) range, we could consider the equivalent range for \(\delta \). This would correspond to

$$\begin{aligned} p_\mathrm{adapt\ \varOmega }=\mathrm{Max}_{\delta \in \varOmega _{k_\sigma ( p)}}[1- \mathrm {CDF}_\delta (\mu )] \end{aligned}$$(27)where \(k_\sigma (p )\) is the “number of sigma” corresponding to p

$$\begin{aligned} k_\sigma (p )^2=\mathrm{Prob}^{-1}(p,N_\mathrm{dof}=1), \end{aligned}$$(28)where the function Prob has been defined in Eq. (12). We will call this treatment the adaptive nuisance approach. The correct interpretation of this p value is: p is a valid p value if the true (unknown) value of \(\delta /\varDelta \) belongs to the “would be” \(1-p\) confidence interval around 0. This is not a standard coverage criterion: one can use adaptive coverage, and adaptively valid p value, to name this new concept. Note that Eqs. (27), (28) constitute a non-algebraic implicit equation that has to be solved by numerical means.

Let us emphasize that the fixed interval is very close to the original ‘Rfit’ method of the CKMfitter group [15, 16] in spirit, but not numerically, as will be shown below by an explicit comparison. In contrast the adaptive choice is more aggressive in the region of \(\delta \) close to zero, but allows this parameter to take large values, provided one is interested in computing small p values accordingly. In this sense, the adaptive approach provides a unified approach to deal with two different issues of importance, namely the metrology of parameters (at 1 or 2\(\sigma \)) and exclusion tests (at 3 or 5\(\sigma \)).

4.4 The external-\(\delta \) approach

In this approach, the parameter \(\delta \) is also considered as a fixed parameter. The idea behind this approach is very simple, and it is close to what experimentalists often do to estimate systematic effects: in a first step one considers that \(\delta \) is a fixed constant, and one performs a standard, purely statistical analysis that leads to a p value that explicitly depends on \(\delta \). If one takes \(X\sim \mathcal {N}_{(\mu +\delta ,\sigma )}\) and T quadratic [either \((X-\mu -\delta )^2/\sigma ^2\) or \((X-\mu -\delta )^2/(\sigma ^2+\varDelta ^2)\)]:Footnote 10

Note that this procedure actually corresponds to the simple null hypothesis \(\mathcal {H}^{(\delta )}_{\mu }: \mu _t=\mu +\delta \) instead of \(\mathcal {H}_{\mu }\): \(\mu _t=\mu \), hence one gets an infinite collection of p values instead of a single one related to the aimed constraint on \(\mu \).

Since \(\delta \) is unknown one has to define a procedure to average all the \(p_\delta (\mu )\) obtained. The simplest possibility is to take the envelope (i.e., the maximum) of \(p_\delta (\mu )\) for \(\delta \) in a definite interval (e.g. \([-\varDelta ,+\varDelta ]\)), leading to

By analogy with the previous case, we will call this treatment the fixed r-external approach for \(\delta \in \varOmega _r\). This is equivalent to the Rfit ansatz used by CKMfitter [15, 16] in the one-dimensional case (but not in higher dimensions), proposed to treat theoretical uncertainties in a different way from statistical uncertainties, treating all values within \([-\varDelta ,\varDelta ]\) on an equal footing. We recall that the Rfit ansatz was obtained starting from a well test statistic, with a flat bottom with a width given by the theoretical error and parabolic walls given by statistical uncertainty.

A related method, called the Scan method, has been developed in the context of flavor physics [32, 33]. It is however slightly different from the case discussed here. First, the test statistic chosen is not the same, since the Scan method uses the likelihood rather than the likelihood ratio, i.e. it relies on the test statistic \(T=-2\log \mathcal{L(\mu ,\nu })\) which is interpreted assuming that T follows a \(\chi ^2\)-law with the corresponding number of degrees of freedom N, including both parameters of interest and nuisance parameters.Footnote 11 Then the \(1-\alpha \) confidence region is then determined by varying nuisance parameters in given intervals (typically \(\varOmega _1\)), but accepting only points where \(T\le T_c\), where \(T_c\) is a critical value so that \(P(T\ge T_c;N|H_0)\ge \alpha \) (generally taken as \(\alpha =0.05\)). This latter condition acts as a test of compatibility between a given choice of nuisance parameters and the data.

Comparison of different treatments of theoretical uncertainties of the measurement \(X=0 \pm \sigma \ (\mathrm{exp}) \pm \varDelta (\mathrm{th})\), with different values of \(\varDelta /\sigma \) (with the normalization \(\sqrt{\varDelta ^2+\sigma ^2}=1\)). The p values have been converted into a significance in Gaussian units of \(\sigma \) following the particle physics conventions. The various approaches are: nG (dotted, red), Rfit or 1-external (dashed, black), fixed 1-nuisance (dotted-dashed, blue), fixed 3-nuisance (dotted-dotted-dashed, purple), adaptive nuisance (solid, green)

5 Comparison of the methods in the one-dimensional case

In the following, we will discuss properties of the different approaches in the case of one dimension. More specifically, we will consider:

-

the random-\(\delta \) approach with a Gaussian random variable, or naive Gaussian (nG), see Sect. 4.2,

-

the nuisance-\(\delta \) approach with quadratic statistic and fixed range, or fixed nuisance, see Sect. 4.3,

-

the nuisance-\(\delta \) approach with quadratic statistic and adaptive range, or adaptive nuisance, see Sect. 4.3,

-

the external-\(\delta \) approach with quadratic statistic and fixed range, equivalent to the Rfit approach in one dimension; see Sect. 4.4.

Note that we will not consider other (nonquadratic) statistics. Finally, we consider

with varying \(\varDelta /\sigma \) as an indication of the relative size of the experimental and theoretical uncertainties.

5.1 p values and confidence intervals

We can follow the discussion of the previous section and plot the results for the p values obtained from the various methods discussed above in Fig. 4, where we compare nG, Rfit, fixed nuisance and adaptive nuisance approaches. From these p values, we can infer confidence intervals at a given significance level and a given value of \(\varDelta /\sigma \), and determine the length of the (symmetric) confidence interval (see Table 2). We notice the following points:

-

By construction, nG always provides the same errors whatever the relative proportion of theoretical and statistical uncertainties, and all the approaches provide the same answer in the limit of no theoretical uncertainty \(\varDelta =0\).

-

By construction, for a given \(n\sigma \) confidence level, the interval provided by the adaptive nuisance approach is identical to the one obtained using the fixed nuisance approach with a \([-n,n]\) interval. This explains why the adaptive nuisance approach yields identical results to the fixed 1-nuisance approach at 1\(\sigma \) (and similarly for the fixed 3-nuisance approach at 3\(\sigma \)). The corresponding curves cannot be distinguished on the upper and central panels of Fig. 5.

-

The adaptive nuisance approach is numerically quite close to the nG method; the maximum difference occurs for \(\varDelta /\sigma =1\) (up to 40% larger error size for 5\(\sigma \) intervals).

-

The p value from the fixed-nuisance approach has a very wide plateau if one works with the ‘reasonable’ range \([-3\varDelta ,+3\varDelta ]\), while the choice of \([-\varDelta ,+\varDelta ]\) might be considered as nonconservative.

-

The 1-external and fixed 1-nuisance approaches are close to each other and less conservative than the adaptive approach, which is expected, but also than nG, for confidence intervals at 3 or 5\(\sigma \) when theory uncertainties dominate.

-

When dominated by theoretical uncertainties (\(\varDelta /\sigma \) large), all approaches provide 3 and 5\(\sigma \) errors smaller than the nG approach, apart from the adaptive nuisance approach.

Comparison of the size of the \((1, 3, 5)\sigma \) errors (upper, central and lower panels, respectively) as a function of \(\varDelta /\sigma \). Different approaches are shown: nG (dotted, red), Rfit or 1-external (dashed, black), fixed 1-nuisance (dotted-dashed, blue), fixed 3-nuisance (dotted-dotted-dashed, purple), adaptive nuisance (solid, green). In the upper panel (\(1\sigma \) confidence level), the adaptive and fixed 1-nuisance approaches yield the same result by construction, and the two curves cannot be distinguished (only the adaptive one is shown). The same situation occurs in the central panel corresponding to 3\(\sigma \) with the adaptive and fixed 3-nuisance approaches

5.2 Significance thresholds

Another way of comparing methods consists in taking the value of \(\mu \) for which the p value corresponds to \(1,3,5 \sigma \) (in significance scale) in a given method, and compute the corresponding p values for the other methods. The results are gathered in Tables 3 and 4. Qualitatively, the comparison of significances can be seen from Fig. 4: if the size of the error is fixed, the different approaches quote different significances for this same error.

In agreement with the previous discussion, we see that fixed 1-nuisance and 1-external yield similar results for 3 and 5\(\sigma \), independently of the relative size of statistical and theoretical effects. Moreover, they are prompter to claim a tension than nG, the most conservative method in this respect being the adaptive nuisance approach.

As a physical illustration of this problem, we can consider the current situation for the anomalous magnetic moment of the muon, namely the difference between the experimental measurement and the theoretical computation in the Standard Model [34]:

This discrepancy has a different significance depending on the model chosen for theoretical uncertainties, which can be computed from the associated p value (under the hypothesis that the true value of \(a_\mu ^\mathrm{SM}-a_\mu ^\mathrm{exp}\) is \(\mu =0\)).Footnote 12 The nG method yields 3.6\(\sigma \), the 1-external approach 3.8\(\sigma \), the 1-nuisance approach 4.0\(\sigma \), and the adaptive nuisance approach 2.7\(\sigma \). The overall pattern is similar to what can be seen from the above tables, with a significance of the discrepancy which depends on the model used for theoretical uncertainties.

5.3 Coverage properties

As indicated in Sect. 2.1.2, p values are interesting objects if they cover exactly or slightly overcover in the domain where they should be used corresponding to a given significance; see Eqs. (7)–(9). If coverage can be ensured for a simple hypothesis [1, 2], this property is far from trivial and should be checked explicitly in the case of composite hypotheses, where compositeness comes from nuisance parameters that can be related to theoretical uncertainties, or other parameters of the problem.

For all methods we study coverage properties in the standard way: one first fixes the true values of the parameters \(\mu \) and \(\delta \) (which are not assumed to be random variables), from which one generates a large sample of toy experiments \(X_i\). Then for each toy experiment one computes the p value at the true value of \(\mu \). The shape of the distribution of p values indicates over, exact or under coverage. More specifically, one can determine \(P(p\ge 1-\alpha )\) for a CL of \(\alpha \): if it is larger (smaller) than \(\alpha \), the method overcovers (undercovers) for this particular CL, i.e. it is conservative (liberal). We emphasize that this property is a priori dependent on the chosen CL.

Distribution of p value (for a fixed total number of events) for different true values \(\delta /\varDelta \) and various relative sizes of statistical and theoretical uncertainties \(\varDelta /\sigma \). The following approaches are shown: nG (dotted, red), Rfit or 1-external (dashed, black), fixed 1-nuisance (dotted-dashed, blue), adaptive nuisance (solid, green). Since the 1-external approach produces clusters of \(p=1\) p values, the coverage values excluding these clusters are also shown, as well as the distribution of p values (dotted-dotted-dashed, grey). Note that the behavior of the 1-external p value around \(p=1\) is smoothened by the graphical representation

In order to compare the different situations, we take \(\sigma ^2+\varDelta ^2=1\) for all methods, and compute for each method the coverage fraction (the number of times the confidence level interval includes the true value of the parameter being extracted) for various confidence levels and for various values of \(\varDelta /\sigma \). Note that the coverage depends also on the true value of \(\delta /\varDelta \) (the normalized bias). The results are gathered in Table 5 and Fig. 6. We also indicate the distribution of p values obtained for the different methods.

One notices in particular that the 1-external approach has a cluster of values for \(p=1\), which is expected due to the presence of a plateau in the p value. This behavior makes the interpretation of the coverage more difficult, and as a comparison, we also include the results when we consider the same distribution with the \(p=1\) values removed. Indeed one could imagine a situation where reasonable coverage values could only be due to the \(p=1\) clustering, while other values of p would systematically undercover: such a behavior would either yield no constraints or too liberal constraints on the parameters depending on the data.

The results are the following:

-

If \(\varOmega \) is fixed and does not contain the true value of \(\delta /\varDelta \) (“unfortunate” case), both external-\(\delta \) and nuisance-\(\delta \) approaches lead to undercoverage; the size of the effect depends on the distance of \(\delta /\varDelta \) with respect to \(\varOmega \). This is also the case for nG.

-

If \(\varOmega \) is fixed and contains the true value of \(\delta /\varDelta \) (“fortunate” case), both the external-\(\delta \) and the nuisance-\(\delta \) approaches overcover. This is also the case for nG.

-

If \(\varOmega \) is adaptive, for a fixed true value of \(\delta \), a p value becomes valid if it is sufficiently small so that the corresponding interval contains \(\delta \). Therefore, for the adaptive nuisance-\(\delta \) approach, there is always a maximum value of CL above which all p values are conservative; this maximum value is given by \(1-\mathrm {Erf}[\delta /(\sqrt{2}\varDelta )]\).

To interpret the pattern of coverage seen above in the external and nuisance approaches, note that one starts with a p value that has exact coverage under the individual simple hypotheses when \(\delta \) is fixed. Therefore, as long as the true value \(\delta \) lies within the range over which one takes the supremum, this procedure yields a conservative envelope. This explains the overcoverage/undercoverage properties for the external-\(\delta \) and nuisance-\(\delta \) approaches given above.

5.4 Conclusions of the uni-dimensional case

It should be stressed that, by construction, all methods are conservative if the true value of the \(\delta \) parameter satisfy the assumption that has been made for the computation of the p value. Therefore coverage properties are not the only criterion to investigate in this situation in order to assess the methods: in particular one has to study the robustness of the p value when the assumption set on the true value of \(\delta \) is not true. The adaptive approach provides a means to deal with a priori unexpected true values of \(\delta \), provided one is interested in a small enough p value, that is, a large enough significance effect. Other considerations (size of confidence intervals, significance thresholds) suggest that the adaptive approach provides an interesting and fairly conservative framework to deal with theoretical uncertainties. We are going to consider the different approaches in the more general multi-dimensional case, putting emphasis on the adaptive nuisance-\(\delta \) approach and the quadratic test statistic.

6 Generalization to multi-dimensional cases

Up to here we only have discussed the simplest example of a single measurement X linearly related to a single model parameter \(\mu \). Obviously the general case is multi-dimensional, where we deal with several observables, depending on several underlying parameters, possibly in a non-linear way, with several measurements involving different sources of theoretical uncertainty. Typical situations correspond to averaging different measurements of the same quantity, and performing fits to extract confidence regions for fundamental parameters from the measurement of observables. In this section we will discuss the case of an arbitrary number of observables in a linear model with an arbitrary number of parameters, where we are particularly interested in a one-dimensional or two-dimensional subset of these parameters.

6.1 General formulas

We start by defining the following quadratic test statistic:

where \(X=(X_i,\ i=1,\ldots ,n)\) is the n-vector of measurements, \(x=(x_i,\ i=1,\ldots ,n)\) is the n-vector of model predictions for the \(X_i\) that depends on \(\chi =(\chi _j,\ j=1,\ldots , n_\chi )\), the \(n_\chi \)-vector of model parameters, \(\tilde{\delta }\) is the m-vector of (dimensionless) theoretical biases, \(W_s\) is the (possibly non-diagonal) \(n\times n\) inverse of the statistical covariance matrix \(C_s\), \(\widetilde{W}_t\) is the inverse of the (possibly non-diagonal) \(m\times m\) theoretical correlation matrix \(\widetilde{C}_t\), \(\varDelta \) is the \(n\times m\)-matrix of theoretical uncertainties \(\varDelta _{i\alpha }\), so that the reduced biases \(\tilde{\delta }_\alpha \) have a range of variation within \([-1,1]\) (this explains the notation with tildes for the reduced quantities rescaled to be dimensionless).

After minimization over the \(\tilde{\delta }_\alpha \), T can be recast into the canonical form,

where

with

The definition of \(\bar{W}\) involves the inverse of matrices that can be singular. This may occur in particular in cases where the statistical uncertainties are negligible and some of the theoretical uncertainties are assumed to be 100% correlated. This requires us to define a generalized inverse, including singular cases, which is described in detail in Appendix A and corresponds to a variation of the approach presented in Ref. [5]. Ambiguities and simplifications that can occur in the definition of T are further discussed in Appendix C. In particular, one can reduce the test statistic to the case \(m=n\) with a diagonal \(\varDelta \) matrix without losing information. In the case where both correlation/covariance matrices are regular, Eq. (36) boils down to \(\bar{W}=[C_s+C_t]^{-1}\) with \(C_t =\varDelta \widetilde{C}_t \varDelta ^T\). This structure is reminiscent of the discussion of theoretical uncertainties as biases and the corresponding weights given in Ref. [29], but it extends it to the case where correlations yield singular matrices.

We will focus here on the case where the model is linear, i.e., the predictions \(x_i\) depend linearly on the parameters \(\chi _j\):

where \(a_{ik}\) and \(b_i\) are constants. We leave the phenomenologically important non-linear case and its approximate linearization for a dedicated discussion in a separate paper [35].

Following the one-dimensional examples in the previous sections, we always assume that the measurements \(X_i\) have Gaussian distributions for the statistical part. We will consider two main cases of interest in our field: averaging measurements and determining confidence intervals for several parameters.

6.2 Averaging measurements

We start by considering the averages of several measurements of a single quantity, each with both statistical and theoretical uncertainties, with possible correlations. We will focus mainly on the nuisance-\(\delta \) approach, starting with two measurements before moving to other possibilities.

6.2.1 Averaging two measurements and the choice of a hypervolume

A first usual issue consists in the case of two uncorrelated measurements \(X_1\pm \sigma _1\pm \varDelta _1\) and \(X_2\pm \sigma _2\pm \varDelta _2\) that we want to combine. The procedure is well defined in the case of purely statistical uncertainties, but it depends obviously on the way theoretical uncertainties are treated. As discussed in Sect. 3, associativity is a particularly appealing property for such a problem as it allows one to replace a series of measurements by its average without loss of information.

Averaging two measurements amounts to combining them in the test statistic. The nuisance-\(\delta \) approach, together with the quadratic statistic Eq. (34), in the absence of correlations yields

with

\(\hat{\mu }\) is a linear combination of Gaussian random variables, and is thus distributed according to a Gaussian p.d.f, with mean \(\mu +\delta _\mu \) and variance \(\sigma _\mu ^2\)

Therefore, \(T-T_\mathrm{min}\) is distributed as a rescaled uni-dimensional non-central \(\chi ^2\) distribution with non-centrality parameter \((\delta _\mu /\sigma _\mu )^2\).

\(\sigma _\mu \) corresponds to the statistical part of the error on \(\mu \). \(\delta _1\) and \(\delta _2\) remain unknown by construction, and the combined theory error can only be obtained once a region of variation is chosen for the \(\delta \)’s (as a generalization of the \([-1,1]\) interval in the one-dimension case). If one maximizes the p value over a rectangle \({\mathcal C}\) (called the “hypercube case” in the following, in reference to its multi-dimensional generalization), \(\delta _\mu \) varies in \(\varDelta _\mu \), with

recovering the proposal in Ref. [29] for the treatment of systematic uncertainties. In this case, \(\delta _1\) and \(\delta _2\) are allowed to be varied separately, without introducing any relation in their values, and can assume both extremal values. On the other hand, if one performs the maximization over a disk (referred to as the “hyperball case” for the same reasons as above) one has the range

In this case, the values of \(\delta _1\) and \(\delta _2\) are somehow related, since they cannot both reach extremal values simultaneously.

Each choice of volume provides an average with different properties. As discussed earlier, associativity is a very desirable property: one can average different observations of the same quantity prior to the full fit, since it gives the same result as keeping all individual inputs. The hyperball choice indeed fulfills associativity. On the other hand, the hypercube case does not: the combination of the inputs 1 and 2 yields the following test statistic: \((w_1+w_2)(\mu -\hat{\mu })^2\), whereas the resulting combination \(\hat{\mu }\pm \sigma _\mu \pm \varDelta _\mu \) has the statistic \((\mu -\hat{\mu })^2/(\sigma _\mu ^2+\varDelta _\mu ^2)\). The two statistics are proportional and hence lead to the same p value, but they are not equivalent when added to other terms in a larger combination.

A comment is also in order concerning the size of the uncertainties for the average. In the case of the hypercube, the resulting linear addition scheme is the only one where the average of different determinations of the same quantity cannot lead to a weighted theoretical uncertainty that is smaller than the smallest uncertainty among all determinations.Footnote 13 In the case of the hyperball, it may occur that the average of different determinations of the same quantity yields a weighted theoretical uncertainty smaller than the smallest uncertainty among all determinations.

Whatever the choice of the volume, a very important and alluring property of our approach is the clean separation between the statistical and theoretical contribution to the uncertainty on the parameter of interest. This is actually a general property that directly follows from the choice of a quadratic statistic, and in the linear case it allows one to perform global fits while keeping a clear distinction between various sources of uncertainty.

6.2.2 Averaging n measurements with biases in a hyperball

We will now consider here the problem of averaging n, possibly correlated, determinations of the same quantity, each individual determination coming with both a Gaussian statistical uncertainty, and a number of different sources of theoretical uncertainty. We focus first on the nuisance-\(\delta \) approach, as it is possible to provide closed analytic expressions in this case. We will first discuss the variation of the biases over a hyperball, before discussing other approaches, which will be illustrated and compared with examples from flavor physics in Sect. 7.

We use the test statistic Eq. (34) for \(\mu \), with \(x(\chi )\) simply replaced by \(\mu U\), where U is the n-vector \((1,\ldots ,1)\). After minimization over the \(\tilde{\delta }_\alpha \), T can be recast into the canonical form

The minimization of Eq. (44) over \(\mu \) leads to an estimator \(\hat{\mu }\) of the average in terms of the measurements \(X_i\)

that allows one to compute the statistical uncertainty \(\sigma _\mu \) in the following way:

The theoretical bias is given by \(\delta _\mu =\sum _{i,\alpha } w_i \varDelta _{i\alpha } \tilde{\delta }_\alpha \). We would like to vary \(\tilde{\delta }_\alpha \) in ranges required to infer the theoretical uncertainty, identifying the combination of biases that is uncorrelated. This is a well-known problem of statistics, and it can easily be achieved in a linear manner by noticing that the relevant combination is \( \varDelta ^T \tilde{C}_t \varDelta \), cf. Eq. (36), and by introducing the Cholesky decomposition for the theoretical correlation matrix \(\widetilde{C}_t=P\cdot P^T\), with P a lower triangular matrix with diagonal positive entries. This yields the expression for the bias,

where \((P^{-1}\tilde{\delta })_\beta \) are uncorrelated biases. If the latter biases are varied over a hyperball, the biases \(\tilde{\delta }\) are varied over a hyperellipsoid elongated along the directions corresponding to strong correlations (see Appendix B for illustrations) and one gets

Known (linear) statistical correlations between two measurements are straightforward to implement, by using the full covariance matrix in the test statistic Eq. (46). On the other hand, in the physical problems considered here (involving hadronic inputs from lattice QCD simulations), it often happens that two a priori independent calculations of the same quantity are statistically correlated, because they use the same (completely or partially) ensemble of gauge configurations. The correlation is not perfect of course, since usually different nonlinear actions are used to perform the computation. However, the accurate calculation of the full covariance matrix is difficult, and in many cases it is not available in the literature. For definiteness, we will assume that if two lattice calculations are statistically correlated, then the (linear) correlation coefficient is one. In such a case the covariance matrix is singular, and its inverse \(W_s\) is ill-defined, as well as all quantities that are defined above in terms of \(W_s\). A similar question arises for fully correlated theoretical uncertainties (coming from the same method), leading to ambiguities in the definition of \(\widetilde{W}_t\). Details of these issues are given in Appendices A and B.

Statistical uncertainties are assumed here to be strictly Gaussian and hence symmetric (see Appendix C for more details of the asymmetric case). In contrast, in the nuisance approach, a theoretical uncertainty that is modeled by a bias parameter \(\delta \) may be asymmetric: that is, the region in which \(\delta \) is varied may depend on the sign of \(\delta \), e.g., \(\delta \in [-\varDelta _-,+\varDelta _+]\) in one dimension with the fixed hypercube approach (\(\varDelta _\pm \ge 0\)). In order to keep the stationarity property that follows from the quadratic statistic, we take the conservative choice \(\varDelta =\mathrm{Max}(\varDelta _+,\varDelta _-)\) in the definition Eq. (34). Let us emphasize that this symmetrization of the test statistic is independent of the range in which \(\delta \) is varied: if theoretical uncertainties are asymmetric, one computes Eqs. (46)–(48) to express the asymmetric combined uncertainties \(\varDelta _{\mu ,\pm }\) in terms of the \(\varDelta _{i\alpha ,\pm }\).

6.2.3 Averages with other approaches

In Sect. 6.2.1, we indicated that other domains can be chosen in principle in order to perform the averages of measurements, for instance a hypercube rather than a hyperball. If we do not try to take into account theoretical correlations in the range of variation, it is quite easy to determine the result for \(\varDelta \)

reminiscent of the formulas derived in Ref. [29]. However, we encountered severe difficulties when trying to include theoretical correlations in the discussion. Similarly to the hyperball case, it would be interesting to consider a linear transformation P of the biases (for instance, the Cholesky decomposition of \(C_t\), but the discussion is more general), so that \((P^{-1}\tilde{\delta })_\beta \) are uncorrelated biases varied within a hypercube. This would lead to \(\tilde{\delta }\) varied within a deformed hypercube, which corresponds to cutting the hypercube by a set of \((\tilde{\delta }_i,\tilde{\delta }_j)\) hyperplanes. It can take a rather complicated convex polygonal shape that is not symmetric along the diagonal in the \((\tilde{\delta }_i,\tilde{\delta }_j)\) plane, leading to the unpleasant feature that the order in which the measurements are considered in the average matters to define the range of variation of the biases (an illustration is given in Appendix B).Footnote 14

As indicated before, this discussion occurs for any linear transformation P and is not limited to the Cholesky decomposition. We have not been able to find other procedures that would avoid these difficulties while paralleling the hypercube case. In the following, we will thus use Eq. (49) even in the presence of theoretical correlations: therefore, the latter will be taken into account in the definition of T through \(\bar{W}\), but not in the definition of the range of variations to compute the error \(\varDelta \). We also notice that the problems that we encounter are somehow due to contradicting expectations concerning the hypercube approach. In Sect. 6.2.1, the hypercube corresponds to values of \(\delta _1\) and \(\delta _2\) left free to vary without relation among them (contrary to the hyperball case). It seems therefore difficult to introduce correlations in this case which was designed to avoid them initially. Our failure to introduce correlations in this case might be related to the fact that the hypercube is somehow designed to avoid such correlations from the start and cannot accommodate them easily.

In the case of the external-\(\delta \) approach, the scan method leads to the same discussion as for the nuisance case, provided that one uses the following statistic: \(T = (X-\mu -\delta )^2/(\sigma ^2+\varDelta ^2)\). This choice is different from Ref. [32] by the normalization (\(\sigma ^2+\varDelta ^2\) rather than \(\sigma ^2\)) in order to take into account of the importance of both uncertainties when combining measurements (damping measurements which are imprecise in one way or the other). As indicated in Sect. 4.4, the difference of normalization of the test statistic does not affect the determination of the p value in the uni-dimensional case, but it has an impact once several determinations are combined. The choice above corresponds to the usual one when \(\varDelta \) is of statistical nature. It gives a reasonable balance when two or more inputs are combined that all come with both statistical and theoretical uncertainties.

A similar discussion holds for the random-\(\delta \) approach. However, if the combined errors \(\sigma _\mu \) and \(\varDelta _\mu \) are the same between the nuisance-\(\delta \) (with hyperball), the random-\(\delta \) and the external-\(\delta \) (with hyperball) approaches, we emphasize that the p value for \(\mu \) built from these errors is different and yields different uncertainties for a given confidence level for each approach, as discussed in Sect. 4.

6.2.4 Other approaches in the literature

There are other approaches available in the literature, often starting from the random-\(\delta \) approach (i.e., modeling all uncertainties as random variables).

The Heavy Flavor Averaging Group [36] choose to perform the average including correlations. In the absence of knowledge on the correlation coefficient between uncertainties of two measurements (typically coming from the same method), they tune the correlation coefficient so that the resulting uncertainty is maximal (which is not \(\rho =1\) in the case where the correlated uncertainties have a different size and are combined assuming a statistical origin; see Appendix A.2). This choice is certainly the most conservative one when there is no knowledge concerning correlations.

The Flavor Lattice Averaging Group [37] follows the proposal in Ref. [38]: they build a covariance matrix where correlated sources of uncertainties are included with 100% correlation, and they perform the average by choosing weights \(w_i\) that are not optimal but are well defined even in the presence of \(\rho =\pm 1\) correlation coefficients. As discussed in Appendix A.2, our approach to singular covariance matrices is similar but more general and guarantees that we recover the weights advocated in Ref. [38] for averages of fully correlated measurements.

Finally, the PDG approach [34] combines all uncertainties in a single covariance matrix. In the case of inconsistent measurements, one may then obtain an average with an uncertainty that may be interpreted as ‘too small’ (notice however that the weighted uncertainty does not increase with the incompatibility of the measurements). This problem occurs quite often in particle physics and cannot be solved by purely statistical considerations (even in the absence of theoretical uncertainties). If the model is assumed to be correct, one may invoke an underestimation of the uncertainties. A (commonly used) recipe in the pure statistical case has been adopted by the Particle Data Group, which consists in computing a factor \(S=\sqrt{\chi ^2/(N_\mathrm{dof}-1)}\) and rescaling all uncertainties by this factor. A drawback of this approach is the lack of associativity: the inconsistency is either removed or kept as it is, depending on whether the average is performed before any further analysis, or inside a global fit. Furthermore since the ultimate goal of statistical analyses is indeed to exclude the null hypothesis (e.g. the Standard Model), it looks counter-intuitive to first wash out possible discrepancies by an ad hoc procedure. Therefore we refrain to define a S factor in the presence of theoretical uncertainties, and we leave the discussion of discrepancies between independent determinations of the same quantity to a case-by-case basis, based on physical (and not statistical) grounds.

In the case of the Rfit approach adopted by the CKMfitter group [15, 16], a specific recipe was chosen to avoid underestimating combined uncertainties in the case of marginally compatible values. The idea is first combine the statistical uncertainties by combining the likelihoods restricted to their statistical part, then assign to this combination the smallest of the individual theoretical uncertainties. This is justified by the following two points: the present state of the art is assumed not to allow one to reach a better theoretical accuracy than the best of all estimates, and this best estimate should not be penalized by less precise methods. In contrast with the plain (or naive) Rfit approach for averages (consisting in just combining Rfit likelihoods without further treatment), this method of combining uncertainties was called educated Rfit and is used by the CKMfitter group for averages [17, 19, 22]. Let us note finally that the calculation of pull values, discussed in Sect. 6.3, is a crucial step for assessing the size of discrepancies.

6.3 Global fit

6.3.1 Estimators and errors

Another prominent example of multi-dimensional problem is the extraction of a constraint on a particular parameter of the model from the measured observables. If the model is linear, Eq. (38), the discussion follows closely that of Sect. 6.2.2. In the case where there is a single parameter of interest \(\mu \), we do not write explicitly the calculations and refer to Sect. 7 for numerical examples.

We start from the test statistic Eq. (34) in the linear case defined in Eq. (38), reducing the number of theoretical biases to the case \(m=n\) as indicated in Appendix C. Following the same discussion as in Sect. 6.2.2, we can minimize with respect to \(\tilde{\delta }_\alpha \), leading to the canonical form,

The minimum of this function is found at the point \(\hat{\chi }_k\) where

so that we have

The minimum \(\hat{\chi }_q\) is thus linearly related to the measured observables \(X_i\) and their statistical properties are closely related. The test statistic for a particular parameter \(\mu =\chi _q\) will lead to \(T(X;\mu )=(\mu -\hat{\chi }_q)^2 \times (a^T\bar{W}a)_{qq}\), so that the discussion of the p value for \(\mu \) follows exactly the discussion for uni-dimensional measurements.Footnote 15

For instance, if the observables \(X_i\) have central values \(X_{i0}\) and variances \(\sigma ^2_{X_i}\), the central value and the variance for \(\hat{\chi }_q\) (corresponding also to the central value and statistical uncertainty for the p value for \(\mu =\chi _q\)), can readily be obtained from

Similar to the previous section, the theoretical uncertainty on \(\mu =\chi _q\) is obtained in the hyperball case as

It remains to determine how to define the theoretical correlation in this framework, denoted \(\kappa _{qr}\) corresponding to the actual parameters of interest. This can be seen as trying to infer a scalar product on the vectors \([w^{(q)}\varDelta P]_i\) from the knowledge of a norm, here \(L^2\). We will thus define the theoretical correlation in the following way:

In Sect. 6.2.2 we encountered difficulties in extending the discussion to the hypercube case. We can define errors varying the biases without correlations in the definition of the hypercube

but we could not determine a way of defining this hypercube taking into account theoretical correlations. Moreover, there is no obvious way to extend the definition of theoretical correlation for the hypercube in a similar way to Eq. (56), as there is no scalar product associated to the \(L^1\)-norm. We will thus not quote theoretical correlations for the hypercube case.

6.4 Goodness-of-fit