Abstract

The single-mirror small-size telescope (SST-1M) is one of the three proposed designs for the small-size telescopes (SSTs) of the Cherenkov Telescope Array (CTA) project. The SST-1M will be equipped with a 4 m-diameter segmented reflector dish and an innovative fully digital camera based on silicon photo-multipliers. Since the SST sub-array will consist of up to 70 telescopes, the challenge is not only to build telescopes with excellent performance, but also to design them so that their components can be commissioned, assembled and tested by industry. In this paper we review the basic steps that led to the design concepts for the SST-1M camera and the ongoing realization of the first prototype, with focus on the innovative solutions adopted for the photodetector plane and the readout and trigger parts of the camera. In addition, we report on results of laboratory measurements on real scale elements that validate the camera design and show that it is capable of matching the CTA requirements of operating up to high moonlight background conditions.

1 Introduction

The CTA, the next generation very high energy gamma-ray observatory, is a project to build two arrays of over 100 imaging atmospheric Cherenkov telescopes (IACTs) placed in two sites in the northern and southern hemispheres. The array will consist of three types of telescopes: large-size telescopes (LSTs), with \(\sim \)24 m reflector diameter and an effective light collection surface of 396 m\(^{2}\); medium-size telescopes (MSTs), with \(\sim \)12 m reflector diameter and an effective light collection surface of 100 m\(^{2}\); and small-size telescopes (SSTs), with \(\sim \)4 m reflector diameter.Footnote 1 About 70 SSTs will be installed in the Southern site, which offers the best view of the galactic plane, and will be spaced at inter-telescope distances of 200–300 m to cover an air shower collecting surface of several square kilometers. This surface allows for observation of gamma-rays with energy between about 3 and 300 TeV [1]. The single-mirror Davies–Cotton telescope (SST-1M), which is based on silicon photo-multiplier (SiPM) photodetectors and whose camera is described in this paper, is one of the proposed designs for the SSTs. The other two projects [2, 3] are dual mirror telescopes of Schwarzschild–Couder design.

The camera is a critical element of the proposed SST-1M telescope. It has been designed to address the CTA specifications on the sensitivity of the array, on the angular resolution which needs to be at least comparable to the PSF shown in Fig. 2, on the charge resolution (see Sect. 8.3) and dynamic range from 1 to about 2000 p.e., the field-of-view (FoV) of at least \(9^\circ \) for SSTs, the uniformity of the response, as well as on the maintenance time and cost. The SST-1M camera has been designed to achieve the best cost over performance ratio while satisfying the stringent CTA requirements. Its components are made with standard industrial techniques, which make them reproducible and suited for large scale production. For these reasons, the camera features a few innovative strategies in both the optical system of the photo-detection plane (PDP) and the fully digital readout and trigger system, called DigiCam.

The University of Geneva-UniGE is producing the first camera prototype and is in charge of the design and production of the PDP, its front-end electronics, the cooling system, the mechanics including the shutter and the system for the integration on the telescope structure. The Jagiellonian University and the AGH University of Science and Technology in Kraków are in charge of the development of the readout and trigger system. This prototype not only serves to prove that the overall concept can meet the expected performance, but also serves as a test-bench to validate the production and assembly phases in view of the production of twenty SST-1M telescopes.

This paper is structured as follows: the general concept of the camera is described in Sect. 2, while Sects. 3 and 4 are dedicated to more details on the design of the PDP and of DigiCam, respectively. Sect. 5 describes the cooling system and Sect. 6 the housekeeping system. Sections 7 and 8 are devoted to the description of the camera tests and validation of its performance estimated with the simulation described in Sect. 10. Section 9 describes initial plans on the calibration strategy during operation. In Sect. 11, we draw the conclusions of the results and the plans for future operation and developments.

2 Overview on the SST-1M camera design

2.1 Camera structure

The geometry of the SST-1M camera is determined by the optical properties and geometry of the telescope, as discussed in [4]. As can be seen in Fig. 1, the camera has a hexagonal shape with a vertex-to-vertex length of 1120 mm and a height of 735 mm. It weighs less than 200 kg. According to the CTA requirements, the SST optical point spread function (PSF) shall not exceed 0.25\(^\circ \) at \(4^\circ \) off-axis and the telescope must focus a parallel beam of light (over 80% of the required camera FoV of 9\(^\circ \)) with a time spread of less than 1.5 ns (rms). To achieve the required PSF with a Davies–Cotton design, a focal ratio of 1.4 is adopted for a telescope with a reflector diameter of about 4 m and consequently a focal length of 5.6 m. The hexagonal pixel size is 23.2 mm flat-to-flat and the cut-off angle is 24\(^\circ \). The cut-off angle can be achieved using light concentrators. The optical PSF, shown in Fig. 2, is obtained with ray tracing including the mirror facet geometry, the measured spot size and the focal length used. To obtain the angular resolution of the telescope, the PSF has to be convolved with the precision coming from the camera and its pixel size.
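The numbers quoted above can be cross-checked with a few lines of paraxial geometry. The following is only a sketch: it treats the telescope as an ideal system with a flat focal plane and ignores distortion, and the PDP width of 87.7 cm is taken from Sect. 3. The function name `angular_size_deg` is introduced here for illustration.

```python
import math

# Values quoted in the text
FOCAL_RATIO = 1.4               # f/D
DISH_DIAMETER_M = 4.0           # reflector diameter (approximate)
PIXEL_FLAT_TO_FLAT_MM = 23.2    # hexagonal pixel size
PDP_SIDE_TO_SIDE_MM = 877.0     # sensitive area of the PDP (Sect. 3)


def angular_size_deg(length_mm, focal_length_mm):
    """Angle subtended by a length centred on the optical axis (paraxial sketch)."""
    return math.degrees(2.0 * math.atan(0.5 * length_mm / focal_length_mm))


focal_length_m = FOCAL_RATIO * DISH_DIAMETER_M
pixel_deg = angular_size_deg(PIXEL_FLAT_TO_FLAT_MM, focal_length_m * 1000.0)
fov_deg = angular_size_deg(PDP_SIDE_TO_SIDE_MM, focal_length_m * 1000.0)

print(f"focal length : {focal_length_m:.1f} m")
print(f"pixel size   : {pixel_deg:.3f} deg")
print(f"camera FoV   : {fov_deg:.2f} deg (side-to-side)")
```

Under these assumptions a pixel subtends roughly a quarter of a degree, which is of the same order as the required PSF, and the side-to-side FoV comes out close to the required 9\(^\circ \).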

Simulations indicate that for 80% of the FoV, which corresponds to within \(4^\circ \) off-axis, the largest time spread is 0.244 ns for on-axis rays.

A CAD drawing of the camera decomposed in its elements is shown in Fig. 3.

The mechanics features an entrance window that protects the PDP (see Fig. 4) and a shutter (see Fig. 5) that provides a light-tight cover when the telescope is in parking position and also protects the camera from environmental conditions. The camera mechanics offers protection from rain showers and prevents any dust from entering, which is compliant with the international protection level of 65.Footnote 2

2.2 General concept of the camera architecture

The camera is composed of two main parts: the PDP (described in Sect. 3), based on SiPM sensors, and the trigger and readout system, DigiCam (see Sect. 4). DigiCam uses a fully digital approach, which is innovative in gamma-ray astronomy. Another example of this kind in CTA is FlashCam, the camera for the mid-size telescopes [5]. The general idea behind such a camera architecture is to digitize the signals issued by the PDP continuously and to base the trigger decision on a low resolution copy of them.

The SST-1M camera takes snapshots of all pixels every 4 ns and stores them in ring buffers. As explained in Sect. 4, the trigger system applies the selection criteria on a lower resolution copy of these data. If an event passes the selection, the full resolution data are sent to a camera server via a 10 Gbps link (this bandwidth can be shared among events of different types).Footnote 3 The camera server filters the data, reducing the event rate down to the CTA target of 600 Hz for the commissioning (300 Hz for normal operation). It also acts as the interface between the camera and the central array system. It not only ships the data to the array data stream, but also transmits information and commands to and from the central array control system (ACTL) and handles the array trigger requests.

The use of ring buffers in DigiCam allows the system to keep taking data while analyzing previous images for the trigger decision, providing dead-time-free operation at the targeted event rate of 600 Hz. The latest generation of FPGAsFootnote 4 is used to achieve the high data throughput needed to aggregate the huge amount of data exchanged within the DigiCam hardware components (see Sect. 4), to have the resources that guarantee low latency and high performance of the trigger algorithms, and to keep the flexibility for further evolution of the system.
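The ring-buffer idea can be illustrated with a minimal software sketch. It is not the DigiCam firmware, which runs in FPGAs: the class name `RingBuffer`, the buffer depth and the two toy pixels are assumptions made purely for illustration. The point is that writing never waits for the reader, and a triggered window can be read back as long as it has not yet been overwritten.

```python
class RingBuffer:
    """Fixed-depth circular store of camera snapshots (one list of pixel values per sample)."""

    def __init__(self, depth):
        self.depth = depth
        self.slots = [None] * depth
        self.n_written = 0  # total number of snapshots pushed so far

    def push(self, snapshot):
        # Overwrite the oldest slot; writing never blocks on readers.
        self.slots[self.n_written % self.depth] = list(snapshot)
        self.n_written += 1

    def read_window(self, first, length):
        """Return snapshots first .. first+length-1 if they are still stored."""
        oldest = max(0, self.n_written - self.depth)
        if first < oldest or first + length > self.n_written:
            raise IndexError("window not available in the buffer")
        return [self.slots[i % self.depth] for i in range(first, first + length)]


SAMPLE_PERIOD_NS = 4           # sampling period quoted in the text
buf = RingBuffer(depth=8)      # hypothetical depth, far smaller than a real buffer

for sample in range(20):       # keep acquiring while a trigger decision is pending
    buf.push([sample, sample + 100])   # two toy pixels per snapshot

# A trigger decision pointing at sample 14 arrives late, but the data are still there.
window = buf.read_window(first=14, length=4)
print(window, f"spanning {len(window) * SAMPLE_PERIOD_NS} ns")
```

The buffer depth sets the maximum latency the trigger decision may have before the corresponding samples are lost.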

The trigger logic is based on pattern matching algorithms which guarantee flexibility as different types of events (gamma, protons, muons, calibration events, etc.) produce different patterns, and the data can be triggered and flagged accordingly. The main advantage of such a feature is that the event flagging does not have to be performed later by the camera server and therefore it saves resources for the other operations such as on-line calibration and data compression.

2.3 SiPM sensors in the SST-1M camera

The use of SiPM technology is quite recent in the field of gamma-ray astrophysics and is an important feature of the SST-1M camera. Currently, FACT is the first, and so far the only, telescope operating a SiPM-based camera in the field [6]. It is very similar in dimensions to the SST-1M telescope but has half its FoV. SiPMs offer many advantages with respect to the traditional photo-multiplier tubes (PMTs), such as negligible ageing, insensitivity to magnetic fields, cost effectiveness, robustness against intense light and a considerably lower operating voltage. For the CTA SSTs, the capability of SiPMs to operate at high light levels without any ageing implies that data can also be taken with intense moonlight [7], increasing the telescope duty cycle and hence improving the discovery potential and sensitivity in the high-energy domain. The SST-1M camera will use a largely improved SiPM technology compared to the one used in FACT, which dramatically reduces the cross-talk while the fill factor is not much affected (see Sect. 3.3).

A feature of the SST-1M camera design is that the sensors are DC coupled to the front-end electronics, while the other SST solutions [2, 3] are AC coupled. With DC coupling, shifts in the baseline due to changes in the intensity of the night sky background (NSB) and of the moonlight can be measured and used to monitor such noise pixel-by-pixel. This information can be used by the entire array to monitor the stray light environmental noise.

Another innovative feature of the camera concerns the stabilization of the SiPM working point. The breakdown voltage of the sensor depends strongly on temperature. For the sensors used in the SST-1M camera prototype, the breakdown voltage varies with temperature with a coefficient of typically 54 mV/\(^\circ \)C, which was measured on a set of sensors as described in Sect. 3.3. If no countermeasures are taken, the sensors within the PDP operate at different gains, the gain being the conversion factor of charge into the number of p.e.s. The gain can change due to temperature variations in time and can be different between pixels due to temperature gradients within the PDP (see Sect. 26). These effects would lead to a non-uniformity of the trigger efficiency in time and across the camera. Since the sensors are operated at an average of 2.8 V over-voltage,Footnote 5 which is the operational voltage suggested by the manufacturer, this would imply a gain variation of 2%/\(^\circ \)C.Footnote 6 A stabilization of the sensor working point has therefore been developed and is described in Sect. 3.4.
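The quoted 2%/\(^\circ \)C follows directly from the two numbers above if one assumes, as is common for SiPMs, that the gain is proportional to the over-voltage. A short sketch of the arithmetic (the function name is introduced here for illustration, and only the magnitude of the effect is computed):

```python
K_MV_PER_DEGC = 54.0    # breakdown-voltage temperature coefficient quoted in the text
OVERVOLTAGE_V = 2.8     # average operating over-voltage quoted in the text


def relative_gain_change_per_degc(k_mv_per_degc, overvoltage_v):
    """Magnitude of the fractional gain change per degree at fixed bias voltage.

    Assumes gain proportional to over-voltage, so a shift k*dT of the
    breakdown voltage changes the gain by k*dT / V_ov.
    """
    return (k_mv_per_degc * 1e-3) / overvoltage_v


drift = relative_gain_change_per_degc(K_MV_PER_DEGC, OVERVOLTAGE_V)
print(f"gain drift at fixed bias: {100.0 * drift:.2f} % per degree C")
```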

3 The design and production of the PDP

The PDP (see Fig. 4) has 1296 pixels, distributed in 108 modules of 12 pixels each. The PDP has a hexagonal sensitive area of 87.7 cm side-to-side and weighs about 35 kg. Its mechanical stability is provided by an aluminium backplate, to which the modules are screwed. The backplate also serves as a heat sink for the PDP cooling system (see Sect. 5). A drawing and a photograph of a single module are shown in Fig. 6.

The pixels are formed by a hexagonal hollow light-funnel with a compression factor of about 6 coupled to a large area, hexagonal SiPM sensor [4]. A pixel design is shown in Fig. 7. The sensor has been designed in collaboration with HamamatsuFootnote 7 to reach the desired size. The hexagonal shape has been preferred to facilitate the industrial manufacture of the light guides. Moreover, for an easy implementation of selection algorithms based on the recognition of circular, elliptical or ring-shaped patterns (the latter being characteristic of muon events), it is desirable that the trigger operates in fully symmetrical conditions. The hexagonal shape provides such a feature, since the center of each pixel is at the same distance from the centers of all its neighbors, and it minimizes the dead space between pixels.
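The symmetry argument can be made concrete with a small sketch. It assumes an ideal, gap-free hexagonal tiling in which the centre-to-centre spacing equals the flat-to-flat pixel size of 23.2 mm; the axial-coordinate convention and the function names are choices made here for illustration, not the camera's actual pixel numbering.

```python
import math

PITCH_MM = 23.2  # flat-to-flat size, taken as centre-to-centre spacing (ideal tiling)

# The six neighbours of a pixel in axial coordinates (q, r)
AXIAL_NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]


def hex_center(q, r, pitch=PITCH_MM):
    """Cartesian centre (mm) of the pixel with axial coordinates (q, r)."""
    x = pitch * (q + 0.5 * r)
    y = pitch * (math.sqrt(3) / 2.0) * r
    return x, y


def neighbour_distances(q, r):
    """Distances (mm) from pixel (q, r) to the centres of its six neighbours."""
    origin = hex_center(q, r)
    return [math.dist(origin, hex_center(q + dq, r + dr)) for dq, dr in AXIAL_NEIGHBOURS]


distances = neighbour_distances(3, -2)
print([round(d, 3) for d in distances])
```

All six distances are equal. On a square grid, by contrast, the four diagonal neighbours sit a factor \(\sqrt{2}\) further away than the four edge neighbours, so a neighbour-based trigger would not treat all directions alike.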

The PDP also includes the front-end electronics which, due to space constraints, is implemented in two separate printed circuit boards (PCBs) in each module. The front-end electronics boards – the pre-amplifier board and the slow control board (SCB) – are introduced in Sect. 3.4 and are described in detail in Ref. [8]. The former has been specifically realized to handle the signals arising from the large area (hence large capacitance) sensors; the latter serves to manage the slow control parameters of each sensor (such as the bias voltage and the temperature) and to stabilize its operational point. The design of the two boards has been driven by the need for low-noise, high-bandwidth and low-power front-end electronics. Cost minimization has also been taken into account, reaching \(\sim \)100€ per pixel (including the cost of the sensors) in the production phase of 20 telescopes. In what follows the different components of the PDP are described in detail.

3.1 The entrance window

The main protection of the PDP against water and dust is provided by an entrance window made of a 3.3 mm thick Borofloat layer. Borofloat was chosen over PMMA because of its better mechanical rigidity. PMMA has good UV transmittance down to 280 nm (310 nm for Borofloat). Nonetheless, a rigid enough PMMA window would require a thickness of 6–8 mm, hence absorbing too much incoming light, while finite element analysis (FEA) studies indicate that 3.3 mm is sufficient for Borofloat. Given that the photo-detection efficiency of the selected sensors degrades significantly for wavelengths below 310 nm, it was decided to adopt the Borofloat solution. Sensitivity below this wavelength is not required to detect the Cherenkov light from the part of the shower that is significant for reconstruction.

The outer side of the entrance window is coated with an anti-reflective layer to reduce Fresnel losses. The inner side is coated with a dichroic filter cutting off wavelengths above 540 nm. The coating of the window is a delicate procedure given its large surface. In order to obtain a uniform result, a large enough coating chamber is required. The only company offering such a possibility, among those we explored, is Thin Film PhysicsFootnote 8 (TFP).

The first produced window is shown in Fig. 8.

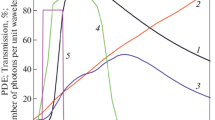

Top: the Cherenkov light spectrum (blue solid line) and the CTA reference night sky background spectrum (green solid line) for dark nights. For comparison, the photo-detection efficiency (PDE) of the sensors is indicated by the red solid line, and its convolution with the wavelength filter due to the window and the light concentrator transmittance is shown by the dashed red line. Bottom: signal-to-noise ratio as a function of the wavelength, showing a maximum at about 540 nm

As a reference, at the top of Fig. 9 the Cherenkov spectrum (blue line, calculated for showers at 20\(^\circ \) zenith angle and detected at 2000 m above sea level) and the CTA reference NSB (green line) spectrumFootnote 9 are compared to the PDE of the sensors (red line) and its convolution with the wavelength filter (red dashed line) on the window and the light concentrator transmittance (see next section); in the bottom panel of the figure, the SNR of the window is shown. Cutting the long wavelengths is more important for SiPMs than for PMTs since the SiPMs have higher sensitivity in the red and near infrared where the NSB is larger. The intense NSB peaks at wavelengths larger than 540 nm are cut away by the filter layer on the window as shown by the red dashed line in Fig. 9 (top).

3.2 The hollow light concentrators

Light guides are often used in gamma-ray telescope cameras to focus light from the pixel surface onto sensors of smaller area with good efficiency, and to reduce the contamination by stray light coming from the NSB and from reflections of light on the terrain, snow, etc. The light guide design has to be the closest possible to the ideal Winston cone, whose efficiency is maximum up to a sharp cut-off angle which depends on the f/D ratio of the telescope (f and D being the focal length and the reflector diameter, respectively) and on the FoV [4].

The light funnel design was optimized by using the Zemax optical and illumination design software [12]. To maximize the collection efficiency of the cone (that is, the amount of outgoing light with respect to the amount of incoming light) the design of the funnel inner surface has been optimized using a cubic Bézier curve [13].

Two possible light guide designs have been investigated: full cones and hollow cones. The calculations show that full cones in a material of the same optical properties as the camera window would provide a better compression factor (14.1, compared to 6.1 for the hollow cones). However, they would be more elongated (53.3 mm, while the hollow cones are 36.7 mm long), and would therefore absorb a higher fraction of the light than hollow cones below 420 nm [14]. A solution would be to reduce the pixel size, so that the length of the full cone would reduce accordingly. However, this would increase considerably the number of channels (and hence the cost) of the camera. Moreover, since the PSF is fixed by the telescope optics, there is no advantage in reducing the pixel size.

Therefore, the adopted solution has been the hollow light concentrator. A drawing of the light guide is shown in Fig. 7. The light is collected from an entrance hexagonal surface of 23.2 mm side-to-side linear size and focused onto an exit hexagonal surface of 9.4 mm side-to-side linear size. The cut-off angle is around 24\(^\circ \).
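The compression factor of about 6.1 quoted for the hollow cones is consistent with the ratio of the entrance and exit areas. A short check, using the standard area formula for a regular hexagon of flat-to-flat width \(d\), \(A = (\sqrt{3}/2)\,d^2\) (the function name is introduced here for illustration):

```python
import math


def hexagon_area_mm2(flat_to_flat_mm):
    """Area of a regular hexagon from its flat-to-flat (side-to-side) width."""
    return (math.sqrt(3) / 2.0) * flat_to_flat_mm ** 2


entrance_area = hexagon_area_mm2(23.2)   # entrance aperture quoted in the text
exit_area = hexagon_area_mm2(9.4)        # exit aperture quoted in the text
compression = entrance_area / exit_area

print(f"entrance area : {entrance_area:.1f} mm^2")
print(f"exit area     : {exit_area:.1f} mm^2")
print(f"compression   : {compression:.2f}")
```

Since both apertures are regular hexagons, the ratio reduces to \((23.2/9.4)^2\).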

In line with the overall camera design philosophy, the production strategy of the cones has been conceived to be cheap, reproducible and scalable, while delivering a high quality product that can be tested on a subset of samples prior to assembly on the camera structure. The cone substrate is produced by Viaoptic GmbHFootnote 10 in black polycarbonate (MAKROLON 2405 SW 901510) using plastic injection molding, a well established mass production technique, followed by cleaning and coating. The Bézier shape eases the manufacturing process since computer-numerical-control machines typically use this format. While the stringent precision on the geometry (shape and size with tolerance <40 \(\upmu \)m) of the half-cone substrate was met, the requirements on the roughness (<50 nm) of the inner surface were met through a dedicated optimization and polishing of the injection mould. This part is critical, since the overall reflectivity of the cone is driven by the smoothness of the reflective surfaces [15], while the coating’s role is to modify and/or enhance it. The coating technique, similar to sputtering, also required some development in collaboration with TFP and BTE.Footnote 11 Methods for the deposition of reflective layers on plastic are well established for flat surfaces, but in the case of the highly curved surfaces of the light concentrators such techniques are more difficult and required a dedicated optimization.

The cones are produced in two halves, that are coated separately and later on glued together (see Fig. 10) in jigs shown at the bottom of the figure. The assembly time of the camera elements is affected by the drying time of the glue of about 6 hours. To make the assembly process faster the number of jigs can be increased.

Top: the transmission curve versus incidence angle on the light guide entrance surface for the reference TFP curve (red line) and for cones which underwent 10, 20, 30 and 40 thermal cycles in the range \(-15\) to \(35\,^{\circ }\)C at constant (low) humidity levels. The reference TFP curve is obtained by averaging 42 cones, chosen randomly from the production of 1300 cones. Differences are smaller than the precision of the measurement setup. Bottom: difference between the measured transmittance of cones after temperature cycles and the reference TFP curve in the upper plot

The overall optimization of the cone design required multiple production campaigns followed by dedicated measurements of the cone transmittance versus the incident angle of light. For each production batch, an ellipsometric evaluation of the coating on flat samples at the factory is followed by laboratory measurements at UniGE on a set of assembled cones, with a dedicated test setup that allows the reflection efficiency of a single cone to be measured in about half an hour and compared with the one expected from simulations. Since individual testing of all the cones is too time consuming, assessments can be made only on samples of the produced cones. The high uniformity of the substrates is guaranteed by the producer thanks to the coating in chambers large enough to contain all cones and with high uniformity of sputtering.

The measuring set up and the Zemax simulation are fully described in [4], where an agreement of the order of 2% on the transmittance, comparable to the estimated systematic error of the measurement, is shown. The transmittance of the measured cones for an angle of incidence of \(16^{\circ }\) on the entrance surface (which corresponds to the incidence angle that produces the maximum of the distribution of the light reflected on the telescope mirror) is about 88–90%. This value does not include the absorption by the entrance window of the camera. BTE and TFP cones have shown negligible performance degradation after thermal cycles (within the 2% measurement systematic errors). In Fig. 11 the average of the transmittance function of 42 cones is shown and compared to the transmittance of cones which underwent different numbers of thermal cycles.

3.3 The SiPM sensors

A picture of the hexagonal sensor is shown in Fig. 12. With its 93.56 mm\(^2\) sensitive surface, this device is one of the largest monolithic SiPMs produced. Since such large area hexagonal shaped sensors were not yet commercially available, the device was designed and produced in collaboration with Hamamatsu. The first version was named S12516(X)-050. It was followed by the version used for the camera, called S10943-3739(X), which employs the Hamamatsu low cross-talk technology.Footnote 12 This allows for operation at a higher over-voltage than with the S12516(X)-050 sensors, translating into a higher PDE and an improved signal-to-noise ratio (SNR), which is especially relevant for the detection of few photons.

The SiPM is a matrix of 36,840 square microcells of 50 \(\upmu \)m size (see Fig. 13). The main drawback of large area sensors with respect to smaller sensors is their high capacitance (3.4 nF), which induces long signals. For the hexagonal sensors, signals last of the order of 100 ns, a value which does not fit within the 80 ns integration window required by CTA. To reduce the capacitance, the 36,840 cells are grouped into four channels of 9210 cells each, with a capacitance of 850 pF each.
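The channel figures can be reproduced with simple arithmetic, assuming that the capacitance scales with the number of microcells, so that four equal groups each carry a quarter of the total. The comparison of the summed microcell area with the 93.56 mm\(^2\) sensitive surface is only a rough consistency check.

```python
N_MICROCELLS = 36840           # quoted in the text
CELL_SIZE_UM = 50.0            # microcell size quoted in the text
N_CHANNELS = 4
TOTAL_CAPACITANCE_NF = 3.4     # quoted in the text

cells_per_channel = N_MICROCELLS // N_CHANNELS
# Assumption: capacitance is proportional to the number of cells in a group.
capacitance_per_channel_pf = TOTAL_CAPACITANCE_NF * 1e3 / N_CHANNELS
# Total area covered by the microcells, in mm^2
microcell_area_mm2 = N_MICROCELLS * (CELL_SIZE_UM * 1e-3) ** 2

print(f"cells per channel       : {cells_per_channel}")
print(f"capacitance per channel : {capacitance_per_channel_pf:.0f} pF")
print(f"summed microcell area   : {microcell_area_mm2:.1f} mm^2")
```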

Although the SiPM production technique is well established, it has been important to characterize the hexagonal large surface device thoroughly to ensure that it meets the expected performance.

Top: dark noise per unit area at two different p.e. thresholds for the first batch of sensors received (red curves) and the selected low cross-talk technology sensors (blue curves). The operation over-voltage of the camera will be around 2.8 V. Middle: optical cross-talk versus over-voltage for the two sensor types. Bottom: optical cross-talk versus PDE for the two types of sensors. The saturation of the PDE is visible in the S10943-2832-050 sensors data. Where only the room temperature was known at the time of the measurement, the temperature of the sensor was evaluated afterwards (35 \(^{\circ }\)C) and the overvoltage values were corrected accordingly. A summary of all measurements of the first batch custom sensors is in Ref. [16]

As an example, the measured PDE, dark count rate and cross-talk of the sensors are shown in Fig. 14. At the time of these measurements, a system to monitor the temperature was not available and no cooling was implemented. Later evaluations showed that the sensors had been operated at an average temperature of 40\(^\circ \)C. The overvoltage values quoted in Fig. 14 were corrected accounting for the difference between the room temperature and the actual temperature of the sensor. As a result, the values measured for the dark count rate are appreciably higher than the ones that will be presented later on in Sect. 7, for which the sensors were operated at around 20\(^\circ \)C. However, these results still provide a valid comparison between the two sensor types, since the measurement conditions were the same for both. A future publication will discuss these measurements extensively; a previous one discussed the properties of the first version of the sensor [16].

Top: correlation between the operational voltage provided by Hamamatsu and the breakdown voltage measured at UniGE for 42 sensors. Middle: \(\Delta V\) is the residual of \(V_{break}\) measured at \(25 ^\circ \)C for each of the four channels of a sensor with respect to their average value. The spread varies between 10 and 140 mV and a Gaussian fit of the distribution returns a standard deviation of 30 mV. Bottom: spread in the pixel’s channel gain determined with respect to the average per sensor operated at the \(V_{ov}\) provided by Hamamatsu. The RMS of the distribution is 3% and the Gaussian fit returns a \(\sigma = 1.2\%\). Outliers of the distribution are about 20 channels beyond the 5% variation belonging to 13 channels of 42 sensors (4 channels each)

As for the light concentrators, testing each SiPM in the laboratory was not feasible. Hence, our strategy has been to characterize a sub-sample of the sensor total production to verify the reliability of the Hamamatsu data-sheets. A dedicated measurement campaign has thus been carried out to measure the basic functional properties and the values of the main parameters on some sensors: I–V curves, optical cross-talk, dark-count rate, gain, breakdown voltage, PDE and pulse shape analysis.

In particular, from the measurement of the I–V characteristics the breakdown voltage and the quenching resistance can be extracted. It has been verified that the information in the Hamamatsu data-sheets on the operational voltage is well correlated with our measurements of the breakdown voltage for 42 sensors, as shown in Fig. 15-top. In that case, the breakdown voltage is measured for each of the 4 channels. Because the aim is to observe a correlation of the measured breakdown voltage with the data sheet value, other measurement methods were not considered. The systematics on the static measurement of the breakdown voltage using the tangent method on the I–V curve are of the order of 0.05 V. The conclusion of this campaign was that the sensors’ homogeneity is high and that the values of the operational voltage at a fixed gain (of \(7.5 \times 10^5\) for the first type of sensors and \(1.6 \times 10^6\) for the second type) provided by Hamamatsu are highly reliable. As a matter of fact, their suggested operational voltage is the best working point in terms of compromise between the PDE, the cross-talk and the dark count rate. As visible in Fig. 14, while the PDE saturates, the cross-talk and dark count rate continue to increase with increasing over-voltage. Moreover, the main parameter values of the custom designed sensors correspond to the ones expected by extrapolation from smaller area devices, which indicates that the large area hexagonal sensors are in fact performing as a conventional (smaller area, square) SiPM for surface-independent parameters.

In the Hamamatsu data sheets, the value of the operational voltage at fixed gain is reported for each of the four channels of a sensor. Nonetheless, the four channels share a common cathode, which implies that only one bias voltage can be applied per sensor, rather than an individual bias per channel. This feature affects the gain spread within one sensor but not the gain spread across the photo-detection plane. Therefore, it has been necessary to check the differences between the breakdown voltages of the four channels. The typical breakdown voltage spread between channels in a sensor was less than 300 mV, that is about 0.5% of the typical bias voltage of around 57 V. Figure 15-middle shows the residual of \(V_{break}\) for each of the four channels of a sensor, that is, the difference between the bias voltage that a single channel would require and the average bias voltage that is applied. This is the main parameter affecting the gain uncertainty (see Fig. 15-bottom) and hence the charge resolution of the camera. In Sect. 8.3 it will be shown that, indeed, this feature does not have relevant consequences on the single photon sensitivity and charge resolution of the sensors. Simulations indicate that for a Gaussian distribution of the \(V_{break}\) residuals with \(\sigma /\mu = 5\%\), the charge resolution is inside the CTA required values (see Sect. 8.3). Consequently, a specification value for Hamamatsu has been estimated in order to reject sensors with channel spreads \(\Delta V > 300\) mV.
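To see how a breakdown-voltage residual translates into a gain offset under a common bias, one can again assume that the gain is proportional to the over-voltage. The sketch below is an illustration under that assumption, not the analysis used for Fig. 15; the function name and the chosen residuals are introduced here.

```python
OVERVOLTAGE_V = 2.8   # average operating over-voltage quoted in Sect. 2.3


def relative_gain_offset(delta_v_break_mv, overvoltage_v=OVERVOLTAGE_V):
    """Fractional gain deviation of a channel whose breakdown voltage lies
    delta_v_break_mv above the sensor average, for a common bias voltage.

    Assumes gain proportional to over-voltage: a higher breakdown voltage
    means a lower over-voltage and therefore a lower gain.
    """
    return -(delta_v_break_mv * 1e-3) / overvoltage_v


for residual_mv in (30.0, 140.0, 300.0):
    offset = relative_gain_offset(residual_mv)
    print(f"residual {residual_mv:5.0f} mV -> gain offset {100.0 * offset:+.1f} %")
```

With these assumptions a 30 mV residual corresponds to a gain offset of about 1%, of the same order as the fitted gain spread quoted in the caption of Fig. 15, while a residual of 140 mV corresponds to 5%.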

The high number of microcells in the sensor provides a high dynamic range of the collected light. Measurements have demonstrated that, for the foreseen light intensities (up to a few thousand photons per pixel at most, see simulation results in Sect. 10.3), the deviation from linearity is negligible. What could affect this feature is the presence of the non-imaging light concentrators, which results in a non-homogeneous distribution of the incoming light onto the sensor surface (see Ref. [4]). However, studies on ray-tracing simulations and preliminary measurements have shown that the effect is negligible.

Each sensor has an NTC probeFootnote 13 in the packaging, which is used by the front-end electronics to read out the instantaneous temperature of the device. This information is used by the PDP slow control system to stabilize the working point of individual sensors as a function of temperature (see Sect. 3.4). Employing a climatic chamber, the breakdown voltage as a function of temperature was studied, which allowed us to verify that the relation is linear with a slope of 54 mV\(/^\circ \)C, close to the value provided by Hamamatsu.

3.4 The front-end electronics

The need to operate many large area SiPMs within the compact PDP structure has posed a few challenges in the design of the front-end electronics. Due to space constraints, this has been implemented in two levels, so that each 12 pixel module is provided with a pre-amplifier and a slow control board. Both boards have been designed to use low-power and low-cost components. A full description of the front-end is reported in Ref. [8].

The pre-amplifier board (see Fig. 16) holds together the 12 pixels of a module and implements the amplification scheme shown in Fig. 17.

Due to space, power and cost constraints, it was not possible to provide each of the four channels of a sensor with a low-noise amplifier. As a solution, the signals from the four channels are summed via two low-noise trans-impedance amplification stages followed by a differential output stage. The values of the parameters of this circuitry have been fine-tuned (through simulations validated by measurements) as a compromise between gain and bandwidth, to achieve well behaved pulses over the full dynamic range.

The pulse shapes produced by the pre-amplifier are shown in Fig. 18 for increasingly high light levels. The system provides a linear response up to around 750 photons, after which saturation occurs. Despite this loss of linearity, it will be shown in Sect. 8.3 that signals of up to a few thousand photons (that is, the range foreseen for the SSTs) can be reconstructed with a resolution that is still well within the CTA requirements.

A peculiarity of the pre-amplification scheme is the DC coupling of the sensor to the pre-amplifier, which makes it possible to measure the NSB directly during observation on a per-pixel basis. In fact, the NSB is expected to be of the order of 20–30 MHz per pixel in dark nights, reaching up to 600 MHz in half-moon nights at 6\(^\circ \) off-axis, considerably higher than the sensor dark noise rate of a few MHz (see Fig. 14). As a net effect, the signal baseline position shifts towards higher values as a function of the NSB level. Therefore, thanks to the DC coupling, the NSB can be estimated by measuring the position (in addition to the noise) of the baseline. The capability of measuring the NSB could be used to keep the trigger rate constant, and this feature can be implemented in DigiCam thanks to its high flexibility (see Sect. 4).

The SCB (see Fig. 19) has a more complex design than the pre-amplifier board and features both analog and digital components. Its functions are:

- to route the analog signals from the pre-amplifier board to DigiCam via the three RJ45 connectors,

- to read and write the bias voltages of the 12 sensors individually,

- to read the 12 NTC probes encapsulated in the sensors,

- to stabilize the operational point of the sensors,

- to allow the user to retrieve the high-voltage and temperature values, as well as the values of the various functional parameters, via a CAN bus interface.

Stabilization of the sensor’s operational point is a key feature of the camera design. The breakdown voltage variation with temperature has been measured on a few sensors in a climatic chamber by extracting the breakdown voltage from the I–V characteristics at different temperatures. Figure 20 shows an example of I–V curves measured at different temperatures. The results provide a coefficient k of the order of 54 mV/\(^\circ \)C, very close to the value in the datasheet of 56 mV/\(^\circ \)C. Temperature variations produce gain changes and gain non-uniformities across the pixels. A stabilization of the working point is thus necessary, and can be achieved, in principle, in two ways: either by maintaining the temperature constant or by actively adapting the bias voltage according to the temperature variations, in order to keep the over-voltage constant. The implementation of a precise temperature stabilization system was challenging and would have been costly due to the complexity of the camera and the heterogeneity of the different heating sources. Therefore, the choice has been to build a water cooling system (described in Sect. 5) that keeps the temperature within the specified operation range during observation (between \({-15}^\circ \)C and +25\(^\circ \)C), and to use a dedicated correction loop, implemented in a micro-controller on the SCB, to compensate for temperature variations at the level of single pixels. In the compensation loop, the NTC probe of each sensor is read at a frequency of 2 Hz and, according to a pre-calibrated look-up table, the bias voltage of individual sensors is updated at a frequency of 10 Hz to compensate the working point for temperature variations of less than 0.2 \(^\circ \)C. With such a system, the over-voltage of each sensor is kept stable, as well as the gain and the PDE. 
This concept was successfully proven by FACT [6], with a lower number of temperature sensors (31 distributed homogeneously over the PDP and read every 15 s [17]). A detailed description of the compensation loop of the SCB is reported in Ref. [8].
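The principle of the bias compensation can be illustrated with a minimal sketch. It assumes a linear breakdown-voltage model with the measured coefficient of 54 mV/\(^\circ \)C; the reference temperature, reference breakdown voltage and over-voltage used below are hypothetical placeholders, and the SCB itself relies on a pre-calibrated look-up table rather than on this formula.

```python
K_MV_PER_DEGC = 54.0  # measured temperature coefficient quoted in the text

def compensated_bias(temp_c, v_bd_ref, t_ref_c, over_voltage):
    """Bias voltage (V) that keeps the over-voltage constant at temp_c.

    v_bd_ref, t_ref_c and over_voltage are hypothetical calibration inputs.
    """
    # Linear model: the breakdown voltage follows the sensor temperature.
    v_bd = v_bd_ref + K_MV_PER_DEGC * 1e-3 * (temp_c - t_ref_c)
    return v_bd + over_voltage

# Illustrative numbers only: a 5 degC rise requires 0.27 V more bias.
v_cold = compensated_bias(20.0, v_bd_ref=54.0, t_ref_c=20.0, over_voltage=3.0)
v_warm = compensated_bias(25.0, v_bd_ref=54.0, t_ref_c=20.0, over_voltage=3.0)
print(round(v_warm - v_cold, 3))
```

In this picture a temperature step of 0.2 \(^\circ \)C corresponds to a bias correction of about 11 mV.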

As a design validation test of the front-end hardware, the electronic cross-talk of a full module (cones + sensors + pre-amplifier board + SCB) has been measured. A single pixel has been biased and set in front of a calibrated LED source, while all the other pixels have been left unbiased, and the signal induced on these pixels was measured. The results of the test show a very low level of electronic cross-talk. Small induced pulses on pixels sharing the same connector as the illuminated pixel, corresponding to a signal between 1 and 2 p.e.s, can be observed only when around 3000 p.e.s are injected in the illuminated pixel. Although the effect is negligible, in a future re-design of the front-end boards the electronic cross-talk could be further minimized (if not eliminated) by a more appropriate choice of these connectors.

To qualify the electronic components of the camera, standard industrial techniques have been developed in house, where dedicated electronic boards, test setups, firmware and software have been produced. The design of both the pre-amplifier board and the SCB has been accompanied by the parallel development of PCBs designed to perform a full functional test of the two boards at the production factory. Test setups and analysis software have been developed in order to provide a user friendly interface that could be used by the operators at the factory to assess the quality of the production prior to the shipping of the boards. The functional test automatically produces a report and uploads it to the web for easy real-time monitoring of the progress. In the case of the SCB, the functional test also performs a first calibration, which is then completed in the laboratory in Geneva.

The results of the functional test allow us to establish the overall quality of the production. A typical example is presented in Figs. 21 and 22. Figure 21 shows the distribution of the measured gains of the pre-amplifiers over a full batch of pre-amplifier boards. The 0.5% relative dispersion demonstrates the high homogeneity of the production and of the components of the boards. In Fig. 22, the distribution of the residual on the applied sensor bias voltage, with respect to the one measured by the slow control closed loop, gives a value of (\(2.3\pm 1.9\)) mV, very small when compared to the typical values of the bias voltage, of the order of 57 V. For more details, and for results of the first tests of the compensation loop, see Ref. [8].

4 The DigiCam readout and trigger electronics

DigiCam is the fully digital readout and trigger system of the camera using the latest field programmable gate array (FPGA) for high throughput, high flexibility and dead-time free operation. Here, we summarize the relevant features of DigiCam, but for a complete overview see Ref. [18].

The DigiCam hardware consists of 27 digitizer boards and three trigger boards (see Fig. 23) arranged in three micro-crates (see Fig. 24), each containing nine digitizer boards and one trigger board.

From the point of view of the readout, the PDP is divided into three logical sectors (432 pixels, 36 modules, see Fig. 25), each connected to one micro-crate. The three micro-crates are connected with each other through the three trigger boards. Data are exchanged between crates in order for the trigger logic to be able to process images where Cherenkov events have been detected at the boundary between two (or all three) sectors. One of the trigger boards is configured as master, with the function of receiving the signals used for the trigger decision and the corresponding data of the selected images from the slave boards and of sending them to the camera server.

The analog signals from 4 modules (48 pixels) of the PDP are transferred to a single digitizer board via standard RJ45 ethernet CAT5 cables, where the signals are digitized at a sampling rate of 250 MHz (4 ns time steps) by 12-bit fast analog to digital converters (FADCs). ADCs from both Analog Devices, Inc, and Intersil have been tested in order to verify possible performance and cost benefits. Preliminary results are given in Sect. 8.3. The 250 MHz sampling rate has been proven to be adequate for a sufficiently precise photo-signal reconstruction already by FlashCam [18], which deals with PMT signals that are faster than the SST-1M camera SiPM signals (for which, therefore, the sampling is more accurate).

The digitized samples are serialized and sent in packets through high speed multi-Gbit serial digital GTX/GTH interfaces to the Xilinx XC7VX415T FPGA, where they are pre-processed and stored in the local ring buffers.

The data from the 9 FADC boards of a micro-crate are copied and sent to the corresponding trigger board, where they are stored in 4 GB external DDR3 memories. Considering only the trigger board level, i.e. without accounting for the entire readout chain, events can be stored for 154 s at a trigger rate of 600 Hz and for 46 s at 2 kHz before being read out. This calculation assumes an event size of 43.2 kB.
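The storage times quoted above can be checked with a few lines of arithmetic. The sketch below assumes that the 4 GB memory corresponds to \(4\times 10^{9}\) bytes and that the full memory is available for event data; both are assumptions made only for this check.

```python
MEMORY_BYTES = 4e9          # 4 GB DDR3, taken as 4 x 10^9 bytes (assumption)
EVENT_SIZE_BYTES = 43.2e3   # 43.2 kB per event, as stated in the text

def storage_time_s(trigger_rate_hz):
    """Seconds of events the trigger-board memory can hold at a given rate."""
    n_events = MEMORY_BYTES / EVENT_SIZE_BYTES
    return n_events / trigger_rate_hz

for rate_hz in (600.0, 2000.0):
    print(rate_hz, round(storage_time_s(rate_hz), 1))
```

With these inputs the memory holds about \(9.3\times 10^{4}\) events, which gives the 154 s and 46 s figures at 600 Hz and 2 kHz respectively.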

In order to reduce the size of the data received and processed by the trigger card, the digitized signals are first grouped in sets of three adjacent pixels (called triplets) and re-binned at 8 bits.

The trigger board features a Xilinx XC7VX485T FPGA where a highly parallelized trigger algorithm will be implemented. The algorithm is applied within the PDP sector managed by the micro-crate, plus the neighboring pixels from the adjacent sectors, whose information is shared thanks to the intercommunication links between the three trigger boards via the backplane of the micro-crate. The trigger decisions are taken based on the recognition of specified geometrical patterns among triplets over threshold in the lower resolution copy of the image. A high flexibility is ensured in the implementation of different trigger algorithms for the recognition of multiple pattern shapes (e.g. circles and ellipses for gamma-ray events and rings for muon events) without significantly increasing the level of complexity. If an event is selected, the corresponding full resolution data stored in the digitizer boards are sent to the central acquisition system of the telescope by the master trigger card via a 10 Gbps Ethernet link.

As for the front-end electronics, testing hardware and protocols have also been developed for DigiCam. They are used to check the internal communication and the proper functioning of the FADCs, the latter by injecting test pulses.

5 The cooling system

The camera will need about 2 kW of cooling power, of which about 500 W will be needed by the PDP (0.38 W per channel) and about 1200 W by DigiCam, the rest being dissipated by auxiliary systems within the camera structure, such as the power supplies. The challenge in the design of the cooling system has been the necessity of efficiently extracting the dissipated heat from such a compact camera while complying with the IP65 insulation requirement. Such a demand rules out the possibility of using air cooling, and a water-based cooling system has been adopted as a solution, to extract the heat from both the PDP and DigiCam.

5.1 PDP cooling

The PDP is cooled by a constant flow of cold water, mixed with glycol to prevent the coolant from freezing, which keeps the temperature at around 15–20 \(^\circ \)C. Fluctuations in the temperature of individual sensors, which translate into fluctuations of their operational point, are managed by the compensation loop of the slow control system as described in Sect. 3.4. The water is cooled to around 7 \(^\circ \)C by a chilling unit installed outside the camera on the telescope tower head. The liquid flows through aluminium pipes that are connected to the backplate of the PDP via aluminium blocks (see Fig. 26). The backplate itself thus acts as a cold plate for the whole PDP. The contact between the backplate and the front-end electronics boards (the pre-amplifier board and the SCB) is realized via the four mounting screws of each module, which act as cold fingers.

To homogenize the heat distribution over the surface of the two electronics boards, both PCBs have been realized with a thicker copper layer (72 \(\upmu \)m instead of the conventional 18 \(\upmu \)m). Furthermore, a thermally conductive material (TFLEX 5200 from LAIRD technologies) is inserted between the two boards and between the full module and the backplate.

Figure 27 shows an FEA calculation of the temperature distribution over the 1296 pixels during operation of the cooling system with water at 7 \(^\circ \)C.

The concept has been validated on a mock-up of the PDP with 12 of the 108 modules installed on a size-reduced PDP mechanical structure (see Figs. 28, 29).

Results of the PDP cooling tests on a 1:10 mock-up of the PDP. The comparison between data and the FEA calculation is shown for groups of pixels belonging to different sections (1, 2, 3, 4, 5) along the surface, as shown in the bottom right plot. The agreement between data and simulation is good. The discrepancy that is visible in the first 50 mm along direction 3 (top right plot) is due to the fact that in the actual setup the cooling pipe was locally touching the backplane, which is not accounted for in the FEA.

The results of the test are presented in Fig. 30.

5.2 DigiCam cooling

Due to the compact design of the micro-crates, the DigiCam boards cannot be cooled with standard water pipes, so they are cooled with heat pipes. The mechanics of the DigiCam cooling system are shown in Fig. 31. Metal blocks act as heat exchangers by connecting the heat pipes to the water cooling pipes. Two heat pipes, each capable of absorbing 25 W, are connected to each digitizer and trigger board, in contact with the FADCs of the former and the FPGAs of both.

The efficiency of the heat pipes is influenced by gravity, since the return of the coolant liquid is usually produced via capillarity or gravity itself. In the mechanical design of the camera structure, the DigiCam micro-crates are installed with an inclination of 45\(^\circ \) (see Fig. 24). This configuration ensures that the heat pipes will work properly irrespective of the inclination of the telescope.

6 The camera housekeeping system

The camera has been designed to be stable and reliable over its lifetime on site. Day-night temperature gradients as well as any possible weather condition must be carefully accounted for to avoid permanent damage. For this reason, the camera is provided with a housekeeping system that continuously monitors its conditions, in particular during non-operation in daytime, and reacts accordingly when potentially dangerous conditions are recognized.

While the IP65 compliant design will provide major protection against water and dust, the chance of condensation inside the sealed structure is still high, especially outside of operation time, when the camera is turned off and temperature information from the SiPM NTC probes and from DigiCam is not available. To avoid damage due to condensation or moisture, additional temperature, pressure and humidity sensors are installed inside the camera and are read out continuously (also in daytime) by a dedicated housekeeping board. If a condensation danger is detected, the housekeeping board sends a signal to the safety PLC, which activates a heating unit installed inside the camera. To avoid over-pressure conditions, the camera chassis implements an IP65 Gore-Tex® membrane, which allows air exchange with the environment but prevents water from flowing inside. Another solution using a compact desiccant air dryer is under study.
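The criterion used by the housekeeping board to flag a condensation danger is not detailed here. One common approach, shown below purely as an illustrative sketch, is to compare the temperature of a surface with the dew point computed from the air temperature and relative humidity through the Magnus approximation. The function names and the 2 \(^\circ \)C safety margin are hypothetical and are not taken from the camera firmware.

```python
import math

MAGNUS_A = 17.62    # dimensionless Magnus coefficient (over water)
MAGNUS_B = 243.12   # Magnus coefficient in degC

def dew_point_c(air_temp_c, rel_humidity_pct):
    """Dew point (degC) from the Magnus approximation."""
    gamma = (math.log(rel_humidity_pct / 100.0)
             + MAGNUS_A * air_temp_c / (MAGNUS_B + air_temp_c))
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)

def condensation_risk(surface_temp_c, air_temp_c, rel_humidity_pct, margin_c=2.0):
    """True if the surface is within margin_c of the dew point (hypothetical rule)."""
    return surface_temp_c <= dew_point_c(air_temp_c, rel_humidity_pct) + margin_c

# Example: air at 20 degC and 50% relative humidity, surface at 10 degC.
print(round(dew_point_c(20.0, 50.0), 1), condensation_risk(10.0, 20.0, 50.0))
```

A rule of this kind would trigger the heating unit before the coldest surface inside the camera reaches the dew point.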

7 Camera test setups

An aspect that has been taken care of during the design of the camera is the development of dedicated test setups and test routines for the validation of each component of the camera prior to its final installation, both for individual elements (cones, sensors, electronics boards, etc., as presented in the previous sections), for assembled parts (e.g. modules, as it is shown in the following section), and for the assessment of the homogeneity and reproducibility of the production. When needed, the same tests are used to characterize the object (e.g. the measurement of sensor properties during the module optical test, see Sect. 7.1) or even to calibrate it (such as in the test of the slow control board [8]).

7.1 Optical test of full modules

Following its assembly and prior to its final installation on the PDP, each 12-pixel module undergoes an optical test using the setup shown in Fig. 32. A single 470 nm LED illuminates a diffuser to which a bundle of 48 optical fibers is connected. The fiber outputs are aligned with the centers of the 48 pixels of four modules fixed on a support structure. The distance from the fiber output to the cone entrance has been fixed according to the opening angle of the fiber (30\(^\circ \)) and ensures that the whole pixel (SiPM + light guide) is illuminated. The setup is enclosed in a light tight box. A replica of the PDP cooling system (see Sect. 5) is used to cool the modules via the metal plate of the support structure. Using an external chiller, the system stabilizes the temperature of the modules while the control loop is running during testing. A reference pixel has been used to calibrate the 48 optical fibers in order to be able to perform a flat fielding of the PDP.

The setup is used to qualify the overall functioning of the modules, but also to characterize each pixel in terms of basic performance parameters. For this purpose, four types of data are taken. Dark runs are used to extract the dark count rate and the cross-talk; low light level runs are used to reconstruct the Multiple PhotoElectron (MPE) spectrum (see Sect. 8.2), from which parameters such as the gain can be extracted; high light level runs yielding signals below saturation are used to monitor the signal amplitude, rise time and fall time, and to study the baseline position and noise; very high light level runs produce pulses above saturation, useful to check the saturation behavior of the channel. These data also allow us to monitor the proper functioning of the entire readout chain (the modules are read out by DigiCam FADC boards in their final version or using a demonstrator board), and can also provide preliminary calibration data.

A few examples of the typical results from the module optical test are shown in Fig. 33. The data are taken using a LabVIEW interface to control the hardware units (including the power supplies, the LED pulse generator, the CAN bus communication with the slow control board and the ethernet connection to DigiCam for the readout). A C++ program analyses the data and automatically produces a report that the user can scroll through to quickly check the proper functioning of the module or, conversely, to spot possible problems. In the analysis, the data are corrected for the relative light yield of the optical fibers, which has been calibrated using a single SiPM coupled to a light guide to measure the light intensity of individual fibers (Fig. 34, left). A correction, derived from the calibration of the individual FADCs of DigiCam, is also applied. The correction is measured by injecting the same analog pulse into each FADC channel and by comparing the amplitudes of the corresponding digitized signals (Fig. 34, right). Both the LabVIEW interface for data taking and the analysis software are designed to be run with minimal intervention of the user.

7.2 Cabling test setup

A test setup has been developed to check the proper cabling of the PDP to DigiCam prior to the camera installation on the final telescope structure. The setup is shown in Fig. 35. A mechanical structure covers one third plus the central region of the photo-detection plane and hosts a matrix of 420 nm LED sources located on its surface that illuminate each pixel individually (the setup will be rotated in steps of 120\(^\circ \) to cover the full PDP). By illuminating one pixel at a time, it is possible to check the proper routing of the signal in order to spot possible errors in the connection between the PDP and DigiCam. Although this system was originally conceived solely to test the cabling, it will also be used for calibration and flat fielding (see Sect. 9). For this reason, the LED carrier boards have been designed with two LEDs pointing to each pixel, one pulsed and one in continuous light mode. The former simulates pulses of Cherenkov light, the latter emulates the NSB. By switching each LED on and off individually, and by adjusting their light level in groups of three, it will be possible to reproduce most of the foreseen calibration conditions. Moreover, light patterns can be programmed in order to test the trigger logic.

8 Performance validation

Preliminary measurements prior to the final camera assembly have been carried out to validate the performance with respect to the goals and requirements set by CTA. The main performance parameters to be checked are the sensitivity to single photons and the charge resolution. The former is crucial for a SiPM camera, because single photon spectra and multiple photon spectra are regularly used to extract calibration parameters, such as the gain of the sensors, the dark count rate and cross talk; the latter affects the energy and the angular resolution that are of primary importance for the CTA physics goals. Such measurements have been crucial also to compare the different FADCs provided by Analog Devices and Intersil mounted on the prototype DigiCam digitizer boards. Moreover, for a given FADC type, different gain settings could be evaluated and optimized.

In the analysis of the data that is carried out to extract the camera performance parameters, a few systematic effects have been taken into account, among which the effect of cross talk and dark counts in the reconstruction of the signals. To estimate such effects, a toy Monte Carlo to simulate the signals produced by the SiPMs has been developed, as described in the following section.

8.1 The toy Monte Carlo

In the toy Monte Carlo, single pulses produced by detected photons are generated using waveform templates taken from measurements, and taking into account Poisson statistics, cross talk, electronics noise, dark counts and NSB. The input values for cross talk and electronic noise levels and dark count rate were derived from measurements. Hence charge spectra are built.

As Fig. 36 shows, the toy Monte Carlo reproduces well the typical shape of the multiple photoelectron (MPE) spectrum. The disagreement observed around the mean value of the Poisson distribution is due to the fact that the parameters injected in the toy Monte Carlo are derived from a simplified fit function. The fit function used (see Eq. 1) does not include the dark count rate and cross talk. For instance, in Fig. 36 the mean value extracted from the data is shifted due to optical cross talk (see Fig. 37). However, in the toy Monte Carlo the same mean value has been used and the optical cross talk has been added on top of it. The result is that the whole distribution is shifted toward higher p.e. values, producing a depopulation of the low p.e. peaks in favour of the higher p.e. peaks.

Simulated datasets can be used to study how cross talk and dark count rate influence the shape of the charge distributions. An example is shown in Fig. 37, where a pure Poisson distribution gets distorted and its mean value shifts towards a higher level. The main contribution to this effect arises from cross talk, i.e. a cross talk level of 10% (as it was in the case of this simulation) results in a shift of the Poisson mean of at least the same amount. This systematic shift has to be taken into account when the actual signal has to be extracted from fits to the distributions of pulse amplitude or area.
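The shift of the mean can be reproduced with a much reduced version of such a simulation. The sketch below is not the toy Monte Carlo used for this work: it ignores waveform templates, dark counts and NSB, and it assumes a simple chain model in which every avalanche (primary or secondary) triggers one additional avalanche with probability `p_xt`. All function names and the noise values are hypothetical.

```python
import math
import random

def poisson(mu, rng):
    """Poisson sample via Knuth's algorithm (adequate for small means)."""
    limit, k, prod = math.exp(-mu), 0, 1.0
    while True:
        prod *= rng.random()
        if prod <= limit:
            return k
        k += 1

def fired_cells(n_primary, p_xt, rng):
    """Primary avalanches plus the cross-talk chain they induce."""
    total, pending = 0, n_primary
    while pending > 0:
        total += pending
        pending = sum(1 for _ in range(pending) if rng.random() < p_xt)
    return total

def simulate_charges(mu, p_xt, gain, sigma_e, sigma_1, n_events, seed=1):
    """Charge spectrum (ADC counts) with Poisson light, cross talk and noise."""
    rng = random.Random(seed)
    charges = []
    for _ in range(n_events):
        n = fired_cells(poisson(mu, rng), p_xt, rng)
        sigma = math.sqrt(sigma_e**2 + n * sigma_1**2)
        charges.append(rng.gauss(n * gain, sigma))
    return charges

charges = simulate_charges(mu=7.5, p_xt=0.10, gain=10.0,
                           sigma_e=1.0, sigma_1=0.8, n_events=20000)
mean_pe = sum(charges) / len(charges) / 10.0
print(mean_pe / 7.5)  # ratio above 1: the mean is shifted by cross talk
```

In this chain model the expected mean is \(\mu /(1-p_{xt})\), so a 10% cross talk probability shifts the mean by about 11%, in line with the statement that the shift is at least as large as the cross talk level.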

Figure 38, for example, shows the effect on the determination of the mean of the amplitude distributions in a range up to 300 p.e.s with a cross talk of 6.4% and a 2.79 MHz dark count rate (see Footnote 14), for two different gain settings (9.2 ADC/p.e. and 4.3 ADC/p.e.). The two different regimes that are visible (a left-most inclined one and a right-most flat one) arise from the two types of distributions that are fitted: multiple photon spectra for lower light levels and Gaussians for higher light levels. While the latter are fitted via a Gaussian function, the former are fitted via the model presented in Sect. 8.2. Similar plots are produced to study the deviation of the measured gain from the true gain (see Fig. 41).

MPE spectrum of a SiPM obtained pulsing at 1 kHz a 400 nm LED with readout window of 80 ns. The device sees an average of \(\sim \)7.5 photons (the mean value of the Poisson function is \(7.504\pm 0.034\)). The distance between the photo-peaks gives the gain of the detector, that is \(9.757\pm 0.015\) ADC counts/p.e.

8.2 Sensitivity to single photons

The sensitivity of a SiPM to single photons can be assessed through the quality of the MPE spectrum. An example of an MPE spectrum acquired with a sensor mounted on a module and read out with DigiCam is shown in Fig. 39. Despite the large capacitance of the SiPM (which affects the noise performance) and despite the common cathode configuration of the four channels of the sensor (which causes each of the four channels to be biased at the same average voltage, instead of applying a dedicated bias voltage per channel), the individual photo-peaks are well separated. The performance of such a large area sensor in the detection of single photons is thus comparable to that of conventional SiPMs.

MPE spectra are important in the camera calibration strategy, since they are used to extract the gain of individual sensors together with the overall optical efficiency (sensor+light guides), to be used in the gain flat-fielding of the camera. MPE spectra are also acquired during the optical test of each module for individual pixels (see Sect. 7.1).

To extract the gain from the MPE spectrum we use two methods: a direct fit of the spectrum or the analysis of its Fast Fourier Transform (FFT). In the former case, the MPE spectrum can be described, to first approximation, by a function of the form

\[ f(x) = A \sum _{n} P(n|\mu )\, \frac{1}{\sqrt{2\pi }\,\sigma _n} \exp \left( -\frac{(x - n\cdot g)^2}{2\sigma _n^2}\right) \tag{1} \]

In this formula, A is a normalization constant, \(P(n|\mu )\) is the Poisson distribution with mean \(\mu \), which modulates a set of Gaussian distributions, one for each photo-peak n, each centered at \(n\cdot g\), where g is the gain, i.e. the conversion factor between ADC counts and number of p.e.s. The width of the n-th photo-peak is given by

\[ \sigma _n = \sqrt{\sigma _e^2 + n\,\sigma _1^2} \tag{2} \]

where \(\sigma _e\) is the electronic noise and \(\sigma _1\) is the intrinsic noise associated with the detection of a single photon. The fit to the data shown in Fig. 39 is done according to this model. Although this simplified model is known to have limitations, since it does not include the optical cross talk, it was chosen for its robustness in an automated fitting procedure. The fact that the fit function overestimates the event count at low p.e.s and underestimates it at high p.e.s is the consequence of the event migration caused by the optical cross talk, as already discussed in Sect. 8.1. For future studies the generalized Poisson function will be used [19].
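The fit model described above, a Poisson-weighted sum of Gaussian photo-peaks centered at \(n\cdot g\), can be written down in a few lines. The sketch below assumes normalized Gaussians and the usual quadratic-sum form for the peak width, \(\sigma _n^2 = \sigma _e^2 + n\sigma _1^2\). The mean and the gain are those quoted for the MPE spectrum of Fig. 39, while the two noise values and the truncation of the sum at `n_max` are hypothetical choices.

```python
import math

def poisson_pmf(n, mu):
    """Probability of n detected photons for a Poisson mean mu."""
    return math.exp(-mu) * mu**n / math.factorial(n)

def mpe_model(x, amplitude, mu, gain, sigma_e, sigma_1, n_max=40):
    """Simplified MPE spectrum at charge x (ADC counts), without cross talk."""
    total = 0.0
    for n in range(n_max + 1):
        # Peak width grows with the number of photoelectrons (assumed form).
        sigma_n = math.sqrt(sigma_e**2 + n * sigma_1**2)
        gauss = (math.exp(-0.5 * ((x - n * gain) / sigma_n) ** 2)
                 / (math.sqrt(2.0 * math.pi) * sigma_n))
        total += poisson_pmf(n, mu) * gauss
    return amplitude * total

# Evaluate on the 7th photo-peak and in the valley next to it.
params = dict(amplitude=1.0, mu=7.504, gain=9.757, sigma_e=1.0, sigma_1=0.8)
print(mpe_model(7 * 9.757, **params), mpe_model(7.5 * 9.757, **params))
```

A function of this form can be passed to any least-squares or likelihood minimizer, with the gain read off directly as one of the fitted parameters.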

In the FFT method the Fast Fourier Transform of the MPE spectrum is calculated, as shown in Fig. 40 for an MPE spectrum acquired from one sensor read out by DigiCam. The main peak at around 500 p.e. corresponds to the main frequency of the single photon peaks, and the gain can be extracted as

A study of the accuracy of both methods (fit and FFT) has been carried out in the framework of the toy Monte Carlo. As was shown earlier (see Sect. 8.1), the pure Poisson signal distributions are distorted by cross talk and dark count rate. In the fit method, one could improve the model by adding these effects in some parametrized form, as was already shown in Ref. [20]. However, this adds parameters and, in general, complexity to the fit. A study on simulated data has been carried out to characterize the quality of the pure Poisson fit when used to estimate the gain (for the estimation of the light level from the same fit, the result was already shown in Fig. 38).

This result is shown in Fig. 41 (data simulated with a gain of 10 ADC/p.e., a cross talk of 10% and a dark count rate of 5 MHz), and is compared to the performance of the FFT method applied to the same set. The fit method yields a more accurate estimation for low light levels (up to around 13 p.e.s), and loses accuracy with increasing light. The FFT method is generally less accurate, but the effect does not depend on the light level. Overall, both methods give an uncertainty which is systematically below 1%. This can be used to set a systematic uncertainty on the extracted gain values. Alternatively, the data points in this plot can be employed as correction coefficients to retrieve a more accurate value of the gain with either method, as will be done in Sect. 8.3.
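The idea behind the FFT method can be illustrated on a synthetic spectrum. The sketch below does not reproduce the expression used in this work. It relies on the assumption that, for a histogram with bins of 1 ADC count, a comb of photo-peaks with spacing g produces a Fourier peak at index \(k \approx N_\mathrm{bins}/g\), so that the gain is estimated as \(N_\mathrm{bins}/k\). A plain discrete Fourier transform is used for self-containedness, and the lower search bound `k_min`, which skips the low-frequency envelope, is a hypothetical choice.

```python
import cmath
import math

def synthetic_spectrum(n_bins, mu, gain, sigma):
    """Poisson-weighted comb of Gaussian peaks on a 1 ADC-count grid."""
    hist = [0.0] * n_bins
    for n in range(40):
        weight = math.exp(-mu) * mu**n / math.factorial(n)
        for x in range(n_bins):
            hist[x] += weight * math.exp(-0.5 * ((x - n * gain) / sigma) ** 2)
    return hist

def gain_from_fft(hist, k_min=10):
    """Gain (ADC/p.e.) from the strongest Fourier component above k_min."""
    n_bins = len(hist)
    best_k, best_mag = k_min, 0.0
    for k in range(k_min, n_bins // 2):
        coeff = sum(h * cmath.exp(-2j * math.pi * k * x / n_bins)
                    for x, h in enumerate(hist))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return n_bins / best_k

spectrum = synthetic_spectrum(n_bins=512, mu=7.5, gain=10.0, sigma=2.0)
print(gain_from_fft(spectrum))
```

The estimate is quantized by the integer peak index, which is one reason why an approach of this kind is less accurate than a direct fit while being insensitive to the light level.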

8.3 Charge resolution

The charge measured by a single pixel in the camera is proportional to the amount of Cherenkov light that has reached the sensor. The charge resolution is determined by the statistical fluctuations of the charge on top of which sensor intrinsic resolution and sensor and electronic noise can contribute significantly. CTA provides specific requirements and goals for the fractional charge resolution \(\sigma _Q/Q\) in the range between 0 p.e. and 2000 p.e.s (see Fig. 47).

The charge resolution of the camera has been measured on a few sensors using a dedicated LED driver board. In line with the cabling test setup concept described in Sect. 7.2, this board hosts two LED sources, one in AC mode to simulate the Cherenkov light pulses of particles, and one in DC mode (see Footnote 15) which, after calibration, simulates the night sky background. The data are taken using a fully assembled module read out by a standalone DigiCam digitizer board. The module is mounted on the temperature-controlled support structure of the optical test setup (Sect. 7.1).

The charge resolution is extracted from the data by analyzing the distributions of pulse amplitude or area (both after baseline subtraction) at different light levels of the AC and DC LEDs. At each level of the DC LED, i.e. at each emulated NSB level (no NSB; 40 MHz, corresponding to dark nights; 80 and 660 MHz, corresponding to half moon conditions with the moon at 6\(^\circ \) off-axis), the datasets consist of a collection of signals from detected light pulses at increasingly higher levels, from a few photons up to a few thousand photons.

8.3.1 Source calibration

While the DC LED was calibrated with a pin diode, the calibration of the AC LED source is derived from the data themselves. For the low intensity data sets, the MPE spectra were used to extract the gain with the methods described in Sect. 8.2.

Measurement of the gain from the data taken with the DigiCam digitizer board hosting the FADCs from Intersil configured with a gain of around 5 ADC/p.e. The gain has been measured with the fit method (top) and the FFT method (bottom). In both cases, the raw values at different light levels are adjusted by the corresponding correction coefficients calculated with the toy Monte Carlo.

Correction coefficients calculated via the toy Monte Carlo have been used to improve the accuracy of the measured gain as shown, as an example, in Fig. 42. The Monte Carlo uses, as input, the values of the parameters extracted from the data (cross talk, dark count rate, electronic noise). The uncertainty on the cross talk and the dark count rate was used to determine the systematic uncertainty on the correction coefficients.

The gain value was used to determine the light level from the Gaussian distributions of signal amplitudes below saturation. For the MPE spectra, the light levels were retrieved directly from the fits to the distributions as discussed in Sect. 8.2. The light levels obtained in both cases (from MPE spectra and from Gaussians) were corrected for systematic effects (mostly cross talk) using the correction coefficients calculated from the toy Monte Carlo. As for the gain correction coefficients, the systematic uncertainty on the light level correction coefficients was estimated from the experimental errors on cross talk and dark count rate. The result of the systematic study to calculate the light level correction coefficients and their uncertainties is shown in Fig. 43.

Simulation of the light level correction coefficients for different levels of cross talk and dark count rate according to the measured uncertainty of these quantities. The two regimes (an inclined one on the leftmost part and a flat one on the rightmost part) correspond to the two different fit regimes: MPE spectra and Gaussians

At this stage, an LED calibration curve was built for light levels below the saturation of the detected signals. The extrapolation of the LED calibration curve above saturation was done via a 4th degree polynomial (see footnote 16). An example of a complete calibration curve is shown in Fig. 44.
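The extrapolation step can be illustrated with a minimal sketch. Only the polynomial degree is taken from the text; the normalized LED settings, the synthetic light levels and the function name `fit_led_calibration` are hypothetical and serve only to show the mechanics of fitting below saturation and evaluating above it.

```python
import numpy as np

def fit_led_calibration(led_setting, light_level_pe, degree=4):
    """Fit light level (p.e.) vs. LED setting with a polynomial of the given degree."""
    coeffs = np.polyfit(led_setting, light_level_pe, degree)
    return np.poly1d(coeffs)

# Hypothetical calibration points measured below saturation (illustrative values only).
led_setting = np.linspace(0.0, 1.0, 12)                  # normalized LED setting
true_curve = np.poly1d([30.0, 0.0, 120.0, 40.0, 0.0])    # assumed 4th-degree LED response
light_level_pe = true_curve(led_setting)

calibration = fit_led_calibration(led_setting, light_level_pe)

# Evaluate the fitted curve at LED settings above the fitted range (extrapolation).
high_settings = np.array([1.2, 1.5])
extrapolated_pe = calibration(high_settings)
```

With real data the extrapolated values are only as reliable as the assumption that the LED response keeps following the same polynomial beyond the fitted range.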

8.3.2 Charge resolution

At each level of the AC LED, i.e. at each light level calculated according to the calibration curve (such as the one in Fig. 44), the charge resolution is determined as

$$\mathrm {CR} = \frac{\sigma }{\mu } \qquad (4)$$

where \(\mu \) and \(\sigma \) are the mean value and standard deviation of the charge distribution. Before applying Eq. 4, the mean value \(\mu \) is corrected by the coefficients calculated from the toy Monte Carlo.

When the distribution is Gaussian (in either non-saturated or saturated regimes), the two quantities are derived directly from a Gaussian fit. In the case of MPEs, the distributions are fitted by Eq. 5. The \(\mu \) parameter is the one determined from the fit, while \(\sigma \) is calculated as

where \(\sigma _{68CL}\) corresponds to the 68% confidence level around \(\mu \), and the second term comes from the propagation of the fit error on \(\mu \) for a Poisson-like variance \(\sqrt{\mu }\).

The \(\mu \) and \(\sigma \) from Gaussian distributions of pulse amplitudes for signals below saturation are used directly to calculate the charge resolution.

In such a case, the pulse amplitude and the light level are related to each other by a simple conversion factor (see Fig. 45), and Eq. 4 can be applied on the raw values of the corrected \(\mu \) and \(\sigma \) in units of ADC counts.
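The following sketch illustrates why raw ADC values can be used below saturation, assuming that the charge resolution is the ratio \(\sigma /\mu \): a constant conversion factor cancels in the ratio. The synthetic pulse distribution, the gain value and the multiplicative form of the Monte Carlo correction on \(\mu \) are assumptions made for illustration only.

```python
import numpy as np

def charge_resolution(mu, sigma, mu_correction=1.0):
    """Relative charge resolution sigma / mu, with an assumed multiplicative correction on mu."""
    return sigma / (mu * mu_correction)

rng = np.random.default_rng(0)

gain_adc_per_pe = 5.0                                  # illustrative conversion factor
n_pe = rng.normal(loc=100.0, scale=12.0, size=20000)   # hypothetical signal in p.e.
amplitude_adc = gain_adc_per_pe * n_pe                 # same signal in ADC counts

cr_pe = charge_resolution(n_pe.mean(), n_pe.std(ddof=1))
cr_adc = charge_resolution(amplitude_adc.mean(), amplitude_adc.std(ddof=1))
```

Here `cr_pe` and `cr_adc` agree to numerical precision, since both \(\mu \) and \(\sigma \) scale with the same factor.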

When the light intensity is high enough for saturation to occur (either in the pre-amplifier or in DigiCam, depending on the gain settings of the FADC used), charge distributions of pulse area rather than pulse amplitude are used (see also [8]). In this case, the relation between charge and light level is no longer a simple scale factor, and the raw \(\sigma \) and \(\mu \) values retrieved from the Gaussian fit to the area distribution cannot be used directly to calculate the charge resolution according to Eq. 4, but must first be converted into units of p.e.s. For this purpose, area vs. light level curves are built from the data at each NSB level, using the AC LED calibration to estimate the light level at each pulsed light setting and correlating it with the mean area of the corresponding pulses. An example of such a curve is shown in Fig. 46.
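As a sketch of the conversion described above, a monotonic area vs. light level curve can be inverted by interpolation. The curve points, the fitted \(\mu \) and \(\sigma \) and the way \(\sigma \) is propagated (by mapping \(\mu \pm \sigma \) through the curve) are hypothetical choices for illustration, not necessarily the procedure used in the analysis.

```python
import numpy as np

def area_to_pe(area, curve_area, curve_pe):
    """Convert pulse area to p.e. by linear interpolation of a monotonic calibration curve."""
    return np.interp(area, curve_area, curve_pe)

# Hypothetical area vs. light level curve: linear at low light, compressed above saturation.
curve_pe = np.array([0.0, 100.0, 200.0, 400.0, 800.0, 1600.0, 3200.0])
curve_area = np.array([0.0, 500.0, 1000.0, 2000.0, 3200.0, 4200.0, 5000.0])

# Hypothetical Gaussian fit results in area units, in the saturated regime.
mu_area, sigma_area = 3700.0, 100.0

mu_pe = area_to_pe(mu_area, curve_area, curve_pe)
# Assumed propagation: half the width of the interval [mu - sigma, mu + sigma] after mapping.
sigma_pe = 0.5 * (area_to_pe(mu_area + sigma_area, curve_area, curve_pe)
                  - area_to_pe(mu_area - sigma_area, curve_area, curve_pe))

resolution_pe = sigma_pe / mu_pe         # resolution after conversion to p.e.
resolution_raw = sigma_area / mu_area    # ratio of raw area values, for comparison
```

In this toy case the raw ratio underestimates the resolution, because the compressed curve maps a small spread in area onto a larger spread in p.e.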

Figure 47 shows the charge resolution measured at different emulated NSB levels for two gain configurations of DigiCam: low gain (around 5 ADC/p.e.) and high gain (around 10 ADC/p.e.). The two cases differ in their saturation conditions: in the former, the pre-amplifier saturates before DigiCam, at around 750 p.e.s, which means that the full waveforms are always digitized; in the latter, DigiCam saturates before the pre-amplifier, at around 400 p.e.s, and as a consequence the pulses are truncated from this light level on. Note that in this analysis the possible effect of the LED source fluctuations is not subtracted.

The results show that, apart from the case at 660 MHz NSB (half moon), all the points fall below the CTA goal curve. In particular, there is no sharp transition at the saturation points (\(\sim \)750 p.e.s for low gain, \(\sim \)400 p.e.s for high gain), meaning that, despite the overall non-linearity of the camera, the signals can be reconstructed with equal precision in both the non-saturated and saturated regimes. At 660 MHz both gain settings lose performance at low light levels (as is, in fact, expected for operation of the telescope in half-moon nights), while still remaining below the requirement curve. The 660 MHz high-gain data points, however, rise above the requirement at around 1000 p.e.s. This is attributed to the truncation of the pulses combined with the waveform distortion undergone by signals that exceed the dynamic range of the pre-amplifier (for more details, see [8]). These results therefore show that a low-gain configuration, with no truncation from the digitizers, is to be preferred. The final version of the DigiCam prototype has been produced with a gain configuration in which the pre-amplifier saturates before the FADCs and the full dynamic range of the FADCs is exploited. As far as performance is concerned, the FADCs from Analog Devices and those from Intersil turned out to be equivalent. The choice between the two will be driven by cost.

9 Camera calibration studies

To ensure a homogeneous performance of the camera, the pixels will be calibrated regularly. The determination of the relevant parameters that are necessary to equalize the response of each pixel over the full PDP (also referred to as flat-fielding) will be performed at different timescales and in different measurement conditions, depending on the parameter type (see [21]). While some parameters can be monitored on an event-by-event basis (for instance the measurement of the baseline), others (e.g. dark count rate and cross talk) can be measured with lower frequency. Some of the parameters can be extracted directly from the physics data, others will require special calibration runs, for example dark runs taken with the camera lid closed or data taken by illuminating the camera with a dedicated flasher unit installed on the telescope structure. The studies performed in the laboratory during the prototyping phase will define the calibration strategy that will be adopted on site. We describe here some relevant aspects of the calibration of the camera.

The baseline level for a sensor that is DC coupled to the pre-amplifier is correlated to the NSB. The baseline level can be determined on an event-by-event basis by extending the signal acquisition window before the signal peak arrival time. This is possible thanks to the ring buffer structure implemented in DigiCam and to the relatively low trigger rates expected for the SSTs. Using the LED driver board described in Sect. 10, different NSB conditions could be reproduced in the laboratory. Data from a pixel in a fully assembled module, read out by DigiCam at different emulated NSB levels, were taken and analyzed to characterize the behavior of the baseline. The results are shown in Figs. 48 and 49. The baseline position (determined here as the mean of the counts) and noise (RMS) are calculated for different emulated NSB conditions over a large number of DigiCam samples, corresponding to a total time window of 800 \(\upmu \)s.

A detailed study of the baseline determination was performed to estimate the accuracy of the baseline as a function of the number of data samples. The study used the same dataset at different emulated NSB levels, and the result is shown in Fig. 50. These plots refer to the dark condition, but similar ones have been made for a number of NSB levels between dark and 660 MHz. From these, it can be concluded that sufficiently accurate measurements can be made on a set of around 50 events when around 30 pre-pulse samples are considered, which in total corresponds to a 10 \(\upmu \)s window. This means that, even if the baseline were measured during data taking at a frequency of 1 Hz (which is, most likely, higher than needed), this would occupy a negligible duty cycle. Thus, in general, the baseline (level and noise) can be measured accurately at a frequency that is high enough to efficiently monitor the NSB in real time.
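A minimal sketch of a baseline estimate from pre-pulse samples is given below. The numbers of events and pre-pulse samples follow the text (around 50 and around 30); the waveform length, the baseline and noise values and the assumption of uncorrelated Gaussian noise are hypothetical, and real NSB noise is correlated between neighboring samples.

```python
import numpy as np

def baseline_estimate(waveforms, n_pre_samples=30):
    """Return baseline level (mean) and noise (RMS) from the pre-pulse samples of many events."""
    pre_pulse = np.asarray(waveforms)[:, :n_pre_samples]
    return pre_pulse.mean(), pre_pulse.std()

rng = np.random.default_rng(1)

true_baseline, true_noise = 200.0, 3.0   # hypothetical values in ADC counts
n_events, n_samples = 50, 50             # 50 events, 50 samples per waveform (assumed length)
waveforms = rng.normal(true_baseline, true_noise, size=(n_events, n_samples))

level, noise = baseline_estimate(waveforms, n_pre_samples=30)

# Expected statistical error on the mean for uncorrelated samples.
expected_error = true_noise / np.sqrt(n_events * 30)
```

Under these assumptions the error on the baseline level scales as the noise divided by the square root of the total number of samples used.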

All the studies shown here are performed systematically for each pixel of the camera prior to its installation on the telescope structure, using the cabling test setup discussed in Sect. 7.2. The same setup will also be used to perform a preliminary flat-fielding of the camera and to test the trigger logic by illuminating the camera with pre-defined light patterns that mimic real Cherenkov events (e.g. elliptical images from gamma-ray and proton showers and rings from muon events). The possibility of reproducing patterns from simulated events is also foreseen.

A 10 k\(\Omega \) resistor in series with the sensor at the bias stage [8] produces a voltage drop at the sensor cathode when a current (e.g. induced by the NSB photons) flows through it. When the voltage drops, the over-voltage is no longer the one set by the user, and the operation point therefore changes, with the following consequences:

1. the gain decreases;

2. the PDE decreases;

3. the optical cross talk decreases;

4. the dark count rate decreases.

The fact that the gain decreases implies that the conversion factor from photon equivalent to ADC count changes (see Fig. 51). For the prototype camera, this effect will not be compensated at the hardware level but will be taken into account during the telescope operation since the baseline shift can be evaluated online and the gain correction can be derived at FPGA level or at software level.
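The size of the voltage drop can be estimated with a rough sketch based on Ohm's law. The 10 kOhm resistor value comes from the text; the nominal over-voltage, the gain in electrons per p.e., the assumption that the gain is proportional to the over-voltage, and the neglect of the PDE, cross talk and dark count changes are all simplifying assumptions made here for illustration.

```python
E_CHARGE = 1.602176634e-19  # elementary charge in coulomb

def effective_overvoltage(v_set, nsb_rate_hz, gain_at_v_set, r_bias_ohm=10e3):
    """Over-voltage after the drop across the bias resistor.

    Assumes gain proportional to over-voltage and a fixed detected photon rate,
    so that the current is I = k * V with k = e * gain_at_v_set * rate / v_set,
    and V = v_set - R * I has the closed-form solution V = v_set / (1 + R * k).
    """
    k = E_CHARGE * gain_at_v_set * nsb_rate_hz / v_set  # current per volt of over-voltage
    return v_set / (1.0 + r_bias_ohm * k)

# Hypothetical operating point (illustrative values only).
v_set = 2.8            # over-voltage set by the user, in volts
gain_at_v_set = 1.5e6  # electrons per p.e. at v_set

v_eff = effective_overvoltage(v_set, 660e6, gain_at_v_set)
relative_gain = v_eff / v_set  # gain relative to the dark condition under the linear assumption
```

The ratio `relative_gain` is the kind of correction factor that would rescale the p.e. to ADC conversion at a given NSB level under these assumptions.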

The baseline shift measurement will be part of the data stream and accessible at the data analysis level, and will allow one to derive the evolution of the relevant parameters, such as the photo-detection efficiency, with the operation point. Indeed, the variation of the PDE with the operation point, and hence with the NSB level, has been measured, as shown in Fig. 14. We will operate the sensor slightly below the region where the PDE becomes independent of the over-voltage. Hence the PDE variations have to be monitored, since they could affect the trigger threshold (expressed in terms of p.e.), on which the efficiency of the data taking depends. The trigger threshold is set according to Monte Carlo simulations (see Sect. 10), which will be benchmarked against real data. The setting point is approximately in the region where cosmic rays begin to emerge on top of the noise.

Incidentally, it is possible that the PDE vs. wavelength does not scale identically for different over-voltages, and therefore for different NSB levels. This effect will be characterized in the laboratory with a Xenon lamp by measuring the PDE vs. wavelength for different over-voltages. Moreover, the variations of the dark count rate and optical cross talk contributions as a function of the over-voltage (see Fig. 14) have to be subtracted from the measured signal to avoid biases when operating at different over-voltages, and hence NSB levels. These effects require a well-defined camera calibration, which can be properly set up as we demonstrate here. There is also a positive counterpart: because the gain decreases, the voltage drop leads to an increase in dynamic range even when the baseline or noise increases, as visible in Fig. 52.

10 Expected performances through simulations

The measurements characterizing the camera performance (window transmittance, cone reflectivity, sensor PDE, charge resolution, and so on) have been used to reproduce the camera response in Monte Carlo simulations. Through these simulations we can estimate the performance parameters and compare them to the CTA requirements.

Different simulation tools have been used for this study. Atmospheric showers induced by gamma-rays and/or cosmic rays have been simulated with CORSIKA up to 100 EeV [22]. The simulation of the telescope was done using two different tools which produce comparable results: sim_telarray [23] and the combination of GrOptics and CARE [24]. sim_telarray is widely used in CTA to study the preliminary performance of the array of telescopes (sensitivity, array layout, array trigger, etc.). It simulates the telescope optics and the camera, but it does not account for the shadowing of the elements (such as the masts and the camera box) in an exact way, nor does it simulate the camera with a great deal of detail. Hence, a more detailed simulation of the SST-1M telescope and its camera was implemented with GrOptics and CARE. GrOptics is a package for ray tracing that considers the mirror transmission in detail and the telescope structure. CARE simulates the camera down to more fundamental properties of the detector, such as the microcells of the SiPMs and the saturation of the signals, the trigger system and the backgrounds (such as the electronics noise and the NSB).

10.1 Single telescope performance as a function of energy

The studies in this section concern the sensitivity as a function of energy of single SST-1M telescopes and they strongly depend on the kind of trigger logic chosen. CORSIKA simulated gamma-ray showers have been fed to sim_telarray to estimate the differential trigger rate \(\mathrm {d}R/\mathrm {d}E\) as a function of the energy of the primaries. The rate is estimated as the Crab flux unit [25] detected over the effective area. The effective area at a given energy is the integral of the distribution of triggered events over the distance between the core of the shower and the telescope, and is shown in Fig. 53.
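As a rough sketch of the effective area calculation, one can integrate a trigger probability over the impact distance, assuming azimuthal symmetry so that \(A_\mathrm{eff} = 2\pi \int P(r)\, r\, \mathrm{d}r\). The function name, the radial grid and the toy trigger probability are hypothetical; they are not taken from sim_telarray.

```python
import numpy as np

def effective_area(radius_m, trigger_probability):
    """Effective area 2*pi * integral of P(r) * r dr, using the trapezoidal rule."""
    radius_m = np.asarray(radius_m, dtype=float)
    integrand = np.asarray(trigger_probability, dtype=float) * radius_m
    # Manual trapezoidal integration over the radial grid.
    integral = np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(radius_m))
    return 2.0 * np.pi * integral

# Toy example: showers always trigger within 100 m of the telescope.
radius_m = np.linspace(0.0, 100.0, 201)
always_triggered = np.ones_like(radius_m)
area_full = effective_area(radius_m, always_triggered)

# Toy example: trigger probability falling linearly to zero at 100 m.
falling = 1.0 - radius_m / 100.0
area_falling = effective_area(radius_m, falling)
```

In the first toy case the result is the area of a disc of 100 m radius, which provides a simple check of the normalization.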

The differential trigger rate is shown in Fig. 54. The maximum of the curve, named “energy threshold”, marks the point above which the telescope becomes most effective. The simulation shows that the threshold for the SST-1M telescope is at around 300 GeV, one order of magnitude lower than the requirement specified by CTA. Currently, only a simple majority trigger has been implemented: the trigger fires if the digitized signal in a hexagonal patch of 7 pixels, within a readout window of 200 ns, is above a threshold of 145 ADC counts (28.7 p.e.s summed over the patch, for a simulated gain of 5 ADC/p.e.).
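The patch condition described above can be sketched as follows. The patch size and the 145 ADC threshold come from the text; the array layout, the number of samples in the window (50, which assumes 4 ns per sample) and the function name `patch_trigger` are hypothetical, and the sketch is not the trigger logic implemented in sim_telarray or DigiCam.

```python
import numpy as np

def patch_trigger(patch_adc, threshold_adc=145):
    """Fire if the summed, baseline-subtracted signal of the patch exceeds the threshold
    in at least one sample of the window."""
    summed = np.asarray(patch_adc).sum(axis=0)   # sum over the pixels of the patch
    return bool((summed > threshold_adc).any())

n_pixels, n_samples = 7, 50   # 7-pixel patch; 50 samples assumed for a 200 ns window

# Hypothetical event: every pixel peaks at 25 ADC counts in the same sample.
bright = np.zeros((n_pixels, n_samples))
bright[:, 20] = 25.0          # summed peak of 175 ADC counts

# Hypothetical event just below threshold: 20 ADC counts per pixel.
faint = np.zeros((n_pixels, n_samples))
faint[:, 20] = 20.0           # summed peak of 140 ADC counts

fired_bright = patch_trigger(bright)
fired_faint = patch_trigger(faint)
```

For reference, 145 ADC counts at exactly 5 ADC/p.e. corresponds to 29 p.e.; the quoted 28.7 p.e. corresponds to a gain of about 5.05 ADC/p.e.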

10.2 Estimated average camera detection efficiency