Abstract

The Tile Calorimeter is the hadron calorimeter covering the central region of the ATLAS experiment at the Large Hadron Collider. Approximately 10,000 photomultipliers collect light from scintillating tiles acting as the active material sandwiched between slabs of steel absorber. This paper gives an overview of the calorimeter’s performance during the years 2008–2012 using cosmic-ray muon events and proton–proton collision data at centre-of-mass energies of 7 and 8 TeV with a total integrated luminosity of nearly 30 fb\(^{-1}\). The signal reconstruction methods, calibration systems, and detector operation status are presented. The energy and time calibration methods performed excellently, resulting in good stability of the calorimeter response under varying conditions during the LHC Run 1. Finally, the Tile Calorimeter response to isolated muons and hadrons as well as to jets from proton–proton collisions is presented. The results demonstrate excellent performance, in accordance with the specifications given in the Technical Design Report.



1 Introduction

ATLAS [1] is a general-purpose detector designed to reconstruct events from colliding hadrons at the Large Hadron Collider (LHC) [2]. The hadronic barrel calorimeter system of the ATLAS detector is formed by the Tile Calorimeter (TileCal), which provides essential input to the measurement of jet energies and to the reconstruction of the missing transverse momentum. The TileCal, which surrounds the barrel electromagnetic calorimeter, consists of tiles of plastic scintillator regularly spaced between low-carbon steel absorber plates. Typical thicknesses in one period are 3 mm of scintillator and 14 mm of absorber, measured parallel to the colliding beams’ axis, with a steel:scintillator volume ratio of 4.7:1. The calorimeter is divided into three longitudinal segments: one central long barrel (LB) section, 5.8 m in length (\(|\eta | < 1.0\)), and two extended barrel (EB) sections (\(0.8< |\eta | < 1.7\)), one on either side of the barrel, each 2.6 m long (Footnote 1). Full azimuthal coverage around the beam axis is achieved with 64 wedge-shaped modules, each covering \(\Delta \phi = 0.1\) radians. The Tile Calorimeter is located at an inner radial distance of 2.28 m from the LHC beam-line and has three radial layers with depths of 1.5, 4.1, and \(1.8\lambda \) (\(\lambda \) stands for the nuclear interaction length; see Footnote 2) for the LB, and 1.5, 2.6, and \(3.3\lambda \) for the EB. The amount of material in front of the TileCal corresponds to \(2.3\lambda \) at \(\eta =0\) [1]. A detailed description of the ATLAS TileCal is provided in a dedicated Technical Design Report [3]; the construction, optical instrumentation and installation into the ATLAS detector are described in Refs. [4, 5].

The TileCal design is driven by the need to reconstruct hadrons, jets, and missing transverse momentum within the physics programme intended for the ATLAS experiment. For precision measurements involving the reconstruction of jets, the TileCal is designed to have a stand-alone jet energy resolution of \(\sigma /E = 50\%/\sqrt{E \mathrm {(GeV)}} \oplus 3\%\) [1, 3], where \(\oplus \) denotes addition in quadrature. To be sensitive to the full range of energies expected during the LHC lifetime, the response is required to be linear within 2% for jets up to 4 TeV. Good energy resolution and calorimeter coverage are essential for precise missing transverse momentum reconstruction. A special Intermediate Tile Calorimeter (ITC) system is installed between the LB and EB to recover energy lost in the transition region between the two calorimeters.
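As a quick numerical illustration of the design target above, the Python sketch below evaluates \(\sigma /E = a/\sqrt{E} \oplus c\) with \(a = 50\%\) and \(c = 3\%\). The function name is illustrative only and is not part of any ATLAS software.

```python
import math


def jet_resolution(energy_gev: float, stochastic: float = 0.50, constant: float = 0.03) -> float:
    """Relative jet energy resolution sigma/E = a/sqrt(E) (+) c, with (+) a quadrature sum."""
    if energy_gev <= 0:
        raise ValueError("energy must be positive")
    # math.hypot(x, y) = sqrt(x**2 + y**2), i.e. the quadrature sum of the two terms
    return math.hypot(stochastic / math.sqrt(energy_gev), constant)


for e in (50.0, 100.0, 500.0, 1000.0):
    print(f"E = {e:6.0f} GeV  ->  sigma/E = {100 * jet_resolution(e):.2f}%")
```

For example, a 100 GeV jet gives about 5.8%. The stochastic and constant terms are equal at \(E = (a/c)^2 \approx 278\) GeV; above that energy the constant term dominates.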

This paper presents the performance of the Tile Calorimeter during the first phase of LHC operation. Section 2 describes the experimental data and simulation used throughout the paper. Details of the online and offline signal reconstruction are provided in Sect. 3. The calibration and monitoring of the approximately 10,000 channels and data acquisition system are described in Sect. 4. Section 5 explains the system of online and offline data quality checks applied to the hardware and data acquisition systems. Section 6 validates the full chain of the TileCal calibration and reconstruction using events with single muons and hadrons. The performance of the calorimeter is summarised in Sect. 7.

1.1 The ATLAS Tile Calorimeter structure and read-out electronics

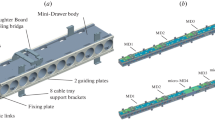

The light generated in each plastic scintillator tile is collected at two edges and transported to photomultiplier tubes (PMTs) by wavelength-shifting (WLS) fibres [5]. The read-out cell geometry is defined by grouping the fibres from individual tiles onto the corresponding PMT. A typical cell is read out on each side (edge) by one PMT, each PMT corresponding to one channel. The dimensions of the cells are \(\Delta \eta \times \Delta \phi = 0.1 \times 0.1\) in the first two radial layers, called layers A and BC (only layer B in the EB), and \(\Delta \eta \times \Delta \phi = 0.2 \times 0.1\) in the third layer, referred to as layer D. The projective layout of cells and the naming convention are shown in Fig. 1. The so-called ITC cells (D4, C10 and E-cells) are located between the LB and EB and provide coverage in the range \(0.8< |\eta | < 1.6\). Some of the C10 and D4 cells have reduced thickness or special geometry in order to accommodate services and read-out electronics for other ATLAS detector systems [3, 6]. The gap (E1–E2) and crack (E3–E4) cells consist only of scintillator and, as an exception, are read out by a single PMT. For Run 1, eight crack scintillators were removed per side to allow routing of fibres for 16 Minimum Bias Trigger Scintillators (MBTS), used to trigger on events from colliding particles, and to free up the electronics channels needed to read out the MBTS. The MBTS scintillators are also read out by the TileCal EB electronics.

The PMTs and front-end electronics are housed in a steel girder at the outer radius of each module, in 1.4 m long aluminium units called electronics drawers, which can be fully extracted while the rest of the module stays in place. Each drawer holds a maximum of 24 channels, and two drawers form a super-drawer. There are nominally 45 and 32 active channels per super-drawer in the LB and EB, respectively. Each channel consists of a unit called a PMT block, which contains the light-mixer, the PMT and the voltage divider, and a so-called 3-in-1 card [7, 8]. This card performs fast signal shaping in two gains (with a bi-gain ratio of 1:64) and slow integration of the PMT signal, and provides an input for the charge injection calibration system.

The maximum height of the analogue pulse in a channel is proportional to the amount of energy deposited by the incident particle in the corresponding cell. The shaped signals are sampled and digitised every 25 ns by 10-bit ADCs [9]. The sampled data are temporarily stored in a pipeline memory until a Level-1 trigger accept signal is received. Seven samples, centred around the pulse peak, are then read out. A gain switch determines which gain information is sent to the back-end electronics for event processing: by default the high-gain signal is used, unless any of the seven high-gain samples saturates the ADC, in which case the low-gain signal is transmitted.
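The gain-selection rule can be sketched as follows. This is a minimal illustration, assuming that "saturation" means a high-gain sample reaching the 10-bit full-scale code of 1023; the actual front-end logic and threshold may differ, and `select_gain` is a hypothetical name.

```python
from typing import List, Sequence, Tuple

ADC_FULL_SCALE = 1023  # largest code of a 10-bit ADC (assumed saturation value)


def select_gain(high_gain: Sequence[int], low_gain: Sequence[int]) -> Tuple[str, List[int]]:
    """Return the gain label and the seven samples that would be transmitted."""
    if len(high_gain) != 7 or len(low_gain) != 7:
        raise ValueError("expected seven samples per gain")
    if any(s >= ADC_FULL_SCALE for s in high_gain):
        return "low", list(low_gain)  # high gain saturated: fall back to low gain
    return "high", list(high_gain)  # default: transmit high gain


print(select_gain([50, 60, 400, 900, 500, 100, 55], [40] * 7)[0])
print(select_gain([50, 300, 1023, 1023, 800, 80, 50], [40, 45, 60, 70, 55, 42, 40])[0])
```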

Adder boards receive the analogue low-gain signal from the 3-in-1 cards and sum the signal from six 3-in-1 cards within \(\Delta \eta \times \Delta \phi = 0.1 \times 0.1\) before transmitting it to the ATLAS hardware-based trigger system as a trigger tower.

The integrator circuit measures PMT currents (0.01 nA to 1.4 \(\upmu \)A) over a long time window of 10–20 ms with one of the six available gains, and is used for calibration with a radioactive caesium source and to measure the rate of soft interactions during collisions at the LHC [10]. It is a low-pass DC amplifier that receives less than 1% of the PMT current, which is then digitised by a 12-bit ADC card (which saturates at 5 V) [11].

The front-end electronics of each super-drawer is powered by a low-voltage power supply (LVPS) housed in an external steel box mounted just outside the electronics super-drawer. The high voltage is set and distributed to each individual PMT by dedicated boards located inside the super-drawers alongside the front-end electronics.

The back-end electronics is located in a counting room approximately 100 m away from the ATLAS detector. The data acquisition system of the Tile Calorimeter is split into four partitions, corresponding to the ATLAS A-side (\(\eta > 0\)) and C-side (\(\eta < 0\)) halves of the LB and EB: LBA, LBC, EBA, and EBC. Optical fibres transmit signals between each super-drawer and the back-end trigger, timing and control (TTC) and read-out driver (ROD [12]) crates. There are a total of four TTC and ROD crates, one for each physical partition. The ATLAS TTC system distributes the LHC clock, trigger decisions, and configuration commands to the front-end electronics. When the TTC system sends a trigger-accept command to the front-end electronics, the corresponding digital signals for all channels of the calorimeter are sent to the RODs via optical links, where the signal is reconstructed for each channel.

2 Experimental set-up

The data used in this paper were taken by the Tile Calorimeter system using the full ATLAS data acquisition chain. In addition to the TileCal, other ATLAS subsystems assist in particle identification and in the reconstruction of tracks, momenta, and energies. The inner detector is composed of a silicon pixel detector (Pixel), a semiconductor tracker (SCT), and a transition radiation tracker (TRT). Together they provide tracking of charged particles for \(|\eta | < 2.5\), with a design resolution of \(\sigma _{p_\mathrm {T}}/p_{\mathrm {T}} = 0.05\% \cdot p_{\mathrm {T}} \mathrm {(GeV)} \oplus 1\%\) [1]. The electromagnetic lead/liquid-argon barrel (EMB [13]) and endcap (EMEC [14]) calorimeters provide coverage for \(|\eta | < 3.2\). The energy resolution of the liquid-argon (LAr) electromagnetic calorimeter is designed to be \(\sigma _E/E = 10\%/\sqrt{E \mathrm {(GeV)}} \oplus 0.7\%\). The hadronic calorimetry in the central part of the detector (\(|\eta | < 1.7\)) is provided by the TileCal, which is described in detail in Sect. 1. In the endcap region (\(1.5< |\eta | < 3.2\)) hadronic calorimetry is provided by a LAr/copper sampling calorimeter (HEC [15]) located behind the LAr/lead electromagnetic endcap calorimeter with accordion geometry, while in the forward region (\(3.2< |\eta | < 4.9\)) the FCal [16] provides electromagnetic (first module, LAr/copper) and hadronic (second and third modules, LAr/tungsten) calorimetry. The muon spectrometer, the outermost part of the ATLAS detector, uses monitored drift tubes and, in the endcaps, cathode strip chambers for muon track reconstruction in \(|\eta | < 2.7\). Resistive plate chambers (RPCs) and thin gap chambers (TGCs) are used to trigger on muons in the range \(|\eta | < 2.4\). ATLAS has four superconducting magnet systems. In the central region, a 2 T solenoid placed between the inner detector and the calorimeters is complemented by 0.5 T barrel toroid magnets located outside the TileCal. Each endcap region has its own toroid magnet, placed between the TileCal and the muon system and producing a field of 1.0 T.

A three-level trigger system [17] was used by ATLAS in Run 1 to reduce the event rate from a maximum raw rate of 40 MHz to the 200 Hz that is written to disk. The Level 1 Trigger (L1) is hardware-based and makes its decision using the energy collected in coarse regions of the calorimeter and hits in the muon spectrometer trigger system. The High Level Trigger (HLT) is composed of the Level 2 Trigger (L2) and the Event Filter (EF). The HLT uses the full detector information in the regions of interest defined by L1. The reconstruction is further refined in going from L2 to the EF, with the EF using the full offline reconstruction algorithms. A trigger chain is defined by the sequence of algorithms used in going from L1 to the EF. Events passing the trigger selection criteria are sorted into streams according to the trigger category that selected them. Physics streams are composed of triggers that are used to identify physics objects (electrons, photons, muons, jets, hadronically-decaying \(\tau \)-leptons, missing transverse momentum) in collision data. There are also calibration streams used by the various subsystems for calibration and monitoring purposes, which take data during empty bunch crossings in collision runs or in dedicated calibration runs. Empty bunch crossings are those that contain no proton bunch and are separated from any filled bunch by at least five bunch crossings, to ensure that signals from collision events have cleared the detector. The calibration and monitoring data are explained in more detail in the next sections.
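The definition of an empty bunch crossing can be illustrated with a short sketch. It assumes the 3564 bunch slots (25 ns apart) of one LHC orbit and interprets "separated by at least five bunch crossings" as a distance of at least five slots from any filled bunch; `empty_crossings` is a hypothetical helper, not ATLAS trigger code.

```python
from typing import Iterable, List

N_BUNCH_SLOTS = 3564  # 25 ns bunch slots per LHC orbit


def empty_crossings(filled: Iterable[int], min_gap: int = 5,
                    n_slots: int = N_BUNCH_SLOTS) -> List[int]:
    """Return slots that are unfilled and at least min_gap slots from every filled bunch."""
    vetoed = [False] * n_slots
    for f in filled:
        # Veto the filled slot itself and every slot closer than min_gap,
        # wrapping around the orbit with the modulo operation.
        for d in range(-(min_gap - 1), min_gap):
            vetoed[(f + d) % n_slots] = True
    return [slot for slot in range(n_slots) if not vetoed[slot]]


# Example: two filled bunches 50 ns (two slots) apart.
usable = empty_crossings([100, 102])
print(len(usable), 96 in usable, 95 in usable)
```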

2.1 ATLAS experimental data

The full ATLAS detector started recording events from cosmic-ray muons in 2008 as a part of the detector commissioning [6, 18]. Cosmic-ray muon data from 2008–2010 are used to validate test beam and in situ calibrations, and to study the full calorimeter in the ATLAS environment; these results are presented in Sect. 6.1.1.

The first \(\sqrt{s} = 7\) TeV proton–proton (pp) collisions were recorded in March 2010, starting a rich physics programme at the LHC. In 2011 the LHC pp collisions continued at \(\sqrt{s} = 7\) TeV, but the instantaneous luminosity increased and the bunch spacing decreased to 50 ns. In 2012 the centre-of-mass energy increased to 8 TeV. In total, nearly 30 fb\(^{-1}\) of proton collision data were delivered to ATLAS during Run 1. A summary of the LHC beam conditions for 2010–2012, representing the collision data under study in this paper, is shown in Table 1. In ATLAS, data collected over a long period of time, such as an LHC fill or another period of generally stable conditions, are grouped into a “run”, while the entire running period under similar conditions over several years is referred to as a “Run”. Data taken within a run are divided into elementary units called luminosity blocks, each corresponding to up to one minute of collision data during which detector conditions and software calibrations remain approximately constant.

ATLAS also recorded data during these years with lower-energy proton collisions (at \(\sqrt{s}\) = 900 GeV and 2.76 TeV) and with lead-ion collisions. However, this paper focuses on the results obtained in pp collisions at \(\sqrt{s} = 7\) and 8 TeV.

2.2 Monte Carlo simulations

Monte Carlo (MC) simulated data are frequently used by performance and physics groups to predict the behaviour of the detector. It is crucial that the MC simulation closely matches the actual data, so those relying on simulation for algorithm optimisations and/or searches for new physics are not misled in their studies.

The MC process is divided into four steps: event generation, simulation, digitisation, and reconstruction. The event generators used in the various analyses are described in the corresponding subsections. The ATLAS MC simulation [19] relies on the Geant4 toolkit [20] to model the detector and the interactions of particles with the detector material. During Run 1, ATLAS used the so-called QGSP_BERT physics list to describe hadronic interactions with matter: at high energies the hadron showers are modelled using the quark–gluon string (QGS) model, and the Bertini intra-nuclear cascade model is used for lower-energy hadrons [21]. The input to the digitisation is a collection of hits in the active scintillator material, each characterised by its energy, time, and position. The energy deposited in the scintillator is divided by the calorimeter sampling fraction to obtain the channel energy [22]. In the digitisation step, the channel energy in GeV is converted into its equivalent charge using the electromagnetic scale constant (Sect. 4) measured in the beam tests. The charge is subsequently translated into the signal amplitude in ADC counts using the corresponding calibration constant (Sect. 4.3). The amplitude is convolved with the pulse shape and digitised every 25 ns, as in real data. The electronic noise is emulated and added to the digitised samples as described in Sect. 3.2. Pile-up (i.e. contributions from additional minimum-bias interactions occurring in the same bunch crossing as the hard-scattering collision or in nearby ones) is simulated with Pythia 6 [23] for 2010–2011 and Pythia 8 [24] for 2012, and mixed at realistic rates with the hard-scattering process of interest during the digitisation step. Finally, the same reconstruction methods as used for data, detailed in Sect. 3, are applied to the digitised simulated samples.
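The digitisation chain described above (channel energy to charge, charge to ADC amplitude, amplitude to pulse samples, then noise) can be sketched in Python. All numerical constants below (EM-scale constant, charge-to-ADC factor, pedestal, noise level) and the Gaussian pulse are hypothetical placeholders rather than calibrated TileCal values, and the noise is a simple white-noise stand-in for the emulation described in Sect. 3.2.

```python
import numpy as np

# Hypothetical placeholder constants (NOT the calibrated TileCal values).
EM_SCALE_PC_PER_GEV = 1.05   # charge per GeV at the electromagnetic scale
ADC_PER_PC_HIGH_GAIN = 81.0  # high-gain charge-to-ADC conversion
PEDESTAL_ADC = 50.0          # pedestal level in ADC counts
ADC_MAX = 1023               # 10-bit ADC full scale
SAMPLE_TIMES_NS = 25.0 * np.arange(-3, 4)  # seven samples; peak expected at the fourth


def toy_pulse(t_ns, width_ns=21.0):
    """Gaussian stand-in for the reference pulse shape, normalised to 1 at t = 0."""
    return np.exp(-0.5 * (np.asarray(t_ns, dtype=float) / width_ns) ** 2)


def digitise(channel_energy_gev, phase_ns=0.0, noise_rms_adc=1.5, pulse=toy_pulse, rng=None):
    """Channel energy (GeV) -> charge (pC) -> amplitude (ADC) -> seven integer ADC samples."""
    rng = np.random.default_rng() if rng is None else rng
    charge_pc = channel_energy_gev * EM_SCALE_PC_PER_GEV
    amplitude_adc = charge_pc * ADC_PER_PC_HIGH_GAIN
    # Scale the pulse shape, shifted by the time phase, and sample it every 25 ns.
    samples = PEDESTAL_ADC + amplitude_adc * pulse(SAMPLE_TIMES_NS - phase_ns)
    samples = samples + rng.normal(0.0, noise_rms_adc, size=samples.shape)  # white noise
    # Round to integer ADC codes and clip to the ADC range (saturation).
    return np.clip(np.rint(samples), 0, ADC_MAX).astype(int)


print(digitise(1.0, rng=np.random.default_rng(42)))
```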

3 Signal reconstruction

The electrical signal for each TileCal channel is reconstructed from seven consecutive digital samples, taken every 25 ns. Nominally, the signal pulse amplitude, time, and pedestal are reconstructed using the Optimal Filtering (OF) technique [25]. This technique weights the samples in accordance with a reference pulse shape. The reference pulse shape used for all channels is the average pulse shape from test beam data, with separate reference pulses for the high- and low-gain modes, both of which are shown in Fig. 2. The signal amplitude (\(A\)), time phase (\(\tau \)), and pedestal (\(p\)) for a channel are calculated from the ADC count of each sample \(S_i\) taken at time \(t_i\):

\[ A = \sum _{i=1}^{7} a_i S_i, \qquad \tau = \frac{1}{A}\sum _{i=1}^{7} b_i S_i, \qquad p = \sum _{i=1}^{7} c_i S_i, \]
where the weights (\(a_i\), \(b_i\), and \(c_i\)) are derived to minimise the variance of the reconstructed amplitude and time, i.e. to optimise their resolution, with separate sets of weights for high and low gain. Only electronic noise was considered in the minimisation procedure in Run 1.
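To illustrate how OF weights of this kind can be obtained, the sketch below solves a constrained minimisation for \(a_i\), \(b_i\), and \(c_i\). It assumes a linearised signal model \(S_i \approx p + A g_i - A\tau g'_i\), where \(g\) is the normalised pulse shape and \(g'\) its derivative, and requires each weight set to be unbiased for its own quantity and insensitive to the other two. The Gaussian pulse shape and the white-noise default (identity noise covariance) are assumptions; the reference shapes of Fig. 2 and the measured noise would be needed in practice.

```python
import numpy as np

# Seven sample times (ns) relative to the expected peak at the central sample.
SAMPLE_TIMES_NS = 25.0 * np.arange(-3, 4)


def toy_pulse(t, width_ns=21.0):
    """Hypothetical Gaussian stand-in for the pulse shape g(t), with g(0) = 1."""
    return np.exp(-0.5 * (np.asarray(t, dtype=float) / width_ns) ** 2)


def toy_pulse_derivative(t, width_ns=21.0):
    """Analytic derivative g'(t) of the toy pulse."""
    t = np.asarray(t, dtype=float)
    return -t / width_ns ** 2 * toy_pulse(t, width_ns)


def of_weights(noise_cov=None, t=SAMPLE_TIMES_NS):
    """Minimum-variance weights (a, b, c).

    Each weight vector w minimises w^T R w subject to C^T w = d, where the
    columns of C are g_i, g'_i and 1; the solution is w = R^-1 C (C^T R^-1 C)^-1 d.
    """
    n = len(t)
    R = np.eye(n) if noise_cov is None else np.asarray(noise_cov, dtype=float)
    C = np.column_stack([toy_pulse(t), toy_pulse_derivative(t), np.ones(n)])
    RinvC = np.linalg.solve(R, C)
    M = C.T @ RinvC

    def solve(d):
        return RinvC @ np.linalg.solve(M, np.asarray(d, dtype=float))

    a = solve([1.0, 0.0, 0.0])   # sum a*g = 1, sum a*g' = 0,  sum a = 0 -> A
    b = solve([0.0, -1.0, 0.0])  # sum b*g = 0, sum b*g' = -1, sum b = 0 -> A*tau
    c = solve([0.0, 0.0, 1.0])   # sum c*g = 0, sum c*g' = 0,  sum c = 1 -> p
    return a, b, c


def of_reconstruct(samples, weights):
    """Return (A, tau, p) from seven ADC samples using the weighted sums."""
    a, b, c = weights
    s = np.asarray(samples, dtype=float)
    amp = float(a @ s)
    tau = float(b @ s) / amp if amp != 0.0 else 0.0
    ped = float(c @ s)
    return amp, tau, ped


w = of_weights()
samples = 50.0 + 300.0 * toy_pulse(SAMPLE_TIMES_NS - 1.0)  # A = 300, tau = 1 ns, p = 50
print(of_reconstruct(samples, w))
```

Because the model is only linear in \(\tau \), fixed weights slightly underestimate the amplitude for a non-zero phase; with this toy pulse, a 1 ns phase gives a bias of order 0.1%.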

The reference pulse shapes for high gain and low gain, shown in arbitrary units [6]

The expected time of the pulse peak is calibrated such that for particles originating from collisions at the interaction point the pulse should peak at the central (fourth) sample, synchronous with the LHC 40 MHz clock. The reconstructed value of \(\tau \) represents the small time phase in ns between the expected pulse peak and the time of the actual reconstructed signal peak, arising from fluctuations in particle travel time and uncertainties in the electronics read-out.

Two modes of OF reconstruction were used during Run 1: an iterative and a non-iterative implementation. In the iterative method, the pulse shape is fitted recursively when the difference between the maximum and minimum samples is above a noise threshold. The initial time phase is taken as the time of the maximum sample, and each subsequent step uses the previous time phase as the starting input for the fit. For signals below the threshold, only one iteration is performed, assuming a pulse peaking at the central sample. For events with no out-of-time pile-up (see Sect. 3.3), this iterative method reconstructs the pulse peak time to within 0.5 ns. It is used to reconstruct events occurring asynchronously with the LHC clock, such as cosmic-ray muon data, and was also used for the 2010 proton collision data. As the number of minimum-bias events per bunch crossing increased, the non-iterative method, which is more robust against pile-up, was adopted: in 2011–2012 data the time phase was fixed for each individual channel and only a single fit to the samples was applied.

Online (in real time), the digital signal processor (DSP) in the ROD performs the signal reconstruction using the OF technique and provides the channel energy and time to the HLT. The conversion from signal amplitude in ADC counts to energy in MeV is done by applying channel-dependent calibration constants, which are described in the next section. The DSP reconstruction is limited by its use of fixed-point arithmetic, which has a precision of 0.0625 ADC counts (approximately 0.75 MeV in high gain) and also limits the precision of the channel-dependent calibration constants.
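The effect of the DSP's fixed-point precision can be sketched as follows, assuming 4 fractional bits (\(2^{-4} = 0.0625\) ADC counts) and round-to-nearest encoding; the rounding mode of the actual DSP firmware is not stated here. The value of about 12 MeV per high-gain ADC count is inferred from the "five ADC counts (approximately 60 MeV)" figure quoted in the next paragraph.

```python
FRACTION_BITS = 4             # 2**-4 = 0.0625 ADC counts, the precision quoted in the text
MEV_PER_ADC_HIGH_GAIN = 12.0  # approximate, inferred from "5 ADC counts ~ 60 MeV"


def to_fixed_point(amplitude_adc: float) -> int:
    """Encode an amplitude as an integer number of 1/16 ADC counts (rounding assumed)."""
    return round(amplitude_adc * (1 << FRACTION_BITS))


def from_fixed_point(code: int) -> float:
    """Decode a fixed-point code back to ADC counts."""
    return code / (1 << FRACTION_BITS)


def quantisation_step_mev() -> float:
    """Size of one fixed-point step expressed in MeV (high gain)."""
    return MEV_PER_ADC_HIGH_GAIN / (1 << FRACTION_BITS)


amp = 10.03
print(from_fixed_point(to_fixed_point(amp)), quantisation_step_mev())
```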

The offline signal is reconstructed using the same iterative or non-iterative OF technique as online. In 2010 the raw data were transmitted from the ROD for offline signal reconstruction, and the amplitude and time computed in the ROD were used only for the HLT decision. From 2011 onward, as the instantaneous luminosity increased, the output bandwidth of the ROD became saturated. Raw data are therefore transmitted for offline signal reconstruction only for channels in which the difference between the maximum and minimum \(S_i\) is larger than five ADC counts (approximately 60 MeV); for all other channels the ROD reconstruction results are used in the offline data processing.
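The raw-data transmission rule used from 2011 onward can be sketched as a simple selection on the sample range. The helper name and the input format (a mapping from a channel index to its seven ADC samples) are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def split_by_transmission(channels: Dict[int, Sequence[int]],
                          threshold_adc: int = 5) -> Tuple[List[int], List[int]]:
    """Return (channels whose raw samples are sent offline, channels using the DSP result)."""
    raw, dsp_only = [], []
    for ch, samples in sorted(channels.items()):
        # Raw data are kept only if max(S_i) - min(S_i) exceeds the threshold.
        if max(samples) - min(samples) > threshold_adc:
            raw.append(ch)
        else:
            dsp_only.append(ch)
    return raw, dsp_only


print(split_by_transmission({0: [50, 50, 51, 52, 51, 50, 50],
                             1: [50, 55, 90, 140, 100, 60, 52]}))
```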

The reconstructed phase \(\tau \) is expected to be small, but for any non-zero phase the non-iterative pulse reconstruction has a known bias that causes the reconstructed amplitude to be underestimated. A phase-dependent (parabolic) correction is therefore applied when the phase is reconstructed within half the LHC bunch spacing and the channel amplitude is larger than 15 ADC counts; the amplitude threshold reduces contributions from noise. Figure 3 shows the difference between the non-iterative energy reconstructed in the DSP without (circles) and with (squares) this parabolic correction, relative to the iterative reconstruction calculated offline, for data taken during 2011. Within time phases of \(\pm \,10\) ns, the difference between the iterative and the corrected non-iterative approaches is less than 1%.
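The gating logic of the phase correction can be sketched as below. The multiplicative form \(A(1 + k\tau ^2)\) and the coefficient \(k\) are hypothetical placeholders for the parabolic correction, whose actual parameters are not given here, and the half-window default of 12.5 ns assumes the nominal 25 ns LHC bunch spacing.

```python
def corrected_amplitude(amp_adc: float, phase_ns: float,
                        coeff_per_ns2: float = 1e-3,   # hypothetical coefficient k
                        half_window_ns: float = 12.5,  # assumes 25 ns bunch spacing
                        min_amp_adc: float = 15.0) -> float:
    """Apply a hypothetical parabolic phase correction A * (1 + k * tau**2)."""
    if abs(phase_ns) <= half_window_ns and amp_adc > min_amp_adc:
        return amp_adc * (1.0 + coeff_per_ns2 * phase_ns ** 2)
    # Outside the phase window or below the noise-motivated threshold: leave unchanged.
    return amp_adc


print(corrected_amplitude(100.0, 10.0), corrected_amplitude(10.0, 10.0))
```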

The difference between the energies reconstructed using the non-iterative (with the parabolic correction applied) and iterative OF technique as a function of energy can be seen in Fig. 4 for high \(p_{\text {T}} \) (\(> 20\) GeV) isolated muons taken from the 2010 \(\sqrt{s}=7\) TeV collision data. For channel energies between 200 and 400 MeV the mean difference between the two methods is smaller than 10 MeV. For channel energies larger than 600 MeV, the mean reconstructed energy is the same for the two methods.

The relative difference between the online channel energy (\(E_{\mathrm {DSP}}\)), calculated using the non-iterative OF method, and the offline channel energy (\(E_{\mathrm {OFLI}}\)), reconstructed using the iterative OF method, as a function of the phase computed by the DSP (\(t_{\mathrm {DSP}}\)), without correction (circles) and with the parabolic correction applied (squares). The error bars are the standard deviations (RMS) of the relative difference distribution. Data are shown for collisions in 2011

The absolute difference between the energies reconstructed using the optimal filtering reconstruction method with the non-iterative (\(E_{\mathrm {OFLNI}}\)) and iterative (\(E_{\mathrm {OFLI}}\)) signal reconstruction methods as a function of energy. The black markers represent the mean values of \(E_{\mathrm {OFLNI}} - E_{\mathrm {OFLI}}\) per bin of \(E_{\mathrm {OFLNI}}\). The parabolic correction is applied to \(E_{\mathrm {OFLNI}}\). The data shown use high \(p_{\text {T}} \) (\(> 20\) GeV) isolated muons from \(\sqrt{s}=7\) TeV collisions recorded in 2010

3.1 Channel time calibration and corrections

Accurate channel time calibration is essential for energy reconstruction, object selection, and time-of-flight analyses searching for hypothetical long-lived particles entering the calorimeter. Initial channel time calibrations are performed with laser and cosmic-ray muon events, and are later refined using beam-splash events from a single LHC beam [6]. A laser calibration system pulses laser light directly into each PMT. The system is used to calibrate the time of all channels in one super-drawer such that the laser signal is sampled simultaneously; these calibrations account for time delays due to the physical location of the electronics. Finally, the time calibration is set with collision data, considering in each event only channels that belong to a reconstructed jet. This approach mitigates the bias from pile-up noise (Sect. 3.3) and non-collision background. Since the reconstructed time depends slightly on the energy deposited by the jet in a cell (Fig. 5, left), the channel energy is further required to be in a fixed range (2–4 GeV) for the time calibration. An example of the reconstructed time spectrum in a channel satisfying these conditions is shown in Fig. 5 (right). The distribution shows a clear Gaussian core, whose mean determines the time calibration constant, with a small fraction of events in both the high- and low-time tails. The high-time tails are more evident in low-energy bins and are mostly due to the slow hadronic component of the shower development. Symmetric tails are due to out-of-time pile-up (see Sect. 3.3) and are not seen in 2010 data, where pile-up is negligible. The overall time resolution is evaluated with jets and muons from collision data, and is described in Sect. 6.3.
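Extracting a time calibration constant from a channel's time spectrum can be sketched as follows. Instead of a full Gaussian fit, this sketch swaps in an iterative mean within \(\pm 2\sigma \) of the current estimate, a simple way to suppress the asymmetric slow-hadronic and pile-up tails; the function name and parameters are hypothetical.

```python
import numpy as np


def time_calibration_constant(times_ns, energies_gev, e_min=2.0, e_max=4.0,
                              n_sigma=2.0, n_iter=5):
    """Estimate the Gaussian-core mean of a channel's time spectrum (hypothetical helper)."""
    times = np.asarray(times_ns, dtype=float)
    energies = np.asarray(energies_gev, dtype=float)
    # Keep only entries in the channel-energy window used for the calibration.
    t = times[(energies >= e_min) & (energies <= e_max)]
    if t.size < 2:
        raise ValueError("not enough entries in the energy window")
    mean, std = t.mean(), t.std()
    for _ in range(n_iter):
        core = t[np.abs(t - mean) <= n_sigma * std]  # drop entries in the tails
        if core.size < 2:
            break
        mean, std = core.mean(), core.std()
    return float(mean)


rng = np.random.default_rng(1)
times = np.concatenate([rng.normal(1.5, 0.5, 1000), np.full(50, 20.0)])
energies = np.full(times.size, 3.0)
print(round(time_calibration_constant(times, energies), 2))
```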

Left: the mean cell reconstructed time (average of the times in the two channels associated with the given cell) as measured with jet events. The mean cell time decreases with the increase of the cell energy due to the reduction of the energy fraction of the slow hadronic component of hadronic showers [26, 27]. Right: example of the channel reconstructed time in jet events in 2011 data, with the channel energy between 2 and 4 GeV. The solid line represents the Gaussian fit to the data

During Run 1 a problem was identified in which a digitiser could suddenly lose its time calibration settings. This problem, referred to as a “timing jump”, was later traced to the TTCRx chip on the digitiser board, which receives the clock configuration commands responsible for aligning the digitiser's sampling clock with the LHC clock. During operation these settings are sent to all digitisers when the super-drawers are configured, so a timing jump manifests itself at the beginning of a run or after a hardware failure that requires reconfiguration during a run. All attempts to avoid this feature at the hardware or configuration level failed, so detecting and correcting faulty time settings became an important issue. Fewer than 15% of all digitisers, randomly distributed throughout the TileCal, were affected by these timing jumps. All channels belonging to a given digitiser exhibit the same jump, and the magnitude of the shift for a given digitiser is the same for every jump.

Laser and collision events are used to detect and correct for the timing jumps. Laser events are recorded in parallel with physics data in empty bunch crossings. The reconstructed laser times are studied for each channel as a function of luminosity block. As the reconstructed time phase is expected to be close to zero, the monitoring algorithm searches for deviations larger than 3 ns from this baseline. Identified cases are classified as potential timing jumps and are automatically reported to a team of experts for manual inspection. The timing differences are saved in the database and applied as a correction in the offline data reconstruction.
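A minimal sketch of the laser-based detection step, assuming that per-luminosity-block laser times for all channels of one digitiser are available. Because all channels of a digitiser jump together, the sketch uses their median as a robust per-digitiser estimate; this choice, the data layout, and the function names are assumptions rather than the ATLAS monitoring implementation.

```python
from statistics import median
from typing import Dict, List, Sequence, Tuple


def find_timing_jumps(laser_times_by_lb: Dict[int, Sequence[float]],
                      threshold_ns: float = 3.0) -> List[Tuple[int, float]]:
    """Return (luminosity block, offset in ns) where the median laser time of one
    digitiser deviates from the expected zero phase by more than threshold_ns."""
    candidates = []
    for lb in sorted(laser_times_by_lb):
        offset = median(laser_times_by_lb[lb])
        if abs(offset) > threshold_ns:
            candidates.append((lb, offset))  # to be confirmed by an expert before use
    return candidates


def apply_correction(time_ns: float, offset_ns: float) -> float:
    """Subtract a confirmed timing-jump offset from a reconstructed channel time."""
    return time_ns - offset_ns


times = {1: [0.1, -0.2, 0.0], 2: [5.1, 4.9, 5.0], 3: [5.0, 5.2, 4.8]}
print(find_timing_jumps(times))
```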

Reconstructed jets from collision data are used as a secondary tool to verify timing jumps, but they require completion of the full data reconstruction chain and provide fewer events per luminosity block. These jets are used to verify timing jumps detected by the laser analysis, or are used by default when the laser is not operational. In the latter case, problematic channels can only be identified after the full reconstruction, and are corrected in data reprocessing campaigns.

A typical case of a timing jump is shown in Fig. 6 before (left) and after (right) the time correction. Before the correction the time step is clearly visible, and the times measured with laser and physics collision data agree well.

An example of timing jumps detected using the laser (full red circles) and physics (open black circles) events (left) before and (right) after the correction. The small offset of about 2 ns in collision data is caused by the energy dependence of the reconstructed time in jet events (see Fig. 5, left). In these plots, events with any energy are accepted to accumulate enough statistics

The overall impact of the timing jump corrections on the reconstructed time is studied with jets using 1.3 fb\(^{-1}\) of collision data taken in 2012. To reduce the dependence of the reconstructed time on the deposited energy, the channel energy is required to be \(E > 4\) GeV and read out in high-gain mode. The results are shown in Fig. 7, where the reconstructed time is shown for all calorimeter channels with and without the timing jump correction. While the Gaussian core, corresponding to channels not affected by timing jumps, remains essentially unchanged, the timing jump correction significantly reduces the number of events in the tails. The 95% quantile range around the peak position shrinks by 12% (from 3.3 ns to 2.9 ns), and the overall RMS improves by 9% (from 0.90 ns to 0.82 ns) after the corrections are applied. In preparation for Run 2, problematic digitisers were replaced and repaired. The new power supplies, discussed in the next section, also contribute significantly to reducing the number of timing jumps: their trips almost ceased (Sect. 5.4), which eliminates module reconfigurations during runs in Run 2.
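The two figures of merit quoted above can be computed as in the sketch below. It assumes that the "95% quantile range" is the width of the central interval between the 2.5% and 97.5% quantiles and that "RMS" is the standard deviation about the mean; the exact definitions used for Fig. 7 may differ.

```python
import numpy as np


def timing_summary(times_ns, coverage=0.95):
    """Return (central quantile range, RMS about the mean), both in ns."""
    t = np.asarray(times_ns, dtype=float)
    lo, hi = np.quantile(t, [(1.0 - coverage) / 2.0, (1.0 + coverage) / 2.0])
    rms = float(np.sqrt(np.mean((t - t.mean()) ** 2)))
    return float(hi - lo), rms


rng = np.random.default_rng(2)
width, rms = timing_summary(rng.normal(0.0, 0.8, 200_000))
print(f"95% range = {width:.2f} ns, RMS = {rms:.2f} ns")
```

With the quoted values, the relative changes are (3.3 - 2.9)/3.3, about 12%, and (0.90 - 0.82)/0.90, about 9%.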

3.2 Electronic noise

The total noise per cell is calculated taking into account two components, electronic noise and a contribution from pile-up interactions (so-called pile-up noise). These two contributions are added in quadrature to estimate the total noise. Since the cell noise is directly used as input to the topological clustering algorithm [28] (see Sect. 6), it is very important to estimate the noise level per cell with good precision.
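Written out, the quadrature sum of the two noise components reads as follows; the numerical example uses the average high–high cell noise of 23.5 MeV quoted below together with an assumed, purely illustrative pile-up term of 30 MeV.

\[ \sigma _{\mathrm {tot}} = \sqrt{\sigma _{\mathrm {el}}^2 + \sigma _{\mathrm {pu}}^2}, \qquad \text {e.g.}\ \sqrt{(23.5\,\mathrm {MeV})^2 + (30\,\mathrm {MeV})^2} \approx 38\,\mathrm {MeV}. \]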

The electronic noise in the TileCal, measured by fluctuations of the pedestal, is largely independent of external LHC beam conditions. Electronic noise is studied using large samples of high- and low-gain pedestal calibration data, which are taken in dedicated runs without beam in the ATLAS detector. Noise reconstruction of pedestal data mirrors that of the data-taking period, using the OF technique with iterations for 2010 data and the non-iterative version from 2011 onward.

The electronic noise per channel is calculated as the standard deviation (RMS) of the energy distribution in pedestal events. The time variation of the noise is studied with the complete 2011 dataset: it fluctuates by an average of 1.2% for high gain and 1.8% for low gain across all channels, indicating stable electronic noise constants.

As already mentioned in Sect. 1.1, a typical cell is read out by two channels. Therefore, the cell noise constants are derived for the four combinations of the two possible gains of the two input channels (high–high, high–low, low–high, and low–low). Figure 8 shows the mean cell noise (RMS) for all cells as a function of \(\eta \) for the high–high gain combination. The figure also shows the variation with cell type, reflecting the variation with cell size. The average cell noise is approximately 23.5 MeV. However, cells located in the highest \(|\eta |\) ranges show noise values closer to 40 MeV. These cells are formed by channels physically located near the LVPS, whose influence on the noise distribution is discussed below. Typical electronic noise values for the other gain combinations are 400–700 MeV for the high–low/low–high combinations and 600–1200 MeV for the low–low case. The high–high combination is relevant when the energy deposited in the cell is below about 15 GeV; at higher energies both channels are often in low-gain mode, and in the intermediate range (roughly 10–20 GeV) one channel is usually in high gain and the other in low gain.

During Run 1 the electronic noise of a cell was best described by a double Gaussian function: a narrow Gaussian core and a second, wider Gaussian function, also centred at zero, describing the tails [6]. A normalised double Gaussian template with three parameters (\(\sigma _1\), \(\sigma _2\), and the relative normalisation of the two Gaussian functions R) was used to fit the energy distribution:

The means of the two Gaussian functions are set to \(\mu _1 = \mu _2 = 0\), which is a good approximation for the cell noise. As input to the topological clustering algorithm, an equivalent width \(\sigma _{\mathrm {eq}}(E)\) is introduced so that the significance \(S = |E| /\sigma _{\mathrm {eq}}(E)\) of an energy \(E\) under the double Gaussian probability distribution function is expressed in units of standard deviations of a normal distribution.Footnote 3
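The template and the equivalent width can be sketched as follows. The sketch assumes the normalised form

\[ f_{2g}(E) = \frac{1}{1+R}\left[\frac{1}{\sqrt{2\pi }\,\sigma _1}\,e^{-E^2/(2\sigma _1^2)} + \frac{R}{\sqrt{2\pi }\,\sigma _2}\,e^{-E^2/(2\sigma _2^2)}\right], \]

in which \(R\) is taken to be the weight of the wide Gaussian relative to the core. This convention is an assumption. The sketch also assumes that \(\sigma _{\mathrm {eq}}(E)\) is defined by requiring the two-sided tail probability beyond \(|E|\) under \(f_{2g}\) to equal that of a normal distribution beyond \(S\) standard deviations, which is one natural reading of the definition above. The function names and constants are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def double_gauss_pdf(e, sigma1, sigma2, r):
    """Normalised zero-mean double Gaussian; r is the weight of the wide
    component relative to the core (assumed convention)."""
    g1 = math.exp(-0.5 * (e / sigma1) ** 2) / (math.sqrt(2.0 * math.pi) * sigma1)
    g2 = math.exp(-0.5 * (e / sigma2) ** 2) / (math.sqrt(2.0 * math.pi) * sigma2)
    return (g1 + r * g2) / (1.0 + r)


def double_gauss_rms(sigma1, sigma2, r):
    """RMS of the template: square root of the weighted mean of the variances."""
    return math.sqrt((sigma1 ** 2 + r * sigma2 ** 2) / (1.0 + r))


def tail_probability(e, sigma1, sigma2, r):
    """Two-sided probability P(|X| > |e|) under the double Gaussian."""
    a = abs(e) / SQRT2
    return (math.erfc(a / sigma1) + r * math.erfc(a / sigma2)) / (1.0 + r)


def significance(e, sigma1, sigma2, r, iterations=200):
    """S such that a normal distribution has the same two-sided tail
    probability beyond S standard deviations, i.e. erfc(S / sqrt(2)) = p.
    Solved by bisection, since erfc decreases monotonically."""
    p = tail_probability(e, sigma1, sigma2, r)
    lo, hi = 0.0, 40.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if math.erfc(mid / SQRT2) > p:
            lo = mid  # normal tail still too large: S lies above mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def sigma_eq(e, sigma1, sigma2, r):
    """Equivalent width with S = |E| / sigma_eq(E); undefined for E = 0."""
    if e == 0:
        raise ValueError("sigma_eq is undefined for E = 0")
    return abs(e) / significance(e, sigma1, sigma2, r)


# Illustrative constants (MeV): core 20, tails 40, relative weight 0.1.
for energy in (20.0, 60.0, 120.0):
    print(energy, round(sigma_eq(energy, 20.0, 40.0, 0.1), 1))
```

For these constants \(\sigma _{\mathrm {eq}}\) increases with \(|E|\), from close to \(\sigma _1\) near zero towards \(\sigma _2\) far in the tails. This is how the double Gaussian tails lower the significance of a large energy compared with a single Gaussian of width \(\sigma _1\).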

The \(\phi \)-averaged electronic noise (RMS) as a function of \(\eta \) of the cell, with both contributing read-out channels in high-gain mode. For each cell the average value over all modules is taken. The statistical uncertainties are smaller than the marker size. Values are extracted using all the calibration runs used for the 2011 data reprocessing. The different cell types are shown separately for each layer: A, BC, D, and E (gap/crack). The transition between the long and extended barrels can be seen in the range \(0.7< |\eta | < 1.0\)

The double Gaussian behaviour of the electronic noise is believed to originate from the LVPS used during Run 1: in test beam data, which were taken with temporary power supplies located far from the detector, the electronic noise followed a single Gaussian distribution. In December 2010, five of the original LVPSs were replaced by new versions. During operation in 2011 these new LVPSs proved more reliable, suffering virtually no trips, and the channels they powered showed lower electronic noise with a more single-Gaussian-like shape. Following this success, 40 more new LVPSs (corresponding to 16% of all LVPSs) were installed during the 2011–2012 LHC winter shutdown. Figure 9 shows the ratio of the RMS to the width of a single Gaussian fit to the electronic noise distribution for all channels, averaged over the 40 modules, before and after the replacement of the LVPS. With the new LVPS, the values of RMS\(/\sigma \) are closer to unity across all channels, implying a shape closer to a single Gaussian function. The average cell noise in the high–high gain case decreases to 20.6 MeV with the new LVPS.

The coherent component of the electronic noise was also investigated. Significant correlations were found only among channels belonging to the same motherboard;Footnote 4 for other pairs of channels the correlations are negligible. Methods to mitigate the coherent noise were developed;Footnote 5 they reduce the range of the correlation coefficients from (\(-\,40\%\), \(+\,70\%\)) to (\(-\,20\%\), \(+\,10\%\)) and also decrease the fraction of events in the tails of the double Gaussian noise distribution.
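The correlation measurement can be illustrated with simulated noise. The sketch below is a generic common-mode subtraction and not necessarily the mitigation method referred to in Footnote 5. It assumes a toy model with twelve channels per motherboard sharing one coherent noise term (hypothetical numbers), computes the Pearson correlation between two channels, and shows how subtracting the per-event board average removes the shared term.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def simulate_board(n_events, n_channels, coherent_sigma, rng):
    """Noise per event and channel: one term shared by all channels of the
    board plus an independent unit-width term per channel (toy model)."""
    events = []
    for _ in range(n_events):
        common = rng.gauss(0.0, coherent_sigma)
        events.append([common + rng.gauss(0.0, 1.0) for _ in range(n_channels)])
    return events


def subtract_common_mode(events):
    """Subtract, event by event, the mean over all channels of the board."""
    cleaned = []
    for ev in events:
        board_mean = sum(ev) / len(ev)
        cleaned.append([v - board_mean for v in ev])
    return cleaned


rng = random.Random(1)
raw = simulate_board(5000, 12, 1.0, rng)
clean = subtract_common_mode(raw)
before = pearson([ev[0] for ev in raw], [ev[1] for ev in raw])
after = pearson([ev[0] for ev in clean], [ev[1] for ev in clean])
print(f"correlation before: {before:+.2f}, after: {after:+.2f}")
```

In this toy model the correlation between two channels falls from about +0.5 to about −0.09. The small negative residual, \(-1/(n-1)\) for \(n\) channels, arises because each channel is included in its own board average.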

Electronic noise in the Monte Carlo simulations

The electronic noise, specific to each individual calorimeter cell, is emulated in the digitisation of the Monte Carlo signals. Since the noise is added to the individual samples in the MC simulation, it is assumed that the measured cell noise can be converted to an ADC noise in the digitisation step. The correlations between the two channels in the cell are not considered, so the constants of the double Gaussian function used to generate the electronic noise in the MC simulation are derived directly from the cell-level constants used for the real data. As a closure test, after reconstruction of the cell energies in the MC simulation, the cell noise constants are calculated using the same procedure as for real data; the reconstructed cell noise agrees with the cell noise used as input from the real data. Good agreement between data and MC simulation is also found for the energy of the TileCal cells, including low and negative amplitudes (see Fig. 10). This comparison uses 2010 data, where the pile-up contribution is negligible, so the noise contribution can be compared with data collected using a random trigger.
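The closure test can be sketched at the level of cell energies. The code below is a minimal illustration. It assumes the double Gaussian convention in which the core is drawn with probability \(1/(1+R)\) and the wide component with probability \(R/(1+R)\). Unlike the real procedure, it skips the conversion to per-sample ADC noise and the reconstruction step, and the constants are illustrative.

```python
import math
import random


def sample_double_gauss(n, sigma1, sigma2, r, rng):
    """Draw n zero-mean noise values from the double Gaussian mixture."""
    p_core = 1.0 / (1.0 + r)
    return [rng.gauss(0.0, sigma1 if rng.random() < p_core else sigma2)
            for _ in range(n)]


def rms(values):
    """Root mean square (the standard deviation for zero-mean noise)."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def expected_rms(sigma1, sigma2, r):
    """RMS implied by the input constants: weighted mean of the variances."""
    return math.sqrt((sigma1 ** 2 + r * sigma2 ** 2) / (1.0 + r))


# Input constants as if measured in data (illustrative values, MeV).
sigma1, sigma2, r = 20.0, 40.0, 0.1
simulated = sample_double_gauss(100_000, sigma1, sigma2, r, random.Random(7))
ratio = rms(simulated) / expected_rms(sigma1, sigma2, r)
print(f"closure: simulated/input RMS = {ratio:.3f}")
```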

3.3 Pile-up noise

The pile-up effects consist of two contributions, in-time pile-up and out-of-time pile-up. The in-time pile-up originates from multiple interactions in the same bunch crossing. In contrast, the out-of-time pile-up comes from minimum-bias events from previous or subsequent bunch crossings. The out-of-time pile-up is present if the width of the electrical pulse (Fig. 2) is longer than the bunch spacing, which is the case in Run 1, where the bunch spacing in runs used for physics analyses is 50 ns. The effects of pile-up on the cell noise are discussed in the following paragraphs.

The pile-up in the TileCal is studied as a function of the detector geometry and the mean number of inelastic pp interactions per bunch crossing \(\left<\mu \right>\) (averaged over all bunch crossings within a luminosity block and depending on the actual instantaneous luminosity and number of colliding bunches). The data are selected using a zero-bias trigger. This trigger unconditionally accepts events from collisions occurring a fixed number of LHC bunch crossings after a high-energy electron or photon is accepted by the L1 trigger, whose rate scales linearly with luminosity. This triggering provides a data sample which is not biased by any residual signal in the calorimeter system. Minimum-bias MC samples for pile-up noise studies were generated using Pythia 8 and Pythia 6 for 2012 and 2011 simulations, respectively. The noise described in this section contains contributions from both electronic noise and pile-up, and is computed as the standard deviation (RMS) of the energy deposited in a given cell.

The total noise (electronic noise and contribution from pile-up) in different radial layers as a function of \(|\eta |\) for a medium pile-up run (average number of interactions per bunch crossing over the whole run \(\left<\mu _{\mathrm {run}}\right>=15.7\)) taken in 2012 is shown in Fig. 11. The plots make use of the \(\eta \) symmetry of the detector and use cells from both \(\eta \) sides in the calculation. In the EB standard cells (all except E-cells), where the electronic noise is almost flat (see Fig. 8), the amount of upstream material increases with \(|\eta |\) [1], so the pile-up contribution to the total noise visibly decreases. The special cells (E1–E4), representing the gap and crack scintillators, experience the highest particle flux and have the highest amount of pile-up noise: cell E4 (\(|\eta | = 1.55\)) exhibits about 380 MeV of noise at \(\left<\mu _{\mathrm {run}}\right>=15.7\), of which about 5 MeV is attributed to electronic noise. In general, the trends seen in the data for all layers as a function of \(|\eta |\) are reproduced by the MC simulation, although the total noise observed in data exceeds that in the simulation by up to 20%.
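Separating the pile-up part of the total noise requires a model of how the two components combine. A common assumption, used in the sketch below but not stated in the text, is that pile-up and electronic noise are uncorrelated, so that their variances add. With the E4 numbers quoted above, the electronic part is then negligible.

```python
import math


def pileup_noise(total_rms, electronic_rms):
    """Pile-up noise from the total and electronic RMS, assuming the two
    contributions are uncorrelated so that variances add in quadrature."""
    if total_rms < electronic_rms:
        raise ValueError("total noise cannot be smaller than the electronic noise")
    return math.sqrt(total_rms ** 2 - electronic_rms ** 2)


# Cell E4 at <mu_run> = 15.7: about 380 MeV total, about 5 MeV electronic.
print(f"{pileup_noise(380.0, 5.0):.2f} MeV")  # prints 379.97 MeV
```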

The total noise per cell as a function of \(|\eta |\) for \(\left<\mu _{\mathrm {run}}\right>=15.7\), for the high–high gain combination. The data from a 2012 run, with a bunch spacing of 50 ns, are shown in black while the simulation is shown in blue. Four layers are displayed: layer A (top left), layer BC (top right), layer D (bottom left), and the special gap and crack cells (bottom right). The electronic noise component is shown in Fig. 8

The energy spectrum in the cell A12 is shown in Fig. 12(left) for two different pile-up conditions with \(\left<\mu \right>=20\) and \(\left<\mu \right>=30\). The mean energy reconstructed in TileCal cells is centred around zero in minimum-bias events. Increasing pile-up widens the energy distribution both in data and MC simulation. Reasonable agreement between data and simulation is found above approximately 200 MeV. However, below this energy, the simulated energy distribution is narrower than in data. This results in lower total noise in simulation compared with that in experimental data as already shown in Fig. 11. Figure 12(right) displays the average noise for all cells in the A-layer as a function of \(\left<\mu \right>\). Since this layer is the closest to the beam pipe among LB and EB layers, it exhibits the largest increase in noise with increasing \(\left<\mu \right>\). When extrapolating \(\left<\mu \right>\) to zero, the noise values are consistent with the electronic noise.
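The extrapolation of the noise to \(\left<\mu \right> = 0\) can be sketched with a straight-line fit. The sketch assumes, as a simplification not taken from the text, that the pile-up contribution to the variance grows linearly with \(\left<\mu \right>\) (independent interactions), so that \(\sigma _{\mathrm {tot}}^2 = \sigma _{\mathrm {el}}^2 + k\left<\mu \right>\). The input points are synthetic.

```python
import math


def fit_noise_vs_mu(mu_values, total_noise):
    """Least-squares line sigma_tot**2 = a + b * mu.

    Returns (electronic_noise, slope): the square root of the intercept is the
    noise extrapolated to <mu> = 0 under the linear-variance assumption."""
    n = len(mu_values)
    variances = [s * s for s in total_noise]
    mean_mu = sum(mu_values) / n
    mean_var = sum(variances) / n
    sxx = sum((m - mean_mu) ** 2 for m in mu_values)
    sxy = sum((m - mean_mu) * (v - mean_var) for m, v in zip(mu_values, variances))
    slope = sxy / sxx
    intercept = mean_var - slope * mean_mu
    return math.sqrt(max(intercept, 0.0)), slope


# Synthetic points: 25 MeV electronic noise, 100 MeV^2 per interaction.
mus = [10.0, 20.0, 30.0]
noise = [math.sqrt(25.0 ** 2 + 100.0 * m) for m in mus]
print(fit_noise_vs_mu(mus, noise))
```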

The area-normalised energy spectra in cells A12 over all TileCal modules for two different pile-up conditions \(\left<\mu \right>=20,\ 30\) (left) and the total noise, computed as the standard deviation of the energy distribution in all A-layer cells, as a function of \(\left<\mu \right>\) (right) for data and minimum-bias MC simulation in 2012

4 Calibration systems

Three calibration systems are used to maintain a time-independent electromagnetic (EM) energy scaleFootnote 6 in the TileCal, and account for changes in the hardware and electronics due to irradiation, ageing, and faults. The caesium (Cs) system calibrates the scintillator cells and PMTs but not the front-end electronics used for collision data. The laser calibration system monitors both the PMT and the same front-end electronics used for physics. Finally, the charge injection system (CIS) calibrates and monitors the front-end electronics. Figure 13 shows a flow diagram that summarises the components of the read-out tested by the different calibration systems. These three complementary calibration systems also aid in identifying the source of problematic channels. Problems originating strictly in the read-out electronics are seen by both laser and CIS, while problems related solely to the PMT are not detected by the charge injection system.

The signal amplitude A is reconstructed in units of ADC counts using the OF algorithm defined in Eq. (1). The conversion to channel energy, \(E_{\mathrm {channel}}\), is performed with the following formula:

where each \(C_i\) represents a calibration constant or correction factor, which are described in the following paragraphs.
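The conversion can be sketched under two assumptions that are not spelled out here: that Eq. (2) is a product of per-channel factors, and that \(C_{\mathrm {TB}}\), being quoted in pC/GeV, divides the charge, i.e. \(E_{\mathrm {channel}} = A\cdot C_{{\mathrm {ADC}\rightarrow \mathrm {pC}},\mathrm {CIS}}\cdot C_{\mathrm {Cs}}\cdot C_{\mathrm {laser}}/C_{\mathrm {TB}}\). The symbol \(C_{\mathrm {Cs}}\) for the caesium correction and all numerical inputs are illustrative.

```python
def channel_energy_gev(amplitude_adc, c_adc_to_pc, c_tb_pc_per_gev=1.050,
                       c_cs=1.0, c_laser=1.0):
    """Convert an OF amplitude (ADC counts) into channel energy (GeV).

    c_adc_to_pc     -- charge-injection constant, pC per ADC count
    c_tb_pc_per_gev -- test-beam EM scale in pC/GeV, hence a divisor
    c_cs, c_laser   -- dimensionless Cs and laser corrections (hypothetical names)
    """
    charge_pc = amplitude_adc * c_adc_to_pc
    return charge_pc * c_cs * c_laser / c_tb_pc_per_gev


# 100 ADC counts at an illustrative 0.1 pC per count, no Cs or laser correction.
print(f"{channel_energy_gev(100.0, 0.1):.3f} GeV")
```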

The overall EM scale \(C_{\mathrm {TB}}\) was determined in dedicated beam tests with electrons incident on 11% of the production modules [6, 27]. It amounts to \(1.050\,\pm \,0.003\) pC/GeV with an RMS spread of \((2.4\,\pm \,0.1)\)% in layer A, with additional corrections applied to the other layers as described in Sect. 4.1. The remaining calibration constants in Eq. (2) correct for both inherent and time-varying differences in the optical and electrical read-out of individual channels. They are calculated using three dedicated calibration systems (caesium, laser, charge injection), which are described in more detail in the following subsections. Each calibration system determines its constants to a precision better than 1%.

4.1 Caesium calibration

The TileCal exploits a radioactive \(^{137}\)Cs source to maintain the global EM scale and to monitor the optical and electrical response of each PMT in the ATLAS environment [30]. A hydraulic system moves this Cs source through the calorimeter using a network of stainless steel tubes inserted into small holes in each tile scintillator.Footnote 7 The beta decay of the \(^{137}\)Cs source produces 0.662 MeV photons at a rate of \(\sim 10^6\) Hz, generating scintillation light in each tile.Footnote 8 In order to collect a sufficient signal, the electrical read-out of the Cs calibration is performed using the integrator read-out path; the response is therefore a measure of the integrated current in a PMT. As described in Sect. 4.3, dedicated calibration runs of the integrator system show that the stability of individual channels was better than 0.05% throughout Run 1.

In June 2009 the high voltage (HV) of each PMT was adjusted so that its response to the Cs source equalled the response observed in the test beam. Corrections are applied to account for differences between these two environments, namely the different activities of the sources and the decay of \(^{137}\)Cs according to its half-life.

Three Cs sources are used to calibrate the three physical TileCal partitions in the ATLAS detector, one in the LB and one in each EB. A fourth source was used for beam tests and a fifth is used in a surface research laboratory at CERN. The response to each of the five sources was measured in April 2009 [6] and again in March 2013, at the end of Run 1, using a test module for both the LB and EB. The relative responses to the sources measured on these two dates agree to within 0.2%, confirming the expected \(^{137}\)Cs activity during Run 1.

A full Cs calibration scan through all tiles takes approximately six hours and was performed roughly once per month during Run 1. The precision of the Cs calibration in one typical cell is approximately 0.3%. For cells on the extreme sides of a partition the precision is 0.5% due to larger uncertainties associated with the source position. Similarly, the precision for the narrow C10 and D4 ITC cells is 3% and \(\sim \)1%, respectively, due to the absence of an iron end-plate between the tile and the Cs pipe. Without the end-plate, it is more difficult to distinguish the desired response, when the Cs source is inside the tile of interest, from the signal detected when the source moves towards a neighbouring tile row.

The Cs response as a function of time is shown in Fig. 14(left), averaged over all cells of a given radial layer. The solid line, enveloped by an uncertainty band, represents the expected response due to the decreasing activity of the three Cs sources in the ATLAS detector (\(-2.3\%\)/year). The error bars on each point represent the RMS spread of the response over all cells within a layer. There is a clear deviation from this expectation, with the relative difference between the measured and expected values shown in Fig. 14(right). The average up-drift of the response relative to the expectation was about 0.8%/year in 2009–2010. From 2010, when the LHC began operation, the upward and downward trends are correlated with beam conditions: the downward trends correspond to the presence of colliding beams, while the upward trends are evident in the absence of collisions. This effect is pronounced in the innermost layer A, while for layer D there is negligible change in response. It is even more evident in the pseudorapidity-dependent responses of individual layers. While a deviation of approximately 2.0% is seen in most LB-A cells (March 2012 to December 2012), in EB-A cells the deviation ranges from 3.5% (cell A13) to 0% (outermost cell A16). These results indicate that the total effect seen by the Cs system is due to a combination of scintillator irradiation and PMT gain changes (see Sect. 4.5 for more details).
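The expectation line follows from radioactive decay alone. A minimal sketch, using the literature half-life of \(^{137}\)Cs of about 30.1 years (a value not quoted in this paper), reproduces the \(-2.3\%\)/year trend and computes the relative deviation of a measurement from the expectation, the quantity plotted in Fig. 14(right). The function names are hypothetical.

```python
# Literature value of the 137Cs half-life (not quoted in this paper).
CS137_HALF_LIFE_YEARS = 30.08


def expected_cs_response(t_years, initial_response=1.0):
    """Response expected from source decay alone after t_years."""
    return initial_response * 0.5 ** (t_years / CS137_HALF_LIFE_YEARS)


def deviation_from_expectation(measured, t_years, initial_response=1.0):
    """Relative difference (measured - expected) / expected."""
    expected = expected_cs_response(t_years, initial_response)
    return (measured - expected) / expected


annual_drop = 1.0 - expected_cs_response(1.0)
print(f"expected change per year: {-100 * annual_drop:.1f}%")  # prints -2.3%
```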

The plot on the left shows the average response (in arbitrary units, a.u.) from all cells within a given layer to the \(^{137}\)Cs source as a function of time from July 2009 to December 2012. The solid line represents the expected response, where the Cs source activity decreases in time by \(-2.3\%\)/year. The coloured band shows the declared precision of the Cs calibration (\(\pm \, 0.3\)%). The plot on the right shows the percentage difference of the response from the expectation as a function of time averaged over all cells in all partitions. Both plots display only the measurements performed with the magnetic field at its nominal value. The first points in the plot on the right deviate from zero, as the initial HV equalisation was done in June 2009 using Cs calibration data taken without the magnetic field (not shown in the plot). The increasing Cs response in the last three measurements corresponds to the period without collisions after the Run 1 data-taking finished

The Cs calibration constants are derived using Cs calibration data taken with the full ATLAS magnetic field system on, as in the nominal physics configuration. The magnetic field effectively increases the light yield in scintillators approximately by 0.7% in the LB and 0.3% in the EB.

Since the response to the Cs source varies across the surface of each tile, additional layer-dependent weights are applied to maintain the EM scale across the entire calorimeter [27]. These weights reflect the different radial tile sizes in individual layers and the fact that the Cs source passes through tiles at their outer edge.

The total systematic uncertainty in applying the EM scale from the test beam environment to ATLAS was found to be 0.7%, with the largest contributions from variations in the response to the Cs sources in the presence of a magnetic field (0.5%) and the layer weights (0.3%) [27].

4.2 Laser calibration

A laser calibration system is used to monitor and correct for PMT response variations between Cs scans and to monitor channel timing during periods of collision data-taking [31, 32].

This laser calibration system consists of a single laser source, located off detector, able to produce short light pulses that are simultaneously distributed by optical fibres to all 9852 PMTs. The intrinsic stability of the laser light was found to be 2%, so to measure the PMT gain variations to a precision of better than 0.5% using the laser source, the response of the PMTs is normalised to the signal measured by a dedicated photodiode. The stability of this photodiode is monitored by an \(\alpha \)-source and, throughout 2012, its stability was shown to be 0.1%, and the linearity of the associated electronics response within 0.2%.

The calibration constants, \(C_{\mathrm {laser}}\) in Eq. (2), are calculated for each channel relative to a reference run taken just after a Cs scan, after new Cs calibration constants are extracted and applied. Laser calibration runs are taken for both gains approximately twice per week.

For the E3 and E4 cells, where the Cs calibration is not possible, the reference run is taken as the first laser run before data-taking of the respective year. As an example, the mean gain variation in the PMTs for each cell type, averaged over \(\phi \), between 19 March 2012 (before the start of collisions) and 21 April 2012 is shown in Fig. 15. The observed down-drift of approximately 1% mostly affects cells at the inner radius, which have higher current draws.

The mean gain variation in the PMTs for each cell type averaged over \(\phi \) between a stand-alone laser calibration run taken on 21 April 2012 and a laser run taken before the collisions on 19 March 2012. For each cell type, the gain variation was defined as the mean of a Gaussian fit to the gain variations in the channels associated with this cell type. A total of 64 modules in \(\phi \) were used for each cell type, with the exclusion of known pathological channels

The laser calibration constants were not used during 2010. For data taken in 2011 and 2012 these constants were calculated and applied for channels with PMT gain variations larger than 1.5% (2%) in the LB (EB), as determined by the low-gain calibration run, provided that a consistent drift was measured in the equivalent high-gain run. In 2012 up to 5% of the channels were corrected using the laser calibration system. The laser calibration constants for E3 and E4 cells were applied starting in the summer of 2012, and were applied retroactively to earlier data when the ATLAS data were reprocessed with updated detector conditions. The total statistical and systematic uncertainties of the laser calibration constants are 0.4% for the LB and 0.6% for the EBs, where the EBs experience larger current draws due to higher exposure.
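The photodiode normalisation and the correction criteria can be sketched per channel. The sketch assumes that the gain variation is the ratio of photodiode-normalised PMT signals in the current and reference runs, and that a drift counts as consistent between gains when the two measurements agree within a tolerance. That tolerance, the partition labels and the function names are hypothetical.

```python
def laser_gain_variation(pmt, diode, pmt_ref, diode_ref):
    """Relative PMT gain change w.r.t. the reference run. Each PMT signal is
    divided by the photodiode signal to cancel laser-intensity fluctuations."""
    return (pmt / diode) / (pmt_ref / diode_ref) - 1.0


def laser_constant(dg_low, dg_high, partition, consistency_tol=0.005):
    """C_laser for one channel, or 1.0 when no correction is applied.

    Thresholds from the text: |dG| above 1.5 % (LB) or 2 % (EB) in the
    low-gain run, with a consistent drift in the high-gain run.
    consistency_tol and the partition labels are hypothetical."""
    threshold = 0.015 if partition.startswith("LB") else 0.020
    if abs(dg_low) > threshold and abs(dg_low - dg_high) < consistency_tol:
        return 1.0 / (1.0 + dg_low)  # undo the measured gain change
    return 1.0


# A PMT whose normalised signal dropped by 1.8 % since the reference run.
dg = laser_gain_variation(pmt=98.2, diode=100.0, pmt_ref=100.0, diode_ref=100.0)
print(laser_constant(dg, dg, "LB"), laser_constant(dg, dg, "EB"))
```

With these inputs the channel is corrected in the LB but not in the EB, where the 2% threshold is not exceeded.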

4.3 Charge injection calibration

The charge injection system is used to calculate the constant \(C_{{\mathrm {ADC}\rightarrow \mathrm {pC}},\mathrm {CIS}}\) in Eq. (2), which is applied to both physics signals and laser calibration data. A part of this system is also used to calibrate the gain conversion constant for the slow integrator read-out.

All 19704 ADC channels in the fast front-end electronics are calibrated by injecting a known charge from the 3-in-1 cards, repeated for a wide range of charge values (approximately 0–800 pC in low-gain and 0–12 pC in high-gain). A linear fit to the mean reconstructed signal (in ADC counts) yields the constant \(C_{{\mathrm {ADC}\rightarrow \mathrm {pC}},\mathrm {CIS}}\). During Run 1 the precision of the system was better than 0.7% for each ADC channel.

Charge injection calibration data are typically taken twice per week in the absence of colliding beams. For channels where the calibration constant varies by more than 1.0%, the constant is updated for the energy reconstruction. Figure 16 shows the stability of the charge injection constants as a function of time in 2012 for the high-gain and low-gain ADC channels. Similar stability was seen throughout 2010 and 2011. At the end of Run 1 approximately 1% of all ADC channels could not be calibrated with the CIS, mostly because of hardware problems that developed over time; default \(C_{{\mathrm {ADC}\rightarrow \mathrm {pC}},\mathrm {CIS}}\) constants are used for these channels.
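The charge injection fit and the update rule can be sketched as below. The sketch assumes an ordinary least-squares straight line (with intercept) through the mean ADC response versus injected charge, and that the 1.0% criterion is applied to the ratio of the new to the stored constant. The injected charges and responses are synthetic.

```python
def cis_constant(charges_pc, mean_adc):
    """Inverse slope of a least-squares line through mean ADC counts versus
    injected charge, i.e. the conversion factor in pC per ADC count."""
    n = len(charges_pc)
    mean_q = sum(charges_pc) / n
    mean_a = sum(mean_adc) / n
    sxx = sum((q - mean_q) ** 2 for q in charges_pc)
    sxy = sum((q - mean_q) * (a - mean_a) for q, a in zip(charges_pc, mean_adc))
    return sxx / sxy  # 1 / slope, where slope = sxy / sxx in ADC counts per pC


def needs_update(new_constant, stored_constant, tolerance=0.01):
    """True if the constant moved by more than 1.0 % from the stored value."""
    return abs(new_constant / stored_constant - 1.0) > tolerance


# Synthetic high-gain scan: 80 ADC counts per pC on top of a 50-count pedestal.
charges = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
adc = [50.0 + 80.0 * q for q in charges]
c = cis_constant(charges, adc)
print(f"C = {c:.5f} pC/ADC, update: {needs_update(c, 0.0125)}")
```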

Stability of the charge injection system constants for the low-gain ADCs (left) and high-gain ADCs (right) as a function of time in 2012. Values for the average over all channels and for one typical channel with the 0.7% systematic uncertainty are shown. Only good channels not suffering from damaged components relevant to the charge injection calibration are included in this figure

The slow integrator read-out is used to measure the PMT current over \(\sim \!\!10\) ms. Dedicated runs are periodically taken to calculate the integrator gain conversion constant for each of the six gain settings, by fitting the linear relationship between the injected current and measured voltage response. The stability of individual channels is better than 0.05%, the average stability is better than 0.01%.

4.4 Minimum-bias currents

Minimum-bias (MB) inelastic proton–proton interactions at the LHC produce signals in all PMTs, which are used to monitor the variations of the calorimeter response over time using the integrator read-out (as used by the Cs calibration system).Footnote 9 The MB rate is proportional to the instantaneous luminosity, and produces signals in all subdetectors, which are uniformly distributed around the interaction point. In the integrator circuit of the Tile Calorimeter this signal is seen as an increased PMT current I calculated from the ADC voltage measurement as:

where the integrator gain constant (Int. gain) is calculated using the CIS calibration, and the pedestal (ped) is taken from physics runs before collisions but with circulating beams (to account for beam background sources such as beam halo and beam–gas interactions). Studies found that the integrator response is linear (non-linearity \(<1\%\)) for instantaneous luminosities between \(1\times 10^{30}\) and \(3\times 10^{34}\) cm\(^{-2}\)s\(^{-1}\).
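The current calculation can be sketched as follows. The sketch assumes the relation \(I = (\mathrm {ADC} - \mathrm {ped})/\mathrm {Int.\ gain}\), with the ADC reading and pedestal in mV and the integrator gain in M\(\Omega \), so that \(I\) is in nA. The linearity check fits a line through the origin of current versus luminosity and reports the largest relative residual. This definition of non-linearity is an assumption, and all numbers are illustrative.

```python
def pmt_current_na(adc_mv, ped_mv, int_gain_mohm):
    """PMT current (nA) from the integrator ADC voltage and pedestal (mV)
    and the integrator gain (MOhm); mV / MOhm = nA."""
    return (adc_mv - ped_mv) / int_gain_mohm


def max_nonlinearity(luminosities, currents):
    """Largest relative deviation of the currents from a proportional fit
    I = k * L (least squares through the origin); luminosities must be > 0."""
    k = (sum(lum * cur for lum, cur in zip(luminosities, currents))
         / sum(lum * lum for lum in luminosities))
    return max(abs(cur - k * lum) / (k * lum)
               for lum, cur in zip(luminosities, currents))


# Illustrative values only.
print(pmt_current_na(12.0, 2.0, 10.0))
print(max_nonlinearity([1.0, 2.0, 3.0], [1.0, 2.0, 3.03]))
```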

Due to the distribution of upstream material and the distance of cells from the interaction point, the MB signal seen in the TileCal is not expected to be uniform. Figure 17 shows the measured PMT current versus cell \(\eta \) (averaged over all modules) for a fixed instantaneous luminosity. As expected, the largest signal is seen for the A-layer cells, which are closest to the interaction point; cell A13 (\(|\eta | = 1.3\)), located in the EB behind minimal upstream material, exhibits the highest currents.

The currents induced in the PMTs due to MB activity are used to validate response changes observed by the Cs calibration system as well as for response monitoring during the physics runs. Moreover, they probe the response in the E3 and E4 cells, which are not calibrated by Cs.

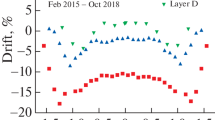

4.5 Combination of calibration methods

The TileCal response is expected to vary over time, with particular sensitivity to changing LHC luminosity conditions. Figure 18 shows the variation of the response measured by the MB, Cs, and laser calibration systems for cell A13 as a function of time in 2012. Cell A13 is located in the EB and, due to the smaller amount of upstream material, is exposed to one of the highest radiation doses of all cells, as also seen in Fig. 17. The effects of PMT and scintillator changes can be disentangled by comparing the laser and MB (or Cs) responses.

The PMT gain, as monitored with the laser, is known to decrease with increasing light exposure due to lower secondary emission from the dynode surfaces [33].Footnote 10 When a PMT is exposed to light after a long period of ‘rest’, its gain initially decreases rapidly and then slowly stabilises [34]. This behaviour is demonstrated in Fig. 18: the data-taking in 2012 started after four months of inactivity and was followed by gain stabilisation after several weeks of LHC operation. The same trends were also observed in 2011. The periods of recovery, where the laser response tends towards initial conditions, coincide with times when the LHC is not colliding protons. This is consistent with the known behaviour of ‘fatigued’ PMTs, which gradually return towards their original operating conditions after the exposure is removed [35]. A global PMT gain increase of 0.9% per year is observed even without any exposure (e.g. between 2003 and 2009). This is consistent with Fig. 14(right): after 3.5 years the total gain increase corresponds to approximately 3.5%. Throughout Run 1 the maximum loss of the PMT gain in A13 is approximately 3%, but at the end of 2012, after periods of inactivity, the gain had essentially recovered from this loss.

The responses measured by the Cs and MB systems, which are sensitive to both PMT gain changes and scintillator irradiation, show consistent behaviour. The difference between the MB (or Cs) and laser response variations is interpreted as an effect of the scintillators’ irradiation. The transparency of scintillator tiles is reduced after radiation exposure [36]; in the TileCal this is evident in the continued downward trend of the response to MB events (and Cs) with increasing integrated luminosity, despite the eventual slow recovery of the PMTs described above. In the absence of radiation, the annealing process is believed to slowly restore the scintillator material, improving the collected light yield. The rate and amount of scintillator damage and recovery depend on a complicated combination of factors, such as particle energies, temperatures, exposure rates and duration, and are difficult to quantify.
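The separation of PMT and scintillator effects can be sketched for a single cell. The text interprets the difference between the MB (or Cs) and laser variations as the scintillator effect. The sketch also shows a ratio form, which assumes, as a hypothesis not stated in the text, that the cell response factorises into a PMT gain term and a light-yield term. For changes of a few per cent the two estimates agree closely.

```python
def scintillator_effect(mb_variation, laser_variation):
    """Estimate used in the text: MB (or Cs) variation minus laser variation."""
    return mb_variation - laser_variation


def scintillator_effect_ratio(mb_variation, laser_variation):
    """Alternative assuming response = PMT gain x light yield: divide out the
    PMT part measured by the laser (hypothetical factorisation)."""
    return (1.0 + mb_variation) / (1.0 + laser_variation) - 1.0


# Illustrative cell: MB response -3 %, laser (PMT gain) -1 %.
print(scintillator_effect(-0.03, -0.01), scintillator_effect_ratio(-0.03, -0.01))
```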

The change of response seen in cell A13 by the minimum-bias, caesium, and laser systems throughout 2012. Minimum-bias data cover the period from the beginning of April to the beginning of December 2012. The Cs and laser results cover the period from mid-March to mid-December. The variation versus time for the response of the three systems was normalised to the first Cs scan (mid-March, before the start of collisions data-taking). The integrated luminosity is the total delivered during the proton–proton collision period of 2012. The down-drifts of the PMT gains (seen by the laser system) coincide with the collision periods, while up-drifts are observed during machine development periods. The drop in the response variation during the data-taking periods tends to decrease as the exposure of the PMTs increases. The variations observed by the minimum-bias and Cs systems are similar, both measurements being sensitive to PMT drift and scintillator irradiation

The overlap between the different calibration systems allows calibration and monitoring of the complete hardware and read-out chain of the TileCal, and correction of response changes with fine granularity for effects such as changing luminosity conditions. These methods enable the identification of sources of response variations and, during data-taking, the correction of these variations to maintain the global EM scale throughout Run 1. When possible, problematic components are repaired or replaced during maintenance periods.

5 Data quality analysis and operation

A suite of tools is available to continuously monitor detector hardware and data acquisition systems during their operation. The work-flow is optimised to address problems that arise in real time (online) and afterwards (offline). For cases of irreparable problems, data quality flags are assigned to fractions of the affected data, indicating whether those data are usable for physics analyses with care (depending on the analysis) or must be discarded entirely.

5.1 ATLAS detector control system

An ATLAS-wide Detector Control System (DCS) [37, 38] provides a common framework to continuously monitor, control, and archive the status of all hardware and infrastructure components for each subsystem. The status and availability of each hardware component is visually displayed in real time on a web interface. This web interface also provides a detailed history of conditions over time to enable tracking of the stability. The DCS infrastructure stores information about individual device properties in databases.

The TileCal DCS is responsible for tracking the low voltage, high voltage, front-end electronics cooling systems, and back-end crates. The DCS monitoring data are used by automatic scripts to generate alarms if the actual values are outside the expected operating conditions. Actions to address alarm states can be taken manually by experts or, subject to certain criteria, executed automatically by the DCS.

The TileCal DCS system monitors the temperature of the front-end electronics with seven probes at various locations in the super-drawer. A temperature variation of \(1\,^{\circ }\)C would induce a PMT gain variation of 0.2% [6]. Analyses done over several data periods within Run 1 indicated the temperature is maintained within \(0.2\,^{\circ }\)C.

One key parameter monitored by the Tile DCS is the HV applied to each PMT; typical values are 650–700 V. Since the HV changes alter the PMT gain, an update of the calibration constants is required to account for the response change. The relative PMT gain variation \(\Delta G\) between a reference time \(t_\mathrm {r}\) and a time of interest t depends on the HV variation over the same period according to:

where the parameter \(\beta \) is extracted experimentally for each PMT. Its mean value is \(\beta = 7.0\) with an RMS of 0.2 across 97% of the measured PMTs. Hence, at a typical HV of about 700 V, a variation of 1 V (a relative change of about 1/700, amplified by the exponent \(\beta = 7\)) corresponds to a gain variation of about 1%.
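The HV dependence can be sketched assuming the usual power-law dependence of PMT gain on voltage, \(G \propto \mathrm {HV}^{\beta }\), which gives \(\Delta G = \left(\mathrm {HV}(t)/\mathrm {HV}(t_\mathrm {r})\right)^{\beta } - 1\). The sketch checks the 1 V rule of thumb at both ends of the typical 650–700 V range.

```python
def gain_variation(hv, hv_ref, beta=7.0):
    """Relative PMT gain change between a reference and a later HV value,
    assuming the gain is proportional to HV**beta."""
    return (hv / hv_ref) ** beta - 1.0


for hv_ref in (650.0, 700.0):
    dg = gain_variation(hv_ref + 1.0, hv_ref)
    print(f"+1 V at {hv_ref:.0f} V -> gain change {100 * dg:.2f}%")
```

To first order \(\Delta G \approx \beta \,\Delta \mathrm {HV}/\mathrm {HV}\), so the same 1 V step gives a slightly larger gain change at 650 V than at 700 V.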

The TileCal high-voltage system is based on remote HV bulk power supplies providing a single high voltage to each super-drawer. Each drawer is equipped with a regulator system (HVopto card) that provides fine adjustment of the voltage for each PMT. One controller (HVmicro card) manages two HVopto cards of the super-drawer. The HVmicro card reports actual HV values to the DCS through a CANbus network every few seconds.

Several studies were performed to quantify the stability of the HV of the PMTs and to identify unstable PMTs. One study compares the value of the measured HV with the expected HV for each PMT over the 2012 period. The difference between the measured and set high voltage (\(\Delta \mathrm {HV}\)) for each PMT is fitted with a Gaussian distribution, and the mean value is plotted for all good channels in a given partition. Good channels are all channels except those in modules that were turned off or in the so-called emergency state (described later). For each partition the mean value is approximately 0 V with an RMS spread of 0.44 V, showing good agreement. Another study investigates the time evolution of \(\Delta \mathrm {HV}\) for a given partition. The variation of the mean values versus time is lower than 0.05 V, demonstrating the stability of the HV system over the full period of the 2012 collision run.

In order to identify PMTs with unstable HV over time, \(\Delta \mathrm {HV}\) is computed every hour over the course of one day for each PMT, and plots showing the daily variation in HV over periods of several months are made. PMTs with \(\Delta \mathrm {HV} > 0.5\) V are flagged as potentially unstable. The gain variation for these channels is calculated using Eq. (3) (with knowledge of the \(\beta \) value for that particular PMT) and compared with the gain variation seen by the laser and Cs calibration systems. These calibration systems are insensitive to electrical failures associated with reading back the measured HV, and therefore provide a cross-check of apparent instabilities. Figure 19 shows the gain variation, as measured by the HV and calibration systems, for one PMT that suffered from large instabilities in 2012. The gain variations from the three methods agree. Only those channels that show instabilities in both the HV and calibration systems are classified as unstable. During 2012, only 15 PMTs (0.15% of the total number of PMTs) were found to be unstable.
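The two-step classification can be sketched for one PMT and one day of hourly readings. The sketch assumes that the largest hourly deviation from the set value is compared with the 0.5 V threshold, that the expected gain change follows a power law in HV with exponent \(\beta \) (one reading of Eq. (3)), and that agreement with the laser or Cs measurement is judged within a fixed tolerance. The tolerance and the function names are hypothetical.

```python
def gain_variation(hv, hv_ref, beta):
    """Relative gain change, assuming the gain is proportional to HV**beta."""
    return (hv / hv_ref) ** beta - 1.0


def is_unstable(hourly_hv, hv_set, beta, calib_gain_variation,
                hv_threshold=0.5, agreement_tol=0.005):
    """Two-step test for one PMT and one day of hourly HV readings.

    1. Flag the PMT if the largest hourly deviation from the set HV
       exceeds hv_threshold (0.5 V in the text).
    2. Confirm only if the gain change predicted from that deviation agrees
       with the laser/Cs measurement within agreement_tol (hypothetical).
    """
    deviations = [hv - hv_set for hv in hourly_hv]
    worst = max(deviations, key=abs)
    if abs(worst) <= hv_threshold:
        return False
    predicted = gain_variation(hv_set + worst, hv_set, beta)
    return abs(predicted - calib_gain_variation) < agreement_tol


# A PMT whose HV read back 2 V high for four hours, confirmed by the laser.
day = [700.0] * 20 + [702.0] * 4
print(is_unstable(day, 700.0, 7.0, calib_gain_variation=0.02))
```

A PMT whose HV read-back jumps but whose laser and Cs responses do not move is not classified as unstable, mirroring the cross-check against read-back failures described above.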

Gain variation of the PMT in module EBC64 with the largest gain variation. The plot compares the gain variation expected from the HV instability (tiny dots) with those measured by the laser (open squares) and Cs (full circles) systems during the whole 2012 run. Each HV point represents the gain variation averaged over one hour. The vertical structures are due to power cycles. The very good agreement between the three methods shows that even large variations can be detected and handled by the TileCal monitoring and calibration systems.
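The classification logic can be sketched as follows. This assumes that Eq. (3) is the power-law PMT gain relation \(G \propto \mathrm {HV}^{\beta }\), so a voltage deviation maps to a relative gain change \((\mathrm {HV}_{\mathrm {meas}}/\mathrm {HV}_{\mathrm {set}})^{\beta }-1\); the 0.5 V cut is applied here to \(|\Delta \mathrm {HV}|\), and the tolerance on the laser/Cs gain variation (`calib_tolerance`), the \(\beta\) value and all names are hypothetical.

```python
def gain_variation_from_hv(hv_measured, hv_set, beta):
    """Relative gain change implied by an HV deviation, assuming G ∝ HV**beta."""
    return (hv_measured / hv_set) ** beta - 1.0


def hv_unstable(hourly_delta_hv, threshold_v=0.5):
    """True if any hourly |ΔHV| exceeds the threshold (0.5 V in the text)."""
    return any(abs(d) > threshold_v for d in hourly_delta_hv)


def classify_unstable(hourly_delta_hv, calib_gain_variations, calib_tolerance, threshold_v=0.5):
    """Declare a PMT unstable only if both the HV read-back and the laser/Cs
    calibration see a problem; an HV-only signal may be a read-back fault."""
    calib_unstable = any(abs(g) > calib_tolerance for g in calib_gain_variations)
    return hv_unstable(hourly_delta_hv, threshold_v) and calib_unstable


# Hypothetical PMT with beta = 7: a 0.5 V excursion at 700 V shifts the gain by about 0.5%.
print(gain_variation_from_hv(700.5, 700.0, beta=7.0))
```

Requiring agreement between the two independent measurements is what protects against flagging a healthy PMT whose HV read-back electronics misbehave.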

5.2 Online data quality assessment and monitoring

During physics data-taking, TileCal experts are present in the ATLAS control room 24 hours a day, and a handful of remote experts are on call to assist with advanced interventions. The primary goal is to quickly identify and, where possible, correct any problem that cannot be fixed later in software and that could result in data loss. The ATLAS data quality framework performs automatic checks of the data and alerts experts to potential problems that warrant further investigation [39].

Common problems identified by TileCal experts during the online shifts include hardware failures that do not recover automatically and software configuration problems, which may appear as data corruption flags from the ROD data integrity checks. Such problems can affect the trigger efficiency and data acquisition, as well as the quality of the higher-level reconstructed data.

5.3 Offline data quality review

Shortly after the data are taken, a small fraction is promptly reconstructed on the Tier-0 computing farm within the ATLAS Athena software framework [40]. The offline data quality experts then use these reconstructed data, together with more complex tools, to evaluate the data quality. The experts have 48 hours to identify and, where possible, correct problems before the bulk reconstruction of the entire run. The TileCal offline experts can update the conditions database, which stores information such as the calibration constants and the status of each channel. Channels that suffer from high levels of noise have their calibration constants updated accordingly in the database. For channels that suffer from intermittent data corruption, data quality flags are assigned to the affected data so that these channels are excluded from the full reconstruction during that period. The 48-hour period is also used to identify digitiser timing jumps and to correct the time constants of the affected digitiser by the size of the jump.

Luminosity blocks can be flagged as defective to mark periods when the TileCal was not operating in its nominal configuration. Defects are either tolerable, in which case corrections are applied but the data should be analysed with additional caution, or intolerable, in which case the data are not considered suitable for physics analysis. Defects are entered into the ATLAS Data Quality Defect database [41], from which the information propagates to analyses and to the integrated luminosity calculations.

One luminosity block nominally spans one minute, so removing all data within that time can add up to a significant data loss. For the rare situations in which only a single event is affected by data corruption, an additional error-state flag is introduced into the reconstructed data and is used to remove such events from the analysis.
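A sketch of how the two granularities of rejection could be applied downstream: intolerable defects remove whole luminosity blocks, while the error-state flag removes single events. The record layout (`run`, `lumi_block`, `tile_error` keys) and the `Defect` class are hypothetical stand-ins, not the interface of the ATLAS Data Quality Defect database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Defect:
    run: int
    lumi_block: int
    intolerable: bool  # tolerable defects keep the data, to be analysed with caution


def select_events(events, defects):
    """Drop events in intolerable luminosity blocks and events carrying the Tile error flag."""
    bad_blocks = {(d.run, d.lumi_block) for d in defects if d.intolerable}
    kept = []
    for ev in events:
        if (ev["run"], ev["lumi_block"]) in bad_blocks:
            continue  # the whole (nominally one-minute) block is rejected
        if ev["tile_error"]:
            continue  # only this corrupted event is rejected
        kept.append(ev)
    return kept
```

The event-level flag keeps the rest of a luminosity block usable, which is exactly the data that a block-level defect would have thrown away.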

Once all offline teams have reviewed the run, it is sent to the Tier-0 computing farm for bulk reconstruction, where the entire run is reconstructed using the most up-to-date conditions database. The data can subsequently be re-reconstructed when the reconstruction algorithms are improved and/or the conditions database is further refined to improve the description of the detector.Footnote 11 These data reprocessing campaigns typically take place several months after the data are taken.

5.4 Overall Tile Calorimeter operation

Overall, TileCal operation in Run 1 was highly successful, with an extremely high fraction of the data acceptable for physics analysis. Table 2 summarises the total integrated luminosity delivered to ATLAS and approved for analysis, along with the fraction of data passing the Tile Calorimeter data quality reviews.

In 2012, the total integrated luminosity lost after the first bulk reconstruction of the data due to TileCal data quality problems was \(104\,\mathrm {pb}^{-1}\) out of \(21.7\,\mathrm {fb}^{-1}\); it is summarised in Fig. 20 as a function of time for various categories of intolerable defects.Footnote 12 The primary source of Tile luminosity losses was the removal from processing of a read-out link (ROL), which transmits data from the ROD to the subsequent chain in the trigger and data acquisition system; while a ROL is removed, no data are received from the four corresponding modules. A ROL is disabled when it floods the trigger with data (because of a malfunctioning configuration or difficulties in processing data), putting the trigger into a busy state in which effectively no data can be read from any part of the detector. A ROL is removed during a run in a so-called stop-less recovery state, in which the run continues, since restarting a run can take several minutes. One role of the online experts is to identify these cases, correct the cause of the removal and re-enable the ROL in the run. Any ROLs that were previously removed are re-included when a new run begins. Improvements for handling ROL removals include monitoring plots counting the number of reconstructed Tile cells, in which large drops can indicate a ROL removal, and an automatic ROL recovery procedure. With the automatic recovery in place, a single ROL removal lasts less than 30 seconds, and losses due to ROL removal dropped dramatically in the second half of 2012. As the removal of a ROL affects four consecutive modules, this defect is classified as intolerable; it accounted for 45.2 pb\(^{-1}\) of data loss in 2012.
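The cell-count monitoring mentioned above can be illustrated by a simple threshold on the drop in reconstructed Tile cells. This is a hypothetical sketch: the expected count, the number of cells per module and the 50% threshold are illustrative parameters, not values used by the TileCal monitoring; only the four modules per ROL comes from the text.

```python
def suspect_rol_removals(cell_counts, n_expected, cells_per_module, modules_per_rol=4, fraction=0.5):
    """Return indices of samples whose reconstructed Tile cell count has dropped by at
    least `fraction` of the cells served by one read-out link (ROL)."""
    cells_per_rol = modules_per_rol * cells_per_module  # one ROL carries four modules
    return [i for i, n in enumerate(cell_counts) if n_expected - n >= fraction * cells_per_rol]


# Illustrative numbers only: the drop of 90 cells in the third sample is flagged,
# while the small fluctuation in the second sample is not.
print(suspect_rol_removals([5000, 4998, 4910, 5000], n_expected=5000, cells_per_module=22))
```

Scaling the threshold to the size of one ROL keeps ordinary single-channel masking from triggering false alarms.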

Power cuts or trips of the HV bulk power supply sources accounted for 22.6 pb\(^{-1}\) of lost integrated luminosity. A further 4.9 pb\(^{-1}\) was lost in situations in which the laser ROD became busy.Footnote 13 During 2012 this was mitigated by prompting the online expert to disable the laser ROD.

An additional loss of 31.3 pb\(^{-1}\) was due to a 25 ns timing shift in a large fraction of the EBC partition that was caught by neither the online nor the offline experts or tools. Improvements to detect such shifts include data quality monitoring warnings issued when the reconstructed time for a large number of Tile channels differs substantially from the expected value. The affected data were subsequently recovered in later reprocessing campaigns, once the timing constants in the database had been updated accordingly.

The sources and amounts of integrated luminosity lost due to Tile Calorimeter data quality problems in 2012 as a function of time. The primary source of luminosity losses is the stop-less read-out link (ROL) removal in the extended barrels, which accounts for 45.2 pb\(^{-1}\). Power cuts or trips of the 200 V power supplies account for 22.6 pb\(^{-1}\), and a further 4.9 pb\(^{-1}\) stems from Laser Calibration ROD (LASTROD) busy events. The 31.3 pb\(^{-1}\) lost because of a \(-25\) ns timing shift in EBC was recovered after the data were reprocessed with updated timing constants. Each bin in the plot represents about two weeks of data-taking
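As a quick consistency check, the four loss categories quoted in the text add up to the total of 104 pb\(^{-1}\), about 0.48% of 21.7 fb\(^{-1}\); excluding the 31.3 pb\(^{-1}\) recovered by reprocessing leaves about 72.7 pb\(^{-1}\), or about 0.34%. The dictionary labels are descriptive only.

```python
# Integrated-luminosity losses in 2012 by category, in pb^-1 (values from the text).
losses_pb = {
    "ROL removal": 45.2,
    "HV bulk power supply cuts/trips": 22.6,
    "laser ROD busy": 4.9,
    "EBC -25 ns timing shift (recovered by reprocessing)": 31.3,
}
total_pb = 21.7e3  # 21.7 fb^-1 expressed in pb^-1

lost = sum(losses_pb.values())
permanent = lost - losses_pb["EBC -25 ns timing shift (recovered by reprocessing)"]

print(f"lost after first reconstruction: {lost:.1f} pb^-1 ({100 * lost / total_pb:.2f}%)")
print(f"lost after reprocessing: {permanent:.1f} pb^-1 ({100 * permanent / total_pb:.2f}%)")
```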

There are several operational problems with the LVPS sources that contribute to the list of tolerable defects. In some cases the LVPS fails entirely, implying an entire module is not analysed. The failure rate was one LVPS per month in 2011 and 0.5 LVPS per month in 2012. The faulty LVPS sources were replaced with spares during the maintenance campaigns in the ATLAS cavern at the end of each year.

In addition to overall failures, sometimes there are problems with the low voltage supplied to the HVopto card, which means the PMT HV can be neither controlled nor measured. In this case the applied HV is set to the minimum value, putting the module in an emergency state. The calibration and noise constants for all channels within a module in emergency mode are updated to reflect this non-nominal state.

Finally, the LVPS suffered from frequent trips correlated with the luminosity, at a rate of 0.6 trips per pb\(^{-1}\). An automatic procedure was implemented to recover the affected drawers. During the maintenance period between 2011 and 2012, 40 new LVPS sources (version 7.5) with an improved design [42] were installed on the detector. In 2012 there were about 14,000 LVPS trips in total from all modules, only one of which came from a new-version LVPS. After LHC Run 1, all LVPS sources were replaced with version 7.5.

Figure 21 shows the percentage of TileCal cells masked in the reconstruction as a function of time. These cells are distributed over all areas of the detector, with no single area suffering from a large number of failures. The main reason for masking a cell is the failure of an LVPS source, evident from the steep steps in the figure. Other reasons are severe data corruption or very large noise. The maintenance periods, when faulty hardware components are repaired or replaced (when possible), are visible as reductions of the number of masked cells to near zero. When cell energy reconstruction is not possible, the energy is interpolated from neighbouring cells. The interpolation is linear in energy density (energy per cell volume) and is done independently in each layer, using all available neighbours of the cell (up to a maximum of eight). When only one of the two channels defining a cell is masked, the cell energy is taken to be twice that of the functioning channel.
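A minimal sketch of the masked-cell treatment follows. It assumes that "linear in energy density" means the masked cell is assigned the unweighted mean energy density of its usable same-layer neighbours, multiplied by its own volume; the weighting in the actual ATLAS reconstruction may differ, and the function names are hypothetical.

```python
def interpolate_masked_cell(cell_volume, neighbours):
    """Energy of a masked cell from up to eight usable same-layer neighbours.

    neighbours: list of (energy, volume) pairs. Assumes the masked cell takes the
    unweighted mean energy density (energy / volume) of its neighbours.
    Returns None if no neighbour is usable.
    """
    if not neighbours:
        return None
    if len(neighbours) > 8:
        raise ValueError("a cell has at most eight neighbours in its layer")
    mean_density = sum(e / v for e, v in neighbours) / len(neighbours)
    return mean_density * cell_volume


def cell_energy_one_channel_masked(good_channel_energy):
    """For a cell read out by two channels with one masked, double the working channel."""
    return 2.0 * good_channel_energy
```

Working in energy density rather than energy avoids biasing the estimate when the masked cell and its neighbours have different volumes.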

The percentage of the TileCal cells that are masked in the reconstruction as a function of time starting from June 2010. Periods of recovery correspond to times of hardware maintenance when the detector is accessible due to breaks in the accelerator schedule. Each super-drawer LB (EB) failure corresponds to 0.43% (0.35%) of masked cells. The total number of cells (including gap, crack, and minimum-bias trigger scintillators) is 5198. Approximately 2.9% of cells were masked in February 2013, at the end of the proton–lead data-taking period closing the Run 1 physics programme
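To translate the caption's percentages into cell counts, the fractions can be multiplied by the quoted total of 5198 cells: one LB super-drawer corresponds to about 22 cells, one EB super-drawer to about 18, and the 2.9% masked in February 2013 to about 151 cells. The helper name is illustrative.

```python
TOTAL_CELLS = 5198  # includes gap, crack and MBTS cells, as quoted in the caption


def cells_from_percent(percent):
    """Convert a percentage of masked cells into an approximate number of cells."""
    return percent / 100.0 * TOTAL_CELLS


for label, pct in [("LB super-drawer", 0.43), ("EB super-drawer", 0.35), ("February 2013", 2.9)]:
    print(f"{label}: {pct}% of cells is about {cells_from_percent(pct):.0f} cells")
```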

6 Performance studies

The response of each calorimeter channel is calibrated to the EM scale using Eq. (2). The sum of the two channel responses associated with the given read-out cell forms the cell energy, which represents a basic unit in the physics object reconstruction procedures. Cells are combined into clusters with the topological clustering algorithm [28] based on the significance of the absolute value of the reconstructed cell energy relative to the noise, \(S = |E|/\sigma \). The noise \(\sigma \) combines the electronic (see Sect. 3.2) and pile-up contributions (Sect. 3.3) in quadrature. Clusters are then used as inputs to jet reconstruction algorithms.
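The seed-and-grow logic of topological clustering can be sketched on an arbitrary cell-adjacency graph. The thresholds below (seed \(S>4\), growth \(S>2\), perimeter \(S>0\)) are the values commonly used by ATLAS and are assumed here rather than taken from this section; the cluster splitting performed by the full algorithm [28] is omitted, and all names are hypothetical.

```python
import math
from collections import deque


def total_noise(sigma_electronic, sigma_pileup):
    """Electronic and pile-up noise added in quadrature."""
    return math.hypot(sigma_electronic, sigma_pileup)


def topo_clusters(energy, sigma, neighbours, t_seed=4.0, t_grow=2.0, t_cell=0.0):
    """Seed-and-grow clustering on S = |E| / sigma.

    energy, sigma: dicts mapping cell id -> energy and total noise.
    neighbours: dict mapping cell id -> list of adjacent cell ids.
    Returns a list of sets of cell ids (no cluster splitting).
    """
    sig = {c: abs(energy[c]) / sigma[c] for c in energy}
    # Process seeds in order of decreasing significance.
    seeds = sorted((c for c in sig if sig[c] > t_seed), key=lambda c: -sig[c])
    owner = {}
    clusters = []
    for seed in seeds:
        if seed in owner:
            continue  # already absorbed by a cluster grown from a stronger seed
        members = {seed}
        owner[seed] = len(clusters)
        queue = deque([seed])
        while queue:
            cell = queue.popleft()
            if sig[cell] <= t_grow:
                continue  # perimeter cells are added but do not expand the cluster
            for nb in neighbours.get(cell, []):
                if nb in sig and nb not in owner and sig[nb] > t_cell:
                    owner[nb] = len(clusters)
                    members.add(nb)
                    queue.append(nb)
        clusters.append(members)
    return clusters


# A chain of seven cells with unit noise: two separate clusters form.
chain = {i: [j for j in (i - 1, i + 1) if 0 <= j < 7] for i in range(7)}
energies = dict(enumerate([0.5, 5.0, 3.0, 1.0, 0.0, 4.5, 1.0]))
noise = {i: 1.0 for i in range(7)}
clusters = topo_clusters(energies, noise, chain)
print(clusters)
```

Because the significance uses \(|E|\), negative noise fluctuations can also seed or grow clusters, which keeps the noise contribution to cluster energies symmetric on average.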

The ATLAS jet performance [43, 44] and measurement of the missing transverse momentum [45] are documented in detail in other papers. The performance studies reported here focus on validating the reconstruction and calibration methods, described in previous sections, using the isolated muons, hadrons and jets entering the Tile Calorimeter.

6.1 Energy response to single isolated muons

Muon energy loss in matter is a well-understood process [46] and can be used to probe the response of the Tile Calorimeter. For muons with energies up to a few hundred GeV, the dominant energy-loss process is ionisation, and the energy loss per unit distance is approximately constant. This subsection studies the response to isolated muons from cosmic rays and from \(W\rightarrow \mu \nu \) events in pp collisions.

Candidate muons are selected using the muon RPC and TGC triggering subsystems of the Muon Spectrometer. A muon track measured by the Pixel and SCT detectors is extrapolated through the calorimeter volume, taking into account the detector material and magnetic field [47]. A linear interpolation is performed to determine the exact entry and exit points of the muon in every crossed cell to compute the distance traversed by the muon in a given TileCal cell. The distance (\(\Delta x\)) together with the energy deposited in the cell (\(\Delta E\)) are used to compute the muon energy loss per unit distance, \(\Delta E/\Delta x\).
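The path-length step can be sketched as follows, under simplifying assumptions: a cell is treated as a radial shell \(r_{\mathrm {in}} \le r \le r_{\mathrm {out}}\) (its \(\eta \) and \(\phi \) edges are ignored), the extrapolated track is given as a list of 3D points, and the transverse radius is interpolated linearly along each segment to locate the entry and exit points. Function names, the shell radii and the units (mm, MeV) are illustrative.

```python
import math


def _radius(point):
    """Transverse distance from the beam line (z axis)."""
    return math.hypot(point[0], point[1])


def path_in_shell(track, r_in, r_out):
    """Path length of a piecewise-linear track inside r_in <= r <= r_out.

    track: list of (x, y, z) points along the extrapolated muon trajectory.
    Along each segment the radius is interpolated linearly, which locates the
    entry and exit points; this is an approximation for non-radial segments.
    """
    total = 0.0
    for p, q in zip(track, track[1:]):
        rp, rq = _radius(p), _radius(q)
        length = math.dist(p, q)
        if rq == rp:
            if r_in <= rp <= r_out:
                total += length
            continue
        # Segment parameters at which the interpolated radius hits each boundary.
        t1 = (r_in - rp) / (rq - rp)
        t2 = (r_out - rp) / (rq - rp)
        lo, hi = max(0.0, min(t1, t2)), min(1.0, max(t1, t2))
        if hi > lo:
            total += (hi - lo) * length
    return total


def energy_loss_per_distance(delta_e, delta_x):
    """Delta E / Delta x for one cell; None if the track misses the cell."""
    return delta_e / delta_x if delta_x > 0 else None


# Radial track crossing a hypothetical shell from 2280 to 2580 mm: 300 mm inside.
track = [(2000.0, 0.0, 0.0), (2500.0, 0.0, 0.0), (3000.0, 0.0, 0.0), (4000.0, 0.0, 0.0)]
dx = path_in_shell(track, 2280.0, 2580.0)
print(dx)
```

Clipping each segment separately also handles tracks whose radius first decreases and then increases, as for cosmic-ray muons crossing the detector from top to bottom.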

The measured \(\Delta E/\Delta x\) distribution for a cell can be described by a Landau function convolved with a Gaussian distribution, where the Landau part describes the actual energy loss and the Gaussian part accounts for resolution effects. However, these fits describe the data poorly (large \(\chi ^2\)) because of long high-side tails from rare energy-loss mechanisms, such as bremsstrahlung or energetic gamma rays. For this reason a truncated mean \(\langle \Delta E/\Delta x \rangle _{\mathrm {t}}\) is used to define the average muon response. For each cell the truncated mean is computed after removing a small fraction (1%) of the entries with the highest \(\Delta E/\Delta x\) values. The truncated mean scales slightly non-linearly with the path length \(\Delta x\). This non-linearity and other residual non-uniformities, such as differences in the momentum and incident-angle spectra, are to a large extent reproduced by the MC simulation. To compensate for these effects, a double ratio, the ratio of the experimental and simulated truncated means, is defined for each calorimeter cell as:

\[ R = \frac{\langle \Delta E/\Delta x \rangle _{\mathrm {t}}^{\mathrm {Data}}}{\langle \Delta E/\Delta x \rangle _{\mathrm {t}}^{\mathrm {MC}}}. \]

The double ratio R is used to estimate the calorimeter response as a function of various detector geometrical quantities (layer, \(\phi \), \(\eta \), etc.). Deviations of the double ratio from unity may indicate a miscalibration of the EM energy scale in the experimental data.
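A minimal sketch of the truncated mean and the double ratio R follows. It drops the highest 1% of entries (rounded down, an assumed convention) before averaging; the input lists stand for per-cell \(\Delta E/\Delta x\) values from data and simulation, and the function names are hypothetical.

```python
import math


def truncated_mean(values, cut_fraction=0.01):
    """Mean after removing the highest `cut_fraction` of entries (1% by default)."""
    ordered = sorted(values)
    n_drop = math.floor(cut_fraction * len(ordered))  # rounding down is an assumption
    kept = ordered[: len(ordered) - n_drop]
    return sum(kept) / len(kept)


def double_ratio(data_dedx, mc_dedx, cut_fraction=0.01):
    """R = truncated mean in data / truncated mean in simulation, for one cell."""
    return truncated_mean(data_dedx, cut_fraction) / truncated_mean(mc_dedx, cut_fraction)


# 99 well-behaved entries plus one large tail entry, which the 1% cut removes.
sample = [float(v) for v in range(1, 100)] + [1000.0]
print(truncated_mean(sample))                            # mean of 1..99
print(double_ratio([1.02 * v for v in sample], sample))  # data 2% above simulation
```

Without the cut, the single tail entry would raise the mean of this sample from 50 to 59.5, which illustrates why a plain mean is a poor estimator of the muon response.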

6.1.1 Cosmic-ray muon data