Abstract

An approximate dual representation for non-Abelian lattice gauge theories in terms of a new set of dynamical variables, the plaquette occupation numbers (PONs), which are natural numbers, is discussed. They are the expansion indices of the local series expansion of the Boltzmann factor for every plaquette of the Yang–Mills action. After studying the constraints due to gauge symmetry, the SU(2) gauge theory is solved using Monte Carlo simulations. For a given PON configuration, the weight factor is given by Haar-measure integrals over all links, whose integrands are products of powers of plaquettes. Herein, updates are limited to changes of the PON at a single plaquette or of all PONs on a coordinate plane. The Markov chain transition probabilities are computed employing truncated maximal trees and the Metropolis algorithm. The algorithm performance is investigated with different types of updates for the plaquette mean value over a large range of \(\beta \) values. Using a \(12^4\) lattice, very good agreement with a conventional heat bath algorithm is found in the strong and weak coupling limits, with deviations from the latter below 0.1% for \(2.5< \beta < 3\). The mass of the lightest \(J^{PC}=0^{++}\) glueball is evaluated and reproduces the results found in the literature.


1 Introduction

The computation of the properties of strongly-interacting matter directly from Quantum Chromodynamics (QCD) remains a challenging problem. For matter at zero baryon density, Monte Carlo lattice QCD simulations are currently used to address both zero and finite temperature [1]. On the other hand, the investigation of dense quark matter, as required for example to study the structure of atomic nuclei and neutron stars, the quark-gluon plasma produced in heavy ion collisions, and the matter that existed in the early stages of the Universe, is still an open problem for lattice QCD simulations due to algorithmic limitations. Indeed, the investigation of such systems, e.g. in the grand canonical ensemble, demands the introduction of a finite chemical potential \(\mu \) in the partition function of the theory. The baryon chemical potential turns the Euclidean action into a complex-valued function and the integration measure in the path integral of the partition function is no longer positive definite, giving rise to the so-called sign problems, and thereby limiting the use of Monte Carlo techniques with importance sampling. For sufficiently small values of \(\mu \), the study of dense systems can still rely on importance sampling when combined with re-weighting [2, 3]. However, in general, the handling of complex actions requires the introduction of new sampling techniques as, for example, the direct sampling of the density of states or a mapping of the theory into new variables such that one recovers a positive Boltzmann factor; in this latter approach, the theory reformulated in terms of the new variables is called the dual theory – Ref. [4] provides a recent review on these methods for lattice field theories. The mapping of a given theory into its dual has been used to overcome sign problems appearing in different fields [5].

In lattice QCD in the strong coupling limit, sign problems can be avoided by mapping the theory into a dual representation, using new “dual variables”, after the integration of the gauge fields prior to the integration of the fermion fields [6,7,8]. The gauge symmetry of the original theory imposes constraints on the new set of dual variables which, nevertheless, can be handled via generalizations of the original Prokof’ev-Svistunov worm algorithm [9].

Another example of a dual representation of QCD is the effective theory introduced in Ref. [10], where the fundamental degrees of freedom are the Polyakov loops defined in the group \({\mathbb {Z}}(3)\). This effective theory can be derived from QCD in the strong coupling limit, by restricting the non-Abelian gauge degrees of freedom to the center of the group SU(3), i.e. to the group \({\mathbb {Z}}(3)\), and performing a hopping expansion in the quark sector. The action of the \({\mathbb {Z}}(3)\) effective theory inherits a sign problem from QCD. However, after rewriting the original partition function in terms of dual variables, it becomes a sum of real and positive Boltzmann weights [11]. The dual variables are dimers, which are attached to the lattice links, and monomers, which are attached to the lattice sites. In the dual representation, the complex nature of the original action is washed out [11]. Symmetries of the original theory appear, again, as constraints on the dual variables of the reformulated theory, and these constraints can be handled with a generalized worm algorithm.

In recent years several other interesting QCD-related theories were studied using dual representations. Theories with O(N) and CP(N-1) symmetries, which, like QCD, are asymptotically free, were investigated with dual representations at zero [12, 13] and finite density [14]. Strongly interacting fermionic theories, relevant for graphene [15] and also for particle physics, were investigated with the fermion bag approach [16,17,18,19], in which a dual representation can be built after a suitable integration of the fermionic degrees of freedom. By combining strong coupling and hopping-parameter expansions, an effective theory [20] in the dual representation free of sign problems is obtained. Scalar field theories have also been successfully mapped into dual representations, see e.g. Refs. [21,22,23,24,25].

Concerning gauge field theories, dual representations were implemented for pure Abelian U(1) theory [26, 27], Higgs-U(1) theory [28, 29], and U(1) Abelian theory with fermion fields [30]. For pure SU(N) lattice gauge theory a dual representation was suggested recently in Ref. [31], where the dual variables are random Gaussian matrices introduced by recursive applications of the Hubbard-Stratonovich transformation [32]. Another dual representation for non-Abelian gauge theories was suggested in Refs. [33,34,35]; there, the partition function of the dual theory is given by a sum of positive and negative terms, which prevents the use of Monte Carlo simulations with importance sampling to solve the theory.

In the present work we discuss a new approximate dual representation for pure non-Abelian gauge theories. Starting from the partition function written in terms of the Wilson action, we expand the Boltzmann exponential factor of a single plaquette as a power series. The expansion indices of each plaquette, \(b_{\mu \nu }\left( x\right) \in {\mathbb {N}}_{0}\), where \({\mathbb {N}}_{0}\) is the set of natural numbers, after integrating over the gauge fields, play the role of dynamical variables. The Boltzmann weights become functions of the dual variables \(b_{\mu \nu }\left( x\right) \), and a Metropolis-type algorithm can be built. The transition probabilities of the corresponding Markov chain are ratios between these weights. In the new representation of the non-Abelian gauge theory, the weights are computed using the Haar-measure integrals involving the link variables. The integration over the links is a non-trivial problem per se as each link is coupled to all links in the entire lattice. For the numerical experiment, we make approximations in the integration over the link fields to estimate the transition probability defining the Markov chain and thus generate ensembles of the dual variables \(\left\{ b_{\mu \nu }\left( x\right) \right\} \).

The work reported herein investigates the pure SU(2) Yang–Mills gauge theory. Although the boson sector of a gauge theory does not suffer from the sign problem, our goal is to test a new algorithm/representation of a gauge theory to study strong interactions. The natural developments of the ideas discussed herein are the inclusion of matter fields, to simulate the full theory, and the improvement of the approximations considered. The rationale used here to build a new dual representation can, in principle, be extended to the fermionic sector. The full theory requires the use of an enlarged set of dynamical variables, defined after the expansion of the partition function. Furthermore, the constraints in the corresponding dual theory due to the gauge symmetry are of the same type as those for the pure Yang–Mills theory. On the other hand, the integration over the link fields requires a new analysis.

We test our algorithm by computing the plaquette mean value, related to the energy density of the pure gauge system, over a large range of the lattice coupling constant \(\beta \) and the mass of the (expected) lightest scalar glueball state (\(J^{PC}=0^{++}\)). Our results show that the plaquette mean value obtained with the algorithm developed here deviates, in the worst case, by less than 0.1% when compared with a standard heat bath simulation for \(\beta \in [0, \, 4.5]\). The mass of the lightest glueball agrees well with previous lattice estimates [36,37,38,39,40,41,42] and also with estimates based on a gauge-gravity duality model [43].

Our paper is organized as follows. In the next section we present our approximate dual representation for the non-Abelian Yang–Mills theory. In Sect. 3 we discuss the constraints on the dual variables due to gauge symmetry, which determine the types of updates that must be considered in an algorithmic approach to solve the theory. In Sect. 4 we discuss the Monte Carlo algorithm used in our approach. We also present strategies to decouple from the entire lattice a region surrounding a dual variable that is to be updated locally. In the factorized region, the group integrals are done analytically. Still in Sect. 4 we show how to implement one possible type of nonlocal update. In Sect. 5 we show how to represent the observables to be measured in terms of the dual variables. In Sect. 6 we give the details of the simulations and report the numerical data for the observables measured. A summary in Sect. 7 completes the paper.

2 Approximate dual representation for lattice Yang–Mills theory

The lattice formulation of pure Yang–Mills theory uses as fundamental fields the link variables \(U_{\mu }(x)\), which belong to the gauge group SU(N). We consider the standard Wilson action [44]:

with the plaquette \(U_{\mu \nu }(x)\) given by the product of link variables

where the spacetime indices \(\mu \) and \(\nu \) run from 1 to d, with d being the dimension of the Euclidean space, and x runs over the lattice volume V. The partition function of the theory is given by

where \({\mathscr {D}}U=\prod _{x,\mu }dU_{\mu }(x)\) is the Haar measure for the gauge links, \(C= \exp \left( -\beta N^{V_{p}-1}\right) \) is a normalization factor and \(V_{p}\) is the number of plaquettes in the volume V. Given an operator \({\mathscr {O}}(U)\), its vacuum expectation value is represented by the functional integral

In the traditional lattice approach, this expectation value is estimated by the average

where the set of configurations \({\mathscr {U}}\!=\!\{U^{(i)}, i\!=\!1, \ldots , N_{conf}\}\), distributed according to \(\exp \{-S[U]\}\), is produced with a Monte Carlo algorithm. The statistical error associated with such an estimate scales with the number of configurations as \(N_{conf}^{-1/2}\).
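The \(N_{conf}^{-1/2}\) scaling of the statistical error can be illustrated with a minimal sketch. It assumes statistically independent samples drawn from a Gaussian as a stand-in for measurements of an observable; the function name `mean_and_error` and all numerical values are hypothetical. In an actual Markov chain the configurations are correlated, so the naive error below would have to be corrected for autocorrelations.

```python
import math
import random


def mean_and_error(samples):
    """Return the sample mean and its naive standard error (independent samples assumed)."""
    n = len(samples)
    mean = sum(samples) / n
    variance = sum((s - mean) ** 2 for s in samples) / (n - 1)
    return mean, math.sqrt(variance / n)


# Synthetic "measurements": independent Gaussian numbers, not lattice data.
rng = random.Random(1234)
small = [rng.gauss(0.5, 0.1) for _ in range(1_000)]
large = [rng.gauss(0.5, 0.1) for _ in range(100_000)]

mean_small, err_small = mean_and_error(small)
mean_large, err_large = mean_and_error(large)

# With 100 times more samples the error is expected to shrink by roughly sqrt(100) = 10.
print(err_small / err_large)
```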

The simulation of Yang–Mills theory with a dual representation demands rewriting the partition function in Eq. (3) in terms of a new set of dynamical variables other than the links. In order to be able to apply such a type of algorithm, let us expand the exponentials appearing in the partition function in powers of \(\beta \)

where we have discarded for the moment the global factor C. Performing the integration over the link variables, i.e. computing the integral \(\int {\mathscr {D}}U\), Z can then be viewed as a function of the discrete set of variables \(b_{\mu \nu }(x)\), which are natural numbers. Let us introduce the notation

so that the partition function can be written as

where

The integration of the link variables defines the weight functions \(Q_{\left\{ U\right\} }\left[ \left\{ b\right\} \right] \) which are, themselves, functions of the natural numbers \(b_{\mu \nu }\left( x\right) \), the new dynamical variables; the \(b_{\mu \nu }\left( x\right) \) are from now on called plaquette occupation numbers (PONs). One can then define a Markov chain to update the \(b_{\mu \nu }(x)\) values by choosing a transition probability, given by the ratio of the weight functions \(Q_{\left\{ U\right\} }\left[ \left\{ b\right\} \right] \), that complies with the principle of detailed balance and ensures the convergence of the Markov chain to the right probability distribution. Before dealing with the details of the update, let us discuss the constraints on \(b_{\mu \nu }(x)\) due to the group integration over the link variables.
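The idea behind the expansion of a single-plaquette Boltzmann factor in powers of \(\beta \) can be checked numerically with a short sketch. The precise prefactors of the paper's equations are not reproduced here: the variable `x` below is merely a hypothetical stand-in for the normalized plaquette trace, and `truncated_boltzmann` is an illustrative name. The summation index `b` plays the role of the occupation number of that plaquette.

```python
import math


def truncated_boltzmann(beta, x, b_max):
    """Partial sum of exp(beta * x) = sum_b (beta * x)**b / b!, up to b = b_max."""
    return sum((beta * x) ** b / math.factorial(b) for b in range(b_max + 1))


beta, x = 2.5, 0.6  # illustrative values only
exact = math.exp(beta * x)
for b_max in (2, 5, 10, 20):
    approx = truncated_boltzmann(beta, x, b_max)
    # The relative error decreases quickly once b_max exceeds beta * x.
    print(b_max, approx, abs(approx - exact) / exact)
```

The factorial suppression of large `b` is what makes a sampling over the occupation numbers practical: only a limited range of values carries appreciable weight for a given \(\beta \).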

3 Constraints on the dual variables \(b_{\mu \nu }(x)\)

Herein we discuss the constraints on the \(b_{\mu \nu }(x)\) that arise when group-integrating over the gauge links. The results and the group integrations discussed below can, in principle, be extended to SU(N), but we restrict our analysis to SU(2). The main properties and results for the group integration required to understand the current work are summarized in “Appendix A”.

Fig. 1 Representation of the \((\mu _{0},\nu _{0})\) lattice plane. The gauge links are shown as arrows. Shown are the “central plaquette” \(U_{\mu _{0}\nu _{0}}(x_{0})= U_{1}U_{2}U_{3}U_{4}\) (solid red arrows) and the plaquettes which contain any of the links appearing in \(U_{\mu _{0}\nu _{0}}(x_{0})\). The \(A_{i}\) are the staples (non-solid black lines), defined in the \((\mu _{0},\nu _{0})\) plane, that complete the neighboring plaquettes together with the links of the “central plaquette”

Let us consider the plaquette

defined on the \((\mu _{0},\nu _{0})\) plane, see Fig. 1, where \(U_{l}\) with \(l=1,\ldots ,4\) stands for a generic link and l is a composite index taking values in the set:

Let \(A_{i}\) be the staple that together with the link variable \(U_{i}\) defines a plaquette in the \((\mu _{0},\nu _{0})\) plane which shares with \(U_{\mu _{0}\nu _{0}}(x_{0})\) the link \(U_{i}\) – see Fig. 1. In the following, to simplify the notation, we will write \(b_{l}\) for the dynamical variable that is associated with the plaquette containing the staple \(A_{l}\), i.e. the staple in the plane \((\mu _{0},\nu _{0})\) that is associated with the link \(U_{l}\). The weight function Q associated with the plaquettes represented in Fig. 1 is

The properties of the group integration are such that most of the possible sets \(\left\{ b\right\} \) have a null weight and do not contribute to the partition function. The non-vanishing contributions are those where a given link variable \(U_l\), with \(l=(\mu ,x)\), appears \(n_l\) times in the integrand, with \(n_l\) being a multiple of N, where N is the number of colors. Consider, for example, the link variable \(U_{2}\) in Eq. (12): it appears \(b_{0}+b_{2}=n_{2}\) times in the integrand, i.e.

and this gives a non-vanishing contribution to \(Q_{\left\{ U\right\} }\left[ \left\{ b\right\} \right] \) only if \(n_2 = b_{0}+b_{2}\) is a multiple of N. This implies that either \(b_{0}\) and \(b_{2}\) are both multiples of N, or their sum is a multiple of N even though \((b_{0}\mod N)\ne 0\) and \((b_{2}\mod N)\ne 0\). In four dimensions, the link \(U_{2}\) belongs to the plaquettes represented in Fig. 1 and also to plaquettes belonging to orthogonal planes not shown in the figure. Therefore, for a generic link \(U_{\mu }(x)\), it follows that the sum over the set \(\{b_{\mu \nu }(x)\}\) that counts the number of times \(U_{\mu }(x)\) appears in the integral in Eq. (12) is given by

and only those \(\{b_{\mu \nu }(x)\}\) configurations such that all \(\{n_{\mu }(x)\}\) are multiples of N contribute to the partition function, i.e.

This is a nontrivial constraint that also simplifies the analysis of the possible sets of updates that can appear within a Markov chain.
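The constraint that every link must appear a multiple of N times can be made concrete with a small sketch. It assumes, for simplicity, a two-dimensional periodic \(L\times L\) lattice (a single plaquette orientation) and SU(2), so that N = 2 and a link and its Hermitian conjugate are counted alike; the function names and the data layout `b[x][y]` are hypothetical and not taken from the authors' code.

```python
def link_occupations(b):
    """Return n[mu][x][y], the number of times the link U_mu(x, y) appears.

    b[x][y] is the occupation number of the plaquette whose lower-left
    corner is the site (x, y) on a periodic L x L lattice.
    """
    L = len(b)
    n = [[[0] * L for _ in range(L)] for _ in range(2)]
    for x in range(L):
        for y in range(L):
            # The link in direction 0 at (x, y) belongs to plaquettes (x, y) and (x, y-1).
            n[0][x][y] = b[x][y] + b[x][(y - 1) % L]
            # The link in direction 1 at (x, y) belongs to plaquettes (x, y) and (x-1, y).
            n[1][x][y] = b[x][y] + b[(x - 1) % L][y]
    return n


def satisfies_constraint(b, N=2):
    """True if every link occupation number is a multiple of N."""
    n = link_occupations(b)
    return all(value % N == 0 for plane in n for row in plane for value in row)


L = 4
all_even = [[2 for _ in range(L)] for _ in range(L)]
all_odd = [[1 for _ in range(L)] for _ in range(L)]
one_odd = [row[:] for row in all_even]
one_odd[1][1] = 3  # a single odd plaquette in an even background

print(satisfies_constraint(all_even))  # every link count is even
print(satisfies_constraint(all_odd))   # each link sees two odd plaquettes
print(satisfies_constraint(one_odd))   # the links of plaquette (1, 1) have odd counts
```

In four dimensions each link belongs to \(2(d-1)=6\) plaquettes, so the sums in `link_occupations` would run over six occupation numbers instead of two.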

4 Update algorithm

A possible local update compatible with Eq. (15) replaces \(b_{\mu \nu }(x)\rightarrow b_{\mu \nu }(x)\pm \varDelta \), with \(\varDelta \) being a multiple of N. In this way, if the original configuration \(\{b_{\mu \nu }(x)\}\) satisfies the constraint in Eq. (15), the new configuration is also compatible with Eq. (15). On the other hand, if \((\varDelta \!\!\mod \mathrm N)\ne 0\), then to fulfil Eq. (15) at all lattice points one has to change the b values at the neighboring points accordingly and, therefore, at the next neighboring points, and so on. Updates where \(\varDelta \) is not an integer multiple of N therefore require a global update over a finite region of the lattice.

An ergodic algorithm must access all possible b values and, therefore, requires the use of both local and nonlocal updates. If, for example, the Markov chain is initiated by setting all PONs such that \((b_{\mu \nu }(x)\!\!\mod \mathrm N)=0\) and only local updates are implemented, i.e. a given b is modified by adding an integer multiple of N, then configurations where all PONs of a given plane are not multiples of N cannot be reached and, therefore, the update does not satisfy the ergodicity requirement.
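The interplay between the two kinds of update and the constraint can be sketched as follows. The example assumes a two-dimensional periodic lattice and N = 2, where each link belongs to exactly two plaquettes; the helper names are hypothetical, and the plane update shown (shifting every PON of the plane by the same amount) is only one simple choice of nonlocal move consistent with the description above.

```python
import random


def constraint_ok(b, N=2):
    """Check that each link of a periodic 2D lattice appears a multiple of N times."""
    L = len(b)
    return all(
        (b[x][y] + b[x][(y - 1) % L]) % N == 0
        and (b[x][y] + b[(x - 1) % L][y]) % N == 0
        for x in range(L)
        for y in range(L)
    )


def local_update(b, x, y, delta):
    """Return a copy of b with the PON at plaquette (x, y) shifted by delta."""
    new = [row[:] for row in b]
    new[x][y] += delta
    return new


def plane_update(b, delta):
    """Return a copy of b with all PONs of the plane shifted by delta."""
    return [[value + delta for value in row] for row in b]


L, N = 4, 2
rng = random.Random(7)
start = [[N * rng.randrange(3) for _ in range(L)] for _ in range(L)]  # all PONs even

even_step = local_update(start, 1, 2, N)  # local shift by a multiple of N
odd_step = local_update(start, 1, 2, 1)   # local shift by 1 breaks the constraint
shifted = plane_update(start, 1)          # shifting the whole plane by 1 restores it

# prints: True True False True
print(constraint_ok(start), constraint_ok(even_step),
      constraint_ok(odd_step), constraint_ok(shifted))
```

Only positive shifts are used here; an actual update would also have to reject proposals that make any PON negative.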

To ensure convergence to the right probability distribution, one needs to set a detailed balance equation compatible with Eq. (15). Our implementation chooses randomly a b or a set of b’s and proposes new values \(b'\). As usual in algorithms of this kind, the transition probability for accepting the new \(b'\) is given by

which is enough to ensure that the sampling reproduces the correct probability distribution [45].
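A minimal sketch of such an accept/reject step is given below. It assumes the standard Metropolis form, in which a proposal is accepted with probability \(\min (1, w_{\mathrm{new}}/w_{\mathrm{old}})\), and it replaces the true weight function Q, which requires the link integrations, by a hypothetical positive toy weight \(\lambda ^{b}/b!\) of a single occupation number. Proposals change b by \(\pm 2\), mimicking a local update with \(\varDelta =N=2\).

```python
import math
import random


def metropolis_accept_prob(weight_old, weight_new):
    """Standard Metropolis acceptance probability for a symmetric proposal."""
    return min(1.0, weight_new / weight_old)


def toy_weight(b, lam=3.0):
    """Hypothetical positive weight standing in for the true weight Q."""
    return lam ** b / math.factorial(b)


def run_chain(n_steps, delta=2, seed=0):
    """Sample a single occupation number b >= 0 with steps of +/- delta."""
    rng = random.Random(seed)
    b = 0
    history = []
    for _ in range(n_steps):
        proposal = b + rng.choice((-delta, delta))
        # Negative occupation numbers have zero weight and are always rejected.
        if proposal >= 0:
            p = metropolis_accept_prob(toy_weight(b), toy_weight(proposal))
            if rng.random() < p:
                b = proposal
        history.append(b)
    return history


history = run_chain(200_000)
mean_b = sum(history) / len(history)
# For this toy weight restricted to even b, the exact mean is lam * tanh(lam).
print(mean_b, 3.0 * math.tanh(3.0))
```

Because the chain starts at an even value and moves in steps of two, it never visits odd values, which is the single-variable analogue of the ergodicity issue discussed above.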

The computation of the weight function Q requires integration over the link variables, for all possible PON configurations, which per se is a difficult problem. The Haar measure for the group integration is invariant under gauge transformations, and this allows rotating the links and, eventually, replacing some of them by the identity in the evaluation of the \(Q[\{b\}]\) functions. In particular, a path in which the maximal number of links allowed by the group integration are rotated to the identity defines what is known as a “maximal tree” [46]. Our proposal consists in, given a \(b_{\mu \nu }(x)\) variable to be updated, performing an exact integration of the gauge links in the neighborhood of the plaquette \(U_{\mu \nu }(x)\). In order to be able to compute the transition probability p we set a small number of links to the identity matrix and, in this way, decouple a region with links “close” to the \(b_{\mu \nu }(x)\) variable to be updated from a region with the remaining “distant” links. The transition probability p for accepting the new value is given by the ratio between weight functions and, therefore, the integration over the “distant” links cancels out and we need consider only the contributions of the links that are closer to the plaquette associated with \(b_{\mu \nu }(x)\). The replacement of a small subset of link variables by the identity matrix is clearly an approximation, but it enables the group integrations to be performed analytically.

4.1 Local update

To illustrate our update scheme, let us start by considering the crudest approximation possible in the local update of a given plaquette occupation number, say \(b_{\mu _0 \nu _0}(x_0)\), associated with the central plaquette represented in Fig. 1, i.e. we replace all the staples that are connected with \(U_{\nu _{0}}(x_{0})\) (the link \(U_4\) in the figure) by the identity. Then, the integration over \(U_{\nu _{0}}(x_{0})\) is decoupled from the integrations over the remaining links and

where \(n_0 = n_{\nu _0}(x_0)\) is calculated from Eq. (14), and \(Q'\left[ \left\{ b\right\} '\right] \) is independent of the link \(U_{\nu _{0}}(x_{0})\). In this way, the transition probability of the local update of the PON \(b_{0}=b_{\mu _{0}\nu _{0}}(x_{0})\) is

where

To improve on the estimation of p, couplings of \(U_{\nu _0}(x_0)\) to neighboring links need to be considered. A possible next level of approximation is to set all the staples associated with \(U_{\nu _{0}}(x_{0})\) to the identity with exception of \(g= U_{1}U_{2}U_{3}\) (see Fig. 1), then

where

and \(Q''\left[ \left\{ b\right\} ''\right] \) is the group integral over all the lattice links except for \(U_{\nu _{0}}(x_{0})\). Now, since \(Q''\left[ \left\{ b\right\} ''\right] \) and the integral in Eq. (20) share the links \(U_{1}\), \(U_{2}\), and \(U_{3}\), they do not decouple and this would not allow us to obtain a number for the transition probability p. However, as we shall discuss in the next two sections, one can still devise a strategy that allows us to integrate over \(U_{\nu _{0}}(x_{0})\) taking into account couplings with neighboring links, so that under a local update \(b^{\mathrm{old}}_{0}\rightarrow b^{\mathrm{new}}_{0}\), the transition probability is the positive real number given by

where F contains through \({\mathscr {U}}\) a subset of all links \(U_{\mu }\left( x\right) \) of the lattice that are integrated, and \({\mathscr {B}}\) stands for the PONs associated with the PON \(b_{0}\) which is being updated.

The Monte Carlo updates considered in the present work approximate ratios of weight functions Q following the strategy just discussed. The integrations of the functions F all give positive definite results and, thus, the approximate ratio between the dual Boltzmann weights Q used to estimate the transition probability p is also a positive real number.

4.1.1 Integration over a short path

Let us consider Fig. 1 and the central plaquette associated with the dual variable \(b_{0}=b_{\mu _{0}\nu _{0}}(x_{0})\). The links belonging to this plaquette (solid red arrows) also contribute to the staples \(A_{i} = \{A_1, A_2, A_3, A_4\}\) (non-solid black lines). Recall that the aim is to update \(b_{0}\) and compute the transition probability p.

A maximal tree can be built by rotating some, but not all, staples associated with the links \(U_{i}\) in the plaquette \(U_{\mu _{0}\nu _{0}}(x_{0})\) to the identity matrix. However, assuming that all the links in \(A_{i}\) can be set to the identity, the group integration can be factorized and one has to consider only the following integrating function

with the integration measure given by

The set \({\mathscr {U}}=\left\{ U_{1},U_{2},U_{3},U_{4}\right\} \) contains the link variables to be integrated. The set \({\mathscr {B}}\) contains the PONs that couple the plaquette \(U_{\mu _{0}\nu _{0}}\left( x_{0}\right) \) with the neighboring plaquettes and in two dimensions

For a generic dimensionality, the set \({\mathscr {B}}\) contains the PONs that define the powers \(c_{i}\) in Eq. (23), i.e.

Let us now discuss the integration of \(F_4\) with the measure \(\widetilde{{\mathscr {D}}U_{4}}\) defined in Eq. (24). The integration over the links of the central plaquette can be started by picking any of the links and for the function \(F_{4}\) one can reduce the integration to

where U and g are SU(2) matrices. Integrals of this type have been computed in Ref. [47]; they are given by

For a non-vanishing result, the condition \(2q=b+c\) must be fulfilled. The integral \(I_{1}\) is a polynomial in \(\text {Tr}\left[ g\right] \), i.e.

where \(\text {min}(b,c)\) stands for the minimum of b and c, and the coefficients \(\varGamma _{q}^{b,c}\) are given by

and %2 returns the remainder of the integer division by 2. The Kronecker delta in Eq. (33) indicates that the polynomial in Eq. (32) contains only odd or even powers of q. The evaluation of \(I_{1}\) is a first step towards the evaluation of the weights Q. In our code the expression given in Eq. (32) was used directly. The routine to compute \(I_{1}\) was checked against a numerical evaluation of \(I_{1}\) for a number of cases and both results agreed within machine precision. The integral \(I_{1}\), given in Eq. (32), can be used recursively to perform the integration of Eq. (23):
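The kind of numerical cross-check mentioned above can be sketched for the simplest SU(2) one-link integral, \(\int dU\,\text {Tr}[U]^{n}\). This is only a special case chosen for illustration and not the closed form of Eq. (32), which is not reproduced here. The sketch uses two standard facts: a class function of an SU(2) matrix with eigenvalues \(e^{\pm i\theta }\) is integrated with the measure \((2/\pi )\sin ^{2}\theta \,d\theta \) on \([0,\pi ]\), and the even moments of \(\text {Tr}[U]=2\cos \theta \) are Catalan numbers while the odd moments vanish. The function names are hypothetical.

```python
import math


def catalan(k):
    """k-th Catalan number."""
    return math.comb(2 * k, k) // (k + 1)


def haar_trace_moment(n, points=2000):
    """Midpoint-rule estimate of the SU(2) Haar integral of (Tr U)**n.

    Uses Tr U = 2 cos(theta) and the class-function measure
    (2 / pi) sin(theta)**2 d(theta) on the interval [0, pi].
    """
    h = math.pi / points
    total = 0.0
    for j in range(points):
        theta = (j + 0.5) * h
        total += (2.0 * math.cos(theta)) ** n * math.sin(theta) ** 2
    return (2.0 / math.pi) * total * h


for n in range(9):
    exact = catalan(n // 2) if n % 2 == 0 else 0
    # Odd powers integrate to zero, in line with the multiple-of-N constraint for N = 2.
    print(n, haar_trace_moment(n), exact)
```

The vanishing of the odd moments is the single-link version of the requirement that each link appears an even number of times in the integrand for SU(2).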

Once the coefficients \(\varGamma _{q}^{b,c}\) are known, one can get an approximate estimation for the weights Q and also for the transition probability p which is defined in the Markov chain.

In principle, the calculation of the weights can be improved by considering more complex integrations over the gauge links as, for example:

However, the coding of this type of solutions in a Monte Carlo simulation is rather complex and will not be pursued here. Alternatively and keeping the same rationale as described so far, one can explore integrations over more complex paths on the lattice.

4.1.2 Integration over a long path

The method described in the previous section can be extended to more complex and longer lattice paths. From the practical point of view, one has to balance the length and complexity of the path used for the group integration against the complexity of coding the outcome of that integration.

Next, we consider an integration over the link variables which takes into account a larger set of links that are decoupled from the remaining lattice. In Fig. 2 we show, in color, the links to be integrated in the computation of the transition probability p. To avoid clutter, we do not draw all links to be integrated exactly. In particular, the links corresponding to the fourth dimension are not represented in the figure. For the path represented in Fig. 2, the links represented by solid red lines, which belong to the central plaquette \(U_{\mu _{0}\nu _{0}}(x_{0})\), are integrated exactly together with those represented by double blue lines and by triple green lines.

The links in double blue lines are in the same plane as \(U_{\mu _{0}\nu _{0}}(x_{0})\) and their integration involves terms which include the links of the central plaquette. For example, the integrals referring to the links belonging to the plaquettes \(U_{\mu _{0}\nu _{0}}(x_{0})\) and \(U_{\mu _{0}\nu _{0}}(x_{2})\) are not independent as these staples share \(U_{\mu _{0}}(x_{2})\). The same applies to the links belonging to the plaquettes \(U_{\mu _{0}\nu _{0}}(x_{3})\) and \(U_{\mu _{0}\nu _{0}}(x_{4})\), whose staples share \(U_{\mu _{0}}(x_{3})\). Also, the integration over the links in the \((\mu , \nu _{0})\) plane is not independent of the integration over links in parallel planes, such as those represented by triple green lines. In our integration over the longer path, we consider four green-type paths: one in the upper parallel plane shown in Fig. 2, one in the lower parallel plane, and similar paths displaced not by \({\hat{\rho }}_{0}\) but by the unit vector along the fourth dimension, which is not represented in Fig. 2. The latter three paths are not shown in Fig. 2.

In the computation of the weights Q and of the transition probability p the link variables represented in red (central plaquette with solid lines), blue (double lines) and green (triple lines) in Fig. 2 are integrated exactly. Each of these link variables is coupled with \(2\left( d-1\right) \) staples which belong to \(2\left( d-1\right) \) plaquettes. With the exception of the red, blue, and green links, all staples associated with the links which are going to be integrated are rotated to the identity matrix. In Fig. 2 the solid lines in gray represent the link variables that are being fixed to the identity matrix in the plane \(\left( \mu _{0},\nu _{0}\right) \). As in the integration described in Sect. 4.1.1, we are not building an exact maximal tree. Indeed, there are closed paths whose links are all set to the identity. The approximation used to perform the group integrations factorizes a local region, which is decoupled from the remaining lattice, and, in this way, allows for an exact group integration in each of the regions considered. Furthermore, it factorizes the calculation of the weights Q, which enables an easy estimation of the transition probability p.

Fig. 2 A 3-dimensional representation of the lattice. For an update of the central plaquette \(U_{\mu _0\nu _0}(x_0)\) (solid red lines), the links represented by red, blue (double lines) and green (triple lines) are integrated in the computation of the weight function ratio. In grey solid lines we show a sample of the links that are rotated to the identity in the calculation of p. See text for details

Let us now discuss how to integrate over the link variables shown in color in Fig. 2. In principle one can choose to start the integration with any of the colored links. However, we found that starting the integration with the links in green or the links in blue, and only then performing the integration over the links belonging to the central plaquette \(U_{\mu _{0}\nu _{0}}(x_{0})\), simplifies the integration process considerably. For the integration over a path that is coupled with the link \(U_{\mu }(x)\) of the central plaquette, one can rely on the result given in Eq. (32) applied recursively. The outcome is a polynomial

whose coefficients \(\lambda _{k}\) are combinations of the coefficients \(\varGamma \) and are functions of the plaquette occupation numbers of the region surrounding the integrated path. For the group integration, every link belonging to the central plaquette is coupled with two different paths, namely, the path in green which has four links and the path in blue with five links. The total number of links to be integrated is now forty and for this larger integration we define \(F_{40}\left[ {\mathscr {U}},{\mathscr {B}},b_{0}\right] \) as being the local function of the approximate weight function ratio, see Eq. (22). The set of links \({\mathscr {U}}\) contains all the forty gauge links to be integrated and the set of plaquette occupation numbers \({\mathscr {B}}\) includes the \(b_{\mu \nu }(x)\) whose links are in the integrated paths. Recall that a similar situation is found for the simpler integration discussed previously.

The formal expression for \(F_{40}\left[ {\mathscr {U}},{\mathscr {B}},b_{0}\right] \) includes the central plaquette \(U_{\mu _{0}\nu _{0}}(x_{0})\) and four polynomials, one for each link variable \(U_{l}\in U_{\mu _{0}\nu _{0}}(x_{0})\), coming from the integration over the green and blue paths

where L is the set of coordinates of the links associated with \(U_{\mu _{0}\nu _{0}}(x_{0})\), see Eq. (11). \(P_{{\mathscr {B}}\left( l\right) }[U_{l}]\) is the polynomial coming from the integration of the green and blue paths coupled to the link variable \(U_{l}\), i.e.

The polynomial \(P_{{\mathscr {B}}_{G}\left( l\right) }\) is the outcome of the integration over a green path and \(P_{{\mathscr {B}}_{B}\left( l\right) }\) the outcome of integration over a blue path. The set \({\mathscr {B}}_{G}\left( l\right) \) includes the PONs of the plaquettes whose links belong to the integrated green path. The set \({\mathscr {B}}_{B}\left( l\right) \) has the same meaning as \({\mathscr {B}}_{G}\left( l\right) \) but related to a blue path. The union of \({\mathscr {B}}_{G}\left( l\right) \) and \({\mathscr {B}}_{B}\left( l\right) \) defines the set \({\mathscr {B}}\left( l\right) \). Finally, the set \({\mathscr {B}}\), required to perform the group integration present in \(F_{40}\left[ {\mathscr {U}},{\mathscr {B}},b_{0}\right] \), is given by the union of the four sets \({\mathscr {B}}\left( l\right) \) together with the set of PONs of the plaquettes that share the links present in \(U_{\mu _{0}\nu _{0}}(x_{0})\).

Before providing expressions for \(P_{{\mathscr {B}}_{G}\left( l\right) }\) and \(P_{{\mathscr {B}}_{B}\left( l\right) }\) let us have a closer look at the integrations leading to these polynomials.

In Fig. 3 the green path coupled to the link \(U_{3}\) is shown in full detail. This path has four links \(\left\{ U_{3a},U_{3b},U_{3c},U_{3d}\right\} \) and the integration over these links gives

and the integration measure reads

The plaquette occupation numbers \(\left\{ b_{1},b_{2},b_{3}\right\} \) refer to the plaquettes \(\text {Tr}\left[ U_{3}U_{3d}\right] \), \(\text {Tr}\left[ U_{3c}U_{3d}\right] \) and \(\text {Tr}\left[ U_{3a}U_{3b}U_{3c}\right] \), respectively, and \(\left\{ c_{1},c_{2},c_{3},c_{4}\right\} \), i.e. the powers of the trace of the links that include \(\left\{ U_{3a},U_{3b},U_{3c},U_{3d}\right\} \), are given by sums of the plaquette occupation numbers similar to those found in the case discussed in Sect. 4.1.1. For example, one has \(c_{1}= n_{x_{0}+{\hat{\nu }}_{0}+{\hat{\rho }}-{\hat{\mu }}_{0},\nu _{0}}-\,b_{3}\). The definition of the remaining \(c_{i}\) and \(b_{i}\) associated with the integration over the green path is given in “Appendix A”.

The coefficients \({\mathscr {B}}_{G}=\left\{ b_{1},b_{2},b_{3},c_{1},c_{2},c_{3},c_{4}\right\} \) take into account the coupling of the central plaquette and a green path attached to the link \(U_{3}\). The degree and the coefficients of the polynomial \(P_{{\mathscr {B}}_{G}\left( l\right) }\) are determined by the values of the elements of \({\mathscr {B}}_{G}\left( l\right) \).

Sublattice of the representation given in Fig. 2 with the links labeled

The blue path associated with the link \(U_{3}\) is shown in Fig. 4. It includes the plaquette occupation numbers associated with the first neighbor plaquette \(\text {Tr}[U_{3}^{\dagger }\tilde{U}_{3e}\tilde{U}_{3d}^{\dagger }]\) of \(U_{\mu _{0}\nu _{0}}(x_{0})\) and of its second neighbor \(\text {Tr}[\tilde{U}_{3a}\tilde{U}_{3b} \tilde{U}_{3c}\tilde{U}_{3d}]\). The group integration over the blue path is

for an integration measure given by

As for the green path, expressions for the coefficients \(\tilde{c}_{i},\tilde{b}_{i}\) are given in “Appendix A”.

The polynomials coming from performing the integrations over the green and blue paths are computed in “Appendix A”. It follows that for the green path

while for the blue path the group integration gives

Another sublattice of the representation given in Fig. 2 with the links labeled

In order to evaluate Eq. (38) for each link of the central plaquette \(U_{l}\in \left\{ U_{1},U_{2},U_{3},U_{4}\right\} \), it remains to multiply Eqs. (43) and (44). Once the polynomials \(\,P_{{\mathscr {B}}\left( l\right) }[U_{l}]\,\) are evaluated, we can integrate \(F_{40}\), see Eq. (37), over the remaining links

and estimate the ratio between the weights Q in order to evaluate the transition probability p.

For the particular case of a local update transition \(b_{0}\rightarrow b_{0}\pm \varDelta \), the polynomials \(P_{{\mathscr {B}}\left( l\right) }[U_{l}]\) contributing to \(F_{40}\) do not depend on \(\varDelta \), i.e. on the update of the central plaquette and, therefore, they do not need to be evaluated twice to compute p. Note that the function \(F_{40}\) defined in Eq. (37) is given by a sum of terms like \(F_{4}\) given in Eq. (23). It follows that the solution of the group integration in Eq. (45) is a sum of the solutions that look like Eq. (34). Then, the group integration is reduced to the computation of factorial numbers and, it follows from the definition and the approximation used, that the transition probability is a real and positive definite number.
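To illustrate how a Haar integral over powers of traces collapses to factorials, the following minimal sketch evaluates the simplest SU(2) case, \(\int dU\,(\text {Tr}\,U)^{n}\). This is a standard textbook integral and is not meant to reproduce Eq. (34) itself; the function names are hypothetical. For even \(n=2k\) the result is the Catalan number \((2k)!/[k!\,(k+1)!]\), while odd powers vanish. A quadrature over the class angle is included as an independent cross-check.

```python
import math


def su2_trace_moment(n: int) -> int:
    """Exact Haar integral of (Tr U)^n over SU(2).

    Odd powers vanish; for n = 2k the result is the Catalan number
    (2k)! / (k! (k+1)!).
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    if n % 2 == 1:
        return 0
    k = n // 2
    return math.factorial(2 * k) // (math.factorial(k) * math.factorial(k + 1))


def su2_trace_moment_numeric(n: int, steps: int = 20000) -> float:
    """Midpoint quadrature of the same integral.

    Uses Tr U = 2 cos(theta) and the reduced Haar measure
    (2 / pi) sin^2(theta) d(theta) on the interval [0, pi].
    """
    h = math.pi / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * h
        total += (2.0 * math.cos(theta)) ** n * math.sin(theta) ** 2
    return total * h * 2.0 / math.pi
```

The vanishing of the odd moments is the simplest instance of the parity restriction on SU(2) occupation numbers that the local updates preserve.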

4.2 Nonlocal update

The Monte Carlo updates discussed in Sects. 4.1, 4.1.1 and 4.1.2 do not allow us to access all possible configurations for the plaquette occupation numbers. For example, those local updates are unable to change a given plaquette occupation number from an odd natural number to an even natural number or vice-versa. As discussed in Sect. 3, the introduction of a global or a nonlocal update can improve the algorithm in the sense that it enlarges the space sampled by the algorithm.

A nonlocal update can be implemented via a simultaneous transformation of all the plaquette occupation numbers over a plane surface, where each of the PONs is changed according to \(b_{\mu \nu }(x)\rightarrow b_{\mu \nu }(x)\pm \varDelta \), with \(\varDelta \) not necessarily a multiple of N. For this update, the number of links to be integrated increases with the lattice size. Recall that for the updates discussed previously, the number of links integrated to compute the weights depends only on the type of update and is fixed a priori for each of the updates. Although this nonlocal update enlarges the space sampled by the algorithm, it might not be enough to guarantee full ergodicity; it nonetheless helps in approaching an ergodic update. Of course, one can introduce other types of nonlocal updates as, for example, an update of the PONs attached to a cube. The updating process where a plane surface is filled with PONs that are not multiples of N cannot generate a configuration where the PONs that are not multiples of N are attached to the cube surface. In addition to the so-called planar update, and to comply with full ergodicity, one should also implement the cube type of update. However, its implementation is rather complex and its impact on the performance of the algorithm will be the object of a future report.

Let us now discuss the group integration to compute the transition probability p. In Fig. 5 we show the surface over which the plaquette occupation numbers are to be updated. In order to perform the group integration, the links represented by solid lines are set to the identity matrix and those represented by dotted lines are to be integrated exactly for the weight evaluation. In \(d>2\) dimensions, and as far as the group integration is concerned, the links in Fig. 5 are coupled with staples in perpendicular planes. In the integration to compute the transition probability for this nonlocal update, all those staples are set to the identity matrix. Again, we are not building an exact maximal tree, but the approximation allows us to get relatively simple expressions in the calculation of the transition probability p.

The local function \(F_{p}\), associated with the update over a \(5\times 5\) plane represented in Fig. 5, contains 24 link variables and is given by

where all \(b_{i}\) are plaquette occupation numbers belonging to the plane where the nonlocal update takes place, the \(c_{i}\) are related to the integrated link \(U_{i}\) and are given by a sum of plaquette occupation numbers belonging to the plaquettes that share \(U_{i}\) in other planes than the updated plane.

Starting the group integration with the link labelled 1 in Fig. 5, the integration function is of the same type as that defined in Eq. (30), whose solution is given in Eq. (32). The integration leads to a polynomial in the trace of the link with label 2. For the integration of the link labelled 2 one uses the solution in Eq. (32) and repeats the process for the subsequent links, as for the integration of the green and blue paths in the local update associated with Fig. 2.

5 Observables

We implemented the algorithm to compute the mean value of the plaquette and the mass of the \(J^{PC}=0^{++}\) glueball. The mean value of the plaquette is easily computed in terms of the plaquette occupation numbers. In the partition function given by Eq. (3), the plaquette \(U_{\mu \nu }(x)\) comes associated with the factor \(\beta \). Formally, one can identify a different \(\beta \) with each of the plaquettes and make the replacement \(\beta \rightarrow \beta _{\mu \nu }(x)\). Ignoring the constant C in Eq. (3), it follows that

where \(W\left[ U\right] \) is the Boltzmann weight factor in the standard representation of the partition function. Performing the same operations with the partition function written in the new representation, given by Eqs. (8) and (9), it follows that

and, therefore, the plaquette expectation value is given by the average of the dual variables \(b_{\mu \nu }(x)\)

The average of the plaquette expectation value over the lattice volume, called plaquette mean value u, reads

The value of u estimated with our algorithm will be compared with the output of a conventional heat bath Monte Carlo method.
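As a sanity check of the relation between the plaquette and the occupation number, one can consider a toy model with a single SU(2) plaquette variable and Boltzmann factor \(\exp (\beta \,\tfrac{1}{2}\text {Tr}\,U)\). This normalization is an assumption made for illustration and need not coincide with the conventions of Eq. (3). Under it, expanding the exponential gives weights \(w_{b}=(\beta /2)^{b}/b!\,\int dU\,(\text {Tr}\,U)^{b}\), and differentiating \(\ln Z\) with respect to \(\beta \) gives \(\langle \tfrac{1}{2}\text {Tr}\,U\rangle =\langle b\rangle /\beta \). The sketch below, with hypothetical function names, compares both sides numerically.

```python
import math


def catalan(k: int) -> int:
    """Haar integral of (Tr U)^(2k) over SU(2)."""
    return math.factorial(2 * k) // (math.factorial(k) * math.factorial(k + 1))


def mean_b_over_beta(beta: float, kmax: int = 60) -> float:
    """<b> / beta from the occupation-number weights of the toy model.

    Only even b = 2k contribute, with weight
    (beta / 2)^(2k) / (2k)! * catalan(k).
    """
    num = 0.0
    den = 0.0
    for k in range(kmax + 1):
        w = (beta / 2.0) ** (2 * k) / math.factorial(2 * k) * catalan(k)
        num += 2 * k * w
        den += w
    return num / den / beta


def mean_half_trace(beta: float, steps: int = 20000) -> float:
    """<(1/2) Tr U> by direct midpoint quadrature over the class angle.

    Uses (1/2) Tr U = cos(theta) with measure sin^2(theta) d(theta).
    """
    h = math.pi / steps
    num = 0.0
    den = 0.0
    for i in range(steps):
        theta = (i + 0.5) * h
        w = math.exp(beta * math.cos(theta)) * math.sin(theta) ** 2
        num += math.cos(theta) * w
        den += w
    return num / den
```

In this toy model both functions agree to quadrature accuracy, and for small \(\beta \) both approach \(\beta /4\), the leading strong-coupling behaviour.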

In this exploratory work, besides the mean value of the plaquette, we also compute the mass of the scalar glueball with quantum numbers \(J^{PC}=0^{++}\). This requires building an interpolating field \(\varPhi \) with the right quantum numbers and writing \(\varPhi \) in terms of the dual variables \(b_{\mu \nu }(x)\). A first step toward the computation of the mass of the scalar glueball is the evaluation of the correlation function

Setting y fixed at the origin of the lattice, then the zero momentum Euclidean space Green’s function reads

where m is the mass of the glueball ground state and the last line is the result of making the change of variable \(p^{2}+m^{2}=z^{2}m^{2}\) in the first line. The integration over z is given in terms of the modified Bessel function \(K_{1}\):

where the second expression holds for large values of t.

The lattice version of the operator \(\varPhi \) is constructed by mapping the continuum symmetries and therefore the quantum numbers of the corresponding particle into the hypercubic group [40]. For the ground state and for the channel \(J^{PC}=0^{++}\), the simplest operator is given by

i.e., the sum of spacelike plaquettes. With the use of Eq. (49), the operator \(\varPhi \) can be mapped into the new representation and is given in terms of \(b_{\mu \nu }(x)\) as

The estimation of glueball masses with small statistical errors from correlation functions of the type given in Eq. (53) is not an easy task. Indeed, given that G(t) decays exponentially with Euclidean time, the signal to noise ratio decreases rapidly for large Euclidean time and, therefore, on the lattice one can only rely on a limited number of time slices to estimate m. Although there are a number of techniques to improve the signal to noise ratio, e.g. the use of anisotropic lattices or of smeared operators [36, 38, 39], we will take the interpolating operator as given in Eq. (54), with the representation given in Eq. (55), to test the algorithm.

In practice, for estimating the scalar glueball mass, a number of uncorrelated configurations will be generated and the operator \(\varPhi \) will be computed using Eq. (55). From the interpolating field we evaluate the scalar glueball connected Green function

where T is the lattice time length and the second term on the right hand side in Eq. (56) removes the vacuum contribution to the signal. The mass of the \(J^{PC}=0^{++}\) glueball is measured fitting the lattice estimation in Eq. (56) to the functional form given in Eq. (53).
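A minimal sketch of how a connected correlator of this kind can be estimated from an ensemble is given below. It does not reproduce Eq. (56) term by term. It assumes periodic boundary conditions in time, an average over all source time slices, and a vacuum subtraction using the squared ensemble mean of \(\varPhi \). The input layout `phi[c][t]` (configuration index, then time slice) and the function name are hypothetical.

```python
from typing import List, Sequence


def connected_correlator(phi: Sequence[Sequence[float]]) -> List[float]:
    """Connected two-point function G(t) from time-slice values of Phi.

    phi[c][t] is the operator on time slice t of configuration c.
    Periodic boundary conditions in time are assumed.
    """
    n_cfg = len(phi)
    n_t = len(phi[0])
    # Ensemble and time average of Phi, used for the vacuum subtraction.
    mean_phi = sum(sum(row) for row in phi) / (n_cfg * n_t)
    correlator = []
    for t in range(n_t):
        acc = 0.0
        for row in phi:
            for t0 in range(n_t):
                acc += row[t0] * row[(t0 + t) % n_t]
        correlator.append(acc / (n_cfg * n_t) - mean_phi ** 2)
    return correlator
```

A constant \(\varPhi \) gives a vanishing connected correlator, which is a quick check that the vacuum term is removed correctly.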

6 Results

In the simulations we start the Markov chain with a cold start, where all \(b_{\mu \nu }(x)=0\), and the Monte Carlo updates use both the local and nonlocal updates.

For the local updates, a given PON \(b_{\mu \nu }(x)\) is chosen randomly and a change by \(\pm \,2\) is proposed with the sign being chosen randomly. This process is repeated \(V_{p}\) times, where \(V_{p}\) is the total number of lattice plaquettes. We call this set of updates one Monte Carlo step, or full sweep, for the local update.

For the nonlocal update, a two dimensional surface is chosen randomly on the lattice and for each PON \(b_{\mu \nu }(x)\) on the surface a change by \(\pm \,1\) is proposed randomly. The process is repeated \(N_{p}\) times, where \(N_{p}\) is the number of two dimensional surfaces on the lattice. We call this set of updates one Monte Carlo step, or full sweep, for the nonlocal surface update.
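The two sweep definitions can be sketched as follows. The weight ratio is the difficult part of the algorithm and is deliberately left as a user-supplied callable (`weight_ratio`, a hypothetical name); storing the PONs in a flat list and passing planes as lists of indices are also illustrative choices. The sketch assumes that the signs on a plane are drawn independently for each PON, that the plane is accepted or rejected as a whole, and that proposals leading to a negative PON are rejected.

```python
import random
from typing import Callable, Dict, List, Sequence

# Hypothetical signature: receives the current PONs and the proposed changes
# {index: new value}, and returns the weight ratio Q[new] / Q[old].
WeightRatio = Callable[[List[int], Dict[int, int]], float]


def metropolis_accept(ratio: float, rng: random.Random) -> bool:
    """Standard Metropolis test: accept with probability min(1, ratio)."""
    return ratio >= 1.0 or rng.random() < ratio


def local_sweep(b: List[int], weight_ratio: WeightRatio,
                rng: random.Random) -> float:
    """One local sweep: V_p = len(b) proposals b_i -> b_i +/- 2.

    Returns the fraction of accepted proposals.
    """
    accepted = 0
    for _ in range(len(b)):
        i = rng.randrange(len(b))
        new = b[i] + rng.choice((-2, 2))
        if new < 0:  # PONs are natural numbers
            continue
        if metropolis_accept(weight_ratio(b, {i: new}), rng):
            b[i] = new
            accepted += 1
    return accepted / len(b)


def planar_sweep(b: List[int], planes: Sequence[Sequence[int]],
                 weight_ratio: WeightRatio, rng: random.Random) -> float:
    """One nonlocal sweep: N_p = len(planes) whole-plane proposals.

    Every PON on the chosen plane is shifted by +/- 1 (independent signs,
    an assumption) and the plane is accepted or rejected as a whole.
    """
    accepted = 0
    for _ in range(len(planes)):
        plane = rng.choice(planes)
        proposal = {i: b[i] + rng.choice((-1, 1)) for i in plane}
        if min(proposal.values()) < 0:
            continue
        if metropolis_accept(weight_ratio(b, proposal), rng):
            for i, value in proposal.items():
                b[i] = value
            accepted += 1
    return accepted / len(planes)
```

The returned fractions are acceptance rates per sweep. On a hypercubic \(L^{4}\) lattice there are six plaquette orientations per site, so \(V_{p}=6L^{4}\).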

6.1 Sampling and the mean value of the plaquette

For the evaluation of the mean value of the plaquette given in terms of the dual variables, as given in Eq. (50), we simulate two different lattice volumes, \(6^{4}\) and \(12^{4}\), for various values of \(\beta \). For each of the simulations, after discarding \(10^{3}\) combined Monte Carlo steps for thermalization, we consider \(10^{4}\) configurations separated by 10 combined Monte Carlo steps. Our numerical experiments have shown that a separation of 10 combined Monte Carlo sweeps is enough to decorrelate the observables measured in the current work.

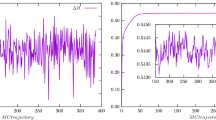

In Fig. 6 we compare the results obtained with the present algorithm with the results obtained with the heat bath algorithm (also for the standard Wilson action) implemented with the library Chroma [48]. The results shown for the heat bath algorithm refer to simulations performed on a \(10^{4}\) lattice, for an ensemble with \(10^{4}\) configurations, separated by 5 Monte Carlo steps.

The same as in Fig. 6 but for the relative deviation of the results obtained with the present algorithm with respect to the heat bath results. The vertical black lines show the interval of \(\beta \) values where the efficiency of the nonlocal update is higher. See text for details

In the top panel of Fig. 6 we show the plaquette mean value obtained in simulations of a \(12^4\) lattice combining the local and nonlocal updates against the results of the standard Wilson action using a heat bath simulation. As can be seen, there is good agreement between the results obtained with our algorithm, using any of the local updates, and with those obtained with the heat bath simulation in the strong coupling limit. The data also suggest that in the weak coupling limit the present algorithm prediction for u converges to the value given by the heat bath algorithm.

Concerning the \(\beta \) dependence of the results, the present prediction for u starts to deviate from the heat bath result for \(\beta \sim 1.5\) up to \(\beta \sim 3\), but its maximal deviation is about 0.1% and occurs for \(\beta \sim 2.3\). Interestingly, in this range the local algorithm which takes into account the smaller number of integrations, see Sect. 4.1.1, is closer to the results of the heat bath simulation. However, as one approaches the continuum limit, i.e. for \(\beta \gtrsim 3\), it is the algorithm which uses the other local update, see Sect. 4.1.2, which is closer to the heat bath outcome. Indeed, for the algorithm whose local update takes into account the larger number of group integrations the deviations from the heat bath result are marginal for \(\beta \gtrsim 3\).

The volume dependence of the algorithm can be seen in the lower panel of Fig. 6, where the sampling of u is investigated for two different lattice volumes. Recall that the full Monte Carlo update is defined by a combination of local and nonlocal updates. The data show no or only a mild dependence on the lattice volume.

In Fig. 7 we show the relative deviation of the present estimation of u with respect to the heat bath results for different lattice volumes. The deviations are negligible in the strong coupling limit and are very small when the continuum limit is approached. The maximal deviations \(\lesssim 0.1\)% occur for intermediate values of the coupling around \(\beta \sim 2.3\). From the figure one can also read the improvement obtained by considering \(F_{40}\) instead of \(F_{4}\); recall that \(F_{40}\) takes into account forty Haar integrals in the evaluation of p while \(F_{4}\) takes only four Haar integrals. In particular, for the largest lattice, the data show a notable improvement in the mean value of the plaquette relative to the heat bath numbers when using \(F_{40}\). Indeed, in the continuum limit, when one uses \(F_{40}\) to estimate p, the deviations are about \(50\%\) smaller compared to computations using \(F_{4}\).

The numerical simulations performed show that our approach is a good approximation in the strong and weak coupling limits. Given that the Boltzmann weights are proportional to powers of \(\beta \), in the strong coupling limit, i.e. for small \(\beta \) values, the dual variables are most likely zero or close to zero and setting the link variables to the identity matrix is essentially an irrelevant operation. As \(\beta \) increases the dual variables start to deviate from zero, the previous argument no longer applies, and one can expect deviations from the exact result. This seems to be the case for \(\beta \) values in the range \(2.5< \beta < 3\). Although one would expect that integrating more links is always better, one should recall that the integration is not exact. While it is true that the \(F_{40}\) update takes into account more link variables, it also sets a large number of link variables to the identity matrix and, therefore, at some stage it can become less accurate than the \(F_4\) update. On the other hand, as the continuum limit is approached, the link variables approach the identity and, in this case, our approximation reproduces faithfully the theoretical expectations.

The performance of our algorithm for different \(\beta \) values and different volumes can be understood by looking at the update efficiency \({\mathscr {E}}\), defined as its acceptance rate in the Markov chain. As can be seen in the top panel of Fig. 8, the nonlocal update has an essentially vanishing \({\mathscr {E}}\), with the exception of the smaller lattice and over a narrow range of \(\beta \) values. Note, however, that the region where the simulations using the two volumes give different values for u, see the lower panel in Fig. 6, is precisely the region of \(\beta \) where the efficiency associated with the nonlocal update has its maximum value. Furthermore, the results of Figs. 6 and 8 suggest that the nonlocal update plays an important role. Indeed, for the smaller lattice volume and for \(\beta \) in the range 2.5–3, the efficiency \({\mathscr {E}}\) is maximal and non-negligible for the nonlocal update, which makes the estimation of u by the present algorithm closer to the values provided by the heat bath method. The deviations of our estimation for u relative to the heat bath result for the smaller volumes are milder for \(\beta \) in the range 2.5–3, as can be seen from Fig. 7. For the larger volumes, \({\mathscr {E}}\) is always residual and our estimation of u shows larger deviations which are, nonetheless, less than 0.1% relative to the heat bath numbers.

Herein, we considered a single type of nonlocal update but many other possibilities can be explored to achieve a better and more complete sampling of the dynamical range of values allowed for the plaquette occupation number space. Within the rationale considered in this work, the building of an algorithm, i.e. the implementation of other types of nonlocal updates, implies a compromise between a given geometrical setting, i.e. the definition of a given set of links over a large region of the lattice, and the ability to perform the group integration over the corresponding sublattices. Recall that, within our framework, the local updates do not sample the entire \(\{b\}\) space. For example, for the local updates the \(\{b\}\) remain either in the subset of odd or even natural numbers. The nonlocal updates were built to allow for a better dynamical range, allowing for transitions in the Markov chain where the PONs could become either odd or even natural numbers. The search for other nonlocal types of updates is one of the features that we aim to explore in future work.

Another way of reading the results in Fig. 8 is that one should improve the efficiency \({\mathscr {E}}\) of the nonlocal update. Indeed, the acceptance rate of the local updates is always \(\sim 10\%\) or above, reaching a value of about \(50\%\) as the continuum limit is approached. On the other hand, the nonlocal update defined in Sect. 4.2 has an extremely low \({\mathscr {E}}\), with a maximum of \(\sim 0.25\%\) for \(\beta \sim 2.7\) for the smaller lattice and being always residual for the larger lattice. The low values for the efficiency associated with the nonlocal update mean that the plaquette occupation numbers are essentially trapped in the subset of the odd or the even natural numbers which is sampled by the local Monte Carlo updates.

The local updates change, in a single update, a fixed number of \(b_{\mu \nu }(x)\) and, in principle, are not so sensitive to volume effects as the nonlocal updates which are affected by surface effects.

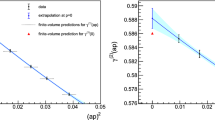

6.2 \(J^{PC}=0^{++}\) glueball mass

In order to estimate the \(J^{PC}=0^{++}\) glueball mass we simulate the theory for \(\beta =3.01\) on a \(10^{3}\times 20\) lattice, with a Monte Carlo step combining the local update as defined in Sect. 4.1.2 and nonlocal updates as defined in Sect. 4.2.

For the conversion of the glueball mass into physical units, we rely on Ref. [49] which uses the string tension \(\sqrt{\sigma }=440\) MeV and assumes

where the first two terms are the predictions of 2-loop perturbation theory and the remaining terms parameterize higher-order effects. The parameters \(c=4.38(9)\) and \(d=1.66(4)\) were set by fitting the lattice data for the string tension using simulations with \(\beta \in [2.3,2.85]\). For \(\beta =3.01\), the above relation estimates \(a\approx 0.02\) fm for the lattice spacing.

The glueball mass is evaluated from the asymptotic expression for the two-point correlation function

Our lattice estimations for G(t) use \(\sim 10^{7}\) configurations and the correlation function can be seen in Fig. 9. Although our Monte Carlo code is not parallelized, the large ensembles were built by running the code on various independent standalone machines. For \(t\ge 6\) the lattice two point correlation function becomes negative and compatible with zero within one standard deviation and, therefore, lattice Euclidean times \(t\ge 6\) will not be considered.

Lattice estimation of the correlation function G(t). The curve in black is the fit of the lattice data to the asymptotic expression in Eq. (58) for the fitting range \(t\in [2,5]\). In the inset, G(t) in logarithmic scale

The correlation function for \(t=1\) does not comply with the remaining values for larger t and with Eq. (58) and, therefore, it is discarded in the estimation of m. In the measurement of the glueball mass we consider three different fitting ranges and two large and independent ensembles as described in Table 1. For each of the fitting ranges considered, the lattice data are well described by the asymptotic expression for the correlation function in Eq. (58), as can be seen from the values of the \(\chi ^{2}/\text {d.o.f.}\). Furthermore, m and \(g_{0}\) are independent of the fitting range. The simulations point towards a glueball mass of \(1588\pm 378\) MeV for \(\sqrt{\sigma }=440\) MeV.

The simulation described so far uses a small physical volume and the simplest operator to estimate the glueball mass. Indeed, none of the available techniques to improve the signal to noise ratio is used in the numerical experiments. However, despite these limitations we are able to reproduce the numbers that can be found in the literature. Our estimates for the intermediate fitting range are \(m=(3.61\pm 0.86)\,\sqrt{\sigma }\) and \(m=(3.62\pm 0.53)\,\sqrt{\sigma }\), and agree within one standard deviation with the literature values quoted below. In Ref. [41] the authors report several estimates for the SU(2) scalar glueball mass. Ref. [42] uses the same interpolating operator for the glueball as ours and reports the value \(m=(3.7\pm 1.2)\,\sqrt{\sigma }\); the error in our estimation is about 30% smaller. In Ref. [39], the simulation is done using improved signal to noise methods and the authors report the value \(m=(3.12\pm 0.22)\,\sqrt{\sigma }\). Finally, the more recent calculation in Ref. [37] gives \(m=(3.78\pm 0.07)\,\sqrt{\sigma }\).
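The conversion between MeV and units of \(\sqrt{\sigma }\) used in this comparison is simple arithmetic. The following sketch reproduces it from the numbers quoted in the text; the variable and function names are illustrative only.

```python
SQRT_SIGMA_MEV = 440.0  # string tension scale used in the text


def in_units_of_sqrt_sigma(value_mev: float, error_mev: float):
    """Express a mass and its error in units of sqrt(sigma)."""
    return value_mev / SQRT_SIGMA_MEV, error_mev / SQRT_SIGMA_MEV


# Glueball mass quoted in the text: 1588 +/- 378 MeV.
m, dm = in_units_of_sqrt_sigma(1588.0, 378.0)
print(f"m = ({m:.2f} +/- {dm:.2f}) sqrt(sigma)")  # m = (3.61 +/- 0.86) sqrt(sigma)

# Relative reduction of the error with respect to the 1.2 quoted from Ref. [42].
error_reduction = 1.0 - 0.86 / 1.2
print(f"error reduction: {100 * error_reduction:.0f}%")  # error reduction: 28%
```

The 28% obtained here is consistent with the "about 30%" stated above.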

7 Summary

In the present work we discuss a mapping of the lattice Wilson action into an approximate dual representation, whose dynamical variables, the so-called plaquette occupation numbers (PONs) \(\left\{ b_{\mu \nu }\left( x\right) \right\} \), belong to the natural numbers \({\mathbb {N}}_{0}\). These dual variables are the expansion indices of the power series expansion of the Boltzmann factor for each plaquette. The partition function in terms of the new variables is given by a sum of weights \(Q_{\left\{ U\right\} }\left[ \left\{ b\right\} \right] \). The PONs are subject to constraints imposed by gauge symmetry, given by Eq. (15).

The weights Q for a configuration of PONs involve integrals over the link variables over the entire lattice volume whose integrands are products of powers of plaquettes. We used Monte Carlo simulations to solve the theory. The transition probability p defining the corresponding Markov chain is given by ratios of the weights Q. The link integration is simplified to get an approximate analytical estimation for p. Specifically, the approximations consist of the following. In an update of \(b_{\mu \nu }\left( x\right) \), the lattice is factorized into a region containing the plaquette \(U_{\mu \nu }\left( x\right) \) and its complement. Then, the links at the interface between the two regions are rotated to the identity. This allows us to evaluate analytically the link integrals necessary to estimate p. Two different types of updates, named local and nonlocal, are considered. In the local updates, a given PON \(b_{\mu \nu }(x)\) is chosen randomly and a change by \(\pm \,2\) (we have concentrated on SU(2) gauge theory, see Eq. (15)) is proposed with the sign being chosen randomly. For the nonlocal update, a two dimensional surface is chosen randomly on the lattice and for each PON \(b_{\mu \nu }(x)\) on the surface a change by \(\pm \,1\) is proposed randomly. The nonlocal update improves the ergodicity of the algorithm as it allows the occupation numbers to switch from odd to even and vice-versa, an evolution which is not allowed by the local updates. We have not considered updates that involve changing the PONs on a cube.

The estimations for the plaquette mean value agree very well with those obtained with a conventional heat bath algorithm in the weak and strong coupling limits. Deviations from heat bath estimations occur in the range \(2.5< \beta < 3\), but they are below 0.1%. Concerning the estimation of the lightest SU(2) glueball mass, the simulations reported here are in good agreement with estimates in the literature.

We stress that the approach presented here relies on a series of approximations to get the transition probability p. One can speculate that, since the links rotated to the identity are a very small subset of the entire set of links \(\{ U_\mu (x) \}\), their contribution to p is subleading, at least for the quantities studied. Furthermore, given that the number of links set to the identity is volume independent, one expects to approach the exact value of p in the limit of large volumes.

The results reported here suggest that the inclusion of larger lattice partitions in the “inner” integral, i.e. including larger numbers of links in the neighborhood of the updated plaquette occupation number, to estimate the transition probability takes p closer to its real value. This can be achieved by a careful choice of the “inner” region, i.e. the region which includes the lattice point where the plaquette occupation number is to be updated, and the “outer” sublattices such that one is able to perform the necessary group integrals after setting some of the links to the identity. Certainly, any progress in the evaluation of SU(N) integrals, see e.g. Ref. [50], will help in improving the estimation of p. Another possible approach, still to be developed, is the numerical evaluation of the group integrals which, hopefully, could lead to an “exact” estimation of the transition probability.

The algorithm discussed here can be generalized to SU(N) gauge groups with N > 2. Another interesting research topic is the inclusion of the fermionic degrees of freedom which, in principle, can be accommodated within the procedure described. These are research problems that we aim to address in the near future.

References

C. Gattringer, C.B. Lang, Quantum Chromodynamics on the lattice: An Introductory Presentation (Springer, New York, 2010)

K. Splittorff, B. Svetitsky, Phys. Rev. D 75, 114504 (2007)

K. Splittorff, J.J.M. Verbaarschot, Phys. Rev. D 75, 116003 (2007)

C. Gattringer, K. Langfeld, Int. J. Mod. Phys. A 31, 1643007 (2016)

G. Aarts, Pramana 84, 787 (2015)

P. de Forcrand, J. Langelage, O. Philipsen, W. Unger, Phys. Rev. Lett. 113, 152002 (2014)

P. de Forcrand, M. Fromm, Phys. Rev. Lett. 104, 112005 (2010)

P. Rossi, U. Wolff, Nucl. Phys. B 248, 105 (1984)

N. Prokof’ev, B. Svistunov, Phys. Rev. Lett. 87, 160601 (2001)

T.A. DeGrand, C.E. DeTar, Nucl. Phys. B 225, 590 (1983)

Y.D. Mercado, H.G. Evertz, C. Gattringer, Phys. Rev. Lett. 106, 222001 (2011)

U. Wolff, Nucl. Phys. B 824, 254 (2010a)

U. Wolff, Nucl. Phys. B 832, 520 (2010b)

F. Bruckmann, C. Gattringer, T. Kloiber, T. Sulejmanpasic, Phys. Lett. B 749, 495 (2015)

A.H. Castro Neto, F. Guinea, N.M.R. Peres, K.S. Novoselov, A.K. Geim, Rev. Mod. Phys. 81, 109 (2009)

V. Ayyar, S. Chandrasekharan, Phys. Rev. D 91, 065035 (2015)

S. Chandrasekharan, Eur. Phys. J. A 49, 90 (2013)

S. Chandrasekharan, A. Li, Phys. Rev. D 88, 021701 (2013)

S. Chandrasekharan, A. Li, Phys. Rev. Lett. 108, 140404 (2012)

M. Fromm, J. Langelage, S. Lottini, O. Philipsen, JHEP 01, 042 (2012)

C. Gattringer, T. Kloiber, Nucl. Phys. B 869, 56 (2013a)

T. Korzec, I. Vierhaus, U. Wolff, Comput. Phys. Commun. 182, 1477 (2011)

C. Gattringer, T. Kloiber, Phys. Lett. B 720, 210 (2013b)

M.G. Endres, Phys. Rev. D 75, 065012 (2007)

T. Rindlisbacher, O. Åkerlund, P. de Forcrand, Nucl. Phys. B 909, 542 (2016)

M. Panero, JHEP 05, 066 (2005)

V. Azcoiti, E. Follana, A. Vaquero, G. Di Carlo, JHEP 08, 008 (2009)

Y. Delgado Mercado, C. Gattringer, A. Schmidt, Phys. Rev. Lett. 111, 141601 (2013a)

Y. Delgado Mercado, C. Gattringer, A. Schmidt, Comput. Phys. Commun. 184, 1535 (2013b)

D.H. Adams, S. Chandrasekharan, Nucl. Phys. B 662, 220 (2003)

H. Vairinhos, P. Forcrand, J. High Energy Phys. 2014, 38 (2014)

J. Hubbard, Phys. Rev. Lett. 3, 77 (1959)

C. Marchis, C. Gattringer, Phys. Rev. D 97, 034508 (2018)

C. Gattringer, C. Marchis, Nuclear Phys. B 916, 627 (2017)

C. Marchis, C. Gattringer, PoS Lattice 2016, 034 (2016)

Y. Chen, A. Alexandru, S.J. Dong, T. Draper, I. Horvath, F.X. Lee, K.F. Liu, N. Mathur, C. Morningstar, M. Peardon, S. Tamhankar, B.L. Young, J.B. Zhang, Phys. Rev. D 73, 014516 (2006)

B. Lucini, M. Teper, U. Wenger, JHEP 06, 012 (2004)

M. Albanese et al. (APE), Phys. Lett. B 192, 163 (1987)

M. Teper, Phys. Lett. B 183, 345 (1987)

B. Berg, A. Billoire, Nucl. Phys. B 221, 109 (1983)

M. Falcioni, E. Marinari, M.L. Paciello, G. Parisi, B. Taglienti, Y.-C. Zhang, Nucl. Phys. B 215, 265 (1983)

B. Berg, Phys. Lett. B 97, 401 (1980)

F. Brunner, A. Rebhan, Phys. Rev. Lett. 115, 131601 (2015)

K.G. Wilson, Phys. Rev. D 10, 2445 (1974)

M. Newman, G. Barkema, Monte Carlo Methods in Statistical Physics (Clarendon Press, Oxford, 1999)

M.J. Creutz, Quarks, Gluons and Lattices (Cambridge University Press, Cambridge, 1985)

K.E. Eriksson, N. Svartholm, B.S. Skagerstam, J. Math. Phys. 22, 2276 (1981)

R.G. Edwards, B. Joo (SciDAC, LHPC, UKQCD), Nucl. Phys. Proc. Suppl. 140, 832 (2005)

J.C.R. Bloch, A. Cucchieri, K. Langfeld, T. Mendes, Nucl. Phys. B 687, 76 (2004)

J.-B. Zuber, J. Phys. A 50, 015203 (2017)

Acknowledgements

The authors thank Paulo J. Silva for the heat bath data. This research was supported by computational resources supplied by the Center for Scientific Computing (NCC/GridUNESP) of the São Paulo State University (UNESP) and by the Department of Physics of Coimbra University. Work partially supported by Fundação de Amparo à Pesquisa do Estado de São Paulo (FAPESP), Grant No. 2013/01907-0 (G.K.) and No. 2017/01142-4 (O.O.), Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq), Grant No. 305894/2009-9 (G.K.) and No. 464898/2014-5 (G.K. and O.O.) (INCT Física Nuclear e Applicações), and by Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) (R.L.).

Appendix A: Group integration

Here we compute some group integrals that appear in the evaluation of the weight function of the non-Abelian gauge partition function written in the new representation. First we introduce some basic properties of the group integration. Consider a function f(U), where \(U\in \text {SU(N)}\). As can be found in many textbooks, e.g. [1], the group integration is left and right invariant

where g and \(g'\) are arbitrary elements of the group SU(N), and the Haar measure is also left and right invariant

From these basic properties we can conclude

and these properties determine the constraints on the new degrees of freedom discussed in Sect. 3.
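For reference, the invariance properties invoked above take the following standard form. This is a sketch in generic notation rather than a quotation of the displayed equations; the normalisation \(\int dU = 1\) and the choice \(g=-1\) in the second relation are assumptions made for illustration.

```latex
% Left and right invariance of the group integral and of the Haar measure
\int dU\, f(U) = \int dU\, f(gU) = \int dU\, f(Ug'),
\qquad d(gU) = d(Ug') = dU , \qquad g, g' \in \mathrm{SU}(N).

% Illustrative consequence for SU(2), taking g = -1 (an element of SU(2)):
\int dU\, f(U) = \int dU\, f(-U)
\;\;\Longrightarrow\;\;
\int dU\, \left(\mathrm{Tr}\,U\right)^{2k+1} = 0 , \qquad k = 0, 1, 2, \ldots
```

In this illustration an odd total power of a link variable integrates to zero, which is the kind of restriction that gauge invariance can place on the occupation numbers attached to a link.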

Appendix A.1: Integration over the green paths

Consider the green path, see Fig. 3, containing the link variables \(U_{3a}\), \(U_{3b}\), \(U_{3c}\) and \(U_{3d}\) that need to be integrated. This path is coupled to the central plaquette (CP) \(U_{\mu _{0}\nu _{0}}\left( x_{0}\right) = U_{1}U_{2}U_{3}U_{4}\) by the link \(U_{3}\) and lies in a plane parallel to the CP in the direction \(\rho \). Each link of the CP is coupled to one green path; here we show the integration of the green path coupled to the link \(U_{3}\). The precise definitions of the links of this green path are

and the integration in question is given by

The plaquette occupation numbers (PONs) \(b_{i}\) are defined as

and the collective powers \(c_{i}\), which are sums of PONs, are defined using Eq. (14) as

We use Eq. (32) to solve each integral in the path. Starting the integration with the link \(U_{3a}\), we have

where the coefficients \(\varGamma \) are given by Eq. (33). The function \(K_{1}=K_{1}\left[ U_{3b},U_{3c},U_{3d};U_{3},\left\{ b'\right\} \right] \) is defined by

and the measure \(\widetilde{{\mathscr {D}}U\,}'_{G}\) by

Now integrating \(K_{1}\) with the measure \(dU_{3b}\) we find

where \(K_{2}=K_{2}\left[ U_{3c},U_{3d};U_{3},\left\{ b'\right\} \right] \) and the measure \(\widetilde{{\mathscr {D}}U\,}''_{G}\) are defined by

Integrating the link \(U_{3c}\) we obtain

where \(K_{3}=K_{3}\left[ U_{3d};U_{3},\left\{ b'\right\} \right] \) is defined by

Finally integrating the link \(U_{3d}\) we have

Collecting Eqs. (A.18), (A.21), (A.24) and (A.26), we have

i.e., a polynomial in \(\text {Tr}\left[ U_{3}\right] \). This solution can be applied to the other green paths, provided the definitions of the green path links, the PONs \(b_{i}\) and the sums of PONs \(c_{i}\) are changed accordingly.
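Each step above integrates a single link shared by neighbouring plaquettes. As a minimal illustration of that mechanism, the sketch below checks numerically the simplest SU(2) one-link identity, \(\int dU\,\text {Tr}[AU]\,\text {Tr}[U^{\dagger }B]=\text {Tr}[AB]/2\), by sampling Haar-distributed matrices. It is not the general formula of Eq. (32) with its coefficients \(\varGamma \); the helper names (`random_su2`, `one_link_average`, and so on) are hypothetical and the sample size is an arbitrary choice.

```python
import math
import random


def random_su2(rng):
    """Haar-distributed SU(2) matrix built from a uniformly random unit quaternion."""
    a = [rng.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(sum(x * x for x in a))
    a0, a1, a2, a3 = (x / norm for x in a)
    # U = a0*1 + i*(a1*sigma_1 + a2*sigma_2 + a3*sigma_3)
    return ((complex(a0, a3), complex(a2, a1)),
            (complex(-a2, a1), complex(a0, -a3)))


def matmul(a, b):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(2))
                       for j in range(2)) for i in range(2))


def dagger(a):
    """Hermitian conjugate of a 2x2 matrix."""
    return tuple(tuple(a[j][i].conjugate() for j in range(2)) for i in range(2))


def trace(a):
    return a[0][0] + a[1][1]


def one_link_average(A, B, n_samples, rng):
    """Monte Carlo estimate of the Haar integral of Tr[A U] Tr[U^dagger B]."""
    total = 0.0
    for _ in range(n_samples):
        U = random_su2(rng)
        total += (trace(matmul(A, U)) * trace(matmul(dagger(U), B))).real
    return total / n_samples


if __name__ == "__main__":
    rng = random.Random(2018)  # fixed seed, chosen arbitrarily
    A, B = random_su2(rng), random_su2(rng)
    estimate = one_link_average(A, B, 40000, rng)
    exact = trace(matmul(A, B)).real / 2.0
    print("Monte Carlo estimate:", estimate)
    print("Tr[AB]/2            :", exact)
```

Integrating out the shared link fuses the two traces into a single trace over the remaining links, which is why a chain of such integrations can end in a function of one surviving link only.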

Appendix A.2: Integration over the blue paths

In Fig. 4 we present the blue path coupled to the link \(U_{3}\) of the CP. As in the green path case, each link of the CP is coupled to one blue path. Here we show only the integration of the blue path coupled to the link \(U_{3}\); the integral in question is

where the definitions of the blue path links are

The PONs \(\tilde{b_{i}}\) are defined as

and the collective powers \(\tilde{c_{i}}\) are defined by

To guarantee that every integral has the form of Eq. (32), we need to start the integration with one link of the plaquette \(\tilde{U}_{3a}\tilde{U}_{3b}\tilde{U}_{3c}\tilde{U}_{3d}\); here we start with the link \(\tilde{U}_{3a}\), so that

where \(\tilde{K}_{1}\) and the measure \(\widetilde{{\mathscr {D}}U\,}'_{B}\) are defined by

Now integrating \(\tilde{K}_{1}\) with the measure \(d\tilde{U}_{3b}\) we have

where \(\tilde{K}_{2}\) and the measure \(\widetilde{{\mathscr {D}}U\,}''_{B}\) are defined by

Integrating the link \(\tilde{U}_{3c}\) we obtain

where \(\tilde{K}_{3}\) and the measure \(\widetilde{{\mathscr {D}}U\,}'''_{B}\) are defined by

Now integrating the link \(\tilde{U}_{3d}\) we can write

where \(\tilde{K}_{4}\) is defined by

Finally, integrating the link \(\tilde{U}_{3e}\) we have

Now, inserting Eqs. (A.44), (A.47), (A.50) and (A.52) into Eq. (A.41) we can write

As in the green path case, the outcome is a polynomial. The above solution can be used to evaluate all blue path integrations, but with care: the definitions of the blue path links, the PONs \(\tilde{b_{i}}\) and the sums of PONs \(\tilde{c_{i}}\) change accordingly.
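Since both path integrations end in a polynomial in a trace, a later Haar integral over the remaining link needs only the moments of the trace. The sketch below, assuming SU(2) with eigenvalues \(e^{\pm i\theta }\), \(\text {Tr}\,U = 2\cos \theta \) and the class-function measure \((2/\pi )\sin ^{2}\theta \,d\theta \) on \([0,\pi ]\), evaluates these moments by midpoint quadrature. The function names and the default number of quadrature points are hypothetical choices, and the polynomial coefficients in the usage lines are made up for illustration.

```python
import math


def trace_moment(n, n_points=256):
    """Haar average of Tr[U]^n over SU(2), via midpoint quadrature in theta."""
    h = math.pi / n_points
    total = 0.0
    for k in range(n_points):
        theta = (k + 0.5) * h
        total += (2.0 * math.cos(theta)) ** n * math.sin(theta) ** 2
    return (2.0 / math.pi) * total * h


def integrate_trace_polynomial(coeffs):
    """Haar integral of sum_n coeffs[n] * Tr[U]^n, term by term."""
    return sum(c * trace_moment(n) for n, c in enumerate(coeffs))


if __name__ == "__main__":
    for n in range(7):
        print(n, trace_moment(n))
    # Illustrative polynomial 1 + 3 Tr[U]^2 (coefficients are made up)
    print(integrate_trace_polynomial([1.0, 0.0, 3.0]))
```

Odd moments vanish and the even moments \(\langle (\text {Tr}\,U)^{2k}\rangle \) are the Catalan numbers, so the integral of any such polynomial reduces to a finite sum over its even coefficients.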

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Leme, R., Oliveira, O. & Krein, G. Approximate dual representation for Yang–Mills SU(2) gauge theory. Eur. Phys. J. C 78, 656 (2018). https://doi.org/10.1140/epjc/s10052-018-6101-9

DOI: https://doi.org/10.1140/epjc/s10052-018-6101-9