Abstract

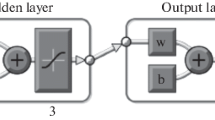

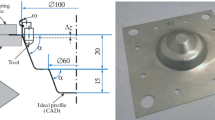

Industrial processes require real-time assessment and forecasting of the main difficult-to-measure quality indicators of output streams. Soft sensors are widely used for this purpose. Developing them with neural networks (NNs) makes it possible to account for the nonlinear behavior of the technological object and to obtain a more accurate forecast. However, the range of possible NN structures is extensive, and both the choice of an optimal structure and the question of whether learning converges for a given structure remain beyond the reach of theoretical methods. To address this, a new NN learning algorithm and a new approach to determining the structure of an NN are presented. The latter makes it possible to establish an NN structure with optimal learning convergence. The proposed algorithms are described in detail using a fractionator as an example, and their effectiveness is demonstrated.

Funding

This work was supported by ongoing institutional funding. No additional grants to carry out or direct this particular research were obtained.

Ethics declarations

The authors of this work declare that they have no conflicts of interest.

About this article

Cite this article

Shtakin, D.V., Shevlyagina, S.A. & Torgashov, A.Y. Neural Network Model for Estimating the Quality Indicators of Industrial Fractionator Products. Math Models Comput Simul 16, 235–245 (2024). https://doi.org/10.1134/S2070048224020169

DOI: https://doi.org/10.1134/S2070048224020169