Abstract

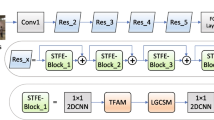

Traditional human action recognition algorithms based on feature extraction are complicated and yield low recognition accuracy. We present an algorithm for recognizing human actions in videos based on spatio-temporal fusion using 3D convolutional neural networks (3D CNNs). The algorithm contains two subnetworks, which extract deep spatial information and deep temporal information, respectively, and a bilinear fusion policy is applied to obtain the final fused spatio-temporal information. Spatial information is represented by a gradient feature, and temporal information is represented by optical flow. A new 3D CNN is constructed so that deep features can be retrieved from the fused spatio-temporal information from multiple angles. The proposed algorithm is compared with current mainstream algorithms on the KTH and UCF101 datasets, and the results demonstrate its effectiveness and high recognition accuracy.
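The bilinear fusion step mentioned above can be illustrated with a minimal sketch. The abstract does not give the exact form of the fusion policy, so the code below assumes the common outer-product formulation of bilinear pooling, followed by a signed square root and L2 normalization. The function name `bilinear_fuse` and the two short feature vectors are hypothetical stand-ins for the outputs of the spatial and temporal subnetworks, not the authors' implementation.

```python
import math

def bilinear_fuse(spatial, temporal, eps=1e-12):
    """Fuse two feature vectors via a full bilinear (outer-product) interaction.

    Assumption: outer product + signed square root + L2 normalization,
    a common bilinear pooling recipe; the abstract does not specify this.
    """
    # Outer product, flattened row by row: every spatial feature
    # interacts with every temporal feature.
    fused = [s * t for s in spatial for t in temporal]
    # Signed square root dampens large-magnitude interactions.
    fused = [math.copysign(math.sqrt(abs(v)), v) for v in fused]
    # L2 normalization so the fused vector has (approximately) unit length.
    norm = math.sqrt(sum(v * v for v in fused))
    return [v / (norm + eps) for v in fused]

# Hypothetical stand-ins for the two subnetwork outputs.
spatial_feat = [0.5, -1.0, 2.0]   # e.g. derived from gradient features
temporal_feat = [1.0, 3.0]        # e.g. derived from optical flow
fused_feat = bilinear_fuse(spatial_feat, temporal_feat)
print(len(fused_feat))  # 6
```

The fused vector has one entry per pair of spatial and temporal features, so its length is the product of the two input lengths. This growth in dimensionality is the usual motivation for compact approximations of bilinear pooling.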

Funding

This work was supported in part by the State Key Development Program (project no. 2018YFC0830103), in part by the National Natural Science Foundation of China (project no. 61876070), in part by Key Projects of Jilin Province Science and Technology Development Plan (project no. 20180201064SF), and in part by Jilin University Student Innovation Experiment Project (project no. S202110183428).

Ethics declarations

COMPLIANCE WITH ETHICAL STANDARDS

This article is a completely original work of its authors; it has not been published before and will not be sent to other publications until the Editorial Board of Pattern Recognition and Image Analysis decides not to accept it for publication.

CONFLICT OF INTEREST

The process of writing and the content of the article do not give grounds for raising the issue of a conflict of interest.

Additional information

Yu Wang: Associate Professor at the College of Computer Science and Technology, Jilin University. He received his PhD degree from the College of Computer Science and Technology, Jilin University, in 2017. His research interests cover image processing and machine learning. Corresponding author of this paper.

Xuanjing Shen: Professor at the College of Computer Science and Technology, Jilin University. He received his PhD degree from Harbin Institute of Technology in 1990. His research interests cover multimedia technology, computer image processing, intelligent measurement systems, and optical-electronic hybrid systems.

Haipeng Chen: Professor at the College of Computer Science and Technology, Jilin University. His research interests cover image processing and pattern recognition.

Jiaxi Sun: Bachelor at the College of Software, Jilin University. His research interests cover video processing and pattern recognition.

About this article

Cite this article

Wang, Y., Shen, X.J., Chen, H.P. et al. Action Recognition in Videos with Spatio-Temporal Fusion 3D Convolutional Neural Networks. Pattern Recognit. Image Anal. 31, 580–587 (2021). https://doi.org/10.1134/S105466182103024X

DOI: https://doi.org/10.1134/S105466182103024X