Abstract

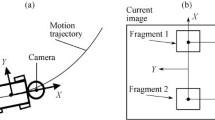

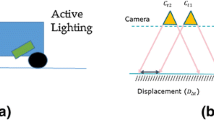

We consider the problem of visual odometry for a sequence of video frames captured by a camera pointed vertically downward. We propose an adaptive two-stage visual odometry technique based on the sequential determination of interframe shifts and regular correction of the current coordinate estimates. In the first stage, the shift between two consecutive frames is determined to within one pixel by the correlation method, and the compared frames are aligned using the shift parameters found. In the second stage, the shifts are refined to subpixel precision using the optical flow method. To improve reliability, only the most mutually consistent optical flow estimates are selected. We present the results of experiments on publicly available survey data, which confirm the high reliability and accuracy of the estimates.
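The two stages described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the block size, the conditioning threshold, and the tolerance are all hypothetical, the frames are assumed to be grayscale arrays related by a pure translation, and a simple median-based filter stands in for the paper's selection of the most consistent optical flow estimates.

```python
import numpy as np

def integer_shift(prev, curr):
    """Stage 1: whole-pixel (dy, dx) with curr ~ np.roll(prev, (dy, dx))."""
    f_prev = np.fft.fft2(prev - prev.mean())
    f_curr = np.fft.fft2(curr - curr.mean())
    # Circular cross-correlation; its peak sits at the interframe shift.
    corr = np.fft.ifft2(f_curr * np.conj(f_prev)).real
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = prev.shape
    if dy > h // 2:  # map wrapped peak positions to negative shifts
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)

def refine(gy, gx, gt, block=8, tol=0.1):
    """Stage 2: block-wise Lucas-Kanade estimates, then a consistency filter.

    The median-based filter is an assumption made for this sketch only.
    """
    estimates = []
    h, w = gt.shape
    for r in range(0, h - block + 1, block):
        for c in range(0, w - block + 1, block):
            s = np.s_[r:r + block, c:c + block]
            a = np.array([[np.sum(gy[s] ** 2), np.sum(gy[s] * gx[s])],
                          [np.sum(gy[s] * gx[s]), np.sum(gx[s] ** 2)]])
            b = -np.array([np.sum(gy[s] * gt[s]), np.sum(gx[s] * gt[s])])
            if np.linalg.cond(a) < 1e2:  # skip blocks with too little texture
                estimates.append(np.linalg.solve(a, b))
    if not estimates:
        raise ValueError("no well-conditioned blocks found")
    estimates = np.array(estimates)
    median = np.median(estimates, axis=0)
    keep = np.linalg.norm(estimates - median, axis=1) <= tol
    return estimates[keep].mean(axis=0) if keep.any() else median

def estimate_shift(prev, curr, block=8, tol=0.1):
    """Total (dy, dx): whole-pixel correlation shift plus subpixel refinement."""
    dy, dx = integer_shift(prev, curr)
    # Undo the whole-pixel shift so that only a subpixel residual remains.
    aligned = np.roll(curr, (-dy, -dx), axis=(0, 1))
    gy, gx = np.gradient(0.5 * (prev + aligned))  # axis 0 is y, axis 1 is x
    gt = aligned - prev
    m = max(abs(dy), abs(dx)) + 1  # drop pixels wrapped around by np.roll
    crop = np.s_[m:-m, m:-m]
    ry, rx = refine(gy[crop], gx[crop], gt[crop], block, tol)
    return dy + float(ry), dx + float(rx)
```

Calling `estimate_shift(prev, curr)` on two consecutive frames returns the estimated shift in pixels. Converting it to a ground displacement would additionally require the camera height and intrinsics, which this sketch does not model.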

Funding

The research was carried out within the state assignment theme 0777-2020-0017.

Additional information

Translated by V. Potapchouck

About this article

Cite this article

Fursov, V.A., Minaev, E.Y. & Kotov, A.P. Vehicle Motion Estimation Using Visual Observations of the Elevation Surface. Autom Remote Control 82, 1730–1741 (2021). https://doi.org/10.1134/S0005117921100106

DOI: https://doi.org/10.1134/S0005117921100106