Abstract

Predicting phase stability in high entropy alloys (HEAs), such as phase fractions as functions of composition and temperature, is essential for understanding alloy properties and screening desirable materials. Traditional methods like CALPHAD are computationally intensive for exploring high-dimensional compositional spaces. To address this challenge, this study explored and compared the effectiveness of random forests (RF) and deep neural networks (DNN) for accelerating materials discovery by building surrogate models of phase stability prediction. For interpolation scenarios (testing on the same order of system as trained), RF models generally produce smaller errors than DNN models. However, for extrapolation scenarios (training on lower-order systems and testing on higher-order systems), DNNs generalize more effectively than traditional ML models. DNNs demonstrate the potential to predict topologically relevant phase composition when data are missing, making them a powerful predictive tool in materials discovery frameworks. The study uses a CALPHAD dataset of 480 million data points generated from a custom database, available for further model development and benchmarking. Experiments show that DNN models are data-efficient, achieving similar performance with a fraction of the dataset. This work highlights the potential of DNNs in materials discovery, providing a powerful tool for predicting phase stability in HEAs, particularly within the Cr-Hf-Mo-Nb-Ta-Ti-V-W-Zr composition space.

Introduction

High entropy alloys (HEAs), also described as complex concentrated alloys (CCAs) or multi-principal element alloys (MPEAs), are a class of materials that have demonstrated promising properties1,2,3,4,5,6 relative to traditional single principal element alloys. One recent area of considerable interest in materials design is the discovery of stable single-phase, body-centered cubic (BCC) refractory HEAs that have superior strength at elevated temperatures7,8,9,10,11,12,13. Compared to traditional alloys, the composition design space for HEAs is much more vast and consequently less explored. While HEAs are theorized to be stabilized by ideal mixing entropy, all energetic contributions play an important role in determining phase stability14. When designing new materials in unexplored regions of phase space with many elements in high concentrations, many phases can form (due to miscibility gaps, intermetallic phases, or ordered phases) that may be difficult to anticipate or experimentally determine. To efficiently optimize new HEAs, there is a need to understand and rapidly predict phase formation in complex multicomponent design spaces.

The CALPHAD (CALculation of PHAse Diagrams) method15 is an important computational framework for predicting phase stability of HEAs in complex multicomponent design spaces and is widely used in integrated computational materials engineering (ICME) approaches to design materials. CALPHAD is the state-of-the-art tool for exploring multicomponent design spaces because the method is capable of accurately extrapolating Gibbs energies assessed for low-order systems, typically all unary, binary, and ternary subsystems of a larger multicomponent system, into multicomponent space. Extrapolating low-order systems into higher-order systems works because the most dominant energetic interactions when mixing elements come from binary (pairwise) and ternary (three-way) interactions. For a multicomponent system, the total energetic contribution is dominated by these low-order interactions, such that the higher-order interactions (four-way and above) can often be neglected. One challenge in using CALPHAD for phase stability screening in large multicomponent design spaces is that the volume of these high-dimensional composition spaces grows rapidly with the number of elements, making exhaustive exploration computationally expensive. In ICME-type materials design problems, CALPHAD-based phase stability calculations can often be the rate-limiting step16,17, especially if used in tandem with quick-to-evaluate property models, such as the analytical yield strength model for refractory HEAs created by Maresca and Curtin18 or ML-based property models for elasticity19 or ductility20 that have recently been developed. Furthermore, performance and acceleration of CALPHAD-type calculations, including through interpolation methods, are actively being developed21,22. In this work, we aim to provide a fast surrogate model of a CALPHAD database developed for refractory HEAs to significantly improve the efficiency of screening multicomponent composition spaces while preserving the prediction accuracy and thermodynamic constraints (e.g., Gibbs phase rule) that are present in the CALPHAD method by construction.

With the rapid development of machine learning (ML) and its applications in the scientific domain, several existing works have adopted ML models for various predictive tasks related to HEAs, including strength prediction23,24,25 and phase prediction26,27. Several different approaches have been taken for predicting phase stability. Some approaches use energy-based schemes, where CALPHAD data28 or density functional theory (DFT) data29,30 are used to fit models that predict the energies of individual phases given a crystal structure or composition. These energies can then be used to calculate the stable set of phases through energy minimization. Drawbacks of these approaches include the additional energy minimization step and approximations of the effect of temperature and pressure on the energy, while other approaches only use 0 K energies obtained from DFT. The works of Ward et al.29, Wang et al.25, and Krajewski et al.30 were based on neural networks; however, traditional ML approaches such as random forests (RF)31, support vector machines32, and gradient boosting trees33 are often the models of choice when learning from small and tabular datasets. For example, gradient boosting methods are adopted for exploring the phase selection rules34,35, and support vector machines are utilized for a phase formation classification task27,36. Moreover, Roy et al.37 provide a survey on related works that primarily rely on traditional ML methods.

Despite the various methods and tasks explored in these recent works, most, if not all, models focus on classification tasks, such as the work of Deffrennes et al.38 that focused on direct training to predict the number of phases that form at a given pressure, temperature, and composition. In a recent study by Vazquez et al.39, a deep neural network (DNN)-based surrogate model was trained on CALPHAD data to rapidly calculate the phase stability of alloys within the Al-Co-Cr-Fe-Mn-Nb-Ni composition space. However, the work focused on predicting phase stability at only one temperature (800 °C) for 5 different classes of phases (BCC, FCC, sigma, C14, and all others). In addition, the CALPHAD data were generated on a fairly coarse fixed grid (5 at.%) using commercial software (Thermo-Calc) and a commercial database (TCHEA5), making it impossible to release the full dataset and benchmark the ML surrogate model.

In this work, we aim to fill the gap of developing accurate and rapid ML surrogate models that directly predict all phase fractions across a wide temperature range. To provide a more comprehensive picture of the effectiveness of ML surrogate models for phase fraction, we employed an RF approach and a DNN to investigate the benefits and limitations of both methods. We show that both methods can provide good performance for interpolating existing CALPHAD samples (i.e., when training and testing samples have the same system order), and that traditional ML outperforms neural network models in most setups. This observation aligns with existing insights on tabular data, i.e., the type of data we see for CALPHAD prediction problems. As illustrated in some recent works40,41, DNNs rarely outperform traditional RF or gradient boosting methods for tabular data. For extrapolation scenarios (i.e., when training on lower-order systems and testing on higher-order systems), the neural network models, on the other hand, tend to produce smoother and better-behaved output. The detailed problem setups, prediction results, and evaluations are discussed in the following section.

More importantly, we believe the surrogate models will be an integral part of the overall accelerated phase fraction prediction pipeline, as illustrated in Fig. 1. The surrogate model is a critical part of the system for reusing and learning from existing queries, to facilitate fast future phase fraction queries in the extremely large design spaces. Essentially, we can leverage surrogate models, particularly DNN, to augment missing CALPHAD assessments or extrapolate to a more complex system. Training and evaluation data can be gathered on the fly or by pre-sampling the space. In our current setup, the latter is adopted. Specifically, we use a dataset containing 480 million samples generated using a custom CALPHAD database. As shown in our data efficiency experiments, we can achieve similar performance with only a fraction of the overall data for adapting a lower-order system model to a higher-order one (5-ary to 6-ary). Additionally, the pretrained ML models are made available for further model development and benchmarking of the present work.

Results

In this work, our core task is the accurate prediction of phase fractions. It is one of the most critical pieces of information for understanding materials behavior using CALPHAD computation results. Given this information, we can easily produce various physically meaningful plots, such as phase diagrams and property diagrams, which are often used for visualizing and reasoning about material behaviors. Compared to prior works that leverage ML models for HEA phase-related prediction that primarily focus on the existence of a phase, the multi-valued regression task poses significant challenges. There are limited existing works on the prediction of phase fractions or phase diagrams, and they are either related to other non-HEA applications42 or provide limited output27 as compared to all phase fractions.

To comprehensively explore the potential ML solutions, we employ both traditional ML techniques and deep learning techniques for phase fraction prediction tasks. On the side of traditional ML, we focus on the RF model, as initial experiments show that RF models consistently outperformed both logistic regressions43 and gradient boosting models44 on all of our tasks. As for the deep learning model, we found that a multilayer perceptron (MLP) provides good performance; because it is the only DNN architecture employed in this paper, we refer to it simply as the DNN. The more sophisticated architectures from existing works, e.g., periodic table CNN (Convolutional Neural Network)26, perform worse for the multi-output regression task and are not suitable for our intended goals. In addition to our goal of producing fast surrogate models to be used in place of CALPHAD (interpolation tasks), we also sought to explore the generalization capabilities of each ML model when presented with data beyond what was used during training, e.g., a higher-order system than the model was trained on (extrapolation tasks). In the following sections, we first describe in detail the training and testing configuration for both the interpolation and extrapolation tasks. Then we provide a comparative study between the RF and DNN models for the single-phase fraction prediction (scalar value regression), as well as the all-phase-fraction prediction (multivariate regression). These comparisons demonstrate clear advantages of our DNN, particularly in terms of extrapolation and generalization performance. To further understand the extrapolation behavior of the DNN, we look at more extreme extrapolation scenarios, in which we try to predict an entire binary phase diagram while withholding all binary relationships of these two elements during training. Moreover, we examine the model's data efficiency, i.e., whether much less data can yield similarly accurate models. Lastly, we evaluate the DNN model on complex compositions with a system of nine elements and demonstrate the DNN model's performance on more challenging phase diagrams and property diagrams.

Training and testing configurations

Here we will provide a breakdown of our training and testing scheme. We configured datasets to allow for training and testing on increasingly complex elemental systems, thus allowing us to test the interpolation and extrapolation performance of each model. To describe these datasets, first recall that the generated CALPHAD data consists of n-ary elemental systems for n ∈ {1, 2, 3, 4, 5, 6} where the elements used in each composition are restricted to the set {Cr, Hf, Mo, Nb, Ta, Ti, V, W, Zr}.

To simplify the definition of our datasets, we denote the full CALPHAD dataset by \({{{\mathcal{C}}}}\) consisting of N feature-target pairs \({\{({X}_{i},{Y}_{i})\}}_{i = 1}^{N}\). The features, denoted Xi, are 10-dimensional arrays containing the fractional amount of each of the 9 elements and the temperature (in kelvin) of the elemental composition. For each feature Xi, the target Yi contains the phase fractions of 18 different phases: BCC_B2, BCC_B2#2, BCC_B2#3, BCC_B2#4, BCT_A5, CBCC_A12, FCC_A1, HCP_A3, HCP_A3#2, HCP_ZN, Laves_C14, Laves_C14#2, Laves_C15, Laves_C15#2, Laves_C15#3, Laves_C36, Liquid, and Omega. These are 18 of the 20 phases in the data; we removed the independent phases that have fewer than 3 non-zero samples. First, we performed a train/test split of 80/20 to produce \({{{{\mathcal{C}}}}}^{train}\) and \({{{{\mathcal{C}}}}}^{test}\). Then we created up-to-n-ary datasets defined by \({{{{\mathcal{U}}}}}_{n}^{t}:=\{x\in {{{{\mathcal{C}}}}}^{t}:x\,\,{{\mbox{composed of up to}}}\,\,n\,\,{{\mbox{elements}}}\,\}\), for t ∈ {train, test}. Additionally, we defined n-ary datasets by \({{{{\mathcal{E}}}}}_{n}^{t}:=\{x\in {{{{\mathcal{C}}}}}^{t}:x\,\,{{\mbox{composed of exactly}}}\,\,n\,\,{{\mbox{elements}}}\,\}\), for t ∈ {train, test}. Note that for a given n ∈ {1, 2, 3, 4, 5, 6} and a model trained on \({{{{\mathcal{U}}}}}_{n}^{train}\), we can measure the interpolation capabilities of the model by testing on \({{{{\mathcal{E}}}}}_{m}^{test}\) for 1 ≤ m ≤ n and extrapolation capabilities of the model by testing on \({{{{\mathcal{E}}}}}_{m}^{test}\) for n < m ≤ 6.
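The up-to-n-ary and exactly-n-ary subsets defined above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the feature layout (nine element fractions followed by temperature), the helper names `system_order`, `up_to_n`, `exactly_n`, and `split_kind`, and the toy samples are all hypothetical and not taken from the authors' code.

```python
from typing import List, Sequence, Tuple

# Assumed feature layout: 9 element fractions in this order, then temperature.
ELEMENTS = ["Cr", "Hf", "Mo", "Nb", "Ta", "Ti", "V", "W", "Zr"]

Sample = Tuple[Sequence[float], Sequence[float]]  # (features X_i, targets Y_i)


def system_order(x: Sequence[float], tol: float = 1e-12) -> int:
    """Number of elements present (non-zero fraction) in a feature vector."""
    return sum(1 for frac in x[: len(ELEMENTS)] if frac > tol)


def up_to_n(data: List[Sample], n: int) -> List[Sample]:
    """U_n: samples composed of up to n elements."""
    return [(x, y) for x, y in data if system_order(x) <= n]


def exactly_n(data: List[Sample], n: int) -> List[Sample]:
    """E_n: samples composed of exactly n elements."""
    return [(x, y) for x, y in data if system_order(x) == n]


def split_kind(n_train: int, m_test: int) -> str:
    """Testing a U_n-trained model on E_m is interpolation iff m <= n."""
    return "interpolation" if m_test <= n_train else "extrapolation"


# Toy samples (made-up values): pure Cr, Cr-50W, and an equiatomic Cr-Mo-W alloy.
toy: List[Sample] = [
    ([1.0, 0, 0, 0, 0, 0, 0, 0.0, 0, 1000.0], [1.0]),
    ([0.5, 0, 0, 0, 0, 0, 0, 0.5, 0, 1500.0], [1.0]),
    ([1 / 3, 0, 1 / 3, 0, 0, 0, 0, 1 / 3, 0, 2000.0], [1.0]),
]

print(len(up_to_n(toy, 2)), len(exactly_n(toy, 3)), split_kind(2, 3))
```

With these helpers, each entry of an n-by-m evaluation grid corresponds to training on `up_to_n(train, n)` and testing on `exactly_n(test, m)`.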

For our training configurations, we considered two phase fraction prediction tasks with increasing complexity. Specifically, we performed one scalar-output regression task and one 18-output regression task: 1) overall BCC phase fraction prediction (regression of a scalar quantity) and 2) all-phase-fraction prediction (regression of 18 possible phases).

Note that the original target vectors contain the 18 phase fractions. For task = 1, we obtain the target by adding all BCC phase fractions. We measure the performance of the scalar regression task (overall BCC phase fraction prediction) by computing both the R-squared (R2)45 score and the mean absolute error (MAE)46 of the model on the test set. For task = 2, each target Y is the original 18-dimensional vector containing the 18 different phase fractions. Because a large share of the phase fractions across all 18 phases are zero or close to zero, R2 can be very sensitive and not particularly meaningful. Therefore, we rely only on MAE for evaluation.
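As a rough illustration of the two targets and the two metrics, the sketch below builds the task 1 target by summing the BCC entries of an 18-dimensional target vector and implements MAE and R2 directly from their textbook definitions. Several things here are assumptions rather than details from the text: that "all BCC phases" means the four `BCC_B2*` labels, that the columns follow the phase order listed earlier, and that the task 2 MAE is averaged over all samples and phases.

```python
from typing import List, Sequence

# Phase labels in the order listed in the text (column order is an assumption).
PHASES = [
    "BCC_B2", "BCC_B2#2", "BCC_B2#3", "BCC_B2#4", "BCT_A5", "CBCC_A12",
    "FCC_A1", "HCP_A3", "HCP_A3#2", "HCP_ZN", "Laves_C14", "Laves_C14#2",
    "Laves_C15", "Laves_C15#2", "Laves_C15#3", "Laves_C36", "Liquid", "Omega",
]
# Assumption: the "overall BCC" target sums only the BCC_B2* labels.
BCC_IDX = [i for i, name in enumerate(PHASES) if name.startswith("BCC_B2")]


def bcc_total(y: Sequence[float]) -> float:
    """Task 1 target: sum of all BCC phase fractions in one target vector."""
    return sum(y[i] for i in BCC_IDX)


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error between two equally long sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mae_all_phases(Y_true: List[Sequence[float]], Y_pred: List[Sequence[float]]) -> float:
    """Task 2 metric (assumed convention): MAE over every sample and phase."""
    flat_true = [v for row in Y_true for v in row]
    flat_pred = [v for row in Y_pred for v in row]
    return mae(flat_true, flat_pred)


# Made-up example: two BCC phases in a miscibility gap plus some liquid.
y = [0.0] * len(PHASES)
y[PHASES.index("BCC_B2")] = 0.6
y[PHASES.index("BCC_B2#2")] = 0.3
y[PHASES.index("Liquid")] = 0.1
print(bcc_total(y))
```

The sensitivity of R2 noted above is visible in `r2`: when almost all true values for a phase are zero, `ss_tot` is tiny, so small absolute errors produce large swings in the score.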

Now for each n ∈ {1, 2, 3, 4, 5, 6} and task ∈ {1, 2}, we train an RF and an MLP on \({{{{\mathcal{U}}}}}_{n}^{train}\) and denote these models by \(R{F}_{n}^{task}\) and \(DN{N}_{n}^{task}\), respectively. Note that for task = 2, the RF model is an ensemble of 18 individual RF regressors, each trained to predict the phase fraction of one of the 18 possible phases.

Following these two tasks, we use the 18 phase fraction prediction RF and MLP models from task 2 as surrogate models to predict the property diagrams and phase diagrams. Accuracy of the prediction is measured by both MAE and R2 for the prediction task.

Task 1: prediction of overall BCC phase fraction

To establish a baseline performance, we first develop a model for the simpler prediction task, i.e., overall BCC phase fraction prediction. The model takes the alloy composition and temperature as input and predicts a single output scalar value. One key motivation of the study is to better understand behaviors and assess the performance of different types of models. To this end, as described in the previous section, we developed extensive interpolation and extrapolation tasks. For each RFn and DNNn model trained using data up to n-ary, we study the model's interpolation performance on the test split of the dataset for a given specific order (1 to n). The extrapolation task, in which we examine how the model performs when presented with examples of higher-order systems (n + 1 to 6, where 6 is the highest-order system in our setup), is more challenging. The test performance of the different models on different test splits is summarized in a matrix format as heatmap visualizations (see Fig. 2). In this visualization, each heatmap row summarizes test performances from a single model, evaluated on test data of different system orders. The x axis indicates the order of the test system, whereas the y axis indicates the training data the model used, i.e., training with data up to n-ary.

In the first row of the matrices presented in Fig. 2, where a model trained on the unary dataset is tested on all n-ary test sets, the performance is understandably poor across the board (for both DNN and RF models), as we do not expect the model to extrapolate well from pure elements alone. However, the DNN models exhibit consistent extrapolation behavior, i.e., as we increase the predicted system order, the performance gets progressively worse. In contrast, the RF models show a less interpretable trend (the error goes up and down) that does not align well with intuition.

As we move to the models trained with higher-order datasets (second row and later in the heatmap matrices presented in Fig. 2), we can see a clear advantage of DNN models in generalization performance compared to RF models. The RF models perform well as long as we test up to the order of the system they are trained on. However, any extrapolation task appears to be challenging for them. The DNN models tend to generalize better as they are trained with more sophisticated data. This behavior aligns well with the fundamental domain understanding of the phase fraction problem.

Task 2: all-phase fractions prediction

In addition to the prediction of scalar phase fraction, the core contribution of this work involves the simultaneous prediction of all relevant phases of the CALPHAD computation. By analyzing the predictive performance utilizing a standard accuracy metric (i.e., MAE) as well as generating and comparing property/phase diagrams computed from the prediction, we demonstrate the potential limitations and trade-offs between RF models and the DNN models. We employed a similar experimental setup to the one described in the training and testing configurations above for the all-phase-fractions prediction tasks.

As illustrated in Fig. 3, we evaluate the interpolation as well as the extrapolation performance across models trained on different system orders. We observe a similar trend, apart from the unary models, with the RF models consistently producing accurate predictions when the training dataset has good coverage of the testing scenarios, but failing quickly when the presented test setup requires extrapolation capability. As we increase the order of the system, both methods generate less accurate predictions, and the DNN models appear to have a comparatively larger performance drop.

Prediction vs. ground truth comparison

Even though Figs. 2 and 3 provide a global view of different model performances across tasks, they provide limited sample-level understanding of how predicted values compare against the ground truth and any potential bias in the predictions. Here, we present a 2D histogram to provide an occlusion-free view (as compared to a standard scatterplot) of the relationship between predicted and ground truth values across the value ranges. In these plots, deviation from the diagonal indicates a mismatch between the predicted value and the ground truth. From Fig. 4 (LAVES_C15 phase prediction), the RF models, on average, produce more accurate predictions compared to the DNN counterpart. More interestingly, the DNN appears to have a bias in terms of the prediction error. As shown in the DNN error histogram plot, when errors occur, the predicted values have a stronger tendency to be near zero. This behavior can be influenced by the choice of loss function and training procedures. However, it is an interesting observation that warrants further investigation in future works. For a more detailed quantitative breakdown, we also show the per-phase prediction error in Table 1.

Property diagram comparison

Besides evaluating the raw predicted phase fraction in terms of aggregated error and value distribution, we explored the generated property diagrams. Fig. 5 shows model predictions of property diagrams for specific binary systems (Cr-50W and Cr-50Nb, in at.%). Here, we use the same models as in the previous evaluation setup (interpolation: trained on binary systems and tested on a binary system). The DNN and RF models produce comparable results; however, the DNN tends to generate continuous and smooth predictions, whereas the RF predictions show more abrupt jumps.

Besides the interpolation setup, we also evaluate how the property diagram behaves when we extrapolate from the binary model to a ternary system. As shown in Fig. 6, RF produces wildly incorrect predictions, and the prediction value tends to jump dramatically across the output range. On the other hand, the DNN model can much better extrapolate into the unknown system (unseen during training).

Phase diagram comparison

It is interesting to compare the phase diagrams reconstructed from the all-phase-fraction predictions (RF vs. DNN). As shown in Fig. 7, for the interpolation evaluation setup, the RF closely follows the training data, even capturing the aliasing artifact resulting from the sampling pattern. On the other hand, the DNN produces a much smoother diagram that mimics the true phase boundaries, while making small mistakes in specific areas, e.g., the lower left corner of the Cr-W phase diagram and the center of the Cr-Nb phase diagram.

In light of the relatively large gap regarding the extrapolation performance between the RF and DNN models, we designed more challenging extrapolation tasks to explore the potential limit of DNN. As a result, we include here a new type of extrapolation test, where we try to predict a binary phase diagram (Cr-W) that was totally removed from the training dataset. This can be viewed as a more extreme extrapolation test than simply going from binary to ternary, as the model was not trained using any of the element combinations that we tested. Several representative examples are presented in Fig. 8. The phase diagrams, in general, are not accurately predicted; however, the predicted phase diagrams seem to capture the global trend and major features of the ground truth information (i.e., topology of phase diagrams) in most of these examples (we include a more complete list in the Supplementary Materials).

Selection of DNN models for more complex materials

So far, our discussion has focused on the comparison between neural network models and traditional ML models (RF models). Based on the above-illustrated results, not only does the DNN model produce smoother and more continuous output, but it is also much better at extrapolation and generalization compared to the traditional ML model. So, for the rest of the section, we will dive deeper into the properties of the DNN models and additional experiments where additional elements (Hf, Ti, Zr) are introduced for more complex usage scenarios.

Inference speed comparison with CALPHAD

One important advantage of employing the ML model compared to CALPHAD is the inference speed. Compared to CALPHAD computation, the DNN models provide a significant inference speed boost, especially with GPU acceleration and batch processing. Moreover, since the DNN model's inference speed is primarily linked to the parameter count and architecture, there is no difference between a unary input and a high-order system input. The comparison result is shown in Fig. 9. The average per-sample inference time for the DNN model is 2.097 × 10⁻⁶ s (tested with a batch size of 256 on an NVIDIA P100 GPU), whereas the standard PyCalphad package running on a CPU is multiple orders of magnitude slower (from ~1000× to ~100,000× slower, depending on system size).
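To put the reported per-sample time into perspective, the back-of-the-envelope calculation below scales it to a dataset of 480 million samples, the size quoted for the full dataset. It is only arithmetic on the numbers stated in the text; applying a single slowdown factor to the whole dataset is a simplifying assumption, since the actual factor depends on system size.

```python
# Figures quoted in the text.
DNN_SECONDS_PER_SAMPLE = 2.097e-6   # batch size 256 on an NVIDIA P100
N_SAMPLES = 480_000_000             # size of the full CALPHAD dataset
SLOWDOWN_RANGE = (1_000, 100_000)   # reported PyCalphad-vs-DNN slowdown range

# DNN throughput and time to evaluate every sample once.
dnn_throughput = 1.0 / DNN_SECONDS_PER_SAMPLE   # samples per second
dnn_total_s = N_SAMPLES * DNN_SECONDS_PER_SAMPLE

# Assumption: a single slowdown factor applies uniformly to all samples.
calphad_total_s = tuple(dnn_total_s * factor for factor in SLOWDOWN_RANGE)

print(f"DNN throughput: {dnn_throughput:,.0f} samples/s")
print(f"DNN, full dataset: {dnn_total_s / 60:.1f} min")
for factor, seconds in zip(SLOWDOWN_RANGE, calphad_total_s):
    print(f"CALPHAD at {factor:,}x slower: {seconds / 86_400:,.1f} days")
```

Under these assumptions the DNN pass takes on the order of minutes, while the equivalent CPU-based calculation ranges from days to years of compute.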

Data efficiency for adapting DNN model to higher-order system

Based on the extrapolation comparison between DNN and RF models presented in the previous section, the DNN significantly outperforms RF in terms of generalization to higher-order systems without direct access to the data. In this section, we show that even a small number of higher-order samples can significantly boost its prediction accuracy. As illustrated in Fig. 10, only a small fraction of the data (i.e., 15%) is needed to achieve ~80% of the best performance. In other words, we can adapt a model trained on up to (n-1)-ary systems to an n-ary system with a limited number of samples. This could significantly reduce the total number of samples required. More importantly, in this example, we only illustrate the case in which higher-order samples are randomly selected for training. Methods such as active learning47 can be employed to further improve the data efficiency by acquiring the most critical training samples first.

Experiments with more complex material (9 elements)

As illustrated in Fig. 11, we show several complex phase diagrams involving these newly introduced elements, specifically, the Cr-Ti, Cr-Zr, and V-Ti pairs. As we can see in the plots, the CALPHAD ground truth is illustrated on the left, and the predicted phase diagram is shown on the right. The result demonstrates that the model can accurately reflect these complex interactions and structures among phases. In Fig. 12, we show a property diagram for the HfNbTiV equiatomic system.

Discussion

In this work, we have successfully built ML models for predicting the phase fraction of CALPHAD computation for the Cr-Hf-Mo-Nb-Ta-Ti-V-W-Zr system (the general method can be applied to any HEA system). We compared the performance of traditional ML with DNNs for the single regression task of predicting overall BCC phase fraction and the multi-regression task of predicting multiple phase fraction values. Our experimental results demonstrate that traditional ML models like RF can produce better-fitted models, but at the expense of rather poor generalization/extrapolation performance. On the other hand, the deep learning model, i.e., the multilayer perceptron, though producing a comparably larger error in the interpolation task, can often produce meaningful extrapolation beyond what the model is trained on. Specifically, our results show that the proposed method can reliably predict phase fractions for systems where training data cover all elemental combinations. The model will struggle when predicting the phase equilibrium of elemental interactions that are not in the training data. The current model can be extended to predict the composition of individual phases in addition to the phase fraction by predicting additional outputs; however, a significant increase in output dimension can pose a challenge for training.

These observations indicate not only an interesting trade-off between these two fundamentally different classes of methods but also reveal the great potential of DNNs. Since our initial experiments only explored relatively simple network architecture, we believe further investigation and experimentation with modern architectures and more intelligent use of training data may yield better interpolation as well as extrapolation performance. Additionally, the limitation of generalizing to elemental combinations that were not part of the training data also calls for the development of methods that can leverage prior materials science knowledge for more meaningful extrapolation. Pretraining and self-supervised representation learning can be utilized to obtain prior knowledge of the elements, and recent developments in deep learning architecture, such as transformers, can also be leveraged to improve prediction accuracy and generalization performance. Combining these improvements (from interpolation to extrapolation) with the intrinsic speedup offered by ML surrogate models will make robust and rapid alloy design starting from a vast design space (i.e., composition and temperature) a reality.

Methods

Generating training data with CALPHAD

A CALPHAD database containing the elements Cr-Hf-Mo-Nb-Ta-Ti-V-W-Zr was constructed by combining existing CALPHAD assessments for all binary (Cr-Hf48, Cr-Mo49, Cr-Nb50, Cr-Ta51,52, Cr-Ti53, Cr-V53, Cr-W49,52, Cr-Zr54, Hf-Mo55, Hf-Nb56, Hf-Ta57, Hf-Ti58, Hf-V59, Hf-W55, Hf-Zr60, Mo-Nb61, Mo-Ta62, Mo-Ti54,63, Mo-V64, Mo-W62, Mo-Zr65, Nb-Ta61, Nb-Ti66, Nb-V67, Nb-W68, Nb-Zr69, Ta-Ti54, Ta-V70, Ta-W62, Ta-Zr71, Ti-V53, Ti-W72, Ti-Zr73, V-W74, V-Zr75, W-Zr76) and select ternary (Cr-Mo-W49, Cr-Ta-W52, Cr-Ti-V53,63, Mo-Nb-Ta61) systems.

The present study focuses on providing a CALPHAD surrogate pipeline. However, the goal is not to develop a specific high-accuracy CALPHAD database. As previously mentioned, extrapolating low-order systems into higher-order systems (i.e., CALPHAD predictions) works because the most dominant energetic interactions when mixing elements come from binary (pairwise) and ternary (three-way) interactions. The database includes all the necessary binary systems but would benefit from including more ternary interactions (especially for the Laves phase) to improve accuracy in the initial CALPHAD predictions.

We used PyCalphad77 to generate training data from our CALPHAD database. Data were generated on a dense composition grid including the unary systems and every 2 at.% (represented in the data as mole fractions, X, for each element) for all combinations of binary (36 combinations), ternary (84 combinations), quaternary (126 combinations), and quinary (126 combinations) systems, and for one senary system (Cr-Mo-Nb-Ta-V-W). At each composition, we computed equilibrium phase fractions (NP) and molar Gibbs energies (GM) every 5 K between 400 K and 4000 K at atmospheric pressure (101 325 Pa). Before training and testing, samples in which the molar Gibbs energy is NaN are removed, as this indicates that PyCalphad failed to compute equilibrium. We summarize the number of total runs, successful runs, and failure rate of PyCalphad computation in Table 2.
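The system counts quoted above follow directly from choosing k of the nine elements, which the short sketch below verifies by enumeration. It also lists the temperature grid, assuming that both endpoints (400 K and 4000 K) are included; that inclusion, the alphabetical ordering of elements, and the variable names are assumptions for illustration. The sketch does not attempt to reproduce the composition grid itself.

```python
from itertools import combinations

ELEMENTS = ["Cr", "Hf", "Mo", "Nb", "Ta", "Ti", "V", "W", "Zr"]
ORDER_NAMES = {1: "unary", 2: "binary", 3: "ternary", 4: "quaternary", 5: "quinary"}

# Every k-element subsystem of the nine-element space, for k = 1..5.
systems = {k: list(combinations(ELEMENTS, k)) for k in ORDER_NAMES}

# Only one senary system is included in the dataset.
senary = [("Cr", "Mo", "Nb", "Ta", "V", "W")]

# Temperature grid: every 5 K from 400 K to 4000 K (endpoints assumed inclusive).
temperatures = list(range(400, 4001, 5))

for k, name in ORDER_NAMES.items():
    print(f"{name}: {len(systems[k])} systems")
print(f"senary: {len(senary)} system")
print(f"temperatures per composition: {len(temperatures)}")
```

Each tuple in `systems[k]` identifies one subsystem whose composition grid would then be swept at every temperature in `temperatures`.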

Note that if any single CALPHAD equilibrium calculation produces a miscibility gap, the phase names are relabeled to distinguish the multiple versions of those phases (with different compositions) that can be stable at the same time. For example, some composition/temperature combinations give up to 4 BCC_B2 phases in equilibrium simultaneously. The second BCC_B2 phase detected is relabeled BCC_B2#2, the third BCC_B2#3, and so on. The labels are assigned arbitrarily as the phases in a miscibility gap are indistinguishable other than having different compositions and phase fractions. Also, a CALPHAD partitioning model is used for the A2/B2 phase to effectively describe the order-disorder transitions in the BCC phase (e.g., Cr-Ta, Mo-Ta, Mo-Ti, Ta-Ti, and Ta-W binary systems). As both disordered and ordered versions of the BCC phase are modeled with the same model, the BCC_B2 label is kept for both states (an additional calculation would be necessary to distinguish them, but this is not the goal of this study).
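The relabeling convention described above can be sketched as a small helper that appends `#2`, `#3`, and so on to repeated phase names within a single equilibrium result. The function name `relabel_phases` and the list-of-names input format are hypothetical; this is not the code used to build the dataset, and the numbering simply follows the order in which duplicates appear, consistent with the labels being arbitrary.

```python
from collections import Counter
from typing import List


def relabel_phases(phases: List[str]) -> List[str]:
    """Append '#k' to the k-th repeat (k >= 2) of a phase name.

    `phases` is assumed to hold the stable phase names of ONE equilibrium
    calculation, in whatever order the solver returned them.
    """
    seen: Counter = Counter()
    relabeled = []
    for name in phases:
        seen[name] += 1
        count = seen[name]
        relabeled.append(name if count == 1 else f"{name}#{count}")
    return relabeled


# Made-up result with a three-way BCC miscibility gap plus liquid.
print(relabel_phases(["BCC_B2", "Liquid", "BCC_B2", "BCC_B2"]))
```

Because the assignment depends only on ordering, the same physical phase may receive different `#k` labels at neighboring compositions or temperatures, which is one reason the task 1 target sums over all BCC labels.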

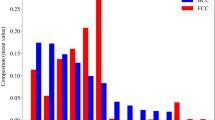

Before training a model, it is important to understand the data distribution. In our case, the output phases have drastically different distributions. Fig. 13 shows the number of non-zero phase fraction entries for each phase in the dataset; a log scale is used because some phases have only a few non-zero entries while others have millions. For training, we use 18 of the 20 phases, excluding the phases that contain fewer than three non-zero samples.
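The phase selection step can be expressed in a few lines of NumPy. The sketch below uses a small made-up array and hypothetical phase names; only the threshold of three non-zero samples comes from the text.

```python
import numpy as np


def select_phases(fractions, phase_names, min_nonzero=3):
    """Keep only phases with at least `min_nonzero` non-zero fraction entries.

    fractions: array of shape (n_samples, n_phases) with phase fractions.
    Returns the kept names, the reduced array, and the per-phase counts.
    """
    nonzero_counts = np.count_nonzero(fractions > 0, axis=0)
    keep = nonzero_counts >= min_nonzero
    kept_names = [name for name, k in zip(phase_names, keep) if k]
    return kept_names, fractions[:, keep], nonzero_counts


# Toy data (made up): phase "B" appears in only two samples and is dropped.
toy = np.array([
    [1.0, 0.0, 0.0],
    [0.5, 0.5, 0.0],
    [0.2, 0.0, 0.8],
    [0.3, 0.2, 0.5],
    [0.9, 0.0, 0.1],
])
kept_names, kept_fractions, nonzero_counts = select_phases(toy, ["A", "B", "C"])
```

The returned counts are also what would be plotted on a log scale to compare rare and common phases.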

Traditional ML models

We first considered traditional ML techniques to address our goal. Our initial experiments utilized three such techniques: logistic regression, RF, and gradient boosting. However, we ultimately found that RF consistently outperformed both logistic regression and gradient boosting models on all of our tasks. As such, all presented traditional ML results in this work use RF. We used sklearn.ensemble.RandomForestClassifier and sklearn.ensemble.RandomForestRegressor from scikit-learn78 for training and testing RF for classification and regression, respectively.
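As a rough illustration of how the two scikit-learn estimators named above fit this kind of task, the sketch below trains a regressor on phase fractions and a classifier on phase presence. The data are synthetic, and the three-component system, the two output phases, and the hyperparameters are assumptions made for the example; they do not reflect the settings used in this work.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor

rng = np.random.default_rng(0)
n_samples = 500

# Inputs: mole fractions of three hypothetical elements plus temperature (K).
composition = rng.dirichlet(np.ones(3), size=n_samples)
temperature = rng.uniform(400.0, 4000.0, size=(n_samples, 1))
X = np.hstack([composition, temperature])

# Synthetic targets: two phase fractions that sum to one.
frac_a = np.clip(2.0 * composition[:, 0] - 0.5, 0.0, 1.0)
y_fraction = np.column_stack([frac_a, 1.0 - frac_a])
y_present = (y_fraction > 0).astype(int)  # 1 if the phase is present

# Regression predicts phase fractions; classification predicts presence.
regressor = RandomForestRegressor(n_estimators=20, random_state=0)
regressor.fit(X, y_fraction)
classifier = RandomForestClassifier(n_estimators=20, random_state=0)
classifier.fit(X, y_present)

pred_fraction = regressor.predict(X[:5])
pred_present = classifier.predict(X[:5])
```

Both estimators accept a two-dimensional target, so a single forest can cover all phases at once.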

To assess model performance with feature information beyond temperature and elemental composition, we used the Magpie library79 to produce supplemental features for use with RF. The aim was to explore whether additional features during training yield models that are more accurate on in-distribution samples and more generalizable to out-of-distribution samples. Somewhat surprisingly, RF trained with these additional Magpie features did not provide noticeable performance gains on our tasks over the CALPHAD composition and temperature features alone. As such, and to simplify the presentation, we present results only for models trained on the original dataset features (i.e., without Magpie features). By providing the composition of each data sample to Magpie, we were able to produce an additional 271 features. These include the composition itself, expressed as the fraction of each element in the sample for all elements in the periodic table, as well as statistics (e.g., mean, max) of elemental properties such as atomic weight, melting temperature, covalent radius, electronegativity, and Mendeleev number.
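For readers unfamiliar with this kind of descriptor, the sketch below computes composition-weighted statistics of one elemental property in the spirit of Magpie. It does not call the Magpie library and does not reproduce its 271 features; the function name is hypothetical and the atomic weights are rounded standard values.

```python
# Rounded standard atomic weights (g/mol) for a few of the elements studied.
ATOMIC_WEIGHT = {
    "Cr": 51.996, "Mo": 95.95, "Nb": 92.906,
    "Ta": 180.948, "V": 50.942, "W": 183.84,
}


def property_statistics(composition, table):
    """Composition-weighted statistics of an elemental property.

    composition: dict mapping element -> mole fraction (should sum to 1).
    Elements with zero fraction are ignored for min, max, and range.
    """
    present = {el: x for el, x in composition.items() if x > 0}
    values = [table[el] for el in present]
    mean = sum(x * table[el] for el, x in present.items())
    avg_dev = sum(x * abs(table[el] - mean) for el, x in present.items())
    return {
        "mean": mean,
        "avg_dev": avg_dev,
        "min": min(values),
        "max": max(values),
        "range": max(values) - min(values),
    }


stats = property_statistics({"Cr": 0.5, "Mo": 0.5, "W": 0.0}, ATOMIC_WEIGHT)
```

Every such statistic is a deterministic function of the composition, which is one plausible reason why it adds little for a model that already receives the full composition vector.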

Deep neural network

DNNs have established state-of-the-art performance in a wide range of benchmarks and applications80,81. One key advantage of neural networks over traditional approaches is their capability to learn from large amounts of data without explicit feature engineering. For a given type of data (e.g., image, graph, time series), neural network architectures are often tailored to leverage the unique structure and properties of that data. Such prior knowledge, often referred to as “inductive bias”, helps the ML model access knowledge beyond the raw dataset itself. For example, the CNN82 exploits the fact that images reside on a 2D grid. However, for simple inputs, or inputs for which we do not have an explicit understanding of suitable assumptions, a multilayer perceptron (MLP) can be used to obtain reasonable performance. In this work, we implemented several variations of the MLP with different parameter counts to explore the trade-off between model expressiveness and the potential to overfit, including (1) a standard (3-layer) MLP, (2) a deeper MLP with skip-connections83, and (3) an MLP with Fourier features84. Although the latter variations incorporate more recent advances, the overall performance of the MLP variations is comparable at similar parameter counts. The presented results use the standard MLP setup, as it represents a general baseline performance for DNNs.
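A minimal NumPy sketch of the standard MLP variant and of a Fourier feature mapping is given below to make the parameter-count comparison concrete. The layer widths, the initialization, the number of Fourier frequencies, and the choice of 10 inputs (nine mole fractions plus temperature) and 18 outputs are assumptions for illustration; the architectures actually trained are not specified at this level of detail in the text.

```python
import numpy as np


def init_mlp(sizes, rng):
    """Create (weight, bias) pairs for consecutive layer sizes."""
    return [
        (rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out)),
         np.zeros(fan_out))
        for fan_in, fan_out in zip(sizes[:-1], sizes[1:])
    ]


def mlp_forward(params, x):
    """ReLU on hidden layers, linear output layer."""
    h = x
    for W, b in params[:-1]:
        h = np.maximum(h @ W + b, 0.0)
    W, b = params[-1]
    return h @ W + b


def fourier_features(x, B):
    """Map x to [cos(2*pi*x B^T), sin(2*pi*x B^T)] with a fixed matrix B."""
    projection = 2.0 * np.pi * x @ B.T
    return np.concatenate([np.cos(projection), np.sin(projection)], axis=-1)


def n_parameters(params):
    return sum(W.size + b.size for W, b in params)


rng = np.random.default_rng(0)
n_inputs, n_outputs, width = 10, 18, 64  # hypothetical sizes

mlp = init_mlp([n_inputs, width, width, n_outputs], rng)  # three linear layers
x = rng.uniform(size=(4, n_inputs))
y = mlp_forward(mlp, x)

B = rng.normal(size=(16, n_inputs))  # 16 random frequencies (assumption)
x_ff = fourier_features(x, B)
mlp_ff = init_mlp([x_ff.shape[1], width, width, n_outputs], rng)
y_ff = mlp_forward(mlp_ff, x_ff)
```

Counting parameters with `n_parameters` is one simple way to match model sizes across variants before comparing their accuracy.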

Data availability

All data generated or analyzed during this study will be included in this published article (and its supplementary information files) upon acceptance.

Code availability

The code to reproduce RF and DNN models will be available upon request.

References

Ye, Y., Wang, Q., Lu, J., Liu, C. & Yang, Y. High-entropy alloy: challenges and prospects. Mater. Today 19, 349–362 (2016).

Li, W. et al. Mechanical behavior of high-entropy alloys. Prog. Mater. Sci. 118, 100777 (2021).

Ma, E. & Wu, X. Tailoring heterogeneities in high-entropy alloys to promote strength–ductility synergy. Nat. Commun. 10, 5623 (2019).

Shi, P. et al. Enhanced strength–ductility synergy in ultrafine-grained eutectic high-entropy alloys by inheriting microstructural lamellae. Nat. Commun. 10, 489 (2019).

Miracle, D. & Senkov, O. A critical review of high entropy alloys and related concepts. Acta Mater. 122, 448–511 (2017).

Senkov, O. N., Miracle, D. B., Chaput, K. J. & Couzinie, J.-P. Development and exploration of refractory high entropy alloys-a review. J. Mater. Res. 33, 3092–3128 (2018).

Elder, K. L. et al. Computational discovery of ultra-strong, stable, and lightweight refractory multi-principal element alloys. part i: design principles and rapid down-selection. npj Comput. Mater. 9, 84 (2023).

Elder, K. L. et al. Computational discovery of ultra-strong, stable, and lightweight refractory multi-principal element alloys. part ii: comprehensive ternary design and validation. npj Comput. Mater. 9, 88 (2023).

Senkov, O., Wilks, G., Scott, J. & Miracle, D. Mechanical properties of nb25mo25ta25w25 and v20nb20mo20ta20w20 refractory high entropy alloys. Intermetallics 19, 698–706 (2011).

Senkov, O., Wilks, G., Miracle, D., Chuang, C. & Liaw, P. Refractory high-entropy alloys. Intermetallics 18, 1758–1765 (2010).

Tseng, K.-K. et al. Effects of mo, nb, ta, ti, and zr on mechanical properties of equiatomic hf-mo-nb-ta-ti-zr alloys. Entropy 21, 15 (2018).

Feng, R. et al. Superior high-temperature strength in a supersaturated refractory high-entropy alloy. Adv. Mater. 33, 2102401 (2021).

Shittu, J. et al. Microstructural, phase, and thermophysical stability of crmonbv refractory multi-principal element alloys. J. Alloy. Compd. 977, 173349 (2024).

Zhu, S. et al. Probing phase stability in crmonbv using cluster expansion method, calphad calculations and experiments. Acta Mater. 255, 119062 (2023).

Saunders, N. & Miodownik, A. P. CALPHAD (calculation of phase diagrams): a comprehensive guide (Elsevier, 1998).

Yang, S., Lu, J., Xing, F., Zhang, L. & Zhong, Y. Revisit the vec rule in high entropy alloys (heas) with high-throughput calphad approach and its applications for material design-a case study with al–co–cr–fe–ni system. Acta Mater. 192, 11–19 (2020).

Eliseeva, O. et al. Functionally graded materials through robotics-inspired path planning. Mater. Des. 182, 107975 (2019).

Maresca, F. & Curtin, W. A. Mechanistic origin of high strength in refractory bcc high entropy alloys up to 1900k. Acta Mater. 182, 235–249 (2020).

Vazquez, G. et al. Efficient machine-learning model for fast assessment of elastic properties of high-entropy alloys. Acta Mater. 232, 117924 (2022).

Hu, Y.-J., Sundar, A., Ogata, S. & Qi, L. Screening of generalized stacking fault energies, surface energies and intrinsic ductile potency of refractory multicomponent alloys. Acta Mater. 210, 116800 (2021).

Pillai, R., Galiullin, T., Chyrkin, A. & Quadakkers, W. J. Methods to increase computational efficiency of calphad-based thermodynamic and kinetic models employed in describing high temperature material degradation. Calphad 53, 62–71 (2016).

Roos, W. A., Bogaers, A. E. & Zietsman, J. H. Geometric acceleration of complex chemical equilibrium calculations-performance in two-to five-component systems. Calphad 82, 102584 (2023).

Wen, C. et al. Machine learning assisted design of high entropy alloys with desired property. Acta Mater. 170, 109–117 (2019).

Klimenko, D., Stepanov, N., Li, J., Fang, Q. & Zherebtsov, S. Machine learning-based strength prediction for refractory high-entropy alloys of the al-cr-nb-ti-v-zr system. Materials 14, 7213 (2021).

Wang, J., Kwon, H., Kim, H. S. & Lee, B.-J. A neural network model for high entropy alloy design. npj Comput. Mater. 9, 60 (2023).

Zheng, X., Zheng, P. & Zhang, R.-Z. Machine learning material properties from the periodic table using convolutional neural networks. Chem. Sci. 9, 8426–8432 (2018).

Li, Y. & Guo, W. Machine-learning model for predicting phase formations of high-entropy alloys. Phys. Rev. Mater. 3, 095005 (2019).

Bartel, C. J. et al. Physical descriptor for the Gibbs energy of inorganic crystalline solids and temperature-dependent materials chemistry. Nat. Commun. 9, 4168 (2018).

Ward, L. et al. Including crystal structure attributes in machine learning models of formation energies via Voronoi tessellations. Phys. Rev. B 96, 024104 (2017).

Krajewski, A. M., Siegel, J. W., Xu, J. & Liu, Z.-K. Extensible structure-informed prediction of formation energy with improved accuracy and usability employing neural networks. Comput. Mater. Sci. 208, 111254 (2022).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Cortes, C. & Vapnik, V. Support-vector networks. Mach. Learn. 20, 273–297 (1995).

Friedman, J. H. Greedy function approximation: a gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001).

Zeng, Y., Man, M., Bai, K. & Zhang, Y.-W. Explore the full temperature-composition space of 20 quinary ccas for fcc and bcc single-phases by an iterative machine learning + calphad method. Acta Mater. 231, 117865 (2022).

Zeng, Y. et al. Machine learning-based inverse design for single-phase high entropy alloys. APL Mater. 10, 101104 (2022).

Lee, K., Ayyasamy, M. V., Delsa, P., Hartnett, T. Q. & Balachandran, P. V. Phase classification of multi-principal element alloys via interpretable machine learning. npj Comput. Mater. 8, 1–12 (2022).

Roy, A. & Balasubramanian, G. Predictive descriptors in machine learning and data-enabled explorations of high-entropy alloys. Comput. Mater. Sci. 193, 110381 (2021).

Deffrennes, G., Terayama, K., Abe, T. & Tamura, R. A machine learning–based classification approach for phase diagram prediction. Mater. Des. 215, 110497 (2022).

Vazquez, G., Chakravarty, S., Gurrola, R. & Arróyave, R. A deep neural network regressor for phase constitution estimation in the high entropy alloy system al-co-cr-fe-mn-nb-ni. npj Comput. Mater. 9, 68 (2023).

Schäfl, B., Gruber, L., Bitto-Nemling, A. & Hochreiter, S. Hopular: modern hopfield networks for tabular data. arXiv https://arxiv.org/abs/2206.00664 (2022).

Somepalli, G., Goldblum, M., Schwarzschild, A., Bruss, C. B. & Goldstein, T. Saint: improved neural networks for tabular data via row attention and contrastive pre-training. arXiv https://arxiv.org/abs/2106.01342 (2021).

Tsutsui, K. & Moriguchi, K. A computational experiment on deducing phase diagrams from spatial thermodynamic data using machine learning techniques. Calphad 74, 102303 (2021).

Wright, R. E. Logistic regression. In L. G. Grimm & P. R. Yarnold (Eds.), Reading and understanding multivariate statistics. 217–244, American Psychological Association. (1995).

Friedman, J. H. Stochastic gradient boosting. Comput. Stat. Data Anal. 38, 367–378 (2002).

Steel, R. G. D., Torrie, J. H. et al. Principles and procedures of statistics. Biom. Z. 4, 207–208 (1960).

Willmott, C. J. & Matsuura, K. Advantages of the mean absolute error (mae) over the root mean square error (rmse) in assessing average model performance. Clim. Res. 30, 79–82 (2005).

Rao, Z. et al. Machine learning–enabled high-entropy alloy discovery. Science 378, 78–85 (2022).

Hasek, B. Thermodynamic modeling and first-principles calculations of the Cr-Hf-Y ternary system. M.S., Pennsylvania State University, State College, PA (2010).

Frisk, K. & Gustafson, P. An assessment of the cr-mo-w system. Calphad 12, 247–254 (1988).

Neto, J. G. C., Fries, S. G., Lukas, H. L., Gama, S. & Effenberg, G. Thermodynamic optimisation of the nb-cr system. Calphad 17, 219–228 (1993).

Dupin, N. & Ansara, I. Thermodynamic assessment of the cr-ta system. J. Phase Equilib 14, 451–456 (1993).

Kaufman, L., Turchi, P., Huang, W. & Liu, Z.-K. Thermodynamics of the cr-ta-w system by combining the ab initio and calphad methods. Calphad 25, 419–433 (2001).

Ghosh, G. Thermodynamic and kinetic modeling of the cr-ti-v system. J. Phase Equilib. 23, 310 (2002).

Lukas, H. Cost 507 thermochemical database for light metal alloys. EUR 18499 EN 2 (1998).

Shao, G. Thermodynamic assessment of the hf–mo and hf–w systems. Intermetallics 10, 429–434 (2002).

Ghosh, G., Van de Walle, A., Asta, M. & Olson, G. Phase stability of the hf-nb system: from first-principles to calphad. Calphad 26, 491–511 (2002).

Guillermet, A. F. Gibbs energy modelling of the phase diagram and thermochemical properties in the hf-ta system. Int. J. Mater. Res. 86, 382–387 (1995).

Bittermann, H. & Rogl, P. Critical assessment and thermodynamic calculation of the ternary system boron-hafnium-titanium (b-hf-ti). J. Phase Equilib. 18, 24–47 (1997).

Servant, C. Thermodynamic assessments of the phase diagrams of the hafnium-vanadium and vanadium-zirconium systems. J. Phase Equilib. Diffus. 26, 39–49 (2005).

Bittermann, H. & Rogl, P. Critical assessment and thermodynamic calculation of the ternary system c-hf-zr (carbon-zirconium-hafnium). J. Phase Equilib. 23, 218 (2002).

Xiong, W. et al. Thermodynamic assessment of the mo–nb–ta system. Calphad 28, 133–140 (2004).

Turchi, P. et al. Application of ab initio and calphad thermodynamics to mo-ta-w alloys. Phys. Rev. B 71, 094206 (2005).

Hu, B., Wang, J., Wang, C., Du, Y. & Zhu, J. Calphad-type thermodynamic assessment of the ti–mo–cr–v quaternary system. Calphad 55, 103–112 (2016).

Bratberg, J. & Frisk, K. A thermodynamic analysis of the mo-v and mo-vc system. Calphad 26, 459–476 (2002).

Perez, R. J. & Sundman, B. Thermodynamic assessment of the mo–zr binary phase diagram. Calphad 27, 253–262 (2003).

Zhang, Y., Liu, H. & Jin, Z. Thermodynamic assessment of the nb-ti system. Calphad 25, 305–317 (2001).

Kumar, K. H., Wollants, P. & Delaey, L. Thermodynamic calculation of nb-ti-v phase diagram. Calphad 18, 71–79 (1994).

Huang, W. & Selleby, M. Thermodynamic assessment of the nb–w–c system. Int. J. Mater. Res. 88, 55–62 (2021).

Fernandez, G. et al. Thermodynamic analysis of the stable phases in the zr-nb system and calculation of the phase diagram. Z. Metallkd. J. 82, 478–487 (1991).

Danon, C. & Servant, C. A thermodynamic evaluation of the ta–v system. J. Alloy. Compd. 366, 191–200 (2004).

Guillermet, A. F. Phase diagram and thermochemical properties of the zr-ta system. an assessment based on gibbs energy modelling. J. Alloy. Compd. 226, 174–184 (1995).

Jonsson, S. Reevaluation of the ti-w system and prediction of the ti-wn phase diagram. Int. J. Mater. Res. 87, 784–787 (1996).

Kumar, K. H., Wollants, P. & Delaey, L. Thermodynamic assessment of the ti–zr system and calculation of the nb–ti–zr phase diagram. J. Alloy. Compd. 206, 121–127 (1994).

Bratberg, J. Investigation and modification of carbide sub-systems in the multicomponent fe–c–co–cr–mo–si–v–w system. Int. J. Mater. Res. 96, 335–344 (2022).

Cui, J., Guo, C., Zou, L., Li, C. & Du, Z. Experimental investigation and thermodynamic modeling of the ti–v–zr system. Calphad 55, 189–198 (2016).

Zhou, P., Peng, Y., Du, Y., Wang, S. & Wen, G. Thermodynamic modeling of the c–w–zr system. Int. J. Ref. Met. Hard Mater. 50, 274–281 (2015).

Otis, R. & Liu, Z.-K. pycalphad: calphad-based computational thermodynamics in python. J. Open Res. Softw. 5, 1 (2017).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Ward, L., Agrawal, A., Choudhary, A. & Wolverton, C. A general-purpose machine learning framework for predicting properties of inorganic materials. npj Comput. Mater. 2, 16028 (2016).

Deng, J. et al. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, 248–255 (IEEE, 2009).

Krizhevsky, A. et al. Learning multiple layers of features from tiny images. https://api.semanticscholar.org/CorpusID:18268744 (2009).

Sermanet, P., Chintala, S. & LeCun, Y. Convolutional neural networks applied to house numbers digit classification. In Proceedings of the 21st international conference on pattern recognition (ICPR2012), 3288–3291 (IEEE, 2012).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778 (2016).

Tancik, M. et al. Fourier features let networks learn high frequency functions in low dimensional domains. Adv. Neural Inf. Process. Syst. 33, 7537–7547 (2020).

Acknowledgements

This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. This work was supported by the Laboratory Directed Research and Development (LDRD) program under project tracking code 22-SI-007, and has been reviewed and released under LLNL-JRNL-848291.

Author information

Contributions

S. Liu: conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); methodology (equal); visualization (equal); writing/original draft preparation (lead). B. Bocklund and J. Diffenderfer: conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); methodology (equal); visualization (equal); writing/original draft preparation (equal). S. Chaganti: methodology (equal); visualization (equal). B. Kailkhura: conceptualization (equal); formal analysis (supporting); investigation (equal); methodology (equal); supervision (supporting); visualization (equal); writing/original draft preparation (supporting). S. McCall: funding acquisition (supporting); project administration (equal); writing/original draft preparation (supporting). B. Gallagher: conceptualization (equal); formal analysis (equal); funding acquisition (supporting); investigation (equal); methodology (equal); supervision (lead); visualization (equal); project administration (equal); writing/original draft preparation (supporting). A. Perron: conceptualization (equal); formal analysis (equal); funding acquisition (supporting); investigation (equal); methodology (equal); supervision (supporting); visualization (equal); project administration (equal); writing/original draft preparation (equal). J. McKeown: funding acquisition (lead); project administration (equal); writing/original draft preparation (supporting).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, S., Bocklund, B., Diffenderfer, J. et al. A comparative study of predicting high entropy alloy phase fractions with traditional machine learning and deep neural networks. npj Comput Mater 10, 172 (2024). https://doi.org/10.1038/s41524-024-01335-1
