Abstract

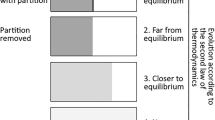

Woolhouse¹ remarks that the work of Shannon and Brillouin showed the fundamental relationship between information, defined as $I = -\sum_i P_i \log P_i$ (where $0 \leqslant P_i \leqslant 1$, $\sum_i P_i = 1$, and $P_i$ is the relative probability of the $i$th symbol generated by a source), and entropy, defined in statistical terms as $S = -K \sum_i P_i \log P_i$ (where $\sum_i P_i = 1$ and $P_i$ is, in this case, the probability of an idealized physical system being in state $i$ of $n$ possible equivalent states, or complexions). Woolhouse argues that it is the unwarranted extrapolation of this relationship to biological systems that leads to erroneous conclusions. He points to Brillouin's own warning that information theory ignores the value or meaning of the information it quantifies. Yet despite these warnings, the confusion is already present in Brillouin's work, even before its extension to biology.
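The two definitions above share the same functional form and differ only by the constant $K$ (and the choice of logarithm base). The short Python sketch below computes both for a discrete distribution to make that relationship concrete. It is illustrative only: taking $K$ as the Boltzmann constant and using natural logarithms for $S$ are assumptions, since the letter leaves $K$ unspecified, and the function names are hypothetical.

```python
import math

# Illustrative choice for K: the Boltzmann constant in J/K (exact in the 2019 SI).
# The letter does not fix K; this value is an assumption for the example.
K_B = 1.380649e-23

def shannon_information(probs, base=math.e):
    """I = -sum_i P_i log P_i; terms with P_i = 0 contribute nothing."""
    if any(p < 0 or p > 1 for p in probs):
        raise ValueError("each P_i must satisfy 0 <= P_i <= 1")
    if not math.isclose(sum(probs), 1.0, rel_tol=1e-9):
        raise ValueError("the P_i must sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)

def statistical_entropy(probs, k=K_B):
    """S = -K sum_i P_i ln P_i, i.e. K times the information measured in nats."""
    return k * shannon_information(probs)

# n equally probable complexions: I = log n, and S = K ln n.
uniform = [0.25] * 4
print(shannon_information(uniform, base=2))  # information in bits
print(statistical_entropy(uniform))          # entropy in J/K under the assumed K
```

For $n$ equally probable states both expressions reduce to a logarithm of $n$, so $S = K \ln n$; the numerical identity of the formulas says nothing about the value or meaning of the information, which is the point at issue in the letter.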


References

1. Woolhouse, H. W., Nature, 216, 200 (1967).

2. Brillouin, L., Science and Information Theory, second ed. (Academic Press, New York, 1962).


About this article

Cite this article

WILSON, J. Entropy, not Negentropy. Nature 219, 535–536 (1968). https://doi.org/10.1038/219535a0


DOI: https://doi.org/10.1038/219535a0

© Springer Nature Limited