Abstract

A fundamental difficulty faced by groups of agents that work together is how to efficiently coordinate their efforts. This coordination problem is both ubiquitous and challenging, especially in environments where autonomous agents are motivated by personal goals.

Previous AI research on coordination has developed techniques that allow agents to act efficiently from the outset based on common built-in knowledge or to learn to act efficiently when the agents are not autonomous. The research described in this paper builds on those efforts by developing distributed learning techniques that improve coordination among autonomous agents.

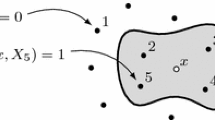

The techniques presented in this work apply to agents who are heterogeneous, who do not have complete built-in common knowledge, and who cannot coordinate solely by observation. An agent learns from her experiences so that her future behavior more accurately reflects what works (or does not work) in practice. Each agent stores past successes (both planned and unplanned) in her individual casebase. Entries in a casebase are represented as coordinated procedures and are organized around learned expectations about other agents.
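
As a rough illustration of the casebase idea described above, the following minimal sketch shows one way an agent might store past successes as coordinated procedures and retrieve them by expectations about other agents. It is not the authors' implementation: the names `Case`, `Casebase`, `store`, and `retrieve`, the field layout, and the overlap-based matching rule are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Case:
    """A past success stored as a coordinated procedure (hypothetical layout)."""
    goal: str
    steps: tuple             # ordered (agent, action) pairs
    expectations: frozenset  # what this agent expects other agents to do
    planned: bool            # True if the success was planned, False if unplanned


@dataclass
class Casebase:
    """One agent's private memory of successes (assumed structure)."""
    cases: list = field(default_factory=list)

    def store(self, case):
        # Keep each distinct success only once.
        if case not in self.cases:
            self.cases.append(case)

    def retrieve(self, goal, observed):
        """Return the case for `goal` whose expectations overlap most with
        what has been observed about other agents, or None if no case exists."""
        candidates = [c for c in self.cases if c.goal == goal]
        if not candidates:
            return None
        return max(candidates, key=lambda c: len(c.expectations & observed))


# Each agent would own a separate Casebase; nothing is shared between agents.
casebase = Casebase()
casebase.store(Case(
    goal="move-sofa",
    steps=(("self", "lift-left"), ("other", "lift-right")),
    expectations=frozenset({"other:lifts-right"}),
    planned=True,
))
casebase.store(Case(
    goal="move-sofa",
    steps=(("self", "push"),),
    expectations=frozenset({"other:absent"}),
    planned=False,
))
best = casebase.retrieve("move-sofa", frozenset({"other:lifts-right"}))
```

Because every agent keeps her own casebase, learning in this sketch stays distributed: any gain in coordination comes from individual agents reusing procedures that previously worked, not from a shared plan library.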

Having individuals learn procedures as a means for the group to coordinate more efficiently is a novel approach, and empirical results validate its utility. Whether or not the agents have initial expertise in solving coordination problems, the distributed learning of the individual agents significantly improves the overall performance of the community, including by reducing planning and communication costs.

Cite this article

Garland, A., Alterman, R. Autonomous Agents that Learn to Better Coordinate. Autonomous Agents and Multi-Agent Systems 8, 267–301 (2004). https://doi.org/10.1023/B:AGNT.0000018808.95119.9e

DOI: https://doi.org/10.1023/B:AGNT.0000018808.95119.9e