Abstract

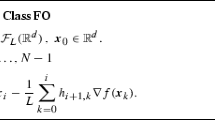

Descent algorithms use sufficient descent directions combined with stepsize rules, such as the Armijo rule, to produce sequences of iterates whose cluster points satisfy some necessary optimality conditions. In this note, we present a proof that the whole sequence of iterates converges for quasiconvex objective functions.
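The kind of scheme the abstract refers to can be illustrated with a minimal sketch, which is not the algorithm analyzed in the article. It assumes the steepest descent direction d = -∇f(x), one particular sufficient descent direction, and an Armijo backtracking rule that accepts the first stepsize t in {1, β, β², ...} with f(x + t d) ≤ f(x) + σ t ∇f(x)ᵀd. The function name `armijo_descent`, the parameters `sigma`, `beta`, `tol`, `max_iter`, and the example objective are all hypothetical choices made for illustration.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def armijo_descent(
    f: Callable[[Vector], float],
    grad: Callable[[Vector], Vector],
    x0: Vector,
    sigma: float = 1e-4,   # Armijo sufficient-decrease constant (assumed value)
    beta: float = 0.5,     # backtracking factor (assumed value)
    tol: float = 1e-8,     # stop when the gradient norm falls below tol
    max_iter: int = 10_000,
) -> Tuple[Vector, int]:
    """Steepest descent with Armijo backtracking; returns (iterate, iterations used)."""
    x = list(x0)
    for k in range(max_iter):
        g = grad(x)
        gnorm2 = sum(gi * gi for gi in g)
        if gnorm2 <= tol * tol:
            return x, k
        d = [-gi for gi in g]   # steepest descent direction
        slope = -gnorm2         # directional derivative grad(x)^T d, always < 0 here
        fx = f(x)
        t = 1.0
        # Shrink t until the Armijo condition holds (with a floor to avoid stalling).
        while f([xi + t * di for xi, di in zip(x, d)]) > fx + sigma * t * slope:
            t *= beta
            if t < 1e-16:
                break
        x = [xi + t * di for xi, di in zip(x, d)]
    return x, max_iter


# Hypothetical test problem: a convex (hence quasiconvex) quadratic with minimizer (1, -3).
def f_example(x: Vector) -> float:
    return (x[0] - 1.0) ** 2 + 2.0 * (x[1] + 3.0) ** 2


def grad_example(x: Vector) -> Vector:
    return [2.0 * (x[0] - 1.0), 4.0 * (x[1] + 3.0)]


x_star, iterations = armijo_descent(f_example, grad_example, [0.0, 0.0])
```

On this example the iterates approach the unique minimizer (1, -3). The article's result concerns the general situation, in which convergence of the whole sequence, rather than only of its cluster points, is established for quasiconvex objectives.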

References

Iusem, A. N., and Svaiter, B. F., A Proximal Regularization of the Steepest Descent Method, RAIRO-Recherche Opérationnelle, Vol. 29, pp. 123–130, 1995.

Armijo, L., Minimum of Functions Having Lipschitz-Continuous First Partial Derivatives, Pacific Journal of Mathematics, Vol. 16, pp. 1–3, 1966.

Wolfe, P., Convergence Conditions for Ascent Methods, SIAM Review, Vol. 11, pp. 226–235, 1969.

About this article

Cite this article

Dussault, J.P. Convergence of Implementable Descent Algorithms for Unconstrained Optimization. Journal of Optimization Theory and Applications 104, 739–745 (2000). https://doi.org/10.1023/A:1004602012151

DOI: https://doi.org/10.1023/A:1004602012151