Abstract

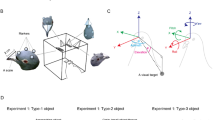

Inspired by the abilities of both the praying mantis and the pigeon to judge distance by use of motion-based visually mediated odometry, we create miniature models for depth estimation that are modeled on the head movements of these animals. We develop mathematical models of the praying mantis and pigeon visual behavior and describe our implementations and experimental environment. We investigate structure from motion problems when images are taken from a camera whose focal point is translating according to each of the biological models. In the first case, this motion is reminiscent of a praying mantis peering its head left and right, apparently to obtain depth perception, hence the moniker “mantis head camera.” In the second case, this motion is reminiscent of a pigeon bobbing its head back and forth, also apparently to obtain depth perception, hence the moniker “pigeon head camera.” We present the performance of the mantis head camera and pigeon head camera models and provide experimental results and error analysis of the algorithms. We compare the definitiveness of the results obtained by the two models. The precision of our mathematical model and its implementation is consistent with the experimental facts obtained from various biological experiments.
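As a rough illustration of the two camera motions described above, the following sketch recovers the depth of a single point from two views of an idealized pinhole camera whose translation is known. It is not the paper's model, which this abstract does not specify. The function names, the focal length `f`, the noise-free projections, and the sample values are all assumptions made for illustration: a sideways translation stands in for mantis peering, and a translation along the optical axis stands in for pigeon bobbing.

```python
"""Depth of one point from two views of a translating pinhole camera.

Illustrative sketch only: idealized, noise-free, hypothetical names and values.
"""


def project(X, Z, f=1.0):
    """Pinhole projection of a point at lateral offset X and depth Z (Z > 0)."""
    return f * X / Z


def depth_from_lateral_shift(x1, x2, T, f=1.0):
    """Peering-like motion: the camera translates sideways by T between views.

    x1 = f*X/Z and x2 = f*(X - T)/Z, so x1 - x2 = f*T/Z.
    """
    disparity = x1 - x2
    if disparity == 0:
        raise ValueError("no image motion: point at infinity or T is zero")
    return f * T / disparity


def depth_from_axial_shift(x1, x2, T):
    """Bobbing-like motion: the camera translates forward by T along its axis.

    x1 = f*X/Z and x2 = f*X/(Z - T), so Z = T * x2 / (x2 - x1).
    The result is the depth measured from the first camera position.
    """
    if x2 == x1:
        raise ValueError(
            "no image motion: point on the optical axis, at infinity, or T is zero"
        )
    return T * x2 / (x2 - x1)


if __name__ == "__main__":
    X, Z, T = 0.5, 4.0, 0.2  # hypothetical scene point and camera step
    z_lateral = depth_from_lateral_shift(project(X, Z), project(X - T, Z), T)
    z_axial = depth_from_axial_shift(project(X, Z), project(X, Z - T), T)
    print(z_lateral, z_axial)
```

One consequence of this geometry is that forward motion produces no image displacement for a point on the optical axis, so its depth cannot be recovered that way, whereas sideways motion still produces a measurable shift for such a point.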




Cite this article

Bruckstein, A., Holt, R.J., Katsman, I. et al. Head Movements for Depth Perception: Praying Mantis versus Pigeon. Autonomous Robots 18, 21–42 (2005). https://doi.org/10.1023/B:AURO.0000047302.46654.e3


DOI: https://doi.org/10.1023/B:AURO.0000047302.46654.e3