Abstract

Marshall Olkin Exponentiated Dagum distribution (MOED) with four shape parameters and one scale parameter is proposed. Alternative expressions are derived for the newly proposed distribution to sort out lengthy calculations. Various properties of MOED are derived, including the measure of central tendency, the measure of dispersion, hazard rate and survival rate are also derived for the MOED. Moreover, the network properties are also derived, including moments, moment generating function, quantile function, median, and mean deviation random number generator. Estimation of parameters is done through MLE (Maximum likelihood estimation) method, and to assess the value of the proposed distribution, the performance of the parameter’s estimates is studied through Monte Carlo Simulation. Three real-life data sets are used to evaluate the application and to scrutinize the performance of MOED as compared to the Marshall Olkin Dagum (MOD) distribution, Exponentiated Dagum (ED) distribution, Dagum (D) distribution, Burr III distribution, Kumaraswamy Burr III (KBurr III) distribution, Kumaraswamy Log Logistic (KLL) distribution and Log Logistic (LL) distribution. Four information criteria are used to examine the performance of the MOED with other special cases. The results showed that the newly proposed distribution performs better than the compared distributions.

Similar content being viewed by others

1 Introduction

The suitable technique to derive a new, flexible and unknown distribution is adding parameters [1]. Through statistical techniques, the probability distributions derived from generalization have no more effect, so there is a need to be proposed such a type of probability distribution with authentic and valid application compared to previous distributions [2]. With the help of literature, the moment’s development has more effect on the generalization of probability distributions [3]. Some important distributions commonly used in reliability theory and survival analysis are Exponential, Weibull and Gamma [4,5,6]. Cox and Oakes [7] present these families' importance and application. Koleoso [8] studied the Odd-Lomax Dagum distribution and explored several properties. Aslam et al. [9] presented the Bayesian version of exponentiated Gompertz distribution using informative priors. Faryal et al. [10] discussed the Lomax distribution under different posterior priors. In application aspects, these distributions have limited performance access and cannot handle all situations. For instance, even though the exponential distribution is often described as flexible, its hazard function is restricted, being constant. The limitations of standard distributions often provoke the interest of researchers in finding new distributions by extending existing ones. Expanding a family of distributions for added flexibility or constructing covariate models is a well-known technique in the literature [10,11,12]. For instance, the family of Weibull distributions contains exponential distribution and is constructed by taking powers of exponentially distributed random variables [13]. Marshall and Olkin [14] introduced a new method of adding a parameter to a family of distributions. Marshall-Olkin extended distributions offer a wide range of behavior than the basic distributions from which they are derived. The property that the extended form of distributions can have an interesting hazard function depending on the value of the added parameter α and, therefore, can be used to model real situations in a better manner than the basic distribution resulted in the detailed study of Marshall-Olkin extended family of distributions by many researchers [15,16,17,18].

In the 1970s, Camilo Dagum [19] boarded on a mission for a statistical distribution thoroughly fitting empirical income and wealth distributions. He is unsatisfied with classical distributions used to summarize such data—the Pareto distribution [20] and the lognormal distribution [21]. The earlier characteristic is well defined by the Pareto but not by the lognormal distribution, the latter by the lognormal but not the Pareto distribution. Experimenting with a shifted log-logistic distribution, a generalized distribution previously considered by Fisk [22], he quickly realized that a further parameter was needed. This led to the Dagum type I distribution, a three-parameter distribution, and two four-parameter generalizations [23, 27].

Theorem 1

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the CDF and PDF of MOED distribution are:

where “\(\propto\)”, “\(\theta\)”, “\(\beta\)” and “\(\gamma\)” are the shape parameters and “a” is the scale parameter.

Proof

The cumulative distribution function (cdf) of the Marshall Olkin family of distribution [18] is

G(x) is the Cdf of any continuous type distribution and \(\propto\) is the shape parameter [24].

The corresponding probability density function (pdf) of the Marshall Olkin family of distribution

where g(x) is the pdf of a continuous type distribution and \(\overline{G}\left( x \right)\) = 1-\(G\left( x \right)\) is the survival function of any continuous type distribution.

The cumulative density function (cdf) and pdf of Dagum distribution [20] are,

The corresponding Pdf of Dagum distribution is

where, \(\theta {\text{ and }}\beta \) are shape parameters, and \(a{\text{ is the scale parameter}}.\)

The cdf of the exponentiated family of distribution [25] is

where x > 0, \({\upgamma } > 0{ }\)

The corresponding Pdf of the exponentiated family of distribution Nadaraja and Kotz [25] is

\(\gamma :\) shape parameter, F(x) is the Cdf, f(x) is the Pdf of any continuous distribution.

Substituting Eq. (3) in (5), the Cdf of Exponentiated Dagum distribution is,

By putting Eqs. (3) and (4) in (6), the Pdf of Exponentiated Dagum distribution is:

“\(\theta\)”, “\(\beta {\text{ and}} \gamma\)” are the shape parameters and “a” is the scale parameter.

The Cdf of Marshall Olkin Exponentiated Dagum distribution can be obtained by using Eqs. (8) and (8a) in (1)

Equation (11) is the Cdf of Marshal Olkin Exponentiated Dagum Distribution (MOED).where, “\(\propto\)”, “\(\theta\)”, “\(\beta\)” and “\(\gamma\)” are the shape parameters and “a” is the scale parameter. At the same time, the respective Pdf of Marshall Olkin Exponentiated Dagum distribution can be obtained by using Eqs. (8a) and (9) in (2).

Equation (12) is the Pdf of MOED, where “\(\propto\)”, “\(\theta\)”, “\(\beta\)” and “\(\gamma\)” are the shape parameters and “a” is the scale parameter.

Lemma 1

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then (2) is a proper PDF.

Proof

The probability density function (2) of MOED distribution is a proper pdf if

As Eq. (12) in the above equation, we get

Making substitution

Limits; When \(x \to \infty then t = 1\) and when \(x \to 0 \;{\text{then}}\; t = 0\).

So Eq. (13) becomes

Let \(\left( {1 - \propto } \right)\left( {1 - t^{\beta } } \right)^{\gamma } = s\), \(t = \left( {1 - \frac{{s^{{\frac{1}{\gamma }}} }}{{\left( {1 - \propto } \right)^{{\frac{1}{\gamma }}} }}} \right)^{{\frac{1}{\beta }}}\),\(dt = - \frac{1}{\beta \gamma }\left( {\frac{{s^{{\frac{1}{\gamma } - 1}} }}{{\left( {1 - \propto } \right)^{{\frac{1}{\gamma }}} }}} \right)\left( {1 - \frac{{s^{{\frac{1}{\gamma }}} }}{{\left( {1 - \propto } \right)^{{\frac{1}{\gamma }}} }}} \right)^{{\frac{1}{\beta } - 1}} ds\).

Limits; when \( t \to 1\; {\text{then}}\; s = 0\) and when \(t \to 0 then s = 1 - \propto\), so after simplification, we get

Let, \(\left( {\text{1 - s}} \right){{ = u}}\), \(\left( {\text{1 - u}} \right){{ = s}}\), \({{ds = - du}}\), Limits; When \({\text{s}} \to 1 - \propto {\text{ u}} = \quad \propto\) and when \({\text{s}} \to 0{\text{ u}} = 1\), so we have

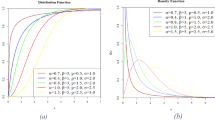

Figure 1 shown below represent the shapes of pdf and CDF of MOED distribution.

1.1: Pdf plot of MOED distribution. 1.2: Pdf plot of MOED distribution. 1.3: F(x) plot of MOED when a = 0.4, \({\upbeta }\) = 1.2, \({\upgamma } = 5\) and \({\uptheta } = 2\). 1.4: F(x) plot of MOED when a = 0.4, \({\upbeta }\) = 1.2, \({\upgamma } = 5\) and \(\propto = 5\). 1.5: F(x) plot of MOED when a = 1, \({\upbeta }\) = 1.2, \(\propto = 5{\text{ and }}\) \({\uptheta } = 3\). 1.6: F(x) plot of MOED when a = 1,, \({\upgamma } = 12{ }\), \(\propto = 5{\text{ and }}\) \({\uptheta } = 3\)

The CDF plots for MOED distribution depict an increasing behavior by varying the respective parameters and keeping the rest of the parameters constant. F(x) plots show a gradual rise by varying the parameters a,\({{ \beta }}\), \(\propto {\text{and}}\) \({\upgamma }\) one by one and keeping the rest of the parameters fixed.

Lemma 1.2

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the CDF (1) can be expressed as

where

Proof

For any positive real number “a” and for |z|< 1, we have the generalized binomial expansion

Applying expression (15) in the denominator of Eq. (1),

or, \(\left( {1 - \left( {1 - (1 + ax^{ - \theta } )^{ - \beta } } \right)^{\gamma } } \right)^{2 - 1} \mathop \sum \limits_{i = 0}^{\infty } \left( {1 - \propto } \right)^{i} \frac{{\Gamma \left( {1 + i} \right)}}{\Gamma \left( 1 \right)i!}\left( {1 - (1 + ax^{ - \theta } )^{ - \beta } } \right)^{\gamma i} .\)

Consider the power series expansion by Cordeiro et al. [15]

Using the expression (16) in the above equation, we get

Again using the expression (16) in the above equation, we will get (14).

Lemma 1.3

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the PDF (2) can be expressed as

where, \(t_{j} = \mathop \sum \limits_{i = 0}^{\infty } \left( {1 - \propto } \right)^{i} \frac{{\left( { - 1} \right)^{j} }}{i!j!}\frac{{\Gamma \left( {2 + i} \right)}}{\Gamma \left( 2 \right)}\frac{{\Gamma \left( {\gamma \left( {i + 1} \right)} \right) }}{{\Gamma \left( {\gamma \left( {i + 1} \right) - j} \right)}}.\)

Proof

Applying the generalized Binomial expansion (15) in Eq. (2), we get

Now applying the power series expansion (16), we have

Corollary 1.1

If \(\gamma\) = 1 in (2), we get the Marshall Olkin Dagum distribution:

Corollary 1.2

If \(\propto\) = 1 in (2), we get the Exponentiated Dagum distribution

Corollary 1.3

If \(\propto\) = 1 and \(\gamma = 1\) in (2), we get the Cdf of Dagum distribution

Corollary 1.4

If \(\propto\) = 1, \(\beta\) = 1 and \(\gamma = 1\) in (2), we get the cdf of Log-logistic or Fisk distribution

Corollary 1.5

If \(\propto\) = 1, a = 1 and \(\gamma = 1\) in (2), we get the Cdf of Burr III distribution

Corollary 1.6

If \(\propto\) = 1 and a = 1 in (2), we get the cdf of Kumaraswamy Burr III distribution

Corollary 1.7

If \(\propto \) =1 and \(\beta \) =1 in (2), we get the cdf of Kumaraswamy Fisk or Kumaraswamy Log-logistic distribution.

2 Statistical Properties of MOED Distribution

In this section, the statistical properties of the proposed MOED distribution will be developed and discussed.

Theorem 2.1

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the characteristic function for r.v X is

Proof

The characteristic function for MOED distribution can be defined as;

Making substitution

Limits; when \(x \to \infty\) then \(s = 1 \) and when \(x \to 0\) then \(s = 0\). So Eq. (19) becomes

Because of Mead and Abd–Eltawab [26], \(e^{tx} = \mathop \sum \limits_{j = 0}^{\infty } \frac{{(tx)^{j} }}{j!}\), we have

or, \({{\varphi }}_{{\text{x}}} \left( {\text{t}} \right) = \, \propto {{\gamma \beta }}\mathop \sum \limits_{{{\text{j}} = 0}}^{\infty } \mathop \sum \limits_{{{\text{p}} = 0}}^{\infty } \frac{{{\text{t}}_{{\text{j}}} (ita^{{\frac{1}{\theta }}} )^{p} }}{p!}\frac{{\Gamma \left( {\beta \left( {j + 1} \right) + \frac{p}{\theta }} \right)\Gamma \left( {1 - \frac{p}{\theta }} \right)}}{{\Gamma \left( {\beta \left( {j + 1} \right) + 1} \right)}}, \) Under condition \( \left( {\frac{p}{\theta } < 1} \right).\)

Theorem 2.2

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the moment generating function for r.v X is

Proof

Moment generating function for MOED can be defined as,

where, \({\text{E}}\left( {{\text{X}}^{{\text{r}}} } \right) = \mathop \int \limits_{0}^{\infty } {\text{x}}^{{\text{r}}} \propto {{\gamma a\theta \beta x}}^{{{{ - \theta - 1}}}} \mathop \sum \limits_{j = 0}^{\infty } t_{j} \left( {1 + ax^{ - \theta } } \right)^{{ - \beta \left( {j + 1} \right) - 1}} dx{,}\)

Making substitution,

Limits: when \(x \to \infty \; {\text{then}}\; u = 1\) and when \(x \to 0 \;{\text{then}}\; u = 0.\) So Eq. (22) becomes

By substituting Eq. (23) in (21), we will get (20).

Putting r = 1, 2, 3, 4 in (23), we get the first four raw moments as:

Also, the variance of MOED is,

Theorem 2.2

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the qth quantile for r.v X is,

Proof

The \(q{th}\) quantile of any distribution can be obtained by simplifying

Substituting Eq. (11) in Eq. (30), we get

After simplification for x, we get

which is the \(q^{th}\) quantile for MOED distribution.

Corollary 2.1

The median for MOED distribution is obtained by putting \(q = \frac{1}{2} = 0.5\) in Eq. (29), we have,

Theorem 2.3

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the mean deviation about mean and median are:

Under the condition \(\left( {\frac{1}{\theta } < 1} \right)\). Also

where M is the median defined in (30).

Corollary 2.2

The random number generator of MOED distribution is

3 Measures of Uncertainty and Inequality

In this section, we find the measure of uncertainty for MOED distribution, which includes Renyi entropy and Q entropy. After that, measures of inequality expressions like Lorenz and Bonferroni curves are derived.

3.1 Renyi Entropy

Renyi entropy is defined as;

where

Substituting (12) in (35) and applying the generalized Binomial expansion (15), we get,

Applying the power series expansion (4.1.2 = 16) in the above equation, we get

Making substitution, \(\left( {1 + ax^{ - \theta } } \right)^{ - 1} = z\), \(x = \frac{1}{{a^{{ - \frac{1}{\theta }}} }} \left[ {\frac{1}{z} - 1} \right]^{{ - \frac{1}{\theta }}}\), \(dx = \frac{1}{{\theta z^{2} a^{{ - \frac{1}{\theta }}} }}\left[ {\frac{1}{z} - 1} \right]^{{ - \frac{1}{\theta } - 1}} dz\).

Limits; When \(x \to \infty\) then \(z = 1\) and when \(x \to 0\) then \(z = 0\). Equation (36) becomes

Or,

\(I_{X} \left( \delta \right) = \quad \propto^{\delta } \gamma^{\delta } a^{{\frac{1}{\theta }\left( {1 - \delta } \right)}} \theta^{{ - \left( {1 - \delta } \right)}} \beta^{\delta } \left[ {\mathop \sum \limits_{i = 0}^{\infty } \mathop \sum \limits_{j = 0}^{\infty } \frac{{\left( { - 1} \right)^{j} }}{i!j!}\frac{{\Gamma \left( {2\delta + i} \right)}}{{\Gamma \left( {2\delta } \right)}}\frac{{\Gamma \left( {\gamma \left( {\delta + i} \right) - \delta + 1} \right) }}{{\Gamma \left( {\gamma \left( {\delta + i} \right) - \delta + 1 - j} \right)}}\left( {1 - \propto } \right)^{i} B\left( {\beta \left( {\delta + j} \right) + \frac{1}{\theta }\left( {1 - \delta } \right),\frac{1}{\theta }\left( {\delta - 1} \right) + \delta } \right)} \right],\) so after simplification and applying log on both sides, we get

Substituting (37) in (34), we get

Under the condition (\(\delta > 1)\).

3.2 Q entropy

Let X be a r.v that has MOED distribution with parameters a,\( \beta\),\({{ \gamma }}\),\({{ \theta {\rm and} }} \propto { }\) then Q entropy is obtained by

For MOED distribution

As solved before

Hence Q entropy is

3.3 Lorenz Curve

Let the random variable “X” have a MOED distribution with parameters a,\( \beta\),\({{ \gamma }}\),\({{ \theta {\rm and} }} \propto\) The Lorenz curve denoted by L(X) is defined as

where

where

Substituting Eq. (17) and (43) in Eq. (42)

Substitute Eq. (44) in Eq. (41)

Making substitution, \(\left( {1 + az^{ - \theta } } \right)^{ - 1} = t,\) and after simplification, we get the expression for Lorenz Curve:

3.4 Bonferroni Curve

Bonferroni curve is defined as

Substitute the Lorenz curve (46) and Cdf (1) in the above equation; we get the Bonferroni Curve for MOED distribution.

4 Order Statistics

This section comprises expressions for \(i^{th}\) order statistics.

Theorem 4.1

If \(X \sim {\text{MOED}}\left( {{\text{X}}: \propto , \theta , \beta ,\Upsilon ,a} \right)\), then the ith order statistics for MOED distribution is,

Proof

The density function of \(i^{th} \) order statistics of the random variable \(X_{i:n}\) can be obtained using

Substituting (11) and (12) in above Eq. (49), we get

After simplification, we get ith order statistics (48).

Corollary 4.1

The rth moment of ith order statistic from MOED distribution is:

5 MLE’s of MOED Distribution Parameters

This section obtains maximum likelihood estimates of the unknown parameters of the newly introduced distribution, Marshall Olkin Exponentiated distribution. Suppose a random sample of size n from \(X\sim MOED\left( {{\text{ a}},{{ \beta }},{{ \gamma }},{{ \alpha }},{{ \theta }}} \right);\) then the likelihood function can be expressed as

Taking logarithms on both sides, we have

On differentiating Eq. (51) w.r.t unknown parameters, we have:

The maximum likelihood estimates (MLEs) of nonlinear Eqs. (52–56) are obtained by solving them iteratively using the Newton–Raphson method.

6 Simulation Study

The effect of sample size on maximum likelihood estimates (MLEs) of the corresponding parameters of the Marshall Olkin exponentiated Dagum distribution is presented using the Monte Carlo approach. The performance of the respective parameters of MOED distribution is assessed for different sample sizes (25, 50, 100, 200 and 500), and the procedure is replicated 5000 times for each sample size. The following two situations are considered here for estimating parameters and determining their stability.

-

a = 1.5, \({\upbeta }\) = 1.5,\({{ \gamma }}\) = 1.5, \({\upalpha }\) = 1.5,\({{ \theta }}\) = 1.5

-

a = 2, \({\upbeta }\) = 3,\({{ \gamma }}\) = 4, \({\upalpha }\) = 5,\({{ \theta }}\) = 6

Results presented in Table 1 are the MLEs, standard deviation, bias and mean square error of the parameters of the MOED distribution and depicted that as sample size increases, the standard deviation, bias, and mean square error of the estimators decrease.

7 Applications of MOED Distribution on Real Data

In this section, three real-life data sets are fitted to Marshall Olkin Exponentiated Dagum distribution to analyze its applicability and flexibility. We compare the Marshall Olkin Exponentiated Dagum (MOED) distribution with seven different models that are the Marshall Olkin Dagum (MOD) distribution, Exponentiated Dagum (ED) distribution, Dagum (D) distribution, Burr III distribution, Kumaraswamy Burr III (KBurr III) distribution, Kumaraswamy Log Logistic (KLL) distribution and Log Logistic (LL) distribution.

7.1 Application 1: Fracture Toughness of Alumina (Al2O3) Data

The first data set is taken from Nadarajah [26] based on the fracture toughness of Alumina (Al2O3) (in the units of MPa m1 = 2).

Table 2 lists the Maximum Likelihood Estimates (MLE) of the unknown parameters of the fitted distributions for the fracture toughness of Alumina (Al2O3) data. The log-likelihood of the subject distribution is highest than other competing distributions. The MOED distribution gives the smallest values for the AIC, CAIC, HQIC, Cramrvon Mises (\({\text{W}}^{*}\)), Anderson–Darling (\({\text{A}}^{*}\)) and Kolmogorov–Smirnov (KS) statistics (for fracture toughness of Alumina (Al2O3) data) among all other seven distributions. The Histogram of the fracture toughness of Alumina (Al2O3) data and the fitted density functions is displayed in Fig. 2. It is clear from Fig. 2 that the Marshall Olkin Exponentiated Dagum (MOED) model provides a better fit to the other distributions in terms of model fitting for this data set.

7.2 Application 2: Yarn Sample at 2.3% Strain Level Data

The second data set comprises a 100-cm yarn sample at a 2.3% strain level. The polyester’s/viscose's characteristics of tensile fatigue is studied using this data set to resolve the wrap breakage issue. Queensberry et al. [27] and other researchers used the above data set to determine various probability models' reliability.

From Table 3, it can be observed that the Log likelihood of the subject distribution is highest than other competing distributions. The MOED distributon gives the smallest values for the AIC, CAIC, HQIC, Cramrvon Mises (\({\text{W}}^{*}\)), Anderson–Darling (\({\text{A}}^{*}\)) and Kolmogorov–Smirnov (KS) statistics (for yarn sample at 2.3% strain level data) among all other seven distributions. The Histogram of the yarn sample at 2.3% strain level data and the fitted density functions are displayed in Fig. 3. It is clear from Fig. 3 that the Marshall Olkin Exponentiated Dagum (MOED) model provides a better fit to the other distributions in terms of model fitting for this data set.

7.3 Application 3: Breaking Stress of Carbon Fibres (in Gba) Data

The third example is a data set from Cordeiro et al. [28] consisting of 66 observations on the breaking stress of carbon fibres (in Gba) [29].

From Table 4, it can be observed that the Log likelihood of the subject distribution is highest than other competing distributions. The MOED distribution gives the smallest values for the AIC, CAIC, HQIC, Cramrvon Mises (\({\text{W}}^{*}\)), Anderson–Darling (\({\text{A}}^{*}\)) and Kolmogorov–Smirnov (KS) statistics (for breaking stress of carbon fibres data) among all other seven distributions. The Histogram of the breaking stress of carbon fibres data and the fitted density functions are displayed in Fig. 4. It is clear from Fig. 6 that the Marshall Olkin Exponentiated Dagum (MOED) model provides a better fit to the other distributions in terms of model fitting for this data set.

8 Conclusion

A five-parametric Marshall Olkin Exponentiated Dagum distribution is introduced using Marshall Olkin family distribution. The newly proposed distribution is studied thoroughly, and various properties are derived. Parameters were estimated theoretically through the maximum likelihood method and numerically via Newton Raphson's iterative method for various sample sizes. Lastly, the proposed distribution is applied to three real data sets and compared with other allied distributions in the literature. The results depicted that the newly proposed MOED distribution performs better than the compared distributions.

Availability of Data and Material

The data is given in the paper.

Abbreviations

- MOED:

-

Marshall Olkin exponentiated dagum distribution

- MOD:

-

Marshall Olkin dagum

- ED:

-

Exponentiated dagum

- KLL:

-

Kumaraswamy log logistic

- LL:

-

Log logistic

References

Saboor, A., Khan, M.N., Cordeiro, G.M., Pascoa, M.A.R., Ramos, P.L., Mustafa, M.: Some new results for the transmuted generalized gamma distribution. J. Comput. Appl. Math. 352, 165–180 (2019). https://doi.org/10.1016/j.cam.2018.12.002

Nofal, Z. M., Afify, A. Z., Yousuf, H. M., Granzotto, D. C. T., Louzada, F.: The transmuted exponentiated additive weibull distribution. Prop. Appl. J. Mod. Appl. Stat. Methods 17(1), eP2526 (2018). https://doi.org/10.22237/jmasm/1525133340.

Castellares, F., Lemonte, A.J.: On the moments of the generalized Gompertz distribution. Appl. Math. Model. 72, 420–424 (2019). https://doi.org/10.1016/j.apm.2019.03.032

Okasha, H.M., Al-Shomrani, A.A.: Generalized linear exponential geometric distributions and its applications. J. Comput. Appl. Math. 351, 198–211 (2019). https://doi.org/10.1016/j.cam.2018.10.041

Aryal, G. R., Tsokos, C. P.: Transmuted Weibull distribution: a generalization of the Weibull probability distribution. Eur. J. Pure Appl. Math. 4(2), 89–102 (2011).

Saboor, A., Pogány, T.K.: Marshall–Olkin gamma–Weibull distribution with applications. Commun. Stat. Theory Methods 45(5), 1550–1563 (2016)

Cox, D.R., Oakes, D.: Analysis of survival data. Volume 21 of Chapman & Hall/CRC Monographs on Statistics and Applied Probability (1984)

Koleoso, P. O.: The properties of odd Lomax–Dagum distribution and its application. Sci. Afric., e01555 (2023)

Aslam, M., Afzaal, M., Bhatti, M.I.: A study on exponentiated Gompertz distribution under Bayesian discipline using informative priors. Stat. Transit. New Ser. 22(4), 101–119 (2021)

Faryal, Y., Aslam, M., Bhatti, I.: Preference of prior for two component mixture of Lomax distribution (2023).

Benkhelifa, L.: The beta generalized Gompertz distribution. Appl. Math. Model. 52, 341–357 (2017). https://doi.org/10.1016/j.apm.2017.06.021

Cordeiro, G.M., Ortega, E.M., Silva, G.O.: The Kumaraswamy modified Weibull distribution: theory and applications. J. Stat. Comput. Simul. 84(7), 1387–1411 (2014)

Afify, A.Z., Yousof, H.M., Cordeiro, G.M., Ortega, E.M., Nofal, Z.M.: The Weibull Fréchet distribution and its applications. J. Appl. Stat. 43(14), 2608–2626 (2016).

Khan, M.S., King, R.: Transmuted modified weibull distribution: a generalization of the modified weibull probability distribution. Eur J Pure Appl Math 6(1), 66–88 (2013)

Marshall, A.W., Olkin, I.: A new method for adding a parameter to a family of distributions with application to the exponential and weibull families. Biometrika 84(3), 641–652 (2016)

Alawadhi, F.A., Sarhan, A.M., Hamilton, D.C.: Marshall–Olkin extended two-parameter bathtub-shaped lifetime distribution. J. Stat. Comput. Simul. 86(18), 3653–3666 (2016)

Barreto-Souza, W., Lemonte, A.J., Cordeiro, G.M.: General results for the Marshall and Olkin's family of distributions. Anais da Academia Brasileira de Ciências, 85(1), 3–21 (2013).

Cordeiro, G.M., Lemonte, A.J., Ortega, E.M.: The Marshall–Olkin family of distributions: mathematical properties and new models. J. Stat. Theory Pract. 8(2), 343–366 (2014)

George, R., Thobias, S.: Marshall-Olkin Kumaraswamy distribution. Int Math Forum 12(2), 47–69 (2017)

Dagum, C.: A new model of personal income distribution: specification and estimation. Economie Appliqu´ee 30, 413–437 (1977).

Arnold, C.B.: Pareto Distributions. International Cooperative Publishing House, Silver Spring (1983)

Aitchison, J., Brown, J.A.C.: The Lognormal Distribution. Cambridge University Press (1957).

Fisk, P.R.: The graduation of income distribution. Econometrica 29, 171–185 (1961)

Dagum, C.: The generation and distribution of income, the Lorenz curve and the Gini ratio. Economie Appliqué e 33, 327–367 (1980)

Nadarajah, S., Kotz, S.: The exponentiated type distributions. Acta Appl Math 92(2), 97–111 (2006).

Nadarajah, S.: A truncated inverted beta distribution with application to air pollution data. Stoch. Env. Res. Risk Assess. 22(2), 285–289 (2008)

Quesenberry, C. P., Kent, J.: Selecting among probability distributions used in reliability. Technometrics 24(1), 59–65 (1982).

Cordeiro, G.M., Silva, G.O., Edwin, M.M., Ortega, E.M.: The beta–Weibull geometric distribution. Stat. J. Theor. Appl. Stat. 47, 817–834 (2013).

Mead, M.E., Abd-Eltawab, A.R.: A note on Kumaraswamy–Fréchet distribution. Aust. J. Basic Appl. Sci. 8, 294–300 (2014)

Acknowledgements

The authors are deeply thankful to the editor and reviewers for their valuable suggestions to improve the quality and presentation of the paper.

Funding

None.

Author information

Authors and Affiliations

Contributions

RAKS, SA, SA and MA wrote the paper.

Corresponding author

Ethics declarations

Conflict of Interest

No conflict of interest regarding the paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sherwani, R.A.K., Ashraf, S., Abbas, S. et al. Marshall Olkin Exponentiated Dagum Distribution: Properties and Applications. J Stat Theory Appl 22, 70–97 (2023). https://doi.org/10.1007/s44199-023-00056-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s44199-023-00056-6