Abstract

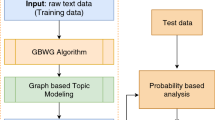

Automatically extracting topics from large volumes of text is one of the main applications of natural language processing (NLP). Latent Dirichlet allocation (LDA) is frequently used to extract topics from pre-processed documents based on word frequency. A main limitation of LDA is that the extracted topics are of poor quality when a document does not coherently belong to a single topic. Gibbs sampling, however, operates on a word-by-word basis: it updates the topic assignment of one word at a time, which makes it applicable to documents that cover a variety of topics. To improve the quality of the extracted topics, this paper develops a hybrid semantic similarity measure for topic modeling that combines LDA and Gibbs sampling to maximize the coherence score. To verify the effectiveness of the proposed model, an unstructured dataset was taken from a public repository. The evaluation shows that the proposed LDA-Gibbs model achieved a coherence score of 0.52650, against 0.46504 for LDA. The proposed multi-level model therefore yields higher-quality topics.
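
As a rough illustration of the word-by-word updates described in the abstract, the sketch below implements a toy collapsed Gibbs sampler for LDA in plain Python. It is not the authors' implementation: the function name `collapsed_gibbs_lda`, the hyperparameter values `alpha` and `beta`, the iteration count, and the tiny corpus are all assumptions chosen for illustration, and no coherence score is computed here.

```python
import random


def collapsed_gibbs_lda(docs, num_topics, vocab_size, alpha=0.1, beta=0.01,
                        iterations=200, seed=0):
    """Toy collapsed Gibbs sampler for LDA (illustrative, hypothetical helper).

    docs: list of documents, each a list of integer word ids in [0, vocab_size).
    Returns (doc_topic_counts, topic_word_counts, assignments).
    """
    rng = random.Random(seed)
    doc_topic = [[0] * num_topics for _ in docs]                 # n_dk
    topic_word = [[0] * vocab_size for _ in range(num_topics)]   # n_kw
    topic_total = [0] * num_topics                               # n_k
    assignments = []

    # Give every word token a random initial topic and record the counts.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove this token's current assignment from the counts.
                k = assignments[d][i]
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1

                # Conditional for topic t, up to a constant:
                # (n_dt + alpha) * (n_tw + beta) / (n_t + V * beta)
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]

                # Record the newly sampled topic for this single word.
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1

    return doc_topic, topic_word, assignments


# Made-up corpus: word ids 0-2 and 3-5 never share a document.
docs = [[0, 1, 2, 0, 1], [3, 4, 5, 3, 4], [0, 2, 1, 2], [5, 3, 4, 5]]
doc_topic, topic_word, assignments = collapsed_gibbs_lda(
    docs, num_topics=2, vocab_size=6
)
```

Because each update touches only one token, a single document can end up with words assigned to several topics, which is the property the abstract relies on for documents that mix topics. The rows of `doc_topic` and `topic_word` can be normalized (after adding `alpha` and `beta`) to estimate per-document topic proportions and per-topic word distributions.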

Data availability

The data that support the findings of this study are openly available as the Newsgroup Master dataset at https://raw.githubusercontent.com/selva86/datasets/master/newsgroups.json.

Funding

There was no outside funding for the study.

Author information

Contributions

All authors contributed to the conception and design of the research. Data gathering, material preparation, and analysis were carried out by MOA. The first draft of the manuscript was written by AAO, UCC, and POS. All authors read and approved the final manuscript and agreed to its entire content, including the author list and the contributions stated here.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to disclose about the article's content.

About this article

Cite this article

Ajinaja, M.O., Adetunmbi, A.O., Ugwu, C.C. et al. Semantic similarity measure for topic modeling using latent Dirichlet allocation and collapsed Gibbs sampling. Iran J Comput Sci 6, 81–94 (2023). https://doi.org/10.1007/s42044-022-00124-7

DOI: https://doi.org/10.1007/s42044-022-00124-7