Abstract

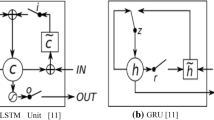

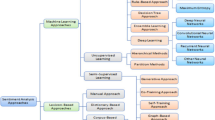

Sentiment analysis, the task of determining the opinions users express in text, is widely used in Natural Language Processing (NLP) applications. The Gated Recurrent Unit (GRU) has already been applied effectively to NLP tasks with strong results. GRU networks outperform traditional recurrent neural networks (RNNs) in sequential learning tasks and alleviate the vanishing and exploding gradient problems of RNNs. This paper introduces a new method, Normalize Auto-Encoded GRU (NAE-GRU), which uses an auto-encoder to reduce data dimensionality and batch normalization to improve performance. Empirically, we demonstrate that with slight adjustments to hyperparameters and optimization of statistic vectors, the proposed model achieves strong results in sentiment classification on benchmark datasets. NAE-GRU outperformed several traditional approaches in terms of accuracy and convergence rate, achieving sentiment analysis accuracies of 91.32%, 82.27%, 87.43%, and 84.49% on the IMDB, SSTb, Amazon review, and Yelp review datasets, respectively. The experimental results further show that the approach reduces the loss and captures long-term dependencies, with results that compare favorably with state-of-the-art methods.
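
As a rough illustration of the three ingredients named in the abstract (an encoder that reduces dimensionality, batch normalization, and a GRU), the following minimal sketch runs a forward pass in plain Python. It is not the authors' implementation: the layer sizes, the random untrained weights, the single-layer tanh encoder, the batch normalization without learnable scale and shift, and all function names are assumptions made for this example. The GRU update follows the standard formulation with an update gate `z` and a reset gate `r`, using the convention `h_new = (1 - z) * h + z * h_cand`.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng, scale=0.1):
    """Small random weights; a real model would learn these by training."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def encode(W_enc, x):
    """Encoder half of an auto-encoder: project x to a lower dimension."""
    return [math.tanh(a) for a in matvec(W_enc, x)]


def batch_norm(batch, eps=1e-5):
    """Normalize each feature to zero mean and unit variance over the batch.

    Simplification: no learnable scale/shift and no running statistics.
    """
    n, d = len(batch), len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(d)]
    variances = [sum((row[j] - means[j]) ** 2 for row in batch) / n for j in range(d)]
    return [
        [(row[j] - means[j]) / math.sqrt(variances[j] + eps) for j in range(d)]
        for row in batch
    ]


def gru_step(p, x, h):
    """One GRU time step for a single example (biases omitted for brevity)."""
    z = [sigmoid(a + b) for a, b in zip(matvec(p["Wz"], x), matvec(p["Uz"], h))]
    r = [sigmoid(a + b) for a, b in zip(matvec(p["Wr"], x), matvec(p["Ur"], h))]
    rh = [ri * hi for ri, hi in zip(r, h)]  # reset gate applied to previous state
    h_cand = [
        math.tanh(a + b) for a, b in zip(matvec(p["Wh"], x), matvec(p["Uh"], rh))
    ]
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, h_cand)]


def run_pipeline(batch_seqs, W_enc, gru_params, hidden_dim):
    """Encode -> batch-normalize -> GRU step, repeated over all time steps."""
    states = [[0.0] * hidden_dim for _ in batch_seqs]
    for t in range(len(batch_seqs[0])):
        encoded = [encode(W_enc, seq[t]) for seq in batch_seqs]
        normed = batch_norm(encoded)
        states = [gru_step(gru_params, x, h) for x, h in zip(normed, states)]
    return states


# Hypothetical sizes: 8-dim inputs compressed to 3 dims, 4 hidden units,
# a batch of 5 sequences with 6 time steps each.
D_IN, D_ENC, D_HID, BATCH, STEPS = 8, 3, 4, 5, 6
rng = random.Random(0)
W_enc = rand_matrix(D_ENC, D_IN, rng)
gru_params = {
    name: rand_matrix(D_HID, D_ENC if name.startswith("W") else D_HID, rng)
    for name in ("Wz", "Uz", "Wr", "Ur", "Wh", "Uh")
}
batch = [
    [[rng.gauss(0.0, 1.0) for _ in range(D_IN)] for _ in range(STEPS)]
    for _ in range(BATCH)
]
final_states = run_pipeline(batch, W_enc, gru_params, D_HID)
```

In a classifier, each row of `final_states` would typically be passed to an output layer that predicts the sentiment label; that layer and the training procedure are left out of this sketch.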

Data availability

The following information was supplied regarding data availability. The code is available on GitHub: https://github.com/zunimalik777/Improved-DNNs-Autoencoder-GRU-Sentiment-Analysis.git

The collected datasets are available at the following links:

- https://www.kaggle.com/datasets/lakshmi25npathi/imdb-dataset-of-50k-movie-reviews
- https://snap.stanford.edu/data/web-Amazon.html
- https://www.tensorflow.org/datasets/catalog/yelp_polarity_reviews
- https://www.kaggle.com/competitions/sentiment-analysis-on-movie-reviews/data

Data transparency

The authors will ensure data transparency.

Funding

The authors did not receive support from any organization for the submitted work.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zulqarnain, M., Alsaedi, A.K.Z., Sheikh, R. et al. An improved gated recurrent unit based on auto encoder for sentiment analysis. Int. j. inf. tecnol. 16, 587–599 (2024). https://doi.org/10.1007/s41870-023-01600-4

DOI: https://doi.org/10.1007/s41870-023-01600-4