Abstract

This review considers the modelling process for stellar convection rather than specific astrophysical results. To achieve reasonable depth and length we deal with hydrodynamics only, omitting MHD. A historically oriented introduction offers a first glimpse of the physics of stellar convection. Examination of its basic properties shows that two very different kinds of modelling continue to be needed: low dimensional models (mixing length, Reynolds stress, etc.) and “full” 3D simulations. A list of affordable and unaffordable tasks for the latter is given. Various low dimensional modelling approaches are put in a hierarchy, and basic principles which they should respect are formulated. In 3D simulations of low Mach number convection, including the sound waves, which are unimportant in that regime but vary rapidly in time, is numerically impossible. We describe a number of approaches in which the Navier–Stokes equations are modified to eliminate them (anelastic approximation, etc.). We then turn to working with the full Navier–Stokes equations and deal with principles for faithful and efficient numerics. Spatial differentiation as well as time marching aspects are considered. A list of codes allows assessing the state of the art. An important recent development is the treatment of even the low Mach number problem without prior modification of the basic equations (obviating side effects) by specifically designed numerical methods. Finally, we review a number of important trends, such as how to further develop low-dimensional models, how to use 3D models for that purpose, and what effect recent hardware developments may have on 3D modelling, among others.

1 Introduction and historical background

The goal of this review is to provide an overview of the modelling of stellar convection. It is intended to be accessible not only to specialists on the subject, but also to a wider readership including astrophysicists in general, students who would like to specialize in stellar astrophysics, and researchers from neighbouring fields such as geophysics, meteorology, and oceanography, who have an interest in convection from the viewpoint of their own fields.

A detailed introduction to the subject would easily fill a book of several hundred pages. To keep the material manageable and thus the text more accessible we have made a specific selection of topics. The very recent review of Houdek and Dupret (2015) chiefly deals with the interaction between stellar convection and pulsation, but also contains an extended, detailed introduction to the local and non-local mixing length models of convection that are frequently used in that field of research. An extended review of the capabilities of numerical simulations of convection at the surface of the Sun to reproduce a large set of different types of observations has been given in Nordlund et al. (2009). The large scale dynamics of the solar convection zone and numerical simulations of the deep, convective envelope of the Sun have been reviewed in Miesch (2005). Numerical simulations of turbulent convection in solar and stellar astrophysics have also been reviewed in Kupka (2009b). An introduction to the Reynolds stress approach to modelling convection in astrophysics and geophysics has been given in Canuto (2009).

Keeping these and further reviews on the subject in mind, we focus here on computational aspects of the modelling of convection which so far have received much less attention, in particular within the literature more accessible to astrophysicists. We pay particular attention to computability in convection modelling and are thus naturally led to describe both the advanced two-dimensional and three-dimensional numerical simulation approach and the more idealized but also more affordable models of convection. The latter are not based solely on the fundamental conservation laws, which are the foundation of numerical simulations of stellar convection, but introduce further hypotheses to keep the resulting mathematical model computationally more manageable.

Indeed, there are two different types of problems which make convection an inherently difficult topic. One class of problems is of a general, physical nature. The other ones relate to the specific modelling approach. We deal with both of them in this review. Following the intention of keeping this text accessible in terms of contents and volume we also exclude here the highly important separate topic of magnetohydrodynamics (MHD), since this opens a whole new range of specific problems. Even within the purely hydrodynamic context, some specific problems such as rotation–convection interaction had to be omitted, essential as they are, considering that this review has already about twice the default length applicable for the present series.

Before we summarize the specific topics we are going to deal with, we undertake a short tour of the history of the subject, as it provides a first overview of the methods and of the problems that arise in the physical understanding and mathematical modelling of turbulent convection in stellar astrophysics.

1.1 History

1.1.1 The beginning

The first encounter with stellar convection occurred, not surprisingly, in the case of the Sun, with the discovery of solar granulation, even if the physical background was naturally not properly recognized. Early sightings are due to Galileo and to Scheiner (Mitchell 1916 and Mitchell’s other articles of 1916 in that journal, see also Vaquero and Vázquez 2009, p. 143). Quite frequently, however, Herschel (1801) who reported a mottled structure of the whole solar surface is termed discoverer of solar granulation in the literature. The subject started to be pursued more vividly and also controversially in the 1860s (Bartholomew 1976). The photographic recording of solar granulation, the first one being due to Janssen (1878), clarified the subject of shapes of solar granules and remained the principal method of direct investigation for many decades to come.

These observations required a closer physical understanding. In 1904, Karl Schwarzschild raised the question whether the layering of the solar atmosphere was adiabatic, as was known to hold for the atmosphere of the Earth when strong up- and downflows prevail, or whether a then new concept of layering was appropriate which he dubbed radiative equilibrium (“Strahlungsgleichgewicht” in the original German version, Schwarzschild 1906). By comparing theoretical predictions with the observed degree of solar limb darkening he concluded that the solar atmosphere rather obeyed radiative equilibrium. This applies even from our present viewpoint in the sense that the convective flux, which is non-zero in the lower part of the solar atmosphere, is small compared to the radiative one.

Yet the very occurrence of granulation made it obvious that there had to be some source of mechanical energy able to stir it. For a period of time, the presence of flows in a rotating star, known through work of von Zeipel, Milne, and Eddington to necessarily occur, was considered a candidate. However, this turned out not to lead to a viable explanation when Eddington gave estimates of the velocities of these flows. Indeed, Eddington at first also considered convection not to be important for the physics of stars (cf. Eddington 1926), although he later on changed his opinion (Eddington 1938). The proper mechanism was figured out by Unsöld (1930). He noted that under conditions of the solar subsurface an (assume for the sake of discussion) descending parcel of material would not only have to do work in order to increase pressure and temperature, but that ionization work would be required as well. From such considerations Unsöld concluded that below the solar surface there was a convection zone to be expected and caused by the ionization of hydrogen. Another early line of thought had the stellar interior in mind and considered cases of energy sources strongly concentrated near the center of the star. For such a situation, convective zones were predicted to occur under appropriate circumstances by Biermann (1932). In that paper an analytical convection model was proposed, too: the mixing length theory of convection—which had initially not been developed to model solar convection and granulation.

1.1.2 “Classical” modelling

Once the basic causes of solar granulation, or rather of solar and stellar convection zones, had been identified in the early 1930s, theoreticians faced the problem of deriving models of stellar and, in the first instance, of solar convection. Ideally, one would of course solve the Navier–Stokes (or Euler) equations of hydrodynamics, augmented with realistic microphysics and opacities, and in some cases also with elaborate radiative transfer calculations and other ingredients. Naturally that was out of the question at a time when, at best, only mechanical calculators were available. As a consequence, models were derived which were computationally (!) simple enough to be, ultimately, incorporated into routine stellar structure or stellar atmosphere calculations. The mixing length paradigm, i.e., the concept of a characteristic length scale over which an ascending convective element survives before it dissolves and delivers its surplus energy, first appears in the work of Biermann (1932, 1942, 1948) and Siedentopf (1935). In her influential 1953 paper, Vitense developed a model in which the essential transition region between radiative and convective zone was considered more accurately. In the improved form derived in her 1958 paper (Böhm-Vitense 1958), and in several variants thereof, the mixing length model of stellar convection is still the most widely applied prescription in investigations of stellar structure and evolution (cf. Weiss et al. 2004). This is true despite its shortcomings, some of which were already mentioned in the original paper of Biermann (1932).

1.1.3 Non-local models and large scale numerical simulations

From a computational point of view the “classical” approach to modelling amounts to greatly reducing the number of space dimensions from three to zero (in local models, where at each point one just has to solve some nonlinear algebraic equation) or from three to one (in non-local models, where a depth variable is retained and ordinary differential equations result). The time variable may either be discarded, as in mixing length theory and other local models of convection (Böhm-Vitense 1958; Canuto and Mazzitelli 1991; Canuto et al. 1996) or even some Reynolds stress models (Xiong 1985, 1986), or it may be retained, since that allows either finding a stationary solution more easily (Canuto and Dubovikov 1998) or making the theory applicable to pulsating stars, as in non-local mixing length models (Gough 1977a, b) or other non-local models developed for this purpose (Kuhfuß 1986; Xiong et al. 1997).

The need for non-local models of convection was motivated by two physical phenomena:

1. There is “overshooting” of convective flow into neighbouring, “stable” layers and thus mixing between the two.

2. In pulsating stars convection interacts with pulsation. Convection may cause pulsation, drive it or damp it, or convection may be modulated by pulsation (see Houdek and Dupret 2015, for a review).

For a while the existence of overshooting had remained a controversial issue, as can be seen from the introductory summary in Marcus et al. (1983) who provided their own model for this process. Disagreement concerned both the modelling approach [the modal approach of Latour et al. (1976) is an example for a method “between analytical modelling and numerical simulation”] and the existence and importance of the phenomenon. The latter was settled later on (Andersen et al. 1990; Stothers and Chin 1991), but the disagreement on how to model it remained (cf. the comparison in Canuto 1993). As for pulsating stars, the classical formalism clearly required an extension. This development progressed from non-local mixing length models by Unno (1967) and Gough (1977a, b) to ever more advanced models including the Reynolds stress approach (Xiong et al. 1997) (see again Houdek and Dupret 2015). While the latter was pioneered by Xiong (1978), the most complete models intended for applications in astrophysics were published in a series of papers by Canuto (1992, 1993, 1997a), Canuto and Dubovikov (1998) and Canuto (1999). Nevertheless, most frequently used in practice are probably the non-local models of convection by Stellingwerf (1982) and Kuhfuß (1986) in studies of pulsating stars.

We note that in parallel to the developments in astrophysics the need for a non-local description of convection has also been motivated by laboratory experiments on the relation of the heat flux (which is measured by the Nusselt number) as a function of Rayleigh number in Rayleigh–Bénard convection. A transition between “soft” (Gaussian distributed) and “hard” turbulence in such flows was noted (Heslot et al. 1987) followed by the demonstration of the existence of a large-scale, coherent flow (Sano et al. 1989). These experiments are no longer compatible with the assumption of a simple scaling relation which underlies also the local mixing length model used in astrophysics (cf. its derivation in Spruit 1992). A much more complex set of scaling relations is required (see Grossmann and Lohse 2000; Stevens et al. 2013 for an attempt on a unifying theory) just to describe the interior of a convective zone as a function of fluid parameters (viscosity, heat conductivity) and system parameters (heat flux, temperature gradient). Such experiments have also been made for cases which exhibit what astrophysicists call overshooting and usually refer to what meteorologists and oceanographers describe as “entrainment”: field experiments and laboratory models of the convective, planetary boundary layer of the atmosphere of the Earth began in the late 1960s and early 1970s, respectively. Precision laboratory data on overshooting were obtained in water tank experiments (Deardorff and Willis 1985) followed by similarly accurate, direct measurements in the planetary boundary layer by probes (Hartmann et al. 1997). In both scenarios a convective zone is generated by heating from below and the convective layer is topped by an entrainment layer. Successful attempts to explain these data required the construction of either large scale numerical simulations or complex, non-local models of convection (cf. Canuto et al. 1994; Gryanik and Hartmann 2002 as examples). 
Although it is encouraging, if a model of convection successfully explains such data, this does not imply it also works in the case of stars: the physical boundary conditions play a crucial role for convective flows and the terrestrial and laboratory cases fundamentally differ in this respect from the stellar case which features no solid walls but can be subject to strong (and non-local) radiative losses and in general occurs and interacts with magnetic fields.

Given the long-standing need for models of convection which are physically more complete than the classical models, it is surprising that the latter are still in widespread use. However, as we discuss in Sect. 3, none of these more advanced models can achieve its wider region of applicability without additional assumptions, and in most cases those cannot be obtained from first principles alone, i.e., from the Navier–Stokes equations we introduce in Sect. 2. Moreover, such models usually introduce considerable computational and analytical complexity and were difficult to test until more recently, when space-based helio- and asteroseismology on the observational side and advanced numerical simulations on the theoretical side became available. Furthermore, the traditional, integral properties of stars can easily be reproduced merely by adjusting free parameters of the classical models (for example, adjusting the mixing length to match the solar radius and luminosity, Gough and Weiss 1976).

However, the advent of computers has made it possible to solve, in principle, the “complete”, spatially multidimensional and time-dependent equations, often also for realistic microphysics and opacities and other physical ingredients as deemed necessary for the investigation at hand. Of course, in particular in early investigations, the space dimensionality had to be reduced to 2, microphysics had to be kept simple, and the like. But until today and for the foreseeable future it remains true that only a limited part of the relevant parameter range—in terms of achievable spatial resolution and computationally affordable time scales—is accessible in all astrophysically relevant cases.

Such numerical simulations require a style of work different from that of the papers we have cited up to now. Whereas papers devoted to classical modelling are often authored by just one person, numerical simulations practically always require researchers working in teams.

If we consider compressibility and coverage of a few pressure scale heights as the hallmark of many convection problems in stellar physics, the first simulations aiming at understanding what might be going on in stellar convection, such as Graham (1975) and Latour et al. (1976), date from the mid-1970s.

Quite soon two rather different avenues of research were followed in the modelling community. In the first strand of research interest focussed on solar (and later on stellar) granulation. The two-dimensional simulations of Cloutman (1979) are probably the earliest work in this direction. Indeed it took only a short time until the basic properties of solar granulation could be reproduced, in the beginning invoking the anelastic approximation (see Nordlund 1982, 1984). In contrast to many contemporary papers this pioneering work was already based on numerical simulations in three dimensions. The topic has since evolved in an impressive manner and includes now investigations of spectral lines and chemical abundances, generation of waves, magnetic fields, interaction with the chromosphere, among others. We refer to the review provided in Nordlund et al. (2009). Since it has been completed, important new results have been achieved, regarding excitation of pressure waves, local generation of magnetic fields, high resolution simulations, and others. An impression on recent advances in such areas can be gained, e.g., from Kitiashvili et al. (2013). These simulations require, in particular, a detailed treatment of the radiative transfer equation, since they involve the modelling of layers of the Sun or a star which directly emit radiation into space, i.e., which are optically thin.

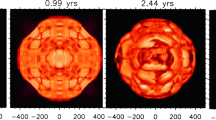

The second strand of investigations is directed more towards general properties of compressible convection and has stellar interiors in mind. As a consequence, it can treat radiative transfer in the diffusion approximation. Early work addressed modal expansions (Latour et al. 1976), but subsequently there was a trend towards using finite difference methods (Graham 1975; Hurlburt et al. 1984). In the course of time, the arsenal of numerical methods which were applied expanded considerably. Efforts to model a star as a whole or considering a spherical shell, where deep convection occurs, made it necessary to abandon Cartesian (rectangular) box geometry. Simulations based on spectral methods for spherical shells were hence developed (see, e.g., Gilman and Glatzmaier 1981). In the meantime, many investigations have addressed the question of convection under the influence of rotation (including dynamo action), the structure of the lower boundary of the convection zone in solar-like stars (tachocline), core convection in stars more massive than the Sun, and others. Figure 1 provides an example. A number of such recent advances are covered in Hanasoge et al. (2015).

Figure 1: Convection cells and differential rotation in simulations of an F-type star (spherical shell essentially containing the convective zone; near surface regions not included in the calculations). Rotation rate increasing from top to bottom. Left column: radial velocity of convective flows in the upper part of the computational domain. Following columns: rotation rate, temperature deviation from horizontal mean, and stream function of meridional flow. Differential rotation is clearly visible. Image reproduced with permission from Augustson et al. (2012), copyright by AAS.

A rather different form of convection, dubbed semiconvection, occurs during various evolutionary stages of massive stars. In an early paper Ledoux (1947) addressed the question of how a jump or gradient in molecular weight within a star would develop and derived what is today known as Ledoux criterion for convective instability. Semiconvection occurs when a star (at a certain depth) would be unstable against convection in the sense of the temperature gradient (Schwarzschild criterion) but stable considering the gradient in mean molecular weight which arises from dependency of chemical composition on depth (Ledoux criterion). In most of the literature the term semiconvection is restricted to the case where stability is predicted to hold according to the criterion of Ledoux (while instability is predicted by the Schwarzschild criterion), since both criteria coincide in the case of instability according to the Ledoux criterion which implies efficient mixing and no difference to the case without a gradient in chemical composition. Since application of one or the other of the criteria leads to different mixing in stellar models, calculations of stellar evolution are affected accordingly (see the critical discussion, also on computational issues, by Gabriel et al. 2014). In addition, thermal and “solute” (helium in stars; salt in the ocean) diffusivities play a role in the physics of the phenomena. Semiconvection gained interest with the advent of models of stellar evolution, specifically through a paper by Schwarzschild and Härm (1958). The interest was and is based on the material transport processes, and therefore of stellar mixing, thought to be effected by semiconvection. Unlike ordinary convection, where there existed and exists a standard recipe (in the form of mixing length theory) used in most stellar structure or evolution codes, such a recipe has not appeared for semiconvection (cf. Kippenhahn and Weigert 1994). 
Indeed, the semiconvection models which are used in studies of stellar physics are based on quite different physical pictures and arguments. Knowledge is in an early stage even considering the most basic physical picture. Likewise, numerical simulations referring to the astrophysical context have appeared only more recently. Early simulations which, however, remained somewhat isolated have been reported in Merryfield (1995) and Biello (2001). Only during the last few years activity has increased. For recent reviews on various aspects consult Zaussinger et al. (2013) and Garaud (2014) as well as the papers cited there (see also Zaussinger and Spruit 2013). The simulation parameters are far from stellar ones, but the ordering of the size of certain parameters is the same as in the true stellar case. Such simulations seem to consider a popular picture of semiconvection, many convecting layers, horizontally quite extended, vertically more or less stacked and divided by (more or less) diffusive interfaces (Spruit 1992), to be at least tenable, since it is possible to generate such layers also numerically with an, albeit judicious, choice of parameters.
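
The distinction between the two stability criteria described above can be made concrete with a small sketch. It assumes the standard textbook formulation in terms of the radiative and adiabatic temperature gradients, \(\nabla_{\mathrm{rad}}\) and \(\nabla_{\mathrm{ad}}\), and of the molecular weight gradient \(\nabla_\mu = \mathrm{d}\ln\mu/\mathrm{d}\ln P\), weighted by the thermodynamic derivatives \(\varphi\) and \(\delta\) (both equal to 1 for an ideal gas). The function name, argument names, and returned labels are hypothetical and not taken from any stellar evolution code, and the sketch only covers a stabilizing gradient, \(\nabla_\mu \ge 0\).

```python
def classify_layer(grad_rad, grad_ad, grad_mu=0.0, phi=1.0, delta=1.0):
    """Classify a stellar layer by the Schwarzschild and Ledoux criteria.

    Assumed (textbook) form of the criteria:
      Schwarzschild: unstable if grad_rad > grad_ad
      Ledoux:        unstable if grad_rad > grad_ad + (phi / delta) * grad_mu
    Only a stabilizing composition gradient (grad_mu >= 0) is handled.
    """
    if grad_mu < 0.0:
        raise ValueError("this sketch only covers grad_mu >= 0")

    ledoux_threshold = grad_ad + (phi / delta) * grad_mu

    if grad_rad <= grad_ad:
        # Stable according to both criteria.
        return "radiative"
    if grad_rad <= ledoux_threshold:
        # Schwarzschild-unstable but Ledoux-stable: the semiconvective case.
        return "semiconvective"
    # Unstable according to both criteria: efficient mixing is expected.
    return "convective"


if __name__ == "__main__":
    # Illustrative numbers only; they do not describe a particular star.
    for grad_rad in (0.30, 0.45, 0.60):
        print(grad_rad, classify_layer(grad_rad, grad_ad=0.40, grad_mu=0.10))
```

Without a composition gradient the two thresholds coincide, so the semiconvective branch can never be reached. This mirrors the remark above that the criteria only differ where the mean molecular weight varies with depth.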

1.2 Consequences and choosing the subjects for this review

Considering the advances made during the last decades, why is convection still considered an “unsolved problem” in stellar astrophysics? The reason is that although numerical simulations can nowadays provide answers to many of the questions posed in this context, they cannot be applied to all the problems of stellar structure, evolution, and pulsation modelling: this is simply unaffordable. At the same time, no computationally affordable model can fully replace such simulations: uncertainties they introduce are tied to a whole range of physical approximations and assumptions that have to be made in those models, and to the poor robustness of their results and to the lack of universality of the model specific parameters.

Instead of providing an extended compilation of the successes and failures of the available modelling approaches, in this review we rather want to shed some more light on the origins of these difficulties and on how some of them can be circumvented while others cannot. Our focus is hence, in a broad sense, on the computational aspects of modelling stellar convection. In particular, we want to provide an overview of which types of problems are accessible to numerical simulations now or in the foreseeable future and which ones are not. These questions are dealt with in Sect. 2 and motivate an overview in Sect. 3 of the state of the art of convection modelling based on various types of (semi-)analytical models, since at least for a number of key applications they cannot be replaced by numerical simulations. Multidimensional modelling and the available techniques are reviewed in the subsequent sections. The different variants of equations used in this context are reviewed in Sect. 4. There is a particular need for such a description, since a detailed summary of the available alternatives appears to be missing in the present literature, most clearly in the context of stellar astrophysics. We then proceed in Sect. 5 with an equally needed review of the available numerical methods along with their strengths and weaknesses. We conclude this review in Sect. 6 with a discussion of the limitations specific to the available modelling approaches. This section also addresses the hot topic of “parameter freeness”, which is always present in discussions on the modelling of convection. Indeed, it has kept alive one of the most vivid and arguably one of the least helpful discussions in stellar astrophysics held during the last few decades. An effort is hence made to disentangle the various issues related to this subject. 
The limitations, including that of claimed parameter freeness, are reviewed from both a more stringent and a more pragmatic point of view, and it is hoped that this can provide some help for future work on the subject of stellar convection. To keep the material for this review within manageable size, the topic of the interaction of convection with rotation and in particular with magnetic fields mostly had to be omitted. In a few cases we have provided further references to literature covering those topics, in particular with respect to the numerical methods for low-Mach-number flows discussed in Sect. 4. For a recent account of numerical magnetohydrodynamics, even in the more complex relativistic setting, we refer to Martí and Müller (2015).

2 What is the difficulty with convection?

2.1 The hydrodynamical equations and some solution strategies

What are the basic difficulties of modelling convection by analytical or numerical methods? To answer this question we first define the hydrodynamical equations which actually are just the conservation laws of mass, momentum, and energy of a fluid. In Sect. 4 we return to the numerical implications of their specific mathematical form.

The discovery of the hydrodynamical equations dates back to the eighteenth and nineteenth century. Analysis of the local dynamics of fluids eventually led to a set of partial differential equations which was proposed to govern the time development of a flow (see Batchelor 2000; Landau and Lifshitz 1963, e.g., for a derivation) for a fully compressible, heat conducting, single-component fluid. This was later on extended to include the forcing by magnetic fields. In the twentieth century the consistency of these equations with statistical mechanics and the limits of validity were demonstrated as well (Huang 1963; Hillebrandt and Kupka 2009, e.g.). A huge number of successful applications has established their status as fundamental equations of classical physics. Under their individual names they are known as continuity equation, Navier–Stokes equation (NSE), and energy equation and they describe the conservation of mass, momentum, and total energy. The term NSE is also assigned to the whole system of equations. In the classical, non-relativistic limit they read (we write \(\partial _t f\) instead of \(\partial f / \partial t\) for the partial derivative in time of a function f here and in the following):

\[
\partial_t \rho + \mathrm{div}\,(\rho \varvec{u}) = 0, \tag{1}
\]

\[
\partial_t (\rho \varvec{u}) + \mathrm{div}\,\left(\rho\, \varvec{u} \otimes \varvec{u}\right) = -\mathrm{div}\,\varvec{\varPi} - \rho\, \mathrm{grad}\,\varPhi, \tag{2}
\]

\[
\partial_t (\rho E) + \mathrm{div}\,\left(\rho E\, \varvec{u}\right) = -\mathrm{div}\,\left(\varvec{\varPi} \varvec{u}\right) - \rho\, \varvec{u} \cdot \mathrm{grad}\,\varPhi + q_{\mathrm{source}}, \tag{3}
\]

where \(q_{\mathrm{source}} = q_{\mathrm{rad}} + q_{\mathrm{cond}} + q_{\mathrm{nuc}}\) is the net production of internal energy in the fluid due to radiative heat exchange, \(q_{\mathrm{rad}}\), thermal conduction, \(q_{\mathrm{cond}}\), and nuclear processes, \(q_{\mathrm{nuc}}\). At this stage of modelling they are functions of the independent variables of (1)–(3), the spatial location \(\varvec{x}\) and time t. The same holds for the dependent variables of this system, \(\rho , \varvec{\mu }= \rho \varvec{u}\), and \(e = \rho E\), i.e., the densities of mass, momentum, and energy. We note that \(\varvec{u} \otimes \varvec{u}\) is the dyadic product of the velocity \(\varvec{u}\) with itself and \(E=\varepsilon + \frac{1}{2}|\varvec{u}|^2\) is the total (sum of internal and kinetic) specific energy, each of them again functions of \(\varvec{x}\) and t. The quantities \(q_{\mathrm{rad}}\) and \(q_{\mathrm{cond}}\) can be written in conservative form as \(q_{\mathrm{rad}} = -\mathrm{div}\,\varvec{f}_{\mathrm{rad}}\) and \(q_{\mathrm{cond}} = -\mathrm{div}\,\varvec{h}\), where \(\varvec{f}_{\mathrm{rad}}\) is the radiative flux and \(\varvec{h}\) the conductive heat flux whereas \(q_{\mathrm{nuc}}\) remains as a local source term. Inside stars the mean free path of photons is about 2 cm and along this distance in radial direction the temperature changes are only of the order of \({\sim } 3\times 10^{-4}\) K (see Kippenhahn and Weigert 1994). This justifies a diffusion approximation for the radiative flux \(\varvec{f}_{\mathrm{rad}}\) which avoids solving an additional equation for radiative transfer. Indeed, the diffusion approximation is exactly the local limit of that equation (Mihalas and Mihalas 1984) and it reads

\[
\varvec{f}_{\mathrm{rad}} = -K_\mathrm{rad}\, \mathrm{grad}\, T, \tag{4}
\]

where \(T=T(\rho ,\varepsilon ,\hbox {chemical composition})\) is the temperature and \(K_\mathrm{rad}\) is the radiative conductivity,

\[
K_\mathrm{rad} = \frac{4 a c\, T^3}{3 \kappa \rho} = \frac{16 \sigma T^3}{3 \kappa \rho}. \tag{5}
\]

The quantities a, c, and \(\sigma \) are the radiation constant, vacuum speed of light, and Stefan–Boltzmann constant, while \(\kappa \) is the Rosseland mean opacity (see Mihalas and Mihalas 1984; Kippenhahn and Weigert 1994). \(\kappa \) is the specific cross-section of a gas for photons emitted and absorbed at local thermodynamical conditions (local thermal equilibrium) integrated over all frequencies (thus, \([\kappa ]= \mathrm{cm}^{2}\,\mathrm{g}^{-1}\) and \(\kappa =\kappa (\rho ,T,\hbox {chemical composition})\), see Table 1). The heat flux due to collisions of particles can be accurately approximated by the diffusion law

\[
\varvec{h} = -K_\mathrm{h}\, \mathrm{grad}\, T, \tag{6}
\]

where \(K_\mathrm{h}\) is the heat conductivity (cf. Batchelor 2000). In stars, radiation is usually much more efficient for energy transport than heat conduction. This is essentially due to the large mean free path of photons in comparison with those of ions and electrons. Conditions of very high densities are the main exception. These are of particular importance for modelling the interior of compact stars (see Weiss et al. 2004). A derivation of the hydrodynamical equations for the case of special relativity, in particular for the more general case where the fluid flow is coupled to a radiation field, is given in Mihalas and Mihalas (1984). The latter also give a detailed discussion of the transition between classical Galilean relativity, a consistent treatment containing all terms of order \(\mathrm{O}(v/\mathrm{c})\) for velocities v no longer much smaller than the speed of light c, and a completely co-variant treatment as obtained from general relativity. For an account of the theory of general relativistic flows see Lichnerowicz (1967), Misner et al. (1973) and Weinberg (1972), and further references given in Mihalas and Mihalas (1984). Numerical simulation codes used in astrophysical applications, in particular for the modelling of stellar convection, usually implement only a simplified set of equations when dealing with radiative transfer: typically, the fluid is assumed to have velocities \(v \ll \,\mathrm{c}\), whence the intensity of light can be computed from the stationary limit of the radiative transfer equation (see Chap. 2 in Weiss et al. 2004). The solution of that equation allows the computation of \(\varvec{f}_{\mathrm{rad}}\) and the radiative pressure \(p_{\mathrm{rad}}\) to which we return below.
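
The order-of-magnitude argument for the diffusion approximation and the radiative conductivity entering it can be checked with a short sketch. It assumes the standard form \(K_\mathrm{rad} = 4acT^3/(3\kappa\rho)\) with \(a = 4\sigma/c\). The opacity, density, central temperature, and radius used below are rough, illustrative solar values chosen for this example and are not taken from the text. All function names are hypothetical.

```python
# CGS constants (CODATA values, rounded).
SIGMA_SB = 5.670374e-5            # Stefan-Boltzmann constant [erg cm^-2 s^-1 K^-4]
C_LIGHT = 2.99792458e10           # vacuum speed of light [cm s^-1]
A_RAD = 4.0 * SIGMA_SB / C_LIGHT  # radiation constant a = 4 sigma / c [erg cm^-3 K^-4]


def photon_mean_free_path(density, opacity):
    """Mean free path 1 / (kappa * rho) in cm."""
    return 1.0 / (opacity * density)


def radiative_conductivity(temperature, density, opacity):
    """Assumed standard form K_rad = 4 a c T^3 / (3 kappa rho)."""
    return 4.0 * A_RAD * C_LIGHT * temperature**3 / (3.0 * opacity * density)


def temperature_change(path_cm, t_center=1.0e7, radius_cm=6.96e10):
    """Temperature change along path_cm for a mean gradient T_center / R."""
    return path_cm * t_center / radius_cm


if __name__ == "__main__":
    # Assumed illustrative values: kappa ~ 0.4 cm^2/g, mean solar density ~ 1.4 g/cm^3.
    mfp = photon_mean_free_path(density=1.4, opacity=0.4)
    print(f"photon mean free path ~ {mfp:.1f} cm")

    # Temperature change over the 2 cm quoted in the text.
    print(f"temperature change over 2 cm ~ {temperature_change(2.0):.1e} K")

    k_rad = radiative_conductivity(temperature=1.0e7, density=1.4, opacity=0.4)
    print(f"K_rad ~ {k_rad:.2e} erg cm^-1 s^-1 K^-1")
```

With these assumed inputs the mean free path comes out at a few centimetres and the temperature change along it at a few times \(10^{-4}\) K, consistent with the orders of magnitude quoted above. The radiation field is therefore very nearly isotropic locally, which is what the diffusion approximation relies on.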

For numerical simulation of stellar convection Eqs. (1)–(6) are often augmented by a partial differential equation for the time evolution of the (divergence free) magnetic induction \(\varvec{B}\) which also couples into the conservation laws for momentum and energy of the fluid, (2)–(3). A derivation of these equations and an introduction to magnetohydrodynamics can be found, for example, in Landau and Lifshitz (1984) and Biskamp (2008). Like the Navier–Stokes equations these can also be derived from the more general viewpoint of statistical physics (Montgomery and Tidman 1964) which allows recognizing their limits of applicability. For the remainder of this review we restrict ourselves to the classical, non-relativistic limit, (1)–(6), without a magnetic field. The radiative flux is obtained from the diffusion approximation (for the case of optically thin regions at stellar surfaces, which occurs in a few examples, it is assumed to be obtained from solving the stationary limit of the radiative transfer equation, see Weiss et al. 2004).

Returning to Eqs. (1)–(6) we note that internal forces per unit volume, given by the divergence of the pressure tensor \(\varvec{\varPi }\), can be split into an isotropic part and an anisotropic one. The latter originates from viscous stresses. The isotropic part is just the mechanical pressure. It equals the gas pressure p from an equation of state, \(p=p(\rho ,T,\hbox {chemical composition})\), if extra contributions arising from compressibility are collected into the second or bulk viscosity, \(\zeta \) (see Batchelor 2000, for a detailed explanation). Thus,

where \({{\varvec{I}}}\) is the unit tensor with its components given by the Kronecker symbol \(\delta _{ik}\) and the components of the tensor viscosity \(\varvec{\pi }\) are given by (as for time t we abbreviate \(\partial f / \partial x_j\) by \(\partial _{x_j} f\))

The dynamical viscosity \(\eta \) is related to the kinematic viscosity \(\nu \) by \(\eta = \nu \rho \). Similar to \(\kappa \) the quantities \(\nu \) and \(\zeta \) are functions of the thermodynamical variables \(\rho , T\) (or \(\varepsilon \)), and chemical composition. Because \(\varvec{\pi }\) is a tensor of rank two, a quantity such as \(\varvec{\pi } \varvec{u}\) refers to the contraction of \(\varvec{\pi }\) with the vector \(\varvec{u}\). Note that (8) is linear in \(\varvec{u}\) which is an approximation sufficiently accurate for essentially all fluids of interest to astrophysics. A detailed derivation of (7)–(8) is given in Batchelor (2000).

To model stellar conditions Eq. (2) has to be modified, since photons can transport a significant amount of momentum. This mechanical effect is represented by the radiation pressure tensor \(P^{ij}\) (see Mihalas and Mihalas 1984) which is coupled into Eqs. (2)–(3). For isotropic radiation this problem can be simplified since in that case \(P^{ij}\) can be written as the product of a scalar radiation pressure \(p_{\mathrm{rad}}\) and the unit tensor \({{\varvec{I}}}\). Because the contribution of \(\mathrm{div}\,(p_{\mathrm{rad}} {{\varvec{I}}})\) in (2) is additive, it is possible to absorb \(p_{\mathrm{rad}}\) into the term for the gas pressure and treat it as part of the equation of state (\(p_{\mathrm{rad}} = (1/3) a T^4\), see Weiss et al. 2004; Mihalas and Mihalas 1984). Such a procedure is exact at least as long as the diffusion approximation holds (Mihalas and Mihalas 1984) and allows retaining (2)–(3) in their standard form, a result of great importance for the modelling of stellar structure and evolution.

Finally, the gradient of the potential of external forces, \(\phi \), has to be specified. The coupling of magnetic fields in magnetohydrodynamics as well as Coriolis forces in co-rotating coordinate systems could be considered as external forces. However, the external potential itself is usually just due to gravitation, where \(\varvec{g} = -\mathrm{grad}\,\varPhi \). Equations (2)–(3) are thus rewritten as follows:

As implied by the discussion above, here p is usually meant as the sum of gas and radiation pressure and supposed to be given by the equation of state (cf. Sect. 6.3, 6.4, and 11 in Weiss et al. 2004). The gravitational acceleration \(\varvec{g} = -\mathrm{grad}\,\varPhi \) is the solution of the Poisson equation \(\mathrm{div}\,\mathrm{grad}\,\varPhi = 4\pi \mathrm{G}\,\rho \), where G is the gravitational constant. Since in all cases of interest here \(q_\mathrm{nuc}\) is a function of local thermodynamic parameters (\(\rho , T\), chemical composition, cf. Kippenhahn and Weigert 1994), we find that Eqs. (1), (9) and (10) together with (4)–(6) and (8) form a closed system of equations provided the material functions for \(\kappa , K_\mathrm{h}, \nu , \zeta \), and the equation of state are known as well.

2.1.1 Solution strategies

While (1)–(10) have been known for a long time, analytical solutions or even just proofs of existence of a unique solution have remained restricted to rather limited, special cases. So how should we proceed to use the prognostic and diagnostic capabilities of these equations? One possibility is to construct approximate solutions by means of numerical methods. We focus on this approach in Sects. 4 and 5. An alternative is to first approximate the basic equations themselves. Famous examples of this approach are the Boussinesq approximation, stationary solutions, or the Stokes equations for viscous flows (see Batchelor 2000; Quarteroni and Valli 1994, e.g., and Sect. 4.3.1 below). The equations of stellar structure for the fully radiative case with no rotation (cf. Kippenhahn and Weigert 1994; Weiss et al. 2004) provide another example. The latter can also be obtained from models of the statistical properties of the flow (see below). In most cases simplified variants of the basic equations also require numerical methods for their solution. This is still advantageous as long as such approximate solutions are computationally less demanding (cf. Sect. 5) than numerical solutions of (1)–(10).

Another possibility is the construction of a different type of mathematical model which describes properties of the hydrodynamical equations. Staying most closely to the original equations are model equations for volume averages of (1)–(10). This is quite a natural approach, since each astrophysical observation also has a finite resolution in space, time, and energy, and in this sense refers to a volume average. Numerical solutions constructed with this goal in mind are usually termed large eddy simulations (LES), although slightly different names are used to denote specific variants, for instance, iLES and VLES, abbreviations for implicit large eddy simulations and very large eddy simulations. The former refer to numerical solution methods for (1)–(10) where the numerical viscosity inherent to the solution scheme has the role of representing all effects operating on length scales smaller than the grid of spatial discretization used with the method. The latter implies that a significant amount of kinetic energy and dynamical interaction resides and occurs on such “unresolved” (“sub-grid”) length scales. An introduction to LES can be found in Pope (2000). In astrophysics it is common to make no clear distinction between such calculations and direct numerical simulations (DNS). The latter actually refers to numerical approximations of (1)–(10) which do not assume any additional (statistical, physical) properties of the local volume average of the numerical solution to hold: all length scales of physical interest are hence resolved in such calculations, a requirement typically only fulfilled for mildly turbulent laboratory flows, viscous flows, and some idealized setups as used in numerical studies of the basic properties of (1)–(10). 
On the other hand, in “hydrodynamical simulations” of astrophysical objects it is often (implicitly) assumed that numerical viscosity of a scheme used to construct an approximate solution with moderate spatial resolution mimics the spatially and temporally averaged solution which is obtained with the same scheme at much higher resolution. Such simulations are actually iLES and hence clearly fall into the category of LES. We return to this subject further below.

Since an LES approach may be unaffordable or difficult to interpret or compare with observational data, further physical modelling is often invoked to derive mathematical model equations that are more manageable. A classical example is the set of standard equations of stellar structure and evolution (cf. Kippenhahn and Weigert 1994; Weiss et al. 2004) which account for both radiative and convective energy transport. These equations are actually mathematical models for statistical properties of Eqs. (1)–(10). More generally, ensemble averaging can be used to construct model equations, for instance, for some mean density \({\overline{\rho }}\) and mean temperature \(\overline{T}\). The most common averages are one-point averages such as the Reynolds stress approach (see Sect. 3.3) which model statistical distributions that are functions of location and time only (cf. Lesieur 1997 and, in particular, Pope 2000). In turbulence modelling two-point averages are popular as well (see also Sect. 3.3). They deal with distribution functions that depend on the distance (or difference of locations) in addition to their spatial and temporal dependencies (Lesieur 1997; Pope 2000). The ensemble averaged approach requires additional closure assumptions to construct complete sets of model equations.

Because the closure assumptions cannot be derived from (1)–(10) alone, alternatives have been sought for a long time. The coherent structures found in turbulent flows have been interpreted as a hint that geometrical properties may be taken as a guideline towards a new modelling approach (cf. Lumley 1989). When comparing such ambitious goals with more recent introductions into the subject of turbulent flows (Pope 2000; Tsinober 2009), progress achieved along this route is more modest than one might have expected one or two decades earlier (Lumley 1989). Interestingly, the analysis of structural properties of turbulent convection, for instance, has led to improved models of its statistical properties (Gryanik and Hartmann 2002; Gryanik et al. 2005), a nice example for why Tsinober (2009) has listed the idea that “statistics” and “structure” contrapose each other among the common misconceptions about turbulent flows.

Replacing the NSE at the fundamental level by a discrete approach was already proposed several decades ago (see the discussion in Lumley 1989), for instance, through the concept of cellular automata (cf. Wolf-Gladrow 2000). Today the Lattice Boltzmann Methods (LBM) have become a common tool particularly in engineering applications, but rather as a replacement of LES or direct numerical simulations instead of becoming an approach for more theoretical insights (for an introduction see, e.g., Succi 2001). For the study of fluids in astrophysics the smoothed particle hydrodynamics (SPH) method has become the most successful among the discrete or particle-based concepts to model fluid dynamics (for a general introduction, see, e.g., Violeau 2012). SPH may be seen as a grid-free method to solve the NSE and in this sense again it is rather an alternative to (grid-based) numerical solutions of (1)–(10) and not an analytical tool. Until now these discrete methods, however, have found little interest for the modelling of convection in astrophysics or geophysics. This is presumably because for many physical questions asked in this context there is not so much benefit from having very high local resolution at the expense of low resolution elsewhere. In Sects. 4 and 5 we discuss how grid-based methods can also deal with strong stratification, which may require high spatial resolution in a limited domain, and with non-trivial boundary conditions as well.

A completely different goal has been suggested with the introduction of stochastic simulations of the multi-scale dynamics of turbulence (cf. Kerstein 2009). Contrary to LBM it does not evolve probability density functions, i.e., properties of particles, nor does it require the definition of kernels to model interactions as in SPH. Rather, stochastic maps for the evolution of individual particles are introduced which realize the interactions themselves. Clearly, at the largest scale and in three spatial dimensions, such an approach would become unaffordable. But it appears highly suitable for constructing subgrid-scale models for conventional LES (see Kerstein 2009). This holds particularly if the exchange of information (on composition, internal energy, etc.) is complex and crucial for the correct dynamics of the investigated system at large scales, for instance, in combusting flows.

2.2 Spatial grids for numerical simulations of stellar convection

2.2.1 Constructing a grid for simulating the entire solar convection zone

How expensive would a hydrodynamical simulation of an entire stellar convection zone or of a star as a whole be? Let us first have a look at the spatial scales of interest in a star, specifically our Sun. Adapting these estimates to other stars is simple and does not change the basic arguments.

The solar radius has been measured to be \(R_{\odot } \sim 695{,}500\,\mathrm{km}\) (cf. Brown and Christensen-Dalsgaard 1998 and Chap. 18.4c in Weiss et al. 2004). From helioseismology the lower boundary of the solar convection zone is \(R / R_{\odot } \sim 0.713\) (Weiss et al. 2004, cf. Bahcall et al. 2005). The solar convection zone reaches the observable surface where \(R / R_{\odot } \sim 1\). Its depth is hence about \(D \sim 200{,}000\,\mathrm{km}\) considering overall measurement uncertainties (see also the comparison in Table 4 of Christensen-Dalsgaard et al. 1991). Differences of D of up to 10% are not important for most of the following. Another important length scale is given by solar granules which are observed to have typical sizes of about \(L_\mathrm{g} \sim 1200 \ldots 1300\,\mathrm{km}\). Measurements made from such observations have spatial resolutions as small as \({\sim }35\,\mathrm{km}\) (cf. Spruit et al. 1990; Wöger et al. 2008). By comparison the highest resolution LES of solar convection in three dimensions, which has been published thus far (Muthsam et al. 2011), has achieved a horizontal and vertical resolution of \(h \sim 3\,\mathrm{km}\). But this high resolution was limited to a region containing one granule and its immediate surroundings (Muthsam et al. 2011, regions further away were simulated at lower resolution).

This value of h is orders of magnitude larger than the Kolmogorov scale \(l_\mathrm{d}\) which quantifies length scales where viscous friction becomes important (cf. Lesieur 1997; Pope 2000). \(l_\mathrm{d}\) can be constructed with dimensional arguments from the kinetic energy dissipation rate \(\epsilon \) and the kinematic viscosity as \(l_\mathrm{d} = (\nu ^3 \epsilon ^{-1})^{1/4}\). Due to the conservative form of (1)–(10) production of kinetic energy has to equal its dissipation. For the bulk of the solar convection zone, where most of the energy transport is by convection and no net energy is produced locally in the same region, one can estimate \(\epsilon \) from the energy flux through the convection zone (see Canuto 2009) as \(\epsilon \sim L_{\odot } / M_{\odot } \approx 1.9335\,\mathrm{cm}^2\,\mathrm{s}^{-3} \sim \,\mathrm{O}(1)\,\mathrm{cm}^2\,\mathrm{s}^{-3}\) using standard values for solar luminosity and mass (see Chap. 18.4c in Weiss et al. 2004, and references therein). From solar models (e.g., Stix 1989; Weiss et al. 2004) temperature and density as functions of radius can be estimated. With Chapman’s result (1954) on kinematic viscosity of fully ionized gases, \(\nu = 1.2 \times 10^{-16} T^{5/2} \rho ^{-1}\,\mathrm{cm}^2\,\mathrm{s}^{-1}\), \(\nu \) is found in the range of 0.25–\(5\,\mathrm{cm}^{2}\,\mathrm{s}^{-1}\), whence \(l_\mathrm{d} \approx 1\,\mathrm{cm}\) throughout most of the solar convection zone (Canuto 2009). Near the solar surface the fluid becomes partially ionized. From Tables 1 and 2 in Cowley (1990) \(\nu \) is found in the range of 145 cm\(^2\) s\(^{-1}\) to 1740 cm\(^2\) s\(^{-1}\) for T between 19,400 and 5660 K. Thus, just at and underneath the solar surface, \(\nu \sim 10^3\,\mathrm{cm}^2\,\mathrm{s}^{-1}\) whence \(l_\mathrm{d} \approx 1\,\mathrm{m} \ldots 2\,\mathrm{m}\) in the top layers of the solar convection zone.
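The order-of-magnitude estimate above can be retraced numerically. The following is a minimal sketch, assuming commonly quoted CGS values for the solar luminosity and mass (the constants `L_SUN` and `M_SUN` and the function names are illustrative and not taken from the cited works):

```python
# Sketch: Kolmogorov scale l_d = (nu^3 / epsilon)^(1/4) for solar conditions.
# L_SUN and M_SUN are assumed reference values in CGS units.
L_SUN = 3.846e33  # erg / s
M_SUN = 1.989e33  # g


def dissipation_rate(luminosity=L_SUN, mass=M_SUN):
    """Estimate epsilon ~ L / M in cm^2 s^-3."""
    return luminosity / mass


def kolmogorov_scale(nu, epsilon):
    """Return l_d in cm for nu in cm^2 s^-1 and epsilon in cm^2 s^-3."""
    return (nu**3 / epsilon) ** 0.25


epsilon = dissipation_rate()
# Viscosities quoted in the text: 0.25 to 5 (interior), about 1e3 (surface).
for nu in (0.25, 5.0, 1.0e3):
    print(f"nu = {nu:8.2f} cm^2/s  ->  l_d = {kolmogorov_scale(nu, epsilon):8.2f} cm")
```

With these inputs the interior values come out at a fraction of a centimetre to a few centimetres, and the surface value at roughly 1.5 m, in line with the ranges stated in the text.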

Another length scale of interest is the thickness of radiative thermal boundary layers. We note that the designation “boundary layer” strictly speaking refers to the geophysical and laboratory scenario where heat enters the system through a solid horizontal plate (a scenario also used in idealized numerical simulations with astrophysical applications such as Hurlburt et al. 1994; Muthsam et al. 1995, 1999; Chan and Sofia 1996, e.g.). However, the same length scale \(\delta \) is equally important for convection zones without “solid boundaries” in the vertical direction, since it describes the length scale below which temperature fluctuations are quickly smoothed out by radiative transfer (in the diffusion approximation) and we use this notion for its definition (Footnote 1). It is thus the length scale to be resolved for an accurate computation of the thermal structure and radiative cooling processes. Taking the diffusivity of momentum described by \(\nu \) as a reference, the Prandtl number \(\mathrm{Pr} = \nu / \chi \) for the solar convection zone is in the range of \(10^{-9}\) near the surface and increases inwards to about \(10^{-7}\) (see Sect. 4.1 in Kupka 2009b, for details). Since for diffusion equations one can relate the mean free path l, the diffusivity d, and the collision time t to each other through \(t \approx l^2/d\) (cf. Chap. 18.3a in Weiss et al. 2004), we can compare heat and momentum transport on the same reference time scale. This may be, for instance, the time scale of convective transport over the largest length scales appearing in a certain depth of the convective zone (the size of granules, e.g.). During this amount of time heat diffusion transports heat over a distance \(\delta = \sqrt{\chi t_\mathrm{ref}}\). Since this choice for \(t_\mathrm{ref}\) is also the time scale during which kinetic energy is dissipated (cf. Sects. 
1 and 2 in Canuto 2009), we may use it to compare \(\delta ^2\) with \(l_\mathrm{d}^2\) and obtain \(\delta ^2 / l_\mathrm{d}^2 \sim \chi / \nu =\,\mathrm{Pr}^{-1}\) (note that the time scale cancels out in this ratio). Thus, \(\delta \sim \,\mathrm{Pr}^{-1/2}\,l_\mathrm{d}\) and for the lower part of the solar convection zone, \(\delta _\mathrm{min} \sim 30\,\mathrm{m}\) while near the surface (Footnote 2), \(\delta _\mathrm{surface} \sim 30\,\mathrm{km} \ldots 60\,\mathrm{km}\). We note that near the solar surface, \(\chi \) varies rapidly and in the solar photosphere the fluid becomes optically thin, but for a rough estimate of scales these approximations are sufficient. Indeed, the steepest gradients in the solar photosphere are found just where the fluid has already become optically thick and \(\delta _\mathrm{surface}\) is thus closely related to the resolution used in LES of solar (stellar) surface convection, as we discuss below.
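The scaling \(\delta \sim \mathrm{Pr}^{-1/2}\,l_\mathrm{d}\) can be checked against the numbers quoted above. The following is a minimal sketch which takes \(l_\mathrm{d}\) and \(\mathrm{Pr}\) from the text as inputs (the function name is hypothetical):

```python
import math


def thermal_scale(l_d_cm, prandtl):
    """Return delta ~ Pr^(-1/2) * l_d in cm."""
    return l_d_cm / math.sqrt(prandtl)


# Lower convection zone: l_d ~ 1 cm, Pr ~ 1e-7.
delta_min = thermal_scale(1.0, 1.0e-7)
# Near the surface: l_d ~ 1 m ... 2 m, Pr ~ 1e-9.
delta_surface_low = thermal_scale(100.0, 1.0e-9)
delta_surface_high = thermal_scale(200.0, 1.0e-9)

print(f"delta_min     ~ {delta_min / 1.0e2:.0f} m")
print(f"delta_surface ~ {delta_surface_low / 1.0e5:.0f} km ... "
      f"{delta_surface_high / 1.0e5:.0f} km")
```

The results, roughly 30 m for the deep layers and about 30 km to 60 km near the surface, agree with the estimates given in the text.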

Evidently, it is hopeless to try to resolve the Kolmogorov scale, as required by a DNS, in a simulation which encompasses the whole solar convection zone: this would require about \(N_\mathrm{r}(l_\mathrm{d}) \sim 2\times 10^{10}\) grid points in radial direction. With D and \(R_{\odot }\) the solar convection zone is estimated to have a volume of \(V \sim 9\times 10^{32}\,\mathrm{cm}^3\) which, at one grid point per \(l_\mathrm{d}^3 \approx 1\,\mathrm{cm}^3\), yields the number of required grid points, \(N_\mathrm{tot}(l_\mathrm{d}) \sim 9\times 10^{32}\) (a simulation of the total solar volume exceeds this by less than 60%). Even before taking time integration into account, it is clear that such a calculation is out of reach on semiconductor based computers (irrespective of whether a DNS is considered to be really necessary or not).
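The volume and grid point counts above follow from elementary geometry. A minimal sketch, using the solar radius and the fractional radius of the base of the convection zone quoted earlier in this section (variable and function names are illustrative):

```python
import math

R_SUN_CM = 6.955e10     # solar radius from the text, in cm
BASE_FRACTION = 0.713   # lower boundary of the convection zone, R / R_sun
L_D_CM = 1.0            # Kolmogorov scale in the bulk of the zone, in cm


def shell_volume(r_outer, r_inner):
    """Volume of a spherical shell in cm^3."""
    return 4.0 / 3.0 * math.pi * (r_outer**3 - r_inner**3)


depth = (1.0 - BASE_FRACTION) * R_SUN_CM
v_shell = shell_volume(R_SUN_CM, BASE_FRACTION * R_SUN_CM)
v_sun = shell_volume(R_SUN_CM, 0.0)

n_radial = depth / L_D_CM        # grid points along the radius
n_total = v_shell / L_D_CM**3    # one grid point per l_d^3

print(f"depth D   ~ {depth / 1.0e5:.0f} km")
print(f"N_r(l_d)  ~ {n_radial:.1e}")
print(f"V         ~ {v_shell:.1e} cm^3, N_tot(l_d) ~ {n_total:.1e}")
print(f"full Sun exceeds the shell by {100.0 * (v_sun / v_shell - 1.0):.0f}%")
```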

The odds are not much better for a simulation to resolve \(\delta \) throughout the solar convection zone, since this requires a grid with \(h = \min (\delta ) = \delta _\mathrm{min} \sim 30~\mathrm{m}\). That resolution is coarser by a factor of 3000 which reduces the number of (roughly equidistantly spaced) grid points by a factor of \(2.7\times 10^{10}\) to \(N_\mathrm{tot}(\delta _\mathrm{min}) \sim 3.3\times 10^{22}\) for the solar convective shell. If we take ten double words to hold the five basic variables \(\rho , \mu , e\) and the derived quantities \(\varvec{u}, T, P\) for each grid cell volume, we obtain a total of 80 bytes as a minimum storage requirement per grid point (most hydrodynamical simulation codes require at least five to ten times that amount of memory). We can thus estimate a single snapshot to take 2.6 YB (Yottabyte, \(1\,\mathrm{YB} = 10^{24}\) bytes) of main memory. This exceeds current supercomputer memory capacities by seven to eight orders of magnitude.

As a minimum requirement for an LES of the entire solar convection zone one would like to resolve at least the radiative cooling layer near the surface. This is necessary to represent the radiative cooling of gas at the solar surface within the simulation on the computational grid, as it is the physical process which drives the entire convective flow (cf. Spruit et al. 1990; Stein and Nordlund 1998). From our previous estimates we would expect that \(h = \min (\delta _\mathrm{surface})/2 \sim 15\,\mathrm{km}\), because two grid points are the minimum to represent a feature in a solution and thus catch a property of interest related to it.

Indeed, \(h \lesssim 15\,\mathrm{km}\) is the standard resolution of LES of solar granulation (see Table 3 in Beeck et al. 2012 and Sect. 4 of Kupka 2009a). Then, radiative cooling is properly modelled, i.e., at \(h = 12\,\mathrm{km}\) the horizontal average of the vertical radiative flux \(F_\mathrm{rad}\) is found to be smooth in a one-dimensional numerical experiment even though its negative divergence, the cooling rate \(q_\mathrm{rad}\), would require a ten times higher resolution (Nordlund and Stein 1991). From the same calculations T is found to change by up to \(190\,\mathrm{K}\,\mathrm{km}^{-1}\) which at 10,000 K and at \(h = 12\,\mathrm{km}\) is a relative change of \({\sim }23\%\) between two grid cells. In actual LES of about that resolution (Stein and Nordlund 1998) T changes vertically on average only by up to \(30\,\mathrm{K}\,\mathrm{km}^{-1}\) and in hot upflows by up to \(100\,\mathrm{K}\,\mathrm{km}^{-1}\), a relative change less than 4% (or up to 12% where it is steepest). As the maximum mean superadiabatic gradient \(\partial \ln T / \partial \ln P\) is found to be about 2/3 in these simulations (Rosenthal et al. 1999), the corresponding changes in P between grid cells are up to 6% on average and 18% at most. Hence, the basic thermodynamical variables are resolved on such a grid even though opacity \(\kappa \) and thus optical depth \(\tau \) and the cooling rate \(q_\mathrm{rad}\) vary more rapidly by up to an order of magnitude due to the extreme temperature sensitivity of opacity in the region of interest (Nordlund and Stein 1991).

The actual resolution demands are somewhat higher than the simple estimate of \(h \approx 15\,\mathrm{km}\) which is anyway limited to regions where the approximation of radiative diffusion holds (optical depths \(\tau \gtrsim 10\)). Since it appears to be sufficient in practice (cf. Beeck et al. 2012; Kupka 2009b) we use it for the following estimate. By lowering the number of grid points along the solar convection zone in radial direction to \(N_\mathrm{r}(\delta _\mathrm{surface}) \sim 13{,}000\) we obtain that \(N_\mathrm{tot}(\delta _\mathrm{surface}) {\sim }2.7\times 10^{14}\) or \({\sim }4.3\times 10^{14}\) for the Sun as a whole. For grids of this size it is becoming possible to store one snapshot of the basic variables in the main memory of a supercomputer, if the entire capacity of the machine were used for this purpose: a minimum amount of 80 bytes per grid cell requires 21.6 PB (or 34.4 PB, respectively), although production codes are more likely to require several 100 PB – 1 EB for such applications.
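The memory estimates for the two equidistant grids discussed so far can be reproduced with a few lines. This is a minimal sketch under the assumptions stated in the text (a shell volume of about \(9\times 10^{32}\,\mathrm{cm}^3\) and 80 bytes per grid point); the helper names are illustrative:

```python
BYTES_PER_POINT = 80   # ten 8-byte double words per grid point, as in the text
V_SHELL_CM3 = 9.0e32   # volume of the solar convection zone from the text


def grid_points(volume_cm3, spacing_cm):
    """Number of cells of an equidistant grid filling the given volume."""
    return volume_cm3 / spacing_cm**3


def snapshot_bytes(n_points, bytes_per_point=BYTES_PER_POINT):
    """Minimum storage for one snapshot of the basic variables."""
    return n_points * bytes_per_point


n_deep = grid_points(V_SHELL_CM3, 30.0e2)     # h = 30 m
n_surface = grid_points(V_SHELL_CM3, 15.0e5)  # h = 15 km

print(f"h = 30 m : N_tot ~ {n_deep:.1e}, "
      f"snapshot ~ {snapshot_bytes(n_deep) / 1e24:.1f} YB")
print(f"h = 15 km: N_tot ~ {n_surface:.1e}, "
      f"snapshot ~ {snapshot_bytes(n_surface) / 1e15:.0f} PB")
```

Small differences from the figures in the text (2.6 YB and 21.6 PB) arise only from rounding of the intermediate grid point counts.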

One can further reduce this resolution demand by taking into account that the pressure scale height \(H_p\) drastically increases from the top to the bottom of the convection zone. We recall its definition, \(H_p = -(\partial r / \partial \ln p) \approx p/(\rho g)\), where g is the (negative) radial component of \(\varvec{g}\). In the stationary case, for spherical symmetry and negligibly small turbulent pressure the two expressions are identical for \(r > 0\), so the second one is almost always used to define \(H_p\) (cf. Kippenhahn and Weigert 1994; Weiss et al. 2004). If we require the relative accuracy of p to be the same throughout the convection zone, it suffices to keep the number of grid points per pressure scale height constant or, simply, scale the radial grid spacing with \(H_p\). Except for the surface layers, where \(\mathrm{grad}\,T\) can become very steep, this should ensure comparable relative accuracy of numerical derivatives for all basic thermodynamic quantities throughout the solar convection zone under the assumption that microphysical data (equation of state, opacity, etc.) are also known to the same relative accuracy. With \(h \approx 15\,\mathrm{km}\) and \(H_p \approx 150\,\mathrm{km}\) at the solar surface and for a total depth of solar convection zone of about 20 pressure scale heights (cf. Stix 1989; Weiss et al. 2004, and references therein) one can thus reduce \(N_\mathrm{r}(\delta _\mathrm{surface})\) to an optimistic \(N_\mathrm{r}(\mathrm{minimal}) \sim 200\). Since pressure stratification occurs only vertically, the net gain is a factor of 65, whence \(N_\mathrm{tot}(\mathrm{minimal}) \sim 4.5\times 10^{12}\). Because the solar interior region has its own resolution demands due to the temperature sensitivity of nuclear fusion (Kippenhahn and Weigert 1994; Weiss et al. 2004), there can be no further gain for models of the entire Sun. 
For simplicity we assume the overall scaling factor in comparison with models of the convective shell to be the same and obtain \(N_\mathrm{tot}(\mathrm{minimal}) \sim 7.2\times 10^{12}\) for models of the Sun. Memory requirements as low as 0.33–0.5 PB are within reach of the current, largest supercomputers. Again, realistic production codes may require something like 2–20 PB for such a model. Such demands may mislead one to consider LES of that kind suitable for next generation supercomputers, but there are further, severe constraints ahead. As we discuss in Sect. 2.3 it is the necessary number of time steps which continues to prohibit this class of simulations for the Sun for quite a few years to come. Solar simulations hence have to be limited to smaller segments or boxes as domains which include the solar (and in general stellar) surface or alternatively to spherical shells excluding the surface layers. A list including also exceptions from these limitations and a summary of computational complexity are given in Sect. 2.6.
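Scaling the radial spacing with \(H_p\) amounts to placing grid points equidistantly in \(\ln p\). A minimal sketch of such a grid, assuming ten points per pressure scale height (from \(h \approx 15\,\mathrm{km}\) and \(H_p \approx 150\,\mathrm{km}\)) and 20 scale heights in total; the surface pressure `P_TOP` is a purely illustrative placeholder:

```python
import math

POINTS_PER_HP = 10     # H_p / h at the surface: 150 km / 15 km
N_SCALE_HEIGHTS = 20   # approximate depth of the solar convection zone
P_TOP = 1.0e5          # hypothetical surface pressure, arbitrary units


def log_pressure_grid(p_top, n_scale_heights, points_per_hp):
    """Pressures at grid points spaced by a constant step in ln p."""
    n_cells = n_scale_heights * points_per_hp
    dlnp = 1.0 / points_per_hp
    return [p_top * math.exp(i * dlnp) for i in range(n_cells + 1)]


grid = log_pressure_grid(P_TOP, N_SCALE_HEIGHTS, POINTS_PER_HP)
n_radial_minimal = len(grid) - 1
gain = 13_000 / n_radial_minimal   # relative to the equidistant radial grid

print(f"N_r(minimal) = {n_radial_minimal}, gain in radial direction ~ {gain:.0f}")
print(f"pressure contrast top to bottom ~ {grid[-1] / grid[0]:.1e}")
```

In an actual simulation the radial positions would follow from integrating \(\mathrm{d}r = -H_p\,\mathrm{d}\ln p\) through a stellar model; the sketch only counts the grid points.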

2.2.2 Computing grids for affordable problems

If one sufficiently limits the extent of the domain of the numerical simulation, its computational demands can be brought into the range of affordable problems. The construction of computing grids which are affordable on existing hardware is hence the first step to make LES of convection in stars viable. Two important ideas to do this have frequently been used and they can readily be generalized:

1. The box-in-a-star approach suggests performing the numerical simulation only in a spatially limited domain, which is usually located close to the surface of a star and includes those layers emitting photons directly into space, i.e., the photosphere of the star. This is not in any way a necessary condition, as the same simulation technique can also be applied for layers located completely inside a star. But the “3D stellar atmospheres” are certainly the most prominent application of this kind since the pioneering work of Nordlund (1982). The main challenge in this approach is to define suitable boundary conditions to allow for an in- and outflow of energy. Usually, this is also assumed for mass and momentum in which case the boundary conditions are called open. Due to the strong stratification found near the observable surface of most stars the size of the dominant flow structure is small enough such that a Cartesian geometry can be assumed (cf. Stein and Nordlund 1998), except for the case of bright giants and supergiants which require a different way of modelling (see below). The gravitational acceleration is hence approximated to be constant along the vertical direction which coincides with the radial one of the actual star and the curvature of the star in that domain is neglected. This motivates the introduction of periodic boundary conditions in the plane orthogonal to the direction of gravity. The simulation domain has to be defined sufficiently wide for this approach to work (cf. Appendix A of Robinson et al. 2003). The choice of vertical boundary conditions is more subtle and it may depend on the type of star under investigation. A recent comparison of the impact of boundary conditions on numerical simulations of the solar surface can be found in Grimm-Strele et al. (2015a). A large amount of astrophysical literature gives credit to this approach. 
A detailed account for just the case of the Sun has already been subject to a review on its own (Nordlund et al. 2009). We note here that this basic idea is in no sense limited to the case of stars, but is equally applicable to giant planets, the atmosphere of the Earth and meteorology in particular, to oceanography, or other types of flow problems whenever it is possible to reasonably model the influence of the environment of a simulation box through boundary conditions. Indeed, also in some of those other scientific disciplines the equivalent of a box-in-a-star ansatz already has a decade-long tradition in applications.

Generalization: simulations with artificial boundaries inside the star. The simulation can be designed so as to exclude the near-surface layers in numerical simulations of just those stars for which in turn the Cartesian box-in-a-star approach is particularly suited for simulations of their surface layers. Here, the upper (near surface) boundary condition is located sufficiently inside the star such that large scale flow structures can be resolved throughout the simulation domain. This permits setting up a shell-in-a-star approach where the curved geometry of a star (either a sphere or ellipsoid) is properly accounted for. In the spherical case, the stellar radius replaces the vertical coordinate used in box-in-a-star type simulations and a series of shells then builds up the simulation grid which may be a sector (in 2D) or a full shell (in 3D). Pioneered at about the same time by Gilman and Glatzmaier (1981) as its box-in-a-star cousin, this approach has since been applied to the case of (rotating) stars including our Sun and planets including the interior of our Earth even though the latter in terms of viscosity and, in particular, Prandtl number (\(\mathrm{Pr} \gg 1\)) is the extreme opposite (Footnote 3) of the Sun (\(\mathrm{Pr} \ll 1\)).

2. For several special physical situations it is possible to perform full 3D LES of entire stars with a star-in-a-box approach: for supergiants, especially for AGB stars, such simulations are feasible as the large, energy carrying scales of the flow are no longer small compared to the stellar diameter (cf. Sect. 4.7 in Freytag et al. 2012, and references therein). This is similar to supernovae where spatial and temporal scales separated in earlier evolutionary stages by orders of magnitude become comparable to each other leaving only the turbulent flame front to subgrid modelling (Röpke and Schmidt 2009). We note that the transition from this kind of simulation to box-in-a-star and shell-in-a-star cases is not a sharp one, since for AGB star simulations (Freytag et al. 2012) the central region of the star is also not included for lack of resolution.

Generalization: simulations with mapped grids and interpolation between grids to generate natural boundaries. Grid mapping and interpolation between grids can be used to avoid artificial boundaries inside a star and to trace the stellar surface layers so as to optimize resolution, although in most cases this is not affordable for stellar simulations with realistic microphysics, the special case of supernovae excepted. At least in principle, this allows the simulation of an entire star with an optimized grid geometry. We return to the topic of such grids in Sect. 5.4.

For each of these scenarios the computational grid for 3D LES nowadays comprises between about 100 and 500 grid cells per spatial direction (in very few cases this value may currently range up to around 2000), an impressive development beyond the \({\approx } 16\) cells which were the limit faced in the work of Gilman and Glatzmaier (1981) and Nordlund (1982). With only two instead of three spatial dimensions, the number of grid cells per direction can be correspondingly larger, for instance, up to 13,000 cells along the azimuthal direction in the simulation of a full \(360^{\circ }\) sector, i.e., a ring, located at equatorial latitude, in a 2D LES of the He ii ionization zone and the layers around it for the case of a Cepheid (Kupka et al. 2014) (see also Fig. 14). The resulting computer memory requirements place such computations anywhere between workstations and the largest and fastest supercomputers currently available. But as already indicated, memory consumption and a large spread of spatial scales to be covered by a computational grid are not the only restrictions on the affordability of numerical simulations.
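The memory spread quoted above can be made tangible with a rough estimate. The following is only a sketch: the function name `grid_memory_gib`, the choice of five stored fields per cell, double precision, and a single copy of the state are all assumptions made for illustration, and real codes store additional work arrays, radiative transfer data, and equation-of-state tables.

```python
def grid_memory_gib(cells_per_direction, dimensions=3, n_fields=5,
                    bytes_per_value=8, n_copies=1):
    """Rough memory footprint (in GiB) of the state variables on a uniform grid.

    All defaults are illustrative assumptions, not values from any specific code:
    n_fields=5 stands for density, three momentum components, and energy.
    """
    n_cells = cells_per_direction ** dimensions
    total_bytes = n_cells * n_fields * bytes_per_value * n_copies
    return total_bytes / 2**30


if __name__ == "__main__":
    # Grid sizes mentioned in the text: 16 (early 1980s), 100-500 (typical), 2000 (largest).
    for n in (16, 100, 500, 2000):
        print(f"{n:5d}^3 cells: {grid_memory_gib(n):12.4f} GiB")
```

Under these assumptions the state alone grows from a negligible amount at 16 cells per direction to several hundred GiB at 2000 cells per direction, which is consistent with the range from workstations to supercomputers mentioned in the text.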

2.3 Hydrodynamical simulations and the problem of time scales

2.3.1 General principles

Hydrodynamical simulations based on variants of (1)–(10) are conducted to predict the time development of \(\rho , \varvec{u}\), and E within a specified region in space, starting from an initial state. Since in astrophysics that state cannot be determined in sufficient detail from observational data alone, the initial conditions have to be constructed from approximations. For numerical simulations of stellar convection, one-dimensional stellar structure or stellar atmosphere models can provide the necessary input to initialize the calculation. A recent and particularly detailed description of this procedure is given in Grimm-Strele et al. (2015a). Other basic variables such as the velocity field \(\varvec{u}\) have to be chosen according to computational convenience, since a “realistic guess” is impossible. If a “sufficiently similar” multidimensional state is available from a previous simulation, it can be used to construct a new state through scaling (this is simple when changing resolution, where interpolation is sufficient, but quite subtle if quantities such as the input energy flux at the bottom or the gravitational acceleration are to be changed). Unless it is obtained through scaling from a simulation with the same number of spatial dimensions, the initial state is given a small random perturbation. Each of \(\rho , \varvec{u}, p\), or \(\varvec{\mu }\) has been used for this purpose (see Sect. 3.6 in Muthsam et al. 2010, Sect. 2 in Kupka et al. 2012, and Sect. 2.7 in Mundprecht et al. 2013 for examples of such different perturbations being used with the same numerical simulation code).
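The initialization step described above, a one-dimensional model extended to more dimensions and then slightly perturbed, can be sketched as follows. This is a minimal illustration only: the function name `perturb_profile`, the relative amplitude of \(10^{-3}\), and the uniform random distribution are assumptions and do not reproduce the procedure of any of the cited codes.

```python
import random


def perturb_profile(profile, n_horizontal, amplitude=1e-3, seed=0):
    """Extend a 1D vertical profile horizontally and perturb it slightly.

    profile      -- values of one variable (e.g. density) per depth layer
    n_horizontal -- number of horizontal grid points per layer
    amplitude    -- maximum relative perturbation (assumed value)
    seed         -- seed for reproducibility
    """
    rng = random.Random(seed)
    return [
        [value * (1.0 + amplitude * rng.uniform(-1.0, 1.0))
         for _ in range(n_horizontal)]
        for value in profile
    ]


if __name__ == "__main__":
    rho_1d = [1.0, 2.0, 4.0]          # hypothetical stratification, arbitrary units
    rho_2d = perturb_profile(rho_1d, n_horizontal=4)
    for row in rho_2d:
        print(row)
```

The perturbation only has to break the horizontal symmetry of the one-dimensional model so that convective instability can develop; its detailed form is expected to be forgotten during relaxation.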

The simulation is then conducted for a time interval \(t_\mathrm{rel}\) during which the physical state is supposed to relax to a “typical state”. This is followed by simulation over a time interval \(t_\mathrm{stat}\) adjacent to the relaxation time \(t_\mathrm{rel}\). The numerical data obtained during \(t_\mathrm{stat}\) are then considered suitable for physical interpretation. The physical meaningfulness of this procedure requires that an ergodicity hypothesis holds (Tsinober 2009): essentially, one expects that a sufficiently long time series of measurements or of a numerical simulation has the same statistical properties as an average obtained from several (possibly shorter) time series, each of which is related to a different initial condition. This requires that the measured properties are invariant under time evolution (Chap. 3.7 in Tsinober 2009), an “intuitively evident” property of turbulence which is in fact very difficult to prove. In particular, there are flows which are turbulent only in a limited domain, such as turbulent jets and wakes past bodies (Tsinober 2009). These may not even be “quasi-ergodic”, a property which would otherwise ensure that the physical states in phase space are visited by a long-term simulation according to their realization probability.
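The relax-then-sample procedure and the ergodicity hypothesis behind it can be illustrated with a toy model. The sketch below replaces the flow by a simple first-order autoregressive process, which is an assumption made purely for illustration and not a model of turbulence; all function names and parameter values are hypothetical. It compares the average over one long run with the average over many short runs started from different initial conditions, in both cases discarding a relaxation interval first.

```python
import random


def ar1_series(x0, n_steps, rng, a=0.9, sigma=1.0):
    """Advance the toy process x -> a*x + noise and return the visited states."""
    x, states = x0, []
    for _ in range(n_steps):
        x = a * x + rng.gauss(0.0, sigma)
        states.append(x)
    return states


def mean(values):
    return sum(values) / len(values)


def long_run_average(n_rel=200, n_stat=20000, seed=1):
    """One long run: discard n_rel steps (relaxation), average over n_stat steps."""
    rng = random.Random(seed)
    series = ar1_series(50.0, n_rel + n_stat, rng)   # start far from the typical state
    return mean(series[n_rel:])


def ensemble_average(n_runs=200, n_rel=200, n_stat=200, seed=2):
    """Many short runs from different initial conditions, each relaxed first."""
    rng = random.Random(seed)
    run_means = []
    for _ in range(n_runs):
        x0 = rng.uniform(-50.0, 50.0)
        series = ar1_series(x0, n_rel + n_stat, rng)
        run_means.append(mean(series[n_rel:]))
    return mean(run_means)


if __name__ == "__main__":
    print("long-run average:", long_run_average())
    print("ensemble average:", ensemble_average())
```

For this toy process both averages agree within statistical noise because the process is ergodic by construction; for real turbulent flows the corresponding property is a hypothesis, as discussed above.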

Nevertheless, the assumption that turbulent convective flows “forget” their detailed initial conditions is considered to be well confirmed by current research. The mean thermal structure (and also large-scale or global magnetic fields, the latter being excluded from a more detailed discussion here anyway) can have a longer “memory”, i.e., its initial state influences the solution over long integration times, a principle used to speed up relaxation as described further below in Sect. 2.3.4. But the mean thermal structure is also influenced more strongly by the boundary conditions of the problem than the turbulent flow field, which adjusts itself to a state that is often very different from its initial condition. Eventually, the granulation pattern of solar convection is found with every numerical code capable of performing this kind of simulation (cf. Fig. 1 in Beeck et al. 2012, reprinted here as Fig. 2 for convenience). Even if quite different solar structure models are used as initial states of a simulation, the same average thermal structure is recovered (cf. Sect. 3.3 of Grimm-Strele et al. 2015a). The numerical simulation approach is hence tenable for astrophysical applications. Thus, one can start from approximate models, relax the simulations towards a statistically stationary state (cf. Pope 2000), and perform a numerical study with one or a few long-term simulation runs. But what are the minimum and maximum time scales to be considered for this kind of numerical simulation? Let us consider minimum time scales first.

Fig. 2 Tracing granules with the vertically emerging continuum intensity at 500 nm which results from numerical simulations with the CO\(^5\)BOLD code (left figure), the Stagger code (middle figure), and the MURaM code (right figure). Units of the two horizontal axes are Mm. While different resolution, numerical methods, and domain size result in different granule boundaries, the basic flow pattern remains unaltered. Image reproduced with permission from Beeck et al. (2012), copyright by ESO

2.3.2 Time steps and time resolution in numerical simulations of stellar convection

In applications to helioseismology, for example, we might want to study the fate of sound waves near the solar surface, whereas stellar evolution during core or shell burning of hydrogen or helium takes place on time scales which depend on nuclear processes, chemical composition, and the total stellar mass. As a result, the time scales of interest may range from a few seconds to more than \(10^{17}\,\mathrm{s}\) (and even much smaller time scales are of relevance in stars other than the Sun, for instance, in white dwarfs, which in turn gradually cool on time scales of billions of years). Can one get around those 17 orders of magnitude when performing a “3D stellar evolution simulation”? Not without introducing some kind of averaging, which amounts to introducing a new set of basic equations: whether the method is grid-based or particle-based, the maximum allowed time steps as well as the required duration of a numerical simulation are properties which stem from the dynamical equations themselves and cannot easily be neglected. We discuss the most important time step restrictions in the following.

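The best-known constraint on time integration is the Courant–Friedrichs–Lewy (CFL) limit due to advection (Strikwerda 1989). For a discretization of the NSE with mesh widths \(\varDelta x, \varDelta y, \varDelta z\) in each of the three spatial directions it requires that the time step \(\varDelta t\) is bounded by

\[ \varDelta \,t_{\mathrm{adv}} \leqslant C_{\mathrm{adv}} \frac{\min \left\{ \varDelta x, \varDelta y, \varDelta z\right\} }{\max (\left| \varvec{u}\right| )}. \tag{11} \]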

In the case of a variable mesh, the minimum of (11) over all grid cells has to be taken. \(C_{\mathrm{adv}}\) depends on both the temporal and the spatial discretization scheme chosen. This limit is obtained from linear (von Neumann) stability analysis of the advection terms in (1)–(3) and ensures that each signal, i.e., a change in the solution which propagates with velocity \(\varvec{u}\), is taken into account during each time step. In practice, (11) cannot be overcome even by implicit time integration methods. The reason is that as long as the flow is not close to stationarity, the solution changes throughout its evolution on just that time scale \(\tau \sim \varDelta \,t_{\mathrm{adv}}\), typically by several percent or more. Hence, even fully implicit time integration methods cannot exceed a value of \(C_{\mathrm{adv}} \sim 1\). We refer to the discussion of the ADISM method in Sect. 3 of Robinson et al. (2003), where a time step at most five times that of an explicit method was achieved for a solar granulation simulation and the maximum \(\varDelta t\) was indeed set by advection. Note that fast moving shock fronts are already taken into account by (11). In practice, attempts to increase \(\varDelta t\) beyond what corresponds to \(C_{\mathrm{adv}} \gtrsim 1\) lead to a failure to solve the non-linear system of equations obtained in fully or semi-implicit time discretization methods, while explicit methods run into the usual exponential growth of linear instabilities (cf. Strikwerda 1989). Since locally the flow can become slightly supersonic near the solar surface (Bellot Rubio 2009), for mesh widths of \(h \sim 12\)–15 km and a sound speed of roughly \(10~\mathrm{km}\,\mathrm{s}^{-1}\) (see Fig. 18.11 in Weiss et al. 2004) we obtain that \(\varDelta t \lesssim 1\,\mathrm{s}\) in an LES of the surface of the solar convection zone.
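The advective limit and the solar estimate given above can be checked with a few lines of code. This is a minimal sketch: the function name `advective_time_step`, the per-cell input lists, and the default \(C_{\mathrm{adv}} = 1\) are assumptions for illustration, whereas in an actual code \(C_{\mathrm{adv}}\) depends on the discretization and is usually smaller.

```python
def advective_time_step(min_widths, speeds, c_adv=1.0):
    """Advective CFL limit: c_adv times the minimum over cells of width / |u|.

    min_widths -- smallest mesh width of each cell (m)
    speeds     -- flow speed |u| in each cell (m/s)
    c_adv      -- scheme-dependent constant (assumed to be 1 here)
    """
    limits = [h / abs(u) for h, u in zip(min_widths, speeds) if u != 0.0]
    if not limits:
        raise ValueError("flow is at rest everywhere: no advective limit")
    return c_adv * min(limits)


if __name__ == "__main__":
    # Values from the text: h ~ 12-15 km, flow speed close to the
    # sound speed of roughly 10 km/s near the solar surface.
    widths = [12.0e3, 15.0e3]      # m
    speeds = [10.0e3, 10.0e3]      # m/s
    dt = advective_time_step(widths, speeds)
    print(f"advective time step limit: {dt:.2f} s")
    # Number of such steps needed to cover the 1e17 s mentioned in Sect. 2.3.2.
    print(f"steps needed for 1e17 s:  {1e17 / dt:.1e}")
```

With these inputs the limit is of the order of one second, and any \(C_{\mathrm{adv}} < 1\) pushes it below that, in line with \(\varDelta t \lesssim 1\,\mathrm{s}\) as stated above.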

Sound waves, which originate (Chap. 8 in Landau and Lifshitz 1963, Chap. 10 in Richtmyer and Morton 1967, Chap. 3.6 in Batchelor 2000) from the presence of \(\mathrm{grad}\,p\) in (9), can introduce restrictions similar to (11). Tracking sound waves on a computational grid requires resolving their motion between grid cells. As also revealed by a local characteristics analysis, this requires that for explicit time integration methods the sound speed \(c_\mathrm{s}\) is added to the flow velocity in (11), whence \(\varDelta \,t_{\mathrm{cour}} \leqslant C_{\mathrm{adv}} \min \left\{ \varDelta x, \varDelta y, \varDelta z\right\} / {\max (\left| \varvec{u}\right| +c_\mathrm{s})}\) (see Chap. 12 in Richtmyer and Morton 1967, cf. Muthsam et al. 2010). If accurate tracking is not needed, particularly for low Mach number flows, where sound waves carry very little energy, this restriction can be avoided by numerical methods which use an additive splitting approach or analytical approximations to allow implicit time integration of the \(\mathrm{grad}\,p\) term (see Sects. 4, 5).
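To see why the acoustic restriction matters most at low Mach number, one can compare \(\varDelta \,t_{\mathrm{cour}}\) with the purely advective limit. The sketch below assumes a uniform mesh, a single representative flow speed and sound speed, and the same constant \(C_{\mathrm{adv}}\) in both limits, so that their ratio reduces to \(M/(1+M)\) with the Mach number \(M = \left| \varvec{u}\right| /c_\mathrm{s}\); the function names are hypothetical.

```python
def acoustic_time_step(h_min, u_max, c_s, c_adv=1.0):
    """Explicit limit when sound waves are tracked: c_adv * h / (|u| + c_s)."""
    return c_adv * h_min / (abs(u_max) + c_s)


def acoustic_penalty(mach):
    """Ratio of acoustic to advective limit, M / (1 + M), for a uniform mesh."""
    return mach / (1.0 + mach)


if __name__ == "__main__":
    # Near the solar surface (values from the text): |u| ~ c_s ~ 10 km/s, h ~ 12 km.
    print("surface limit (s):", acoustic_time_step(12.0e3, 10.0e3, 10.0e3))
    # Deeper, low Mach number layers: the explicit step shrinks relative to
    # the advective one roughly in proportion to the Mach number.
    for mach in (1.0, 0.1, 0.01, 0.001):
        print(f"M = {mach:6.3f}: dt_cour / dt_adv = {acoustic_penalty(mach):.5f}")
```

For \(M \sim 1\) the two limits differ only by a factor of two, whereas for \(M \ll 1\) an explicit method spends almost all of its time steps on sound waves that carry very little energy, which motivates the splitting and approximation approaches referred to above.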

The application of additive splitting methods is motivated by the structure of (9)–(10), where each term corresponds to a particular physical process which in turn can impose a time step restriction \(\varDelta t \leqslant \tau \) on a numerical method. This algebraic structure of the NSE simplifies the construction of semi-implicit or implicit–explicit methods which can remove such restrictions as long as the solution changes only by a small amount during a time interval \(\tau \sim \varDelta \,t\). According to linear stability analysis (Strikwerda 1989), terms representing diffusion processes such as viscous friction, \(\mathrm{div}\,\varvec{\pi }\) and \(\mathrm{div}\,(\varvec{\pi } \varvec{u})\), conductive heat transfer, \(\mathrm{div}\,\varvec{h} =\,\mathrm{div}\,(K_\mathrm{h}\,\mathrm{grad}\,T)\), and radiative transfer in the diffusion approximation, \(\mathrm{div}\,\varvec{f}_\mathrm{rad} =\,\mathrm{div}\,(K_\mathrm{rad}\,\mathrm{grad}\,T)\), give rise to restrictions of the following type: \(\varDelta \,t_{\mathrm{visc}} \leqslant C_{\mathrm{visc}} \min \left\{ (\varDelta x)^2, (\varDelta y)^2, (\varDelta z)^2\right\} / {\max (\nu )}\) and, in particular,

\[ \varDelta \,t_{\mathrm{rad}} \leqslant C_{\mathrm{rad}} \frac{\min \left\{ (\varDelta x)^2, (\varDelta y)^2, (\varDelta z)^2\right\} }{\max (\chi )}, \tag{12} \]

where \(\chi \) denotes the radiative diffusivity.

For realistic stellar microphysics \(\varDelta \,t_{\mathrm{visc}}\) poses no restriction, since in practice \(\varDelta \,t_{\mathrm{visc}} \gg \varDelta \,t_{\mathrm{adv}}\) for achievable spatial resolutions (Sect. 2.2). However, condition (12) can lead to serious limitations not only in convection studies with idealised microphysics (cf. Kupka et al. 2012), but even more so in the simulation of convection in stars such as Cepheids (Fig. 9 in Mundprecht et al. 2013). This restriction can be avoided by using fully implicit time integration methods (cf. Dorfi and Feuchtinger 1991, 1995; Dorfi 1999) or more cost-efficient implicit–explicit methods (e.g., Happenhofer 2013). Moreover, within the photospheric layers of a star condition (12) is relaxed if the radiative transfer equation is solved instead of the diffusion approximation, which in any case does not hold in an optically thin fluid. For the linearization of this problem it was shown (Spiegel 1957) that in the optically thin limit the relaxation rate of temperature perturbations by radiation is proportional to the (inverse) conductivity only, with a smooth transition to a quadratic dependence on grid resolution in the optically thick case represented by Eq. (12) (see Sect. 3.2 in Mundprecht et al. 2013):