Abstract

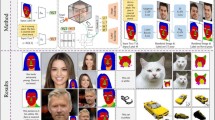

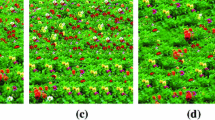

This paper surveys the state of the art in patch-based synthesis. Patch-based methods synthesize output images by copying small regions from exemplar imagery. This line of research originated in an area called “texture synthesis”, which focused on creating regular or semi-regular textures from small exemplars. More recently, much research has focused on synthesizing larger and more diverse imagery, such as photos, photo collections, videos, and light fields. Recent research has also customized the synthesis process for particular problem domains, such as artistic or decorative brushes, rich materials, and 3D fabrication. This report investigates recent papers that follow these themes, with particular emphasis on papers published since 2009, when the last survey in this area was published. This survey can serve as a tutorial for readers who are not yet familiar with these topics, provide comparisons between these papers, and highlight some open problems in this area.

Acknowledgements

Thanks to Professor Shi-Min Hu at Tsinghua University for his support in this project. Thanks to the National Science Foundation for support under Grants CCF 0811493 and CCF 0747220. Thanks for support from the General Financial Grant from the China Postdoctoral Science Foundation (No. 2015M580100).

Author information

Additional information

This article is published with open access at Springerlink.com

Connelly Barnes is an assistant professor at the University of Virginia. He received his Ph.D. degree from Princeton University in 2011. His group develops techniques for efficiently manipulating visual data in computer graphics by using semantic information from computer vision. Applications are in computational photography, image editing, art, and hiding visual information. Many computer graphics algorithms are more useful if they are interactive; therefore, his group also has a focus on efficiency and optimization, including some compiler technologies.

Fang-Lue Zhang is a post-doctoral research associate at the Department of Computer Science and Technology at Tsinghua University. He received his doctoral degree from Tsinghua University in 2015 and his bachelor's degree from Zhejiang University in 2009. His research interests include image and video editing, computer vision, and computer graphics.

Rights and permissions

Open Access The articles published in this journal are distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Other papers from this open access journal are available free of charge from http://www.springer.com/journal/41095. To submit a manuscript, please go to https://www.editorialmanager.com/cvmj.

About this article

Cite this article

Barnes, C., Zhang, FL. A survey of the state-of-the-art in patch-based synthesis. Comp. Visual Media 3, 3–20 (2017). https://doi.org/10.1007/s41095-016-0064-2

DOI: https://doi.org/10.1007/s41095-016-0064-2