Abstract

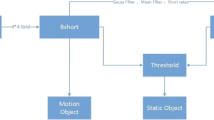

This paper presents a method for synthesizing a stroboscopic image of a moving sports player from an image sequence captured with a hand-held camera. The method has three steps: synthesis of a background image, synthesis of a stroboscopic image, and removal of the player's shadow. In the background synthesis step, all input frames, with the player's bounding box masked out, are stitched together to generate a background image. The player is detected by a HOG-based people detector. In the stroboscopic synthesis step, the background image, the input frames, and the corresponding player masks are combined into a stroboscopic image. In the shadow removal step, we use mean-shift to remove the player's shadow, which would otherwise interfere with the analysis. In our previous work, background synthesis was time-consuming. In this paper, we improve both computational speed and accuracy by using the player's bounding box detected by HOG and by subtracting images to synthesize the player mask. These are the main improvements and novel points relative to our previous method. In experiments, we confirmed the effectiveness of the proposed method, measured the player's speed and stride length, and made a footprint image. The image sequence was captured under simple conditions: no other people were in the background, and the camera operator stood still, so that no motion parallax occurred. In addition, we applied the synthesis method to various scenes to confirm its versatility.
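To make the data flow between the image-level steps concrete, here is a toy Python sketch. It is not the authors' implementation, and the simplifications are our assumptions: grayscale images are nested lists, frames are assumed to be already registered to one background coordinate system (the stitching and alignment themselves are omitted), player boxes are given directly rather than produced by a HOG detector, a per-pixel low median over unmasked samples stands in for stitching, and the difference threshold `thresh` is hand-picked. The helper names (`synthesize_background`, `player_mask`, `stroboscopic`) are hypothetical. Mean-shift shadow removal is not shown.

```python
"""Toy sketch of background synthesis, player masking, and stroboscopic compositing.

Assumptions (ours, not the paper's): grayscale images are lists of rows of ints,
frames are already registered to one background coordinate system (stitching
and alignment are omitted), player boxes are given directly instead of coming
from a HOG detector, and the difference threshold is hand-picked.
"""
from statistics import median_low

Image = list[list[int]]
Mask = list[list[bool]]
Box = tuple[int, int, int, int]  # hypothetical (x0, y0, x1, y1), end-exclusive


def in_box(x: int, y: int, box: Box) -> bool:
    x0, y0, x1, y1 = box
    return x0 <= x < x1 and y0 <= y < y1


def synthesize_background(frames: list[Image], boxes: list[Box]) -> Image:
    """Per-pixel low median over the frames whose player box does not cover that pixel."""
    h, w = len(frames[0]), len(frames[0][0])
    background = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            samples = [f[y][x] for f, b in zip(frames, boxes) if not in_box(x, y, b)]
            if not samples:  # player covered this pixel in every frame: fall back to all frames
                samples = [f[y][x] for f in frames]
            background[y][x] = median_low(samples)
    return background


def player_mask(frame: Image, background: Image, box: Box, thresh: int = 30) -> Mask:
    """Foreground = inside the box AND differing from the background by more than thresh."""
    return [
        [in_box(x, y, box) and abs(frame[y][x] - background[y][x]) > thresh
         for x in range(len(frame[0]))]
        for y in range(len(frame))
    ]


def stroboscopic(background: Image, frames: list[Image], masks: list[Mask]) -> Image:
    """Paste each frame's masked player pixels, in order, onto a copy of the background."""
    out = [row[:] for row in background]
    for frame, mask in zip(frames, masks):
        for y, row in enumerate(mask):
            for x, is_player in enumerate(row):
                if is_player:
                    out[y][x] = frame[y][x]
    return out


if __name__ == "__main__":
    # A 1x6 "image": a bright 2-pixel player moving right over a flat background.
    frames = [
        [[200, 200, 10, 10, 10, 10]],
        [[10, 10, 200, 200, 10, 10]],
        [[10, 10, 10, 10, 200, 200]],
    ]
    boxes = [(0, 0, 3, 1), (1, 0, 5, 1), (3, 0, 6, 1)]  # deliberately loose boxes
    bg = synthesize_background(frames, boxes)
    masks = [player_mask(f, bg, b) for f, b in zip(frames, boxes)]
    print(stroboscopic(bg, frames, masks))  # [[200, 200, 200, 200, 200, 200]]
```

Intersecting the box with a background-difference test is a much simplified stand-in for the abstract's combination of a HOG bounding box with image subtraction: even with the loose boxes above, the masks keep only the player pixels. A real pipeline would still need frame-to-background registration and the shadow removal step, which this sketch does not attempt.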

References

Strohrmann, C.; Harms, H.; Kappeler-Setz, C.; Troster, G. Monitoring kinematic changes with fatigue in running using body-worn sensors. IEEE Transactions on Information Technology in Biomedicine Vol. 16, No. 5, 983–990, 2012.

Ghasemzadeh, H.; Loseu, V.; Guenterberg, E.; Jafari, R. Sport training using body sensor networks: A statistical approach to measure wrist rotation for golf swing. In: Proceedings of the 4th International Conference on Body Area Networks, Article No. 2, 2009.

Oliveira, G.; Comba, J.; Torchelsen, R.; Padilha, M.; Silva, C. Visualizing running races through the multivariate time-series of multiple runners. In: Proceedings of XXVI Conference on Graphics, Patterns and Images, 99–106, 2013.

Eskofier, B. M.; Musho, E.; Schlarb, H. Pattern classification of foot strike type using body worn accelerometers. In: Proceedings of IEEE International Conference on Body Sensor Networks, 1–4, 2013.

Beetz, M.; Kirchlechner, B.; Lames, M. Computerized real-time analysis of football games. IEEE Pervasive Computing Vol. 4, No. 3, 33–39, 2005.

Hamid, R.; Kumar, R. K.; Grundmann, M.; Kim, K.; Essa, I.; Hodgins, J. Player localization using multiple static cameras for sports visualization. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 731–738, 2010.

Lu, W.-L.; Ting, J.-A.; Little, J. J.; Murphy, K. P. Learning to track and identify players from broadcast sports video. IEEE Transactions on Pattern Analysis and Machine Intelligence Vol. 35, No. 7, 1704–1716, 2013.

Perse, M.; Kristan, M.; Kovacic, S.; Vuckovic, G.; Pers, J. A trajectory-based analysis of coordinated team activity in a basketball game. Computer Vision and Image Understanding Vol. 113, No. 5, 612–621, 2009.

Atmosukarto, I.; Ghanem, B.; Ahuja, S.; Muthuswamy, K.; Ahuja, N. Automatic recognition of offensive team formation in American football plays. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition Workshops, 991–998, 2013.

Hasegawa, K.; Saito, H. Auto-generation of runner’s stroboscopic image and measuring landing points using a handheld camera. In: Proceedings of the 16th Irish Machine Vision and Image Processing, 169–174, 2014.

Ebdelli, M.; Le Meur, O.; Guillemot, C. Video inpainting with short-term windows: Application to object removal and error concealment. IEEE Transactions on Image Processing Vol. 24, No. 10, 3034–3047, 2015.

Farbman, Z.; Lischinski, D. Tonal stabilization of video. ACM Transactions on Graphics Vol. 30, No. 4, Article No. 89, 2011.

Lu, S.-P.; Ceulemans, B.; Munteanu, A.; Schelkens, P. Spatio-temporally consistent color and structure optimization for multiview video color correction. IEEE Transactions on Multimedia Vol. 17, No. 5, 577–590, 2015.

Brown, M.; Lowe, D. G. Automatic panoramic image stitching using invariant features. International Journal of Computer Vision Vol. 74, No. 1, 59–73, 2007.

Rother, C.; Kolmogorov, V.; Blake, A. “GrabCut”: Interactive foreground extraction using iterated graph cuts. ACM Transactions on Graphics Vol. 23, No. 3, 309–314, 2004.

Farbman, Z.; Hoffer, G.; Lipman, Y.; Cohen-Or, D.; Lischinski, D. Coordinates for instant image cloning. ACM Transactions on Graphics Vol. 28, No. 3, Article No. 67, 2009.

Guo, R.; Dai, Q.; Hoiem, D. Single-image shadow detection and removal using paired regions. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2033–2040, 2011.

Miyazaki, D.; Matsushita, Y.; Ikeuchi, K. Interactive shadow removal from a single image using hierarchical graph cut. In: Lecture Notes in Computer Science, Vol. 5994. Zha, H.; Taniguchi, R.; Maybank, S. Eds. Springer Berlin Heidelberg, 234–245, 2009.

Correa, C. D.; Ma, K.-L. Dynamic video narratives. ACM Transactions on Graphics Vol. 29, No. 4, Article No. 88, 2010.

Lu, S.-P.; Zhang, S.-H.; Wei, J.; Hu, S.-M.; Martin, R. R. Timeline editing of objects in video. IEEE Transactions on Visualization and Computer Graphics Vol. 19, No. 7, 1218–1227, 2013.

Klose, F.; Wang, O.; Bazin, J.-C.; Magnor, M.; Sorkine-Hornung, A. Sampling based scene-space video processing. ACM Transactions on Graphics Vol. 34, No. 4, Article No. 67, 2015.

Fukunaga, K.; Hostetler, L. The estimation of the gradient of a density function, with applications in pattern recognition. IEEE Transactions on Information Theory Vol. 21, No. 1, 32–40, 1975.

Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence Vol. 24, No. 5, 603–619, 2002.

Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In: Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Vol. 1, 886–893, 2005.

Kalman, R. E. A new approach to linear filtering and prediction problems. Journal of Basic Engineering Vol. 82, No. 1, 35–45, 1960.

Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Computer Vision and Image Understanding Vol. 110, No. 3, 346–359, 2008.

Cho, S.-H.; Kang, H.-B. Panoramic background generation using mean-shift in moving camera environment. In: Proceedings of the International Conference on Image Processing, Computer Vision and Pattern Recognition, 829–835, 2011.

Additional information

This article is published with open access at Springerlink.com

Kunihiro Hasegawa received his B.E. and M.E. degrees in information and computer science from Keio University, Japan, in 2007 and 2009, respectively. He joined Canon Inc. in 2009. Since 2014, he has been a Ph.D. student in science and technology at Keio University, Japan. His research interests include sports vision, augmented reality, document processing, and computer vision.

Hideo Saito received his Ph.D. degree in electrical engineering from Keio University, Japan, in 1992. Since then, he has been on the Faculty of Science and Technology, Keio University. From 1997 to 1999, he was a visiting researcher with the Virtualized Reality Project in the Robotics Institute, Carnegie Mellon University. Since 2006, he has been a full professor in the Department of Information and Computer Science, Keio University. His recent conference service includes program chair of ACCV2014, general chair of ISMAR2015, and program chair of ISMAR2016. His research interests include computer vision and pattern recognition, and their applications to augmented reality, virtual reality, and human-robot interaction.

Open Access The articles published in this journal are distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Other papers from this open access journal are available free of charge from http://www.springer.com/journal/41095. To submit a manuscript, please go to https://www.editorialmanager.com/cvmj.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hasegawa, K., Saito, H. Synthesis of a stroboscopic image from a hand-held camera sequence for a sports analysis. Comp. Visual Media 2, 277–289 (2016). https://doi.org/10.1007/s41095-016-0053-5
