Abstract

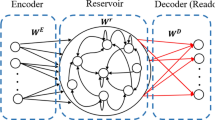

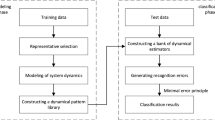

Time series classification (TSC) has been tackled with a wide range of algorithms. Seminal reservoir computing (s-RC), in which a recurrent neural network with random parameters serves as a dynamical memory, is a well-known end-to-end neural network (NN) approach that has been applied to TSC problems. Although the s-RC architecture is well suited to dynamic (temporal) data processing, the design of the reservoir plays a crucial role in how efficient reservoir computing (RC) is compared with state-of-the-art fully trainable NNs. Whereas a large body of research has focused on sparsity in RC design, this article empirically investigates the role of a variety of dynamical behaviors (e.g., stability, periodicity, high periodicity, and chaos) in RC design, in terms of the richness of the resulting dynamical (temporal) representations. Finally, it is shown that RCs with rich dynamical behaviors outperform RCs with a limited spectrum of dynamical behavior in TSC tasks. To compare the TSC adaptability of the proposed RC framework with that of state-of-the-art NN-based methods, experiments were performed on 15 multivariate time series datasets from the UCR and UEA archives. The findings reveal that the proposed framework outperforms other RC methods in learning capacity and accuracy and attains classification accuracy comparable to that of the best fully trainable deep neural networks.
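The abstract describes s-RC as a recurrent network whose random parameters stay fixed and act as a dynamical memory, so only a readout on top of the reservoir states is fitted. The plain-Python sketch below illustrates that idea under stated assumptions, together with one simple, hypothetical way to widen the spectrum of reservoir dynamics: concatenating sub-reservoirs rescaled to different spectral radii. It is not the authors' framework. The spectral radii (0.5, 0.95, 1.5), the time-averaged state used as the series representation, the nearest-centroid readout (swapped in for a trained linear readout), the toy dataset, and all function names are illustrative assumptions.

```python
import math
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def spectral_radius(w, iters=300):
    """Rough estimate of max |eigenvalue| of a square matrix: the average
    log-growth of a repeatedly normalized vector (power iteration)."""
    n = len(w)
    v = [1.0 / math.sqrt(n)] * n
    log_growth = 0.0
    for _ in range(iters):
        u = matvec(w, v)
        norm = math.sqrt(sum(x * x for x in u))
        if norm == 0.0:
            return 0.0  # degenerate (e.g. nilpotent) matrix
        log_growth += math.log(norm)
        v = [x / norm for x in u]
    return math.exp(log_growth / iters)


def make_reservoir(n_units, n_inputs, rho, rng, input_scale=0.5):
    """Fixed random recurrent weights rescaled to spectral radius `rho`,
    plus fixed random input weights. Nothing here is trained."""
    w = [[rng.uniform(-1.0, 1.0) for _ in range(n_units)] for _ in range(n_units)]
    scale = rho / spectral_radius(w)
    w = [[x * scale for x in row] for row in w]
    w_in = [[rng.uniform(-input_scale, input_scale) for _ in range(n_inputs)]
            for _ in range(n_units)]
    return w, w_in


def mean_state(w, w_in, series):
    """Iterate x(t+1) = tanh(W x(t) + W_in u(t)) from x(0) = 0 over a
    multivariate series and return the time-averaged state."""
    x = [0.0] * len(w)
    total = [0.0] * len(w)
    for u in series:
        x = [math.tanh(a + b) for a, b in zip(matvec(w, x), matvec(w_in, u))]
        total = [t + xi for t, xi in zip(total, x)]
    return [t / len(series) for t in total]


def features(reservoirs, series):
    """Concatenate the mean states of several differently scaled sub-reservoirs."""
    out = []
    for w, w_in in reservoirs:
        out.extend(mean_state(w, w_in, series))
    return out


def fit_centroids(feats, labels):
    """Nearest-centroid readout (stand-in for a trained linear readout)."""
    sums, counts = {}, {}
    for f, y in zip(feats, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        sums[y] = [s + v for s, v in zip(acc, f)]
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, f):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids,
               key=lambda y: sum((c - v) ** 2 for c, v in zip(centroids[y], f)))


def demo(seed=0, n_train=10, n_test=10):
    """Toy two-class task: 2-D series with offset +1 or -1 plus a small sinusoid."""
    rng = random.Random(seed)
    # Hypothetical spectral radii: contractive, near-critical, expansive.
    reservoirs = [make_reservoir(20, 2, rho, rng) for rho in (0.5, 0.95, 1.5)]

    def sample(label):
        f, p = rng.uniform(0.1, 0.5), rng.uniform(0.0, 2 * math.pi)
        return [[label + 0.1 * math.sin(f * t + p + d) for d in range(2)]
                for t in range(60)]

    train = [(sample(y), y) for y in (1, -1) for _ in range(n_train)]
    held_out = [(sample(y), y) for y in (1, -1) for _ in range(n_test)]
    centroids = fit_centroids([features(reservoirs, s) for s, _ in train],
                              [y for _, y in train])
    hits = sum(predict(centroids, features(reservoirs, s)) == y for s, y in held_out)
    return hits / len(held_out)


if __name__ == "__main__":
    print("held-out accuracy:", demo())
```

The nearest-centroid readout only keeps the sketch dependency-free; RC readouts are more commonly trained linear models such as ridge regression. Rescaling to a spectral radius above 1 tends to push an autonomous tanh network away from a stable fixed point toward oscillatory or chaotic activity, but whether that happens also depends on the input drive and network size, so the three regimes here are nominal rather than verified.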

Funding

The authors declare that they received no funding for this work.

Author information

Contributions

MM designed and conducted all the experiments and drafted the manuscript. Dr. MME and Dr. MMH guided the research, provided valuable comments on the design of the experiments, and revised the manuscript. All authors have read and approved this manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Modiri, M., Ebadzadeh, M.M. & Homayounpour, M.M. Reservoir consisting of diverse dynamical behaviors and its application in time series classification. Int J Data Sci Anal 17, 75–92 (2024). https://doi.org/10.1007/s41060-022-00360-x

DOI: https://doi.org/10.1007/s41060-022-00360-x