Abstract

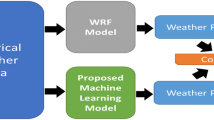

Droughts can cause significant damage to agricultural and other systems. An important aspect of mitigating the impacts of drought is an effective method of forecasting future drought events. In this study, five methods of forecasting drought for short lead times were explored in the Awash River Basin of Ethiopia. The Standardized Precipitation Index (SPI) was the drought index chosen to represent drought in the basin. Machine learning techniques including artificial neural networks (ANNs) and support vector regression (SVR) were compared with coupled models (WA-ANN and WA-SVR) which pre-process input data using wavelet analysis (WA). This study proposed and tested the SVR and WA-SVR methods for short-term drought forecasting. This study also used only the approximation series (derived via wavelet analysis) as inputs to the ANN and SVR models, and found that using just the approximation series as inputs for models gave good forecast results. The forecast results of all five data driven models were compared using several performance measures (RMSE, MAE, \(R^{2}\) and a measure of persistence). The forecast results of this study indicate that the coupled wavelet neural network (WA-ANN) models were the best models for forecasting SPI 3 and SPI 6 values over lead times of 1 and 3 months in the Awash River Basin.


Introduction

Drought is a natural phenomenon that occurs when precipitation is significantly lower than normal (Belayneh et al. 2014; Saadat et al. 2011). These deficits may cause low crop yields for agriculture, reduced flows for ecological systems, loss of biodiversity and other problems for the environment. They also adversely affect the hydroelectric industry and cause deficits in the drinking water supply, which can negatively affect local populations. The less predictable characteristics of droughts such as their initiation, termination, frequency and severity can make drought both a hazard and a disaster. Drought is characterized as a hazard because it is a natural accident of unpredictable occurrence, but of recognizable recurrence (Mishra and Singh 2010). Drought is also characterized as a disaster because it corresponds to the failure of the precipitation regime, causing the disruption of the water supply to natural and agricultural ecosystems as well as to other human activities (Mishra and Singh 2010). Droughts account for 22 % of the global damage caused by natural disasters (Keshavarz et al. 2013).

Droughts have also had a great impact in Africa. The Sahel has experienced droughts of unprecedented severity in recorded history (Mishra and Singh 2010). The impacts in sub-Saharan Africa are more severe because rain-fed agriculture comprises 95 % of all agriculture (Keshavarz et al. 2013). In 2009, reduced rainfall levels led to an increase in the frequency of droughts and resulted in an increase of 53 million food insecure people in the region (Husak et al. 2013). Due to the changes in climate in Africa and around the world (Adamowski et al. 2009, 2010; Nalley et al. 2012, 2013; Pingale et al. 2014), it is likely that droughts will become more severe in the future.

Because droughts evolve slowly, their consequences take a significant amount of time after inception to be perceived by both ecological and socio-economic systems. This characteristic makes effective mitigation of the most adverse drought impacts more feasible than for other extreme events such as floods, earthquakes or hurricanes, provided that a drought monitoring system is in operation that can promptly warn of the onset of a drought and follow its evolution in space and time (Rossi et al. 2007). A careful selection of indices for drought identification, providing a synthetic and objective description of current and future drought conditions, is a key point for the implementation of an efficient drought warning system (Cacciamani et al. 2007). This can help local stakeholders adapt to the effects of droughts in an effective and sustainable manner (Halbe et al. 2013; Kolinjivadi et al. 2014a, b; Straith et al. 2014; Inam et al. 2015; Butler and Adamowski 2015).

In this study, the drought index chosen to forecast drought is the Standardized Precipitation Index (SPI), which was developed to quantify a precipitation deficit for different time scales (Guttman 1999). The SPI was selected for the Awash River Basin mainly because it requires precipitation as its only input. Furthermore, it has been determined that precipitation alone can explain most of the variability of East African droughts and that the SPI is an appropriate index for monitoring droughts in East Africa (Ntale and Gan 2003).

In hydrologic drought forecasting, stochastic methods have been traditionally used to forecast drought indices. Markov Chain models (Paulo et al. 2005; Paulo and Pereira 2008) and autoregressive integrated moving average models (ARIMA) (Mishra and Desai 2005, 2006; Mishra et al. 2007; Han et al. 2010) have been the most widely used stochastic models for hydrologic drought forecasting. The major limitation of these models is that they are linear models and they are not very effective in forecasting non-linearities, a common characteristic of hydrologic data (Tiwari and Adamowski 2014; Campisi et al. 2012; Adamowski et al. 2012; Haidary et al. 2013).

In response to non-linear data, researchers in the last two decades have increasingly begun to forecast hydrological data using artificial neural networks (ANNs). ANNs have been used to forecast droughts in several studies (Mishra and Desai 2006; Morid et al. 2007; Bacanli et al. 2008; Barros and Bowden 2008; Cutore et al. 2009; Karamouz et al. 2009; Marj and Meijerink 2011). However, ANNs are limited in their ability to deal with non-stationarities in the data, a weakness also shared by ARIMA and other stochastic models.

Support vector regression (SVR) models are a relatively new form of machine learning, developed by Vapnik (1995), that has recently been used in the field of hydrological forecasting. Khan and Coulibaly (2006) found that an SVR model was more effective at predicting 3–12 month lake water levels than ANN models. Kisi and Cimen (2009) used SVRs to estimate daily evaporation. SVRs have also been successfully used to predict hourly streamflow (Asefa et al. 2006), and were shown to perform better than ANN models (Wang et al. 2009) and ARIMA models (Maity et al. 2010) for monthly streamflow prediction. In addition, SVRs have been applied in drought forecasting (Belayneh and Adamowski 2012).

Wavelet analysis, an effective tool to deal with non-stationary data, is an emerging tool for hydrologic forecasting and has recently been applied to: examine the rainfall–runoff relationship in a Karstic watershed (Labat et al. 1999), to characterize daily streamflow (Saco and Kumar 2000) and monthly reservoir inflow (Coulibaly et al. 2000), to evaluate rainfall–runoff models (Lane 2007), to forecast river flow (Adamowski 2008; Adamowski and Sun 2010; Ozger et al. 2012; Rathinasamy et al. 2014; Nourani et al. 2014), to forecast groundwater levels (Adamowski and Chan 2011), to forecast future precipitation values (Partal and Kisi 2007) and for the purposes of drought forecasting (Kim and Valdes 2003; Ozger et al. 2012; Mishra and Singh 2012; Belayneh and Adamowski 2012).

The effectiveness of these data-driven models and wavelet analysis, coupled with ANN and SVR models, has been shown in a variety of study locations. Kim and Valdes (2003) used WA-ANN models to forecast drought in the semi-arid climate of the Conchos River Basin of Mexico. Mishra and Desai (2006) used ANN models to forecast drought in the Kansabati River Basin of India. Bacanli et al. (2008) forecast the SPI in Central Anatolia where the precipitation was concentrated in the spring and winter and where the temperature difference between summer and winter was extremely high. Ozger et al. (2012) coupled wavelet analysis with artificial intelligence models to forecast long term drought in Texas, while Mishra and Singh (2012) investigated the relationship between meteorological drought and hydrological drought using wavelet analysis in different regions of the United States. While the principal reason for the use of these models in the aforementioned areas is the susceptibility of these regions to drought, the variability in climatic conditions highlights how versatile and effective these new forecasting methods are.

Belayneh and Adamowski (2012) forecast SPI 3 and SPI 12 in the Awash River Basin using ANN, SVR and WA-ANN models. This study complements that study by forecasting the SPI over a larger selection of stations in the same area, and by coupling SVR models with wavelet transforms. The main objective of the present study was to compare a traditional drought forecasting method (ARIMA) with machine learning techniques (ANNs and SVR) and with their wavelet-coupled counterparts, namely ANNs with data pre-processed using wavelet transforms (WA-ANN) and the coupling of wavelet transforms and support vector regression (WA-SVR), for short-term drought forecasting. SPI 3 and SPI 6 were forecast using the above mentioned methods for lead times of 1 and 3 months in the Awash River Basin of Ethiopia. Both SPI 3 and SPI 6 are short-term drought indicators, and forecast lead times of 1 and 3 months represent the shortest possible monthly lead time and a short seasonal lead time, respectively.

Theoretical development

Development of SPI series

The SPI was developed by McKee et al. (1993). A number of advantages arise from the use of the SPI index. First, the index is based on precipitation alone making its evaluation relatively easy (Cacciamani et al. 2007). Secondly, the index makes it possible to describe drought on multiple time scales (Tsakiris and Vangelis 2004; Mishra and Desai 2006; Cacciamani et al. 2007). A third advantage of the SPI is its standardization which makes it particularly well suited to compare drought conditions among different time periods and regions with different climates (Cacciamani et al. 2007). A drought event occurs at the time when the value of the SPI is continuously negative; the event ends when the SPI becomes positive. Table 1 provides a drought classification based on SPI. Details regarding the computation of the SPI can be found in Belayneh and Adamowski (2012) and Mishra and Desai (2006).
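The event definition above (a drought lasts while the SPI stays negative and ends when it becomes positive) can be illustrated with a minimal Python sketch. The function name `drought_events` and the example values are hypothetical, and treating an SPI of exactly zero as the end of an event is an assumption not stated in the text.

```python
def drought_events(spi):
    """Return (start, end) index pairs for runs of consecutive negative SPI values."""
    events = []
    start = None
    for i, value in enumerate(spi):
        if value < 0 and start is None:
            start = i  # first negative value opens an event
        elif value >= 0 and start is not None:
            events.append((start, i - 1))  # event closes at the last negative value
            start = None
    if start is not None:
        # The series ended while the SPI was still negative.
        events.append((start, len(spi) - 1))
    return events


# Hypothetical monthly SPI values, for illustration only.
spi_example = [0.4, -0.2, -1.1, -0.6, 0.3, 0.8, -0.5, -1.6]
print(drought_events(spi_example))
```

Each returned pair gives the first and last month index of one drought event, from which duration and severity (for instance, the sum of SPI values over the event) could then be derived.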

Autoregressive integrated moving average (ARIMA) models

Autoregressive integrated moving average models are amongst the most commonly used stochastic models for drought forecasting (Mishra and Desai 2005, 2006; Mishra et al. 2007; Cancelliere et al. 2007; Han et al. 2010).

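The general non-seasonal ARIMA model may be written as (Box and Jenkins 1976):

\[z_{t} = \frac{\theta (B)a_{t} }{\phi (B)\nabla^{d} } \tag{1}\]

\[\phi (B) = 1 - \phi_{1} B - \phi_{2} B^{2} - \cdots - \phi_{p} B^{p} \tag{2}\]

and

\[\theta (B) = 1 - \theta_{1} B - \theta_{2} B^{2} - \cdots - \theta_{q} B^{q} \tag{3}\]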

where \(z_{t}\) is the observed time series and B is a back shift operator. \(\phi (B)\) and \(\theta (B)\) are polynomials of order p and q, respectively. The orders p and q are the order of non-seasonal auto-regression and the order of non-seasonal moving average, respectively. Random errors, \(a_{t}\), are assumed to be independently and identically distributed with a mean of zero and a constant variance. \(\nabla^{d}\) describes the differencing operation applied to the data series to make it stationary, and d is the number of regular differencing operations.

The time series model development consists of three stages: identification, estimation and diagnostic check (Box et al. 1994). In the identification stage, data transformation is often needed to make the time series stationary. Stationarity is a necessary condition in building an ARIMA model that is useful for forecasting (Zhang 2003). The estimation stage of model development consists of the estimation of model parameters. The last stage of model building is the diagnostic checking of model adequacy. This stage checks if the model assumptions about the errors are satisfied. Several diagnostic statistics and plots of the residuals can be used to examine the goodness of fit of the tentative model to the observed data. If the model is inadequate, a new tentative model should be identified, which is subsequently followed, again, by the stages of estimation and diagnostic checking.
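The differencing operation \(\nabla^{d}\) used to obtain a stationary series in the identification stage can be sketched in a few lines of Python. This is an illustrative sketch only; the function name `difference` and the example series are hypothetical and do not come from the study.

```python
def difference(series, d=1):
    """Apply regular differencing d times: each pass replaces z_t with z_t - z_(t-1)."""
    out = list(series)
    for _ in range(d):
        out = [out[t] - out[t - 1] for t in range(1, len(out))]
    return out


# A series with a linear trend becomes constant after one difference.
trend = [1.0, 3.0, 5.0, 7.0, 9.0]
print(difference(trend, d=1))
print(difference(trend, d=2))
```

Each pass shortens the series by one value, which is why d is usually kept small.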

Artificial neural network models

ANNs are flexible computing frameworks for modeling a broad range of nonlinear problems. They have many features which are attractive for forecasting such as their rapid development, rapid execution time and their ability to handle large amounts of data without very detailed knowledge of the underlying physical characteristics (ASCE 2000a, b).

The ANN models used in this study have a feed forward multi-layer perceptron (MLP) architecture which was trained with the Levenberg–Marquardt (LM) back propagation algorithm. MLPs have often been used in hydrologic forecasting due to their simplicity. MLPs consist of an input layer, one or more hidden layers, and an output layer. The hidden layer contains the neuron-like processing elements that connect the input and output layers, given by (Belayneh and Adamowski 2012):

\[y_{k}^{\prime } (t) = f_{0} \left[ \sum\limits_{j = 1}^{m} w_{kj} \, f_{n} \left( \sum\limits_{i = 1}^{N} w_{ji} x_{i} (t) + w_{j0} \right) + w_{k0} \right] \tag{4}\]

where N is the number of samples, m is the number of hidden neurons, \(x_{i} (t)\) = the ith input variable at time step t; \(w_{ji}\) = weight that connects the ith neuron in the input layer and the jth neuron in the hidden layer; \(w_{j0}\) = bias for the jth hidden neuron; \(f_{n}\) = activation function of the hidden neuron; \(w_{kj}\) = weight that connects the jth neuron in the hidden layer and kth neuron in the output layer; \(w_{k0}\) = bias for the kth output neuron; \(f_{0}\) = activation function for the output neuron; and \(y_{k}^{\prime } (t)\) is the forecasted kth output at time step t (Kim and Valdes 2003).
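As a minimal sketch of the MLP mapping described above, the following Python function computes the output of a network with one hidden layer and a single output neuron. It assumes a hyperbolic tangent activation in the hidden layer and a linear output activation; the function name `mlp_forward` and all weight values are hypothetical placeholders, not fitted parameters from the study.

```python
import math


def mlp_forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass of a one-hidden-layer MLP with a single linear output neuron.

    x        : list of input values x_i(t)
    w_hidden : one row of input weights w_ji per hidden neuron j
    b_hidden : bias w_j0 for each hidden neuron
    w_out    : weights w_kj from each hidden neuron to the output neuron
    b_out    : bias w_k0 of the output neuron
    """
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(w_row, x)) + b)
        for w_row, b in zip(w_hidden, b_hidden)
    ]
    # Linear output activation: weighted sum of hidden outputs plus bias.
    return sum(w * h for w, h in zip(w_out, hidden)) + b_out


# Hypothetical network with 3 inputs and 2 hidden neurons.
x = [0.5, 0.2, 0.1]
w_hidden = [[0.4, -0.3, 0.2], [0.1, 0.6, -0.5]]
b_hidden = [0.0, 0.1]
w_out = [0.7, -0.2]
b_out = 0.05
print(mlp_forward(x, w_hidden, b_hidden, w_out, b_out))
```

Training then amounts to adjusting the entries of `w_hidden`, `b_hidden`, `w_out` and `b_out` so that the output error is minimized.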

MLPs were trained with the LM back propagation algorithm. This algorithm is based on the steepest gradient descent method and Gauss–Newton iteration. In the learning process, the interconnection weights are adjusted using the error convergence technique to obtain a desired output for a given input. In general, the error at the output layer in the model propagates backwards to the input layer through the hidden layer in the network to obtain the final desired output. The gradient descent method is utilized to calculate the weight of the network and adjusts the weight of interconnections to minimize the output error.

Support vector regression models

Support vector regression (SVR) was introduced by Vapnik (1995) in an effort to characterize the properties of learning machines so that they can generalize well to unseen data (Kisi and Cimen 2011). SVRs embody the structural risk minimization principle, unlike conventional neural networks which adhere to the empirical risk minimization principle. As a result, SVRs seek to minimize the generalization error, while ANNs seek to minimize training error.

In regression estimation with SVR, the purpose is to estimate a functional dependency \(f(\vec{x})\) between a set of sampled points \(X = \{ \vec{x}_{1} , \vec{x}_{2} , \ldots , \vec{x}_{l} \}\) taken from \(R^{n}\) and target values \(Y = \{ y_{1} ,y_{2} , \ldots ,y_{l} \}\) with \(y_{i} \in R\) [the input and target vectors (\(\vec{x}_{i}\)'s and \(y_{i}\)'s) refer to the monthly records of the SPI index]. Detailed descriptions of SVR model development can be found in Cimen (2008).

Wavelet transforms

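The first step in wavelet analysis is to choose a mother wavelet (\(\psi\)). The continuous wavelet transform (CWT) is defined as the sum over all time of the signal \(x(t)\) multiplied by scaled and shifted versions of the wavelet function \(\psi\) (Nason and Von Sachs 1999):

\[W(\tau ,s) = \frac{1}{\sqrt {\left| s \right|} }\int\limits_{ - \infty }^{\infty } x(t)\,\psi^{*} \left( \frac{t - \tau }{s} \right)dt \tag{5}\]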

where s is the scale parameter; \(\tau\) is the translation and * corresponds to the complex conjugate (Kim and Valdes 2003). The CWT produces a continuum of all scales as the output. Each scale corresponds to the width of the wavelet; hence, a larger scale means that more of a time series is used in the calculation of the coefficient than in smaller scales. The CWT is useful for processing different images and signals; however, it is not often used for forecasting due to its complexity and the time required to compute it. Instead, the wavelet is often discretized in forecasting applications to simplify the numerical calculations. The discrete wavelet transform (DWT) requires less computation time and is simpler to implement. DWT scales and positions are usually based on powers of two (dyadic scales and positions). This is achieved by modifying the wavelet representation to (Cannas et al. 2006):

\[\psi_{j,k} (t) = \frac{1}{\sqrt {\left| s_{0}^{j} \right|} }\psi \left( \frac{t - k\tau_{0} s_{0}^{j} }{s_{0}^{j} } \right) \tag{6}\]

where j and k are integers that control the scale and translation respectively, while \(s_{0} > 1\) is a fixed dilation step (Cannas et al. 2006) and \(\tau_{0}\) is a translation factor that depends on the aforementioned dilation step. The effect of discretizing the wavelet is that the time–space scale is now sampled at discrete levels. The DWT operates with two sets of functions: high-pass and low-pass filters. The original time series is passed through high-pass and low-pass filters, and detailed coefficients and approximation series are obtained.

One of the inherent challenges of using the DWT for forecasting applications is that it is not shift invariant (i.e. if we change values at the beginning of our time series, all of the wavelet coefficients will change). To overcome this problem, a redundant algorithm, known as the à trous algorithm, can be used, given by (Mallat 1998):

\[c_{i + 1} (k) = \sum\limits_{l = - \infty }^{ + \infty } h(l)\,c_{i} (k + 2^{i} l) \tag{7}\]

where h is the low pass filter and the finest scale is the original time series. To extract the details, \(w_{i} (k)\), that were eliminated in Eq. (7), the smoothed version of the signal is subtracted from the coarser signal that preceded it, given by (Murtagh et al. 2003):

\[w_{i} (k) = c_{i - 1} (k) - c_{i} (k) \tag{8}\]

where \(c_{i} (k)\) is the approximation of the signal and \(c_{i - 1} (k)\) is the coarser signal. Each application of Eqs. (7) and (8) creates a smoother approximation and extracts a higher level of detail. Finally, the non-symmetric Haar wavelet can be used as the low pass filter to prevent any future information from being used during the decomposition (Renaud et al. 2002).
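The à trous decomposition with a non-symmetric Haar low pass filter can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the study: the filter is taken as h = (1/2, 1/2) acting on the current value and the value \(2^{i}\) steps in the past, and the start of the series is handled by reusing the first value (a hypothetical boundary rule). The function name `a_trous_haar` is also hypothetical.

```python
def a_trous_haar(series, levels):
    """Decompose a series into one approximation and `levels` detail series.

    Each level smooths the previous approximation with a causal Haar filter,
    so the coefficient at time k depends only on values at or before k.
    """
    approx = list(series)  # the finest scale is the original time series
    details = []
    for i in range(levels):
        shift = 2 ** i  # the filter taps move further apart at each level
        smoother = []
        for k in range(len(approx)):
            # Assumed boundary rule: reuse the first value when k - shift < 0.
            past = approx[k - shift] if k - shift >= 0 else approx[0]
            smoother.append(0.5 * (approx[k] + past))
        # Detail = previous (coarser) signal minus its smoothed version.
        details.append([a - s for a, s in zip(approx, smoother)])
        approx = smoother
    return approx, details


# Hypothetical SPI-like values, for illustration only.
series = [0.3, -0.4, -1.2, -0.8, 0.1, 0.9, 0.5, -0.2]
approx, details = a_trous_haar(series, levels=2)
reconstructed = [approx[k] + sum(d[k] for d in details) for k in range(len(series))]
print(approx)
print(reconstructed)
```

Because the decomposition is additive, the final approximation plus all detail series recovers the original series, and every output series has the same length as the input. This is what allows the approximation series to be used directly as a model input.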

Study areas

In this study, the SPI was forecast for the Awash River Basin in Ethiopia (Fig. 1). The Awash River Basin was separated into three smaller basins for the purpose of this study on the basis of various factors such as location, altitude, climate, topography and agricultural development. The statistics of each station are shown in Table 2. Drought is a common occurrence in the Awash River Basin (Edossa et al. 2010). The heavy dependence of the population on rain-fed agriculture has made the people and the country’s economy extremely vulnerable to the impacts of droughts. The mean annual rainfall of the basin varies from about 1600 mm in the highlands to 160 mm in the northern point of the basin. The total amount of rainfall also varies greatly from year to year, resulting in severe droughts in some years and flooding in others. The total annual surface runoff in the Awash Basin amounts to some \(4900 \times 10^{6}\ \text{m}^{3}\) (Edossa et al. 2010). Effective forecasts of the SPI can be used for mitigating the impacts of drought that result from rainfall shortages in the area. Rainfall records from 1970 to 2005 were used to generate SPI 3 and SPI 6 time series. The normal ratio method, recommended by Linsley et al. (1988), was used to estimate the missing rainfall records at some stations.

Fig. 1 Awash River Basin (Source: Ministry of Water Resources, Ethiopia. Agricultural Water Management Information System. http://www.mowr.gov.et/AWMISET/images/Awash_agroecologyv3.pdf. Accessed 06-June-2013)

Methodology

ARIMA model development

Based on the Box and Jenkins approach, ARIMA models for the SPI time series were developed based on three steps: model identification, parameter estimation and diagnostic checking. The details on the development of ARIMA models for SPI time series can be found in the works of Mishra and Desai (2005) and Mishra et al. (2007).

In an ARIMA model, the value of a given time series is a linear aggregation of p previous values and a weighted sum of q previous deviations (Mishra and Desai 2006). These ARIMA models are autoregressive to order p and moving average to order q and operate on the dth difference of the given time series. Hence, an ARIMA model is characterized by three parameters (p, d, q), each of which is a non-negative integer.
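The "linear aggregation of p previous values and a weighted sum of q previous deviations" can be made concrete with a one-step-ahead forecast on an already differenced series. The sketch below follows the Box and Jenkins sign convention, in which the moving average terms enter with a negative sign, and sets the unknown future error to its expected value of zero. The function name `arma_one_step` and the coefficient values are hypothetical.

```python
def arma_one_step(history, residuals, phi, theta):
    """One-step-ahead ARMA(p, q) forecast for a stationary (differenced) series.

    history   : past values, most recent last
    residuals : past one-step forecast errors, most recent last
    phi       : autoregressive coefficients phi_1 .. phi_p
    theta     : moving average coefficients theta_1 .. theta_q
    """
    # phi_1 multiplies the most recent value, phi_2 the one before it, and so on.
    ar_part = sum(p * z for p, z in zip(phi, reversed(history)))
    ma_part = sum(t * a for t, a in zip(theta, reversed(residuals)))
    return ar_part - ma_part


# Hypothetical ARMA(1, 1) coefficients and data, for illustration only.
print(arma_one_step(history=[0.2, 0.5], residuals=[0.1], phi=[0.6], theta=[0.3]))
```

For d > 0, the forecast of the differenced series would then be added back to the last observed value to return to the original scale.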

Wavelet transformation

When conducting wavelet analysis, the number of decomposition levels that is appropriate for the data must be chosen. Often the number of decomposition levels is chosen according to the signal length (Tiwari and Chatterjee 2010), given by L = int[log(N)], where L is the level of decomposition and N is the number of samples. According to this methodology the optimal number of decompositions for the SPI time series in this study would have been 3. In this study, each SPI time series was decomposed into between 1 and 9 levels. The best results at each decomposition level were compared to determine the appropriate level. The optimal decomposition level varied between models. Once a time series was decomposed to an appropriate level, three input options were considered: the approximation series on its own, the approximation series in combination with relevant detail series, or the sum of the relevant detail series without the approximation series. With most SPI time series, choosing just the approximation series resulted in the best forecast results. In some cases, the summation of the approximation series with a decomposed detail series yielded the best forecast results. The appropriate approximation was used as an input to the ANN and SVR models. As discussed in “Wavelet transforms”, the à trous wavelet algorithm with a low pass Haar filter was used.

ANN models

The ANN models used to forecast the SPI were recursive models. The input layer for the models was comprised of the SPI values computed from each rainfall gauge in each sub-basin. The input data was standardized from 0 to 1.

All ANN models without wavelet decomposed inputs were created with the MATLAB (R2010a) ANN toolbox. The hyperbolic tangent sigmoid transfer function was the activation function for the hidden layer, while the activation function for the output layer was a linear function. All the ANN models in this study were trained using the LM back propagation algorithm. The LM back propagation algorithm was chosen because of its efficiency and reduced computational time in training models (Adamowski and Chan 2011).

There are between 3 and 5 inputs for each ANN model. The optimal number of input neurons was determined by trial and error, with the number of neurons that exhibited the lowest root mean square error (RMSE) value in the training set being selected. The inputs and outputs were normalized between 0 and 1. Traditionally the number of hidden neurons for ANN models is selected via a trial and error method. However a study by Wanas et al. (1998) empirically determined that the best performance of a neural network occurs when the number of hidden nodes is equal to log(N), where N is the number of training samples. Another study conducted by Mishra and Desai (2006) determined that the optimal number of hidden neurons is 2n + 1, where n is the number of input neurons. In this study, the optimal number of hidden neurons was determined to be between log(N) and (2n + 1). For example, if using the method proposed by Wanas et al. (1998) gave a result of four hidden neurons and using the method proposed by Mishra and Desai (2006) gave seven hidden neurons, the optimal number of hidden neurons is between 4 and 7; thereafter the optimal number was chosen via trial and error. These two methods helped establish an upper and lower bound for the number of hidden neurons.

For all the ANN models, 80 % of the data was used to train the models, while the remaining 20 % of the data was divided into a testing and validation set with each set comprising 10 % of the data.
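The normalization to the range 0 to 1 and the 80/10/10 partition can be sketched as below. Two assumptions are made that the text does not state explicitly: normalization is taken to be min-max scaling, and the partition is taken to be chronological (earliest data for training). The function names `min_max_scale` and `chronological_split` are hypothetical.

```python
def min_max_scale(values):
    """Rescale values linearly so that the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return [(v - lo) / (hi - lo) for v in values]


def chronological_split(samples):
    """Split samples in time order into 80 % training, 10 % testing, 10 % validation."""
    n = len(samples)
    n_train = n * 8 // 10  # integer arithmetic avoids floating point rounding
    n_test = n // 10
    train = samples[:n_train]
    test = samples[n_train:n_train + n_test]
    validation = samples[n_train + n_test:]  # whatever remains, roughly 10 %
    return train, test, validation


# Hypothetical series of 430 monthly values, for illustration only.
samples = list(range(430))
train, test, validation = chronological_split(samples)
print(len(train), len(test), len(validation))
print(min_max_scale([-2.0, 0.0, 2.0]))
```

The same split function would cover the 90/10 calibration and validation partition used for the SVR models if the training and testing parts were merged.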

WA-ANN models

The WA-ANN models were trained in the same way as the ANN models, with the exception that the inputs were made up of either the approximation series or a combination of the approximation and detail series, after the appropriate wavelet decomposition was selected. The model architecture for WA-ANN models consists of 3–5 neurons in the input layer, 4–7 neurons in the hidden layer and one neuron in the output layer. The selection of the optimal number of neurons in both the input and hidden layers was done in the same way as for the ANN models. The data was partitioned into training, testing and validation sets in the same manner as for the ANN models.

Support vector regression models

All SVR models were created using the OnlineSVR software created by Parrella (2007), which can be used to build support vector machines for regression. The data was partitioned into two sets: a calibration set and a validation set. 90 % of the data was partitioned into the calibration set while the final 10 % of the data was used as the validation set. Unlike neural networks the data can only be partitioned into two sets with the calibration set being equivalent to the training and testing sets found in neural networks. All inputs and outputs were normalized between 0 and 1.

All SVR models used the nonlinear radial basis function (RBF) kernel. As a result, each SVR model had three parameters to be selected: gamma (γ), cost (C), and epsilon (ε). The γ parameter is a constant that reduces the model space and controls the complexity of the solution, while C is a positive constant that is a capacity control parameter, and ε is the loss function that describes the regression vector without all the input data (Kisi and Cimen 2011). These three parameters were selected based on a trial and error procedure. The combination of parameters that produced the lowest RMSE values for the calibration data sets was selected.
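To make the roles of γ and ε more concrete, the sketch below shows the RBF kernel in one commonly used parameterization, \(K(\vec{x}, \vec{x}') = \exp ( - \gamma \| \vec{x} - \vec{x}' \|^{2} )\), together with the ε-insensitive loss that is standard in SVR. Whether the OnlineSVR software uses exactly this parameterization of γ is an assumption; the function names are hypothetical.

```python
import math


def rbf_kernel(x1, x2, gamma):
    """RBF kernel: similarity decays with squared distance, at a rate set by gamma."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x1, x2))
    return math.exp(-gamma * sq_dist)


def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Errors smaller than epsilon cost nothing; larger errors cost the excess."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


# Hypothetical input vectors and targets, for illustration only.
print(rbf_kernel([0.2, 0.4, 0.6], [0.2, 0.4, 0.6], gamma=0.5))
print(rbf_kernel([0.2, 0.4, 0.6], [0.9, 0.1, 0.3], gamma=0.5))
print(epsilon_insensitive_loss(0.50, 0.55, epsilon=0.1))
print(epsilon_insensitive_loss(0.50, 0.90, epsilon=0.1))
```

In this form, a larger γ makes the kernel more local, a larger ε widens the band of errors that are ignored, and C (not shown) weights the penalty on errors outside that band against the flatness of the fitted function.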

WA-SVR models

The WA-SVR models were trained in exactly the same way as the SVR models with the OnlineSVR software (Parrella 2007), with the exception that the inputs were wavelet decomposed.

The data for WA-SVR models was partitioned exactly like the data for SVR. The optimal parameters for the WA-SVR models were chosen using the same procedure used to find the parameters for SVR models.

Performance measures

To evaluate the performances of the aforementioned data driven models the following measures of goodness of fit were used:

\[R^{2} = \frac{\sum\nolimits_{i = 1}^{N} (\hat{y}_{i} - \bar{y})^{2} }{\sum\nolimits_{i = 1}^{N} (y_{i} - \bar{y})^{2} },\qquad \bar{y} = \frac{1}{N}\sum\limits_{i = 1}^{N} y_{i} \tag{9}\]

where \(\bar{y}\) is the mean value taken over N, \(y_{i}\) is the observed value, \(\hat{y}_{i}\) is the forecasted value and N is the number of samples. The coefficient of determination measures the degree of correlation among the observed and predicted values. It is a measure of the strength of the model in developing a relationship among input and output variables. The higher the value of \(R^{2}\) (with 1 being the highest possible value), the better the performance of the model.

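\[RMSE = \sqrt {\frac{SSE}{N}} \tag{10}\]

where SSE is the sum of squared errors, and N is the number of data points used. SSE is given by:

\[SSE = \sum\limits_{i = 1}^{N} (y_{i} - \hat{y}_{i} )^{2} \tag{11}\]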

with the variables already having been defined. The RMSE evaluates the variance of errors independently of the sample size.

\[MAE = \frac{1}{N}\sum\limits_{i = 1}^{N} \left| y_{i} - \hat{y}_{i} \right| \tag{12}\]

The MAE is used to measure how close forecasted values are to the observed values. It is the average of the absolute errors.
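The three performance measures can be computed as in the following sketch. RMSE and MAE follow directly from their definitions above. For the coefficient of determination, the sketch assumes the ratio of forecast variation to observed variation about the observed mean; other definitions of \(R^{2}\) exist and may give different values for imperfect forecasts. The function names and example values are hypothetical.

```python
import math


def rmse(observed, forecast):
    """Root mean square error: square root of SSE divided by N."""
    sse = sum((o - f) ** 2 for o, f in zip(observed, forecast))
    return math.sqrt(sse / len(observed))


def mae(observed, forecast):
    """Mean absolute error: average of the absolute forecast errors."""
    return sum(abs(o - f) for o, f in zip(observed, forecast)) / len(observed)


def r_squared(observed, forecast):
    """Assumed form: variation of forecasts about the observed mean over total variation."""
    mean_obs = sum(observed) / len(observed)
    explained = sum((f - mean_obs) ** 2 for f in forecast)
    total = sum((o - mean_obs) ** 2 for o in observed)
    return explained / total


# Hypothetical observed and forecast SPI values, for illustration only.
observed = [-1.2, -0.4, 0.3, 0.9, 0.1]
forecast = [-1.0, -0.6, 0.2, 0.7, 0.3]
print(rmse(observed, forecast), mae(observed, forecast), r_squared(observed, forecast))
```

Lower RMSE and MAE and a higher \(R^{2}\) indicate a better forecast, which is how the models are ranked in the results that follow.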

Results and discussion

In the following sections, the forecast results for the best data driven models at each sub-basin are presented. The forecasts presented are from the validation data sets for time series of SPI 3 and SPI 6, which are mostly used to describe short-term drought (agricultural drought).

SPI 3 forecasts

The SPI 3 forecast results for all data driven models are presented in Tables 3 and 4. As the forecast lead time is increased, the forecast accuracy deteriorates for all stations. In the Upper Awash basin, the best data driven model for SPI 3 forecasts of 1 month lead time was a WA-ANN model. The WA-ANN model at the Ziquala station had the best results in terms of RMSE and MAE, with forecast results of 0.4072 and 0.3918, respectively. The Ginchi station had the best WA-ANN model in terms of \(R^{2}\), with a forecast result of 0.8808. When the forecast lead time is increased to 3 months, the best models remain WA-ANN models. The Bantu Liben station had the model with the lowest RMSE and MAE values of 0.5098 and 0.4941, respectively. The Sebeta station had the best results in terms of \(R^{2}\), with a value of 0.7301.

In the Middle Awash basin, for forecasts of 1 month lead time, WA-ANN and WA-SVR models had the best forecast results. The WA-ANN model at the Modjo station had the best results in terms of \(R^{2}\) with a value of 0.8564. However, unlike the Upper Awash basin, the best forecast results in terms of RMSE and MAE were from a WA-SVR model. The WA-SVR model at the Modjo station had the lowest RMSE and MAE values of 0.4309 and 0.4018, respectively. For forecasts of 3 months lead time, WA-ANN models had the best results across all performance measures, with the Modjo station having the highest value of \(R^{2}\) at 0.6808 and the Gelemsso station having the lowest RMSE and MAE values of 0.5448 and 0.5334, respectively.

In the Lower Awash basin, for forecasts of 1 month lead time, the best results were from WA-ANN and WA-SVR models, similar to the Middle Awash basin. The highest value for \(R^{2}\) was 0.7723 and it was from the WA-ANN model at the Eliwuha station. The lowest values for RMSE and MAE were 0.4048 and 0.3873, and were from the WA-SVR model at the Eliwuha station. For forecasts of 3 months lead time the best results were observed at the Bati station in terms of \(R^{2}\). The WA-SVR model at this station had the highest \(R^{2}\) value of 0.5915 and the WA-SVR model at the Eliwuha station had the lowest RMSE and MAE values of 0.5159 and 0.5129, respectively.

SPI 6 forecasts

The SPI 6 forecast data is presented in Tables 5 and 6. The forecast results for SPI 6 are significantly better than SPI 3 forecasts according to all three performance measures. In the Upper Awash Basin a WA-ANN model at the Bantu Liben station had the best forecast result in terms of RMSE with a result of 0.3438. Another WA-ANN model at the Ginchi station provided the lowest MAE value of 0.3212. The best forecast result in terms of \(R^{2}\), 0.9163, was from a WA-SVR model at the Ziquala station. The forecast results in Tables 5 and 6 show that WA-ANN and WA-SVR models provide the best SPI 6 forecasts. Neither method is significantly better than the other. This is best illustrated by the fact that the models provide very similar results according to the performance measures used.

As the forecast lead time is increased the forecast accuracy of all the models declines. This decline is most evident in the ARIMA, ANN and SVR models. The forecast accuracy for 3 month lead time forecasts is still significantly better for WA-ANN and WA-SVR models. In the Upper Awash Basin the best model in terms of \(R^{2}\) is a WA-ANN model at the Sebeta station, with a forecast result of 0.7723. The best model in terms of RMSE and MAE is a WA-SVR model at the Ginchi station with forecast results of 0.4224 and 0.3864, respectively. In the Middle Awash Basin the best model in terms of \(R^{2}\) is also a WA-ANN model, at the Modjo station, with a result of 0.7414, and the best model in terms of RMSE and MAE is a WA-SVR model at the Dire Dawa station with forecast results of 0.4049 and 0.3897, respectively. In the Lower Basin the best results in terms of \(R^{2}\) and MAE are from WA-SVR models at the Eliwuha and Dubti stations, respectively, and the best model in terms of RMSE is a WA-ANN model at the Dubti station.

Discussion

As shown in the forecast results for both SPI 3 and SPI 6, the use of wavelet analysis increased forecast accuracy for both 1 and 3 month forecast lead times. This pattern is similarly shown in SPI forecasts within the Awash River Basin for lead times of 1 and 6 months in Belayneh and Adamowski (2012). Once the original SPI time series was decomposed using wavelet analysis it was found that the approximation series of the signal was disproportionately more important for future forecasts compared to the wavelet detail series of the signal. Irrespective of the number of decomposition levels, an absence of the approximation series would result in poor forecast results. Adding the wavelet details to the approximation series did not noticeably improve the forecast results compared to using the approximation series on its own in most models. Traditionally, the number of wavelet decompositions is either determined via trial and error or using the formula L = int[log(N)], with N being the number of samples. Using this formula the optimal number of decompositions would be L = 3. In this study, the forecasting procedure was repeated for wavelet decomposition levels 1 through 9 until the appropriate level was determined using the aforementioned performance measures.

In general, WA-ANN and WA-SVR models were the best forecast models in each of the sub-basins. Wavelet-neural networks were also shown to be the best method for forecasting the SPI at 1 and 6 month lead times in Belayneh and Adamowski (2012). Unlike the study by Belayneh and Adamowski (2012), this study also coupled wavelet transforms with SVR models. Coupled WA-SVR models had improved results compared to SVR models and outperformed WA-ANN models on some of the performance measures at some stations.

While both the WA-ANN and WA-SVR models were effective in forecasting SPI 3, most WA-ANN models had more accurate forecasts. In addition, as shown by Figs. 2 and 3, the forecast from the WA-ANN model seems to be more effective in forecasting the extreme SPI values, whether indicative of severe drought or heavy precipitation. While the WA-SVR model closely mirrors the observed SPI trends, it seems to underestimate the extreme events, especially the extreme drought event at 170 months.

WA-ANN models seem to be slightly more effective than WA-SVR models for forecasts of SPI 3, and more effective in forecasting extreme events. Because the wavelet analysis used for both machine learning techniques is the same, this difference is likely due to the practical advantages of ANNs over SVR models, such as their simplicity of development and their reduced computation time. This observation is further supported by the fact that most ANN forecasts have better results than SVR forecasts, as shown in Table 3. Theoretically, SVR models should perform better than ANN models because they adhere to the structural risk minimization principle instead of the empirical risk minimization principle. They should, in theory, not be as susceptible to local minima or maxima. However, the performance of SVR models is highly dependent on the selection of the appropriate kernel and its three parameters. Given that there are no prior studies on the selection of these parameters for forecasts of the SPI, the selection was done via a trial and error procedure. This process is made even more difficult by the size of the data set (monthly data from 1970 to 2005), which contributes to the long computation time of SVR models. The uncertainty regarding the three SVR parameters increases the number of trials required to obtain the optimal model. Due to the long computation time of SVR models, the same number of trials cannot be carried out as for ANN models. For ANN models, even in complex systems, the relationship between input and output variables does not need to be fully understood. Effective models can be determined by varying the number of neurons within the hidden layer. Producing several models with varying architectures is not computationally intensive and allows for a larger selection pool for the optimal model. In addition, the ability of wavelet analysis to effectively forecast local discontinuities likely reduces the susceptibility of ANN models to local minima or maxima when the two are coupled.
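To illustrate why the SVR search is more costly, the sketch below counts the candidate models in a trial and error search. It assumes the three SVR parameters are a cost C, a tube width epsilon and an RBF kernel width gamma, which this section does not name, and all grid values are hypothetical.

```python
from itertools import product

# Hypothetical candidate values; the study's actual search ranges are not given here.
svr_grid = {
    "C": [0.1, 1.0, 10.0, 100.0],
    "epsilon": [0.001, 0.01, 0.1],
    "gamma": [0.01, 0.1, 1.0, 10.0],
}
ann_hidden_neurons = list(range(1, 11))  # single ANN setting varied: hidden-layer size

# Every combination of the three SVR parameters is one model to train.
svr_trials = [dict(zip(svr_grid, combo)) for combo in product(*svr_grid.values())]
ann_trials = [{"hidden_neurons": h} for h in ann_hidden_neurons]

print(len(svr_trials), "SVR candidates vs", len(ann_trials), "ANN candidates")
```

Each added parameter multiplies the number of SVR fits, so when a single fit is slow the search has to be coarser than the one-dimensional search over hidden neurons.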

This study also shows that the à trous algorithm is an effective tool for forecasting SPI time series. The à trous algorithm de-noises a given time series and improves the performance of both ANN and SVR models. The à trous algorithm is shift invariant, making it more applicable to forecasting studies, including drought forecasting. The fact that wavelet based models had the best results is likely because wavelet decomposition was able to capture non-stationary features of the data.
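As a rough illustration of the decomposition, the sketch below implements an à trous transform with a causal Haar low-pass filter. The filter choice, the boundary handling (early samples reuse the first value) and the sample series are assumptions made for this example; the text above does not specify the filter used in the study.

```python
def a_trous_haar(series, levels):
    """Return (approximation, details) from a causal Haar a trous transform."""
    approx = list(series)
    details = []
    for j in range(1, levels + 1):
        lag = 2 ** (j - 1)  # the "holes": filter taps spread apart at each level
        # Average each value with the one `lag` steps earlier; clamp at index 0.
        smoothed = [0.5 * (approx[t] + approx[max(t - lag, 0)])
                    for t in range(len(approx))]
        # The detail series is what the smoothing removed at this level.
        details.append([a - s for a, s in zip(approx, smoothed)])
        approx = smoothed
    return approx, details

# Hypothetical monthly SPI values, for illustration only.
spi = [0.3, -0.8, -1.2, 0.1, 0.9, 1.4, -0.2, -1.6, -0.5, 0.7, 0.2, -0.4]
approx, details = a_trous_haar(spi, levels=3)

# The approximation plus all detail series reproduces the input.
rebuilt = [approx[t] + sum(d[t] for d in details) for t in range(len(spi))]
```

No decimation takes place, so every component has the same length as the input, and the filter here uses only current and past values. Because the components sum back to the original series, a model can be fed the approximation alone, as in this study, or any subset of the components.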

Conclusion

This study explored forecasting short-term drought conditions using five different data driven models in the Awash River Basin, including newly proposed methods based on SVR and WA-SVR. With respect to wavelet analysis, this study found, for the first time, that the use of only the approximation series was effective in de-noising a given SPI time series. SPI 1 and SPI 3 were forecast over lead times of 1 and 3 months using ARIMA, ANN, SVR, WA-SVR and WA-ANN models. Forecast results for SPI 1 were low in terms of the coefficient of determination, likely as a result of the low levels of autocorrelation in its data sets compared to SPI 3. Overall, the WA-ANN method, with a new method for determining the optimal number of neurons within the hidden layer, had the best forecast results, with WA-SVR models also having very good results. Wavelet coupled models consistently showed lower values of RMSE and MAE compared to the other data driven models, possibly because wavelet decomposition de-noises a given time series, allowing either ANN or SVR models to forecast the main signal rather than the main signal with noise.

Two of the three Awash River sub-basins have semi-arid climates. The effectiveness of the WA-ANN and WA-SVR models indicates that these models may be effective forecast tools in semi-arid regions. The forecasts did not show a particular trend with respect to sub-basin, and the climatology of a given sub-basin did not significantly affect the forecast results for any particular station. Because this study did not find a clear link between sub-basin and performance, further studies in different climates are needed to determine whether there is a significant link between forecast accuracy and climate. Future studies should also compare the effectiveness of data driven methods in forecasting different drought indices in other regions. The coupling of these data driven models with uncertainty analysis techniques such as bootstrapping should be investigated. In addition, coupling SVR models with genetic algorithms to make parameter estimation more efficient could be explored.

References

Adamowski J (2008) Development of a short-term river flood forecasting method for snowmelt driven floods based on wavelet and cross-wavelet analysis. J Hydrol 353:247–266

Adamowski J, Chan HF (2011) A wavelet neural network conjunction model for groundwater level forecasting. J Hydrol 407:28–40

Adamowski J, Sun K (2010) Development of a coupled wavelet transform and neural network method for flow forecasting of non-perennial rivers in semi-arid watersheds. J Hydrol 390:85–91

Adamowski K, Prokoph A, Adamowski J (2009) Development of a new method of wavelet aided trend detection and estimation. Hydrol Process 23:2686–2696

Adamowski J, Adamowski K, Bougadis J (2010) Influence of trend on short duration design storms. Water Resour Manage 24:401–413

Adamowski J, Chan H, Prasher S, Sharda VN (2012) Comparison of multivariate adaptive regression splines with coupled wavelet transform artificial neural networks for runoff forecasting in Himalayan micro-watersheds with limited data. J Hydroinform 3:731–744

ASCE Task Committee on Application of Artificial Neural Networks in Hydrology (2000a) Artificial neural networks in hydrology. I. Preliminary concepts. J Hydrol Eng 5(2):124–137

ASCE Task Committee on Application of Artificial Neural Networks in Hydrology (2000b) Artificial neural networks in hydrology. II. Hydrologic applications. J Hydrol Eng 5(2):115–123

Asefa T, Kemblowski M, McKee M, Khalil A (2006) Multi-time scale stream flow predictions: the support vector machines approach. J Hydrol 318(1–4):7–16

Bacanli UG, Firat M, Dikbas F (2008) Adaptive neuro-fuzzy inference system for drought forecasting. Stoch Env Res Risk Assess 23(8):1143–1154

Barros A, Bowden G (2008) Toward long-lead operational forecasts of drought: an experimental study in the Murray-Darling River Basin. J Hydrol 357(3–4):349–367

Belayneh A, Adamowski J (2012) Standard Precipitation Index drought forecasting using neural networks, wavelet neural networks, and support vector regression. Appl Comput Intell Soft Comput 2012:794061. doi:10.1155/2012/794061

Belayneh A, Adamowski J, Khalil B, Ozga-Zielinski B (2014) Long-term SPI drought forecasting in the Awash River Basin in Ethiopia using wavelet-support vector regression models. J Hydrol 508:418–429

Box GEP, Jenkins GM (1976) Time series analysis. Forecasting and control. Holden-Day, San Francisco

Box GEP, Jenkins GM, Reinsel GC (1994) Time series analysis. Forecasting and control. Prentice Hall, Englewood Cliffs

Butler C, Adamowski J (2015) Empowering marginalized communities in water resources management: addressing inequitable practices in participatory model building. J Environ Manage 153:153–162

Cacciamani C, Morgillo A, Marchesi S, Pavan V (2007) Monitoring and forecasting drought on a regional scale: Emilia-Romagna region. Water Sci Technol Libr 62(1):29–48

Campisi S, Adamowski J, Oron G (2012) Forecasting urban water demand via wavelet-denoising and neural network models. Case study: city of Syracuse, Italy. Water Resour Manag 26:3539–3558

Cancelliere A, Di Mauro G, Bonaccorso B, Rossi G (2007) Stochastic forecasting of drought indices. In: Rossi G, Vega T, Bonaccorso B (eds) Methods and tools for drought analysis and management. Springer, Netherlands

Cannas B, Fanni A, Sias G, Tronci S, Zedda MK (2006) River flow forecasting using neural networks and wavelet analysis. In: Proceedings of the European Geosciences Union

Cimen M (2008) Estimation of daily suspended sediments using support vector machines. Hydrol Sci J 53(3):656–666

Coulibaly P, Anctil F, Bobee B (2000) Daily reservoir inflow forecasting using artificial neural networks with stopped training approach. J Hydrol 230:244–257

Cutore P, Di Mauro G, Cancelliere A (2009) Forecasting palmer index using neural networks and climatic indexes. J Hydrol Eng 14:588–595

Edossa DC, Babel MS, Gupta AD (2010) Drought Analysis on the Awash River Basin, Ethiopia. Water Resour Manag 24:1441–1460

Guttman NB (1999) Accepting the standardized precipitation index: a calculation algorithm. J Am Water Resour Assoc 35(2):311–322

Haidary A, Amiri BJ, Adamowski J, Fohrer N, Nakane K (2013) Assessing the impacts of four land use types on the water quality of wetlands in Japan. Water Resour Manage 27:2217–2229

Halbe J, Pahl-Wostl C, Sendzimir J, Adamowski J (2013) Towards adaptive and integrated management paradigms to meet the challenges of water governance. Water Sci Technol Water Supply 67:2651–2660

Han P, Wang P, Zhang S, Zhu D (2010) Drought forecasting with vegetation temperature condition index. Wuhan Daxue Xuebao (Xinxi Kexue Ban)/Geomat Inf Sci Wuhan Univ 35(10):1202–1206 + 1259

Husak GJ, Funk CC, Michaelsen J, Magadzire T, Goldsberry KP (2013) Developing seasonal rainfall scenarios for food security early warning. Theor Appl Climatol. doi:10.1007/s00704-013-0838-8

Inam A, Adamowski J, Halbe J, Prasher S (2015) Using causal loop diagrams for the initialization of stakeholder engagement in soil salinity management in agricultural watersheds in developing countries: a case study in the Rechna Doab watershed, Pakistan. J Environ Manage 152:251–267

Karamouz M, Rasouli K, Nazil S (2009) Development of a hybrid index for drought prediction: case study. J Hydrol Eng 14:617–627

Keshavarz M, Karami E, Vanclay F (2013) The social experience of drought in rural Iran. J Land Use Policy 30:120–129

Khan MS, Coulibaly P (2006) Application of support vector machine in lake water level prediction. J Hydrol Eng 11(3):199–205

Kim T, Valdes JB (2003) Nonlinear model for drought forecasting based on a conjunction of wavelet transforms and neural networks. J Hydrol Eng 8:319–328

Kisi O, Cimen M (2009) Evapotranspiration modelling using support vector machines. Hydrol Sci J 54(5):918–928

Kisi O, Cimen M (2011) A wavelet-support vector machine conjunction model for monthly streamflow forecasting. J Hydrol 399:132–140

Kolinjivadi V, Adamowski J, Kosoy N (2014a) Juggling multiple dimensions in a complex socioecosystem: the issue of targeting in payments for ecosystem services. Geoforum 58:1–13

Kolinjivadi V, Adamowski J, Kosoy N (2014b) Recasting payments for ecosystem services (PES) in water resource management: a novel institutional approach. Ecosyst Serv 10:144–154

Labat D, Ababou R, Mangin A (1999) Wavelet analysis in Karstic hydrology 2nd Part: rainfall–runoff cross-wavelet analysis. Comptes Rendus de l’Academie des Sci Ser IIA Earth Planet Sci 329:881–887

Lane SN (2007) Assessment of rainfall–runoff models based upon wavelet analysis. Hydrol Process 21:586–607

Linsley RK, Kohler MA, Paulhus JLH (1988) Hydrology for engineers. International edition. McGraw-Hill, Singapore

Maity R, Bhagwat PP, Bhatnagar A (2010) Potential of support vector regression for prediction of monthly streamflow using endogenous property. Hydrol Process 24:917–923

Mallat SG (1998) A wavelet tour of signal processing. Academic, San Diego, p 577

Marj AF, Meijerink AM (2011) Agricultural drought forecasting using satellite images, climate indices and artificial neural network. Int J Remote Sens 32(24):9707–9719

McKee TB, Doesken NJ, Kleist J (1993) The relationship of drought frequency and duration to time scales. In: Paper presented at 8th conference on applied climatology. American Meteorological Society, Anaheim

Mishra AK, Desai VR (2005) Drought forecasting using stochastic models. Stoch Env Res Risk Assess 19(5):326–339

Mishra AK, Desai VR (2006) Drought forecasting using feed-forward recursive neural network. Ecol Model 198(1–2):127–138

Mishra AK, Singh VP (2010) A review of drought concepts. J Hydrol 391(1–2):202–216

Mishra AK, Singh VP (2012) Simulating hydrological drought properties at different spatial units in the United States based on wavelet-bayesian approach. Earth Interact 16(17):1–23

Mishra AK, Desai VR, Singh VP (2007) Drought forecasting using a hybrid stochastic and neural network model. J Hydrol Eng 12:626–638

Morid S, Smakhtin V, Bagherzadeh K (2007) Drought forecasting using artificial neural networks and time series of drought indices. Int J Climatol 27(15):2103–2111

Murtagh F, Starck JL, Renaud O (2003) On neuro-wavelet modeling. Decis Support Syst 37:475–484

Nalley D, Adamowski J, Khalil B (2012) Using discrete wavelet transforms to analyze trends in streamflow and precipitation in Quebec and Ontario (1954–2008). J Hydrol 475:204–228

Nalley D, Adamowski J, Khalil B, Ozga-Zielinski B (2013) Trend detection in surface air temperature in Ontario and Quebec, Canada during 1967–2006 using the discrete wavelet transform. J Atmos Res 132(133):375–398

Nason GP, Von Sachs R (1999) Wavelets in time-series analysis. Philos Trans R Soc A Math Phys Eng Sci 357(1760):2511–2526

Nourani V, Baghanam A, Adamowski J, Kisi O (2014) Applications of hybrid wavelet-artificial intelligence models in hydrology: a review. J Hydrol 514:358–377

Ntale HK, Gan TY (2003) Drought indices and their application to East Africa. Int J Climatol 23(11):1335–1357

Ozger M, Mishra AK, Singh VP (2012) Long lead time drought forecasting using a wavelet and fuzzy logic combination model: a case study in Texas. J Hydrometeorol 13(1):284–297

Parrella F (2007) Online support vector regression. Master Thesis, University of Genoa

Partal T, Kisi O (2007) Wavelet and neuro-fuzzy conjunction model for precipitation forecasting. J Hydrol 342(1–2):199–212

Paulo A, Pereira L (2008) Stochastic predictions of drought class transitions. Water Resour Manag 22(9):1277–1296

Paulo A, Ferreira E, Coelho C, Pereira L (2005) Drought class transition analysis through Markov and Loglinear models, an approach to early warning. Agric Water Manag 77(1–3):59–81

Pingale S, Khare D, Jat M, Adamowski J (2014) Spatial and temporal trends of mean and extreme rainfall and temperature for the 33 urban centres of the arid and semi-arid state of Rajasthan, India. J Atmos Res 138:73–90

Rathinasamy M, Khosa R, Adamowski J, Ch S, Partheepan G, Anand J, Narsimlu B (2014) Wavelet-based multiscale performance analysis: an approach to assess and improve hydrological models. Water Resour Res 50(12):9721–9737. doi:10.1002/2013WR014650

Renaud O, Starck J, Murtagh F (2002) Wavelet-based forecasting of short and long memory time series. Department of Economics, University of Geneva

Rossi G, Castiglione L, Bonaccorso B (2007) Guidelines for planning and implementing drought mitigation measures. In: Rossi G, Vega T, Bonaccorso B (eds) Methods and tools for drought analysis and management. Springer, Netherlands, pp 325–247

Saadat H, Adamowski J, Bonnell R, Sharifi F, Namdar M, Ale-Ebrahim S (2011) Land use and land cover classification over a large area in Iran based on single date analysis of satellite imagery. J Photogramm Remote Sens 66:608–619

Saco P, Kumar P (2000) Coherent modes in multiscale variability of streamflow over the United States. Water Resour Res 36(4):1049–1067

Straith D, Adamowski J, Reilly K (2014) Exploring the attributes, strategies and contextual knowledge of champions of change in the Canadian water sector. Can Water Resour J 39(3):255–269

Tiwari M, Adamowski J (2014) Urban water demand forecasting and uncertainty assessment using ensemble wavelet-bootstrap-neural network models. Water Resour Res 49(10):6486–6507

Tiwari MK, Chatterjee C (2010) Development of an accurate and reliable hourly flood forecasting model using wavelet-bootstrap-ANN (WBANN) hybrid approach. J Hydrol 394:458–470

Tsakiris G, Vangelis H (2004) Towards a drought watch system based on spatial SPI. Water Resour Manage 18(1):1–12

Vapnik V (1995) The nature of statistical learning theory. Springer, New York

Wanas N, Auda G, Kamel MS, Karray F (1998) On the optimal number of hidden nodes in a neural network. In: Proceedings of the IEEE Canadian conference on electrical and computer engineering, pp 918–921

Wang WC, Chau KW, Cheng CT, Qiu L (2009) A comparison of performance of several artificial intelligence methods for forecasting monthly discharge time series. J Hydrol 374(3–4):294–306

Zhang GP (2003) Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 50:159–175

Acknowledgments

An NSERC Discovery Grant and a CFI Grant, both held by Jan Adamowski, were used to fund this research. The data was obtained from the Meteorological Society of Ethiopia (NMSA). Their help is greatly appreciated.

Cite this article

Belayneh, A., Adamowski, J. & Khalil, B. Short-term SPI drought forecasting in the Awash River Basin in Ethiopia using wavelet transforms and machine learning methods. Sustain. Water Resour. Manag. 2, 87–101 (2016). https://doi.org/10.1007/s40899-015-0040-5

DOI: https://doi.org/10.1007/s40899-015-0040-5