Abstract

We characterize functions of d-tuples of bounded operators on a Hilbert space that are uniformly approximable by free polynomials on balanced open sets.


1 Introduction

What are non-commutative holomorphic functions in d variables? The question has been studied since the pioneering work of Taylor [21], but there is still no definitive answer. The class should certainly contain the non-commutative, also called free, polynomials, i.e. polynomials defined on d non-commuting variables. It should be some sort of generalization of the free polynomials, analogous to how holomorphic functions are generalizations of polynomials in commuting variables. Just as in the commutative case, the class will depend on the choice of domain. In this note, we shall consider domains that are sets of d-tuples of operators on a Hilbert space.

One approach is to study non-commutative convergent power series on domains in \({B(\mathscr {H})}^d\) (where \({B(\mathscr {H})}^d\) means d-tuples of bounded operators on some Hilbert space \(\mathscr {H}\)). This has been done systematically in Popescu’s monograph [20], following on earlier work such as [4, 5, 17–19].

Working with non-commutative power series is natural and appealing, but does present some difficulties. One is that assuming a priori that the series converges uniformly is a strong assumption, and could be hard to verify if the function is presented in some other form. On every infinite dimensional Banach space there is an entire holomorphic function with finite radius of uniform convergence [6, p. 461]. A second difficulty is dealing with domains that are not the domains of convergence of power series.

Another approach to non-commutative functions is the theory of nc-functions. Let \(\mathscr {M}_n\) denote the n-by-n complex matrices, which we shall think of as operators on a finite dimensional Hilbert space, and let \({\mathbb M}^{[d]}= \bigcup _{n=1}^\infty \mathscr {M}_n^d\).

If \(x = (x^1, \ldots , x^d) \) and \(y = (y^1, \ldots , y^d)\) are d-tuples of operators on the spaces \(\mathscr {H}\) and \(\mathscr {K}\) respectively, we let \(x {\oplus } y\) denote the d-tuple \( (x^1 {\oplus } y^1, \ldots , x^d {\oplus } y^d)\) on \(\mathscr {H}{\oplus } \mathscr {K}\); and if \(s \in B(\mathscr {H},\mathscr {K})\) and \(t \in B(\mathscr {K},\mathscr {H})\) we let sx and xt denote respectively \((sx^1, \ldots , sx^d)\) and \((x^1 t, \ldots , x^d t)\).

Definition 1.1

A function f defined on some set \(D \subseteq {\mathbb M}^{[d]}\) is called graded if, for each n, f maps \(D \cap \mathscr {M}_n^d\) into \(\mathscr {M}_n\). We say f is an nc-function if it is graded and if, whenever \(x, y \in D\) and there exists a matrix s such that \( s x = y s\), then \(s f(x) = f(y) s\).

The theory of nc-functions has recently become a very active area of research, see e.g. [7–12, 15, 16]. Kaliuzhnyi-Verbovetskyi and Vinnikov have written a monograph [13] which develops the important ideas of the subject.

Nc-functions are a priori defined on matrices, not operators. Certain formulas that represent them (such as (17) below) can be naturally extended to operators. This raises the question of how one can intrinsically characterize functions on \({B(\mathscr {H})}^d\) that are in some sense extensions of nc-functions.

The purpose of this note is to show that on balanced domains in \({B(\mathscr {H})}^d\) there is an algebraic property—intertwining preserving—that together with an appropriate continuity is necessary and sufficient for a function to have a convergent power series, which in turn is equivalent to the function being approximable by free polynomials on finite sets. Moreover, it is a variation on the idea of an nc-function. On certain domains \(G_{\delta }^\#\) defined below, the properties of intertwining preserving and continuity are equivalent in turn to the function being the unique extension of a bounded nc-function.

Definition 1.2

Let \(\mathscr {H}\) be an infinite dimensional Hilbert space, let \(D \subseteq {B(\mathscr {H})}^d\), and let \(F:D \rightarrow {B(\mathscr {H})}\). We say that F is intertwining preserving (IP) if:

(i) Whenever \(x,y \in D\) and there exists some bounded linear operator \(T \in {B(\mathscr {H})}\) such that \( T x = y T\), then \(T F(x) = F(y) T\).

(ii) Whenever \(( x_n )\) is a bounded sequence in D, and there exists some invertible bounded linear operator \( s :\mathscr {H}\rightarrow \bigoplus \mathscr {H}\) such that

$$\begin{aligned} s^{-1} \begin{bmatrix} x_1&\quad 0&\quad \cdots \\ 0&\quad x_2&\quad \cdots \\ \vdots&\quad \vdots&\quad \ddots \end{bmatrix} s \in D, \end{aligned}$$

then

$$\begin{aligned} F\left( s^{-1} \begin{bmatrix} x_1&\quad 0&\quad \cdots \\ 0&\quad x_2&\quad \cdots \\ \vdots&\quad \vdots&\quad \ddots \end{bmatrix} s \right) = s^{-1} \begin{bmatrix} F(x_1)&\quad 0&\quad \cdots \\ 0&\quad F(x_2)&\quad \cdots \\ \vdots&\quad \vdots&\quad \ddots \end{bmatrix} s. \end{aligned}$$

Note that every free polynomial is IP, and therefore this condition must be inherited by any function that is a limit of free polynomials on finite sets. Nc-functions have the property that \(f(x {\oplus } y) = f(x) {\oplus } f(y)\), and we would like to exploit the analogous condition (ii) of IP functions. To do this, we would like our domains to be closed under direct sums. However, we can only do this by some identification of \(\mathscr {H}{\oplus } \mathscr {H}\) with \(\mathscr {H}\).
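To spell out why free polynomials satisfy condition (i), it is enough to check one monomial. The following is a sketch; the word \(x^{i_1} x^{i_2} \cdots x^{i_k}\) and its indices \(i_1, \ldots , i_k \in \{1, \ldots , d\}\) are introduced here only for illustration. If \(Tx = yT\), that is, \(T x^r = y^r T\) for \(1 \le r \le d\), then moving T to the right one letter at a time gives

$$\begin{aligned} T x^{i_1} x^{i_2} \cdots x^{i_k} = y^{i_1} T x^{i_2} \cdots x^{i_k} = \cdots = y^{i_1} y^{i_2} \cdots y^{i_k} T . \end{aligned}$$

Taking linear combinations of monomials (the constant term commutes with T trivially) yields \(T p(x) = p(y) T\) for every free polynomial p.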

Definition 1.3

Let \(\mathscr {H}\) be an infinite dimensional Hilbert space. We say a set \(D \subseteq {B(\mathscr {H})}^d\) is closed with respect to countable direct sums if, for every bounded sequence \(x_1, x_2, \ldots \in D\), there is a unitary \(u:\mathscr {H}\rightarrow \mathscr {H}{\oplus } \mathscr {H}{\oplus } \cdots \) such that the d-tuple \(u^* (x_1 {\oplus } x_2 {\oplus } \cdots )u \in D\).

Two natural examples are the sets

Definition 1.4

Let \(F:{B(\mathscr {H})}^d \rightarrow {B(\mathscr {H})}\). We say F is sequentially strong operator continuous (SSOC) if, whenever \(x_n \rightarrow x\) in the strong operator topology on \({B(\mathscr {H})}^d\), then \(F(x_n)\) tends to F(x) in the strong operator topology on \({B(\mathscr {H})}\).

Since multiplication is sequentially strong operator continuous, it follows that every free polynomial is SSOC, and this property is also inherited by limits on sets that are closed w.r.t. direct sums.
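The continuity of multiplication used here can be checked directly. The following sketch assumes \(a_n \rightarrow a\) and \(b_n \rightarrow b\) in the strong operator topology and writes \(C = \sup _n \Vert a_n \Vert \) (our notation), which is finite by the uniform boundedness principle. For any \(v \in \mathscr {H}\),

$$\begin{aligned} \Vert (a_n b_n - ab) v \Vert \le \Vert a_n (b_n - b) v \Vert + \Vert (a_n - a) b v \Vert \le C \, \Vert (b_n - b) v \Vert + \Vert (a_n - a) (b v) \Vert \longrightarrow 0 . \end{aligned}$$

The argument uses sequences in an essential way: a strongly convergent net need not be norm bounded, so the constant C need not be available for nets.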

Here is our first main result. Recall that a subset B of a complex vector space is called balanced if whenever \(x \in B\) and \(\alpha \) is in the closed unit disk \(\overline{\mathbb {D}}\), then \(\alpha x \in B\).

Theorem 1.5

Let D be a balanced open set in \({B(\mathscr {H})}^d\) that is closed with respect to countable direct sums, and let \(F :D \rightarrow {B(\mathscr {H})}\). The following are equivalent:

(i) The function F is intertwining preserving and sequentially strong operator continuous.

(ii) There is a power series expansion

$$\begin{aligned} \sum _{k=0}^\infty P_k(x) \end{aligned}$$ (2)

that converges absolutely at each point \(x \in D\) to F(x), where each \(P_k\) is a homogeneous free polynomial of degree k.

(iii) The function F is uniformly approximable on finite subsets of D by free polynomials.

Let \(\delta \) be an \(I {\times } J\) matrix of free polynomials in d variables, where I and J are any positive integers. Then we define

$$\begin{aligned} G_{\delta }= \bigl \{ x \in {\mathbb M}^{[d]} : \Vert \delta (x) \Vert < 1 \bigr \}, \end{aligned}$$ (3)

where if x is a d-tuple of matrices acting on \(\mathbb {C}^n\), then we calculate the norm of \(\delta (x)\) as the operator norm from \((\mathbb {C}^n)^J\) to \((\mathbb {C}^n)^I\). Notice that \(G_{\delta _1} \cap G_{\delta _2} = G_{\delta _1 \oplus \delta _2}\), so those sets form a base for a topology; we call this the free topology on \({\mathbb M}^{[d]}\).
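The intersection identity can be verified in one line. As a sketch, read \(G_{\delta }\) as the set of \(x \in {\mathbb M}^{[d]}\) with \(\Vert \delta (x) \Vert < 1\), and \(\delta _1 {\oplus } \delta _2\) as the block diagonal matrix of free polynomials with diagonal blocks \(\delta _1\) and \(\delta _2\). Then

$$\begin{aligned} \Vert (\delta _1 {\oplus } \delta _2)(x) \Vert = \left\| \begin{bmatrix} \delta _1(x)&\quad 0\\ 0&\quad \delta _2(x) \end{bmatrix} \right\| = \max \bigl \{ \Vert \delta _1(x) \Vert , \ \Vert \delta _2(x) \Vert \bigr \} , \end{aligned}$$

so \((\delta _1 {\oplus } \delta _2)(x)\) has norm less than 1 exactly when both \(\delta _1(x)\) and \(\delta _2(x)\) do.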

For the rest of this paper, we shall fix \(\mathscr {H}\) to be a separable infinite dimensional Hilbert space, and let \({B_1(\mathscr {H})}\) denote the unit ball in \({B(\mathscr {H})}\). Let \(\{e_1, e_2, \ldots \}\) be a fixed orthonormal basis of \(\mathscr {H}\), and let \(P_n\) denote orthogonal projection onto \(\bigvee \{ e_1, \ldots , e_n \}\).

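There is an obvious extension of (3) to \({B(\mathscr {H})}^d\); we shall call this domain \(G_{\delta }^\#\):

$$\begin{aligned} G_{\delta }^\#= \bigl \{ x \in {B(\mathscr {H})}^d : \Vert \delta (x) \Vert < 1 \bigr \}. \end{aligned}$$ (4)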

Both sets (1) are of the form (4) for an appropriate choice of \(\delta \). Note that every \(G_{\delta }^\#\) is closed with respect to countable direct sums.

By identifying \(\mathscr {M}_n\) with \(P_n {B(\mathscr {H})}P_n\), we can embed \({\mathbb M}^{[d]}\) in \({B(\mathscr {H})}^d\). If a function \(F :G_{\delta }^\#\rightarrow {B(\mathscr {H})}\) satisfies \( F (x) = P_n F(x)P_n\) whenever \(x = P_n x P_n\), then F naturally induces a graded function \(F^\flat \) on \(G_{\delta }\).

Here is a slightly simplified version of Theorem 5.4 (the assumption that 0 goes to 0 is unnecessary, but without it the statement is more complicated).

Theorem 1.6

Assume that \(G_{\delta }^\#\) is connected and contains 0. Then every bounded nc-function on \(G_{\delta }\) that maps 0 to 0 has a unique extension to an SSOC IP function on \(G_{\delta }^\#\). The extension has a series expansion in free polynomials that converges uniformly on \(G_{t \delta }^\#\) for each \(t>1\).

2 Intertwining preserving functions

The normal definition of an nc-function is a graded function f defined on a set \(D \subseteq {\mathbb M}^{[d]}\) such that D is closed with respect to direct sums, and such that f preserves direct sums and similarities, i.e. \(f(x {\oplus } y) = f(x) {\oplus } f(y)\) and if \( x = s^{-1} y s\) then \(f(x) = s^{-1} f(y) s\), whenever \(x,y \in D \cap \mathscr {M}_n^d\) and s is an invertible matrix in \(\mathscr {M}_n\). The fact that on such sets D this definition agrees with our earlier Definition 1.1 is proved in [13, Proposition 2.1].

There is a subtle difference between the nc-property and IP, because of the rôle of 0. For an nc-function, \(f(x {\oplus } 0) = f(x){\oplus } 0\), but for an IP function, we have \(f(x {\oplus } 0) = f(x) {\oplus } f(0)\). If \(f(0) = 0\), this presents no difficulty; but 0 need not lie in the domain of f, and even if it does, it need not be mapped to 0.

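Consider, for an illustration, the case \(d=1\) and the function \(f(x) = x+1\). For each \(n \in \mathbb {N}\), let \(M_n\) be the n-by-n matrix that is 1 in the (1, 1) entry and 0 elsewhere. As an nc-function, we have

$$\begin{aligned} f(M_n) = M_n + I_n = \begin{bmatrix} 2&\quad 0&\quad \cdots&\quad 0\\ 0&\quad 1&\quad \cdots&\quad 0\\ \vdots&\quad \vdots&\quad \ddots&\quad \vdots \\ 0&\quad 0&\quad \cdots&\quad 1 \end{bmatrix} . \end{aligned}$$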

But now, if we wish to extend f to an IP function on \({B(\mathscr {H})}\), what is the image of the diagonal operator T with first entry 1 and the rest 0? We want to identify T with \(M_n {\oplus } 0\), and map it to \(f(M_n) {\oplus } 0\) — but then each n gives a different image.
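To make the clash explicit, here is a sketch under the identification of \(\mathscr {M}_n\) with \(P_n {B(\mathscr {H})}P_n\) described in the introduction; \(E_{11}\) (our notation) denotes the rank-one projection onto \(e_1\). Every \(M_n\) corresponds to the same operator \(T = E_{11}\), while

$$\begin{aligned} f(M_n) {\oplus } 0 = (M_n + I_n) {\oplus } 0 \quad \text {corresponds to} \quad E_{11} + P_n , \end{aligned}$$

which changes with n. By contrast, the operator function \(F(x) = x + I_\mathscr {H}\) sends T to \(E_{11} + I_\mathscr {H}\), which agrees with none of these images.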

In order to interface with the theory of nc-functions, we shall assume that all our domains contain 0. To avoid the technical difficulty we just described, we shall compose our functions with Möbius maps to ensure that 0 is mapped to 0.

Lemma 2.1

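If F is an IP function on \(D \subseteq {B(\mathscr {H})}^d\), and \(P \in {B(\mathscr {H})}\) is a projection, then, for all \(a \in D\) and all \(c \in D\) satisfying \(c = cP\) (or \(c = Pc\)), we have

$$\begin{aligned} a = PaP \quad \Longrightarrow \quad F(a) = P F(a) P + P^\perp F(c) P^\perp . \end{aligned}$$ (5)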

Proof

As \(Pa = a P\), we get \(P F(a) = F(a) P\). As \(P^\perp a = 0 = c P^\perp \), we get \(P^\perp F(a) = F(c) P^\perp \). Combining these, we get (5). \(\square \)
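The final combining step can be written out as follows. This is a sketch that assumes, as the proof does, that \(a \in D\) satisfies \(a = PaP\) and that \(c = cP\); we write \(P^\perp = 1 - P\). Using \(P F(a) = F(a) P\) and \(P^\perp F(a) = F(c) P^\perp \),

$$\begin{aligned} F(a)&= P F(a) + P^\perp F(a) = P \cdot P F(a) + P^\perp \cdot P^\perp F(a) \\&= P F(a) P + P^\perp F(c) P^\perp . \end{aligned}$$

In the alternative case \(c = Pc\), one uses \(P^\perp c = 0 = a P^\perp \) to get \(P^\perp F(c) = F(a) P^\perp \), and then decomposes \(F(a) = F(a) P + F(a) P^\perp \) in the same way.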

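We let \(\phi _\alpha \) denote the Möbius map on \(\mathbb {D}\) given by

$$\begin{aligned} \phi _\alpha (\zeta ) = \frac{\zeta - \alpha }{1 - \bar{\alpha } \zeta } . \end{aligned}$$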

Lemma 2.2

Let \(D \subseteq {B(\mathscr {H})}^d\) contain 0, and assume F is an IP function from D to \({B_1(\mathscr {H})}\). Then

(i) \(F(0) = \alpha I_\mathscr {H}\).

(ii) The map \( H(x) = \phi _\alpha {\circ } F (x)\) is an IP function on D that maps 0 to 0.

(iii) For any \(a \in D\) and any projection P we have

$$\begin{aligned} a = PaP \quad \Longrightarrow \quad H(a) = P H(a) P . \end{aligned}$$

(iv) \(F = \phi _{-\alpha } {\circ } H\).

Proof

(i) By Lemma 2.1 applied to \(a = c = 0\), we get that F(0) commutes with every projection P in \({B(\mathscr {H})}\). Therefore it must be a scalar.

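(ii) For all z in \({B_1(\mathscr {H})}\), we have

$$\begin{aligned} \phi _\alpha (z) = - \alpha + (1 - |\alpha |^2) \sum _{n=1}^\infty \bar{\alpha }^{\, n-1} z^n , \end{aligned}$$ (6)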

where the series converges uniformly and absolutely on every ball of radius less than one. By (i), we have \(H(0) = \phi _\alpha (\alpha I_\mathscr {H}) = 0\). If \(T x = yT\), then \(T F(x) = F(y)T\), and so \( T [ F(x)]^n = [F(y)]^n T\) for every n. Letting z be F(x) and F(y) in (6) and using the fact that the series converges uniformly, we conclude that \(T \phi _\alpha (F(x) ) = \phi _\alpha (F(y) ) T\), and hence H is IP.

(iii) follows from Lemma 2.1 with \( c = 0\).

(iv) follows from \(\phi _{-\alpha } {\circ } \phi _{\alpha } (z) = z\) for every \(z \in {B_1(\mathscr {H})}\). \(\square \)
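The expansion used in part (ii) can be derived from a geometric series. The sketch below assumes the normalization \(\phi _\alpha (\zeta ) = (\zeta - \alpha )/(1 - \bar{\alpha } \zeta )\), which is consistent with \(\phi _\alpha (\alpha ) = 0\) and \(\phi _{-\alpha } {\circ } \phi _\alpha (z) = z\) as used above. For \(\Vert z \Vert < 1\),

$$\begin{aligned} \phi _\alpha (z)&= (z - \alpha ) (1 - \bar{\alpha } z)^{-1} = (z - \alpha ) \sum _{n=0}^\infty \bar{\alpha }^{\, n} z^n \\&= - \alpha + \sum _{n=1}^\infty \bigl ( \bar{\alpha }^{\, n-1} - \alpha \bar{\alpha }^{\, n} \bigr ) z^n = - \alpha + (1 - |\alpha |^2) \sum _{n=1}^\infty \bar{\alpha }^{\, n-1} z^n . \end{aligned}$$

Since \(|\alpha | < 1\), the series is dominated by \(\sum \Vert z \Vert ^n\), which gives uniform and absolute convergence on every ball of radius less than one.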

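By choosing a basis \(\{e_1, e_2, \ldots \}\) for \(\mathscr {H}\), we can identify \(\mathscr {M}_n^d\) with \(P_n {B(\mathscr {H})}^dP_n\). Let us define

$$\begin{aligned} \mathscr {M}^{[d]}= \bigcup _{n=1}^\infty P_n {B(\mathscr {H})}^d P_n . \end{aligned}$$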

Applying Lemma 2.2, we get the following.

Proposition 2.3

Let \(D \subseteq {B(\mathscr {H})}^d\) contain 0, and assume F is an IP function from D to \({B_1(\mathscr {H})}\). Let \(H = \phi _\alpha {\circ } F\), where \(\alpha \) is the scalar such that \(F(0) = \alpha I_\mathscr {H}\). Then \( H |_{D \cap \mathscr {M}^{[d]}}\) is an nc-function that is bounded by 1 in norm, and maps 0 in \(\mathscr {M}_n^d\) to the matrix 0 in \({\mathscr {M}}_n^1 = P_n {B(\mathscr {H})}P_n\).

If we let \(H^\flat \) denote \( H |_{D \cap \mathscr {M}^{[d]}}\), we can ask

Question 2.4

To what extent does \(H^\flat \) determine H?

Question 2.5

Does every bounded nc-function from \({D \cap \mathscr {M}^{[d]}}\) to \({\mathscr {M}}^1\) extend to a bounded IP function on D?

If

then \(G_{\delta }^\#\) is non-empty, but \(G_{\delta }\) is empty, and the questions do not make much sense. But we do give answers to both questions in Theorem 5.4, in the special case that D is of the form \( G_{\delta }^\#\) and in addition is assumed to be balanced.

3 IP SSOC functions are analytic

Let us give a quick summary of what it means for a function to be holomorphic on a Banach space; we refer the reader to the book [6] by Dineen for a comprehensive treatment. Let D be an open subset of a Banach space X, and \(f:D \rightarrow Y\) a map into a Banach space Y. We say f has a Gâteaux derivative at x if

$$\begin{aligned} \lim _{t \rightarrow 0} \frac{f(x + th) - f(x)}{t} \ =: \ Df(x) [h] \end{aligned}$$

exists for all \(h \in X\). If f has a Gâteaux derivative at every point of D it is Gâteaux holomorphic [6, Lemma 3.3], i.e. holomorphic on each one dimensional slice. If, in addition, f is locally bounded on D, then it is actually Fréchet holomorphic [6, Proposition 3.7], which means that for each x there is a neighborhood G of 0 such that the Taylor series

$$\begin{aligned} f(x+h) = \sum _{k=0}^\infty \frac{1}{k!} D^k f(x) [h, \ldots , h] \end{aligned}$$ (7)

converges uniformly for all h in G. The kth derivative is a continuous linear map from \(X^k \rightarrow Y\), which is evaluated on the k-tuple \((h,h, \ldots , h)\).

The following lemma is the IP version of [8, Proposition 2.5] and [13, Proposition 2.2].

Lemma 3.1

Let D be an open set in \({B(\mathscr {H})}^d\) that is closed with respect to countable direct sums, and let \(F :D \rightarrow {B(\mathscr {H})}\) be intertwining preserving. Then F is bounded on bounded subsets of D, continuous and Gâteaux differentiable.

Proof

(Locally bounded) Suppose there were \(x_n \in D\) such that \(\{ \Vert x_n \Vert \} \) is bounded, but \(\{ \Vert F(x_n) \Vert \}\) is unbounded. Since D is closed with respect to countable direct sums, there exists some unitary \(u :\mathscr {H}\rightarrow \mathscr {H}^\infty \) such that \(u^* ( \bigoplus x_n ) u \in D\). Since F is IP, by Definition 1.2, we have \( [\bigoplus F(x_n)]\) is bounded, which is a contradiction.

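(Continuity) Fix \(a \in D \) and let \(\varepsilon > 0\). By hypothesis, there exists a unitary \(u :\mathscr {H}\rightarrow \mathscr {H}^2\) such that

$$\begin{aligned} \alpha := u^* \begin{bmatrix} a&\quad 0\\ 0&\quad a \end{bmatrix} u \in D. \end{aligned}$$ (8)

Choose \(\delta _1 > 0\) such that \(B( a , \delta _1) \subseteq D\), \(B( \alpha , \delta _1) \subseteq D\), and such that on \(B( \alpha , \delta _1)\) the function F is bounded by M. Choose \(\delta _2 > 0\) such that \(\delta _2 < \min ( \delta _1/2, \varepsilon \delta _1/2M)\). Note that for any \(a,b \in {B(\mathscr {H})}^d\) and any \(\lambda \in \mathbb {C}\), we have

$$\begin{aligned} \begin{bmatrix} b&\quad \lambda (b-a)\\ 0&\quad a \end{bmatrix} = \begin{bmatrix} 1&\quad -\lambda \\ 0&\quad 1 \end{bmatrix} \begin{bmatrix} b&\quad 0\\ 0&\quad a \end{bmatrix} \begin{bmatrix} 1&\quad \lambda \\ 0&\quad 1 \end{bmatrix} . \end{aligned}$$ (9)

So by part (ii) of the definition of IP (Definition 1.2) we get that if \(\Vert b - a \Vert < \delta _2\), and letting \(\lambda = M/\varepsilon \), then

$$\begin{aligned} F\left( u^* \begin{bmatrix} b&\quad \lambda (b-a)\\ 0&\quad a \end{bmatrix} u \right) = u^* \begin{bmatrix} F(b)&\quad \lambda (F(b) - F(a))\\ 0&\quad F(a) \end{bmatrix} u \end{aligned}$$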

is bounded by M. In particular, since the norm of the (1, 2)-entry of the last matrix is bounded by the norm of the whole matrix, we see that \(\Vert M(F(b)-F(a))/\varepsilon \Vert < M\), so \(\Vert F(b)-F(a) \Vert < \varepsilon \).
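The argument leaves implicit that the upper triangular tuple lies in the ball on which F is bounded by M. As a sketch, reading \(\alpha \) as \(u^* (a {\oplus } a) u\) (the tuple supplied by the direct sum hypothesis) and taking \(\lambda = M/\varepsilon \), we have for \(\Vert b - a \Vert < \delta _2\)

$$\begin{aligned} \left\| u^* \begin{bmatrix} b&\quad \lambda (b-a)\\ 0&\quad a \end{bmatrix} u - \alpha \right\| = \left\| \begin{bmatrix} b-a&\quad \lambda (b-a)\\ 0&\quad 0 \end{bmatrix} \right\| \le (1 + \lambda ) \Vert b - a \Vert < \delta _2 + \frac{M \delta _2}{\varepsilon } < \frac{\delta _1}{2} + \frac{\delta _1}{2} = \delta _1 . \end{aligned}$$

This is exactly where the choice \(\delta _2 < \min ( \delta _1/2, \varepsilon \delta _1/2M)\) is used.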

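(Differentiability) Let \(a \in D\) and \(h \in {B(\mathscr {H})}^d\). Let u be as in (8). Choose \(\varepsilon > 0\) such that, for all complex numbers t with \(|t| < \varepsilon \),

$$\begin{aligned} u^* \begin{bmatrix} a + th&\quad \varepsilon h\\ 0&\quad a \end{bmatrix} u \in D \end{aligned}$$

and \(a + th \in D\). Let \(b = a +th\) and \(\lambda =\varepsilon /t\) in (9), and as before we conclude that

$$\begin{aligned} F\left( u^* \begin{bmatrix} a + th&\quad \varepsilon h\\ 0&\quad a \end{bmatrix} u \right) = u^* \begin{bmatrix} F(a+th)&\quad \varepsilon \, \dfrac{F(a+th) - F(a)}{t}\\ 0&\quad F(a) \end{bmatrix} u . \end{aligned}$$ (10)

As F is continuous, when we take the limit as \(t \rightarrow 0\) in (10), we get

$$\begin{aligned} F\left( u^* \begin{bmatrix} a&\quad \varepsilon h\\ 0&\quad a \end{bmatrix} u \right) = u^* \begin{bmatrix} F(a)&\quad \varepsilon \, DF(a)[h]\\ 0&\quad F(a) \end{bmatrix} u . \end{aligned}$$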

Therefore \(DF(a) [h]\) exists, so F is Gâteaux differentiable, as required.\(\square \)

When we replace X by a Banach algebra (in our present case, this is \({B(\mathscr {H})}^d\) with coordinate-wise multiplication), we would like something more than Fréchet holomorphic: we would like the kth term in (7) to be an actual free polynomial, homogeneous of degree k, in the entries of h.

The following result was proved by Kaliuzhnyi-Verbovetskyi and Vinnikov [13, Theorem 6.1] and by Klep and Špenko [14, Proposition 3.1].

Theorem 3.2

Let

be an nc-function such that each matrix entry of g(x) is a polynomial of degree less than or equal to N in the entries of the matrices \(x^r\), \(1 \le r \le d\). Then g is a free polynomial of degree less than or equal to N.

We extend this result to multilinear SSOC IP maps. Each \(h_j\) will be a d-tuple of operators, \((h_j^1, \ldots , h_j^d)\).

Proposition 3.3

Let

be a continuous N-linear map from \(({B(\mathscr {H})}^{d})^N\) to \({B(\mathscr {H})}\) that is IP and SSOC. Then L is a homogeneous polynomial of degree N in the variables \(h_1^1, \ldots , h_N^d\).

Proof

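By Proposition 2.3, if we restrict L to \({\mathscr {M}}^{dN}\), we get an nc-function. By Theorem 3.2, there is a free polynomial p of degree N that agrees with L on \({\mathscr {M}}^{dN}\). By homogeneity, p must be homogeneous of degree N. Define

$$\begin{aligned} {\Delta }(h) = L(h) - p(h), \qquad h = (h_1, \ldots , h_N) \in ({B(\mathscr {H})}^{d})^N . \end{aligned}$$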

Then \({\Delta }\) vanishes on \(({\mathscr {M}}^{d})^{N}\), and is SSOC. Since \(({\mathscr {M}}^{d})^{N}\) is strong operator topology dense in \(({B(\mathscr {H})}^{d})^N\), it follows that \({\Delta }\) is identically 0. \(\square \)

One of the achievements of Kaliuzhnyi-Verbovetskyi and Vinnikov in [13] is the Taylor–Taylor formula [13, Theorem 4.1]. This comes with a remainder term, which can be estimated. They show [13, Theorem 7.4] that with the assumption of local boundedness, this renders an nc-function analytic. The following theorem is an IP version of the latter result.

Theorem 3.4

Let D be an open neighborhood of 0 in \({B(\mathscr {H})}^d\), and let \(F :D \rightarrow {B(\mathscr {H})}\) be a function that is intertwining preserving and sequentially strong operator continuous. Then there is an open set \(U \subseteq D\) containing 0 and homogeneous free polynomials \(P_k\) of degree k such that

$$\begin{aligned} F(x) = \sum _{k=0}^\infty P_k(x), \end{aligned}$$

where the convergence is uniform for \(x \in U\).

Proof

Any open ball centered at 0 is closed with respect to countable direct sums, so we can assume without loss of generality that D is closed with respect to countable direct sums and bounded. By Lemma 3.1, F is bounded and Gâteaux differentiable on D, and so by [6, Proposition 3.7], F is automatically Fréchet holomorphic. Therefore, there is some open ball U centered at 0 such that

$$\begin{aligned} F(h) = \sum _{k=0}^\infty \frac{1}{k!} D^k F(0) [h, \ldots , h], \qquad h \in U. \end{aligned}$$

We must show that each \(D^k F(0) [h,\ldots , h]\) is actually a free polynomial in h.

Claim 3.5

For each \(k \in \mathbb {N}\), the function

$$\begin{aligned} G^k (x, h_1, \ldots , h_k) = D^k F(x) [h_1, \ldots , h_k] \end{aligned}$$ (11)

is an IP function on \( U {\times } ({B(\mathscr {H})}^d)^k \subseteq ({B(\mathscr {H})}^d)^{k+1}\).

Proof

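Indeed, when \(k=1\), we have

$$\begin{aligned} G^1(x, h) = \lim _{t \rightarrow 0} \frac{1}{t} \bigl [ F(x + th) - F(x) \bigr ] . \end{aligned}$$ (12)

As F is IP, so is the right-hand side of (12). For \(k > 1\),

$$\begin{aligned} G^k(x, h_1, \ldots , h_k) = \lim _{t \rightarrow 0} \frac{1}{t} \bigl [ G^{k-1}(x + t h_k, h_1, \ldots , h_{k-1}) - G^{k-1}(x, h_1, \ldots , h_{k-1}) \bigr ] . \end{aligned}$$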

By induction, these are all IP. \(\blacksquare \)

Claim 3.6

For each \(k \in \mathbb {N}\), the function \(G^k\) from (11) is SSOC on \( U {\times } ({B(\mathscr {H})}^d)^k\).

Proof

Again we do this by induction on k. Let \(G^0 = F\), which is SSOC on \(U \subseteq D\) by hypothesis. Since \(G^{k-1}\) is IP on the set \(U^k\), it is locally bounded, and by Lemma 3.1 it is Gâteaux differentiable. Suppose

where each \(h^j_n\) and \( h^j \) is in U. Let h denote the \((k{+}1)\)-tuple \( (h^0, \ldots , h^{k})\) in \(U^{k+1}\), and let \({\widetilde{h}}\) denote the k-tuple \( (h^0, \ldots , h^{k-1})\); similarly, let \(h_n\) denote \( (h_n^0, \ldots , h_n^{k})\) and \({\widetilde{h}}_n\) denote \( (h_n^0, \ldots , h_n^{k-1})\). There exists some unitary u so that \( y = u^* \bigl (\widetilde{h} {\oplus } {\widetilde{h}}_1 {\oplus } {\widetilde{h}}_2 {\oplus } \cdots \bigr ) u\) is in \(U^{k}\). Since \(G^{k-1}\) is differentiable at y, and is IP, we have that the diagonal operator with entries

has a limit as \(t \rightarrow 0\).

Let \(\varepsilon > 0\), and let \(v \in \mathscr {H}\) have \(\Vert v \Vert \le 1\). Choose t sufficiently close to 0 that each of the difference quotients in (13) is within \(\varepsilon /3\) of its limit (which is \(G^k\) evaluated at the appropriate h or \(h_n\)). Let n be large enough so that

Then

So each \(G^k\) is SSOC on \(U^{k+1}\). As \(G^k\) is linear in the last k variables, it is SSOC on \( U {\times } ({B(\mathscr {H})}^d)^k\) as claimed. \(\blacksquare \)

Therefore for each k, the map

$$\begin{aligned} (h_1, \ldots , h_k) \mapsto D^k F(0) [h_1, \ldots , h_k] \end{aligned}$$

is a linear IP function that is SSOC in a neighborhood of 0, so by Proposition 3.3 is a free polynomial. \(\square \)

4 Power series

Proof of Theorem 1.5 (i) \(\Rightarrow \) (ii). As F is bounded on bounded subsets of D by Lemma 3.1, it is Fréchet holomorphic. By Theorem 3.4, the power series at 0 is actually of the form (2). We must show the series converges on all of D.

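Fix \(x \in D\). Since D is open and balanced, there exists \(r > 1\) such that \(\lambda x \in D\) for every \(\lambda \in \mathbb {D}(0, r)\). As each \(P_k\) is homogeneous, we have that for \(\lambda \) in a neighborhood of 0,

$$\begin{aligned} F(\lambda x) = \sum _{k=0}^\infty P_k(\lambda x) = \sum _{k=0}^\infty \lambda ^k P_k(x). \end{aligned}$$ (14)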

Therefore, the function \(\psi :\lambda \mapsto F(\lambda x)\) is analytic on \(\mathbb {D}(0, r)\), with values in \({B(\mathscr {H})}\), and its power series expansion at 0 is given by (14). Let \(M = \sup \{ \Vert F(\lambda x) \Vert : | \lambda | < r \}\).

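By the Cauchy integral formula, since \(\Vert F \Vert \) is bounded by M, we get that

$$\begin{aligned} \left\| \frac{1}{k!} \frac{d^k}{d \lambda ^k} F(\lambda x) \Big |_{\lambda = 0} \right\| \le \frac{M}{r^k} . \end{aligned}$$ (15)

Comparing (14) and (15), we conclude that

$$\begin{aligned} \Vert P_k(x) \Vert \le \frac{M}{r^k} , \end{aligned}$$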

and so the power series in (14) converges uniformly and absolutely on the closed unit disk.
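The convergence claim can be quantified. As a sketch, with M and r as above, the Cauchy estimate \(\Vert P_k(x) \Vert \le M/r^k\) gives, for every \(|\lambda | \le 1\),

$$\begin{aligned} \sum _{k=0}^\infty \Vert \lambda ^k P_k(x) \Vert \le \sum _{k=0}^\infty \frac{M}{r^k} = \frac{M r}{r - 1} < \infty , \qquad \sum _{k > N} \Vert \lambda ^k P_k(x) \Vert \le \frac{M}{r^{N} (r-1)} . \end{aligned}$$

The tail bound is independent of \(\lambda \) in the closed unit disk, which is the uniformity asserted above; taking \(\lambda = 1\) gives absolute convergence of the series (2) at x.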

(ii) \(\Rightarrow \) (iii). Obvious.

(iii) \(\Rightarrow \) (i). (IP (i)). Let \(x,y \in D\), and assume there exists \(T \in {B(\mathscr {H})}\) such that \(Tx = yT\). Let \(\varepsilon > 0\), and choose a free polynomial p such that \(\Vert p(x) - F(x) \Vert < \varepsilon \) and \(\Vert p(y) - F(y) \Vert < \varepsilon \). Then

$$\begin{aligned} \Vert T F(x) - F(y) T \Vert = \Vert T F(x) - T p(x) + p(y) T - F(y) T \Vert \le 2 \Vert T \Vert \varepsilon . \end{aligned}$$

As \(\varepsilon \) is arbitrary, we conclude that \(TF(x) = F(y)T\).

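(IP (ii)). Suppose \((x_n)\) is a bounded sequence in D, and assume it is infinite. (The argument for finite sequences is similar.) Let z be the diagonal d-tuple with entries \(x_1, x_2 , \ldots \), and let \(s :\mathscr {H}\rightarrow \mathscr {H}^\infty \) be such that \( y = s^{-1} z s\) is in D. For each fixed n, choose a sequence \(p_k\) of free polynomials that approximate F on \(\{ y, x_n \}\). Then

$$\begin{aligned} s F(y) s^{-1} = \lim _{k \rightarrow \infty } s \, p_k(y) \, s^{-1} = \lim _{k \rightarrow \infty } p_k(z) = \lim _{k \rightarrow \infty } \begin{bmatrix} p_k(x_1)&\quad 0&\quad \cdots \\ 0&\quad p_k(x_2)&\quad \cdots \\ \vdots&\quad \vdots&\quad \ddots \end{bmatrix} . \end{aligned}$$

The nth diagonal entry of the right hand side is \(F(x_n)\); so we conclude as n is arbitrary that

$$\begin{aligned} F( s^{-1} z s ) = s^{-1} \begin{bmatrix} F(x_1)&\quad 0&\quad \cdots \\ 0&\quad F(x_2)&\quad \cdots \\ \vdots&\quad \vdots&\quad \ddots \end{bmatrix} s . \end{aligned}$$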

(SSOC). Suppose \(x_n \) in D converges to x in D in the SOT. As before, by taking direct sums, we can approximate F by free polynomials uniformly on countable bounded subsets of D. So for any vector v, and any \(\varepsilon >0\), we choose a free polynomial p so that \(\Vert [ F(x_n) - p(x_n) ] v \Vert < \varepsilon /3\), and choose N so that for \(n \ge N\), we have \(\Vert [p(x) - p(x_n) ] v \Vert < \varepsilon /3\). Then \(\Vert [F(x) - F(x_n) ] v \Vert < \varepsilon \) for all \(n \ge N\). \(\square \)

In particular, we get the following consequence, which says that bounded IP functions leave closed algebras invariant.

In [3, Theorem 7.7] it is shown that for general nc-functions f, it need not be true that f(x) is in the algebra generated by x.

Corollary 4.1

Assume that D is balanced and closed with respect to countable direct sums, and that \(F:D \rightarrow {B(\mathscr {H})}\) is SSOC and IP. Then, for each \(x \in D\), the operator F(x) is in the closed unital algebra generated by \(x^1, \ldots , x^d\).
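The corollary follows from the power series of Theorem 1.5. Here is a sketch of the argument, in which \(S_N\) (our notation) denotes the Nth partial sum of the series (2), and D is taken to be open so that Theorem 1.5 applies:

$$\begin{aligned} S_N(x) = \sum _{k=0}^N P_k(x), \qquad \Vert F(x) - S_N(x) \Vert \le \sum _{k > N} \Vert P_k(x) \Vert \longrightarrow 0 \quad (N \rightarrow \infty ). \end{aligned}$$

Each \(S_N(x)\) is a free polynomial evaluated at x, hence lies in the unital algebra generated by \(x^1, \ldots , x^d\), and the norm limit F(x) therefore lies in its closure.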

5 Free IP functions

Recall the definition of the sets \(G_{\delta }\) in (3); the topology they generate is called the free topology on \({\mathbb M}^{[d]}\).

Definition 5.1

A free holomorphic function on a free open set \(D \subseteq {\mathbb M}^{[d]}\) is an nc-function that, in the free topology, is locally bounded.

Free holomorphic functions are a class of nc-functions studied by the authors in [1, 2]. In particular, it was shown that there was a representation theorem for nc-functions that are bounded by 1 on \(G_{\delta }\).

Theorem 5.2

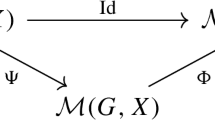

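([1, Theorem 8.1]) Let \(\delta \) be an I-by-J matrix of free polynomials, and let f be an nc-function on \(G_{\delta }\) that is bounded by 1. There exists an auxiliary Hilbert space \(\mathscr {L}\) and an isometry

$$\begin{aligned} \begin{bmatrix}\alpha&\quad B\\C&\quad D\end{bmatrix} :\,\mathbb {C}{\oplus } \mathscr {L}^{I} \rightarrow \mathbb {C}{\oplus } \mathscr {L}^{J} \end{aligned}$$

so that for \(x \in G_{\delta }\cap B(\mathscr {K})^d\),

$$\begin{aligned} {\begin{matrix} f(x) = \alpha I_\mathscr {K}+ &{}(I_\mathscr {K}{\otimes } B) (\delta (x) {\otimes }I_\mathscr {L})\\ {} &{}\cdot \bigl [ I_\mathscr {K}{\otimes } I_{\mathscr {L}^J} - (I_\mathscr {K}{\otimes } D) (\delta (x) {\otimes }I_\mathscr {L}) \bigr ]^{-1} (I_\mathscr {K}{\otimes } C). \end{matrix}} \end{aligned}$$ (16)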

Obviously, one can define a function on \(G_{\delta }^\#\) using the right-hand side of (16), replacing the finite dimensional space \(\mathscr {K}\) by the infinite dimensional space \(\mathscr {H}\). The following theorem gives sufficient conditions on a function to arise this way.

Theorem 5.3

Let \(\delta \) be an I-by-J matrix of free polynomials, and assume that \(G_{\delta }^\#\) is connected and contains 0. Let \(F :G_{\delta }^\#\rightarrow {B_1(\mathscr {H})}\) be sequentially strong operator continuous. Then the following are equivalent:

(i) The function F is intertwining preserving.

(ii) For each \(t > 1\), the function F is uniformly approximable by free polynomials on \(G_{t \delta }^\#\).

(iii) There exists \(\alpha \in \mathbb {D}\) such that if \({\Phi } = \phi _\alpha {\circ } F\), then \({\Phi }^\flat \) is a free holomorphic function on \(G_{\delta }\) that is bounded by 1 in norm, and that maps 0 to 0.

(iv) There exists an auxiliary Hilbert space \(\mathscr {L}\) and an isometry

$$\begin{aligned} \begin{bmatrix}\alpha&\quad B\\C&\quad D\end{bmatrix} :\,\mathbb {C}{\oplus } \mathscr {L}^{I} \rightarrow \mathbb {C}{\oplus } \mathscr {L}^{J} \end{aligned}$$

so that for \(x \in G_{\delta }^\#\),

$$\begin{aligned} {\begin{matrix} F(x) = \alpha I_\mathscr {H}+ &{}(I_\mathscr {H}{\otimes } B) (\delta (x) {\otimes }I_\mathscr {L})\\ {} &{}\cdot \bigl [ I_\mathscr {H}{\otimes } I_{\mathscr {L}^J} - (I_\mathscr {H}{\otimes } D) (\delta (x) {\otimes }I_\mathscr {L}) \bigr ]^{-1} (I_\mathscr {H}{\otimes } C). \end{matrix}} \end{aligned}$$ (17)

Proof

(i) \(\Rightarrow \) (iii). This follows from Proposition 2.3.

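(iii) \(\Rightarrow \) (iv). By Theorem 5.2, we get such a representation for all \(x \in G_{\delta }\). The series on the right-hand side of (17) that one gets by expanding the Neumann series of

$$\begin{aligned} \bigl [ I_\mathscr {H}{\otimes } I_{\mathscr {L}^J} - (I_\mathscr {H}{\otimes } D) (\delta (x) {\otimes }I_\mathscr {L}) \bigr ]^{-1} \end{aligned}$$ (18)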

converges absolutely on \(G_{\delta }^\#\); let us denote this limit by H(x). By Theorem 1.5, since H is a limit of free polynomials, it is IP and SSOC. Moreover, as \( \begin{bmatrix} \alpha&B\\C&D \end{bmatrix}\) is an isometry, we get by direct calculation that

Indeed, to see the last equality without being deluged by tensors, let us write

where the dots denote appropriate tensors in (17). Then

Therefore, \(\Vert H(x) \Vert \le 1 \) for all \(x \in G_{\delta }^\#\). Let \({\Delta }(x) = H(x) - F(x)\). Then \({\Delta }\) is a bounded IP SSOC Fréchet holomorphic function on \(G_{\delta }^\#\) that vanishes on \(G_{\delta }^\#\cap \mathscr {M}^{[d]}= G_{\delta }\). There is a balanced neighborhood U of 0 in \(G_{\delta }^\#\) . By Theorem 1.5, \({\Delta }\) has a power series expansion \({\Delta }(x) = \sum P_k(x)\), and each \(P_k\) vanishes on \(U \cap {\mathbb M}^{[d]}\). This means each \(P_k\) vanishes on a neighborhood of zero in every \(\mathscr {M}_n^d\), and hence must be zero. Therefore, \({\Delta } \) is identically zero on U. By analytic continuation, \({\Delta }\) is identically zero on all of \(G_{\delta }^\#\), and therefore (17) holds.

(iv) \(\Rightarrow \) (ii). This follows because the Neumann series obtained by expanding (18) has the kth term bounded by \(\Vert \delta (x) \Vert ^k\). Therefore, it converges uniformly and absolutely on \(G_{t \delta }^\#\) for every \(t > 1\).
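The uniform convergence can be made explicit. In the sketch below, \(T_k(x)\) (our notation) denotes the term in the expansion of (17) containing k factors of \(\delta (x)\); we read \(G_{t \delta }^\#\) as the set of x with \(\Vert t \delta (x) \Vert < 1\), and use that B, C and D are contractions, being blocks of an isometry. For \(x \in G_{t \delta }^\#\) and \(k \ge 1\),

$$\begin{aligned} T_k(x)&= (I_\mathscr {H}{\otimes } B) (\delta (x) {\otimes }I_\mathscr {L}) \bigl [ (I_\mathscr {H}{\otimes } D) (\delta (x) {\otimes }I_\mathscr {L}) \bigr ]^{k-1} (I_\mathscr {H}{\otimes } C), \\ \Vert T_k(x) \Vert&\le \Vert \delta (x) \Vert ^k < t^{-k}, \qquad \sum _{k > N} \Vert T_k(x) \Vert < \frac{1}{t^{N} (t-1)} . \end{aligned}$$

The tail bound does not depend on x, and each \(T_k\) is a free polynomial in x, so the partial sums \(\alpha I_\mathscr {H}+ \sum _{k \le N} T_k(x)\) are free polynomials approximating F uniformly on \(G_{t \delta }^\#\).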

(ii) \(\Rightarrow \) (i). Repeat the argument of (iii) \(\Rightarrow \) (i) of Theorem 1.5. \(\square \)

In the notation of the theorem, let \(F^\flat = \phi _{-\alpha } {\circ } {\Phi }^\flat \). Then the proof of (iii) \(\Rightarrow \) (iv) shows that F and \(F^\flat \) determine each other uniquely. So we get

Theorem 5.4

Let \(\delta \) be an I-by-J matrix of free polynomials, and assume that \(G_{\delta }^\#\) is connected and contains 0. Then every bounded free holomorphic function on \(G_{\delta }\) has a unique extension to an IP SSOC function on \(G_{\delta }^\#\).

References

[1] Agler, J., McCarthy, J.E.: Global holomorphic functions in several noncommuting variables. Canad. J. Math. 67(2), 241–285 (2015)

[2] Agler, J., McCarthy, J.E.: Pick interpolation for free holomorphic functions (2013). arXiv:1308.3730 (to appear in Amer. J. Math.)

[3] Agler, J., McCarthy, J.E.: The implicit function theorem and free algebraic sets (2014). arXiv:1404.6032 (to appear in Trans. Amer. Math. Soc.)

[4] Alpay, D., Kalyuzhnyi-Verbovetzkii, D.S.: Matrix-\(J\)-unitary non-commutative rational formal power series. In: Alpay, D., Gohberg, I. (eds.) The State Space Method Generalizations and Applications. Operator Theory: Advances and Applications, vol. 161, pp. 49–113. Birkhäuser, Basel (2006)

[5] Ball, J.A., Groenewald, G., Malakorn, T.: Conservative structured noncommutative multidimensional linear systems. In: Alpay, D., Gohberg, I. (eds.) The State Space Method Generalizations and Applications. Operator Theory: Advances and Applications, vol. 161, pp. 179–223. Birkhäuser, Basel (2006)

[6] Dineen, S.: Complex Analysis on Infinite Dimensional Spaces. Springer Monographs in Mathematics. Springer, London (1999)

[7] Helton, J.W., Klep, I., McCullough, S.: Analytic mappings between noncommutative pencil balls. J. Math. Anal. Appl. 376(2), 407–428 (2011)

[8] Helton, J.W., Klep, I., McCullough, S.: Proper analytic free maps. J. Funct. Anal. 260(5), 1476–1490 (2011)

[9] Helton, J.W., Klep, I., McCullough, S.: Free analysis, convexity and LMI domains. In: Dym, H., de Oliveira, M.C., Putinar, M. (eds.) Mathematical Methods in Systems, Optimization, and Control. Operator Theory: Advances and Applications, pp. 195–219. Springer, Basel (2012)

[10] Helton, J.W., Klep, I., McCullough, S., Slinglend, N.: Noncommutative ball maps. J. Funct. Anal. 257(1), 47–87 (2009)

[11] Helton, J.W., McCullough, S.: Every convex free basic semi-algebraic set has an LMI representation. Ann. Math. 176(2), 979–1013 (2012)

[12] Kaliuzhnyi-Verbovetskyi, D.S., Vinnikov, V.: Singularities of rational functions and minimal factorizations: the noncommutative and the commutative setting. Linear Algebra Appl. 430(4), 869–889 (2009)

[13] Kaliuzhnyi-Verbovetskyi, D.S., Vinnikov, V.: Foundations of Free Noncommutative Function Theory. Mathematical Surveys and Monographs, vol. 199. American Mathematical Society, Providence (2014)

[14] Klep, I., Špenko, Š.: Free function theory through matrix invariants (2014). arXiv:1407.7551

[15] Pascoe, J.E.: The inverse function theorem and the Jacobian conjecture for free analysis. Math. Z. 278(3–4), 987–994 (2014)

[16] Pascoe, J.E., Tully-Doyle, R.: Free Pick functions: representations, asymptotic behavior and matrix monotonicity in several noncommuting variables (2013). arXiv:1309.1791

[17] Popescu, G.: Free holomorphic functions on the unit ball of \(B(\mathscr {H})^n\). J. Funct. Anal. 241(1), 268–333 (2006)

[18] Popescu, G.: Free holomorphic functions and interpolation. Math. Ann. 342(1), 1–30 (2008)

[19] Popescu, G.: Free holomorphic automorphisms of the unit ball of \(B(\mathscr {H})^n\). J. Reine Angew. Math. 638, 119–168 (2010)

[20] Popescu, G.: Operator Theory on Noncommutative Domains. Memoirs of the American Mathematical Society, vol. 205(964). American Mathematical Society, Providence (2010)

[21] Taylor, J.L.: Functions of several noncommuting variables. Bull. Amer. Math. Soc. 79(1), 1–34 (1973)

Additional information

Jim Agler is partially supported by National Science Foundation Grant DMS 1361720. John E. McCarthy is partially supported by National Science Foundation Grant DMS 1300280.

Cite this article

Agler, J., McCarthy, J.E. Non-commutative holomorphic functions on operator domains. European Journal of Mathematics 1, 731–745 (2015). https://doi.org/10.1007/s40879-015-0064-2

DOI: https://doi.org/10.1007/s40879-015-0064-2