Abstract

Purpose

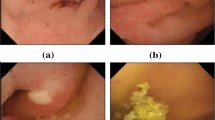

Wireless capsule endoscopy (WCE) is an effective, non-invasive technology for diagnosing gastrointestinal (GI) abnormalities. From a clinical perspective, one of the most common and valuable indications for WCE is GI bleeding. However, bleeding detection methods may localize the bleeding point incorrectly, because bleeding areas are small and bubbles interfere with them. These problems make it difficult to accurately localize bleeding points in GI images.

Methods

To address these problems, a pixel-level segmentation method for GI bleeding is proposed, in which dual network branches based on an attention mechanism are designed to correctly classify the pixels of bleeding areas. The two branches complement each other and focus on extracting the color and the texture features of the bleeding areas, respectively. Finally, a feature fusion module combines the outputs of the two branches to obtain a more accurate segmentation result.

Results

Extensive experiments were conducted on public WCE image datasets to evaluate the proposed network. It achieves a mean intersection over union (mIoU) of 86.858%, which indicates strong segmentation performance.
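For readers unfamiliar with the metric, the following is a minimal sketch of how mIoU can be computed for a pair of label masks. It assumes two classes (0 for background, 1 for bleeding) and averages the per-class IoU over the classes present in a single image; the function name `mean_iou` and the toy masks are illustrative, and the exact averaging convention used in the paper is not stated in the abstract.

```python
def mean_iou(pred, target, num_classes=2):
    """Mean intersection over union between two 2-D label masks.

    pred and target are equally sized lists of rows holding integer class
    labels. Assumed labels: 0 = background, 1 = bleeding (illustrative).
    """
    ious = []
    for c in range(num_classes):
        inter = 0  # pixels labelled c in both masks
        union = 0  # pixels labelled c in at least one mask
        for p_row, t_row in zip(pred, target):
            for p, t in zip(p_row, t_row):
                p_is_c = p == c
                t_is_c = t == c
                inter += int(p_is_c and t_is_c)
                union += int(p_is_c or t_is_c)
        if union > 0:  # skip classes absent from both masks
            ious.append(inter / union)
    if not ious:
        return float("nan")
    return sum(ious) / len(ious)


# Toy 2x4 masks: the prediction over-segments the bleeding area by one pixel.
pred = [[0, 0, 1, 1],
        [0, 1, 1, 1]]
target = [[0, 0, 0, 1],
          [0, 1, 1, 1]]

# Bleeding IoU = 4/5, background IoU = 3/4, so the mean is 0.775.
print(mean_iou(pred, target))
```

In this toy case a single misclassified pixel lowers the bleeding-class IoU to 0.8, which illustrates why small bleeding areas make the metric sensitive to localization errors.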

Conclusion

A novel network for GI bleeding segmentation is developed, which achieves promising segmentation performance compared with several popular existing methods.

Funding

This work was supported by the National Science Foundation of P.R. China (Grant: 61873239) and the Key R&D Program Projects in Zhejiang Province (Grant: 2020C03074).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Li, S., Si, P., Zhang, Z. et al. DFCA-Net: Dual Feature Context Aggregation Network for Bleeding Areas Segmentation in Wireless Capsule Endoscopy Images. J. Med. Biol. Eng. 42, 179–188 (2022). https://doi.org/10.1007/s40846-022-00689-5

DOI: https://doi.org/10.1007/s40846-022-00689-5