Abstract

Aspect-based sentiment analysis (ABSA) consists of two subtasks: aspect term extraction (AE) and aspect term sentiment classification (ASC). Previous research on the AE task has not adequately leveraged syntactic information and has overlooked the issue of multi-word aspect terms in text. Current researchers tend to focus on one of the two subtasks, neglecting the connection between the AE and ASC tasks. Moreover, error propagation easily occurs between two independent subtasks when performing the complete ABSA task. To address these issues, we present a unified ABSA model based on syntactic features and interactive learning, called the syntactic interactive learning based aspect term sentiment classification model (SIASC). To overcome the problem of extracting multi-word aspect terms, the model utilizes part-of-speech features, word features, and dependency features as textual information. Meanwhile, we design a unified ABSA structure based on the end-to-end framework, reducing the impact of error propagation. Interactive learning in the model establishes a connection between the AE task and the ASC task, and the information it provides improves the model’s performance on the ASC task. We conducted extensive experiments on the Laptop14, Restaurant14, and Twitter datasets. The experimental results show that the SIASC model achieved average accuracy of 84.11%, 86.65%, and 78.42% on the AE task and 81.35%, 86.71%, and 76.56% on the ASC task, respectively. The SIASC model demonstrates superior performance compared to the baseline models.


Introduction

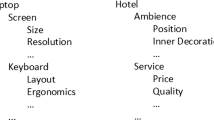

With the rapid development of e-commerce, online shopping has gradually become a habit in people’s lives. Web service platforms generate a large amount of comment data every day, from sources such as Amazon, eBay, Twitter and others. In this context, there is a growing need for technologies capable of processing vast amounts of textual data. Aspect-based sentiment analysis (ABSA) [1, 2] is not only a significant topic in data mining (DM) [3] but also a crucial task in natural language processing (NLP) [4]. The ABSA technology can identify aspect terms in sentences and analyze their sentiment polarity. Consequently, numerous scholars have conducted extensive research in this field. For instance, consider a restaurant review: “The pasta at this restaurant is delicious, but the service is poor”. The ABSA techniques can extract and analyze the sentiment of aspect terms, yielding “pasta, positive” and “service, negative”.

Researchers have categorized ABSA technology into two main tasks: aspect term extraction (AE) [5] and aspect sentiment classification (ASC) [6]. Currently, the majority of research focuses on one of these two subtasks, overlooking the interrelation between them. Some researchers have proposed pipeline approaches [7] to handle the two subtasks sequentially. However, due to error propagation, the performance of the AE task directly influences the accuracy of the ASC task. Building an end-to-end unified model helps mitigate error propagation between multiple tasks. In addition, multi-word aspect terms are common in textual data, such as “battery life”, “wireless mice”, “salad dressings” and “foie gras saute”. These terms consist of multiple words, and accurately recognizing their boundaries is a challenge that can impact the AE task’s accuracy. Current researchers addressing multi-word aspect terms tend to focus on the dependency features and word features of sentences, neglecting the role of part-of-speech features. Utilizing syntactic information is advantageous for identifying the boundaries of multi-word aspect terms. To address the above-mentioned issues, we propose a unified model for ABSA called syntactic interactive learning for aspect-based sentiment classification (SIASC) (Footnote 1). The SIASC model is structured as an end-to-end framework that reduces the effects of error propagation on both subtasks. To tackle the challenge of extracting multi-word aspect terms, we leverage textual information by incorporating part-of-speech features [8], word features, and dependency features [9] as inputs to the model. Adequate learning of syntactic knowledge enables the model to more accurately identify the boundaries of multi-word aspect terms.
To enhance sentiment analysis performance, we introduce interactive learning [10] and a technique known as local context focus on syntax (LCFS) [11] into the model. Interactive learning helps the model capture the connection between the AE task and the ASC task. The LCFS technique handles irrelevant terms around aspect terms, thereby improving aspect sentiment classification accuracy.

In summary, this paper makes the following main contributions:

1. We design a unified ABSA structure based on an end-to-end framework, which reduces the impact of error propagation between the AE and ASC tasks.

2. We fully utilize textual feature information by incorporating part-of-speech features, word features, and dependency features. These enhancements enable the model to accurately extract multi-word aspect terms.

3. We employ interactive learning to help the model capture the relationship between the AE and ASC tasks. Additionally, the LCFS technique effectively processes irrelevant words in the context of aspect terms, thereby improving sentiment classification accuracy.

4. We conducted experiments on the Laptop14, Restaurant14, and Twitter datasets. The results demonstrate that the SIASC model outperforms the baseline models.

Related work

In this section, we present previous research in aspect-based sentiment analysis: BERT and Attention, Syntactic knowledge and GNN, Pipeline and End-to-end.

BERT and attention

Vaswani et al. [16] proposed that the attention mechanism (Attention) can assign a corresponding weight value to each word in the text. The Attention mechanism focuses on the key words, effectively enhancing model performance. Therefore, researchers have widely used Attention in the ABSA task. For instance, Wang et al. [12] proposed an attention-based long short-term memory network model (LSTM) [13]. Ma et al. [10] proposed the interactive attention networks model to generate representations of the aspect term and the context simultaneously. Fan et al. [14] proposed a fine-grained attention neural network model to achieve word-level interactions between aspect and context. Building on Attention and the Transformer [16] model, Devlin et al. [15] proposed the pre-trained bidirectional encoder representations from Transformers model (BERT). Due to the significant advancements in text representation achieved by the BERT model, it has been widely adopted by numerous researchers. Dai and Song [17] proposed a neural network model based on BERT and mining rules. Song et al. [18] proposed a model based on BERT and an attention encoder network. The model employed an attention-based encoder to model the relation between context and aspect term, avoiding the difficulty that recurrent models have with long-term memory. Zeng et al. [19] proposed a local context focus sentiment classification mechanism based on BERT. The model utilized context feature dynamic masking and context feature dynamic weighting methods to focus on local context words. However, these studies do not fully leverage syntactic features, leading to difficulties in accurately extracting multi-word aspect terms from the text.

Syntactic knowledge and GNN

Syntactic knowledge assists the model in better understanding the syntactic correlations between words in the text. Dependency syntactic analysis can be used to represent words effectively. The graph neural network (GNN) [20] can take the results of dependency syntactic analysis as input in the form of a directed graph. It uses neural network models to learn syntactic features between words, with words as nodes and syntactic relations as directed edges in the graph. To leverage syntactic knowledge, some scholars have employed GNNs to implement the ABSA task. Zhang et al. [21] proposed an aspect-specific graph convolutional neural network model. The model acquires syntactic information and word dependencies from the dependency tree of a sentence, employing a graph convolutional neural network to further learn syntactic relations. Huang and Carley [22] proposed a new target dependency graph attention network model, which utilized the dependency relations among words. Li et al. [23] proposed a dual-graph convolutional neural network model to address the inaccuracy of dependency syntactic analysis results. The model utilizes a syntax graph convolutional neural network (GCN) [24] to minimize errors in dependency syntactic analysis and employs a semantic GCN to capture semantic relevance. Additionally, an orthogonal regularizer is applied to mitigate the impact of text noise on the Attention mechanism, and a differential regularizer is used to capture the difference between the features learned by the two GCNs. However, these studies focused on one of the two subtasks of ABSA, ignoring the connection between the AE task and the ASC task.

Pipeline and end-to-end

In order to model all subtasks of ABSA, researchers proposed the concept of a pipeline framework. The pipeline framework constructs a corresponding model for each subtask of ABSA and passes the output of each model to the next one. Some researchers have conducted studies on it. Shang et al. [25] designed a pipeline framework-based model that can capture the associations of targets and sentiment cues to enhance the overall performance of targeted sentiment analysis. Wu et al. [26] designed an effective grid tagging scheme inference strategy that exploited the mutual indication between different opinion factors to achieve more accurate extraction. However, the pipeline approach suffers from the error propagation problem: the accuracy of the AE task affects the performance of the ASC task.

Some researchers have proposed end-to-end frameworks to reduce the effects of error propagation. For example, Xu et al. [27] proposed an end-to-end model with a novel location-aware tagging scheme that enables joint acquisition of triples of aspect term, sentiment polarity, and opinions. Zhang et al. [28] proposed a multi-task learning encoder-decoder framework to jointly extract aspect terms and opinion terms while analyzing the sentiment dependencies between them using a BiAffine [29] scorer. Peng et al. [30] proposed a two-stage framework to solve the aspect-opinion-sentiment triplet extraction task. Li et al. [31] proposed a novel unified model that adopted a unified labeling scheme for addressing the complete task.

Compared to the aforementioned approach, the end-to-end framework reduces the impact of error propagation. Abundant syntactic information enables the model to recognize multi-word aspect terms. Therefore, this paper proposes a unified ABSA model based on the end-to-end framework. Additionally, the model uses interactive learning to establish a connection between the AE task and the ASC task.

The overall structure of the SIASC model. P1 and P2 denote the hidden layer vectors after average pooling. MaxPool1d denotes one-dimensional max pooling. LCFS denotes the local context focus on syntax mechanism, which includes the context feature dynamic weighted (CDW) and context feature dynamic mask (CDM) methods. CRF denotes conditional random fields. The “unit number” refers to setting the number of final output units in the fully connected layer equal to the number of aspect terms in the model’s output

Proposed method

The overall structure of the SIASC model for aspect-based sentiment analysis is shown in Fig. 1. The model consists of three important parts: syntactic feature representation, LCFS processing, and interactive learning. The syntactic feature representation part transforms the input sentence into part-of-speech features, word features, and dependency features using the BiAffine model [32]. Subsequently, these three features are concatenated to form the syntactic features of the sentence. The LCFS processing module handles the context words of aspect terms in a sentence using the context feature dynamic mask (CDM) or context feature dynamic weighted (CDW) approach. This module attenuates the influence of irrelevant words on sentiment classification. The interactive learning part uses the multi-head attention mechanism [33] to interactively learn between the LCFS-processed feature vectors and the syntactic feature vectors. Additionally, the SIASC model determines the number of final units in the fully connected layer based on the number of output aspect terms. When a sentence contains multiple aspect terms, they are combined into (aspect term, sentiment) pairs according to the order in which the model outputs aspect terms and sentiment polarities. The input is a sentence X = {\({x}_1, {x}_2, {x}_3,..., {x}_{n}\)} with corresponding labels y, where n is the number of words. For the AE task, each label y belongs to the set {B, I, O} (Begin, Inside, Outside), where B marks the beginning of an aspect term, I marks the interior of an aspect term, and O marks a non-aspect word. For the ASC task, the label y belongs to the set {Positive, Neutral, Negative}.
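The B/I/O labeling scheme and the pairing of aspect terms with polarities by output order can be illustrated with a minimal sketch. The function name `extract_aspect_terms`, the example sentence, and the polarity list are hypothetical and only serve to show how a multi-word term such as “battery life” is recovered from a tag sequence; this is not the SIASC implementation.

```python
def extract_aspect_terms(tokens, tags):
    """Group B/I/O tags into aspect-term strings; multi-word terms stay intact."""
    terms, current = [], []
    for token, tag in zip(tokens, tags):
        if tag == "B":
            # A new term starts; close any term that is still open.
            if current:
                terms.append(" ".join(current))
            current = [token]
        elif tag == "I" and current:
            # Continue the currently open term.
            current.append(token)
        else:
            # "O", or a stray "I" with no preceding "B": close any open term.
            if current:
                terms.append(" ".join(current))
            current = []
    if current:
        terms.append(" ".join(current))
    return terms


tokens = ["The", "battery", "life", "is", "great", "but", "the", "screen", "is", "dim"]
tags = ["O", "B", "I", "O", "O", "O", "O", "B", "O", "O"]

aspects = extract_aspect_terms(tokens, tags)
# Hypothetical ASC outputs, listed in the same order as the extracted terms.
polarities = ["Positive", "Negative"]
pairs = list(zip(aspects, polarities))
print(pairs)  # [('battery life', 'Positive'), ('screen', 'Negative')]
```

Pairing by position works only if both outputs follow the same order, which is why the number of output units is tied to the number of extracted aspect terms.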

The details of the syntactic feature representation. The BiAffine model was proposed by Stanford researchers. “DT, NN, VBZ,...” denotes the part-of-speech features of the words, while “det, nsubj, cop,...” denotes the dependency relationships among words. Both the part-of-speech annotations and the dependency parsing annotations adhere to the rules of the Stanford Parser

Syntactic features representation

The details of the syntactic feature representation are shown in Fig. 2. In this section, the BiAffine model (Footnote 2) [33] is primarily used to convert sentence information into part-of-speech features, word features, and dependency features. The BiAffine model is a syntactic parsing tool (Footnote 3) developed by the NLP Group at Stanford University. Firstly, the model employs a BiLSTM and two multi-layer perceptron (MLP) layers to stack the obtained hidden vectors. Then, it utilizes a bi-affine transformation to compute the dependency relationships between any two words in the sentence. Given a sentence \({X=\{x_1, x_2, x_3,..., x_n\}}\), the BiAffine model performs part-of-speech tagging on each word, resulting in pairs (\({x_i}\), \({p_i}\)), where \({p_i}\) belongs to the part-of-speech set P. Simultaneously, it computes the dependency relationships between words, resulting in triples (\({x_i, d_i, x_j}\)), where \({d_i}\) belongs to the dependency relationship set D. The computational procedure for sentence analysis using the BiAffine model is as follows.

where \({i,j \in [1,n]}\) and \({i\ne j}\), n is the number of words, and BiAffine denotes the BiAffine model.
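To make the two output structures concrete, the following minimal sketch writes them out by hand for a short sentence. The annotations are hand-written for illustration and are not parser output. The (dependent, relation, head) reading of each triple, the `root` label for the head word, and the helper name `per_word_features` are assumptions.

```python
# Hand-written annotations for illustration only (not produced by a parser).
words = ["The", "service", "is", "poor"]

# Pairs (x_i, p_i): each word with its part-of-speech tag.
pos_pairs = [("The", "DT"), ("service", "NN"), ("is", "VBZ"), ("poor", "JJ")]

# Triples (x_i, d_i, x_j): assumed here to read (dependent, relation, head).
dep_triples = [
    ("The", "det", "service"),
    ("service", "nsubj", "poor"),
    ("is", "cop", "poor"),
]


def per_word_features(words, pos_pairs, dep_triples):
    """Align the parse with the words: one POS tag and one relation per word."""
    pos_of = dict(pos_pairs)
    rel_of = {dependent: relation for dependent, relation, _head in dep_triples}
    pos_seq = [pos_of[w] for w in words]
    # A word that never appears as a dependent is treated as the root.
    dep_seq = [rel_of.get(w, "root") for w in words]
    return pos_seq, dep_seq


pos_seq, dep_seq = per_word_features(words, pos_pairs, dep_triples)
print(pos_seq)  # ['DT', 'NN', 'VBZ', 'JJ']
print(dep_seq)  # ['det', 'nsubj', 'cop', 'root']
```

The lookup is keyed by the word string to keep the sketch short. A sentence with repeated words would need position indices instead.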

Next, the BERT model is utilized to embed part-of-speech features, word features, and dependency features individually. The BERT model can transform these three types of feature information into vectors. Finally, the three feature vectors are concatenated, and one-dimensional max-pooling is applied to process them.

where \({v_i^p,v_i^w,v_i^d \in {{\mathbb {R}}^{1 \times 768}}}\) respectively represent the part-of-speech feature vector, word feature vector, and dependency feature vector, with 768 being the fixed dimension of the BERT model output. \({v_i^t \in {{\mathbb {R}}^{1 \times 2304}}}\) is the vector obtained by concatenating the three vectors; its dimensionality of 2304 results from concatenating three 768-dimensional vectors. Concat indicates the concatenation operation. \({v_i^s \in {{\mathbb {R}}^{1 \times 768}}}\) is the result after pooling. MaxPool1d is one-dimensional max pooling, where the pooling window size is set to 3.
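The dimension bookkeeping above (3 × 768 = 2304, then back to 768 after pooling with window 3) can be checked with a small sketch. It uses plain Python lists and constant placeholder values instead of BERT outputs, and it assumes the pooling stride equals the window size, which is what makes the output length 2304 ÷ 3 = 768. The helper names `concat` and `max_pool_1d` are hypothetical.

```python
def concat(*vectors):
    """Concatenate several vectors end to end."""
    out = []
    for v in vectors:
        out.extend(v)
    return out


def max_pool_1d(vector, window=3, stride=None):
    """One-dimensional max pooling; stride defaults to the window size (assumption)."""
    stride = stride or window
    return [
        max(vector[i:i + window])
        for i in range(0, len(vector) - window + 1, stride)
    ]


dim = 768
# Placeholder feature vectors standing in for the BERT embeddings.
v_p = [0.1] * dim  # part-of-speech features
v_w = [0.2] * dim  # word features
v_d = [0.3] * dim  # dependency features

v_t = concat(v_p, v_w, v_d)
v_s = max_pool_1d(v_t, window=3)
print(len(v_t), len(v_s))  # 2304 768
```

With this layout each pooled value covers three adjacent positions of the concatenated vector. Pooling across the three feature types at the same position would need a different arrangement of the inputs.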

Diagram of the LCFS (CDM). The gray node words will be masked and the blue node words will be completely preserved. The threshold value is 2. The example of context word in this picture is “service”, which is also an aspect term. “det, compound, nsubj, cop,...” represents the dependency relationships among words

LCFS processing

The local context focus on syntax (LCFS) mechanism (Footnote 4) was developed by Phan et al. [11] for the ABSA task. This method is an improvement on the local context focus (LCF) mechanism proposed by Zeng et al. [19]. LCFS uses the semantic relative distance (SRD) [34] to determine how contextual words are processed, and it offers two processing methods: context feature dynamic mask (CDM) and context feature dynamic weighted (CDW). SRD measures the relationship between the target word and the contextual words. Phan et al. introduced an approach that uses the distance between nodes in a dependency syntax tree [35] as the SRD value. This approach also covers multi-word aspect terms: when the aspect term comprises multiple words, the SRD value is computed as the average distance between the target word and each constituent word. However, the SRD values calculated in this way are affected by sentence noise and the accuracy of the dependency parser. For example, when only the distances between nodes in the syntactic tree in Fig. 3 are considered and the threshold is set to 2, the method retains the word “delicious” when processing the word “service”. We propose using the dot product of the node distance matrix and the attention score matrix as the SRD values (Footnote 5). Introducing the attention score matrix helps mitigate the impact of sentence noise and parsing errors on LCFS and facilitates the capture of key nodes at longer distances. Since a sentence may contain multiple aspect words, we employ a multi-head attention mechanism with 4 heads when computing the attention weight matrix. The SRD value between the multi-word aspect term “gourmet food” and the context word “delicious” is calculated as follows.

Context-feature dynamic mask (CDM)

The principle of the CDM method is shown in Fig. 3. The context words with smaller SRD values will be preserved and context words with larger SRD values will be masked. The computation process using the CDM method is as follows.

Where MHA is the multi-head attention mechanism, \({h^a} \in {{\mathbb {R}}^{n \times n}}\) is the attention score matrix and \({h^T} \in {{\mathbb {R}}^{n \times n}}\) is the distance matrix between nodes. x is the input value and mean denotes the mean value calculation. The rows resulting from the mean computation over \({h^a}\) are identical, so we take the first one. O is the zero vector and I is the one vector. \(\alpha \) is the SRD threshold. \({v_i^m} \in {{\mathbb {R}}^n}\) stores the value between the i-th word and the context word. \({M_1} \in {{\mathbb {R}}^{n \times n}}\) is the matrix used for masking, whose entries are 0 or 1. n is the number of words in the sentence. \({V^L}\) denotes the word vector matrix output by BERT.
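The masking idea can be sketched with tree distances alone. The example below is a simplified illustration, not the SIASC computation: it uses the plain dependency-tree distance as SRD, as in the LCFS formulation, and leaves out the attention-score reweighting described above. The sentence, its hand-written dependency edges, and the function names are all hypothetical.

```python
from collections import deque


def tree_distances(n, edges, start):
    """Breadth-first search: hop distance from `start` to every node of the tree."""
    adjacency = [[] for _ in range(n)]
    for a, b in edges:
        adjacency[a].append(b)
        adjacency[b].append(a)
    dist = [None] * n
    dist[start] = 0
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if dist[neighbour] is None:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def srd(n, edges, aspect_indices):
    """Average tree distance from each word to the words of the aspect term."""
    per_word = [tree_distances(n, edges, a) for a in aspect_indices]
    return [sum(d[i] for d in per_word) / len(aspect_indices) for i in range(n)]


def cdm_mask(srd_values, alpha):
    """CDM: keep (1) words whose SRD is within the threshold, mask (0) the rest."""
    return [1 if s <= alpha else 0 for s in srd_values]


# Hypothetical sentence with hand-written dependency edges (word indices).
words = ["The", "gourmet", "food", "is", "delicious",
         "but", "the", "service", "is", "poor"]
edges = [(0, 2), (1, 2), (2, 4), (3, 4), (4, 9),
         (5, 9), (6, 7), (7, 9), (8, 9)]

srd_values = srd(len(words), edges, aspect_indices=[1, 2])  # "gourmet food"
mask = cdm_mask(srd_values, alpha=2)
print(srd_values[4])  # 1.5
print(mask)  # [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
```

For the aspect term “gourmet food”, the word “delicious” is two hops from “gourmet” and one hop from “food”, so its averaged distance is 1.5 and it survives a threshold of 2, while the clause about “service” is masked.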

Context feature dynamic weighted (CDW)

The principle of the CDW method is shown in Fig. 4. The context words with larger SRD values will be weighted.

Where I is the one vector and \(\alpha \) is the SRD threshold. \(v_i^w \in {{\mathbb {R}}^n}\) stores the value between the i-th word and the context word. \({M_2} \in {{\mathbb {R}}^{n \times n}}\) is the matrix used for weighting, whose entries are either 1 or a decimal between 0 and 1. n is the number of words in the sentence. \({V^{CDW}}\in {{\mathbb {R}}^{n \times h}}\) denotes the word vector matrix output by LCFS (CDW).
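A minimal sketch of the weighting step follows. The weight formula used here, 1 − (SRD − α)/n for words beyond the threshold, is the one from the original LCF formulation and is an assumption about the exact form used in this model. The SRD values are illustrative, and the function names are hypothetical.

```python
def cdw_weights(srd_values, alpha):
    """CDW: weight 1 within the threshold, a decaying weight in (0, 1) beyond it."""
    n = len(srd_values)
    return [
        1.0 if s <= alpha else 1.0 - (s - alpha) / n
        for s in srd_values
    ]


def apply_weights(vectors, weights):
    """Scale each word vector by its weight (row-wise multiplication)."""
    return [[w * x for x in vec] for vec, w in zip(vectors, weights)]


# Illustrative SRD values for a ten-word sentence.
srd_values = [1.5, 0.5, 0.5, 2.5, 1.5, 3.5, 4.5, 3.5, 3.5, 2.5]
weights = cdw_weights(srd_values, alpha=2)

# Toy two-dimensional word vectors standing in for the BERT output.
vectors = [[1.0, 2.0] for _ in srd_values]
weighted = apply_weights(vectors, weights)
print([round(w, 2) for w in weights])
```

Unlike CDM, no word is removed outright: distant words keep a reduced contribution, so their information is attenuated rather than discarded.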

The details of interactive learning. Where “\({h_1^l}\), \({h_2^l}\),..., \({h_n^l}\)”, “\({h_1^g}\), \({h_2^g}\),..., \({h_n^g}\)”, “\({h_1^s}\), \({h_2^s}\),..., \({h_n^s}\)”, and “\({h_1^a}\), \({h_2^a}\),..., \({h_n^a}\)” respectively represent the hidden layer vectors. The depth of color in the vector indicates the assignment of different attention scores

Interactive learning

The details of interactive learning in the model are shown in Fig. 5. Interactive learning is based on the attention mechanism, which utilizes pooled hidden vectors as query vectors. Interacting through two different hidden vectors allows us to capture the relevant relationships between the vectors. Assigning appropriate weights to the context of aspect terms helps the model accurately discriminate sentiment polarity. For improved performance in interactive learning, we use the multi-head attention mechanism. Firstly, the BiLSTM layer is employed to learn long-range dependencies between feature vectors. Then, the multi-head attention mechanism is used to interactively learn features from both types of hidden vectors. Finally, the two obtained vectors are concatenated. The computational process for interactive learning is as follows.

Where \({v_i^s \in {{\mathbb {R}}^h}}\) is the vector produced by the syntactic feature representation and \({v_i^w \in {{\mathbb {R}}^h}}\) is the vector produced by the LCFS layer. \({i \in [1,n]}\) indexes the words, n is the number of words, and h = 768 is the vector dimension. \({h^l} \in {{\mathbb {R}}^{n \times 2\,h}}\) and \({h^g} \in {{\mathbb {R}}^{m \times 2\,h}}\) are the hidden layer vectors; in these dimensions, h is the dimension of the unit output vector in the BiLSTM model. \({L_{avg}} \in {{\mathbb {R}}^{2\,h}}\) and \({G_{avg}} \in {{\mathbb {R}}^{2\,h}}\) are the hidden layer vectors after the average pooling process.

Then, the pooled vector \({G_{avg}}\) is used to calculate attention scores with another feature vector matrix \({h^l}\). The calculation is as follows:

Where \({\epsilon }\) is the score function that calculates the importance of \({h^l_i}\) in the context. The score function \({\epsilon }\) is defined as:

Where \({W_1} \in {{\mathbb {R}}^{2d \times 2d}}\) is the weight matrix and \({b_1}\) is the bias. tanh is the non-linear function and \({G^T_{avg}}\) is the transpose of \({G_{avg}}\). In the same way, we calculate attention scores between the hidden vector matrix \({h^g}\) and the pooled vector \({L_{avg}}\).
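The scoring step can be sketched as follows, assuming the form used in interactive attention networks [10]: a score tanh(h_i · W · G_avg^T + b) for each hidden vector, normalized with a softmax, and then used to form a weighted sum of the hidden vectors. The toy matrices, the identity weight matrix, and the function names are hypothetical, and a single attention head is shown.

```python
import math


def mean_pool(matrix):
    """Average the rows of a matrix into one vector."""
    return [sum(column) / len(matrix) for column in zip(*matrix)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attention_weights(hidden, query, weight, bias):
    """Softmax over tanh(h_i . W . query^T + b) for every row h_i of `hidden`."""
    w_query = [dot(row, query) for row in weight]  # W . query^T
    scores = [math.tanh(dot(h, w_query) + bias) for h in hidden]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(hidden, weights):
    """Combine the rows of `hidden` using the attention weights."""
    return [
        sum(w * h[k] for w, h in zip(weights, hidden))
        for k in range(len(hidden[0]))
    ]


# Toy hidden vectors standing in for the two BiLSTM outputs.
h_l = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
h_g = [[0.5, 0.5], [1.0, 0.0]]
W1 = [[1.0, 0.0], [0.0, 1.0]]  # identity, for illustration only
b1 = 0.0

g_avg = mean_pool(h_g)
a = attention_weights(h_l, g_avg, W1, b1)
L = weighted_sum(h_l, a)
print([round(x, 3) for x in a])
```

The symmetric direction swaps the roles: the pooled vector of `h_l` is used as the query over the rows of `h_g`.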

After computing the word attention weights, we can get context and target representations L and G based on the attention vectors \({a_i}\) and \({b_i}\) by:

Finally, the Multi-head Attention mechanism concatenates the results of each attention head calculation to obtain the hidden layer vector \({h^s=\{h_i^s, i \in [1,n]\}}\) and the hidden layer vector \({h^a=\{h_i^a, i \in [1,n]\}}\).

Where \({W_2}\) and \({W_3} \in {{\mathbb {R}}^{H \cdot u \times d}}\), \({d=H}\times {u}\), H is the number of heads, and u is the dimension of each attention head. For example, if d = 1024 and H = 16, each attention head has dimension u = d ÷ H = 64. \({h^s} \in {{\mathbb {R}}^{n \times d}}\) and \({h^a} \in {{\mathbb {R}}^{n \times d}}\) are the output matrices of interactive learning.

For the AE task, the model uses the conditional random fields (CRF) layer to predict the sequence labeling information of the final aspect terms in the sentence. The output of the AE task is calculated as follows.

Where \({y_i^s}\) denotes the probability that the ith word is the annotation start position. \({y_i^e}\) denotes the probability that the ith word is the annotation end position. For the ASC task, the model uses the fully connected layer to compute the sentiment polarity of aspect terms. The output of the ASC task is calculated as follows.

Where \({W_l}\) is the weight matrix in the fully connected layer and \({b_l}\) is the bias term.

Loss function

When training the AE task in the SIASC model, we use the cross-entropy loss function to predict the probabilities of the starting and ending positions for each word.

Where \({{y'_i}^s}\) represents the starting position of annotated words and \({{y'_i}^e}\) represents the ending position of annotated words. c denotes the dataset. When training the ASC task, we use a cross-entropy loss function along with L2 regularization to predict the sentiment polarity of aspect terms.

Where \({y'_i}\) is the predicted sentiment polarity corresponding to \({y_i}\). \({\Theta }\) is the parameter-set of the SIASC model. Finally, we employ a multi-task training strategy to combine the loss functions of the AE task and ASC task to train the final loss values of the model.

Where \({\alpha ,\beta \in [0,1]}\) are hyper-parameters to control the contributions of objectives. \({\alpha }\) and \({\beta }\) are initially set to 1/2 and continuously adjusted during the model training process for optimization.
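The way the two objectives are combined can be illustrated with a small numeric sketch. It assumes a weighted sum of the form α·L_AE + β·L_ASC with α = β = 1/2 as the starting point. The probability values, the regularization coefficient, and the function names are hypothetical.

```python
import math


def cross_entropy(probs, gold):
    """Negative log-probability assigned to the gold class or position."""
    return -math.log(probs[gold])


def l2_penalty(params, lam):
    """L2 regularization term: lam times the sum of squared parameters."""
    return lam * sum(p * p for p in params)


def joint_loss(loss_ae, loss_asc, alpha=0.5, beta=0.5):
    """Weighted combination of the AE and ASC losses."""
    return alpha * loss_ae + beta * loss_asc


# AE: toy distributions over start and end positions in a three-word sentence.
start_probs = [0.1, 0.7, 0.2]
end_probs = [0.1, 0.2, 0.7]
loss_ae = cross_entropy(start_probs, gold=1) + cross_entropy(end_probs, gold=2)

# ASC: toy distribution over (Positive, Neutral, Negative) plus an L2 term.
polarity_probs = [0.6, 0.3, 0.1]
toy_params = [0.5, -0.5]
loss_asc = cross_entropy(polarity_probs, gold=0) + l2_penalty(toy_params, lam=1e-5)

total = joint_loss(loss_ae, loss_asc)
print(round(total, 4))
```

Setting α and β to equal values treats both subtasks as equally important. Shifting weight toward one of them trades accuracy on the other subtask for it.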

Experiments

In this section, we first provide a detailed introduction to the datasets, experimental settings, and baseline models used in the experiments. Then, we present the comparison study, ablation study, SRD threshold study, attention visualization, and case study to validate the performance of the SIASC model.

Datasets and experimental settings

To demonstrate the performance of the SIASC model, we conducted experiments on the three benchmark datasets shown in Table 1. The Laptop and Restaurant datasets are from the SemEval-2014 Task 4 challenge (Footnote 6) [36]. Additionally, the Twitter dataset (Footnote 7) [37] consists of online comments, which allows us to verify the model’s performance on informal text. All three datasets include examples with positive, negative, and neutral sentiment polarities. Each sentence in these datasets is annotated with aspect terms and their corresponding polarities.

In addition to the hyper-parameter settings mentioned in previous studies, we also conducted experiments on the impact of SRD thresholds and analyzed the results to optimize the hyper-parameter settings. The hyper-parameter settings of the SIASC model are shown in Table 2.

Baseline model

Comparison experiments are conducted to separately validate the performance of the SIASC model on the AE task and ASC task. The experimental details of the baseline models are as follows.

DTBCSNN [38]: (Ye et al. 2017) proposed a stacked convolutional neural network based on dependency trees which used an inference layer for aspect term extraction.

BiDTreeCRF [39]: (Luo et al. 2019) integrated word embedding representation and BiLSTM plus CRF to learn tree structure and sequential features.

Seq2Seq4ATE [40]: (Ma et al. 2019) designed the gated unit networks to incorporate the corresponding word representation into the decoder. The model also adopted position-aware attention to focus on the adjacent words of the aspect term.

ATAE-LSTM [12]: (Wang et al. 2016) utilized the Attention mechanism to capture the importance of different context information for the aspect term.

AEN-BERT [18]: (Song et al. 2019) employed the contextualized BERT and Attention mechanism to model the relation between context and aspect term.

RINANTE [17]: (Dai et al. 2019) designed an algorithm to automatically mine extraction rules from existing training examples based on dependency parsing results.

SPAN-BERT [41]: (Hu et al. 2019) proposed a pipeline method using BERT as the backbone. A multi-target model is used for aspect term extraction and sentiment classification.

JET-BERT [27]: (Xu et al. 2020) designed the end-to-end model with a novel position aware tagging scheme that is capable of jointly extracting the triplets.

Peng-two-stage [30]: (Peng et al. 2020) used a model with a two-stage framework. The first stage predicts the aspect term, the sentiment polarity, and the causes of the sentiment polarity. The second stage outputs the results of the first-stage predictions as triplets.

LCFS-BERT [11]: (Phan et al. 2020) used the local context focus on syntax mechanism that masks or weakens semantically distant context words.

R-GAT+BERT [42]: (Wang et al. 2020) defined a unified target aspect term dependency tree structure by reshaping and pruning the ordinary dependency parse tree.

OTE-MTL [28]: (Zhang et al. 2020) adopted the multi-task learning framework to jointly extract aspect term and opinion term. The model also utilized the BiAffine scorer to calculate the sentiment dependency relationship between the two.

RACL-BERT [43]: (Chen et al. 2020) used a relation-aware collaborative learning framework that allows the subtasks to work in coordination.

DREGCN [44]: (Liang et al. 2021) designed a novel dependency syntactic knowledge augmented interactive architecture with multi-task learning.

BART-ABSA [45]: (Yan et al. 2021) proposed a unified generative model based on aspect-based sentiment analysis. This addresses all aspect-based sentiment analysis sub-tasks in the end-to-end framework.

PD-GAT [46]: (Wu et al. 2022) constructed a relational graph attention network based on phrase dependency graphs.

SSEGCN [47]: (Zhang et al. 2022) proposed an aspect-aware attention mechanism combined with self-attention.

Sentic GCN [48]: (Liang et al. 2022) proposed a graph convolutional network based on SenticNet that exploits the sentiment dependencies of sentences with respect to specific aspects.

KGAN [49]: (Zhong et al. 2023) proposed a knowledge graph augmentation network that combines external knowledge with explicit syntactic information.

WSIN [50]: (Gu et al. 2023) fully considered the information of the current aspect word interacting with the contextual information.

KDGN [51]: (Wu et al. 2023) combined domain knowledge, dependency annotation and syntactic paths to propose a dependency graph-based knowledge-aware network.

Comparison study

To demonstrate the performance of the SIASC model, the experiments evaluate it on both the AE task and the ASC task. We first analyze the experimental results of the AE task and then those of the ASC task.

The results of the AE task are presented in Table 3. The experiments reveal that the SIASC model outperforms the baseline models. Our model achieved accuracy rates of 84.11%, 86.65%, and 78.42%, as well as F1 scores of 77.14%, 85.90%, and 75.90% on the three datasets, respectively. The best-performing baseline is the KDGN model. Compared to the KDGN model, our proposed SIASC model achieved F1 score improvements of 0.29%, 0.19%, and 0.45%, respectively. The accuracy on the Restaurant and Twitter datasets improved by 0.71% and 0.73%, respectively. We attribute these improvements to two factors. On the one hand, the SIASC model employs the BiAffine model to parse sentence information, obtaining part-of-speech features, word features, and dependency features. The BiAffine model possesses the representational capability needed for accurate dependency parsing. On the other hand, converting text into three types of feature information benefits model training, particularly when dealing with datasets with limited resources. Compared to using word features alone, incorporating part-of-speech features and dependency features is more advantageous for extracting multi-word aspect terms.

The results of the ASC task are presented in Table 4. The SIASC model also demonstrates superior performance in the ASC task compared to the baseline models. Specifically, the SIASC (CDW) model achieved accuracy scores of 81.35%, 86.71%, and 76.56% on the three datasets, respectively. In comparison to the KDGN model, our model showed improvements in accuracy of 0.06%, 0.04%, and 0.37%, respectively. The KDGN model integrates domain knowledge and dependency features to construct a graph neural network for learning the syntactic structure of sentences, but it overlooks the part-of-speech features within the sentences. In contrast, our model makes full use of syntactic information, enabling more accurate extraction of aspect terms. Additionally, the Twitter dataset is sourced from online web reviews, which are informal and prone to sentence noise. In comparison to the KDGN model, our accuracy on this dataset improved by 0.37%, indicating that the SIASC model generalizes better on real web review data. The performance of the SIASC model can be attributed to the LCFS mechanism and the interactive learning method, and several factors stand out. Firstly, the LCFS mechanism effectively manages context words that are unrelated to aspect terms. This benefits the model in determining the sentiment polarity of aspect terms. Secondly, interactive learning establishes a crucial connection between the AE task and the ASC task. Information from the AE task proves beneficial in improving the performance of the model on the ASC task. Finally, the design of the SIASC model’s structure aids in reducing error propagation between the AE and ASC tasks. Notably, the CDW method is significantly more effective than the CDM method. This underscores the superiority of employing a weighted approach within the LCFS mechanism over the masking method.

To validate the performance of the SIASC model on sentences containing multi-word aspect terms, we also conducted comparative experiments and a confusion matrix analysis for multi-word aspect terms. The experimental results for the AE task on sentences with multi-word aspect terms are shown in Table 5. The SIASC model performs better than the other models on the Laptop, Restaurant, and Twitter datasets. Compared to the KDGN model, our model’s performance improves by 1.32%, 1.31%, and 1.76%, respectively, indicating that the model effectively uses syntactic features to extract multi-word aspect terms accurately. The confusion matrix results for the ASC task on multi-word aspect terms are shown in Fig. 6. On all three datasets, the SIASC model predicts the sentiment polarity of multi-word aspect terms more accurately than the other models. This is because our model adopts a unified structure that reduces the impact of error propagation between the two subtasks, and the LCFS mechanism helps determine the context of multi-word aspect terms, which improves the discrimination of sentiment polarity.
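
For readers unfamiliar with this kind of analysis, the following minimal Python sketch shows how a three-class confusion matrix and its accuracy can be computed from gold and predicted polarity labels. The label names, the `confusion_matrix` and `accuracy` helpers, and the five example predictions are illustrative assumptions; they are not taken from the SIASC code or from the reported results.

```python
LABELS = ["negative", "neutral", "positive"]  # assumed polarity label set


def confusion_matrix(gold, pred, labels=LABELS):
    """Count predictions: rows are gold labels, columns are predicted labels."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for g, p in zip(gold, pred):
        matrix[index[g]][index[p]] += 1
    return matrix


def accuracy(matrix):
    """Fraction of predictions on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


# Hypothetical polarity labels for five multi-word aspect terms
gold = ["positive", "negative", "neutral", "positive", "negative"]
pred = ["positive", "negative", "positive", "positive", "neutral"]

matrix = confusion_matrix(gold, pred)
for label, row in zip(LABELS, matrix):
    print(f"{label:>8}: {row}")
print("accuracy:", accuracy(matrix))
```

Off-diagonal cells show which polarities are confused with each other, which a single accuracy figure cannot reveal.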

Ablation study

To verify the effectiveness of the syntactic feature representation and LCFS processing in the SIASC model, we conducted an ablation study. In the AE task, we demonstrated the effectiveness of syntactic feature representation by progressively removing part-of-speech features and dependency features from the model. In the ASC task, we verified the effectiveness of LCFS by removing it from the model. The results of the ablation experiments are presented in Table 6 and Figs. 7, 8, and 9.

The results of the ablation experiments indicate that syntactic feature representation and LCFS processing both enhance the performance of the SIASC model. When both the part-of-speech features and the dependency features are removed, accuracy decreases by 2.43%, 1.53%, and 1.75%, respectively. The part-of-speech features and the dependency features are essential components of textual information, and leveraging this syntactic knowledge helps the model better understand the datasets. Removing the LCFS mechanism from the SIASC (CDM) model decreases accuracy by 0.93%, 0.98%, and 1.58%, respectively, while removing it from the SIASC (CDW) model decreases accuracy by 1.11%, 1.32%, and 1.80%. This indicates that the LCFS mechanism can significantly improve the model’s performance. Additionally, the experimental results show that the CDW method is superior to the CDM method. The CDW method resembles an attention mechanism in that it assigns appropriate weights to context words, whereas the CDM method uses masking to remove words from the context, which loses contextual information about the aspect term.
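
The difference between the two strategies can be sketched in a few lines of Python. The sketch assumes that the SRD of every token to the aspect term has already been computed, and for CDW it uses the linear decay \(1 - (\mathrm{SRD} - \alpha)/n\) from the LCF formulation of Zeng et al. [19], where n is the sentence length; whether the SIASC implementation uses exactly this decay is an assumption. The function names and the SRD values are hypothetical.

```python
def cdm_weights(srd, alpha):
    """Context dynamic mask: keep tokens within the threshold, zero out the rest."""
    return [1.0 if d <= alpha else 0.0 for d in srd]


def cdw_weights(srd, alpha):
    """Context dynamic weighting: tokens beyond the threshold are
    down-weighted in proportion to their distance instead of being removed."""
    n = len(srd)
    return [1.0 if d <= alpha else 1.0 - (d - alpha) / n for d in srd]


# Hypothetical SRD values for an 8-token sentence (0 = the aspect term itself)
srd = [0, 1, 2, 3, 4, 5, 6, 7]
alpha = 3

cdm = cdm_weights(srd, alpha)
cdw = cdw_weights(srd, alpha)
print("CDM:", cdm)
print("CDW:", cdw)
```

Each weight multiplies the hidden vector of the corresponding token. Under CDM every token farther than the threshold contributes nothing, while under CDW distant tokens still contribute with a reduced weight, which matches the explanation above of why masking loses context.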

The SRD threshold study

The SRD threshold directly affects the performance of the LCFS mechanism. To find the optimal SRD threshold \({\alpha }\) regarding the input value x for the LCFS mechanism on the three datasets, we conducted an SRD threshold experiment. The experiment compares the performance of the SIASC model on the ASC task with the SRD threshold set to each value from 1 to 6. The experimental results are shown in Table 7 and Fig. 10.

The experimental results demonstrate that the CDW method with an SRD threshold of 3 performs best on the Laptop dataset, with accuracy of 81.35% and 82.58% on the test set and validation set, respectively. Similarly, the CDW method with an SRD threshold of 3 performs best on the Restaurant dataset, achieving 86.71% and 86.95% accuracy on the test and validation sets, respectively. On the Twitter test set, the CDW method with an SRD threshold of 5 achieves the best accuracy of 76.56%, and a threshold of 4 comes close at 76.52%. On the Twitter validation set, the CDW method with an SRD threshold of 4 achieves the best accuracy of 77.05%. The SRD threshold experiment again shows that the CDW method is superior to the CDM method within the LCFS mechanism. Based on these results, we set the SRD threshold in the LCFS mechanism to 3 for the Laptop and Restaurant datasets and to 4 for the Twitter dataset.
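
The final settings are consistent with choosing, for each dataset, the threshold with the highest validation accuracy (for Twitter, 4 rather than the test-set optimum of 5). A minimal sketch of such a selection rule follows. The rule is an interpretation of the text rather than a documented procedure, the `select_threshold` helper is hypothetical, and the accuracy values are placeholders, not the values from Table 7.

```python
def select_threshold(val_accuracy):
    """Return the threshold with the highest validation accuracy.

    `val_accuracy` maps each candidate SRD threshold to a validation accuracy.
    Candidates are scanned in ascending order, so the smallest threshold wins ties.
    """
    return max(sorted(val_accuracy), key=lambda a: val_accuracy[a])


# Placeholder validation accuracies for thresholds 1..6 (NOT the paper's results)
placeholder_val_accuracy = {1: 0.70, 2: 0.72, 3: 0.75, 4: 0.75, 5: 0.73, 6: 0.71}

best_alpha = select_threshold(placeholder_val_accuracy)
print("selected SRD threshold:", best_alpha)
```

Selecting on the validation set rather than the test set keeps the reported test accuracy from being tuned on the data it is measured on.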

Attention visualization

To assess the impact of interactive learning on the model, we conducted a visualization study. Interactive learning in the SIASC model is based on the multi-head attention mechanism. Therefore, we can evaluate the functionality of interactive learning by visualizing the attention score matrix. The SIASC model includes 12 attention heads in its multi-head attention mechanism. We selected representative results for visualization in the experiments. The model takes the sentence “The food was delicious but the wine was terrible” as input, with “food” and “wine” as the aspect terms. The experimental results are presented in Figs. 11 and 12.

In Fig. 11, it is evident that the scores for the phrases “the food was delicious” and “the wine was terrible” in the sentence are not very high. However, the scores between the target words and the context words are relatively high. For example, “food” has higher scores with the words in “the food was delicious”, whereas its scores with the words in “the wine was terrible” are lower. This is because the LCFS mechanism can handle context-irrelevant words around the target word: contextually relevant words are retained, while irrelevant words are masked or assigned smaller weights. In Fig. 12, the score distribution in the score matrix after syntactic feature processing is less regular. However, there are high scores between key words in both score matrices. For instance, there is a high score for “food” with “delicious” and for “wine” with “terrible”. This confirms the impact of interactive learning on both score matrices. Interactive learning captures the differences between the two hidden layer vectors, further establishing their connection.
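
The matrices being visualized are attention score matrices. As a rough illustration, the Python sketch below computes scaled dot-product attention scores for a single head over four tokens of the example sentence. It is a simplification under stated assumptions: the SIASC model uses 12 heads with learned query and key projections, whereas here the queries and keys are the same hand-picked 2-dimensional toy vectors, chosen so that related words point in similar directions. None of the numbers are model outputs.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of logits."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_scores(queries, keys):
    """Scaled dot-product attention scores: softmax(Q K^T / sqrt(d)) row by row."""
    d = len(keys[0])
    scores = []
    for q in queries:
        logits = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        scores.append(softmax(logits))
    return scores


tokens = ["food", "delicious", "wine", "terrible"]
# Toy vectors picked by hand for illustration only (not learned representations)
vectors = [[2.0, 0.0], [1.5, 0.2], [0.0, 2.0], [0.2, 1.5]]

scores = attention_scores(vectors, vectors)
for token, row in zip(tokens, scores):
    print(f"{token:>9}: " + "  ".join(f"{s:.2f}" for s in row))
```

Each row sums to 1, and a heat map of the rows gives the kind of picture discussed above: with these toy vectors, “food” attends more to “delicious” than to “terrible”, and “wine” more to “terrible” than to “delicious”.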

Case study

In the case study, we selected the better-performing WSIN and KDGN models for comparison. The experimental results are shown in Table 8, which displays the performance of the three models on seven example sentences. To make the results easier to read, the aspect terms in the sentences are highlighted in red and blue. The results show that the SIASC model extracts multi-word aspect terms more accurately than the WSIN and KDGN models. For instance, the WSIN and KDGN models struggled to accurately extract “blond wood decor” in sentence 5 and “foie gras terrine with figs” in sentence 6, whereas the SIASC model accurately identified both of these complex multi-word aspect terms. Regarding the ASC task, the WSIN model misclassified the sentiment polarity of “hard disk” and “dinner special”, and the KDGN model had difficulty determining the sentiment polarity of “hard disk”. In contrast, our proposed SIASC model correctly determined the sentiment polarity in the example sentences. The results of this case study underscore our model’s effectiveness in addressing the challenge of multi-word aspect terms and its commendable performance in sentiment classification.

Conclusion

To comprehensively address both the AE and ASC subtasks in ABSA, we design a unified model based on an end-to-end framework, the SIASC model. The results of the comparison experiments and the case study indicate that the overall performance of the SIASC model is superior to that of the baseline models on the three benchmark datasets. Fully utilizing the syntactic feature information of the text helps the model extract multi-word aspect terms accurately. The results of the ablation experiments indicate that syntactic features and the LCFS component enhance the model’s performance. Additionally, attention visualization further validates the impact of the LCFS mechanism and interactive learning on the model. The SRD threshold experiment explores the optimal threshold setting for the LCFS mechanism. In future work, we plan to employ GNNs to learn syntactic structural features of text and to use a multi-task learning framework [52] to establish connections between multiple subtasks, advancing research on ABSA tasks.

Data availability

The Laptop 14, Restaurant 14, and Twitter datasets are publicly available; the URLs are listed in the Notes section.

Notes

The SIASC model code is available at: https://github.com/ZouWang-spider/AESC.

The BiAffine model is available at: https://github.com/chantera/biaffineparser.

The Stanford Parser is available at: https://nlp.stanford.edu/software/lex-parser.shtml.

The LCFS mechanism is available at: https://github.com/HieuPhan33/LCFS-BERT.

Our improved method is available at: https://github.com/ZouWang-spider/AESC/blob/main/LCFS.py.

The Laptop 14 and Restaurant 14 datasets are available at: http://alt.qcri.org/semeval2014/task4/.

The Twitter dataset is available at: http://goo.gl/5Enpu7.

References

Li J, Zhao Y, Jin Z, Li G, Shen T, Tao Z, Tao C (2022) SK2: Integrating Implicit Sentiment Knowledge and Explicit Syntax Knowledge for Aspect-Based Sentiment Analysis. In: Proceedings of the 31st ACM International Conference on Information & Knowledge Management, pp 1114–1123. https://doi.org/10.1145/3511808.3557452

Hu Z, Wang Z, Wang Y, Tan AH (2023) MSRL-Net: A multi-level semantic relation-enhanced learning network for aspect-based sentiment analysis. Expert Syst Appl 217:119492. https://doi.org/10.1016/j.eswa.2022.119492

Hu M, Liu B (2004) Mining and summarizing customer reviews. In: Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining (KDD), pp 168-177. https://doi.org/10.1145/1014052.1014073

Tunca S, Sezen B, Wilk V (2023) An exploratory content and sentiment analysis of the guardian metaverse articles using leximancer and natural language processing. Journal of Big Data 10(1):82. https://doi.org/10.1186/s40537-023-00773-w

Liu N, Shen B (2023) Aspect term extraction via information-augmented neural network. Complex & Intelligent Systems 9(1):537–563. https://doi.org/10.1007/s40747-022-00818-2

Mukherjee A, Liu B (2012) Aspect extraction through semi-supervised modeling. In: Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics (ACL), pp 339-348

Pota M, Ventura M, Catelli R, Esposito, (2020) An effective BERT-based pipeline for Twitter sentiment analysis: A case study in Italian. Sensors 21(1):133. https://doi.org/10.3390/s21010133

Zou W, Zhang W, Tian Z, Wu W (2023) A hybrid model for text classification using part-of-speech features. Journal of Intelligent & Fuzzy Systems 45(1):1–15. https://doi.org/10.3233/JIFS-231699

Hu Z, Wang Z, Wang Y, Tan AH (2023) Aspect Sentiment Triplet Extraction Incorporating Syntactic Constituency Parsing Tree and Commonsense Knowledge Graph. Cogn Comput 15(1):337–347. https://doi.org/10.1007/s12559-022-10078-4

Ma D, Li S, Zhang X, Wang H (2017) Interactive attention networks for aspect-level sentiment classification. In: Proceedings of the 26th international joint conference on artificial intelligence (IJCAI), pp 4068-4074. https://doi.org/10.48550/arXiv.1709.00893

Phan MH, Ogunbona PO (2020) Modelling context and syntactical features for aspect-based sentiment analysis. In: Proceedings of the 58th annual meeting of the association for computational linguistics (ACL), pp 3211-3220. https://doi.org/10.18653/v1/2020.acl-main.293

Wang Y, Huang M, Zhao L, Zhu X (2016) Attention-based LSTM for aspect level sentiment classification. In: Proceedings of the 2016 conference on empirical methods in natural language processing (EMNLP), pp 606-615

Shobana J, Murali M (2021) An efficient sentiment analysis methodology based on long short-term memory networks. Complex & Intelligent Systems 7(5):2485–2501. https://doi.org/10.1007/s40747-021-00436-4

Fan F, Feng Y, Zhao D (2018) Multi-grained attention network for aspect-level sentiment classification. In: Proceedings of the conference on empirical methods in natural language processing (EMNLP), pp 3433-3442. https://doi.org/10.18653/v1/D18-1380

Devlin J, Chang M W, Lee K, Toutanova K (2018) Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805. https://doi.org/10.48550/arXiv.1810.04805

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser L, Polosukhin I (2017) Attention is all you need. Advances in neural information processing systems

Dai H, Song Y (2019) Neural aspect and opinion term extraction with mined rules as weak supervision. arXiv preprint arXiv:1907.03750. https://doi.org/10.48550/arXiv.1907.03750

Song Y, Wang J, Jiang T, Liu Z, Rao Y (2019) Attentional encoder network for targeted sentiment classification. arXiv preprint arXiv:1902.09314. https://doi.org/10.48550/arXiv.1902.09314

Zeng B, Yang H, Xu R, Zhou W, Han X (2019) Lcf: A local context focus mechanism for aspect-based sentiment classification. Appl Sci 9(16):3389. https://doi.org/10.3390/app9163389

Scarselli F, Gori M, Tsoi AC, Hagenbuchner M, Monfardini G (2008) The graph neural network model. IEEE Trans Neural Networks 20:61–80. https://doi.org/10.1109/TNN.2008.2005605

Zhang C, Li Q, Song D (2019) Aspect-based sentiment classification with aspect-specific graph convolutional networks. In: Proceedings of the conference on empirical methods in natural language processing and the 9th international joint conference on natural language processing (EMNLP-IJCNLP), pp 4568-4578. https://doi.org/10.48550/arXiv.1909.03477

Huang B, Carley K (2019) Syntax-aware aspect level sentiment classification with graph attention networks. In: Proceedings of the conference on empirical methods in natural language processing and the 9th international joint conference on natural language processing (EMNLP-IJCNLP), pp 5469-5477

Li R, Chen H, Feng F, Ma Z, Wang X, Hovy E (2021) Dual graph convolutional networks for aspect-based sentiment analysis. In: Proceedings of the 59th annual meeting of the association for computational linguistics and 11th international joint conference on natural language processing (ACL-IJCNLP), pp 6319-6329. https://doi.org/10.18653/v1/2021.acl-long.494

Phan HT, Nguyen NT, Hwang D (2023) Aspect-level sentiment analysis: A survey of graph convolutional network methods. Information Fusion 91:149–172. https://doi.org/10.1016/j.inffus.2022.10.004

Shang L, Xi H, Hua J, Tang H, Zhou J (2023) A Lexicon Enhanced Collaborative Network for targeted financial sentiment analysis. Information Processing & Management 60(2):103187. https://doi.org/10.1016/j.ipm.2022.103187

Wu Z, Ying C, Zhao F, Fan Z, Dai X, Xia R (2020) Grid tagging scheme for aspect-oriented fine-grained opinion extraction. In: Proceedings of conference on empirical methods in natural language processing (EMNLP), pp 2576-2585

Xu L, Li H, Lu W, Bing L (2020) Position-aware tagging for aspect sentiment triplet extraction. In: Proceedings of the conference on empirical methods in natural language processing (EMNLP), pp 2339-2349

Zhang C, Li Q, Song D, Wang B (2020) A multi-task learning framework for opinion triplet extraction. In: Proceedings of the conference on empirical methods in natural language processing (EMNLP), pp 819-828

Li Y, Li Z, Zhang M, Wang R, Li S, Si L (2019) Self-attentive Biaffine Dependency Parsing. In: International joint conference on artificial intelligence (IJCAI), pp 5067-5073

Peng H, Xu L, Bing L, Huang F, Lu W, Si L (2020) Knowing what, how and why: A near complete solution for aspect-based sentiment analysis. In: Proceedings of the AAAI conference on artificial intelligence (AAAI), pp 8600-8607. https://doi.org/10.1609/aaai.v34i05.6383

Li X, Bing L, Li P, Lam W (2019) A unified model for opinion target extraction and target sentiment prediction. In: Proceedings of the AAAI conference on artificial intelligence (AAAI), pp 6714-6721. https://doi.org/10.1609/aaai.v33i01.33016714

Dozat T, Manning CD (2016) Deep biaffine attention for neural dependency parsing. arXiv preprint arXiv:1611.01734. https://doi.org/10.48550/arXiv.1611.01734

Chen Y, Zhuang T, Guo K (2021) Memory network with hierarchical multi-head attention for aspect-based sentiment analysis. Appl Intell 51:4287–4304. https://doi.org/10.1007/s10489-020-02069-5

Ismail HM, Belkhouche B, Zaki N (2018) Semantic Twitter sentiment analysis based on a fuzzy thesaurus. Soft Comput 22(18):6011–6024. https://doi.org/10.1007/s00500-017-2994-8

Zhang M, Li Z, Fu G, Min Z (2021) Dependency-based syntax-aware word representations. Artif Intell 292:103427. https://doi.org/10.1016/j.artint.2020.103427

Pontiki M, Galanis D, Pavlopoulos J, Papageorgiou H, Androutsopoulos I, Manandhar S (2014) SemEval-2014 task 4: Aspect based sentiment analysis. In: Proceedings of 8th international workshop on semantic evaluation (SemEval), pp 27-35

Dong L, Wei F, Tan C, Tang D, Zhou M, Xu K (2014) Adaptive recursive neural network for target-dependent Twitter sentiment classification. In: Proceedings of the 52nd annual meeting of the association for computational linguistics (ACL), pp 49-54

Ye H, Yan Z, Luo Z, Chao W (2017) Dependency-tree based convolutional neural networks for aspect term extraction. In: Advances in knowledge discovery and data mining: 21st pacific-Asia conference, pp 350-362. https://doi.org/10.1007/978-3-319-57529-2_28

Luo H, Li T, Liu B, Unge H (2019) Improving aspect term extraction with bidirectional dependency tree representation. IEEE/ACM Transactions on Audio, Speech, and Language Processing 27(7):1201–1212. https://doi.org/10.1109/TASLP.2019.2913094

Ma D, Li S, Wu F, Xie X, Wang H (2019) Exploring sequence-to-sequence learning in aspect term extraction. In: Proceedings of the 57th annual meeting of the association for computational linguistics (ACL), pp 3538-3547. https://doi.org/10.18653/v1/P19-1344

Hu M, Peng Y, Huang Z, Li D, Lv Y (2019) Open-domain targeted sentiment analysis via span-based extraction and classification. In: Proceedings of the 57th annual meeting of the association for computational linguistics (ACL), pp 537-546

Wang K, Shen W, Yang Y, Quan X, Wang R (2020) Relational graph attention network for aspect-based sentiment analysis. In: Proceedings of the 58th annual meeting of the association for computational linguistics (ACL), pp 3229-3238

Chen Z, Qian T (2020) Relation-aware collaborative learning for unified aspect based sentiment analysis. In: Proceedings of the 58th annual meeting of the association for computational linguistics (ACL), pp 3685-3694. https://doi.org/10.18653/v1/2020.acl-main.340

Liang Y, Meng F, Zhang J, Chen Y, Xu J, Zhou J (2021) A dependency syntactic knowledge augmented interactive architecture for end-to-end aspect-based sentiment analysis. Neurocomputing 454:291–302. https://doi.org/10.1016/j.neucom.2021.05.028

Yan H, Dai J, Ji T, Qiu X, Zhang Z (2021) A unified generative framework for aspect-based sentiment analysis. In: Proceedings of the 59th annual meeting of the association for computational linguistics and 11th international joint conference on natural language processing (ACL-IJCNLP), pp 2416-2429

Wu H, Zhang Z, Shi S, Wu Q, Song H (2022) Phrase dependency relational graph attention network for Aspect-based Sentiment Analysis. Knowl-Based Syst 236:107736. https://doi.org/10.1016/j.knosys.2021.107736

Zhang Z, Zhou Z, Wang Y (2022) SSEGCN: Syntactic and semantic enhanced graph convolutional network for aspect-based sentiment analysis. In: Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp 4916-4925. https://doi.org/10.18653/v1/2022.naacl-main.362

Liang B, Su H, Gui L, Cambria E, Xu R (2022) Aspect-based sentiment analysis via affective knowledge enhanced graph convolutional networks. Knowl-Based Syst 235:107643. https://doi.org/10.1016/j.knosys.2021.107643

Zhong Q, Ding L, Liu J, Du B, Jin H, Tao D (2023) Knowledge graph augmented network towards multiview representation learning for aspect-based sentiment analysis. IEEE Trans Knowl Data Eng 35(10):10098–10111. https://doi.org/10.1109/TKDE.2023.3250499

Gu T, Zhao H, He Z, Min L, Di Y (2023) Integrating external knowledge into aspect-based sentiment analysis using graph neural network. Knowl-Based Syst 259:110025. https://doi.org/10.1016/j.knosys.2022.110025

Wu H, Huang C, Deng S (2023) Improving aspect-based sentiment analysis with Knowledge-aware Dependency Graph Network. Information Fusion 92:289–299. https://doi.org/10.1016/j.knosys.2022.110025

Zou W, Zhang W, Wu W, Tian Z (2024) A Multi-task Shared Cascade Learning for Aspect Sentiment Triplet Extraction Using BERT-MRC. Cognitive Computation 1–18. https://doi.org/10.1007/s12559-024-10247-7

Acknowledgements

This work was supported by the Hubei Province Key Research Project (No. TA02002), the Hubei Provincial Central Leading Local Science and Technology Development Special Project (No. 2018ZYYD007), the Hubei Province Education Department Science and Technology Research Project (No. Q20201801), the Ph.D. Research Startup Fund Project of Hubei University of Automotive Technology (No. BK202004), and the Natural Science Foundation of Hubei Province (No. 2022CFB538).

Ethics declarations

Conflict of interest

On behalf of all the authors, the corresponding author states that there is no conflict of interest.

Code availability

The code is available at: https://github.com/ZouWang-spider/AESC.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zou, W., Zhang, W., Tian, Z. et al. A syntactic features and interactive learning model for aspect-based sentiment analysis. Complex Intell. Syst. 10, 5359–5377 (2024). https://doi.org/10.1007/s40747-024-01449-5

DOI: https://doi.org/10.1007/s40747-024-01449-5