Abstract

Network representation learning aims to map the relationship between network nodes and context nodes to a low-dimensional representation vector space. Directed network representation learning considers mapping directional of node vector. Currently, only sporadic work on direct network representation has been reported. In this work, we propose a novel algorithm that takes into account the direction of the edge with text’s attribute of the node in directed network representation learning. We then define the matrix based on in-degree of Laplacian and signless Laplacian for digraph, and it utilizes web page datasets from universities in the USA to evaluate the performance of vertex classification. We compare our algorithm with other directed representation learning algorithms. The experimental results show that our algorithm outperforms the baseline by over 20% when the training ratio ranges from 10 to 90%. We apply the in-degree-Laplacian and In-degree-signless-Laplacian to directed representation learning, which is one of the main contributions of this algorithm. Additionally, we incorporate text information through matrix completion in directed network representation learning and the experimental results show an increase in performance of up to 20% compared to the baseline, especially when the training ratio is 10%.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Network Representation Learning (NRL) is a useful tool that maintains the structure feature of a network in low-dimensional space. NRL [1] makes people better understand semantic relationships between vertices and the topology of the interactions the components, which has been prove effective in a various of network processing and analysis tasks such as node classification [2], recommendation systems [3], and network visualization [4]. NRL learns dense and structural characteristics and represents them in into low-dimensional space, effectively denoising or removing redundant information while preserving the essential structure. In real-world networks, network edges usually have directionality, and nodes often contain rich information. For example, the web pages on Internet are connected each other, forming a graph where web pages serve as vertices. These webpages typically contain rich text information, and the tasks like web-page information retrieval often involve high-dimensional and directed data. While web-page information retrieval has been addressed through web rank algorithm like PageRank [5, 6], vertex classification and clustering remain challenging in directed network. Zhou [7] proposed a semi-supervised learning algorithm for classification on directed graph, but it only focuses in digraph partition. Chen et al. [8] proposed an algorithm for vertex embedding in directed networks using random walk, but this work only considers directional feature in directed network representation. In the past, there have been many network representation learning solutions available for undirected networks, but the directionality of edge and rich node information have been less explored in directed NRL. Therefore, a natural idea is to learn directed network representations by considering both the network direction and text information. The edge direction representations is derived from directed network structure, while the text information of vertices provides additional information for directed NRL. Nevertheless, the directional feature of network and rich information are typically treated independently and concatenated as separate representations. In our algorithm, we propose an approach that leverages the directional features and text attributes in the digraph. Our algorithm utilizes in-degree-based Laplacian and signless Laplacian with vertex text information through induction matrix completion(IMC) [9]. One main contribution is the matrix definition of in-degree-based Laplacian and signless Laplacian for digraph. In our experiment, we compared baseline on four open directed network datasets. The classification [10] accuracy in these datasets outperforms baseline by maximum of 20% when the training ratio ranges from 10 to 90%. The experimental results show an advantage of up to 20% over the compared baseline, especially when the training ratio is 10%.

Related work

Related method

In the realm of homogeneous information networks, they can be divided into three types of representation learning methods: those that consider only vertices, those that consider only edges, and the third synthesizes information from both edges and vertices, supplemented by external descriptors such as labels and text. Regarding Network Representation Learning-based primarily on network architecture, computational complexity escalates when calculating vertex adjacency matrices. Some researchers proposed the Laplace matrix method [11], which is a spectral decomposition method that uses k-nearest neighbor kernel functions to learn high similarly of vertex representations. Inspired by the Word2vec algorithm [12] in natural language processing, through Perozzi et al. [13] proposed the DeepWalk algorithm. This algorithm randomly walks through all the nodes in the network, generating an ordered sequence of nodes. The closer the nodes are the higher the co-occurrence probability. The skip-gram model is then used to predict the sequence left and right the single node, and the node is represented by a learned vector. Node2vec [14] improved upon DeepWalk by introducing breadth-first search and depth-first search into the random walk sequence generation process using two parameters p and q. The LINE [15] algorithm preserves the first-order node similarity and the second-order neighbor similarity in the network and can effectively preserve both the local structure and global network structure. The SDNE algorithm [16] uses a semi-supervised deep neural network to model multiple nonlinear mappings for NRL. It retains local information from the Laplacian matrix and global information based on the unsupervised deep self-encoding learning.

NRL methods that combine edge and vertex with external information include the work by Tu et al. [17]. It proposed a semi-supervised network model MMDW, which is based on matrix decomposition and can learn node representations containing both network structure and vertex information. TADW [18] is another matrix decomposition-based representation learning method that incorporates text information of vertices. However, such algorithm like TADW and MMDW have a high computational cost, and they simply combine the node attributes, leading to a loss of semantic information in the network. Sun et al. [19]. proposed the CENE algorithm, which uses a logistic regression function to learn an extended network and optimizes the objective function using negative sampling. This algorithm captures both the network structure and the semantic information between the node and the content. Pan et al. [20] proposed a deep learning model TriDNR that combines three types of network information, namely, network structure, node content and node label. The random walk of TriDNR model retains the structural similarity between vertices, and a neural network is used to learn the correlations of between the nodes contexts. The node labels are also used as input to learn the label vector and the word vector, but the model is based on an undirected network.

Currently, there is relatively little research on representation learning methods base on in-degree Laplace matrix of directed networks.

Text-enhanced method

In the undirected network context, DeepWalk [13] generated an ordered sequence of vertices by random walking through all vertices. Given an undirected graph, V as the vertex set and E as the edge set, the DeepWalk objective function is as follows,

where V is a set of random walk sampling vertices. \(v_i\) is current central vertex within the sampling window. For a given length t, namely size of sampling window. The vertex pair with as the center \(v_i\) and \(v_j\) as the context vertex, reachable from \(v_i\) via step t. \(Pr(v_j|v_i)\) defined by the Softmax function as:

Bold v represent vector of vertices. Yang et al. [18] proves that DeepWalk is equivalent to the factorization matrix. The matrix \(M_{ij}\) is adjacent matrix obtained by random walk t step, traversing the entire network. Instead of using \(M_{ij}\) for computational efficiency, Yang approximately factorizes the matrix using \(M = (A+A^2)/2 \).

Yang proposes Text-Associated DeepWalk (TADW) algorithm, which incorporates a corresponding text feature matrix T. They propose TADW to learn representation of each vertex \(v_i \in V \) from both the network structure and the text features T. The TADW algorithm has a complexity of \(O(|V|^3)\) when t is large. In fact, DeepWalk is based on neural networks to avoid explicitly computation of the accurate matrix M.

Let the size of the matrix M be \(n \times n\) and T be the text features, the optimization problem is solved. Yang proposes objective function as follows,

Yang also uses the inductive matrix completion (IMC) algorithm proposed by Nagarajan and Dhillon [9]. Nagarajan and Dhillon propose the IMC algorithm, which hypothesizes an association matrix that is generated by applying feature vectors associated with its rows and columns to low-rank matrix \(W^TH\). Let \(M^{'}\) be observation matrix, we aim to recover \(W^TH\), where \(W\in {\mathbb {R}}^{N_g\times k}\) and \(H\in {\mathbb {R}}^{N_t\times k}\) have a rank \(k \ll m,n\). Minimizing \(W^TH\approx M^{'}\) is an optimization problem. However, solving a rank constraint optimization is always NP-hard. Therefore, the NP-hard problem is relaxed by using square loss function and adding two regular items. These two regular terms can limit overfilling of the data. The IMC algorithm incorporates more information from row and column units by including two feature matrices in the objective function. In the IMC algorithm, let \(x_i\in {\mathbb {R}}^{f_g}\) denote the gene feature vector of genes i, \(y_j\in {\mathbb {R}}^{f_t}\) denote the feature vector j for diseases, \(X\in {\mathbb {R}}^{N_g \times f_g} \) denote the training feature matrix of \(N_g\) genes, and let \(Y \in {\mathbb {R}}^{N_t\times f_t}\) denote as the training feature matrix of \(N_t\) disease. The IMC algorithm aims to recover a low-rank matrix using the observed entries from the genes–disease association matrix \(M^{'}_{i,j}\). Denote observed entries by \(M^{'}_{i,j} \in {\mathbb {R}}^{f_g \times f_t} \) in \(\Omega \).

Natarajan and Dhillon formalized the objective function:

where \(\varepsilon \) is a regularization parameter. one idea is inspired by the inductive matrix completion for the directed network representation is to incorporate the in-direction and the text features of a vertex to obtain better directed networks representations. Our algorithm obtains representation vector for the vertices of directed network using in-degree-Laplacian, in-degree-signless-Laplacian and text features.

Embedding algorithm of directed network

World Wide Web is the largest network for which topological information is currently available. It is a typical directed network dataset. Our algorithm uses digraph theory to model the web-page hyperlink of World Wide Web in Internet. The vertices of the web-page are the documents (webpages) and the edges are the hyperlinks (URL’s) that connect one document to another. Let G denote a digraph, and E denote an edge set, and V denote a finite vertex set. An arc of a digraph is an ordered pair vertex \((v_i,v_j)\). Each arc may have a positive weight w. The in-degree \(d^-_i\) of a vertex \(v_i\) for digraph is defined as \(d^-_i=\sum _{v_j,v_j\rightarrow v_i}w(v_i,v_j)\), where \(v_j\rightarrow v_i\) means \(v_j\) has a directed arc pointing to \(v_i\). On the digraph, when a Markov random walk through the digraph, a transition probability matrix is defined as \(P=[p(v_i,v_j)]_{v_i,v_j}\), where \(p(v_i,v_j)\) is transition probability, which means a walker random walk from the \(v_i\) vertex to the \(v_j\) vertex. Meanwhile, \(\sum _{v_i}p(v_i,v_j)=1,\forall v_j\). A Markov random walk is a stochastic process in which the next state of each vertex depends only on its current state. The stationary distribution of vertices in a Markov random walk is the probability distribution that describes the long-term behavior of the walker. The stationary distribution of vertices is the probability distribution to which the visit frequencies of each vertex converge when the walk reaches a steady state. If the digraph is irreducible, the digraph is connected, for the stationary distribution of each vertex can assume is \(\pi \) and \(\sum _{v_i}\pi _{v_i}=1\) [21]. The entries of transition probability matrix can be defined as \( p(v_i,v_j)=w(v_i,v_j)/d^-(v_i)\), which means that a random walker on a vertex jumps to its neighbors with a probability proportional to the edge weight.

Preliminaries

Since the achievements of research on directed graphs are seldom used in network representation, a natural idea of directed network representation is to convert directed edges to undirected edges [22, 23]. Because an undirected networks representation are simpler and easier to analyze than directed networks. However, this conversion can also lead to the loss of some information, such as the direction of the edges. Therefore, the decision of whether or not to convert a directed network to an undirected network depends on the specific needs and constraints of the problem.

Case 1

One widely used method is the \(A+A^T\) symmetrization method. Let \(A_U\) be denoted by undirected network’s adjacency matrix. This method involves adding the original directed network’s adjacency matrix A with its transpose \(A^T\) to create a symmetric adjacency matrix for an undirected network. Essentially, this means replacing each directed edge with an undirected one. However, this symmetrization method does not fully capture in and out edges on node similarity.

Case 2

The symmetrization based on random walk transforms the directed normalized cuts of a set of nodes in the generated symmetrized undirected network into the normalized cuts of the same set [24]. Specifically, the adjacency matrix of the digraph can be represented as \(A=(\Pi P + P^T\Pi )/2\), where P is the transition matrix of random walks and \(P^T\) is the transport matrix of the transition matrix. \(\Pi = \text {diag}(\pi _1, \pi _2, \ldots , \pi _n)\) is a diagonal matrix with the probability of staying at each node in the stationary state (stationary distribution). However, due to its dependency on the normalized cut criterion, the symmetrization method follows the concept of density-based clustering, making it challenging to identify easily other types of meaningful structures. In various social networks, it is difficult to identify the connections between different communities. For instance, members belonging to the same community, often interact with each other and share common interests. By identifying these communities, we can observe that their connections with each other are dense, with relatively fewer connections to other communities. Nevertheless, the connections between communities is of great importance in practice.

Case 3

The symmetric combination methods commonly utilize the adjacency matrix of a directed graph, specifically \(A^TA\), identify the common in-edges (the number of shared nodes being pointed to by two nodes) between pairs of nodes. Meanwhile, \(AA^T\) captures the common out-edges. This combination method [25] that takes into account in-degree and out-degree for digraph, recognizes the equal importance of both the in-degree and out-degree of nodes in communities. Then, the combination matrix is a symmetric matrix.

Case 4

A major characteristic of real-world networks is that they exhibit a power-law degree distribution [26]. This means that within a network, there are a few nodes with very high degrees, while the majority of nodes have lower degrees. In a directed network, the contribution of each node to the community will be normalized based on its node degree, which includes both in-degree and out-degree. To symmetrize the adjacency matrix of a digraph, we consider the contribution of the node degree. Let \(\alpha \) denote the contribution of the in-degree and \(\beta \) denote the contribution of out-degree of the node.

where

when \(\alpha =\beta =0.5 \), the Eq. (9) has

Where \(D_{+}\) denotes out-degree matrix and \(D_{-}\) denotes in-degree matrix. The above method is a representation for converting a directed graph into an undirected graph, and it is an equivalent representation of the network.

Case 5

Another method is to translate directed graph into a bipartite graph. We will not go into further details on this here.

Similar to the adjacency matrix, Laplacian matrix can also be applied using these network representation method. Just as combination Laplacian matrix. Equation (12) is defined as a combination of Laplacian Laplacian matrix by Chung [21].

Definition

Our directed NRL algorithm defines the relevant concept and considers the vertex’s in-degree in the digraph as follows:

Definition 1

in-degree-Laplacian matrix \(L^-\) of digraph G is defined as an \( | n | \times | n |\) matrix,

If \(i=j\), let \(d^-_{ij}\) denote in-degrees of the ith vertex, and if \(i\not =j\), (i, j)th entry value is \(-1\) of in-degree-Laplacian matrix in digraph.

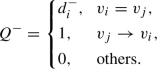

Definition 2

In-degree-signless-Laplacian matrix \(Q^-\) of digraph G is defined \( | n | \times | n |\) matrix,

where \(i=j\), \(d^-_{ij}\) be denoted by in-degrees of the ith vertex, and when \(i\not =j\), (i, j)th entry value is 1 of in-degree-signless-Laplacian matrix in digraph.

Embedding procedure

Next, we suppose A is Hermite matrix, thus we have \(A^H=A\).

Theorem 1

According to the characteristic equation of the matrix \(Ax=\lambda x\), where A is an n order Hermite matrix, and x is eigenvectors. We calculate the eigenvalues \(\lambda _1,\lambda _2,\ldots ,\lambda _n\) and eigenvectors \(x_1,x_2,\ldots ,x_n\), we have the orthogonal eigenvectors. Let \(\Lambda =\text {Diag}(\lambda _{1},\ldots ,\lambda _{n}),\Gamma =(x_1,x_2,\ldots ,x_n), x=\Gamma y.\) Then, we have the standard quadratic form of Hermite matrix,

Proof

\(\square \)

The adjacent matrix \(A^-\) of digraph is Hermitian and \(A^{-H}\) is the transpose matrix of \(A^-\). Through matrix operations, the diagonal of \(A^-A^{-H}\) is denoted as the out-degree of vertices, and the diagonal of \(A^{-H}A^-\) is denoted as the in-degree of vertices. Namely,

By Theorem 1, we obtain,

where \(\Lambda ^-\) is eigenvalue of in-degree based. According to Rayleigh quotient and Perron-Frobenius Theorem, a digraph has a transition probability matrix P and the Perron vector \(\pi \). For any \(f: V(G) \rightarrow {\mathbb {C}}\), we have an irreducible matrix \(A^-\) with non-negative entries that has a unique (left) eigenvector with all entries positive. Let \(\lambda _i^-\) denote the in-degree-based eigenvalue of the all positive eigenvector of transition probability matrix P. Therefore, a strongly connected digraph has a unique left eigenvector \(\pi _i\) with \(\pi (v_i)> 0\) for all \(v_i\), we have \(\Pi P = \Lambda ^-\Pi \). Let \(\Pi ^-_i\) be the stationary distribution of in-degree diagonal matrix, i.e. \(\Pi ^-=\text {diag}(\pi ^{-}_1,\pi ^{-}_2\ldots ,\pi ^{-}_n)\).

Next, the in-degree-based Laplacian standard quadratic form of directed network is derived as follows:

Proposition 1

By Theorem 1 and Proposition 1, we obtain representation learning of the directed network. The representations based on the in-degree-Laplacian and In-degree-signless-Laplacian is given by:

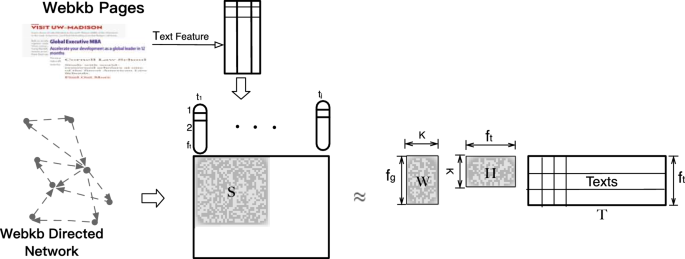

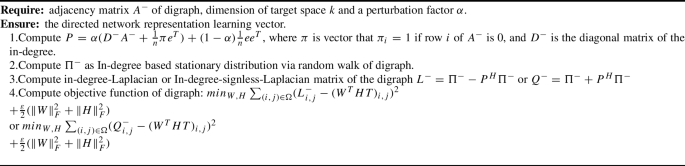

Our algorithm utilizes the IMC to supervised node classification on WebKb network database. IMC is to recover a low-rank matrix using the observed entries from the directional and text association matrix. The directional low-rank constraint problem is solved on S with rich text information. Our algorithm aims to minimize the following objective functions:

or

Let \(S^-\) denoted \(L^-\) or \(Q^-\), our algorithm framework is shown in Fig. 1, and and the pseudo code is given in Algorithm 3.3.

Our algorithm solves directed network embedding from in-degree-Laplacian matrix and in-degree-signless-Laplacian matrix with rich text information.

Complexity analysis

In this work, the complexity analysis of optimization problem is performed according to Yu [26]. For equation,

where W and HT are convex functions the complexity of each iteration involves minimizing W and HT. This is equivalent to a regularized least squares loss problem with k variables. If the variables k and \(|\Omega |\) are large, Conjugate Gradient iterative methods can be used. These methods offer a cheap updates and provide a good approximate solution within a few iterations. The method is particularly suitable for solving Eq. (10). Consequently, Yu’s techniques make the alternating minimization efficient enough to handle the large-scale problems, especially for the gradient calculations. Similarly, the complexity of our algorithm is equal to \(O(nnz(S)k +|V|f_tk+|V|k^2)\).

Experiments

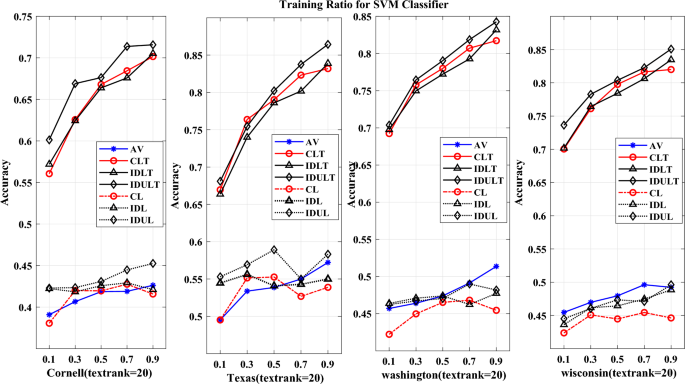

Our experiments utilize multi-class vertex classification to evaluate the performance of in-degree-based network representation learning for a directed graph. The final result is a low-dimensional representation of directed network datasets with rich text attributes. The experiments involve predicting the type of webpages based on web attributes, with SVM (Support Vector Machine) selected as the classifier. The proposed algorithm is evaluated using the baseline method in the experiments on the same publicly available web datasets.

Experiments settings

Datasets This dataset contains webpages of four universities: Cornell, Texas, Washington, Wisconsin, and labeled with whether they are professor, student, project, or other pages. The dataset of Cornell has 867 webpages, Texas 827 webpages, Washington 1205 webpages, and Wisconsin 1263 webpages.

Baseline CL Combina-Laplacian is the baseline method. The performance of node classification is between 36 and 53%, in the corresponding data set. Chen [8] proposed an algorithm embedding on the directed network based on Chung [21]. Chung proposed combination Laplacian of directed graph, \(L=\Phi -\frac{\Phi P+P^{*}\Phi }{2}\), where L is combination Laplacian matrix, P is the transition matrix of directed network, \(\Phi \) is a diagonal matrix of the stationary distribution, i.e. \(\Phi = \text {diag}(\phi _1,\ldots \phi _n)\) with entries \(\Phi (v, v) = \phi (v)\). Observing Chung’s definition of the combinatorial Laplacian matrix, it can be seen that the combinatorial Laplacian matrix is symmetric. In fact, the matrices of the digraph is asymmetrical, including adjacency matrix of directed graph, Laplacian matrix of directed graph, signless Laplacian matrix of directed graph. And our defined in-degree-Laplacian and in-degree-signless-Laplacian matrix are also asymmetrical.

Adjacent matrix average Adjacent matrix average method decomposes the adjacency matrix of the directed network, the accuracy ratio is 36% to 58%.

IDL In-degree-Laplacian is the Kirchhoff matrix factorization. It is a our proposed algorithm. The performance is at least 3 percentage better than the baseline method.

IDUL In-degree-signless-Laplacian is the signless Kirchhoff matrix factorization. The performance is at least 4 percentage better than the baseline method.

CLT Combina-Laplacian with text is our improved baseline method. It introduces vertex text information. It can be observed that the performance improvement is at least 20% better.

IDLT In-degree-Laplacian with text is the Kirchhoff matrix with text method. The improvement performance is at least 23 better.

IDULT In-degree-signless-Laplacian with text is signless Kirchhoff matrix with text method. The performance is at least 23 better than the baseline method.

Settings For all four university webpages datasets, we select \(k=80\), \(\lambda =0.2\), text attributes dimension \(T\in (10,20,30,50)\). For supervised classifier, linear SVM is implemented by liblinear [10]. The training ratio varies from 10 to 90% for linear SVM.

Results

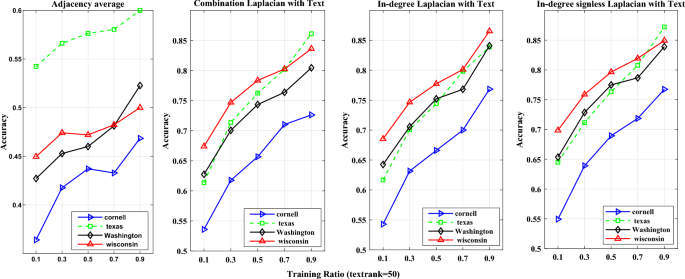

The application of directed network representation learning involves task of the vertex classification. In this work, we evaluate the vertex classification performance of the proposed DGRT (Directed Graph Representation with Text) algorithm on four university web page datasets in the USA in comparison with existing directed representation learning algorithms. The experimental results of the node classification are Tables 1, 2, 3 and 4, which display the classification accuracies for webpages of universities: Cornell, Texas, Washington, Wisconsin. The experimental simulation results of the accuracy rate for network node classification of difference training ratio and embedding parameter are shown in Tables 5, 6, 7 and 8. These results show that our algorithm outperforms the baseline by over \(20\%\) when the train ratio ranges from 10 to 90%. Based on in-degree (out-degree), it can more effectively capture the directional features of digraph and is more suitable for directed networks representation. Also, when we integrate text information through matrix completion in directed NRL experiments, we achieve an improvement of up to 20% compared to the baseline, particularly when the training ratio is 10%.

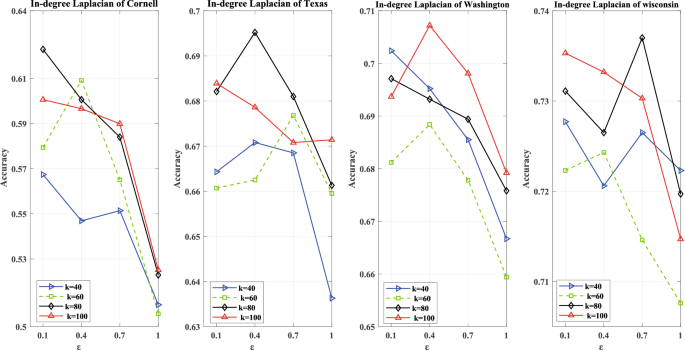

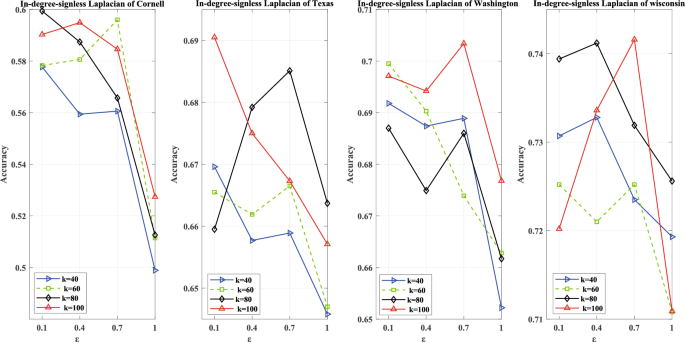

Parameter sensitivity The DGRT algorithm uses two hyper-parameters: the network representation vector and the regularization term. To analyze their impact on the learning performance of the network representation, we denote these hyper-parameters as the vector dimension k and the regularization term \(\epsilon \). These hyper-parameters can take different values. For the vector dimension k, we vary it from 40 to 100. For the regularization term \(\epsilon \), we vary it from 0.1 to 1. These variations are made when the training ratio is set to 10%. The corresponding vertex classification accuracies are shown in Figs. 2, 3, 4 and 5.

Conclusion

In this work, we propose a novel algorithm that utilizes the rich text features of vertices in directed network representation learning. Experimental results demonstrate its effectiveness and robustness across different training ratios using the WebKb datasets. The experiments show that the directed attribute is captured by the in-degree-Laplacian and the in-degree-signless-Laplacian matrix, outperforming the baseline method. Furthermore, the inclusion of text information enhances directed network representation learning. The experimental results show an advantage of up to 20% compared to the baseline method. Instead of simply concatenating features, our algorithm combines them to provide a novel approach to modeling for digraph. As for future work, a promising research direction is to explore online and distributed learning techniques for large-scale network data.

References

Xiao S, Wang S, Guo W (2023) SGAE: stacked graph autoencoder for deep clustering. IEEE Trans Big Data 9(1):254–266

Agibetov A (2023) Neural graph embeddings as explicit low-rank matrix factorization for link prediction. Pattern Recogn 133:108977

Lü L, Medo M, Yeung CH, Zhang YC, Zhang ZK, Zhou T (2012) Recommender systems. Phys Rep 519(1):1–49

Hamilton W, Ying Z, Leskovec J (2017) Inductive representation learning on large graphs. Neural Inf Process Syst 30:1024–1034

Brin S, Page L (1998) The anatomy of a large scale hypertextual web search engine. In: WWW Conf

Page L, Brin S, Motwani R, Winograd T (1998) The pagerank citation ranking: bringing order to the web. In: WWW Conf., pp 161–172

Zhou D, Bousquet O, Lal T, Weston J, Schölkopf B (2004) Learning with local and global consistency. In NIPS, pp. 321–328

Chen M, Yang Q, Tang X (2007) Directed graph embedding. In: Proceedings of IJCAI. pp 2707–2712

Natarajan N, Dhillon IS (2014) Inductive matrix completion for predicting gene-disease associations. Bioinformatics 30(12):60–68

Fan RE, Chang KW, Hsieh CJ, Wang XR, Lin CJ (2008) LIBLINEAR: A library for large linear classification. JMLR 9:1871–1874

Belkin M, Niyogi P (2002) Laplacian eigenmaps and spectral techniques for embedding and clustering. Adv Neural Inf Process Syst 14(6):585–59

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. In: 1st international conference on learning representations, Scottsdale, Arizona, USA

Perozzi B, Al-Rfou R, Skiena S (2014) Deepwalk: online learning of social representations. KDD pp 701–710

Grover A, Leskovec J (2016) node2vec: Scalable feature learning for networks. KDD. pp 855–864

Tang J, Qu M, Wang M, Zhang M, Yan J, Mei Q (2015) Line: large-scale information network embedding. In: WWW conference. pp 1067–1077

Wang D, Cui P, Zhu W (2016) Structural deep network embedding. In: ACM SIGKDD conference. pp 1225–1234

Tu C, Zhang W, Liu Z, Sun M (2016) Max-margin deepwalk: discriminative learning of network representation. In: IJCAI conference. AAAI Press, pp 3889–3895

Yang C, Liu Z, Zhao D, Sun M, Chang EY (2015) Network representation learning with rich text information. In: IJCAI conference. AAAI Press, pp 2111–2117

Sun X, Guo J, Ding X, Liu T (2016) A general framework for content-enhanced network representation learning. arXiv preprint arXiv:1610.02906

Pan S, Wu J, Zhu X, Zhang C, Wang Y (2016) Tri-party deep network representation. In: IJCAI conference. AAAI Press, pp 1895–1901

Chung F (2005) Laplacians and the Cheeger inequality for directed graphs. Ann Combin 9(1):1–19

Ou M, Cui P, Pei J, Zhang Z, Zhu W (2016) Asymmetric transitivity preserving graph embedding. In: ACM SIGKDD. pp 1105–1114

Katz L (1953) A new status index derived from sociometric analysis. Psychometrika 18:39–43

Shi J, Malik J (2000) Normalized cuts and image segmentation. Proceedings of IEEE CSCCVPR 731–737

Satuluri V, Parthasarathy, S (2011) Symmetrizations for clustering directed graphs. EDBT/ICDT Workshops. pp 343–354

Yu HF, Jain P, Kar P, Dhillon AIS. (2013). Large-scale Multi-label Learning with Missing Labels. International Conference on Machine Learning

Acknowledgements

This work was supported by National Science Foundation of China (Grant Nos.81960915).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, Y., Zhu, C., Chen, J. et al. Representation learning of in-degree-based digraph with rich information. Complex Intell. Syst. 10, 5379–5390 (2024). https://doi.org/10.1007/s40747-024-01435-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-024-01435-x