Abstract

Digital badges are being adopted widely in educational settings as an alternative assessment model, but research on their impact on motivation is scarce. The present study examined college undergraduates (n = 53) enrolled in first-year writing courses, where badges represented essential course outcomes. Participants were categorized as either high or low expectancy-values, and intrinsic motivation to earn badges was measured repeatedly during the 16-week semester. Participants’ attitudes toward digital badges also were investigated. Findings reinforced previous research that digital badges function differently according to the type of learner. Results indicated a generally positive view of badges in English courses, though levels of intrinsic motivation to earn the badges increased for high expectancy-value learners only. It is suggested that incorporating digital badges as an assessment model benefits learners who have high expectations for learning and place value on learning tasks, but badges also could disenfranchise students with low expectancy-values. Digital badges are viable as assessment tools but heavily dependent upon individual learner types.


Introduction

The use of digital badges is trending upward in higher education (Filsecker and Hickey 2014; Young 2012). Originating from game-based learning environments and massive open online courses (MOOCs), badges are used in a variety of contexts, including recognizing skill-based competencies, participatory awards, professional development, and digital portfolios. Educational badges are “visible proof of some quality of participation and contribution that previously wasn’t even defined” and this learning framework could indeed be a “tipping point” for traditional assessment (Davidson 2011, para. 2). Used in the context of undergraduate English composition courses, where literacy competencies are sometimes difficult to measure quantifiably and where instructor pedagogy varies widely, badges function not as simple motivators, but as pedagogical devices to objectively and uniformly assess specific writing and critical reading skills, ultimately providing the learner with an authentic signifier of learning.

The Coastal Composition Commons (CCC) is an online badge program that was developed in response to the researchers’ institutional findings that undergraduates often exhibited a deficiency in academic literacy skills critical to student success. Programmatic assessments from the 2011–2012 and 2012–2013 academic years indicated that learners needed improvement in three skills, specifically: analysis, critique, and synthesis of sources. The CCC translated each of these skills (and others) into instructional modules that underscore key principles and strategies necessary for effective composition. Further, this multimodal composition program shifted the curricular structure of the two required courses in the first-year composition experience from three to four credit hours. The CCC is a common digital space that provides continuity in content across all first-year composition courses without compromising instructor flexibility. The online modules are built around a system of badges that involves both instruction and interactive assignments and that signifies a learner’s understanding of the material. In addition, the CCC aimed to better prepare students for the academic writing which they will be asked to complete for future classes. This level of preparedness was also meant to aid retention efforts. Accordingly, the goals of the digital badge initiative were to:

- Develop academic and literate practices
- Establish a common composition experience
- Implement a multimodal approach to teaching composition
- Increase learner retention in first-year composition courses

The CCC implemented digital badges specifically for assessing competencies in literacy skills that aligned with existing learning outcomes in first-year composition courses, and, as such, badges were viewed as a fine-grained feedback and high-stakes assessment tool. Digital badges served as assessment, but also were designed to support learner motivation. While research on digital badging and motivation is increasing, to date there has been little research on the relationship between digital badges as assessment instruments and motivation in a higher education classroom. The purpose of this study was to investigate the effects of a digital badge system, primarily used as an assessment tool, on three aspects of undergraduate learners: levels of intrinsic motivation, expectancy and value of learning, and general attitudes toward digital badges. Overall, we sought to better understand the pedagogical value of badges in composition courses.

Digital badges

The Humanities, Arts, Science, and Technology Alliance and Collaboratory (HASTAC) defines a digital badge as a “validated indicator of accomplishment, skill, quality, or interest, that can be earned in many learning environments.” A digital badge can differ from analog badges, such as Boy Scout merit badges, in that it can contain metadata which details information about the learner, the badge issuer, and the evidence that was submitted in order to earn the badge. Ostensibly, a badge is a digital image that is distributed to a learner once he or she has demonstrated that the criteria for earning the badge have been met. The image is usually designed to reflect an accomplishment being recognized or the institution awarding the badge. Upon receipt of a badge, earners may choose to share their badge collection to an online portfolio host such as Credly or Mozilla Backpack as well as any online social media outlet or personal website. Ahn et al. (2014) identify three categories for badge uses: (1) badges as motivators, (2) badges as pedagogical tools, and (3) badges as signifiers or credentials. Recently, proponents of digital badges postulated that “well-designed badges can serve as signifiers of what knowledge and skills are valued, guideposts to help learners plan and chart a path, and as status mechanisms in the learning process” rather than simple tokens of participation or affirmation (Ahn et al. 2014, p. 4).
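The kind of metadata described above (learner, issuer, and evidence) can be pictured as a small record attached to the badge image. The following is a minimal sketch only: the class name, field names, and example values are hypothetical and are not taken from any badge platform or specification.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class BadgeAward:
    """Hypothetical record for one awarded badge (illustrative field names)."""
    badge_name: str    # the accomplishment being recognized
    earner: str        # information about the learner
    issuer: str        # the institution or program awarding the badge
    evidence_url: str  # the work submitted in order to earn the badge
    criteria: str      # what the learner had to demonstrate


# All values below are invented for illustration.
award = BadgeAward(
    badge_name="Synthesis",
    earner="learner@example.edu",
    issuer="Example University Writing Program",
    evidence_url="https://example.edu/submissions/123",
    criteria="Synthesize multiple written sources in one paragraph.",
)

# A plain dictionary is one convenient form for sharing or storing the record.
metadata = asdict(award)
```

Keeping the record immutable (`frozen=True`) reflects the idea that an issued badge is a fixed claim about past work, although a real system might well choose otherwise.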

A badge system provides both the learning and technical infrastructure that delivers badges to learners for meeting common benchmarks and standards. The MacArthur Foundation (http://www.macfound.org/programs/digital-badges/), a sponsor of many digital badge initiatives, defines a badge system as an “assessment and credentialing mechanism that is housed and managed online. Badges are designed to make visible and validate learning in both formal and informal settings, and hold the potential to help transform where and how learning is valued.” A typical badge pathway includes instructional modules, usually self-paced, that require submission of an artifact to be assessed by a teacher, or, in some cases, peers.

The ecosystem for using badges is typically rooted in one of two assessment models: merit badges and gaming achievements, where the former model acknowledges specific knowledge and skills in informal learning environments, and the latter model recognizes learning achievements gained through pursuit of other learning outcomes (Abramovich et al. 2011). In educational settings, badges often embrace a hybrid of the two assessment models in an attempt to recognize learning (both formally and informally) and motivate the learner through game-like encouragement. A badge system can vary by design; learners may be required to satisfy multiple tasks before earning a badge, or a badge may represent each achievement. Some systems may allow learners complete control of the instructional sequence, and other system designs may require learners to follow a prescribed learning pathway. Regardless of how digital badges are designed and implemented, their effects on learner achievement and intrinsic motivation remain largely unclear.

Intrinsic motivation

With regard to motivation, no single maxim applies to every learning opportunity. Prior studies on badge-based learning environments are scarce but have indicated that the impact on motivation depends upon the background of the learner as well as the type of accomplishment being validated by the badge (Abramovich et al. 2013). We suggest that this dependency partly reflects the ongoing debate over how motivation in educational settings is affected by the use of external motivators. Some studies posit that external rewards have a negative effect on academic performance and subsequent intrinsic motivation, becoming extrinsic to learning motivation (Covington 2000; Deci et al. 2001); others see external motivators as having powerful benefits for learning (Lei 2010; Lowman 1990).

Ryan et al. (1983) differentiate tangible rewards as task-noncontingent, task-contingent, or performance-contingent. In our implementation, learners were required to earn badges as part of the course requirements, and so the badges can be considered task-contingent rewards, also referred to as completion-contingent rewards. However, because learners had to demonstrate an expected level of performance to earn each badge, these rewards should be considered performance-contingent rewards, or “rewards given explicitly for doing well at a task or for performing up to a specific standard” (Deci et al. 2001, p. 11). While some digital badges could be thought of as extrinsic incentivizers, the badges in this study were implemented as assessments of course learning outcomes. The badges were designed to ensure consistent instruction for learning objectives, provide clear feedback, and function as a targeted evaluation tool.

Consequently, measuring learners’ motivational goal orientation is necessary when evaluating the effectiveness of digital badges. A learner might view them as task-contingent, or “rewards that were offered for doing an activity that was easy enough that everyone could do it, so everyone got the same reward” (Ryan et al. 1983, p. 739). In this sense, the learner’s lack of intrinsic motivation to earn the digital badges might result in lower academic performance, thus becoming a self-fulfilling prophecy. In contrast, a learner who has strong intrinsic motivation to earn the digital badges and perceives them as performance-contingent might achieve higher academic performance. Alongside understanding motivational orientation, it also might be worthwhile to acknowledge the learner’s pre-existing expectations for success and valuation of the learning activity.

Expectancy-value

Expectancy-value theory posits that several factors contribute to a learner’s source of academic motivation, which can be represented as (1) a learner’s expectation for success, and (2) the value that the learner places on the goal (Wigfield 1994). Therefore, we applied expectancy-value theory to measure learner motivational orientation prior to their experience with the badge system. Expectancy-value can indicate the level of a learner’s intrinsic motivation to learn, which is critical to academic achievement (Bandura 1993). A learner’s expectation to learn can be task-contingent (e.g., I’m good at reading) or domain-contingent (e.g., I’m good at math). Valuation of the learning goal can be related to identity (e.g., I’m a writer) or intrinsic value (e.g., Writing is important to me). In combination, a learner’s expectation and values related to a learning goal will predict future performance, choices, and persistence (Wigfield and Eccles 2000). Because one’s expectancy-value orientation is critical to understanding his or her subsequent academic performance, we suggest that expectancy-value theory is appropriate for differentiating how learners might relate to badges. For example, a learner with a high expectation to be successful in English might feel validated by earning a badge. On the other hand, earning a badge might devalue the learning experience if the badge is viewed simply as an external reward rather than a performance assessment. Because of this, expectancy-value theory is not the only appropriate motivation theory for measuring the impact of badges. In fact, it cannot illuminate by itself whether learners become directly motivated to earn badges. It is entirely possible that a learner might want to earn a badge but not have it impact their expectation of learning or their valuation of what they are learning. Consequently, expectancy-value was used to categorize participants as either high or low expectancy-value learners, and a different instrument measured actual motivation toward earning badges.

Research method and background

Materials

Coastal Composition Commons

The Coastal Composition Commons (http://ccc.coastal.edu/) badge program was launched at the start of the Fall 2014 semester as a programmatic initiative meant to unify learners’ experiences in the first-year composition classroom. The CCC was developed jointly by English faculty members at Coastal Carolina University in response to institutional assessment findings that learners were exhibiting deficiencies in academic reading and writing competencies after their first-year composition courses: English 101: Composition and English 102: Composition and Critical Reading. The student learning outcomes for these two courses are as follows:

1. Reading: To demonstrate the ability to comprehend and analyze language, you will need to show that you can read with understanding and purpose by doing the following: (1) Integrate quotations smoothly and appropriately into your writing, (2) Summarize and paraphrase sources accurately, and acknowledge (cite) your sources honestly, and (3) Synthesize multiple written sources, using several sources to support one point or discussing likenesses and differences among several sources.

2. Writing: To demonstrate the ability to express yourself clearly and effectively, you must fulfill the following expectations for successful writing: (1) Establish a main point, a focus, or an argument (a thesis), (2) Provide supporting reasons or evidence, (3) Organize and structure the project logically, (4) Employ varied sentence structure, effective diction, and an engaging style, and (5) Conform to conventional mechanics, spelling, and grammar.

3. Thinking critically: To demonstrate the ability to comprehend, analyze, and critically evaluate information, you must use critical thinking in the following ways: (1) Choose appropriate, reliable written sources, (2) Respond to and comment on written sources, and (3) Critique written sources.

These outcomes were translated into eight individual digital badges. Table 1 illustrates each of the badges and its corresponding learning objective. All learners enrolled in the first-year composition courses (n = 2326) attempted to earn each of the badges, as the badges accounted for 25 % of the overall course grade. Each badge took learners approximately an hour to complete, and so the addition of the CCC badge program to all first-year composition courses added a fourth credit hour to the traditionally three-credit-hour courses.

The CCC operates as a standalone website powered by a combination of WordPress, Credly, and BadgeOS plugins. Although the site itself is open access, learners and faculty must log in with their university credentials in order to submit and review materials. The decision to build the program as an independent website rather than embedded in the university LMS was due to the variation in the extent to which instructors use the LMS as well as a number of instructors who employ an alternative LMS in their courses. Also, a mobile-friendly design was of high importance, given the number of instructors who implement a bring-your-own-device model in their classrooms and the learners who access course content via a smartphone or tablet. Figure 1 shows the CCC as viewed from a mobile device.

In its inaugural semester, the CCC was implemented by all 122 individual course sections of English 101 and English 102, which comprised 63 different faculty members and over 2300 learners. Each professor decided the sequencing and pacing of the badge content for his or her course, allowing learners to progress through each badge module at their own pace, usually outside of class. The badge pages resembled a chapter from a digital textbook with embedded multimedia including interactive maps, infographics, narrated videos, faculty interviews, and audio files. All badges followed a consistent instructional design: introduce the skill (e.g., synthesizing), provide multiple examples of the skill in use, and prompt the learner with a writing assignment requiring demonstration of competency. Upon submission, the course professor evaluated the writing assignment, and the badge was either ‘approved’ or ‘denied’ according to the quality of the learner’s submission. In most cases, learners were allowed two submissions per badge so that if the professor found the first submission to be inadequate, the submission would be marked ‘denied,’ and the learner could submit a revised attempt according to specific feedback. Once a submission was evaluated, the learner received a notification email, which enclosed the digital badge if approved, or a comment explaining why the initial submission had been denied. Upon approval, the digital badge was sent automatically to the learner’s “Badge Profile” page, located on the CCC site, which displayed a collection of earned badges. Learners uploaded 17,674 badge submissions during the four-month period, 10,976 of which were approved.
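The submit, review, and resubmit cycle described above can be summarized in a few lines of code. This is a minimal sketch under stated assumptions: the function name and return format are hypothetical, and the two-attempt limit, which the text says applied "in most cases," is treated here as a fixed rule. It does not describe how the CCC site itself is implemented.

```python
MAX_ATTEMPTS = 2  # assumption: the "two submissions per badge" rule applied uniformly


def review_badge(decisions):
    """Return the outcome for one badge.

    decisions: instructor verdicts in submission order, each either
    'approved' or 'denied'. Verdicts beyond MAX_ATTEMPTS are ignored.
    """
    considered = decisions[:MAX_ATTEMPTS]
    for attempt, verdict in enumerate(considered, start=1):
        if verdict == "approved":
            # The badge is issued as soon as one submission is approved.
            return {"earned": True, "attempts": attempt}
    # Every allowed submission was denied (or none was made).
    return {"earned": False, "attempts": len(considered)}


first_try = review_badge(["approved"])
after_revision = review_badge(["denied", "approved"])
not_earned = review_badge(["denied", "denied"])
```

Under this reading, a learner whose first submission is denied still earns the badge if the revised submission is approved, which matches the feedback-then-revise intent described in the text.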

Participants

Fifty-three participants were derived from a population of undergraduate college students originating from one mid-sized university (student body population approximately 10,000) in the mid-Atlantic region. These learners were enrolled in either English 101: Composition or English 102: Composition and Critical Reading, as part of a first-year composition program. Although there were over 2300 students enrolled in first-year composition courses, voluntary participation in this study required completion of four surveys across 16 weeks, which predictably diminished the final number of qualified participants (n = 53). At the conclusion of the semester, an additional survey measuring general attitudes toward earning badges was distributed to all students enrolled in either English 101 or English 102. This one-time Likert-type survey yielded a larger but different sample (n = 202), which included many but not all of the original study participants.

Measures

Learner expectancy-value was self-reported using the scale developed by Wigfield and Eccles (2000). This 11-item scale was divided into three constructs: ability belief items; expectancy items; and usefulness, importance, and interest items. The alpha was .895 (see Appendix 1). The median score from this survey was used to split participants into two groups, ‘low expectancy-value’ or ‘high expectancy-value’ learners, for the purposes of examining reactions to badges. We hypothesized that learners with high expectancy-value beliefs would have different reactions to the badges than learners with lower expectancy-value beliefs.

The Intrinsic Motivation Inventory (IMI) was developed by McAuley et al. (1989) and was used here to measure levels of intrinsic motivation to earn digital badges across four different dimensions: interest-enjoyment, perceived competence, effort-importance, and tension-pressure. The IMI was administered three separate times during the course of the 16-week semester, and participants had to complete all three administrations. Although the original scale was 16 items, two items were omitted for this study because they were considered irrelevant to the aim of this study. The remaining items were phrased to measure individual intrinsic motivation with regard to digital badges. The revised scale was 14 items total (see Appendix 2). Cronbach’s alpha was .806, .849, and .801 for each of the three iterations, respectively.
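For readers unfamiliar with the reliability coefficient reported for each instrument, the sketch below shows the standard Cronbach's alpha formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores). The response matrix is invented toy data with three items and five respondents; it is not the study's data, and the function name is hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per respondent)."""
    k = len(responses[0])                 # number of items on the scale
    items = list(zip(*responses))         # transpose: one tuple of scores per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Toy data only (hypothetical 3-item scale, 5 respondents).
toy_responses = [
    [5, 4, 5],
    [3, 3, 4],
    [4, 4, 4],
    [2, 3, 2],
    [5, 5, 4],
]
toy_alpha = cronbach_alpha(toy_responses)
```

Values closer to 1 indicate that the items vary together across respondents, which is the sense in which the reported alphas above .80 suggest internally consistent scales.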

Learner attitudes toward the badges were measured using a badge opinion survey previously utilized by Abramovich et al. (2011). The wording of questions was modified slightly to apply specifically to English courses, and three additional open-ended questions were added to the survey. The instrument was delivered to all undergraduates in first-year composition courses and was a single measurement; consequently, the data set represented a larger but different sample population than reflected in the repeated measures of the IMI. The alpha was .870 for the 16 Likert-type items (see Appendix 3).

Phone interviews also were conducted to collect participants’ attitudes toward digital badges and responses were qualitatively analyzed for emerging themes using a grounded theory approach.

Procedure

A within-subjects pre-experimental design was used with 53 college undergraduates enrolled in two required first-year composition courses: English 101: Composition and English 102: Composition and Critical Reading. As part of the requirements for the first-year composition courses, the participants completed the self-paced badge assignments outside of the classroom. In addition to the course expectations, participants in this study completed the expectancy-value survey at the beginning of the semester, and the Intrinsic Motivation Inventory at three different time points throughout the semester (at the beginning, midpoint, and conclusion of the semester) in an effort to capture more accurate data through repeated measures. A badge opinion survey was distributed to all students enrolled in the first-year composition program at the end of the 16-week fall semester; this one-time Likert-type survey yielded a larger sample that included most but not all of the original study participants.

Research questions

1. How does earning digital badges affect learners’ intrinsic motivation to earn badges?

2. How are learners’ motivations to earn badges related to levels of expectancy-value beliefs?

3. What are learners’ attitudes toward earning digital badges in their English courses?

Results

Table 2 presents the overall mean results for each of the measures.

How does earning digital badges affect learners’ intrinsic motivation to earn badges?

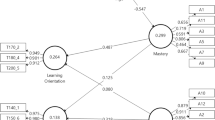

A repeated measures ANOVA with a Greenhouse-Geisser correction determined that mean intrinsic motivation scores for badges (IMI) differed significantly across the three iterations (F(1.965, 102.18) = 5.629, p < .005). Post hoc tests using the Bonferroni correction revealed a statistically significant reduction in motivation from the beginning to the middle of the semester (67.25 ± 7.13 vs. 63.51 ± 8.85, respectively; p = .006). While we did observe an increase in motivation to earn badges from the middle to the conclusion of the semester (63.51 ± 8.85 vs. 64.38 ± 11.09, respectively), it was not a statistically significant increase (see Fig. 2). Therefore, it can be concluded that participants experienced a statistically significant decrease in intrinsic motivation for badges during the first half of the semester, and that motivation then stabilized for the remainder of the semester.
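The fractional degrees of freedom in the F statistic follow from the Greenhouse-Geisser correction, which multiplies both uncorrected degrees of freedom by an estimate of sphericity (epsilon). The sketch below is a consistency check only. Epsilon is not reported in the text; it is back-calculated here from the reported numerator degrees of freedom, which is an assumption of this example.

```python
n_participants = 53   # reported sample size
n_iterations = 3      # the IMI was administered three times

# Uncorrected degrees of freedom for a one-way repeated measures ANOVA.
df_effect = n_iterations - 1                          # 2
df_error = (n_iterations - 1) * (n_participants - 1)  # 104

# Assumption: epsilon is inferred from the reported corrected df (1.965),
# not taken from the source.
epsilon = 1.965 / df_effect

corrected_df_effect = epsilon * df_effect
corrected_df_error = epsilon * df_error
```

With these inputs the corrected error term comes out at about 102.18, in line with the reported F(1.965, 102.18), and an epsilon this close to 1 indicates only a slight departure from sphericity.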

How are learners’ motivations to earn badges related to levels of expectancy-value beliefs?

Table 3 presents the overall mean results for the high and low expectancy-value groups for each of the iterations of the Intrinsic Motivation Inventory.

A one-way between-subjects ANOVA was conducted to compare the levels of intrinsic motivation to earn badges in learners with high and low expectancy-value measures. The median score from the expectancy-value scale was used to categorize participants into two groups: high (>55) or low (≤55) expectancy-value learners. There was a significant effect of expectancy-value group on intrinsic motivation to earn badges at the p < .05 level [F(1, 51) = 6.42, p = .014], based on the overall mean score on the IMI for badges.
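The median split described above can be illustrated in a few lines. The cutoff of 55 and the rule that scores equal to the cutoff fall in the low group come from the text; the function name and the list of scores are hypothetical and are used only to show the mechanics.

```python
from statistics import median


def expectancy_group(score, cutoff=55):
    """Classify one expectancy-value score: high if > cutoff, low if <= cutoff."""
    return "high" if score > cutoff else "low"


# Invented scores for illustration; not the study's data.
example_scores = [48, 52, 55, 55, 57, 60, 63]
example_cutoff = median(example_scores)

groups = [expectancy_group(s, cutoff=example_cutoff) for s in example_scores]
n_low = groups.count("low")
n_high = groups.count("high")
```

Because scores tied at the median are assigned to the low group, a median split does not necessarily produce two groups of equal size.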

A Pearson product-moment correlation coefficient was computed to assess the relationship between the learners’ expectancy-values and their mean of the three iterations of the intrinsic motivation ratings. The analysis was significant, r(53) = .420, p < .002. Overall, there was a moderate, positive correlation between learners’ expectancy-values and subsequent motivation ratings. Higher expectancy-values were positively correlated with higher levels of intrinsic motivation to earn badges. This finding suggested that learners who placed higher expectations and more value on the learning tasks possessed higher levels of motivation to earn digital badges.
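The significance of a Pearson correlation can be checked by converting r to a t statistic, t = r·√(n − 2) / √(1 − r²). The sketch below applies that standard conversion to the reported coefficient. It assumes the 53 in "r(53)" is the number of participants, so that the test has n − 2 = 51 degrees of freedom; that reading is an assumption of this example, and the function name is hypothetical.

```python
import math


def t_from_r(r, n):
    """Convert a Pearson r based on n paired observations to (t, df)."""
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1 - r ** 2)
    return t, df


# Reported coefficient; n = 53 is assumed to be the sample size.
t_stat, df = t_from_r(0.420, 53)

# Proportion of variance the two measures share.
shared_variance = 0.420 ** 2
```

Under that assumption the coefficient corresponds to a t of roughly 3.3 on 51 degrees of freedom, and the two measures share a little under 18% of their variance.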

To further unpack the relationship between expectancy-value and motivation to earn badges, we performed independent samples t tests to compare levels of intrinsic motivation to earn digital badges in the high and low expectancy-value groups. There was a significant difference in the motivation scores between the two groups on the first and third iteration of the IMI, which were administered at the beginning and end of the semester, respectively. On the first iteration of the survey, the high expectancy-value learner group (M = 69.72, SD = 6.77) reported significantly higher levels of motivation compared to the low expectancy-value learner group (M = 65.04, SD = 6.81); t(51) = 2.51, p = .015. Likewise, on the third and final iteration of the survey, the high expectancy-value learner group reported significantly higher levels of motivation (M = 68.52, SD = 10.36), compared to the low expectancy-value learner group (M = 60.68, SD = 10.55); t(51) = 2.72, p = .009. Figure 3 illustrates the differences between the two groups on each of the three iterations of the Intrinsic Motivation Inventory. Taken together, these results indicated that learners with high levels of expectancy-value reported higher levels of intrinsic motivation compared to those with lower expectancy-values with regard to earning digital badges, but only at the beginning and end of the semester. These findings indicated that when learners earned the digital badges, they reported higher levels of intrinsic motivation to continue earning badges when they had higher expectations for learning and placed more value on the learning tasks.
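The reported t values can be approximately reproduced from the group means and standard deviations with the pooled-variance formula. The group sizes are not stated in the text; the sketch below assumes 25 high and 28 low expectancy-value learners, a split inferred only because it reproduces the reported overall means (67.25 and 64.38) and the 51 degrees of freedom. Treat those sizes, and the function name, as assumptions of this example.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Independent samples t test (equal variances) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m1 - m2) / se
    cohen_d = (m1 - m2) / math.sqrt(pooled_var)  # standardized mean difference
    return t, df, cohen_d


N_HIGH, N_LOW = 25, 28  # assumption: inferred from reported means, not stated in the text

# First IMI iteration (beginning of semester), using the reported M and SD.
t_first, df_first, d_first = pooled_t(69.72, 6.77, N_HIGH, 65.04, 6.81, N_LOW)

# Third IMI iteration (end of semester), using the reported M and SD.
t_third, df_third, d_third = pooled_t(68.52, 10.36, N_HIGH, 60.68, 10.55, N_LOW)

# Consistency check: the weighted group means should recover the overall mean.
overall_first = (N_HIGH * 69.72 + N_LOW * 65.04) / (N_HIGH + N_LOW)
```

Small discrepancies from the published t values are expected, because the inputs here are rounded to two decimal places.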

What are the overall attitudes toward earning digital badges in English courses?

To effectively answer this research question, a separate qualitative analysis was used to supplement the findings from the Likert-type survey. At the conclusion of the fall semester, an attitude survey with regard to earning digital badges was delivered to all undergraduates enrolled in the first-year composition courses. Participation was voluntary and was not a repeated measure, so the number of respondents (n = 202) represented a different and larger sample than was used for the two previous research questions, which did require successful completion of repeated surveys.

Interestingly, results from the distributed survey revealed that attitudes toward the digital badge program relied heavily on the performance of the learner; that is, respondents who attempted or earned few or none of the badges had negative views compared to those who successfully earned multiple badges. Table 4 displays survey responses according to the number of badges earned. Responses were based on a Likert-type scale ranging from 1 to 7, where 1 = Strongly Disagree and 7 = Strongly Agree.

Results from the survey indicated mixed attitudes toward the digital badges, though the badge completion rate influenced the learners’ opinion of badges. Compared to the mean overall responses of the other groups, learners who earned all eight of the digital badges had more favorable responses on all of the survey items except for the statement “The badges were more important to me than my grades on my ENGL assignments.” Combined with our previous analysis, this suggests that as more badges were earned, learner valuation of badges also increased. Conversely, learners who earned or attempted fewer badges reported more dissatisfaction with the badge program on the survey. A majority of learners perceived the badges to be difficult to earn and wished the assignments were easier, even though the majority of respondents also understood why they were awarded the badge(s). Further, respondents agreed with the statement “The badges I earned represent what I learned in this class.” This suggests that learners might have wished for a less challenging curriculum rather than just badges that were easier to earn.

The survey included open-ended questions, and responses were analyzed qualitatively. In response to the question, “Did you treat the assignments with digital badges any differently than other course assignments?” participants responded that they did not regard the badge assignments differently than other course assignments; rather, badges were viewed with the same level of priority as other course assignments, mainly because of their attached grade values. Responses indicated that learners did not perceive that the badges were designed to reinforce foundational writing skills that inform later, more high-stakes, writing situations. Instead, badges were valued superficially for their grade worth, as experienced with most other course assignments. In general, respondents indicated that badges did not differ in importance from other course assignments. Specific responses included:

I treated the digital badge assignments as if they were any other assignment I was given in person. I work hard, try my best, and fix it, if need be.

I did not treat the assignments with digital badges any differently than other course assignments. To me, they were just as important to have done thoroughly and on time as my papers were.

No, because the badges actually helped with the course assignments.

I treated the badges the same as I treat my other assignments. I take great care with all my assignments and the same is to be said with these badges.

No, I did not treat the assignments with digital badges any differently than other course assignments, because I feel every badge and assignment are all equally important.

Participants also were asked to respond to the open-ended question, “Would you describe your experience with digital badges in this course as positive or negative?” Of the 202 survey respondents, 103 (51.0 %) believed their experiences with digital badges to be positive, and 64 (31.7 %) reported having a negative experience earning digital badges. Thirty-five respondents (17.3 %) gave a response that indicated they were neutral to their experiences with badges, or they did not answer the question directly.

Just over half of the survey respondents (103 statements, 51 %) viewed digital badges favorably. This sentiment was most common when the learner perceived a connection between the badges and the rest of the course assignments. As indicated earlier, the number of badges earned impacted the learners’ view of the digital badge program overall. In response to the question, “Would you describe your experience with digital badges in this course as positive or negative?” some participants replied:

Positive because it related directly to what we were learning and will help me in my future experiences with writing.

Positive, the things we were working on in class matched up to badges we had to do.

It was a positive experience because it went along with what we needed in the papers that we were writing at the time. The badges prepared me for these assignments in all.

I would say it is positive because the badge did help me overall. They helped me on the papers that were due in the course.

A smaller percentage of participants reported having an overall negative experience with earning digital badges (64 statements, 31.7 %). Common themes among these responses were that the assignments were tedious, time consuming, or difficult to achieve. In some cases, respondents attributed their frustrations to their instructors’ lack of support and direction. Other learners with negative experiences reported that the badge content was too foundational for a college-level course. Statements included:

I would describe my experience to be a negative one because I disliked doing them and also, I did not get approved for one of the badges and I do not understand why.

My experiences with digital badges in this course were negative because I think the badges are a waste of time and they are frustrating.

Negative. Instructions were too hard to understand.

The badges are a pain to do. If they were an in-class assignment then they wouldn’t be so bad.

I would describe my experience with these badges as a negative one because they were very difficult and stress causing.

Negative. They seemed more like busy work.

This seems like a great idea for high school freshman, not college level.

Some respondents (35 statements; 17.3 %) detailed both positive and negative aspects of digital badges or did not answer the question directly, so these responses were considered neutral. Those who responded neutrally often cited the amount of time that the badge assignments demanded and viewed digital badges primarily as a function of grade values rather than as instruments for improving writing, particularly in connection with other related writing assignments. Statements included:

I am in between with the badges because some of them helped, but a lot of them weren’t really necessary and [required] too much reading.

It was a neutral experience. I did not really enjoy doing them but it did help me.

I would say neutral. Although they were not very hard for me to complete but they were often tedious.

I would describe my experience with digital badges in this course as both positive and negative. Mostly positive, because now I am trying to apply what I learned to my other assignments in college.

Discussion and conclusion

The purpose of this study was to investigate the effects of a digital badge system on undergraduate learners’ levels of intrinsic motivation to earn badges, to examine how that motivation related to their expectancy-values, and to understand general attitudes toward digital badges in learning environments. Overall, we conclude that the effectiveness of digital badges is highly dependent on both the motivation and the expectancy-value of the learner. Specifically, digital badges proved to be more beneficial for learners with higher levels of expectancy-value motivational goals than for those who did not have high expectations for learning or who placed little value on the learning tasks. This finding is consistent with previous research, which suggests a positive relationship between learner motivations and expectations (Darby et al. 2011) and between learner abilities and digital badging (Abramovich et al. 2013). It is important to note that digital badges were utilized in these first-year composition courses as an assessment metric that certified proficiency in existing course outcomes, not as a pedagogical tool to motivate unwilling learners to perform. Consequently, the digital badge system functioned as designed, providing consistency in instruction and assessment for the 53 study participants, who were drawn from two courses, English 101 and English 102, taught by 21 different instructors in 33 different sections.

As our findings suggest, the simple presence of digital badges did not enhance learner motivations. Rather, learners recognized the importance of badges as grade values since they were employed as both completion-contingent and performance-contingent rewards. More deeply, responses from the attitudinal survey indicated that learners often did not understand why their badge submissions were denied. Feedback provided in performance-contingent rewards (i.e., an ‘accepted’ or ‘denied’ badge submission) may be categorized as either informational, where feedback explains why a badge was or was not earned, or controlling, where feedback reinforces the expectations for the learner as if to say, “Good, you’re doing as you should” (Ryan et al. 1983, p. 742). Controlling feedback has been shown to lower intrinsic motivation (Ryan et al. 1983); therefore, if the learners interpreted the feedback from the earned badge as controlling rather than informational, this also could have led to a decrease in intrinsic motivation to earn badges. Accordingly, we believe that badges should be part of an assessment model that is situated within a course instead of a decontextualized or supplemental reward system for meeting competencies. Once the value of badges is established as an assessment, then motivational effects can be developed. Extrinsic tangible rewards undermine intrinsic motivation (Deci et al. 2001), so it is imperative that badges function as an informational feedback tool in order to assess learning outcomes.

A significant drop-off in motivation to earn digital badges was observed around the middle of the semester for all participants, forming a U-shaped pattern in intrinsic motivation to earn the digital badges. Previous research on motivation indicates that this finding is normal, as people typically experience a lull in motivation in the middle of an activity, with motivation increasing as the goal’s end state approaches (Toure-Tillery and Fishbach 2011). Our findings show that learners fluctuated in their motivational strength to reach their end goals of earning the badges, but that high expectancy-value learners rebounded toward the end of the semester whereas learners with low expectancy-values did not. Understanding learners’ expectancy-values is critical to predicting future academic performance (Wigfield and Eccles 2000). We therefore recommend that more emphasis be placed on the expectations and value for earning the badges, and that this emphasis be maintained consistently throughout the semester.

Overall, learners reported having a positive experience with the digital badge framework, though this, too, was dependent on learner performance. Participants responded more favorably toward badges when more badges had been earned. Likewise, participants who earned few or no badges expressed frustration with the program. This is not surprising given that previous research has found a positive correlation between learner performance and subsequent evaluations of instructor and course effectiveness (Krautmann and Sander 1999; Langbein 2008). In general, learners indicated that the badges were difficult to earn and adequately represented what was learned in class. From an assessment model standpoint, this is promising. However, learners also acknowledged that the badges were not more important than other course assignments; this is alarming given the heavy weighting and importance of demonstrating competency through earning the badges.

In closing, we do not conclude that digital badges are a magic bullet for learning in all environments. Rather, badges should be utilized as a way to measure learning progress in conjunction with many other instructional tools and strategies. Digital badges should function as recognizers of the learning that already has taken place rather than as affirmations or rewards (Ahn et al. 2014; Hickey et al. 2013). The findings from this study suggest that the use of digital badges as an assessment tool may impact a learner’s level of intrinsic motivation to earn badges, but only if he or she already has expectancy for learning and places a value on the learning task itself. In this sense, digital badges potentially may cultivate learner motivation and performance, but for learners who do not possess a high expectancy-value for learning, badges may have no such effect. Digital badges are not a universal solution to motivational shortcomings in all learners; however, this research argues that digital badges deserve a place in the broader context of assorted instructional devices and assessment instruments.

Limitations

This study had several limitations. First, because the data collection period lasted the entire semester (16 weeks), the number of participants steadily declined as learners either withdrew from classes or stopped reporting their data during the semester, resulting in a smaller sample size. Second, the data were collected through self-report Likert-type surveys, which can be inaccurate, and the study did not account for this as a covariate. Last, we did not measure instructor variability. Because this was the first semester in which the badge program was implemented, some instructors integrated the program into their courses more seamlessly than others, which could have affected learners’ attitudes and motivations toward earning the badges.

Future research

The relationship between earning digital badges and intrinsic motivation remains a complex issue. This study has indicated that expectancy-value plays a vital role in learner performance, though future research on the use of digital badges in learning environments should explore the relationship between badges and individual learner characteristics in more depth. More research is necessary to unpack how digital badges can be used as effective assessment instruments that motivate learners positively and to better understand the impact of pedagogy on badging. Additional investigation also is needed to decode how learners view badges in relation to expectancy-value. Badge types may play a pivotal role in learner motivation, and it would be worthwhile to examine whether learners view badges as completion- or performance-contingent, and how this perspective impacts motivation. Likewise, more investigation is needed into how the accumulation of earned badges affects intrinsic motivation. As the popularity of digital badging increases, these are important considerations, among many others, that remain to be fully understood.

References

Abramovich, S., Higashi, R., Hunkele, T., Schunn, C., & Shoop, R. (2011). An achievement system to increase achievement motivation. Paper presented at the Games Learning Society 7.0, Madison, WI.

Abramovich, S., Schunn, C., & Higashi, R. M. (2013). Are badges useful in education? It depends upon the type of badge and expertise of learner. Educational Technology Research and Development, 61(2), 217–232.

Ahn, J., Pellicone, A., & Butler, B. S. (2014). Open badges for education: What are the implications at the intersection of open systems and badging? Research in Learning Technology, 22, 1–9. doi:10.3402/rlt.v22.23563.

Bandura, A. (1993). Perceived self-efficacy in cognitive development and functioning. Educational Psychologist, 28(2), 117–148.

Covington, M. V. (2000). Intrinsic versus extrinsic motivation in schools: A reconciliation. Current Directions in Psychological Science, 9, 22–25.

Darby, A., Longmire-Avital, J., Chenault, J., & Haglund, M. (2011). Students’ motivation in academic service-learning over the course of the semester. College Student Journal, 47(1), 185–192.

Davidson, C. (2011). Could badges for lifelong learning be our tipping point? HASTAC. Retrieved from http://www.hastac.org/blogs/cathy-davidson/2011/11/14/could-badges-lifelong-learning-be-our-tipping-point

Deci, E. L., Koestner, R., & Ryan, R. (2001). Extrinsic rewards and intrinsic motivation in education: Reconsidered once again. Review of Educational Research, 71(1), 1–27.

Filsecker, M., & Hickey, D. T. (2014). A multilevel analysis of the effects of external rewards on elementary students’ motivation, engagement, and learning in an educational game. Computers & Education, 75, 136–148.

HASTAC. (n.d.). What is a digital badge? Retrieved from http://www.hastac.org/digital-badges

Hickey, D. T., Itow, R. C., Rehak, A., Schenke, K., & Tran, C. (2013). Speaking personally—with Erin Knight. American Journal of Distance Education, 27(2), 134–138. doi:10.1080/08923647.2013.783268.

Krautmann, A. C., & Sander, W. (1999). Grades and student evaluations of teachers. Economics of Education Review, 18(1), 59–63. doi:10.1016/S0272-7757(98)00004-1.

Langbein, L. (2008). Management by results: Student evaluation of faculty teaching and the mis-measurement of performance. Economics of Education Review, 27(4), 417–428. doi:10.1016/j.econedurev.2006.12.003.

Lei, S. (2010). Intrinsic and extrinsic motivation: Evaluating benefits and drawbacks from college instructors’ perspectives. Journal of Instructional Psychology, 37(2), 153–160.

Lowman, J. (1990). Promoting motivation and learning. College Teaching, 38(4), 136–139.

McAuley, E., Duncan, T., & Tammen, V. V. (1989). Psychometric properties of the intrinsic motivation inventory in a competitive sport setting: A confirmatory factor analysis. Research Quarterly for Exercise and Sport, 60, 48–58.

Ryan, R., Mims, V., & Koestner, R. (1983). Relation of reward contingency and interpersonal context to intrinsic motivation: A review and test using cognitive evaluation theory. Journal of Personality and Social Psychology, 45(4), 736–750.

Toure-Tillery, M., & Fishbach, A. (2011). The course of motivation. Journal of Consumer Psychology, 21(4), 414–423.

Wigfield, A. (1994). Expectancy-value theory of achievement motivation: A developmental perspective. Educational Psychology Review, 6(1), 49–78. doi:10.1007/BF02209024.

Wigfield, A., & Eccles, J. S. (2000). Expectancy-value theory of achievement motivation. Contemporary Educational Psychology, 25, 68–81. doi:10.1006/ceps.1999.1015.

Young, J. R. (2012). Badges earned online pose challenge to traditional college diplomas. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/Badges-Earned-Online-Pose/130241/

Appendices

Appendix A: Expectancy-value (Wigfield and Eccles 2000)

Ability beliefs items

1. How good at English are you? (not at all good/very good)

2. If you were to list all the students in your English class from the worst to the best in English, where would you put yourself? (one of the worst/one of the best)

3. Compared to most of your other school subjects, how good are you at English? (a lot worse in English than in other subjects/a lot better in English than in other subjects)

Expectancy items

1. How well do you expect to do in your English class this year? (not at all well/very well)

2. How good are you at learning something new in English? (not at all good/very good)

Usefulness, importance, and interest items

1. In general, how useful is what you learn in your English class? (not at all useful/very useful)

2. Compared to most of your other activities, how useful is what you learn in your English class? (not at all useful/very useful)

3. For me, being good at English is (not at all important/very important)

4. Compared to most of your other classes, how important is it for you to be good at English? (not at all important/very important)

5. In general, I find working on English assignments (very boring/very interesting)

6. How much do you like learning English? (not at all/very much)

Appendix B: Intrinsic motivation inventory (McAuley et al. 1989)

Response scale:

1 = Strongly disagree

2 = Disagree

3 = Somewhat disagree

4 = Neutral

5 = Somewhat agree

6 = Agree

7 = Strongly agree

Items (dimension in parentheses):

1. I enjoyed earning this digital badge very much (INT-ENJ)

2. I think I am very good at earning digital badges (COMP)

3. I put a lot of effort into earning this digital badge (EFF-IMP)

4. It was important to me to do well at earning this digital badge (EFF-IMP)

5. I felt tense while doing the assignment(s) to earn this digital badge (TEN-PRES)

6. I tried very hard to earn this digital badge (EFF-IMP)

7. Earning this digital badge was fun (INT-ENJ)

8. I would describe earning this digital badge as very interesting (INT-ENJ)

9. I felt pressured while completing the assignment(s) required for this badge (TEN-PRES)

10. I was anxious while completing the assignment(s) for this badge (TEN-PRES)

11. After I completed the assignment(s) for this badge, I felt very competent (COMP)

12. I was very relaxed while completing the assignment(s) for this badge (TEN-PRES)

13. I am very skilled at earning digital badges in this course (COMP)

14. Attempting to earn this badge held my attention (INT-ENJ)

INT-ENJ = Interest-enjoyment dimension

COMP = Perceived competence dimension

EFF-IMP = Effort-importance dimension

TEN-PRES = Tension-pressure dimension
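
The item-to-dimension key above can be turned into subscale scores in a few lines. The following is a minimal sketch only, not the scoring procedure used in this study, which is not described in this appendix. It assumes that each subscale score is the mean of its items on the 7-point scale and that item 12 ("very relaxed") is reverse-scored on the tension-pressure dimension because it is worded in the opposite direction from the other tension items. The function name `score_imi` and the constant names are hypothetical.

```python
from statistics import mean

# Item-to-dimension mapping copied from the Appendix B key.
DIMENSIONS = {
    "INT-ENJ": [1, 7, 8, 14],
    "COMP": [2, 11, 13],
    "EFF-IMP": [3, 4, 6],
    "TEN-PRES": [5, 9, 10, 12],
}

# Assumption: item 12 ("very relaxed") runs opposite to the other
# tension-pressure items, so it is reverse-scored before averaging.
REVERSED_ITEMS = {12}
SCALE_MAX = 7  # 1 = Strongly disagree ... 7 = Strongly agree


def score_imi(responses):
    """Return the mean score per dimension for one completed survey.

    `responses` maps item number (1-14) to a rating from 1 to 7.
    """
    scores = {}
    for dimension, items in DIMENSIONS.items():
        values = []
        for item in items:
            rating = responses[item]
            if item in REVERSED_ITEMS:
                # On a 1-7 scale, reversing maps 1 to 7, 2 to 6, and so on.
                rating = SCALE_MAX + 1 - rating
            values.append(rating)
        scores[dimension] = mean(values)
    return scores


# Usage: a hypothetical respondent who answers "Neutral" to every item.
example = {item: 4 for item in range(1, 15)}
print(score_imi(example))
```

Averaging rather than summing keeps the four subscales comparable even though they contain different numbers of items.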

Appendix C: Badge opinion survey (Abramovich et al. 2011)

Response scale:

1 = Strongly disagree

2 = Disagree

3 = Somewhat disagree

4 = Neutral

5 = Somewhat agree

6 = Agree

7 = Strongly agree

Items:

1. I understand why I earned all of my badges.

2. The badges were more important to me than learning.

3. I think the badges are a good addition to the course.

4. I knew what badges were before I started working in this course.

5. I wanted to earn more ENGL course badges.

6. I do not care about the ENGL course badges.

7. I like earning badges but not the ones in this ENGL course.

8. I wish the ENGL course badges were harder to earn.

9. I wish the ENGL course badges were easier to earn.

10. I want to earn badges in future ENGL courses.

11. I told others about my badges earned in this course.

12. The ENGL course badges made me want to keep working.

13. Compared to other assignments in this course, the digital badges motivated me to work harder.

14. I shared my digital badges on a social networking site.

15. The badges I earned represent what I learned in this class.

16. The badges were more important to me than my grades on my ENGL assignments.

Open-ended questions

1. Did you treat the assignments with digital badges any differently than other course assignments? Explain why or why not.

2. Would you describe your experience with digital badges in this course as positive or negative? Please explain.

3. Would you recommend that other classes use digital badges? Why or why not?

Phone interview questions

1. Did you treat the assignments within the digital badges any differently than other course assignments? Explain why or why not.

2. Did you find a difference in personal motivation toward completing the badge work when compared to working on other class assignments?

3. When you received a badge, did you feel as though you had reached a level of accomplishment? Did you feel as though your work had been validated by your instructor?

4. When you were denied a badge (or a badge submission), did you feel more motivated to be successful on the second attempt? Explain.

5. Would you describe your overall experience with digital badges in this course as positive or negative? Please explain.

6. To what degree have the digital badges impacted your writing skills?

7. Would you recommend that other English classes use digital badges? Why or why not?

Cite this article

Reid, A.J., Paster, D. & Abramovich, S. Digital badges in undergraduate composition courses: effects on intrinsic motivation. J. Comput. Educ. 2, 377–398 (2015). https://doi.org/10.1007/s40692-015-0042-1

DOI: https://doi.org/10.1007/s40692-015-0042-1