Abstract

The extent to which a given extreme weather or climate event is attributable to anthropogenic climate change is a question of considerable public interest. From a scientific perspective, the question can be framed in various ways, and the answer depends very much on the framing. One such framing is a risk-based approach, which answers the question probabilistically, in terms of a change in likelihood of a class of event similar to the one in question, and treats natural variability as noise. A rather different framing is a storyline approach, which examines the role of the various factors contributing to the event as it unfolded, including the anomalous aspects of natural variability, and answers the question deterministically. It is argued that these two apparently irreconcilable approaches can be viewed within a common framework, where the most useful level of conditioning will depend on the question being asked and the uncertainties involved.


Introduction

Extreme weather and climate events are of great societal interest as they significantly affect people and property—usually adversely. They are also of public interest since they are unusual natural phenomena, which have scientific stories behind them. Just as weather is a topic of daily conversation, extreme weather events (including longer-duration climate extremes such as drought) provide a universal talking point. Whilst their proximate cause is meteorological, it is now inevitable that the question will be asked, “Was this event due to climate change?” This is a perfectly natural question to ask given that climate change is a reality and that in many cases, climate change will be felt most directly through its impact on extremes. (For example, sea level rise will generally impact society through storm surges leading to coastal inundation.) People relate to what they have experienced, so if extremes are the sharp edge of climate change, then it becomes important, from the standpoint of both communication and risk reduction, to address this question scientifically.

It is sometimes said that anthropogenic warming of the climate system will increase the energy of the atmosphere, which will lead to more storminess and thus more extreme behaviour. It is true that a warmer atmosphere can hold more moisture, which can provide more latent heat release in a convectively driven extreme event, and more precipitation in general. But atmospheric motions are driven by energy differences [1], not by energy itself, and the polar amplification that is a fundamental characteristic of global warming [2] will tend to reduce the pole-to-equator temperature gradient. Also, in a warming world, one would tend to expect a reduction in cold extremes. Thus, whilst climate change has undoubtedly affected weather and climate extremes, both the sign and the magnitude of the effect need to be assessed on a case-by-case basis. Although there may be general expectations based on global aspects of climate change, there can be local departures from that behaviour, and over any given time period, multi-decadal variability can also play a role in changes in extremes [3].

It must also be recognized that an unprecedented event does not imply that climate has changed. Weather and climate records are of finite length, and as the record lengthens, new record-breaking events will continue to occur, even for stationary statistics. Thus, climate change is reflected in deviations from this expected rate of record-breaking [4]. The temporal inhomogeneity that results from defining a reference period at the beginning of a time series can also lead to spurious trends in extremes when the reference period is short and the variability is normalized by that of the reference period [5]; several high-profile papers have fallen prey to this error, e.g. [6].
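The following is a minimal sketch of why records keep occurring without any climate change. For independent, identically distributed continuous values, the k-th value beats all earlier ones with probability 1/k, so the expected number of records in n values is the harmonic number H_n. The code assumes stationary Gaussian white noise purely for illustration. The function names are hypothetical. It checks that expectation against a Monte Carlo count.

```python
import random


def count_records(series):
    """Count record highs: values larger than every earlier value (the first value counts)."""
    count, best = 0, float("-inf")
    for x in series:
        if x > best:
            count, best = count + 1, x
    return count


def expected_records(n):
    """Expected record count for n i.i.d. continuous values: the harmonic number H_n."""
    return sum(1.0 / k for k in range(1, n + 1))


def simulated_mean_records(n, trials=2000, seed=1):
    """Monte Carlo mean record count for stationary Gaussian white noise."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        total += count_records([rng.gauss(0.0, 1.0) for _ in range(n)])
    return total / trials


n = 100
print(round(expected_records(n), 2))        # 5.19
print(round(simulated_mean_records(n), 2))  # close to the theoretical value
```

After 100 years, about five records are expected (roughly ln n + 0.58). New records still arrive, but ever more rarely. A record rate that stays above this baseline is the kind of deviation that points to a change in climate.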

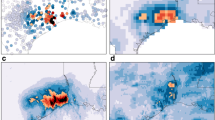

Weather concerns the instantaneous state of the atmosphere, but climate is generally understood to comprise its averaged behaviour (including higher-order statistics represented in probability distributions) over some period of time. The attribution of changes in the observed statistics of extremes is clearly a climate science question, which can be addressed using well-established detection-attribution methods [7]. In contrast, the role of climate change in a particular extreme event concerns only a single observed event, and thus involves no observed change, nor any averaging over observed events. This takes it out of the traditional domain of climate science and places it more within the domain of weather science or (for the longer-duration extremes) seasonal prediction. If a weather or climate event is truly extreme in the present climate, then perforce it requires unusual meteorological conditions, which means that climate change is at most only a contributing factor. (As noted by [8••], the failure to recognize this fact can lead to apparently contradictory conclusions concerning the same event.) The issues involved are illustrated for the extreme northern winter of 2013/2014 in Fig. 1. However, even a small contributing factor can have enormous consequences in the context of an extreme event, because the impacts are generally highly nonlinear in the hazard. The scientific question is then to determine that contribution.
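The sketch below makes the nonlinearity point concrete using two hypothetical impact functions, neither of which is taken from the source:

- a power law in the hazard;
- a threshold function, in which only the hazard exceeding a defence level does damage.

The numbers (a 10 % hazard increase, a 1.95 m defence) are arbitrary.

```python
def power_law_impact_change(hazard_increase, exponent):
    """Fractional change in impact when the hazard rises by `hazard_increase`
    (a fraction), assuming a hypothetical power law impact = c * hazard**exponent."""
    return (1.0 + hazard_increase) ** exponent - 1.0


def threshold_impact(hazard, defence):
    """Hypothetical threshold impact: only the hazard in excess of a defence level causes damage."""
    return max(0.0, hazard - defence)


# A 10% larger hazard under increasingly nonlinear impact functions.
for k in (1, 3, 6):
    print(k, round(power_law_impact_change(0.10, k), 3))  # 0.1, 0.331, 0.772

# A 5% larger surge (2.0 -> 2.1 m) against a 1.95 m defence roughly triples the overtopping.
print(threshold_impact(2.1, 1.95) / threshold_impact(2.0, 1.95))
```

Under either form, a modest contribution to the hazard produces a much larger fractional change in impact. This is why even a small contributing factor can matter.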

Fig. 1. The extreme northern winter of 2013/2014. Panel a shows the instantaneous potential temperature distribution on the quasi-horizontal surface defined by Ertel potential vorticity equal to 2 PV units, approximately corresponding to the tropopause, on 5 January 2014. These maps are extremely useful for synoptic interpretation since the features behave quasi-materially; see [9]. The edge of the blue region approximately corresponds to the polar front (now sometimes called ‘polar vortex’), and the undulations are Rossby waves. A deep cold excursion is seen over the central USA, leading to the extreme cold snap experienced at that time. An extratropical cyclone is also seen over the North Atlantic, heading for the UK. This latter event was one of a series of storms that hit the UK that month, as the storm track was stuck in place for an extended period. Although no one storm was unusual, the persistence of the storm track was unusual and led to the record wet conditions shown in b. a Courtesy of Nick Klingaman and Paul Berrisford, using ECMWF operational analyses, and available from http://www.met.rdg.ac.uk/Data/CurrentWeather/. b Courtesy of Michaela Hegglin, using Hadley Centre data available from www.metoffice.gov.uk/hadobs [10]

In general, there seem to be two basic (and at first sight orthogonal) approaches for determining the impact of one factor on an effect involving multiple factors. One is what will be called the ‘risk-based’ approach, where the change in likelihood of the effect arising from the presence of that factor is estimated. It is understood that the attribution is only probabilistic, much as smoking increases the risk of lung cancer but is neither a necessary nor a sufficient cause of lung cancer in any particular individual. This approach to extreme event attribution was introduced to the climate science community by [11] and applied by [12] to the European heat wave of 2003. The second is what will be called the ‘storyline’ approach, where the causal chain of factors leading to the event is identified, and the role of each assessed. This approach is exemplified in [13••]’s study of the 2011 Texas drought/heat wave.

In considering the effects of climate change, there is a striking difference between those associated with purely thermodynamic aspects of the climate system and those also involving dynamical aspects [14•]. The former (which include continental- or basin-scale averages of quantities such as sea level, surface air temperature, sea-ice extent, snow cover, or upper-ocean heat content) exhibit changes that are generally robust in observations, in theory, and in models. However, regional aspects of climate change, including regional patterns of precipitation, generally involve dynamical aspects of climate change related to atmospheric and oceanic circulation, and these are robust neither in observations, nor in theory, nor in models. This distinction is reflected in the strength of the various findings in the latest Summary for Policymakers of the IPCC [15•]. The reasons include the comparatively small signal-to-noise of forced changes in dynamically related quantities, the poor understanding of the mechanisms behind them, and the sensitivity of model behaviour to parameterized processes. Figure 2 illustrates the issue for the case of annual-mean precipitation. To the extent that extreme weather and climate events involve dynamical processes (and most of course do), these uncertainties must be confronted when assessing the role of climate change in the event. Trenberth et al. [16••] have recently argued that in some cases, these uncertainties may prevent a reliable application of the risk-based approach and thus that the storyline approach is to be preferred.

Fig. 2. Contrast between the robustness of projected changes in (a) surface temperature and (b) precipitation. Both panels show the mean changes projected over the twenty-first century by the CMIP5 model ensemble according to the RCP 8.5 scenario. Hatching indicates where the multi-model mean change is small compared to natural internal variability (less than one standard deviation of natural internal variability in 20-year means). Stippling indicates where the multi-model mean change is large compared to natural internal variability (greater than two standard deviations) and where at least 90 % of models agree on the sign of change. Although temperature changes are robust over all land areas, the mean precipitation changes over many populated regions are non-robust either because of natural variability or because of model discrepancies. Adapted from Figure SPM.8 of [15•]

The Risk-Based Approach

The risk-based approach to extreme event attribution is fundamentally probabilistic and requires creating two sample populations, a ‘factual’ (the world as it is) and a ‘counter-factual’ (the world as it would have been without climate change). The conceptual framework is illustrated in Fig. 3a, b, for the case of small and large shifts in the mean (for simplicity, the distribution shape is not altered). Given a factual event, the effect of climate change can be expressed in terms of either the altered frequency of occurrence of an event of that magnitude (the intercepts of p0 and p1 with the vertical grey line in Fig. 3a) or the altered magnitude of an event having that frequency in the factual climate (the intercepts of p0 and p1 with the horizontal grey line in Fig. 3a). It is evident that the relative role of climate change compared to natural variability may be quite different when viewed in terms of frequency or in terms of magnitude (cf. [8••]). Yet both perspectives are clearly valid; the magnitude perspective is typical in a regulatory context, e.g. the need to protect against a 200-year event.
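For the Gaussian schematic with a pure mean shift, both perspectives can be computed directly. The sketch makes three assumptions:

- the counter-factual distribution is N(0, σ²);
- the factual distribution is N(shift, σ²);
- all quantities are in units of σ.

The function names are illustrative. The magnitude view inverts the counter-factual exceedance probability at the factual frequency.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def exceedance(x, mu, sigma):
    """P(X > x) for X ~ N(mu, sigma^2), via symmetry to keep tail accuracy."""
    return STD_NORMAL.cdf((mu - x) / sigma)


def frequency_and_magnitude(event, shift, sigma=1.0):
    """Compare the frequency and magnitude views of a pure mean shift.

    Counter-factual: N(0, sigma^2); factual: N(shift, sigma^2).
    Returns (p0, p1, probability ratio, magnitude change at fixed frequency).
    """
    p0 = exceedance(event, 0.0, sigma)     # frequency of the event in the counter-factual world
    p1 = exceedance(event, shift, sigma)   # frequency of the event in the factual world
    # Counter-factual magnitude whose exceedance probability equals the factual p1.
    x0 = -sigma * STD_NORMAL.inv_cdf(p1)
    return p0, p1, p1 / p0, event - x0


p0, p1, ratio, dmag = frequency_and_magnitude(event=3.0, shift=0.5)
print(f"p0={p0:.5f}  p1={p1:.5f}  ratio={ratio:.2f}  magnitude change={dmag:.2f}")
```

Take an event three standard deviations out and a shift of half a standard deviation. The event becomes roughly 4.6 times more frequent, while the change in magnitude at fixed frequency is just the 0.5σ shift.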

Fig. 3. Schematic of probability distribution functions (PDFs) of some geophysical quantity for a factual (p1) and a counter-factual (p0) world. For simplicity, the distributions are taken to be Gaussian and climate change is represented as a simple shift in the mean. a, b Unconditional PDFs for the case of small and large shifts in the mean, relative to the internal variability. c A blow-up around the event indicated by the intersection of the grey lines in a, in the tail of the distributions; the grey lines in c correspond to the unconditional PDFs, and the black lines to PDFs conditioned on the dynamical situation leading to the event. This yields a large separation of the means and a situation analogous to b, albeit now highly conditioned

Implementing this approach involves several steps, which have both practical and philosophical implications. The first step is the event definition. The observed extreme event is unique, so it must be abstracted to a class of event amenable to statistical analysis. This requires a choice of physical variable and the spatial and temporal averaging used to define the event. There is obviously considerable freedom in this choice, yet any particular choice can have a strong effect on the result; in Fig. 3a, different choices would correspond to different locations of the grey lines, and the p1/p0 ratios will be quite sensitive to this choice. See [17•] for an explicit example.
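Even the idealized Gaussian case shows how sensitive the probability ratio is to the event definition. This sketch assumes a pure mean shift and uses hypothetical numbers. It does two things:

1. It varies the threshold for a fixed shift.
2. It mimics a change of spatiotemporal footprint by halving σ, as if averaging over a larger area or period reduced the variability while leaving the forced shift unchanged.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def probability_ratio(threshold, shift, sigma):
    """p1/p0 for exceeding `threshold`, with counter-factual N(0, sigma^2)
    and factual N(shift, sigma^2)."""
    p0 = STD_NORMAL.cdf(-threshold / sigma)
    p1 = STD_NORMAL.cdf((shift - threshold) / sigma)
    return p1 / p0


# Same forced shift (0.5), different event definitions (threshold in units of sigma = 1).
ratios = {t: probability_ratio(t, shift=0.5, sigma=1.0) for t in (1.0, 2.0, 3.0, 4.0)}
for t, r in ratios.items():
    print(f"threshold {t:.0f}: p1/p0 = {r:.2f}")

# Same 1.5-unit anomaly, but a footprint whose averaging (hypothetically) halves sigma.
print(probability_ratio(1.5, 0.5, 1.0), probability_ratio(1.5, 0.5, 0.5))
```

The ratio grows steadily with the threshold. Halving σ turns the same 1.5-unit anomaly from a 1.5σ event into a 3σ event, which raises p1/p0 from about 2.4 to about 17.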

The second step is the construction of the factual likelihood distribution p1. This will generally be done with a climate model. The fundamental challenge is that in order to estimate the likelihood of an extreme event, one needs to perform many years of simulation—the more extreme the event, the larger the number of years. Yet, in order to do so, the model must be computationally cheap to run, which means that it may not be able to simulate credible facsimiles of the event in question. Even for cases where attribution seems easy, such as large-scale heat waves, land-surface feedbacks may involve mesoscale processes that are not adequately represented in models, and deficiencies in the representation of precipitation, let alone precipitation extremes, in coarse-resolution climate models are legion [18]. Therefore, the appropriateness of the model for the study in question needs to be carefully assessed.
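The formula below gives a rough sense of how many years of simulation are needed. It assumes each simulated year is an independent Bernoulli trial; serial correlation in model output would make things worse. Under that assumption, the relative standard error of an estimated exceedance frequency p from n years is sqrt((1 − p)/(n p)). The sketch inverts that formula. The function names are illustrative.

```python
import math


def relative_standard_error(p, n_years):
    """Relative standard error of an exceedance frequency estimated from n_years
    independent years (binomial sampling): sqrt((1 - p) / (n p))."""
    return math.sqrt((1.0 - p) / (n_years * p))


def years_needed(return_period, rel_error):
    """Simulated years needed for a given relative standard error on the event frequency."""
    p = 1.0 / return_period
    return (1.0 - p) / (p * rel_error ** 2)


for rp in (10, 100, 1000):
    print(f"1-in-{rp}-year event: ~{years_needed(rp, 0.1):,.0f} years for 10% relative error")
```

Pinning down the frequency of a 1-in-100-year event to 10 % thus takes on the order of 10,000 simulated years. A 1-in-1000-year event takes about ten times more.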

The third step is the construction of the counter-factual likelihood distribution p0. All the issues of model fidelity discussed above apply here as well, of course, with the additional complication that there are no counter-factual observations against which to evaluate the model. One might use historical observations instead, but those will be highly limited and perhaps nonexistent for the extreme of interest, and one must then assume that observed climate change is identical to anthropogenic climate change. If the climate model is coupled, then the attribution of differences between the factual and counter-factual climates is clear (assuming the imposed greenhouse gas changes are entirely anthropogenic), but to speed up computations, often the sea-surface temperatures (SSTs) are imposed in an atmosphere-only model. The typical choice is to use observed SSTs for the factual and define the counter-factual SSTs by subtracting an SST anomaly taken from coupled model simulations of climate change. Sensitivity to the choice of the latter must be assessed. Moreover, if the observed SSTs were important for inducing the particular extreme in question, then the attribution is conditional on this situation, and that too must be accounted for. See [17•] for an explicit example.

There are also philosophical issues. The risk-based approach uses concepts developed in epidemiology. In that context, attribution involves analysis of a population, and the question is asked whether the observed data are more consistent with an outbreak of an infectious disease (say) than with noise. That corresponds to the classic detection-attribution question in climate science. But if the attribution question concerns a single event, then the analogy with epidemiology no longer holds. Moreover, the observed event is only used to motivate the choice of event class, and confrontation with observations is not an intrinsic part of the analysis, as it is with detection-attribution. (Observations may be used to establish confidence in the climate model, but are not explicitly used for hypothesis testing.) The results therefore pertain very much to ‘model world’, and their physical connection to the actual event is not immediate. If there is a reliable long-term data record, this issue can be addressed by couching the event attribution within a more traditional detection-attribution framework, as illustrated by [19] for annual-mean Central England Temperature. However, this will generally constrain the spatiotemporal footprint of the event and will be limited to situations where such long-term records exist and exhibit attributable trends.

There is furthermore the question of interpretation. Classically, there are two kinds of causation: necessary and sufficient. These concepts have probabilistic analogues [20]. Necessary causation means the effect could not have occurred without the factor in question, but it may be that other factors were also necessary. As already noted, this is generally going to be the case with extremes, because extreme meteorological variability is usually required in order to be in the tail of the PDF—as in Fig. 3a. An important point is that with only necessary causation, there is no predictive power for single events; if the factor in question recurs, then the effect may not recur because it depends on the presence of other factors. (Strictly speaking, there is predictive power for nonevents in the counter-factual world, but that is not particularly useful information.) This situation may be contrasted with sufficient causation, where the factor in question is enough to make the effect occur irrespective of other factors. The latter situation is illustrated in Fig. 3b; here, what is extreme in the counter-factual world is normal in the factual world, and perhaps should not even be called an extreme at all. There is moreover predictive power for single events, because one can expect these so-called extremes (relative to the counter-factual) to recur frequently. This is increasingly the case with summertime continental extreme temperatures, as shown in Fig. 4. Confusion will ensue if the distinction between the two kinds of causation is not recognized, but commonly used extreme-event attribution measures such as the fraction of attributable risk (FAR) only reflect necessary causation and do not distinguish between the two [22••].
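The two kinds of causation can be quantified under the standard counterfactual definitions. These are assumed here, with the factor only able to raise the event probability:

- probability of necessary causation: PN = max(0, 1 − p0/p1), which coincides with the FAR whenever p1 ≥ p0;
- probability of sufficient causation: PS = max(0, 1 − (1 − p1)/(1 − p0)).

The sketch evaluates both for a small and a large mean shift in the Gaussian schematic. The event is hypothetical and lies four standard deviations out.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def exceedance(x, mu, sigma=1.0):
    """P(X > x) for X ~ N(mu, sigma^2)."""
    return STD_NORMAL.cdf((mu - x) / sigma)


def far(p0, p1):
    """Fraction of attributable risk, FAR = 1 - p0/p1 (equal to PN when p1 >= p0)."""
    return 1.0 - p0 / p1


def prob_sufficient(p0, p1):
    """Probability of sufficient causation, PS = max(0, 1 - (1 - p1)/(1 - p0))."""
    return max(0.0, 1.0 - (1.0 - p1) / (1.0 - p0))


threshold = 4.0  # event magnitude in units of the internal-variability standard deviation
for shift in (1.0, 4.0):  # small shift (as in Fig. 3a) and large shift (as in Fig. 3b)
    p0, p1 = exceedance(threshold, 0.0), exceedance(threshold, shift)
    print(f"shift={shift}: FAR={far(p0, p1):.3f}  PS={prob_sufficient(p0, p1):.3f}")
```

Both cases have a FAR close to 1. Yet PS is about 0.001 for the small shift and about 0.5 for the large one, so the FAR alone cannot distinguish the two situations.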

Fig. 4. The changing map of record summer temperatures over Europe. The two panels show the spatial distribution of the decade in which the record-high summertime temperature occurred, over 1500–2000 (a) and over 1500–2010 (b). The height of the bar indicates the temperature anomaly, relative to 1970–1999, and the colour the decade. The additional 10 years included in b completely re-draw the map, showing that the most recent years have been exceptionally hot. The inset shows the corresponding percentage of European areas with summer temperatures above the indicated temperature (in units of standard deviation, SD) for 1500–2000 (dashed) and 1500–2010 (dotted). What used to be extreme has now become normal. From [21]. Copyright American Association for the Advancement of Science, used with permission

Dynamic and Thermodynamic Mechanisms

As discussed earlier, there is a striking difference between the robustness of purely thermodynamic aspects of climate change and of dynamic aspects involving the atmospheric or oceanic circulation. The former are quite certain, the latter highly uncertain [14•]. At the regional scale, the thermodynamic aspects are strongly modulated by the dynamic aspects, so the latter must be taken into account. Part of the issue is the relatively small signal-to-noise of the circulation changes expected from models [23••] (although there are regional exceptions [24]), and part is the general non-robustness of the circulation response in models (Fig. 4 of [14•], [25]). The difficulty is compounded by the fact that the forced circulation response can be expected to project onto the modes of variability [26]. It is therefore difficult to separate from the noise using fingerprinting methods, and it is not well constrained theoretically [27].

Given this situation, a number of researchers have attempted to separate the thermodynamic from dynamic aspects in explaining the behaviour of observed extremes. [28••] examined the cold European winter of 2010 and argued that once one accounted for the anomalous circulation regime, including record persistence of a negative North Atlantic Oscillation (NAO) index, the winter was anomalously warm, in line with a warming climate. The results are illustrated in Fig. 5. Diffenbaugh et al. [29•] examined the recent California drought and showed that whilst there was no apparent change in observed precipitation, the systematic warming over the past century meant that dry years were now almost invariably also warm years (hence increasing the propensity for drought), whereas in the past, the combination of the two conditions was less common. California precipitation is controlled by dynamical processes related to the storm track, and its future evolution is therefore highly uncertain [30]. Even without a clear prediction of precipitation changes, [29•] argue that the risk of drought in California is increasing. In both cases, the authors regard the thermodynamic aspects of the observed changes as certain, and the dynamic aspects as uncertain and probably best interpreted as natural variability.
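The separation shown in Fig. 5 can be sketched as a simple dynamical adjustment. The code uses a linear regression as a stand-in for the historical circulation-temperature relationship; we do not claim this is the procedure used in [28••]. It proceeds in three steps:

1. Regress temperature on a single synthetic NAO-like index.
2. Predict the circulation-congruent anomaly for the target winter.
3. Treat the residual as the thermodynamic part.

All data are synthetic and the function names are hypothetical.

```python
import random
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit y ~ a + b*x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sum((xi - mx) ** 2 for xi in x)
    return my - b * mx, b


def dynamical_adjustment(circ, temp, target_circ, target_temp):
    """Split a target anomaly into a circulation-congruent part (from the historical
    regression) and a residual interpreted as the 'thermodynamic' part."""
    a, b = fit_line(circ, temp)
    dynamic = a + b * target_circ
    return dynamic, target_temp - dynamic


# Synthetic 62-year record: warming trend + circulation-driven variability + noise.
rng = random.Random(42)
years = range(62)
nao = [rng.gauss(0.0, 1.0) for _ in years]  # hypothetical NAO-like index
temp = [0.03 * (t - 31) + 1.5 * n + rng.gauss(0.0, 0.2) for t, n in zip(years, nao)]

# Target winter (not in the record): record-negative index plus a +0.9 warming contribution.
target_nao, target_temp = -3.0, 0.9 + 1.5 * (-3.0)
dynamic, thermo = dynamical_adjustment(nao, temp, target_nao, target_temp)
print(f"observed {target_temp:.1f}, circulation-congruent {dynamic:.1f}, residual {thermo:+.1f}")
```

With a record-negative index, the observed anomaly is strongly negative, but the residual comes out positive. This reproduces in miniature the "cold winter, warm residual" result of Fig. 5.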

Fig. 5. a Observed surface temperature anomalies in winter 2010 relative to the reference period 1949–2010. b Surface temperature anomalies expected from the circulation pattern experienced during that winter (including a record-persistent negative NAO index), based on the historical relationship with temperature. c The difference between the two, which is the ‘thermodynamic’ signal. Thus, the unusually cold winter was entirely consistent with thermodynamic warming. From [28••]. Copyright American Geophysical Union, used with permission

If an extreme event was mainly caused by purely thermodynamic processes, then the risk-based analysis using a climate model is probably reliable and a strong attribution statement can be made. If, on the other hand, an extreme event was caused in part by extreme dynamical conditions, then any risk-based analysis using a climate model also has to address the question of whether the simulated change in the likelihood or severity of such conditions is credible. Without attributed observed changes, or a theoretical understanding of what to expect, or a robust prediction from climate models, this would seem to be an extremely challenging prospect. And if plausible uncertainties are placed on those changes, then the result is likely to be ‘no effect detected’. This is indeed what tends to be concluded in event attribution studies of dynamically driven extremes [31]. But absence of evidence is not evidence of absence. Can we do better?

The Storyline Approach

Since climate change is an accepted fact [15•], it should no longer be necessary to detect climate change; rather, the question (for extreme event attribution) is what is the best estimate of the contribution of climate change to the observed event. In this case, effect size is more relevant than statistical significance [32]. Trenberth et al. [16••] argue that a physical investigation of how the event unfolded, and how the different contributing factors might have been affected by known thermodynamic aspects of climate change, is the more effective approach when the risk-based approach yields a highly uncertain outcome. This storyline approach, which is analogous to accident investigation (where multiple contributing factors are generally involved and their roles are assessed in a conditional manner), was employed by [13••] to investigate the 2011 Texas drought/heat wave. Although [13••] emphasized the dominance of natural variability, specifically the precipitation deficit associated with anomalous Pacific SSTs, they estimated that about 0.7 °C (20 %) of the heat-wave magnitude relative to the 1981–2010 mean was attributable to anthropogenic climate change. Thus, the storyline approach can quantify the magnitude of the anthropogenic effect, but only for that particular event. This could be useful for liability, or for planning if historical events are used as benchmarks for resilience. (It may be difficult to convince people to invest in defences against a hypothetical risk, but easier to do so if an event has previously occurred, and so clearly could occur again, potentially with greater impact.)

A limitation of this approach is that it is only a partial attribution, in that it does not address the potential change in likelihood of the dynamical situation leading to the event. The counter-argument is that it is useful to distinguish between the dynamical and purely thermodynamic factors leading to the extreme event, as they have very different levels of uncertainty. Recognizing that distinction allows the risk-based and the storyline approaches to be cast within a common framework. If the extreme event was mainly the result of a dynamical situation conducive to that extreme, then one can represent the probability of the event in the conditional manner

$$P(E) = P(E \mid D)\,P(D) + P(E \mid ND)\,P(ND), \tag{1}$$

where E is the extreme event, D is the dynamical situation, and ND is not the dynamical situation (i.e. the complement of D). For small changes, the change in probability from climate change is then

$$\delta P(E) = P(D)\,\delta P(E \mid D) + P(E \mid D)\,\delta P(D) + \delta\left[P(E \mid ND)\,P(ND)\right]. \tag{2}$$

The risk-based approach estimates δP(E), or sometimes δP(E,D), the change in the joint occurrence of E and D (e.g. the combination of high temperature and anti-cyclonic circulation anomaly used by [8••] for the 2010 Russian heat wave). The dynamically conditioned attribution, in contrast, estimates δP(E|D); this is equivalent to the ‘circulation analogues’ approach described earlier and illustrated in Fig. 5, where anthropogenic changes in temperature for a particular extreme winter were estimated after conditioning on the circulation regime. The signal-to-noise of this estimate can be expected to be large, since the conditioning (especially if on a specific synoptic situation) eliminates most of the dynamical variability; the concept is illustrated in Fig. 3c. The product P(D)δP(E|D) is simply the change in probability of the extreme event, assuming no change in occurrence of the dynamical situation that led to the event. The justification for the dynamically conditioned approach is that the latter change in occurrence, δP(D), is highly uncertain and best assumed to be zero unless there are strong grounds for assuming something else [16••]. In any case, its impact on δP(E) depends on the ratio of the signal-to-noise of the dynamical change, δP(D)/P(D), to that of the thermodynamic change, δP(E|D)/P(E|D). Since this ratio can generally be expected to be small, the neglect of this term is not unreasonable. There is also the last term in eq. 2, but if D was a necessary condition for the occurrence of the extreme, then P(E|ND) ≈ 0 and this term will be negligible. Since P(E,D) = P(E|D)P(D), neglect of the last term in eq. 2 is implicit in the risk-based approach when an event is defined by the joint occurrence P(E,D).
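The bookkeeping of eq. 2 can be checked numerically. The sketch uses hypothetical probabilities in which D is necessary for E, so P(E|ND) = 0. It does three things:

1. Computes the three first-order terms.
2. Verifies that the ratio of the dynamical to the thermodynamic term equals [δP(D)/P(D)]/[δP(E|D)/P(E|D)].
3. Compares the first-order total with the exact change from eq. 1.

The function names are illustrative.

```python
def eq2_terms(p_e_d, p_d, p_e_nd, dp_e_d, dp_d, dp_e_nd):
    """First-order terms of eq. 2: returns (thermodynamic, dynamic, non-D) contributions."""
    p_nd = 1.0 - p_d
    thermodynamic = p_d * dp_e_d             # P(D) dP(E|D)
    dynamic = p_e_d * dp_d                   # P(E|D) dP(D)
    other = p_nd * dp_e_nd - p_e_nd * dp_d   # first order of d[P(E|ND)P(ND)], with dP(ND) = -dP(D)
    return thermodynamic, dynamic, other


def exact_change(p_e_d, p_d, p_e_nd, dp_e_d, dp_d, dp_e_nd):
    """Exact dP(E) from eq. 1 evaluated before and after the changes."""
    before = p_e_d * p_d + p_e_nd * (1.0 - p_d)
    after = (p_e_d + dp_e_d) * (p_d + dp_d) + (p_e_nd + dp_e_nd) * (1.0 - p_d - dp_d)
    return after - before


# Hypothetical numbers: D occurs 5% of the time and is necessary for E (P(E|ND) = 0);
# climate change raises P(E|D) from 0.20 to 0.22 and P(D) from 0.050 to 0.051.
args = dict(p_e_d=0.20, p_d=0.05, p_e_nd=0.0, dp_e_d=0.02, dp_d=0.001, dp_e_nd=0.0)
thermo, dyn, other = eq2_terms(**args)
print(f"thermodynamic {thermo:.2e}, dynamic {dyn:.2e}, other {other:.1e}")
print(f"dynamic/thermodynamic = {dyn / thermo:.2f}")  # (dP(D)/P(D)) / (dP(E|D)/P(E|D))
print(f"first-order total {thermo + dyn + other:.2e} vs exact {exact_change(**args):.2e}")
```

Here P(E|D) rises by 10 % in relative terms against a 2 % relative increase in P(D). The dynamical term is therefore one fifth of the thermodynamic one. The gap between the first-order and exact totals is the neglected second-order product δP(E|D)δP(D).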

It may be noted that this approach is analogous to specifying the meteorology and quantifying the impact of a chemical change on atmospheric composition, which assumes that the composition change is too small to appreciably affect the meteorology. This was used by [33] to quantify the contribution of changes in ozone-depleting substances to the observed total-ozone record on a year-by-year basis, i.e. deterministically, rather than only statistically as would be the case with a free-running model.

For a weather extreme that is predictable, the dynamically conditioned approach can be implemented within a weather model that is capable of simulating the extreme in question. One of the great advantages of this approach is that one can obtain a credible estimate of δP(E|D). The main uncertainty probably lies in the specification of the counter-factual thermodynamic environment, but that is an issue for any attribution study. The concept is illustrated in Fig. 6, which shows the impact of cooler SSTs on re-forecasts of hurricane Sandy. Of course, since the atmosphere is chaotic, any small difference in conditions will lead to a difference in the outcome, and if the observed outcome was extreme, then one might generically expect a weakened extreme from any perturbation. This potential pitfall can be easily guarded against by also making a perturbation in the opposite direction. Lackmann [35] applied this approach to Sandy, finding that the hurricane’s intensity would have been slightly weaker had it occurred in 1900, but would be substantially greater if it re-occurred in 2100. Another application of this approach is that of [36•], who ran a nested convection-resolving model, constrained by the large-scale circulation, to simulate the 2012 Krymsk precipitation extreme. Remarkably, they identified a bifurcation whereby the mesoscale system leading to the extreme could only occur for Black Sea temperatures above a certain threshold, and they argued that the exceedance of that threshold was of anthropogenic origin.
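A deliberately simple, hypothetical response function shows why a perturbation in the opposite direction helps. It is an intensity index with two parts:

- a linear dependence on an SST perturbation, which is the signal;
- a negative quadratic term, standing in for the generic weakening of an extreme under any perturbation.

This is a deterministic caricature. With a chaotic model, each response would itself be an ensemble mean.

```python
def toy_intensity(dsst, slope=2.0, curvature=5.0):
    """Hypothetical storm-intensity index vs SST perturbation (K).

    The linear term is the 'signal'; the negative quadratic term mimics the generic
    weakening expected from any perturbation when the observed outcome was extreme."""
    return 100.0 + slope * dsst - curvature * dsst ** 2


def one_sided_sensitivity(response, delta):
    """Sensitivity inferred from a single (cooling) perturbation: biased by curvature."""
    return (response(0.0) - response(-delta)) / delta


def two_sided_sensitivity(response, delta):
    """Warm-minus-cold difference: the even-order (generic weakening) terms cancel."""
    return (response(delta) - response(-delta)) / (2.0 * delta)


delta = 1.0
print(one_sided_sensitivity(toy_intensity, delta))  # 7.0: overstates the slope of 2
print(two_sided_sensitivity(toy_intensity, delta))  # 2.0: recovers it
print(toy_intensity(delta) < toy_intensity(0.0) and toy_intensity(-delta) < toy_intensity(0.0))  # True
```

A single cooling experiment attributes the curvature to the SST signal and overstates the sensitivity. The warm-minus-cold difference cancels the even-order terms. If both perturbations weaken the storm, that is the tell-tale sign of the pitfall.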

Fig. 6. Effect of warmer sea-surface temperatures on hurricane Sandy. The two panels show the ensemble mean ECMWF forecast of mean sea level pressure (showing only contours at or below 990 hPa, at 5 hPa intervals) from 24 October 2012 verifying on 29 October 2012, using (a) observed SSTs and (b) climatological SSTs over the previous 20 years. The SSTs are shown in the colours, with the 26 °C contour indicated. The unusually warm SSTs along the US coast in 2012 led to exceptionally strong surface latent heat fluxes which fueled a more powerful storm. To the extent that the warmer SSTs are partly anthropogenic, this was a contributor to Sandy’s intensity. From [34]. Copyright American Meteorological Society, used with permission

As illustrated by Fig. 3c, conditioning on the dynamical situation leading to the event can convert necessary causation to sufficient causation, in which case even a single event can distinguish between alternative hypotheses. For example, [37] argued that the exceptionally warm European fall/winter of 2006/2007 could not have occurred, in conjunction with the observed circulation anomaly, without anthropogenic warming. This then ties the attribution directly to the observed event, rather than being only probabilistic. The direct confrontation with data as an essential component of the attribution is a very attractive feature of this approach, as is its emphasis on a physically based causal narrative.
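Fig. 3c can be reproduced in a hypothetical linear-Gaussian model. The quantity of interest is X = A·D + noise, where D is a standard-normal dynamical index and climate change adds a mean shift. The sketch computes the probability of sufficient causation for the observed event, using the standard counterfactual form (assumed here). It does so first unconditionally and then conditioned on the observed value of D. All parameters are illustrative.

```python
from math import hypot
from statistics import NormalDist

STD_NORMAL = NormalDist()


def exceedance(x, mu, sigma):
    """P(X > x) for X ~ N(mu, sigma^2)."""
    return STD_NORMAL.cdf((mu - x) / sigma)


def prob_sufficient(p0, p1):
    """PS = max(0, 1 - (1 - p1)/(1 - p0)), the assumed counterfactual definition."""
    return max(0.0, 1.0 - (1.0 - p1) / (1.0 - p0))


# Hypothetical model: X = A*D + noise (+ SHIFT in the factual world),
# with D ~ N(0, 1) a dynamical index and noise ~ N(0, S^2).
A, S, SHIFT = 2.0, 0.5, 1.0
D_OBS = 2.0                  # the observed (strong) dynamical situation
EVENT = A * D_OBS + SHIFT    # observed magnitude

# Unconditional: D is part of the noise, so the spread is sqrt(A^2 + S^2).
sd_all = hypot(A, S)
ps_all = prob_sufficient(exceedance(EVENT, 0.0, sd_all), exceedance(EVENT, SHIFT, sd_all))

# Conditioned on D = D_OBS: only the residual noise S remains.
mu0 = A * D_OBS
ps_cond = prob_sufficient(exceedance(EVENT, mu0, S), exceedance(EVENT, mu0 + SHIFT, S))
print(f"PS unconditional = {ps_all:.3f}, conditioned on D = {ps_cond:.3f}")
```

Conditioning removes the dynamical part of the variance. The same shift that is a minor factor unconditionally (PS ≈ 0.02) becomes close to decisive once D is fixed (PS ≈ 0.49).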

It may be that circulation changes are expected to be important in the future occurrence of an extreme. An example is provided in Fig. 7, where the spread in future cold-season Mediterranean drying—a model prediction with enormous socioeconomic implications for Europe—across the CMIP5 models is almost entirely explained by the spread in the circulation response [38]. In this case, eq. 2 is still informative because it allows one to separately estimate the uncertainty associated with the thermodynamic and dynamic aspects of climate change. Particular choices of δP(D) could be considered as plausible storylines.
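The statement that circulation explains most of the inter-model spread rests on a regression across models. The sketch computes the fraction of inter-model variance in a precipitation change that is explained by a circulation index. It uses a synthetic 30-member ensemble with made-up coefficients, not CMIP5 data.

```python
import random
from statistics import mean


def r_squared(x, y):
    """Fraction of the variance of y explained by a least-squares line in x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


# Synthetic 30-member "ensemble" (hypothetical numbers, not CMIP5 output):
# each member's precipitation change is mostly set by its circulation change.
rng = random.Random(7)
wind_change = [rng.gauss(-0.5, 0.3) for _ in range(30)]                 # hypothetical index change
precip_change = [10.0 * u + rng.gauss(0.0, 1.0) for u in wind_change]  # hypothetical % change

print(f"inter-model variance explained by circulation: {r_squared(wind_change, precip_change):.0%}")
```

Once such a relation is established, one possible way to define plausible storylines is to pick a low or high circulation response along the regression line.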

Fig. 7. Dependence of cold-season Mediterranean drying on the circulation response to climate change. a The distributions of yearly cold-season precipitation anomalies over the Mediterranean basin for all CMIP5 models in the historical period (1976–2005), relative to their climatological mean (black solid), and the projected anomalies during 2070–2099 under RCP8.5 for the 20 % of models with the strongest (dashed grey) and weakest (solid grey) circulation responses to climate change, where the circulation response is measured by the lower tropospheric (850 hPa) zonal wind change over North Africa. b The relation between the projected changes in cold-season Mediterranean precipitation, and in North Africa zonal wind, across the different CMIP5 models. The changes in circulation explain about 85 % of the CMIP5 mean precipitation response and 80 % of the variance in the inter-model spread. Figure courtesy of Giuseppe Zappa, adapted from [38]

Conclusions

In climate science, we are accustomed to striving for quantitative answers, but it is important to appreciate that being quantitative is not necessarily the same thing as being rigorous [39]. In particular, it is essential to distinguish between quantifiable uncertainty and Knightian (i.e. deep) uncertainty [40]. Uncertainty associated with sampling variance is quantifiable, e.g. through bootstrapping methods, but many of the uncertainties associated with climate change (especially the deep uncertainties associated with the atmospheric circulation response to climate change) are not easily quantifiable. Examining the sensitivity of a result to the choice of climate model, as is becoming common practice in risk-based approaches [19], is an important first step in determining robustness. However, model spread is not a quantification of model uncertainty because a multi-model ensemble does not represent a meaningful probability distribution [41]. It has therefore been argued that the quest for more accurate climate model predictions is illusory and that instead we need to be using models for understanding, not prediction [42, 43].
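Sampling uncertainty of the kind that bootstrapping can quantify is easy to sketch. The approach is to resample the factual and counter-factual ensembles with replacement and recompute the probability ratio each time. The ensembles below are synthetic Gaussian samples with hypothetical sizes and shift, and the function names are illustrative. The resulting interval reflects only sampling variance. It says nothing about model error or the Knightian uncertainty discussed above.

```python
import random


def exceed_fraction(sample, threshold):
    """Fraction of sample members exceeding the event threshold."""
    return sum(x > threshold for x in sample) / len(sample)


def bootstrap_ratio_interval(factual, counterfactual, threshold, n_boot=500, level=0.95, seed=0):
    """Percentile bootstrap interval for the probability ratio p1/p0.

    Resamples each ensemble with replacement; a resample with no counter-factual
    exceedances gives an infinite ratio, which sorts to the top."""
    rng = random.Random(seed)
    ratios = []
    for _ in range(n_boot):
        f = rng.choices(factual, k=len(factual))
        c = rng.choices(counterfactual, k=len(counterfactual))
        p0 = exceed_fraction(c, threshold)
        ratios.append(float("inf") if p0 == 0 else exceed_fraction(f, threshold) / p0)
    ratios.sort()
    tail = (1.0 - level) / 2.0
    return ratios[int(tail * n_boot)], ratios[int((1.0 - tail) * n_boot) - 1]


# Synthetic ensembles (hypothetical): counter-factual N(0, 1), factual shifted by 0.5.
rng = random.Random(3)
counterfactual = [rng.gauss(0.0, 1.0) for _ in range(2000)]
factual = [rng.gauss(0.5, 1.0) for _ in range(2000)]

point = exceed_fraction(factual, 2.0) / exceed_fraction(counterfactual, 2.0)
lo, hi = bootstrap_ratio_interval(factual, counterfactual, 2.0)
print(f"p1/p0 = {point:.2f} (95% bootstrap interval {lo:.2f} to {hi:.2f})")
```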

The risk-based approach to extreme event attribution is inherently probabilistic and does not claim to attribute the specific event that inspired the study; indeed, in [12], the observed event was excluded from the analysis to avoid selection bias, and the results concerned observed changes prior to the event itself, and expected future changes, rather than the event itself. Such analyses are clearly useful for policy and planning, and potentially also for liability [11, 44], if they can be established to be credible. However, for weather-related extremes, converting a weather question into a climate question by abstracting the particular event to an event class (e.g. regarding the extreme precipitation across a GCM grid cell as a proxy for extreme precipitation in a mountain valley or canyon) could be seen as substituting a simple problem in place of a complex one [45], and thus as falling prey to Whitehead’s fallacy of ‘misplaced concreteness’ [40]. If the quantitative estimates of altered risk are sensitive to the spatiotemporal footprint of the event, as they almost certainly will be, then the quantification provided by the climate model may not be relevant at the spatiotemporal scale of the extreme weather event itself. Furthermore, if dynamical aspects of climate change are crucial to the model result, the credibility of these changes needs to be established. The ideal situation is when a model can reliably simulate the dynamics leading to the extreme, and the modelled effect of climate change is mainly occurring through well-represented thermodynamic processes.
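In a risk-based analysis, the change in likelihood is commonly summarized by a probability ratio (PR) or by the fraction of attributable risk (FAR). The following is a minimal sketch. It assumes a Gaussian variable whose mean shifts by a fixed amount, and it uses the exceedance threshold as a crude stand-in for the event definition. The function names are illustrative only. Even in this idealized case, the quantified change in risk depends on how the event class is defined.

```python
from math import erfc, sqrt

def exceedance(threshold, mean=0.0, sigma=1.0):
    """P(X > threshold) for X ~ Normal(mean, sigma**2)."""
    return 0.5 * erfc((threshold - mean) / (sigma * sqrt(2.0)))

def risk_metrics(threshold, shift, sigma=1.0):
    """Probability ratio PR = P1/P0 and fraction of attributable risk FAR = 1 - P0/P1.

    P0: exceedance probability without the shift (counterfactual world);
    P1: the same with the mean shifted by `shift` (factual world).
    """
    p0 = exceedance(threshold, 0.0, sigma)
    p1 = exceedance(threshold, shift, sigma)
    return p1 / p0, 1.0 - p0 / p1

# The same 0.3-sigma shift gives different answers for different event definitions
for threshold in (1.0, 2.0, 3.0):
    pr, far = risk_metrics(threshold, shift=0.3)
    print(f"threshold={threshold}: PR={pr:.2f}, FAR={far:.2f}")
```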

The storyline approach to event attribution has the merit of being strongly anchored in a physically based causal narrative, at the price of not addressing the potential change in likelihood of the dynamical situation leading to the event. It has been argued here that this apparent weakness may actually be a strength, insofar as it explicitly distinguishes between quantifiable risk [through δP(E|D)] and Knightian uncertainty [through δP(D)]. In this respect, the storyline approach is not so much ignoring the possibility of a dynamical component to climate change as treating it separately from the purely thermodynamic changes, about which there is much higher confidence. Hazeleger et al. [46•] have recently advocated a similar approach for climate projections, within a transdisciplinary framework. This separation has the further advantage that other anthropogenic factors (i.e. apart from climate change) can be explicitly included in the analysis. (This can be done in the risk-based approach too, of course, but the effects would be more challenging to isolate because of the lower signal-to-noise ratio.) For example, rather than removing urban heat-island effects from the data in order to isolate the ‘true’ climate signal, would it not be more useful to include them in the analysis (since those effects do kill people) and to understand how the different factors combine?
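The separation between the two terms can be made explicit with a short derivation. This is a sketch under the simplifying assumption that the event E can occur only under the dynamical conditions D, so that P(E) = P(E|D) P(D). The notation follows the δP(E|D) and δP(D) used in the paragraph above.

```latex
\begin{aligned}
P(E) &= P(E \mid D)\,P(D),\\
\delta P(E) &= \delta P(E \mid D)\,P(D) + P(E \mid D)\,\delta P(D) + \delta P(E \mid D)\,\delta P(D),\\
\frac{\delta P(E)}{P(E)} &= \frac{\delta P(E \mid D)}{P(E \mid D)} + \frac{\delta P(D)}{P(D)} + \frac{\delta P(E \mid D)}{P(E \mid D)}\,\frac{\delta P(D)}{P(D)}.
\end{aligned}
```

A storyline analysis conditioned on D estimates the first (thermodynamic) term, where confidence is high. It leaves the second (dynamical) term, together with the small cross term, to a separate assessment.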

Of course, the two approaches to extreme event attribution are not mutually exclusive and, as argued here, can be cast within a common framework; there is no reason why they could not be used in a complementary fashion, thereby bringing together climate-oriented and weather-oriented perspectives. Moreover, conditioning can be done to various degrees. Indeed, Otto et al. [17•] argue that for Africa, where inter-annual variability is strongly controlled by SSTs, an SST-conditioned attribution may in some cases be more useful to users than an unconditioned attribution, since observed events serve as a benchmark for resilience that people can relate to. Conditioning by SSTs is merely the first step towards conditioning by circulation, and ultimately by synoptic situation. The most useful level of conditioning will depend on the question being asked and on the confidence one has in the resulting answer.
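How the degree of conditioning changes the answer can be illustrated with a toy model. The following is a minimal sketch that assumes an additive Gaussian model T = D + N + shift. Here D is a circulation component, N is everything else, and the thermodynamic shift leaves D unchanged. The model and the function names are hypothetical and are not taken from the studies cited.

```python
from math import erfc, hypot, sqrt

def tail(z):
    """Standard normal upper-tail probability Q(z)."""
    return 0.5 * erfc(z / sqrt(2.0))

def probability_ratio(x, shift, sd_dyn, sd_noise, d=None):
    """PR for the event T > x in a toy model T = D + N + shift.

    D ~ Normal(0, sd_dyn**2) is the dynamical (circulation) component and
    N ~ Normal(0, sd_noise**2) is everything else; `shift` is a purely
    thermodynamic change that does not alter D.
    d=None  -> unconditioned: D is treated as noise.
    d=value -> conditioned on the observed circulation D = d.
    """
    if d is None:
        scale, centre = hypot(sd_dyn, sd_noise), 0.0
    else:
        scale, centre = sd_noise, d
    p1 = tail((x - centre - shift) / scale)  # with the shift
    p0 = tail((x - centre) / scale)          # without the shift
    return p1 / p0

# Same event and shift, two levels of conditioning
print(probability_ratio(3.0, 0.3, sd_dyn=0.8, sd_noise=0.6))
print(probability_ratio(3.0, 0.3, sd_dyn=0.8, sd_noise=0.6, d=2.0))
```

In this toy setting, conditioning on an anomalous circulation greatly raises the baseline probability of the event while the thermodynamic shift is held fixed. Whether the conditioned or the unconditioned number is the relevant one depends on the question being asked.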

Notes

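For the case of a shifted Gaussian shown in Fig. 3a, it is easy to show that, for a small shift, the relative change in frequency approximately equals the relative change in magnitude times the square of the normalized magnitude (the approximation improving further into the tail). For example, the relative change in frequency is a factor of 9 greater than the relative change in magnitude for a 3σ event.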
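One way to obtain this result is sketched below. The sketch assumes that the event magnitude X is measured from the unshifted mean of a Gaussian with standard deviation σ and that the mean shifts by a small amount δ. It also uses the large-z (Mills ratio) approximation to the tail. The argument in the article may differ in detail.

```latex
\begin{aligned}
z &= X/\sigma, \qquad P = Q(z) = \int_z^{\infty} \varphi(t)\,dt, \qquad \varphi(t) = \frac{e^{-t^2/2}}{\sqrt{2\pi}},\\
\delta P &\approx \varphi(z)\,\frac{\delta}{\sigma} \quad \text{(mean shifted by a small } \delta\text{)},\\
Q(z) &\approx \frac{\varphi(z)}{z} \quad (z \gg 1),\\
\frac{\delta P}{P} &\approx z\,\frac{\delta}{\sigma} = z^{2}\,\frac{\delta}{X}.
\end{aligned}
```

For z = 3, the first-order factor without the tail approximation, zφ(z)/Q(z), is about 9.8 rather than 9. The factor of 9 should therefore be read as the leading-order estimate.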

References

Papers of particular interest, published recently, have been highlighted as: • Of importance; •• Of major importance

Lorenz EN. Available potential energy and the maintenance of the general circulation. Tellus. 1955;7:157–67.

Pithan F, Mauritsen T. Arctic amplification dominated by temperature feedbacks in contemporary climate models. Nat Geosci. 2014;7:181–4.

Shepherd TG. Dynamics of temperature extremes. Nature. 2015;522:425–7.

Coumou D, Robinson A, Rahmstorf S. Global increase in record-breaking monthly-mean temperatures. Clim Chang. 2013;118:771–82.

Sippel S, Zscheischler J, Heimann M, Otto FEL, Peters J, Mahecha MD. Quantifying changes in climate variability and extremes: pitfalls and their overcoming. Geophys Res Lett. 2015;42:1–9.

Hansen J, Sato M, Ruedy R. Perception of climate change. Proc Natl Acad Sci U S A. 2012;109:E2415–23.

Hegerl GC, Hoegh-Guldberg O, Casassa G, Hoerling M, Kovats S, Parmesan C, Pierce D, Stott P. Good practice guidance paper on detection and attribution related to anthropogenic climate change. In: Stocker TF, Field CB, Qin D, Barros V, Plattner G-K, Tignor M, Midgley PM, Ebi KL, editors. Report of the IPCC Expert Meeting on Detection and Attribution Related to Anthropogenic Climate Change. Geneva, Switzerland; 2010.

Otto FEL, Massey N, van Oldenborgh GJ, Jones RG, Allen MR. Reconciling two approaches to attribution of the 2010 Russian heat wave. Geophys Res Lett. 2012;39:L04702. A landmark paper which resolved the apparent discrepancy between two published studies of the 2010 Russian heat wave, one of which focused on changes in frequency of occurrence and found an anthropogenic effect, and the other of which focused on event magnitude and found natural variability to be the dominant driver. Should be required reading, because recent studies of extreme events still seem to fall into this trap.

Hoskins BJ, James IN. Fluid dynamics of the mid-latitude atmosphere. Wiley-Blackwell; 2014. 432 pp.

Alexander LV, Jones PD. Updated precipitation series for the UK and discussion of recent extremes. Atmos Sci Lett. 2001. doi:10.1006/asle.2001.0025.

Allen MR. Liability for climate change. Nature. 2003;421:891–2.

Stott PA, Stone DA, Allen MR. Human contribution to the European heatwave of 2003. Nature. 2004;432:610–4.

Hoerling M, Kumar A, Dole R, Nielsen-Gammon JW, Eischeid J, Perlwitz J, et al. Anatomy of an extreme event. J Clim. 2013;26:2811–32. A pioneering study which is an exemplar of the storyline approach applied to extreme meteorological events, here the 2011 Texas drought/heat wave. Although the authors emphasize the role of natural variability, they also make plausible estimates of the anthropogenic contribution.

Shepherd TG. Atmospheric circulation as a source of uncertainty in climate change projections. Nat Geosci. 2014;7:703–8. An exposition of the basic reasons why dynamic aspects of climate change are so much more uncertain than thermodynamic aspects.

Stocker TF, Qin D, Plattner G-K, Tignor M, Allen SK, Boschung J, et al., editors. Climate change 2013: the physical science basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge: Cambridge University Press; 2014. As with all IPCC reports, this represents the latest scientific consensus on the level of confidence that can be expressed concerning the various aspects of climate change, past and future, and is thus a key reference point.

Trenberth KE, Fasullo JT, Shepherd TG. Attribution of climate extreme events. Nat Clim Chang. 2015;5:725–30. Argues that the risk-based approach to extreme event attribution is prone to type II errors of inference (i.e. false negatives) and thus that the null hypothesis should instead be known thermodynamic aspects of climate change. Thereby argues for the storyline approach as the most effective way to quantify the climate-change contribution to particular meteorological extreme events.

Otto FEL, Boyd E, Jones RG, Cornforth RJ, James R, Parker HR, et al. Attribution of extreme weather events in Africa: a preliminary exploration of the science and policy implications. Clim Chang. 2015. doi:10.1007/s10584-015-1432-0. Argues that the user perspective should drive the framing of event attribution studies, since different framings can lead to very different estimates of the change in risk. Although their focus is on Africa, the findings are of course more general.

Demory ME, Vidale PL, Roberts MJ, Berrisford P, Strachan J, Schiemann R, et al. The role of horizontal resolution in simulating drivers of the global hydrological cycle. Clim Dyn. 2014;42:2201–25.

King AD, van Oldenborgh GJ, Karoly DJ, Lewis SC, Cullen H. Attribution of the record high Central England temperature of 2014 to anthropogenic influences. Environ Res Lett. 2015;10:054002.

Pearl J. Causality: models, reasoning and inference. 2nd ed. Cambridge: Cambridge University Press; 2009. 484 pp.

Barriopedro D, Fischer EM, Luterbacher J, Trigo RM, García-Herrera R. The hot summer of 2010: redrawing the temperature record map of Europe. Science. 2011;332:220–4.

Hannart A, Pearl J, Otto FEL, Naveau P, Ghil M. Causal counterfactual theory for the attribution of weather and climate-related events. Bull Am Meteorol Soc. 2015. doi:10.1175/BAMS-D-14-00034.1. A lucid explanation of how Pearl’s theory of causal inference is relevant to extreme event attribution, with the commonly used FAR approach being a special case within a more general framework. Emphasizes the crucial distinction between necessary and sufficient causation, and in particular that several causal factors can have a FAR approaching unity (thus, a FAR approaching unity should not be interpreted as sufficient causation). Applies the concepts to the 2003 European heat wave.

Deser C, Phillips A, Bourdette V, Teng HY. Uncertainty in climate change projections: the role of internal variability. Clim Dyn. 2012;38:527–46. A landmark paper which emphasized the potentially enormous role of natural variability for regional aspects of climate change even over multi-decadal timescales, and the generally small signal-to-noise ratio of dynamically driven changes. This implies that the understanding of any dynamically driven extreme must take into account the role of natural variability.

Zappa G, Hoskins BJ, Shepherd TG. Improving climate change detection through optimal seasonal averaging: the case of the North Atlantic jet and European precipitation. J Clim. 2015;28:6381–97.

Barnes EA, Polvani LM. CMIP5 projections of Arctic amplification, of the North America/North Atlantic circulation, and of their relationship. J Clim. 2015;28:5254–71.

Deser C, Magnusdottir G, Saravanan R, Phillips A. The effects of North Atlantic SST and sea ice anomalies on the winter circulation in CCM3. Part II: direct and indirect components of the response. J Clim. 2004;17:877–89.

Hoskins BJ, Woollings T. Persistent extratropical regimes and climate extremes. Curr Clim Change Rep. 2015;1:115–24.

Cattiaux J, Vautard R, Cassou C, Yiou P, Masson-Delmotte V, Codron F. Winter 2010 in Europe: a cold extreme in a warming climate. Geophys Res Lett. 2010;37:L20704. A landmark paper which used a ‘circulation analogue’ approach to condition observed temperature variations on the atmospheric circulation, thereby greatly increasing the signal-to-noise of detected warming. In particular, they showed that the cold European winter of 2010 was warmer than it would have been without climate change. Thus an early example of the storyline approach to extreme event attribution.

Diffenbaugh NS, Swain DL, Touma D. Anthropogenic warming has increased drought risk in California. Proc Natl Acad Sci U S A. 2015;112:3931–6. A nice study of the recent California drought, which argues from a risk perspective based on the relatively certain nature of thermodynamic aspects of climate change and the relatively uncertain nature of the dynamic aspects.

Chang EKM, Zheng C, Lanigan P, Yau AMW, Neelin JD. Significant modulation of variability and projected change in California winter precipitation by extratropical cyclone activity. Geophys Res Lett. 2015;42:5983–91.

Herring SC, Hoerling MP, Kossin JP, Peterson TC, Stott PA. Explaining extreme events of 2014 from a climate perspective. Bull Am Meteorol Soc. 2015;96(12):S1–172.

Nicholls N. The insignificance of significance testing. Bull Am Meteorol Soc. 2001;82:981–6.

Shepherd TG, Plummer DA, Scinocca JF, Hegglin MI, Fioletov VE, Reader MC, et al. Reconciliation of halogen-induced ozone loss with the total-column ozone record. Nat Geosci. 2014;7:443–9.

Magnusson L, Bidlot J-R, Lang STK, Thorpe A, Wedi N. Evaluation of medium-range forecasts for Hurricane Sandy. Mon Weather Rev. 2014;142:1962–81.

Lackmann GM. Hurricane Sandy before 1900 and after 2100. Bull Am Meteorol Soc. 2015;96:547–59.

Meredith EP, Semenov VA, Maraun D, Park W, Chernokulsky AV. Crucial role of Black Sea warming in amplifying the 2012 Krymsk precipitation extreme. Nat Geosci. 2015;8:615–20. An interesting study of an intense rainfall event, which uses a weather model conditioned on the large-scale circulation to argue that warming of the Black Sea has revealed a tipping point in the local mesoscale meteorology. Whether or not the study stands up, it shows the powerful potential of this kind of approach.

Yiou P, Vautard R, Naveau P, Cassou C. Inconsistency between atmospheric dynamics and temperatures during the exceptional 2006/2007 fall/winter and recent warming in Europe. Geophys Res Lett. 2007;34:L21808.

Zappa G, Hoskins BJ, Shepherd TG. The dependence of wintertime Mediterranean precipitation on the atmospheric circulation response to climate change. Environ Res Lett. 2015;10:104012.

Silver N. The signal and the noise. Penguin; 2012. 545 pp.

Smith LA, Stern N. Uncertainty in science and its role in climate policy. Phil Trans R Soc A. 2011;369:1–24.

Knutti R, Masson D, Gettelman A. Climate model genealogy: generation CMIP5 and how we got there. Geophys Res Lett. 2013;40:1194–9.

Stainforth DA, Allen MR, Tredger ER, Smith LA. Confidence, uncertainty and decision-support relevance in climate predictions. Phil Trans R Soc A. 2007;365:2145–61.

Dessai S, Hulme M, Lempert R, Pielke Jr R. Do we need better predictions to adapt to a changing climate? Eos. 2009;90:111–2.

James R, Otto F, Parker H, Boyd E, Cornforth R, Mitchell D, et al. Characterizing loss and damage from climate change. Nat Clim Chang. 2014;4:938–9.

Kahneman D. Thinking, fast and slow. New York: Farrar, Straus and Giroux; 2011. 512 pp.

Hazeleger W, van den Hurk BJJM, Min E, van Oldenborgh GJ, Petersen AC, Stainforth DA, et al. Tales of future weather. Nat Clim Chang. 2015;5:107–13. Makes the case for a storyline approach to future weather (which they call ‘Tales’), in light of the manifold uncertainties which severely challenge a probabilistic approach, but also discusses its possible application to past events. Further argues for a transdisciplinary dialogue concerning weather events, since different users may have very different perspectives on what is ‘extreme’.

Acknowledgments

The author acknowledges the support provided through the Grantham Chair in Climate Science at the University of Reading, and the numerous constructive comments provided by two anonymous reviewers.

Ethics declarations

Conflict of Interest

The author states that there is no conflict of interest.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by the author.

Additional information

This article is part of the Topical Collection on Extreme Events

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Shepherd, T.G. A Common Framework for Approaches to Extreme Event Attribution. Curr Clim Change Rep 2, 28–38 (2016). https://doi.org/10.1007/s40641-016-0033-y

DOI: https://doi.org/10.1007/s40641-016-0033-y