Abstract

How sensitive the climate system is to the atmospheric concentration of CO2 is one of the most important and long-standing questions in climate science. This problem is well suited to the Bayesian approach. Early estimates were highly uncertain, but recent research appears to show some convergence, with both high and low values excluded with increasing confidence. There is, however, increasing evidence that many of these estimates ignore some significant sources of uncertainty; accounting for these correctly would probably broaden the estimates somewhat. Conversely, different lines of evidence tend to generate consistent results, and it should be possible to synthesise them so as to decrease our uncertainties.

Introduction

The equilibrium climate sensitivity (henceforth S), the equilibrium increase in global mean surface temperature in response to a doubling of the atmospheric CO2 concentration, is a fundamental parameter in determining the long-term response of the climate system to the anthropogenically induced increase in atmospheric CO2. It is a first-order determinant of the carbon budget implied by the UNFCCC “dangerous” 2 °C warming threshold that society has committed to not exceeding [8], and more generally is one of the principal parameters by which we understand the behaviour of the climate system. It has therefore long been an important topic of research, featuring prominently in the Charney report [9] and the subsequent IPCC Assessment Reports. Here, I review recent developments in Bayesian methods and applications for the estimation of S and discuss prospects for future progress. In Section “Subjective and Objective Approaches to the Bayesian Paradigm”, I introduce the topic of Bayesian probability and discuss recent results using both subjective and objective approaches to estimate S. I then discuss, in Section “Model Inadequacy”, some recent evidence which points to important limitations of these estimates. In Section “Combining Multiple Constraints”, I consider the potential for combining estimates arising from different lines of evidence into an overall synthesis, and finally, in Section “Constraints on Shape and Tails of pdfs”, I discuss how well we can constrain the probability of very high sensitivity, sometimes referred to as the problem of the “fat tail”.

Subjective and Objective Approaches to the Bayesian Paradigm

The Bayesian paradigm applies the methods and language of probability to describe uncertainties concerning the real world. The climate sensitivity is not intrinsically a random parameter; rather, it is fixed but its value is unknown to us, and it is therefore a suitable subject for such a treatment. Although linguistic imprecision can occasionally be found in the literature, under the subjective Bayesian paradigm uncertainty regarding S is not a property of the real world (i.e. “the probability of high/low sensitivity”) but is attached to the researcher (“my/our probability for high/low sensitivity”). The Bayesian paradigm has long been popular in the estimation of S (e.g. [10, 31]) and continues to feature strongly in recent research [17, 26, 30, 33]. Probabilities are typically presented in the form of a probability density function (pdf), which can readily be summarised in a variety of ways, such as credible intervals or the probability of exceeding a threshold. By far the most common application of the Bayesian approach to estimating the equilibrium climate sensitivity (including all the papers cited above) is the interpretation of the warming during the observational period, roughly the twentieth century, although it is now usual also to use the data available from the late nineteenth and early twenty-first centuries.
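As a minimal sketch of how such summaries are obtained in practice, the Python below tabulates a pdf for S on an evenly spaced grid and reads off the median, a 5–95 % credible interval and the probability of exceeding a threshold. The lognormal shape, its parameters and the 4.5 °C threshold are arbitrary illustrative assumptions, not results from any of the studies cited, and the function name `summarise_pdf` is hypothetical.

```python
import math


def summarise_pdf(grid, density, threshold):
    """Summarise an (unnormalised) pdf for S tabulated on an evenly spaced grid.

    Returns (median, (q05, q95), P(S > threshold)) using a simple
    rectangle-rule cumulative sum, so results are accurate to about one grid step.
    """
    step = grid[1] - grid[0]
    total = sum(density) * step  # normalising constant
    cdf, running = [], 0.0
    for d in density:
        running += d * step / total
        cdf.append(running)

    def quantile(p):
        # First grid point at which the cumulative probability reaches p.
        for s, c in zip(grid, cdf):
            if c >= p:
                return s
        return grid[-1]

    p_exceed = sum(d for s, d in zip(grid, density) if s > threshold) * step / total
    return quantile(0.5), (quantile(0.05), quantile(0.95)), p_exceed


# Hypothetical lognormal pdf for S with median 2.5 degC (illustration only).
grid = [0.01 * i for i in range(1, 2001)]  # S from 0.01 to 20 degC
mu, sigma = math.log(2.5), 0.4
density = [math.exp(-((math.log(s) - mu) ** 2) / (2 * sigma ** 2)) / s for s in grid]

median, (q05, q95), p_high = summarise_pdf(grid, density, threshold=4.5)
print(f"median {median:.2f} degC, 5-95% range {q05:.2f}-{q95:.2f} degC, "
      f"P(S > 4.5) = {p_high:.3f}")
```

The same function works for any gridded posterior, whatever prior and likelihood produced it.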

A powerful argument in support of the Bayesian paradigm for interpreting uncertainty comes from decision theory: any admissible decision rule (where “admissible” means that no other decision rule can be found which is at least as good in all circumstances and strictly better in some) can be viewed as the minimisation of expected loss under a Bayesian posterior (e.g. Chapter 8 of [27]). Thus, if we wish to make admissible decisions, we have little alternative but to adopt a de facto Bayesian approach. Note, however, that applying Bayes’ Theorem requires the specification of the “prior” P(S). There are two main doctrines in the selection of P(S). In the subjective Bayesian approach, P(S) represents (at least in principle) the belief of the researcher prior to making the observations and thus can be chosen at will. The credibility of the resulting posterior will of course depend on the reasonableness of the prior, and it is commonplace to test this with sensitivity analyses in which a range of priors is considered. Nevertheless, the dependence on a subjectively chosen prior is frequently a basis for criticism of the subjective Bayesian paradigm. Alternative approaches to prior specification have been developed under the umbrella term of the “objective Bayesian” approach [6, 16, 18], in which probability is viewed as a rational interpretation of the data. The goal here is to determine automatically a so-called “non-informative” prior which maximises the influence (in some mathematically definable sense) of a data set on the posterior. Although in earlier work some researchers used uniform priors on the assumption that these represented a minimally informative prior state, this is typically not the case; more recently, objective Bayesian ideas have been applied in a more sophisticated way in climate science, in particular by [20, 21].
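For reference, Bayes’ Theorem for S takes the familiar form below, where O stands for the observations (a symbol introduced here only for this illustration). The denominator does not depend on S and serves only to normalise the posterior, so the choice of prior P(S) and the likelihood P(O | S) together determine its shape.

$$P(S \mid O) = \frac{P(O \mid S)\,P(S)}{\int P(O \mid S')\,P(S')\,\mathrm{d}S'} \;\propto\; P(O \mid S)\,P(S)$$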
However, it is important to remember that even a prior that satisfies the particular mathematical properties denoted by the term “non-informative” actually conveys specific information, with direct consequences for any subsequent decision-making. As [7] note, “There is no ‘objective’ prior that represents ignorance” and additionally “every prior specification has some informative posterior or predictive implications”. This argument is explored with particular reference to climate sensitivity in [4], who demonstrate how the historically popular choice of a uniform prior carries with it an expectation of very high climate sensitivity and therefore extremely high costs (in an economic analysis) due to future climate change.
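The effect of the prior can be illustrated with a toy calculation. The Python sketch below assumes, hypothetically, that observations yield a Gaussian estimate of the feedback parameter λ = F2x/S, with F2x ≈ 3.7 W m⁻² taken as an approximate forcing for doubled CO2, and combines the resulting likelihood for S on a grid with two priors: a uniform prior on (0, 20] °C, and a Cauchy-shaped prior centred on 2.5 °C that decays at high S. All numbers, and the choice of the Cauchy shape, are illustrative assumptions rather than values from any cited study. Note that the uniform prior on its own already assigns probability 0.775 to S > 4.5 °C.

```python
import math

F2X = 3.7                   # assumed forcing for doubled CO2, W m^-2
LAM_OBS, LAM_SD = 1.3, 0.4  # hypothetical feedback estimate and 1-sigma error, W m^-2 K^-1
STEP = 0.01
GRID = [STEP * i for i in range(1, 2001)]  # S from 0.01 to 20 degC


def likelihood(s):
    """Gaussian likelihood on the feedback lambda = F2X / S, evaluated as a function of S."""
    return math.exp(-((F2X / s - LAM_OBS) ** 2) / (2 * LAM_SD ** 2))


def uniform_prior(s):
    """Uniform prior on (0, 20] degC (unnormalised)."""
    return 1.0


def cauchy_prior(s, loc=2.5, scale=3.0):
    """Illustrative Cauchy-shaped prior (unnormalised); decays like S**-2 at high S."""
    return 1.0 / (1.0 + ((s - loc) / scale) ** 2)


def posterior_summary(prior):
    """Posterior mean and 95th percentile of S on the grid (posterior proportional to likelihood * prior)."""
    post = [likelihood(s) * prior(s) for s in GRID]
    total = sum(post)
    mean = sum(s * p for s, p in zip(GRID, post)) / total
    running = 0.0
    for s, p in zip(GRID, post):
        running += p / total
        if running >= 0.95:
            return mean, s
    return mean, GRID[-1]


# Prior probability of S > 4.5 degC under the uniform prior alone.
prior_p_high = sum(1 for s in GRID if s > 4.5) / len(GRID)

results = {name: posterior_summary(prior)
           for name, prior in [("uniform", uniform_prior), ("cauchy", cauchy_prior)]}
for name, (mean, q95) in results.items():
    print(f"{name:8s} prior: posterior mean {mean:.2f} degC, 95th percentile {q95:.2f} degC")
```

Because this likelihood tends to a non-zero constant as S grows (λ = 0 lies only about three standard errors from the assumed estimate), the uniform prior lets that plateau contribute substantial posterior mass at high S, whereas the decaying prior suppresses it.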

The priors used by [20–22] decay strongly for high values of S, and the resulting posterior pdfs have median estimates a little lower than 2 °C, with 5–95 % credible intervals that range from about 1–3 °C to 1–4.5 °C. While these results are rather lower than many of those reported earlier in the literature (e.g. [10, 11]), they are not so dissimilar to recent results using the subjective paradigm [1, 17, 26, 30]. These studies found best estimates ranging from 1.8 to 2.8 °C, and the latter two generated upper 95 % bounds of 3.2 °C. This downward shift in estimates in recent years is likely due to a combination of several factors: changes in priors (which generally no longer emphasise high values so strongly); longer time series of more accurate data for both atmosphere and ocean, through extension into the past and/or the accumulation of additional years in real time; and the recent re-evaluation of aerosol forcing towards smaller magnitudes [24]. These data updates reduce the probability of high sensitivity even when a uniform prior is used.

All aspects of the research involve a number of subjective decisions, so that even the most innocuous-seeming observation is actually “theory-laden” [19]. The focus on priors should not be allowed to overshadow the importance of decisions made elsewhere in the process. One important decision in the Bayesian analyses discussed here is the choice of underlying model, which is discussed in more detail in the following section.

Model Inadequacy

Estimates of sensitivity using the warming throughout the observational record typically rely on a relatively simple model of the climate system, in which the radiative balance is described by a zero- or low-dimensional equation of the form

$$\Delta N = \Delta F - \lambda\,\Delta T,$$

in which all Δ terms are usually defined as anomalies from an assumed quasi-equilibrium pre-industrial state. ΔN is the total heat uptake (primarily by the ocean, which may be modelled in a variety of ways), ΔF is the net radiative forcing at the top of the atmosphere, ΔT is the global mean temperature anomaly and λ is the radiative feedback parameter. This model assumes that the total radiative feedback can be described by a constant feedback coefficient λ multiplied by the globally averaged surface temperature anomaly. However, much evidence has accumulated from model simulations suggesting that this may not be the case in reality. For many state-of-the-art climate models, the “effective” feedback (that is, the value of (ΔF−ΔN)/ΔT at a specific point in time) changes over time, typically (though not always) decreasing in standard scenarios of increasing greenhouse gas forcing [2, 5]. A decrease in this feedback implies that the effective sensitivity early in the warming will be lower than the equilibrium sensitivity, which suggests that methods using the historical period for estimation may underestimate the true equilibrium sensitivity. Furthermore, the sensitivity to qualitatively different forcings may also vary, and forcings may combine in nonlinear ways; there is evidence from state-of-the-art climate models that this is indeed the case for climates of the distant past [35, 36]. While these issues are an interesting challenge for climate science, they complicate our attempts to understand and predict the response to greenhouse gases. They could in principle be accounted for by using more complex climate models in the Bayesian analysis, but this would greatly increase the computational cost and complexity of the estimation process and also reduce the identifiability of the parameters, especially since the historical period is too short, and its data too uncertain, to constrain any nonlinearity strongly.
Better understanding and quantification of these nonlinearities would seem to be an important area for future research.
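Rearranging the energy balance ΔN = ΔF − λΔT gives the familiar energy-budget estimate λ = (ΔF − ΔN)/ΔT, and hence an effective sensitivity F2x/λ if that feedback were to persist to equilibrium. The Python sketch below applies this to made-up anomaly values (not observational estimates), assuming F2x ≈ 3.7 W m⁻² for doubled CO2, and then shows how a hypothetical weakening of the feedback towards equilibrium turns the historical-period estimate into an underestimate. The function names are illustrative only.

```python
F2X = 3.7  # assumed radiative forcing for doubled CO2, W m^-2


def effective_feedback(d_forcing, d_uptake, d_temp):
    """Effective feedback (dF - dN) / dT implied by dN = dF - lambda * dT."""
    return (d_forcing - d_uptake) / d_temp


def sensitivity(feedback, f2x=F2X):
    """Equilibrium warming for doubled CO2 if the feedback stayed fixed at `feedback`."""
    return f2x / feedback


# Hypothetical historical-period anomalies (illustrative only, not observations).
d_temp, d_forcing, d_uptake = 0.8, 2.0, 0.6  # K, W m^-2, W m^-2

lam_hist = effective_feedback(d_forcing, d_uptake, d_temp)  # roughly 1.75 W m^-2 K^-1
s_hist = sensitivity(lam_hist)                              # roughly 2.1 K

# Hypothetical weaker feedback reached near equilibrium.
lam_eq = 1.2
s_eq = sensitivity(lam_eq)                                  # roughly 3.1 K

print(f"historical effective sensitivity {s_hist:.2f} K vs equilibrium {s_eq:.2f} K")
print(f"relative underestimate {1 - s_hist / s_eq:.0%}")
```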

Combining Multiple Constraints

A well-established lemma of probability theory is that we always expect to learn from additional information [23]. Note that we can be unlucky, in the sense that new information can increase our uncertainty, and [12] demonstrates some situations in which observing additional years of warming could rationally lead to an increase in our uncertainty on S. However, prior to making an observation, our expectation is that it will reduce our uncertainty. In light of this, it is important to consider how to combine evidence arising from multiple sources. The issue of combining constraints on climate sensitivity was brought to prominence by [14] and [3], although multiple sets of observations had often been implicitly assumed to provide independent constraints in earlier work [10].
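Both claims can be checked on a deliberately tiny example. The Python sketch below uses a hypothetical three-point prior for S and a hypothetical binary observation (“strong” or “weak” warming), measures uncertainty by Shannon entropy, and compares the prior entropy with the posterior entropy after each outcome and with its expectation over outcomes. All probabilities are invented for illustration.

```python
import math

S_VALUES = [2.0, 3.0, 6.0]       # hypothetical candidate sensitivities, degC
PRIOR = [0.80, 0.15, 0.05]       # hypothetical prior probabilities for S_VALUES
P_STRONG_GIVEN_S = [0.1, 0.5, 0.9]  # hypothetical P(strong warming observed | S)


def entropy(probs):
    """Shannon entropy in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def posterior(prior, lik):
    """Bayes' theorem on a discrete set; returns (posterior, evidence)."""
    joint = [p * l for p, l in zip(prior, lik)]
    evidence = sum(joint)
    return [j / evidence for j in joint], evidence


prior_h = entropy(PRIOR)
outcomes = {
    "strong warming": P_STRONG_GIVEN_S,
    "weak warming": [1 - l for l in P_STRONG_GIVEN_S],
}

expected_h = 0.0
post_h = {}
for name, lik in outcomes.items():
    post, p_outcome = posterior(PRIOR, lik)
    post_h[name] = entropy(post)
    expected_h += p_outcome * post_h[name]  # average over possible outcomes
    print(f"{name}: P = {p_outcome:.2f}, posterior entropy {post_h[name]:.3f} nats")

print(f"prior entropy {prior_h:.3f}, expected posterior entropy {expected_h:.3f}")
```

With these numbers, observing strong warming raises the entropy (an unlucky outcome of the kind mentioned above), yet the expected posterior entropy is still below the prior entropy: the expected reduction equals the mutual information between S and the observation, which cannot be negative.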

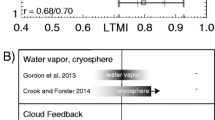

In particular, as well as the transient warming over the modern observational period, we may consider evidence from paleoclimatic records such as ice cores and sedimentary records [29], and also evidence emerging from physical principles as embodied in state-of-the-art climate system models. To first order, these two additional lines of evidence both point to a sensitivity around the canonical value of 3 °C [9]. This is perhaps a little higher than estimates based on the observational warming, but certainly highly consistent with them. Each approach, however, has significant uncertainties and inbuilt assumptions. A simple but possibly naive way to treat these estimates would be to assume that the errors and uncertainties of the different likelihoods are statistically independent. This assumption has the convenient property that the joint likelihood is then simply the product of the individual likelihoods, so combining the evidence is a simple matter of multiplying the likelihood functions together. However, this may be too optimistic: if two analyses use the same (or even similar) models, they may share biases due to model inadequacy, in which case an assumption of independence would not be appropriate. It is also possible that the assumption of independence is too pessimistic, with complementary observations generating a more precise result when properly handled [32]. It would be useful to analyse the inbuilt assumptions and uncertainties of the various methods in a clearer and more formal way, which might allow a more careful and convincing synthesis of the various lines of evidence.
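Under a Gaussian approximation (a simplification, since likelihoods for S are typically skewed), the consequences of the independence assumption can be made concrete. The Python sketch below computes the minimum-variance linear combination of two hypothetical estimates of S whose errors have correlation ρ: ρ = 0 reproduces the product-of-likelihoods result, ρ > 0 mimics shared biases, and ρ < 0 is one simple way in which combining can be more informative than independence suggests. The estimates (2.0 ± 0.6 °C and 3.0 ± 1.0 °C) and the function name `combine_two` are purely illustrative.

```python
import math


def combine_two(m1, s1, m2, s2, rho=0.0):
    """Minimum-variance (generalised least squares) combination of two estimates.

    m1, m2: central estimates; s1, s2: their standard errors;
    rho: correlation between their errors (0 means independent; assumes |rho| < 1).
    Returns (combined estimate, combined standard error).
    """
    cov = rho * s1 * s2
    denom = s1 ** 2 + s2 ** 2 - 2 * cov
    w1 = (s2 ** 2 - cov) / denom  # weight on the first estimate
    mean = w1 * m1 + (1 - w1) * m2
    var = (s1 ** 2 * s2 ** 2 - cov ** 2) / denom
    return mean, math.sqrt(var)


# Hypothetical estimates of S (degC), e.g. one observational and one paleoclimatic.
results = {rho: combine_two(2.0, 0.6, 3.0, 1.0, rho) for rho in (-0.5, 0.0, 0.5)}
for rho, (mean, sd) in results.items():
    print(f"rho = {rho:+.1f}: S = {mean:.2f} +/- {sd:.2f} degC")
```

With positively correlated errors the second estimate adds almost nothing (the combined standard error barely falls below 0.6 °C), whereas independence or negative correlation yields a marked gain; which case applies is exactly the question raised above.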

Constraints on Shape and Tails of pdfs

As discussed in Section “Subjective and Objective Approaches to the Bayesian Paradigm”, a pdf for S is formally considered to be a representation of the subjective belief of a researcher (even “objective” methods are influenced by many subjective decisions), and as such there can be no absolute constraints on its shape or form. In practice, however, there is widespread agreement on what is reasonable. It has been known for many years that observational analyses based on the modern warming period tend to generate distributions with long tails that decline only slowly at high values (e.g. [4, 13, 28]). This is primarily because the likelihood arising from data uncertainties is roughly symmetric in the radiative feedback (the inverse of sensitivity), and inverting even a Gaussian distribution on feedback results in a highly skewed distribution for sensitivity. Thus, it can be argued that any reasonable likelihood function for S arising from observations over the twentieth century will be essentially flat and non-zero for S above some (high) threshold [4]. Weitzman [34] goes further, arguing that a simple form of Bayesian learning will always generate a pdf for S which is unbounded and decays only quadratically at high values (i.e. as $S^{-2}$). When this is integrated into an economic analysis in which the negative utility due to climate change is a rapidly escalating function of warming, this “fat tail” implies an unbounded loss which will always dominate our decision-making. However, Nordhaus [25] and more recently [15] dispute various details of the analysis and present alternative interpretations with bounded cost. Whether or not the “fat tail” is inevitable in principle, this result may be best viewed as a (somewhat theoretical) problem with economics rather than a fundamental difficulty in climate science.
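The change-of-variables argument above can be checked numerically. The Python sketch below assumes a hypothetical Gaussian estimate of the feedback λ (mean 1.3, standard deviation 0.4 W m⁻² K⁻¹) and F2x ≈ 3.7 W m⁻², derives the implied density for S = F2x/λ, confirms that S²·p(S) levels off at large S (an $S^{-2}$ tail), and shows that the contribution to the expected value of S from values below an upper limit keeps growing by a roughly constant amount per tenfold increase in that limit, so the expectation diverges logarithmically. This illustrates the skewness argument only; it is not Weitzman’s derivation, and the small probability of negative λ is ignored.

```python
import math

F2X = 3.7                    # assumed forcing for doubled CO2, W m^-2
LAM_MEAN, LAM_SD = 1.3, 0.4  # hypothetical Gaussian feedback estimate, W m^-2 K^-1


def pdf_lambda(lam):
    """Gaussian density for the feedback parameter."""
    z = (lam - LAM_MEAN) / LAM_SD
    return math.exp(-0.5 * z * z) / (LAM_SD * math.sqrt(2 * math.pi))


def pdf_s(s):
    """Density of S = F2X / lambda for s > 0: p_lambda(F2X / s) * F2X / s**2."""
    return pdf_lambda(F2X / s) * F2X / s ** 2


def partial_expectation(upper, lower=0.1, n=20000):
    """Trapezoid estimate of the integral of s * p_S(s) from lower to upper (log-spaced grid)."""
    a, b = math.log(lower), math.log(upper)
    h = (b - a) / n
    total = 0.0
    for i in range(n + 1):
        s = math.exp(a + i * h)
        weight = 0.5 if i in (0, n) else 1.0
        total += weight * s * pdf_s(s) * s  # extra factor s because ds = s d(ln s)
    return total * h


# Tail check: s**2 * p_S(s) tends to the constant F2X * pdf_lambda(0).
tail_ratio = (1e5 ** 2 * pdf_s(1e5)) / (1e4 ** 2 * pdf_s(1e4))

# Growth of the partial expectation per decade of the upper limit.
m2, m3, m4 = (partial_expectation(10.0 ** k) for k in (2, 3, 4))
d1, d2 = m3 - m2, m4 - m3
limit = F2X * pdf_lambda(0.0) * math.log(10)  # asymptotic increment per decade

print(f"tail ratio {tail_ratio:.4f}; increments per decade {d1:.4f}, {d2:.4f} (limit {limit:.4f})")
```

The increments are small because the assumed feedback estimate places λ = 0 far out in its tail, but they never shrink to zero; any loss function rising faster than linearly in S makes the divergence correspondingly stronger.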
Recent analyses based on the observational record increasingly assign only very small probabilities to high values of the equilibrium sensitivity (e.g. [17, 30]), so it may be reasonable to conclude that this problem is largely resolved. However, there will always be a concern that, owing to model limitations, assessing low-probability (but high-impact) events will remain largely a matter of judgement.

Conclusion

The subjective Bayesian approach is now widely used for estimating the equilibrium climate sensitivity. Progress has been steady, although even the newest estimates have limitations. Estimates based on the recent observational record are increasingly converging on a moderate value, with a best estimate rarely far from 2–2.5 °C and a range confidently bounded between about 1 and 4.5 °C (or less). However, these estimates are themselves conditional on approximations that are now recognised to introduce significant additional uncertainties (and perhaps a bias) into the results. The real climate system is more complex than any model, and the concept of an equilibrium sensitivity may not be precisely definable in the real world. Therefore, there must be a limit to how accurately this parameter can be meaningfully estimated. Nevertheless, there is no reason to presume that we have yet reached this limit, and the equilibrium sensitivity provides a useful basis for predicting the magnitude of future climate change. There are many opportunities for improving our estimates and for better understanding and quantifying our uncertainties. One area of research that has not been explored in much detail is the synthesis of different lines of evidence, all of which inform the equilibrium sensitivity. Such an analysis has the potential to generate a more precise and credible result.

References

[1] Aldrin M, Holden M, Guttorp P, Skeie RB, Myhre G, Berntsen TK. Bayesian estimation of climate sensitivity based on a simple climate model fitted to observations of hemispheric temperatures and global ocean heat content. Environmetrics 2012;23(3):253–271. doi:10.1002/env.2140.

[2] Andrews T, Gregory JM, Webb MJ. The dependence of radiative forcing and feedback on evolving patterns of surface temperature change in climate models. J Clim 2015;28(4):1630–1648. doi:10.1175/jcli-d-14-00545.1.

[3] Annan JD, Hargreaves JC. Using multiple observationally-based constraints to estimate climate sensitivity. Geophys Res Lett 2006;33:L06704.

[4] Annan JD, Hargreaves JC. On the generation and interpretation of probabilistic estimates of climate sensitivity. Clim Change 2009. doi:10.1007/s10584-009-9715-y.

[5] Armour KC, Bitz CM, Roe GH. Time-varying climate sensitivity from regional feedbacks. J Clim 2013;26(13):4518–4534.

[6] Berger J. The case for objective Bayesian analysis. Bayesian Anal 2006;1(3):385–402.

[7] Bernardo JM, Smith AFM. Bayesian theory. Chichester: Wiley; 1994.

[8] Cancún Agreement. Framework convention on climate change: report of the conference of the parties on its 16th session, held in Cancún from 29 November to 10 December 2010. Technical report FCCC/CP/2010/7/Add.1; 2010.

[9] Charney JG. Carbon dioxide and climate: a scientific assessment. Washington: NAS; 1979.

[10] Forest CE, Stone PH, Sokolov AP, Allen MR, Webster MD. Quantifying uncertainties in climate system properties with the use of recent climate observations. Science 2002;295(5552):113–117.

[11] Frame DJ, Booth BBB, Kettleborough JA, Stainforth DA, Gregory JM, Collins M, Allen MR. Constraining climate forecasts: the role of prior assumptions. Geophys Res Lett 2005;32:L09702.

[12] Hannart A, Ghil M, Dufresne J-L, Naveau P. Disconcerting learning on climate sensitivity and the uncertain future of uncertainty. Clim Change 2013;119(3–4):585–601. doi:10.1007/s10584-013-0770-z.

[13] Hansen J, Russell G, Lacis A, Fung I, Rind D. Climate response times: dependence on climate sensitivity and ocean mixing. Science 1985;229:857–859.

[14] Hegerl GC, Crowley TJ, Hyde WT, Frame DJ. Climate sensitivity constrained by temperature reconstructions over the past seven centuries. Nature 2006;440:1029–1032.

[15] Horowitz J, Lange A. Cost–benefit analysis under uncertainty: a note on Weitzman’s dismal theorem. Energy Econ 2014;42:201–203. doi:10.1016/j.eneco.2013.12.013.

[16] Jeffreys H. Theory of probability. Oxford: Oxford University Press; 1939.

[17] Johansson DJA, O’Neill BC, Tebaldi C, Häggström O. Equilibrium climate sensitivity in light of observations over the warming hiatus. Nat Clim Change 2015;5(5):449–453. doi:10.1038/nclimate2573.

[18] Kass RE, Wasserman L. The selection of prior distributions by formal rules. J Amer Stat Assoc 1996;91(435):1343–1370. doi:10.1080/01621459.1996.10477003.

[19] Kuhn TS. The structure of scientific revolutions. Chicago: University of Chicago Press; 1962.

[20] Lewis N. An objective Bayesian improved approach for applying optimal fingerprint techniques to estimate climate sensitivity. J Clim 2013;26(19):7414–7429. doi:10.1175/jcli-d-12-00473.1.

[21] Lewis N. Objective inference for climate parameters: Bayesian, transformation-of-variables, and profile likelihood approaches. J Clim 2014;27(19):7270–7284.

[22] Lewis N. Implications of recent multimodel attribution studies for climate sensitivity. Clim Dyn 2015. doi:10.1007/s00382-015-2653-7.

[23] Lindley DV. On a measure of the information provided by an experiment. Ann Math Stat 1956;27(4):986–1005.

[24] Myhre G. Consistency between satellite-derived and modeled estimates of the direct aerosol effect. Science 2009;325(5937):187–190. doi:10.1126/science.1174461.

[25] Nordhaus WD. An analysis of the dismal theorem. Cowles Foundation Discussion Paper No. 1686; 2009.

[26] Olson R, Sriver R, Goes M, Urban NM, Matthews HD, Haran M, Keller K. A climate sensitivity estimate using Bayesian fusion of instrumental observations and an Earth system model. J Geophys Res 2012;117(D4). doi:10.1029/2011JD016620.

[27] Robert CP. The Bayesian choice: from decision-theoretic foundations to computational implementation. Springer texts in statistics. New York: Springer-Verlag; 2001.

[28] Roe GH, Armour KC. How sensitive is climate sensitivity? Geophys Res Lett 2011;38(14). doi:10.1029/2011gl047913.

[29] Rohling EJ, Sluijs A, Dijkstra HA, Köhler P, van de Wal RSW, von der Heydt AS, Beerling DJ, Berger A, Bijl PK, Crucifix M, et al. Making sense of palaeoclimate sensitivity. Nature 2012;491:683–691.

[30] Skeie RB, Berntsen T, Aldrin M, Holden M, Myhre G. A lower and more constrained estimate of climate sensitivity using updated observations and detailed radiative forcing time series. Earth Syst Dyn 2014;5(1):139–175.

[31] Tol RSJ, De Vos AF. A Bayesian statistical analysis of the enhanced greenhouse effect. Clim Change 1998;38(1):87–112.

[32] Urban NM, Keller K. Complementary observational constraints on climate sensitivity. Geophys Res Lett 2009;36:L04708.

[33] Urban NM, Keller K. Probabilistic hindcasts and projections of the coupled climate, carbon cycle, and Atlantic meridional overturning circulation system: a Bayesian fusion of century-scale observations with a simple model. Tellus A 2010;62(5):737–750.

[34] Weitzman ML. On modeling and interpreting the economics of catastrophic climate change. Rev Econ Stat 2009;91(1):1–19.

[35] Yoshimori M, Yokohata T, Abe-Ouchi A. A comparison of climate feedback strength between CO2 doubling and LGM experiments. J Clim 2009;22(12):3374–3395. doi:10.1175/2009jcli2801.1.

[36] Yoshimori M, Hargreaves JC, Annan JD, Yokohata T, Abe-Ouchi A. Dependency of feedbacks on forcing and climate state in perturbed parameter ensembles. J Clim 2011;24:6440–6455.

Acknowledgments

Thanks are due to Julia Hargreaves and three anonymous reviewers for many helpful comments and suggestions for the paper.

Conflict of interest

The corresponding author states that he has no conflict of interest.

Additional information

This article is part of the Topical Collection on Constraints on Climate Sensitivity

About this article

Cite this article

Annan, J.D. Recent Developments in Bayesian Estimation of Climate Sensitivity. Curr Clim Change Rep 1, 263–267 (2015). https://doi.org/10.1007/s40641-015-0023-5

DOI: https://doi.org/10.1007/s40641-015-0023-5