Abstract

We study the problem of allocating an indivisible object to one of several agents on the full preference domain when monetary transfers are not allowed. Our main requirement is strategy-proofness. The other properties we seek are Pareto optimality, non-dictatorship, and non-bossiness. We provide characterizations of strategy-proof rules that satisfy Pareto optimality and non-bossiness, non-dictatorship and non-bossiness, and Pareto optimality and non-dictatorship. As a consequence of these characterizations, we show that a strategy-proof rule cannot satisfy Pareto optimality, non-dictatorship, and non-bossiness simultaneously.


1 Introduction

We consider the following problem. A central agency allocates an indivisible object to one of several agents (e.g., a task to employees, a house to applicants) when monetary transfers are not possible. We study this allocation problem on the full preference domain; in particular, an agent may prefer to receive the object, prefer not to receive it, or be indifferent. In practice, agents are often indifferent when they do not know the quality or features of an object. In such situations, restricting the full preference domain to the strict one, by treating indifferent agents as if they either prefer to receive the object or prefer not to, may be costly. For example, suppose that an indifferent agent is treated as preferring the object on the strict preference domain and receives it. If some other agent actually prefers to receive the object, allocating it to the indifferent agent results in an efficiency loss. Similarly, if the indifferent agent is treated as not preferring the object, an efficient rule must not allocate the object to this agent. This introduces an artificial constraint that unnecessarily restricts the set of efficient rules when there are agents who actually prefer not to receive the object. Erdil and Ergin (2008) make a related point: they show that random tie-breaking in school choice districts in the United States adversely affects the welfare of students by introducing artificial constraints.

In this paper, our main requirement is strategy-proofness, which means that no agent can gain from reporting false preferences. This strategic robustness property is widely used to guarantee that agents reveal their true preferences. In addition to strategy-proofness, we are interested in Pareto optimality, non-dictatorship, and non-bossiness. Pareto optimality is a standard efficiency notion used in almost all economic applications, and non-dictatorship and non-bossiness are fairness properties. A rule is Pareto optimal if, at every preference profile, there is no other outcome that makes all agents weakly better off and some agent strictly better off. A rule is non-dictatorial if there is no agent who receives the object whenever he prefers to do so and does not receive it whenever he prefers not to. A rule is non-bossy if no agent can change the outcome of another agent without changing his own outcome.

We first show, interestingly, that Pareto optimality implies strategy-proofness (Proposition 1). We then prove that a rule is strategy-proof and non-bossy if and only if it is a hierarchical choice function, i.e., it is generated by a ranking function over agent-preference pairs (Proposition 2). Building on this result, we characterize strategy-proof rules as follows: a Pareto optimal and non-bossy rule is dictatorial (Proposition 3), a non-dictatorial and non-bossy rule is not Pareto optimal (Proposition 4), and a Pareto optimal and non-dictatorial rule is bossy (Proposition 5). As a result of these characterizations, we show that a strategy-proof rule cannot satisfy Pareto optimality, non-dictatorship, and non-bossiness simultaneously (Proposition 6).

2 Model and analysis

There is an indivisible object and a finite set \(N=\{1,2,\ldots ,n\}\) of agents, where \(n\ge 2\). The object can be allocated to an agent or may not be allocated at all. If the object is allocated to agent \(i\in N\), the outcome is \(x=i\); if the object is not allocated at all, the outcome is \(x=0\). Thus, the set of possible outcomes is \(N_{0}=\{0,1,2,\ldots ,n\}\). An agent \(i\in N\) may or may not receive the object, so the allocation of agent i at outcome x is \(x_{i}\in \{0,i\}\), where \(x_{i}=i\) if \(x=i\) and \(x_{i}=0\) otherwise.

The preference of agent \(i\in N\) is \(R_{i}\), a reflexive binary relation over his possible allocations \(\{0,i\}\), and the strict component of \(R_{i}\) is \(P_{i}\). If an agent prefers to receive the object, we write \(R_{i}=R_{i}^{+}\); if he is indifferent, \(R_{i}=R_{i}^{0}\); and if he prefers not to receive the object, \(R_{i}=R_{i}^{-}\). The set of preferences of agent \(i \in N\) is \({\mathfrak {R}}_{i}=\{R_{i}^{+},R_{i}^{0},R_{i}^{-}\}\), and the set of preference profiles is \({\mathfrak {R}}=\times _{i\in N}{\mathfrak {R}}_{i}\). For notational convenience and to distinguish preference types easily, we define a strict order \(\vartriangleright \) on \({\mathfrak {R}}_{i}\) by \(R_{i}^{+}\vartriangleright R_{i}^{0}\vartriangleright R_{i}^{-}\) (so that also \(R_{i}^{+}\vartriangleright R_{i}^{-}\)). For example, \(R_i^+ \vartriangleright {\tilde{R}}_{i}\) means \({\tilde{R}}_{i} \in \{R_{i}^{0},R_{i}^{-}\}\), and \({\tilde{R}}_{i} \vartriangleright R_{i}^-\) means \({\tilde{R}}_{i} \in \{R_{i}^{+}, R_{i}^{0}\}\).

If all agents prefer to receive the object, we denote the profile by \(R^{+}=(R_{1}^{+},R_{2}^{+},\ldots ,R_{n}^{+})\); if all agents are indifferent, by \(R^{0}=(R_{1}^{0},R_{2}^{0},\ldots ,R_{n}^{0})\); and if all agents prefer not to receive the object, by \(R^{-}=(R_{1}^{-},R_{2}^{-},\ldots ,R_{n}^{-})\). Moreover, when an agent is indifferent or prefers not to receive the object, we write \(R_{i}=R_{i}^{\lnot }\), where \(R_{i}^{\lnot }\in \{R_{i}^{0},R_{i}^{-}\}\). A rule \(f:{\mathfrak {R}} \rightarrow N_{0}\) is a function that assigns a feasible outcome to each preference profile. The outcome of agent i under rule f at preference profile R is \(f_{i}(R)\); i.e., when \(f(R)=x\), \(f_{i}(R)=x_{i}\).

We next define the properties studied in the paper. First, strategy-proofness requires that no agent ever gains by misrepresenting his true preferences. Formally, a rule f is strategy-proof if for all \(i\in N\), \(R\in {\mathfrak {R}}\), and \({\tilde{R}}_{i}\in {\mathfrak {R}}_{i}\), \(f_{i}(R)\,R_{i}\,f_{i}({\tilde{R}}_{i},R_{-i})\). If a rule is not strategy-proof, it is manipulable: there exist \(i\in N\), \(R\in {\mathfrak {R}}\), and \({\tilde{R}}_{i}\in {\mathfrak {R}}_{i}\) such that \(f_{i}({\tilde{R}}_{i},R_{-i})\,P_{i}\,f_{i}(R)\), and in this case agent i can manipulate f at R. Second, a rule is Pareto optimal if, at every preference profile, no other outcome makes all agents weakly better off and some agent strictly better off. Formally, a rule f is Pareto optimal if for all \(R\in {\mathfrak {R}}\), there is no \(x\in N_{0}\) such that \(x_{i}\,R_{i}\,f_{i}(R)\) for all \(i\in N\) and \(x_{j}\,P_{j}\,f_{j}(R)\) for some \(j\in N\). Third, dictatorship requires that there is an agent who receives the object whenever he prefers to receive it and does not receive it whenever he prefers not to. Formally, a rule f is dictatorial if there exists \(i\in N\) such that, for all \(R\in {\mathfrak {R}}\), \(f(R)=i\) whenever \(R_{i}=R_{i}^{+}\), and \(f_{i}(R)=0\) whenever \(R_{i}=R_{i}^{-}\). In this case, agent i is a dictator with respect to f. A rule f is non-dictatorial if there is no dictator with respect to f. Fourth, bossiness requires that there is an agent who can change another agent's outcome without changing his own. Formally, a rule f is bossy if there exist \(i,j\in N\), \({\tilde{R}}_{i}\in {\mathfrak {R}}_{i}\), and \(R\in {\mathfrak {R}}\) such that \(f_{i}(R)=f_{i}({\tilde{R}}_{i},R_{-i})\) and \(f_{j}(R)\ne f_{j}({\tilde{R}}_{i},R_{-i})\); in this case, agent i is bossy with agent j at R. If a rule f is not bossy, it is non-bossy. Finally, full range requires that every possible outcome occurs at least at one preference profile.
Formally, a rule f is full range if for all \(x\in N_{0}\), there exists \(R\in {\mathfrak {R}}\) such that \(f(R)=x\).
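Because all of these definitions are finite, they can be checked by brute force for small n. The following Python sketch is our own illustration, not code from the paper. It assumes that \(R_i^{+}\), \(R_i^{0}\), \(R_i^{-}\) are encoded as the strings "+", "0", "-", that a rule is a dictionary mapping each profile (a tuple with agent i at position i-1) to an outcome in \(N_0\), and it represents each \(R_i\) by a utility over \(\{0,i\}\) in which equal values encode indifference. All function names are hypothetical.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    """Utility representation of R_i over {0, i}; equal values mean indifference."""
    if pref == PLUS:
        return 1 if got else 0
    if pref == MINUS:
        return 0 if got else 1
    return 0  # R_i^0: indifferent between receiving and not receiving


def profiles(n):
    return list(product(PREFS, repeat=n))


def deviations(R, i):
    """All profiles (R~_i, R_-i) for agent i (agents are numbered 1..n)."""
    return [R[:i - 1] + (p,) + R[i:] for p in PREFS]


def strategy_proof(f, n):
    # f_i(R) R_i f_i(R~_i, R_-i) for every i, R, and R~_i
    return all(u(R[i - 1], f[D] == i) <= u(R[i - 1], f[R] == i)
               for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def non_bossy(f, n):
    # Bossy: agent i's own allocation is unchanged, yet the outcome (so some f_j) changes.
    return not any((f[R] == i) == (f[D] == i) and f[R] != f[D]
                   for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def pareto_optimal(f, n):
    for R in profiles(n):
        cur = [u(R[i - 1], f[R] == i) for i in range(1, n + 1)]
        for x in range(n + 1):  # every outcome in N_0
            alt = [u(R[i - 1], x == i) for i in range(1, n + 1)]
            if alt != cur and all(a >= c for a, c in zip(alt, cur)):
                return False  # x Pareto dominates f(R)
    return True


def is_dictator(f, n, i):
    return all(not (R[i - 1] == PLUS and f[R] != i) and not (R[i - 1] == MINUS and f[R] == i)
               for R in profiles(n))


def full_range(f, n):
    return set(f.values()) == set(range(n + 1))


# Usage: the constant rule that always gives the object to agent 1 (n = 2).
f_const = {R: 1 for R in profiles(2)}
print(strategy_proof(f_const, 2), non_bossy(f_const, 2),
      full_range(f_const, 2), pareto_optimal(f_const, 2))  # True True False False
```

The constant rule is strategy-proof and non-bossy, but it is not Pareto optimal because agent 1 receives the object even when he prefers not to.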

The following proposition shows, interestingly, that Pareto optimality implies strategy-proofness.

Proposition 1

If a rule f is Pareto optimal, then f is strategy-proof.

Proof

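Suppose that f is Pareto optimal but not strategy-proof. Then, there exist an agent \(i\in N\), \({\tilde{R}}_{i}\in {\mathfrak {R}}_{i}\), and \(R\in {\mathfrak {R}}\) such that \(f_{i}({\tilde{R}}_{i},R_{-i})P_{i}f_{i}(R)\). If \(R_i=R_i^0\), agent i cannot manipulate f because he cannot be strictly better off. If \(R_{i}= R_{i}^{-}\), manipulation requires \(f(R)=i\); but then the outcome \(x=0\) makes agent i strictly better off and leaves every other agent's allocation unchanged, which contradicts the Pareto optimality of f. If \(R_i=R_i^+\), manipulation requires \(f_i(R)=0\) and \(f({\tilde{R}}_{i},R_{-i})=i\). Since agent i strictly prefers the object but does not receive it at R, Pareto optimality of f requires that \(f(R)=j\) for some \(j\in N{\setminus }\{i\}\) with \(R_{j}=R^+_j\) (otherwise, allocating the object to i would be a Pareto improvement). Since \({\tilde{R}}_{i}\ne R_{i}^{+}\), we have \({\tilde{R}}_{i}\in \{R_{i}^{0},R_{i}^{-}\}\), so at the profile \(({\tilde{R}}_{i},R_{-i})\) agent i weakly prefers not receiving the object, while agent j still strictly prefers receiving it. Hence, the outcome \(f({\tilde{R}}_{i},R_{-i})=i\) is Pareto dominated by \(x=j\), a contradiction.\(\square \)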
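For two agents, Proposition 1 can be confirmed exhaustively: there are only \(3^{9}=19683\) rules mapping the nine profiles to \(N_0=\{0,1,2\}\). The following self-contained Python sketch is our own check, not part of the paper; it encodes \(R_i^{+}\), \(R_i^{0}\), \(R_i^{-}\) as the strings "+", "0", "-" and uses hypothetical helper names. It enumerates all rules, keeps the Pareto optimal ones, and tests each of them for strategy-proofness.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    """Utility representation of R_i over {0, i}; equal values mean indifference."""
    if pref == PLUS:
        return 1 if got else 0
    if pref == MINUS:
        return 0 if got else 1
    return 0  # indifferent


def profiles(n):
    return list(product(PREFS, repeat=n))


def all_rules(n):
    """Every function from profiles to N_0 = {0, 1, ..., n}."""
    P = profiles(n)
    for outcomes in product(range(n + 1), repeat=len(P)):
        yield dict(zip(P, outcomes))


def strategy_proof(f, n):
    return all(u(R[i - 1], f[R[:i - 1] + (p,) + R[i:]] == i) <= u(R[i - 1], f[R] == i)
               for R in profiles(n) for i in range(1, n + 1) for p in PREFS)


def pareto_optimal(f, n):
    for R in profiles(n):
        cur = [u(R[i - 1], f[R] == i) for i in range(1, n + 1)]
        for x in range(n + 1):
            alt = [u(R[i - 1], x == i) for i in range(1, n + 1)]
            if alt != cur and all(a >= c for a, c in zip(alt, cur)):
                return False
    return True


po_rules = [f for f in all_rules(2) if pareto_optimal(f, 2)]
print(len(po_rules))                                 # 24
print(all(strategy_proof(f, 2) for f in po_rules))   # True
```

A finite check of this kind only supports the proposition for n = 2; the proof covers every n.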

Pápai (2001) proves that strategy-proofness, non-bossiness, and full range are sufficient for Pareto optimality on the strict preference domain. The following example shows that this result does not hold on the full preference domain.

Example 1

Let \(N=\{1,2\}\). Let the rule f be such that \(f(R_{1}^{-},R_{2}^{+})=2\), \(f(R_{1}^{-},R_{2}^{0})=2\), \(f(R_{1}^{-},R_{2}^{-})=0\), and \(f(R)=1\) for all \(R\in {\mathfrak {R}}{\setminus } \{(R_{1}^{-},R_{2}^{+}),(R_{1}^{-},R_{2}^{0}),(R_{1}^{-},R_{2}^{-})\}\). Agent 1 receives the object whenever he prefers to receive it (or is indifferent), and he does not receive it whenever he prefers not to. Hence, agent 1 has no incentive to manipulate. When agent 2 prefers to receive the object, he either receives it or cannot receive it under any report because agent 1 receives it. Moreover, agent 2 does not receive the object whenever he prefers not to. Thus, f is strategy-proof. Whenever agent 1 reports \(R_{1}^{0}\) or \(R_{1}^{+}\), he receives the object regardless of agent 2's report, and when agent 1 reports \(R_{1}^{-}\), the outcome changes only if agent 2's own allocation changes; hence agent 2 cannot be bossy with agent 1. Likewise, agent 1 changes the outcome only when his own allocation changes, so he cannot be bossy with agent 2. Thus, f is non-bossy. All possible outcomes (\(x=0,1,2\)) occur at some preference profile, so f is full range. Yet, f is not Pareto optimal: at \((R_{1}^{0},R_{2}^{+})\), the outcome can be improved by allocating the object to agent 2 instead of agent 1, since agent 1 is indifferent and agent 2 strictly prefers to receive it.
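The claims in Example 1 can be verified by enumerating the nine profiles. In the Python sketch below (our own encoding with "+", "0", "-" for \(R_i^{+}\), \(R_i^{0}\), \(R_i^{-}\); all helper names are hypothetical), dominated_profiles lists every profile at which some outcome Pareto dominates the rule's choice.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    """Utility representation of R_i over {0, i}; equal values mean indifference."""
    return {PLUS: int(got), MINUS: int(not got)}.get(pref, 0)


def profiles(n):
    return list(product(PREFS, repeat=n))


def deviations(R, i):
    return [R[:i - 1] + (p,) + R[i:] for p in PREFS]


def strategy_proof(f, n):
    return all(u(R[i - 1], f[D] == i) <= u(R[i - 1], f[R] == i)
               for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def non_bossy(f, n):
    return not any((f[R] == i) == (f[D] == i) and f[R] != f[D]
                   for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def full_range(f, n):
    return set(f.values()) == set(range(n + 1))


def dominated_profiles(f, n):
    """Profiles R at which some outcome x in N_0 Pareto dominates f(R)."""
    bad = []
    for R in profiles(n):
        cur = [u(R[i - 1], f[R] == i) for i in range(1, n + 1)]
        for x in range(n + 1):
            alt = [u(R[i - 1], x == i) for i in range(1, n + 1)]
            if alt != cur and all(a >= c for a, c in zip(alt, cur)):
                bad.append(R)
                break
    return bad


# Example 1: agent 1 receives the object unless he reports R_1^-.
f = {R: 1 for R in profiles(2)}
f[(MINUS, PLUS)] = 2
f[(MINUS, ZERO)] = 2
f[(MINUS, MINUS)] = 0

print(strategy_proof(f, 2), non_bossy(f, 2), full_range(f, 2))  # True True True
print(dominated_profiles(f, 2))                                 # [('0', '+')]
```

The only dominated profile is \((R_1^{0},R_2^{+})\), matching the improvement identified in the text.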

Example 1 shows that strategy-proofness, non-bossiness, and full range are not sufficient for Pareto optimality on the full preference domain because the object is assigned to an indifferent agent even though another agent prefers to receive it. We define this case formally as follows. A rule f is forceful if there exist \(i,j\in N\) and \(R\in {\mathfrak {R}}\) such that \(R_{i}=R_{i}^{+},R_{j}=R_{j}^{0}\), and \(f(R)=j\). If f is not forceful, it is non-forceful. In Lemma 2, we show that strategy-proofness, non-bossiness, full range, and non-forcefulness are sufficient for Pareto optimality on the full preference domain.

Before proving Lemma 2, we define two more properties and derive a necessary and sufficient condition for Pareto optimality. A rule f is wasteful if there exist \(R\in {\mathfrak {R}}\) and \(i\in N\) such that \(R_{i}=R_{i}^{+}\) and \(f(R)=0\); i.e., f is wasteful if at some profile an agent prefers to receive the object, but the object is not allocated at all. If a rule f is not wasteful, it is non-wasteful. An agent \(i\in N\) is a discard agent with respect to f if there exists \(R_{-i}\in {\mathfrak {R}}_{-i}\) such that \(f(R_{i}^{-},R_{-i})=i\); i.e., i is a discard agent if he receives the object at some profile at which he prefers not to receive it. Lemma 1 proves that non-forcefulness, non-wastefulness, and the absence of discard agents are together necessary and sufficient for Pareto optimality.

Lemma 1

A rule f is Pareto optimal if and only if f is non-forceful, non-wasteful, and contains no discard agent.

Proof

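(a) If a rule f is Pareto optimal, then f is non-forceful, non-wasteful, and contains no discard agent. Since this direction is straightforward, we omit its proof; it is available from the author upon request.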

(b) If a rule f is non-forceful, non-wasteful, and contains no discard agent, then f is Pareto optimal. Suppose to the contrary that f is not Pareto optimal. Then, there exist \(x\in N_{0}\) and \(R \in {\mathfrak {R}}\) such that \(x_{i}R_{i}f_{i}(R)\) for all \(i\in N\) and \(x_{j}P_{j}f_{j}(R)\) for some \(j\in N\). There are three cases: (i) \(R_j=R_j^0\), (ii) \(R_j=R_j^-\), and (iii) \(R_j=R_j^+\). (i) If \(R_j=R_j^0\), agent j cannot be strictly better off, a contradiction. (ii) If \(R_j=R_j^-\), then \(x_{j}P_{j}f_{j}(R)\) requires \(f(R)=j\) and \(x_j=0\). However, \(R_j=R_j^-\) and \(f(R)=j\) mean that j is a discard agent, a contradiction. (iii) If \(R_j=R_j^+\), then \(x_{j}P_{j}f_{j}(R)\) requires \(x=j\) and \(f_j(R)=0\). Moreover, \(x_{i}R_{i}f_{i}(R)\) for all \(i\in N{\setminus }\{j\}\) requires that either a) \(f(R)=0\), or b) \(f(R)=i\) for some i with \(R_i=R_i^0\), or c) \(f(R)=i\) for some i with \(R_i=R_i^-\). Case a) contradicts non-wastefulness of f, case b) contradicts non-forcefulness of f, and case c) contradicts the absence of discard agents. \(\square \)
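Lemma 1 reduces Pareto optimality to three conditions that each refer to a single profile, which makes it easy to test by enumeration. The Python sketch below is our own check with hypothetical helper names, not the author's code; it compares the two sides of the lemma for all \(3^{9}\) rules with n = 2.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    """Utility representation of R_i over {0, i}; equal values mean indifference."""
    return {PLUS: int(got), MINUS: int(not got)}.get(pref, 0)


def profiles(n):
    return list(product(PREFS, repeat=n))


def all_rules(n):
    P = profiles(n)
    for outcomes in product(range(n + 1), repeat=len(P)):
        yield dict(zip(P, outcomes))


def pareto_optimal(f, n):
    for R in profiles(n):
        cur = [u(R[i - 1], f[R] == i) for i in range(1, n + 1)]
        for x in range(n + 1):
            alt = [u(R[i - 1], x == i) for i in range(1, n + 1)]
            if alt != cur and all(a >= c for a, c in zip(alt, cur)):
                return False
    return True


def forceful(f, n):
    # some agent has R^+, yet the object goes to an indifferent agent
    return any(PLUS in R and f[R] != 0 and R[f[R] - 1] == ZERO for R in profiles(n))


def wasteful(f, n):
    # some agent has R^+, yet the object is not allocated
    return any(PLUS in R and f[R] == 0 for R in profiles(n))


def has_discard_agent(f, n):
    # the object goes to an agent who prefers not to receive it
    return any(f[R] != 0 and R[f[R] - 1] == MINUS for R in profiles(n))


mismatches = [f for f in all_rules(2)
              if pareto_optimal(f, 2)
              != (not forceful(f, 2) and not wasteful(f, 2) and not has_discard_agent(f, 2))]
print(len(mismatches))  # 0
```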

Lemma 2

If a rule f is strategy-proof, non-bossy, full range, and non-forceful, f is Pareto optimal.

Proof

Suppose that f is not Pareto optimal. Since f is non-forceful, Lemma 1 implies that one of the following two cases occurs:

(i) (wastefulness) For some \(i\in N\), there is \(R\in {\mathfrak {R}}\) such that \(R_{i}=R_{i}^{+}\) and \(f(R)=0\). Without loss of generality, let \(i=1\), so \(R_{1}=R_{1}^{+}\). Since f is full range, there exists \({\tilde{R}}\in \mathfrak {R}\) such that \(f({\tilde{R}})=1\). Consider the following sequence of profiles, in which the preferences of agents \(n,n-1,\ldots ,1\) are replaced one at a time:

$$\begin{aligned} (R_{1},\ldots ,R_{n})\rightarrow & {} (R_{1},\ldots ,R_{n-1},{\tilde{R}}_{n})\rightarrow \cdots \rightarrow (R_{1},\ldots ,R_{j-1},{\tilde{R}}_{j},\ldots ,{\tilde{R}}_{n})\rightarrow \cdots \\ \rightarrow & {} ({\tilde{R}}_{1},\ldots ,{\tilde{R}}_{n}). \end{aligned}$$

We claim that at each step the object either stays where it is or moves to the agent whose preference changes. Consider the step at which \(R_{j}\) changes to \({\tilde{R}}_{j}\), and suppose the claim holds for all earlier steps. Then agent j does not hold the object before this step, because the object is initially unallocated and has so far moved only to agents \(n,\ldots ,j+1\). If agent j's allocation does not change, then, since f is non-bossy, the outcome does not change. Otherwise, \(f(R_{1},\ldots ,R_{j-1},{\tilde{R}}_{j},\ldots ,{\tilde{R}}_{n})=j\), and by strategy-proofness, \({\tilde{R}}_{j}\ne R_{j}^{-}\) and \(R_{j}\ne R_{j}^{+}\). First, \({\tilde{R}}_{j}\ne R_{j}^{-}\) because otherwise j can manipulate by reporting \(R_{j}\) when his actual preference is \({\tilde{R}}_{j}\). Second, \(R_{j}\ne R_{j}^{+}\) because otherwise j can manipulate by reporting \({\tilde{R}}_{j}\) when his actual preference is \(R_{j}\). This proves the claim. In particular, agent 1 does not hold the object before the last step, whereas \(f({\tilde{R}}_{1},{\tilde{R}}_{2},\ldots ,{\tilde{R}}_{n})=f({\tilde{R}})=1\) after it. Hence, agent 1's allocation changes in the last step, so \(R_{1}\ne R_{1}^{+}\) by the argument above: otherwise agent 1 could manipulate by reporting \({\tilde{R}}_{1}\) when his actual preference is \(R_{1}\). This contradicts \(R_{1}=R_{1}^{+}\).

(ii) (discard agent) Without loss of generality, let agent 1 be a discard agent, i.e., there exists \({\tilde{R}}\in {\mathfrak {R}}\) such that \(f({\tilde{R}})=1\) and \({\tilde{R}}_{1}=R_{1}^{-}\). Since f is full range, there exists \(R\in {\mathfrak {R}}\) such that \(f(R)=0\). Applying the argument of case (i) to the same sequence of profiles from R to \({\tilde{R}}\), at each step the object either stays where it is or moves to the agent j whose preference changes, and in the latter case \({\tilde{R}}_{j}\ne R_{j}^{-}\). Since agent 1 changes his preference last and \(f({\tilde{R}})=1\), the object moves to agent 1 in the last step, so \({\tilde{R}}_{1}\ne R_{1}^{-}\), which contradicts \({\tilde{R}}_{1}=R_{1}^{-}\). \(\square \)
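Lemma 2 can likewise be tested for two agents. The following Python sketch is our own illustration (names and encoding are hypothetical): it enumerates all \(3^{9}\) rules, keeps those that are strategy-proof, non-bossy, and full range, and checks that the non-forceful ones are Pareto optimal, while every rule among them that fails Pareto optimality, such as the rule in Example 1, is forceful.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    """Utility representation of R_i over {0, i}; equal values mean indifference."""
    return {PLUS: int(got), MINUS: int(not got)}.get(pref, 0)


def profiles(n):
    return list(product(PREFS, repeat=n))


def all_rules(n):
    P = profiles(n)
    for outcomes in product(range(n + 1), repeat=len(P)):
        yield dict(zip(P, outcomes))


def deviations(R, i):
    return [R[:i - 1] + (p,) + R[i:] for p in PREFS]


def strategy_proof(f, n):
    return all(u(R[i - 1], f[D] == i) <= u(R[i - 1], f[R] == i)
               for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def non_bossy(f, n):
    return not any((f[R] == i) == (f[D] == i) and f[R] != f[D]
                   for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def pareto_optimal(f, n):
    for R in profiles(n):
        cur = [u(R[i - 1], f[R] == i) for i in range(1, n + 1)]
        for x in range(n + 1):
            alt = [u(R[i - 1], x == i) for i in range(1, n + 1)]
            if alt != cur and all(a >= c for a, c in zip(alt, cur)):
                return False
    return True


def full_range(f, n):
    return set(f.values()) == set(range(n + 1))


def forceful(f, n):
    return any(PLUS in R and f[R] != 0 and R[f[R] - 1] == ZERO for R in profiles(n))


base = [f for f in all_rules(2) if full_range(f, 2) and strategy_proof(f, 2) and non_bossy(f, 2)]
with_nf = [f for f in base if not forceful(f, 2)]
print(len(with_nf) > 0, all(pareto_optimal(f, 2) for f in with_nf))   # True True

# Dropping non-forcefulness breaks the implication, and every failure is forceful.
failures = [f for f in base if not pareto_optimal(f, 2)]
print(len(failures) > 0, all(forceful(f, 2) for f in failures))       # True True
```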

3 Characterizations of strategy-proof rules

In this section, we characterize strategy-proof rules that satisfy (i) Pareto optimality and non-bossiness, (ii) non-dictatorship and non-bossiness, and (iii) Pareto optimality and non-dictatorship. We start by defining new terms. First, an agent-preference pair \(\left( i,R_{i}\right) \) consists of an agent \(i\in N\) and a preference \(R_{i}\in {\mathfrak {R}}_{i}\), and \(\varPi =\left\{ \left( i,R_{i}\right) \mid i\in N,\ R_{i}\in {\mathfrak {R}}_{i}\right\} \) is the set of agent-preference pairs, which has 3n elements. Moreover, \(\varPi _{0}=\varPi \cup \left\{ 0\right\} \), where 0 refers to the case in which the object is not assigned at all. Second, a rule f has a complete hierarchy if there exists an injective ranking function \(r:\varPi _{0}\rightarrow \mathbb {N}\) such that, for all \(i\in N\) and \(R\in {\mathfrak {R}}\), if \(r\left( i,R_{i}\right) > r(j,R_{j})\) for all \(j\in N{\setminus } \left\{ i\right\} \) and \(r\left( i,R_{i}\right) > r\left( 0\right) \), then \(f\left( R\right) =i\); and, for all \(R\in {\mathfrak {R}}\), if \(r\left( 0\right) > r\left( k,R_{k}\right) \) for all \(k\in N\), then \(f\left( R\right) =0\). The intuition of the ranking function is as follows. An agent receives the object when the rank of his agent-preference pair is higher than the ranks of the other agents' agent-preference pairs and higher than the rank of the case where the object is not allocated at all. If the rank of the case where the object is not allocated at all is higher than the ranks of all agents' agent-preference pairs, the object is not allocated. Third, we define a hierarchical choice function on the full preference domain as follows.

Definition 1

Let \(R,{\tilde{R}}\in {\mathfrak {R}}\). A rule f is a Hierarchical Choice Function (hereinafter, HCF) if it has a complete hierarchy with a ranking function r that satisfies the following two conditions:

(a) For all \(i\in N\), if \(R_{i}\vartriangleright {\tilde{R}}_{i}\) and \(r\left( i,R_{i}\right) > r\left( 0\right) \), there exists no \(j\in N{\setminus } \left\{ i\right\} \) such that \(r(i,{\tilde{R}}_{i})> r(j,R_{j})> r\left( i,R_{i}\right) \).

(b) For all \(i\in N\), if \(r(i,{\tilde{R}}_{i})> r\left( 0\right) > r\left( i,R_{i}\right) \), then \({\tilde{R}}_{i}\vartriangleright R_{i}\).
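A complete hierarchy is an argmax rule over \(\varPi _{0}\), and the two HCF conditions are finite checks, so both are easy to prototype. The Python sketch below is illustrative only: it assumes that a ranking is a dictionary from 0 and from pairs (i, preference) to distinct integers, encodes \(R_i^{+}\), \(R_i^{0}\), \(R_i^{-}\) as "+", "0", "-", and the names rule_from_ranking and is_hcf are hypothetical. The usage example compares a ranking that satisfies both conditions with one that ranks \((2,R_2^{+})\) between \((1,R_1^{+})\) and \((1,R_1^{-})\), which violates condition (a).

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"     # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)            # listed from top to bottom of the order |>
LEVEL = {PLUS: 2, ZERO: 1, MINUS: 0}   # a |> b  iff  LEVEL[a] > LEVEL[b]


def rule_from_ranking(r, n):
    """Complete hierarchy: the highest-ranked element of {0} U {(i, R_i)} wins."""
    f = {}
    for R in product(PREFS, repeat=n):
        candidates = [(r[0], 0)] + [(r[(i, R[i - 1])], i) for i in range(1, n + 1)]
        f[R] = max(candidates)[1]  # ranks are distinct, so the maximum is unique
    return f


def is_hcf(r, n):
    """Check conditions (a) and (b) of Definition 1 for a ranking r."""
    agents = range(1, n + 1)
    for i in agents:
        for hi, lo in product(PREFS, PREFS):
            if LEVEL[hi] <= LEVEL[lo]:
                continue  # only pairs with hi |> lo matter
            # (a): if r(i, hi) > r(0), no pair of another agent j may satisfy
            #      r(i, lo) > r(j, p) > r(i, hi)
            if r[(i, hi)] > r[0] and any(r[(i, lo)] > r[(j, p)] > r[(i, hi)]
                                         for j in agents if j != i for p in PREFS):
                return False
            # (b): r(i, R~) > r(0) > r(i, R) forces R~ |> R, so (R~, R) = (lo, hi) is forbidden
            if r[(i, lo)] > r[0] > r[(i, hi)]:
                return False
    return True


r1 = {(1, PLUS): 7, (2, PLUS): 6, (1, ZERO): 5, (2, ZERO): 4, 0: 3, (1, MINUS): 2, (2, MINUS): 1}
r2 = {(1, MINUS): 7, (2, PLUS): 6, (1, PLUS): 5, (1, ZERO): 4, 0: 3, (2, ZERO): 2, (2, MINUS): 1}
f1 = rule_from_ranking(r1, 2)
f2 = rule_from_ranking(r2, 2)
print(f1[(ZERO, PLUS)], f1[(MINUS, ZERO)], f1[(MINUS, MINUS)])  # 2 2 0
print(is_hcf(r1, 2), is_hcf(r2, 2))                            # True False
print(f2[(PLUS, PLUS)], f2[(MINUS, PLUS)])                     # 2 1
```

Under the second ranking, agent 1 with \(R_1^{+}\) does not receive the object at \((R_1^{+},R_2^{+})\) but would receive it by reporting \(R_1^{-}\), which illustrates why condition (a) is needed for strategy-proofness.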

We next present a remark that is used in the proof of Proposition 2.

Remark 1

(a) Let f be a non-bossy rule, \(i,j\in N\), and \(R\in {\mathfrak {R}}\). If \(f\left( R\right) =i\), then for all \({\tilde{R}}\in {\mathfrak {R}}\), \(f_{j}(R_{\left\{ i,j\right\} },{\tilde{R}}_{-\left\{ i,j\right\} })=0\).

(b) Let f be a strategy-proof and non-bossy rule, \(i,j\in N\), \(R_{i}\in {\mathfrak {R}}_{i}\), and \(R_{j}\in {\mathfrak {R}}_{j}\). Suppose that \(\left( i,R_{i}\right) \curlyeqsucc (j,R_{j})\). Then, for all \({\tilde{R}}_{i}\in {\mathfrak {R}}_{i}\) such that \({\tilde{R}}_{i}\vartriangleright R_{i}\) (if any), \((i,{\tilde{R}}_{i})\curlyeqsucc (j,R_{j})\). Moreover, for all \({\tilde{R}}_{j}\in {\mathfrak {R}}_{j}\) such that \(R_{j}\vartriangleright {\tilde{R}}_{j}\) (if any), \(\left( i,R_{i}\right) \curlyeqsucc (j,{\tilde{R}}_{j})\).

(c) Let f be a strategy-proof and non-bossy rule. For \(i\in N\) and \(R\in {\mathfrak {R}}\), if \(f\left( R\right) =0\), then for all \({\tilde{R}}_{i}\in {\mathfrak {R}}_{i}\) such that \(R_{i}\vartriangleright {\tilde{R}}_{i}\) (if any), \(f({\tilde{R}}_{i},R_{-i})=0\). Moreover, if \(f\left( R\right) =i\), then for all \({\tilde{R}}_{i}\in {\mathfrak {R}}_{i}\) such that \({\tilde{R}}_{i}\vartriangleright R_{i}\) (if any), \(f({\tilde{R}}_{i},R_{-i})=i\).

The proof of Remark 1 is available upon request from the author. The following proposition characterizes strategy-proof and non-bossy rules.

Proposition 2

A rule f is strategy-proof and non-bossy if and only if f is a HCF.

Proof

(a) A HCF f with a ranking function r is strategy-proof and non-bossy. Suppose to the contrary that there is an agent \(i\in N\) who can manipulate f at R. Then \(R_{i}\ne R_{i}^{0}\), because an indifferent agent cannot manipulate f, so either \(R_{i}=R_{i}^{+}\) or \(R_{i}=R_{i}^{-}\). If \(R_{i}=R_{i}^{+}\) and \(f(R)=i\), agent i obtains his best outcome, so he cannot manipulate f. If \(R_{i}=R_{i}^{+}\), \(f_{i}\left( R\right) =0\), and \(r(i,R_{i}^{+})> r\left( 0\right) \), then there is an agent \(j\in N\) such that \(f(R)=j\). By the definition of a complete hierarchy, \(r(j,R_{j})> r(i,R_{i}^{+})\). By the first condition of HCF, \(r(j,R_{j})> r(i,R_{i}^{-})\) and \(r(j,R_{j})> r(i,R_{i}^{0})\). If \(r\left( 0\right) > r(i,R_{i}^{+})\), then by the second condition of HCF, \(r(0)> r(i,R_{i}^{-})\) and \(r\left( 0\right) > r(i,R_{i}^{0})\). In either case, agent i cannot receive the object by reporting a false preference. If \(R_{i}=R_{i}^{-}\) and \(f_{i}(R)=0\), agent i obtains his best outcome, so he cannot manipulate f. If \(R_{i}=R_{i}^{-}\) and \(f(R)=i\), then \(r(i,R_{i}^{-})> r(0)\) and \(r(i,R_{i}^{-})> r(j,R_{j})\) for all \(j\in N{\setminus }\{i\}\). Then, by the two conditions of HCF, \(r(i,R_{i}^{0})\) and \(r(i,R_{i}^{+})\) both exceed \(r(0)\) and \(r(j,R_{j})\) for all \(j\in N{\setminus }\{i\}\). Thus, agent i retains the object even if he reports \(R_{i}^{0}\) or \(R_{i}^{+}\). This contradicts the manipulability of f, so f is strategy-proof.

Suppose next that f is bossy. Then there exist \(i,j\in N\), \(R\in {\mathfrak {R}}\), and \(\widetilde{R}_{i}\in {\mathfrak {R}}_{i}\) such that agent i is bossy with agent j at R; swapping the roles of \(R_{i}\) and \(\widetilde{R}_{i}\) if necessary, we may assume that \(f(R)=j\), \(f_{j}(\widetilde{R}_{i},R_{-i})=0\), and \(f_{i}(\widetilde{R}_{i},R_{-i})=0\). \(f(R)=j\) implies that \(r(j,R_{j})> r\left( 0\right) \) and \(r(j,R_{j})> r\left( k,R_{k}\right) \) for all \(k\in N{\setminus } \left\{ j\right\} \). Since only agent i's preference changes, \(r(j,R_{j})\) still exceeds \(r(0)\) and \(r\left( k,R_{k}\right) \) for all \(k\in N{\setminus } \left\{ i,j\right\} \), so \(f_{j}(\widetilde{R}_{i},R_{-i})=0\) requires \(r(i,{\tilde{R}}_{i})> r\left( j,R_{j}\right) \). Thus, \(r(i,{\tilde{R}}_{i})>r(j,R_{j})>r \left( 0\right) \) and \(r(i,{\tilde{R}}_{i})>r(j,R_{j})>r\left( k,R_{k}\right) \) for all \(k\in N{\setminus } \left\{ i,j\right\} \), and hence \(f(\widetilde{R}_{i},R_{-i})=i\). However, \(f(\widetilde{R}_{i},R_{-i})=i\) contradicts \(f_{i}(\widetilde{R}_{i},R_{-i})=0\), so f is non-bossy.

(b) A strategy-proof and non-bossy rule f is a HCF. We construct a ranking function r as follows:

Initialization: Start with \(\varPi =\left\{ \left( i,R_{i}\right) \mid i\in N,\ R_{i}\in {\mathfrak {R}}_{i}\right\} \) and \(\varPi _{0}=\varPi \cup \left\{ 0\right\} \). For each \(i\in N\), let \(R_{i}^{\max }\) be the \(\vartriangleright \)-maximal element of the current set \({\mathfrak {R}}_{i}\), i.e.,

$$\begin{aligned} R_{i}^{\max }\in {\mathfrak {R}}_{i}\text { such that }R_{i}^{\max }\vartriangleright {\tilde{R}}_{i}\text { for all }{\tilde{R}}_{i}\in {\mathfrak {R}}_{i}{\setminus } \left\{ R_{i}^{\max }\right\} , \quad \text {and}\quad R^{\max }=\left( R_{i}^{\max }\right) _{i=1}^{n}. \end{aligned}$$

Initially, \(R^{\max }=R^{+}\) and \(k=0\). Rank the agent-preference pairs as follows:

Step 1: If \(f(R^{\max })=0\), then \(f(R)=0\) for all R in the current set \({\mathfrak {R}}\) (by Remark 1(c)). Let \(r\left( 0\right) =3n+1-k\), rank the remaining agent-preference pairs in \(\varPi _{0}\) arbitrarily from 1 to \(3n-k\), and stop. Otherwise, go to Step 2.

Step 2: If \(f(R^{\max })=i\) for some agent \(i\in N\), let \(r(i,R_{i}^{\max })=3n+1-k\) and \(k\leftarrow k+1\). Let \({\mathfrak {R}}_{i}\leftarrow {\mathfrak {R}}_{i}{\setminus } \left\{ R_{i}^{\max }\right\} \) and \(\varPi _{0}\leftarrow \varPi _{0}{\setminus } \left\{ \left( i,R_{i}^{\max }\right) \right\} \). If \({\mathfrak {R}}_{i}=\emptyset \), let \(r\left( 0\right) =3n+1-k\), rank the remaining agent-preference pairs in \(\varPi _{0}\) arbitrarily from 1 to \(3n-k\), and stop. Otherwise, let \({\mathfrak {R}}\leftarrow \times _{i\in N}{\mathfrak {R}}_{i}\), recalculate \(R^{\max }\), and return to Step 1.

To show that f is a HCF, we need to show that (i) the generated ranking function r forms a complete hierarchy and (ii) the two conditions of HCF are satisfied. (i) Suppose that \(r\left( i,R_{i}\right) >r\left( 0\right) \) and \(r\left( i,R_{i}\right) >r(j,R_{j})\) for all \(j\in N{\setminus } \left\{ i\right\} \) at some \(R\in {\mathfrak {R}}\). Then, by construction, there exists \({\tilde{R}}\in {\mathfrak {R}}\) such that \(f(R_{i},{\tilde{R}}_{-i})=i\) (this occurs before the algorithm assigns \(r\left( 0\right) \) and stops). Moreover, since the algorithm always evaluates f at \(R^{\max }\) in Step 2 and \(r\left( i,R_{i}\right) >r(j,R_{j})\) for all \(j\in N{\setminus } \left\{ i\right\} \), we have either \({\tilde{R}}_{j}\vartriangleright R_{j}\) or \({\tilde{R}}_{j}=R_{j}\) for all \(j\in N{\setminus } \left\{ i\right\} \): the pairs \((j,R_{j})\) have lower ranks, so they are encountered later in the algorithm, and \(R_{j}^{\max }\) only decreases over time. Since \(f(R_{i},{\tilde{R}}_{-i})=i\), we have \(\left( i,R_{i}\right) \curlyeqsucc (j,{\tilde{R}}_{j})\) for all \(j\in N{\setminus } \left\{ i\right\} \) by Remark 1 and the definition of \(\curlyeqsucc \). Then, by Remark 1, \(\left( i,R_{i}\right) \curlyeqsucc (j,R_{j})\) for all \(j\in N{\setminus } \left\{ i\right\} \). Thus, by Remark 1 and non-bossiness of f, \(f\left( R\right) =i\), and the first condition of a complete hierarchy is satisfied. For the second condition, suppose that \(r\left( 0\right) >r(j,R_{j})\) for all \(j\in N\). This implies that the algorithm generating r stops at Step 1. Then, there exists \({\tilde{R}}\in {\mathfrak {R}}\) such that \(f({\tilde{R}})=0\) and, for all \(j\in N\), either \({\tilde{R}}_{j}\vartriangleright R_{j}\) or \({\tilde{R}}_{j}=R_{j}\) (since the algorithm evaluates \(R^{\max }\) each time, and when \(f({\tilde{R}})=0\) is encountered, the pairs \((j,R_{j})\) have not yet been assigned a rank). By changing \({\tilde{R}}_{j}\) to \(R_{j}\) one agent at a time and applying Remark 1 each time, we obtain \(f\left( R\right) =0\). Thus, r forms a complete hierarchy.

(ii) The algorithm ranks \((i,R_{i}^{\max })\) before the other remaining agent-preference pairs of agent \(i\in N\), so every pair of agent i ranked above \(r\left( 0\right) \) involves a higher preference than every pair of agent i ranked below \(r\left( 0\right) \); this guarantees the second condition of HCF. Moreover, since \((i,R_{i}^{\max })\) is ranked before the agent-preference pairs of agent i with lower preferences, the first condition of HCF is guaranteed. Note that the first condition does not restrict agent-preference pairs ranked below \(r\left( 0\right) \), so ranking such pairs arbitrarily does not violate it. Thus, f is a HCF with the ranking function r. \(\square \)
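Part (b) is constructive, so it can be prototyped directly. The Python sketch below is our reading of Steps 1 and 2, with the remaining pairs ranked in a fixed order where the proof allows an arbitrary one; the encoding ("+", "0", "-" for \(R_i^{+}\), \(R_i^{0}\), \(R_i^{-}\)) and all function names are assumptions of this illustration. For n = 2 it enumerates all \(3^{9}\) rules and checks that a rule is strategy-proof and non-bossy exactly when the constructed ranking satisfies conditions (a) and (b) of Definition 1 and reproduces the rule as a complete hierarchy.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"     # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)            # top to bottom of |>
LEVEL = {PLUS: 2, ZERO: 1, MINUS: 0}


def u(pref, got):
    return {PLUS: int(got), MINUS: int(not got)}.get(pref, 0)


def profiles(n):
    return list(product(PREFS, repeat=n))


def all_rules(n):
    P = profiles(n)
    for outcomes in product(range(n + 1), repeat=len(P)):
        yield dict(zip(P, outcomes))


def sp_and_nb(f, n):
    """Strategy-proofness and non-bossiness, checked over all unilateral deviations."""
    for R in profiles(n):
        for i in range(1, n + 1):
            for p in PREFS:
                D = R[:i - 1] + (p,) + R[i:]
                if u(R[i - 1], f[D] == i) > u(R[i - 1], f[R] == i):
                    return False  # agent i manipulates at R
                if (f[R] == i) == (f[D] == i) and f[R] != f[D]:
                    return False  # agent i is bossy
    return True


def rule_from_ranking(r, n):
    return {R: max([(r[0], 0)] + [(r[(i, R[i - 1])], i) for i in range(1, n + 1)])[1]
            for R in profiles(n)}


def is_hcf(r, n):
    for i in range(1, n + 1):
        for hi, lo in product(PREFS, PREFS):
            if LEVEL[hi] <= LEVEL[lo]:
                continue
            if r[(i, hi)] > r[0] and any(r[(i, lo)] > r[(j, p)] > r[(i, hi)]
                                         for j in range(1, n + 1) if j != i for p in PREFS):
                return False  # condition (a) fails
            if r[(i, lo)] > r[0] > r[(i, hi)]:
                return False  # condition (b) fails
    return True


def construct_ranking(f, n):
    """Steps 1-2: rank the winner at R^max, drop that preference, and repeat."""
    remaining = {i: list(PREFS) for i in range(1, n + 1)}  # current R_i sets, in |> order
    r, rank = {}, 3 * n + 1
    while True:
        r_max = tuple(remaining[i][0] for i in range(1, n + 1))
        winner = f[r_max]
        if winner != 0:                       # Step 2: rank (i, R_i^max)
            r[(winner, r_max[winner - 1])] = rank
            rank -= 1
            remaining[winner].pop(0)
        if winner == 0 or not remaining[winner]:
            r[0] = rank                       # stop: rank 0 next ...
            for i in remaining:               # ... then the rest in a fixed order
                for p in remaining[i]:
                    rank -= 1
                    r[(i, p)] = rank
            return r


# For n = 2: f is SP and NB  <=>  the constructed r is an HCF ranking that reproduces f.
agree = all(sp_and_nb(f, 2) == (is_hcf(r, 2) and rule_from_ranking(r, 2) == f)
            for f in all_rules(2) for r in [construct_ranking(f, 2)])
print(agree)  # True
```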

This result is important as it not only characterizes the strategy-proof and non-bossy rules but also helps characterize strategy-proof rules that are Pareto optimal and non-bossy and that are non-dictatorial and non-bossy.

3.1 Pareto optimal and non-bossy rules

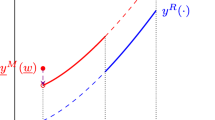

In this section, we characterize strategy-proof, Pareto optimal, and non-bossy rules, and we start by defining new terms. An agent i is the top agent with respect to f if \(f(R^{+})=i\), i.e., the top agent receives the object when all agents prefer to receive it. We next define two special cases of a HCF as follows.

Definition 2

(i) A rule f is a Serial Dictatorship if it has a complete hierarchy with \(r:\varPi _{0}\rightarrow \mathbb {N}\) that satisfies (a) for all \(i\in N\), \(r(i,R_{i}^{+})> r\left( 0\right) > r(i,R_{i}^{-})\) and (b) for all \(i\in N\) and \(j\in N{\setminus } \left\{ i\right\} \), \(r(i,R_{i}^{+})> r(j,R_{j}^{0})\).

(ii) A Constrained HCF is a HCF with ranking function \(r:\varPi _{0}\rightarrow \mathbb {N}\) that satisfies one of the following two conditions: (a) there is no top agent, or (b) there is a top agent \(i \in N\), but there exists \(R\in {\mathfrak {R}}\) such that \(r(i,R_{i}^{-})> r\left( 0\right) \) and \(r(i,R_{i}^{-})> r(j,R_{j})\) for all \(j\in N{\setminus } \left\{ i\right\} \).

Part (a) of Definition 2(i) makes a Serial Dictatorship non-wasteful and free of discard agents, and part (b) makes it non-forceful. Definition 2(ii) requires that a Constrained HCF either has no top agent or has a top agent who is also a discard agent. The following proposition characterizes Pareto optimal and non-bossy rules.

Proposition 3

A rule is Pareto optimal and non-bossy if and only if it is a Serial Dictatorship.

Proof

Let f be a Serial Dictatorship. Since a Serial Dictatorship is a special case of a HCF, f is a HCF. By Proposition 2, f is strategy-proof and non-bossy. Definition 2(i) guarantees that f is non-wasteful and non-forceful with no discard agent. Then, by Lemma 1, f is Pareto optimal.

Let f be a Pareto optimal and non-bossy rule. By Proposition 1, Pareto optimality implies strategy-proofness. Then, f is strategy-proof and non-bossy, so it is a HCF by Proposition 2, and it has a complete hierarchy with a ranking function \(r:\varPi _{0}\rightarrow \mathbb {N}\). By Lemma 1, Pareto optimality implies that f is non-wasteful and non-forceful with no discard agent. Non-wastefulness and the absence of discard agents correspond to part (a) of Definition 2(i), and non-forcefulness corresponds to part (b) of Definition 2(i). Hence, f is a Serial Dictatorship. \(\square \)
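For two agents, Proposition 3 can be checked by enumerating all \(7!=5040\) injective rankings of \(\varPi _{0}\) and all \(3^{9}\) rules. The Python sketch below (our own encoding with hypothetical names, not the paper's code) collects the rules generated by rankings that satisfy Definition 2(i) and compares them with the Pareto optimal and non-bossy rules.

```python
from itertools import permutations, product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    return {PLUS: int(got), MINUS: int(not got)}.get(pref, 0)


def profiles(n):
    return list(product(PREFS, repeat=n))


def all_rules(n):
    P = profiles(n)
    for outcomes in product(range(n + 1), repeat=len(P)):
        yield dict(zip(P, outcomes))


def pareto_optimal(f, n):
    for R in profiles(n):
        cur = [u(R[i - 1], f[R] == i) for i in range(1, n + 1)]
        for x in range(n + 1):
            alt = [u(R[i - 1], x == i) for i in range(1, n + 1)]
            if alt != cur and all(a >= c for a, c in zip(alt, cur)):
                return False
    return True


def non_bossy(f, n):
    return not any((f[R] == i) == (f[D] == i) and f[R] != f[D]
                   for R in profiles(n) for i in range(1, n + 1)
                   for D in [R[:i - 1] + (p,) + R[i:] for p in PREFS])


def rule_from_ranking(r, n):
    return {R: max([(r[0], 0)] + [(r[(i, R[i - 1])], i) for i in range(1, n + 1)])[1]
            for R in profiles(n)}


def is_serial_dictatorship(r, n):
    """Conditions (a) and (b) of Definition 2(i)."""
    agents = range(1, n + 1)
    cond_a = all(r[(i, PLUS)] > r[0] > r[(i, MINUS)] for i in agents)
    cond_b = all(r[(i, PLUS)] > r[(j, ZERO)] for i in agents for j in agents if i != j)
    return cond_a and cond_b


def key(f, n):
    return tuple(f[R] for R in profiles(n))


n = 2
items = [0] + [(i, p) for i in range(1, n + 1) for p in PREFS]
sd_rules = set()
for perm in permutations(range(1, len(items) + 1)):  # all 7! injective rankings
    r = dict(zip(items, perm))
    if is_serial_dictatorship(r, n):
        sd_rules.add(key(rule_from_ranking(r, n), n))

po_nb_rules = {key(f, n) for f in all_rules(n) if pareto_optimal(f, n) and non_bossy(f, n)}
print(sd_rules == po_nb_rules, len(po_nb_rules))  # True 10
```

For n = 2 both sets contain the same ten rules.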

We next provide an example of a Pareto optimal and non-bossy rule.

Example 2

Let the rule f be such that, for all \(R\in {\mathfrak {R}}\),

$$\begin{aligned} f(R)=\begin{cases} \min \left\{ i\in N\mid R_{i}=R_{i}^{+}\right\} & \text {if } R_{i}=R_{i}^{+} \text { for some } i\in N,\\ 0 & \text {if } R_{i}=R_{i}^{\lnot } \text { for all } i\in N. \end{cases} \end{aligned}$$

The object is never awarded to an agent who is indifferent or prefers not to receive it. Hence, f is non-forceful and contains no discard agent. Moreover, if some agent prefers to receive the object, the object does not remain unassigned, so f is non-wasteful. Thus, f is Pareto optimal by Lemma 1. When an agent changes his preference, either his own outcome changes, or his outcome and all other agents' outcomes stay the same. Hence, f is non-bossy. Agent 1 obtains the object whenever he prefers to do so and does not obtain it whenever he prefers not to, so agent 1 is a dictator. Thus, f is dictatorial.
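The rule of Example 2 is easy to evaluate for a concrete n. The following Python sketch (an illustration under our own encoding, with "+", "0", "-" for \(R_i^{+}\), \(R_i^{0}\), \(R_i^{-}\); helper names are hypothetical) builds it for three agents and confirms the properties derived in the text.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    return {PLUS: int(got), MINUS: int(not got)}.get(pref, 0)


def profiles(n):
    return list(product(PREFS, repeat=n))


def deviations(R, i):
    return [R[:i - 1] + (p,) + R[i:] for p in PREFS]


def strategy_proof(f, n):
    return all(u(R[i - 1], f[D] == i) <= u(R[i - 1], f[R] == i)
               for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def non_bossy(f, n):
    return not any((f[R] == i) == (f[D] == i) and f[R] != f[D]
                   for R in profiles(n) for i in range(1, n + 1) for D in deviations(R, i))


def pareto_optimal(f, n):
    for R in profiles(n):
        cur = [u(R[i - 1], f[R] == i) for i in range(1, n + 1)]
        for x in range(n + 1):
            alt = [u(R[i - 1], x == i) for i in range(1, n + 1)]
            if alt != cur and all(a >= c for a, c in zip(alt, cur)):
                return False
    return True


def is_dictator(f, n, i):
    return all(not (R[i - 1] == PLUS and f[R] != i) and not (R[i - 1] == MINUS and f[R] == i)
               for R in profiles(n))


def example2(R):
    """Lowest-indexed agent with R_i^+, or 0 if no agent prefers the object."""
    plus = [i for i in range(1, len(R) + 1) if R[i - 1] == PLUS]
    return min(plus) if plus else 0


n = 3
f = {R: example2(R) for R in profiles(n)}
print(pareto_optimal(f, n), strategy_proof(f, n), non_bossy(f, n))  # True True True
print([i for i in range(1, n + 1) if is_dictator(f, n, i)])         # [1]
```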

The following result is a corollary to Proposition 3 along with Lemma 2.

Corollary 1

A rule is strategy-proof, non-bossy, full range, and non-forceful if and only if it is a Serial Dictatorship.

3.2 Non-dictatorial and non-bossy rules

In this section, we characterize strategy-proof, non-dictatorial, and non-bossy rules as follows.

Proposition 4

A rule f is strategy-proof, non-dictatorial, and non-bossy if and only if it is a Constrained HCF.

Proof

Let f be a Constrained HCF. Since f is a special case of HCF, it is strategy-proof and non-bossy by Proposition 2. Moreover, f satisfies one of the following requirements of being a Constrained HCF: (i) there is no top agent or (ii) there is a top agent i, but there exists \(R\in {\mathfrak {R}}\) such that \(r(i,R_{i}^{-})>r\left( 0\right) \) and \(r(i,R_{i}^{-})>r(j,R_{j})\) for all \(j\in N{\backslash } \left\{ i\right\} \). If the first requirement holds, then there is no agent who receives the object whenever he prefers to do so because \(f(R^+)=0\). If the second requirement holds, there is a top agent i, who is the only candidate for being a dictator, but he is a discard agent, so he cannot be a dictator. Thus, f is non-dictatorial.

Let f be a strategy-proof, non-dictatorial, and non-bossy rule. By Proposition 2, f is a HCF with a ranking function \(r:\varPi _{0}\rightarrow \mathbb {N}\). Since f is non-dictatorial, either there is no agent who receives the object whenever he prefers to do so, or such an agent exists but he also receives the object at some profile at which he prefers not to. Suppose that the former case occurs. Then, for all \(i\in N\), there exists \(R\in {\mathfrak {R}}\) with \(R_{i}=R_{i}^{+}\) such that \(r\left( 0\right) >r\left( i,R_{i}^+\right) \) or \(r\left( j,R_j \right) >r\left( i,R_{i}^+\right) \) for some \(j \in N {\setminus } \{i\}\). Suppose to the contrary that there is a top agent i, i.e., \(f(R^+)=i\). In either case, \(f_i(R_i^+,R_{-i})=0\). Hence, when we move from \(R^{+}\) to \((R_i^+,R_{-i})\) by changing the other agents' preferences one at a time, there is an agent \(k \in N {\setminus } \{i\}\) such that, when agent k changes his preference from \(R_{k}^+\) to \(R_{k}\), agent i loses the object although he prefers to receive it. If k receives the object, f is not strategy-proof, because k obtains the object by reporting \(R_{k}\) when his true preference is \(R_{k}^{+}\). If k does not receive the object either, f is bossy. Either way, we reach a contradiction. Hence, there is no top agent, which is the first requirement of a Constrained HCF. Now suppose that there is an agent i who receives the object whenever he prefers to do so but also receives it at some profile at which he prefers not to. Then, i is the top agent, since \(f(R^{+})=i\), and there exists \(R\in {\mathfrak {R}}\) such that \(r(i,R_{i}^{-})>r\left( 0\right) \) and \(r(i,R_{i}^{-})>r(j,R_{j})\) for all \(j\in N{\setminus } \left\{ i\right\} \). This is the second requirement of a Constrained HCF. Thus, f is a Constrained HCF. \(\square \)
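The equivalence can be checked for two agents without enumerating rankings. Relying on Proposition 2, the strategy-proof and non-bossy rules are exactly the HCFs, and for a complete hierarchy condition (b) of Definition 2(ii) says precisely that the top agent receives the object at some profile at which he prefers not to receive it. The Python sketch below (our own illustration; names and encoding are assumptions) therefore reads the Constrained HCF conditions off the rule itself and compares them with non-dictatorship for every strategy-proof and non-bossy rule with n = 2.

```python
from itertools import product

PLUS, ZERO, MINUS = "+", "0", "-"  # R_i^+, R_i^0, R_i^-
PREFS = (PLUS, ZERO, MINUS)


def u(pref, got):
    return {PLUS: int(got), MINUS: int(not got)}.get(pref, 0)


def profiles(n):
    return list(product(PREFS, repeat=n))


def all_rules(n):
    P = profiles(n)
    for outcomes in product(range(n + 1), repeat=len(P)):
        yield dict(zip(P, outcomes))


def sp_and_nb(f, n):
    for R in profiles(n):
        for i in range(1, n + 1):
            for p in PREFS:
                D = R[:i - 1] + (p,) + R[i:]
                if u(R[i - 1], f[D] == i) > u(R[i - 1], f[R] == i):
                    return False  # manipulation
                if (f[R] == i) == (f[D] == i) and f[R] != f[D]:
                    return False  # bossiness
    return True


def is_dictator(f, n, i):
    return all(not (R[i - 1] == PLUS and f[R] != i) and not (R[i - 1] == MINUS and f[R] == i)
               for R in profiles(n))


def constrained_condition(f, n):
    """Definition 2(ii) read off the rule: (a) no top agent, or (b) the top agent
    receives the object at some profile at which he reports R^-."""
    top = f[(PLUS,) * n]
    if top == 0:
        return True
    return any(R[top - 1] == MINUS and f[R] == top for R in profiles(n))


sp_nb = [f for f in all_rules(2) if sp_and_nb(f, 2)]
mismatches = [f for f in sp_nb
              if constrained_condition(f, 2) == any(is_dictator(f, 2, i) for i in (1, 2))]
print(len(sp_nb) > 0, mismatches == [])  # True True
```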

We next provide an example of a strategy-proof, non-dictatorial, and non-bossy rule.

Example 3

Let \(f(R)=0\) for all \(R\in {\mathfrak {R}}\). Since the object is never assigned, no agent can change his own outcome by misreporting his preference, so f is strategy-proof. For the same reason, no agent receives the object whenever he prefers to do so, so f is non-dictatorial, and no agent can change the outcome of another agent, so f is non-bossy. However, at any profile R with \(R_i=R_i^+\) for some \(i\in N\), f leaves the object unassigned even though agent i prefers to receive it. Thus, f is wasteful and hence not Pareto optimal by Lemma 1.
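The claims in Example 3 can be confirmed by brute force for a few agents. The Python sketch below assumes the encoding `"+"` (prefers the object), `"0"` (indifferent), `"-"` (prefers not to receive it), numbers agents from 1 with outcome 0 for no assignment, and reads an agent as a dictator if he receives the object at every profile where he prefers it and at no profile where he prefers not to, which is how the proofs of Propositions 4 and 5 use the term. Pareto optimality is checked directly by dominance rather than through Lemma 1, whose conditions are not restated here. All function names are hypothetical.

```python
from itertools import product

# Assumed encoding: "+" prefers the object, "0" indifferent, "-" prefers not to get it.
PREFS = ("+", "0", "-")


def utility(pref, receives):
    """Any numbers representing the three preferences work; these are one choice."""
    if pref == "+":
        return 1 if receives else 0
    if pref == "-":
        return -1 if receives else 0
    return 0  # indifferent


def profiles(n):
    return product(PREFS, repeat=n)


def deviations(profile, i):
    """Profiles in which agent i (1-based) reports something other than profile[i-1]."""
    for q in PREFS:
        if q != profile[i - 1]:
            yield profile[: i - 1] + (q,) + profile[i:]


def strategy_proof(rule, n):
    # Truthful reporting must be weakly better, judged by agent i's true preference.
    return all(
        utility(p[i - 1], rule(p) == i) >= utility(p[i - 1], rule(d) == i)
        for p in profiles(n)
        for i in range(1, n + 1)
        for d in deviations(p, i)
    )


def non_bossy(rule, n):
    # If agent i's own outcome is unchanged, the whole outcome must be unchanged.
    return all(
        rule(p) == rule(d)
        for p in profiles(n)
        for i in range(1, n + 1)
        for d in deviations(p, i)
        if (rule(p) == i) == (rule(d) == i)
    )


def is_dictator(rule, n, i):
    for p in profiles(n):
        if p[i - 1] == "+" and rule(p) != i:
            return False
        if p[i - 1] == "-" and rule(p) == i:
            return False
    return True


def dictatorial(rule, n):
    return any(is_dictator(rule, n, i) for i in range(1, n + 1))


def pareto_optimal(rule, n):
    def dominated(p, x):
        ux = [utility(p[j - 1], x == j) for j in range(1, n + 1)]
        for y in range(n + 1):
            uy = [utility(p[j - 1], y == j) for j in range(1, n + 1)]
            if all(a >= b for a, b in zip(uy, ux)) and uy != ux:
                return True
        return False

    return not any(dominated(p, rule(p)) for p in profiles(n))


def null_rule(profile):
    return 0  # Example 3: the object is never assigned


n = 3
print(strategy_proof(null_rule, n), non_bossy(null_rule, n))  # True True
print(dictatorial(null_rule, n), pareto_optimal(null_rule, n))  # False False
```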

3.3 Pareto optimal and non-dictatorial rules

In this section, we characterize strategy-proof, Pareto optimal, and non-dictatorial rules. We start by defining new terms in the context of the full preference domain. First, a rule f is constrained bossy if there exist two distinct agents \(i,j\in N\) and preference profiles \(R,\widetilde{R}\in {\mathfrak {R}}\) such that \(R_{j}=R_{j}^{+}\), \(f(R)=j\), and \(f_{i}(\widetilde{R}_{i},R_{-i})=f_{j}( \widetilde{R}_{i},R_{-i})=0.\) In this case, agent i is constrained bossy with agent j: by changing his own report, agent i takes the object away from agent j, who prefers to receive it, while agent i still does not receive it. Second, recall that if \(f(R^{+})=i\), agent i is the top agent with respect to f. A rule is top-bossy if some agent is constrained bossy with its top agent. Third, we define a Top-Bossy Choice Function as follows.
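Both definitions can be checked by enumeration for small n. In the Python sketch below, the encoding (`"+"` prefers the object, `"0"` indifferent, `"-"` prefers not to receive it; agents numbered from 1; outcome 0 for no assignment), the helper names, and the two example rules are assumptions made for illustration.

```python
from itertools import product

# Assumed encoding: "+" prefers the object, "0" indifferent, "-" prefers not to get it.
PREFS = ("+", "0", "-")


def constrained_bossy_pairs(rule, n):
    """All pairs (i, j) such that agent i is constrained bossy with agent j.

    That is, at some profile R with R_j = R_j^+ and f(R) = j, agent i can
    switch to a report R~_i after which neither i nor j receives the object.
    """
    pairs = set()
    for p in product(PREFS, repeat=n):
        j = rule(p)
        if j == 0 or p[j - 1] != "+":
            continue  # need f(R) = j with R_j = R_j^+
        for i in range(1, n + 1):
            if i == j:
                continue
            for q in PREFS:
                d = p[: i - 1] + (q,) + p[i:]
                if rule(d) not in (i, j):  # f_i = f_j = 0 after i's switch
                    pairs.add((i, j))
    return pairs


def top_bossy(rule, n):
    """True if some agent is constrained bossy with the top agent f(R^+)."""
    top = rule(("+",) * n)
    return top != 0 and any(j == top for _, j in constrained_bossy_pairs(rule, n))


def serial(p):
    # Hypothetical: agent 1 gets the object if he wants it, else agent 2 if he does.
    return 1 if p[0] == "+" else (2 if p[1] == "+" else 0)


def fragile(p):
    # Hypothetical: agent 1 gets the object only when both agents want it.
    return 1 if p == ("+", "+") else (2 if p[1] == "+" else 0)


print(constrained_bossy_pairs(serial, 2), top_bossy(serial, 2))  # set() False
print(constrained_bossy_pairs(fragile, 2), top_bossy(fragile, 2))  # {(2, 1)} True
```

In `fragile`, agent 2 can move from \(R_2^+\) to an indifferent or negative report at \(R^+\), so that neither he nor the top agent 1 receives the object; in `serial`, no such move exists.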

Definition 3

A rule f is a Top-Bossy Choice Function if it is top-bossy, non-forceful, and non-wasteful, and has no discard agent.

The following proposition characterizes Pareto optimal and non-dictatorial rules.

Proposition 5

A rule f is Pareto optimal and non-dictatorial if and only if it is a Top-Bossy Choice Function.

Proof

Let f be a Top-Bossy Choice Function. By definition, f is non-forceful and non-wasteful and has no discard agent, so f is Pareto optimal by Lemma 1. Suppose to the contrary that f is dictatorial. Then, there exists a dictator \(j\in N\), who receives the object whenever he prefers to do so; in particular, \(f(R^+)=j\), so j is the top agent. Since f is top-bossy, there is an agent \(i\in N\) who is constrained bossy with j, i.e., there exist \(R\in {\mathfrak {R}}\) and \(\widetilde{R}_{i}\in {\mathfrak {R}}_{i}\) such that \(R_{j}=R_{j}^{+}\), \(f(R)=j\), and \(f_{i}(\widetilde{R}_{i},R_{-i})=f_{j}(\widetilde{R}_{i},R_{-i})=0\). Since \(R_{j}=R_{j}^{+}\) and \(f_{j}(\widetilde{R}_{i},R_{-i})=0\), agent j does not obtain the object at \((\widetilde{R}_{i},R_{-i})\) although he prefers to. Thus, agent j is not a dictator, a contradiction.

Let f be Pareto optimal and non-dictatorial. By Lemma 1, f is non-forceful and non-wasteful and has no discard agent. Suppose to the contrary that f is not top-bossy. Since f is non-wasteful, the object is assigned at \(R^{+}\). Thus, there exists a top agent \(i\in N\) such that \(f(R^{+})=i\). Pareto optimality also implies strategy-proofness of f by Proposition 1. Since f is strategy-proof and not top-bossy, \(f(R_{i}^{+},R_{-i})=i\) for all \(R_{-i}\): otherwise, changing the other agents' preferences from \(R^{+}\) one at a time, some agent k would take the object away from agent i, and k would either receive it, violating strategy-proofness, or not receive it, making k constrained bossy with i. Also, since there is no discard agent, \(f_{i}(R_{i}^{-},R_{-i})=0\) for all \(R_{-i}\). Then, agent i receives the object whenever he prefers to do so, and does not receive it whenever he prefers not to. Thus, agent i is a dictator, a contradiction.\(\square \)

We next provide an example of a Pareto optimal and non-dictatorial rule.

Example 4

Let the rule f be such that (if \(n\ge 3\))

The object is never assigned to an agent who is indifferent or who prefers not to receive it. Then, f is non-forceful and has no discard agent. Moreover, the object is always assigned when some agent prefers to receive it, so f is non-wasteful. Thus, f is Pareto optimal by Lemma 1. The first agent receives the object when all agents prefer to receive it, so he is the top agent and the only candidate for being a dictator; moreover, the object is not awarded to him when he prefers not to receive it. However, there are profiles at which the last agent does not prefer to receive the object and the first agent does not receive it even though he wants to. Thus, the first agent is not a dictator, so f is non-dictatorial. Because the last agent can, by changing his own report, take the object away from the first agent without receiving it himself, the last agent is constrained bossy with the first agent, who is the top agent; hence, f is top-bossy.
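The displayed rule of Example 4 is not reproduced in this section, so the Python sketch below checks a hypothetical three-agent stand-in (an assumption, not necessarily the paper's rule) with the features described in the text: the object goes only to an agent who prefers it, it is assigned whenever someone prefers it, the first agent receives it at \(R^+\), and when the last agent does not prefer the object the second agent is served before the first. It assumes the encoding `"+"`, `"0"`, `"-"` with outcome 0 for no assignment, checks Pareto optimality by direct dominance, reads dictatorship as in the proofs of Propositions 4 and 5, and tests top-bossiness as defined above. All names are hypothetical.

```python
from itertools import product

# Assumed encoding: "+" prefers the object, "0" indifferent, "-" prefers not to get it.
PREFS = ("+", "0", "-")
N = 3  # the example requires at least three agents


def stand_in_rule(p):
    """Hypothetical stand-in for Example 4, not the paper's displayed rule.

    Serve the agents who report "+" in priority order 1, 2, 3 if agent 3
    reports "+", and in order 2, 1, 3 otherwise; if nobody reports "+",
    leave the object unassigned (outcome 0).
    """
    order = (1, 2, 3) if p[2] == "+" else (2, 1, 3)
    for j in order:
        if p[j - 1] == "+":
            return j
    return 0


def utility(pref, receives):
    if pref == "+":
        return 1 if receives else 0
    if pref == "-":
        return -1 if receives else 0
    return 0


def pareto_optimal(rule):
    for p in product(PREFS, repeat=N):
        ux = [utility(p[j - 1], rule(p) == j) for j in range(1, N + 1)]
        for y in range(N + 1):
            uy = [utility(p[j - 1], y == j) for j in range(1, N + 1)]
            if all(a >= b for a, b in zip(uy, ux)) and uy != ux:
                return False  # outcome y Pareto dominates f(p)
    return True


def is_dictator(rule, i):
    for p in product(PREFS, repeat=N):
        if p[i - 1] == "+" and rule(p) != i:
            return False
        if p[i - 1] == "-" and rule(p) == i:
            return False
    return True


def top_bossy(rule):
    top = rule(("+",) * N)
    if top == 0:
        return False  # no top agent
    for p in product(PREFS, repeat=N):
        if rule(p) != top or p[top - 1] != "+":
            continue
        for i in range(1, N + 1):
            if i == top:
                continue
            for q in PREFS:
                d = p[: i - 1] + (q,) + p[i:]
                if rule(d) not in (i, top):
                    return True  # agent i is constrained bossy with the top agent
    return False


print(pareto_optimal(stand_in_rule))  # True
print([i for i in range(1, N + 1) if is_dictator(stand_in_rule, i)])  # []
print(stand_in_rule(("+",) * N), top_bossy(stand_in_rule))  # 1 True
```

Agent 3 plays the role of the last agent here: switching from \(R_3^+\) to an indifferent report at \(R^+\) hands the object to agent 2, so neither agent 3 nor the top agent 1 receives it.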

As a result of Propositions 3, 4, and 5, we obtain the following impossibility result.

Proposition 6

No strategy-proof rule satisfies Pareto optimality, non-dictatorship, and non-bossiness simultaneously.

Proposition 6 is a direct result of Proposition 3 (which shows that a strategy-proof, Pareto optimal, and non-bossy rule is dictatorial), Proposition 4 (which shows that a strategy-proof, non-dictatorial, and non-bossy rule is not Pareto optimal), and Proposition 5 (which shows that a strategy-proof, Pareto optimal, and non-dictatorial rule is (constrained) bossy).

The impossibility result is tight, except when there are only two agents: in that case, Lemma 3 shows that Pareto optimality alone implies dictatorship.

Lemma 3

If \(n=2\) and a rule f is Pareto optimal, then f is dictatorial.

Proof

Suppose that \(n=2\) and f is Pareto optimal. Suppose to the contrary that f is non-dictatorial. By Lemma 1, Pareto optimality implies that f is non-wasteful and non-forceful and has no discard agent. Because of non-wastefulness, \(f(R^{+})>0\); without loss of generality, suppose that \(f(R^{+})=1\). Since agent 1 is not a discard agent, he does not receive the object whenever he prefers not to; since he is not a dictator, there must therefore be a profile at which he prefers to receive the object but does not. As \(f(R^{+})=1\), agent 2 does not prefer to receive the object at this profile, so \(f(R_{1}^{+},R_{2}^{\lnot })\ne 1\) for some \(R_{2}^{\lnot }\in \{R_{2}^{0},R_{2}^{-}\}\). Then, either \(f(R_{1}^{+},R_{2}^{\lnot })=0\) or \(f(R_{1}^{+},R_{2}^{\lnot })=2\). However, \(f(R_{1}^{+},R_{2}^{\lnot })=0\) contradicts non-wastefulness. If \(f(R_{1}^{+},R_{2}^{\lnot })=2\), then either \(f(R_{1}^{+},R_{2}^{-})=2\) or \(f(R_{1}^{+},R_{2}^{0})=2\). If \(f(R_{1}^{+},R_{2}^{-})=2\), agent 2 is a discard agent; and if \(f(R_{1}^{+},R_{2}^{0})=2\), f is forceful. Thus, all three cases contradict the Pareto optimality of f. Therefore, when there are two agents, Pareto optimality implies dictatorship.\(\square \)
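For two agents, Lemma 3 can also be checked exhaustively. The Python sketch below assumes the encoding `"+"`, `"0"`, `"-"` (outcome 0 for no assignment) and represents preferences by utilities: receiving the object is worth 1 to a `"+"` agent, -1 to a `"-"` agent, and 0 to an indifferent agent, while not receiving it is worth 0 (any numbers preserving these orderings would do). It reads dictatorship as in the proofs of Propositions 4 and 5, lists every Pareto optimal rule for \(n=2\), and confirms that each has a dictator. The helper names are hypothetical.

```python
from itertools import product

# Assumed encoding: "+" prefers the object, "0" indifferent, "-" prefers not to get it.
PREFS = ("+", "0", "-")
N = 2


def utility(pref, receives):
    if pref == "+":
        return 1 if receives else 0
    if pref == "-":
        return -1 if receives else 0
    return 0


def pareto_outcomes(p):
    """Outcomes (0 = unassigned, j = agent j) that are not Pareto dominated at p."""
    def utils(x):
        return [utility(p[j - 1], x == j) for j in range(1, N + 1)]

    def dominated(x):
        return any(
            all(a >= b for a, b in zip(utils(y), utils(x))) and utils(y) != utils(x)
            for y in range(N + 1)
        )

    return [x for x in range(N + 1) if not dominated(x)]


def is_dictator(rule, i):
    """rule maps profiles to outcomes; i wins whenever he reports "+", never on "-"."""
    for p, winner in rule.items():
        if p[i - 1] == "+" and winner != i:
            return False
        if p[i - 1] == "-" and winner == i:
            return False
    return True


profiles = list(product(PREFS, repeat=N))
# A rule is Pareto optimal exactly when its outcome at every profile is, so the
# Pareto optimal rules are all ways of choosing one such outcome per profile.
po_rules = [
    dict(zip(profiles, choice))
    for choice in product(*(pareto_outcomes(p) for p in profiles))
]

print(len(po_rules))  # 24
print(all(is_dictator(r, 1) or is_dictator(r, 2) for r in po_rules))  # True
```

Of the 24 Pareto optimal rules, the 12 with \(f(R^+)=1\) make agent 1 the dictator and the remaining 12 make agent 2 the dictator, matching the case split in the proof.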

4 Related literature

This paper belongs to the literature on the allocation problem of an indivisible object. This problem goes back to Glazer and Ma (1989), who consider allocating a prize to the agent who values it most without monetary transfers; Perry and Reny (1999) and Olszewski (2003) generalize their results. Pápai (2001) considers the allocation problem of an indivisible object without monetary transfers and shows that no strategy-proof rule satisfies Pareto optimality, non-dictatorship, and non-bossiness simultaneously. While Pápai (2001) restricts attention to the strict preference domain, we allow agents to be indifferent and generalize the results of Pápai (2001) to the full preference domain.

The allocation problem of an indivisible object has also been studied with monetary transfers; see, for example, Tadenuma and Thomson (1993, 1995) and Ohseto (1999). Moreover, Ohseto (2000) proves the impossibility of finding a strategy-proof and Pareto optimal rule, Fujinaka and Sakai (2009) analyze whether possible manipulations (i.e., the absence of strategy-proofness) can have a serious impact on the outcome of agents, and, in a recent study, Athanasiou (2013) characterizes strategy-proof rules satisfying other properties. This paper is also related to the literature on the allocation problem of multiple indivisible objects without monetary transfers (e.g., Pápai 2000; Ehlers and Klaus 2007; Kesten and Yazici 2012) and to the literature on the impartial allocation of a prize (e.g., Holzman and Moulin 2013).

Although agents are often indifferent in practice, most of the prior literature restricts attention to the strict preference domain for simplicity; several studies, however, consider the full preference domain. For instance, Bogomolnaia et al. (2005) show that all efficient allocations of indivisible objects can be generated using serially dictatorial rules; Katta and Sethuraman (2006) prove that strategy-proofness is incompatible with efficiency on the full preference domain; Larsson and Svensson (2006) generalize strategy-proof voting rules to the full preference domain; Yilmaz (2009) characterizes individually rational, efficient, and fair rules on the full preference domain; Alcalde-Unzu and Molis (2011) and Jaramillo and Manjunath (2012) generalize the top trading cycles mechanism to the full preference domain; and Athanassoglou and Sethuraman (2011) prove that individual rationality, efficiency, and strategy-proofness are incompatible on the full preference domain.

Notes

See Barbera (2001) for a comprehensive survey on strategy-proof rules.

References

Alcalde-Unzu, J., Molis, E.: Exchange of indivisible goods and indifferences: the top trading absorbing sets mechanisms. Games Econ. Behav. 73, 1–16 (2011)

Athanasiou, E.: A Solomonic solution to the problem of assigning a private indivisible good. Games Econ. Behav. 82, 369–387 (2013)

Athanassoglou, S., Sethuraman, J.: House allocation with fractional endowments. Int. J. Game Theory 40, 481–513 (2011)

Barbera, S.: An introduction to strategy-proof social choice functions. Soc. Choice Welf. 18, 619–653 (2001)

Bogomolnaia, A., Deb, R., Ehlers, L.: Strategy-proof assignment on the full preference domain. J. Econ. Theory 123, 161–186 (2005)

Ehlers, L., Klaus, B.: Consistent house allocation. Econ. Theory 30, 561–574 (2007)

Erdil, A., Ergin, H.: What’s the matter with tie-breaking? Improving efficiency in school choice. Am. Econ. Rev. 98(3), 669–689 (2008)

Fujinaka, Y., Sakai, T.: The positive consequences of strategic manipulation in indivisible good allocation. Int. J. Game Theory 38, 325–348 (2009)

Glazer, J., Ma, C.A.: Efficient allocation of a prize—King Solomon’s dilemma. Games Econ. Behav. 1, 222–233 (1989)

Holzman, R., Moulin, H.: Impartial nominations for a prize. Econometrica 81(1), 173–196 (2013)

Jaramillo, P., Manjunath, V.: The difference indifference makes in strategy-proof allocation of objects. J. Econ. Theory 147, 1913–1946 (2012)

Katta, A., Sethuraman, J.: A solution to the random assignment problem on the full preference domain. J. Econ. Theory 131, 231–250 (2006)

Kesten, O., Yazici, A.: The Pareto-dominant strategy-proof and equitable rule for problems with indivisible goods. Econ. Theory 50, 463–488 (2012)

Larsson, B., Svensson, L.G.: Strategy-proof voting on the full preference domain. Math. Soc. Sci. 52, 272–287 (2006)

Ohseto, S.: Strategy-proof allocation mechanisms for economies with an indivisible good. Soc. Choice Welf. 16, 121–136 (1999)

Ohseto, S.: Strategy-proof and efficient allocation of an indivisible good on finitely restricted preference domains. Int. J. Game Theory 29, 365–374 (2000)

Olszewski, W.: A simple and general solution to King Solomon’s problem. Games Econ. Behav. 42, 315–318 (2003)

Pápai, S.: Strategy-proof multiple assignments using quotas. Rev. Econ. Des. 5, 91–105 (2000)

Pápai, S.: Strategy-proof single unit award rules. Soc. Choice Welf. 18, 785–798 (2001)

Perry, M., Reny, P.: A general solution to King Solomon’s dilemma. Games Econ. Behav. 26, 279–285 (1999)

Tadenuma, K., Thomson, W.: The fair allocation of an indivisible good when monetary compensations are possible. Math. Soc. Sci. 25, 117–132 (1993)

Tadenuma, K., Thomson, W.: Games of fair division. Games Econ. Behav. 9, 191–204 (1995)

Yilmaz, O.: Random assignment under weak preferences. Games Econ. Behav. 66(1), 546–558 (2009)

Additional information

We would like to thank Onur Kesten, Ersin Korpeoglu, and Ozgun Ekici for their useful suggestions.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Korpeoglu, C.G. Allocation of an indivisible object on the full preference domain: axiomatic characterizations. Econ Theory Bull 6, 41–53 (2018). https://doi.org/10.1007/s40505-017-0122-7

DOI: https://doi.org/10.1007/s40505-017-0122-7