Abstract

Background and Objective

Development of clear and effective discrete-choice experiment surveys is an important step toward ensuring accurate and usable preference results. Pretest interviews and pilot testing are common in the development of discrete-choice experiments, and it is important for researchers to report details of survey changes resulting from patient feedback elicited in pilot work. This paper details pilot testing of an online discrete-choice experiment to elicit preferences for long-acting antiretroviral therapies among patients with HIV.

Methods

The survey included an introduction to hypothetical treatment options, descriptions of attributes, comprehension questions, instructions for completing a discrete-choice experiment, a discrete-choice experiment with 17 choice tasks, and questions about personal characteristics. We piloted the survey with 50 respondents over ten waves. Each wave incorporated design improvements based on observations made during the previous wave. Respondents completed the online survey while screen sharing with a researcher, allowing interactive discussion. We developed a scheme for assessing and categorizing the survey changes.

Results

Changes to the survey were categorized by how they affected aspects of the discrete-choice experiment or the likely quality of the resulting data. The four categories of impact are: understanding of attributes; the underlying discrete-choice experiment design and understanding of the choice question; collection of individual characteristics hypothesized to affect preferences; and changes that improved the clarity and usability of the survey without directly affecting the other categories (e.g., survey navigation, instructional clarity, and formatting changes).

Conclusions

Detailed attention to the respondent experience in this large pilot allowed survey improvements that will likely reduce ambiguity, ensure more accurate capture of patient preferences and, ultimately, improve product development for long-acting antiretroviral therapies.

This paper details the pilot testing process for a discrete-choice experiment that examines patient preferences for long-acting antiretroviral therapies, and summarizes revisions to the survey that improved respondent understanding of the attributes, clarified the discrete-choice experiment choice questions and design, and optimized collection of individual characteristics that may affect choice.

Pretest interviews and pilot testing are common in the development of discrete-choice experiments, and it is important for researchers to report details of survey changes resulting from patient feedback elicited in pilot work.

Detailed attention to the respondent experience in this large pilot allowed survey improvements, such as the addition of visual aids, that will likely reduce ambiguity, ensure more accurate capture of patient preferences, and provide an example for other researchers to follow in conducting and describing qualitative work that supports the development of discrete-choice experiments.

1 Introduction

Discrete-choice experiments (DCEs) are a valuable method for quantifying patient preferences for emerging health technologies [1]. Results of these studies allow product developers to align new product attributes with patient preferences, which improves acceptability of these new products. Most published reports of DCEs focus on study results, but it is also important for researchers to publish descriptions of their methodology and processes for building and executing DCEs. Such descriptions of formative qualitative research, pretest interviews, and pilot testing are important, and should be included in reports of DCEs in order to improve evaluation of survey performance in eliciting preferences and the interpretability of results. In this paper, we address this gap by describing the pilot testing of a DCE examining patient preferences for long-acting antiretroviral therapy (LA-ART) for HIV treatment, and documenting the changes that were made to the DCE survey as a result of pilot testing.

Currently, most people living with HIV in the USA take one or more daily oral tablets combining two or more antiretroviral medications for treatment, the success of which is evaluated by viral suppression (i.e., an undetectable HIV viral load). Despite advances including the availability of single-tablet daily regimens for HIV, many patients still face challenges with antiretroviral therapy (ART) initiation and adherence [2]. Of the 1.1 million people in the USA living with HIV, approximately 86% have been diagnosed, 65% are receiving ART, and 56% are virally suppressed [3]. Long-acting formulations of antiretroviral medications, taken in combination, are an emerging HIV treatment modality with the potential to increase ART uptake and adherence [4]. New LA-ART modalities will provide increased options for patients who face barriers to taking daily oral pills, but the acceptability of these treatment regimens remains unclear. For this reason, our research team is conducting a DCE in the USA, which will establish the LA-ART product attributes and individual patient characteristics that will drive end user acceptability.

Attributes and levels for a DCE designed to elicit preferences for potential LA-ART options were identified using a systematic process [5]. This process started with eight attributes defining potential LA-ART products, based on existing literature and knowledge of products in development. Then, we conducted 12 key informant interviews with HIV treatment experts. These interviews allowed iterative updating of the list of attributes, the set of plausible levels for each attribute, and restrictions on the combinations of attribute levels. Key informants converged on four delivery modes (long-acting oral tablets, subcutaneous injections, intramuscular injections, implants), and six other attributes of LA-ART: frequency of dosing, location of treatment, pain, pre-treatment time undetectable, pre-treatment negative reaction testing, and late-dose leeway [5].

The ISPOR checklist for conjoint analysis applications in health advises researchers to be fully transparent about their methodology and processes for study design and execution [6]. This paper documents the processes of pilot testing and refinement of our DCE of LA-ART acceptability, and fills the gap between attribute development and survey fielding.

2 Methods

2.1 Study Setting and Population

The pilot testing of this survey included ten waves of survey administration to 50 respondents and was conducted from September 2020 through February 2021. Respondents were recruited from the University of Washington (UW) HIV registry and directly from the UW HIV clinics in western Washington. The UW HIV Information System contains clinical information on patients with HIV seen since 1 January, 1995 at a network of HIV clinics run by the UW in Seattle and the surrounding region who have consented to the use of their de-identified data for research. Respondents were eligible for this study if they met the following inclusion criteria: (1) HIV-positive status, (2) enrollment in care at the UW Madison Clinic or one of its satellite clinics in western Washington, (3) age 18 years or older, (4) fluency in English, and (5) capacity to provide informed consent. Respondents were excluded if they met any of the following exclusion criteria: (1) cognitive impairment, or being under the influence of drugs or alcohol at the time of the pilot session; (2) being a “long-term non-progressor,” meaning they had maintained an undetectable viral load despite not taking ART; or (3) already taking long-acting injectable ART as part of a clinical trial. Because in-person participation was very limited owing to COVID-19, respondents also had to be able to use Zoom video teleconferencing and have an active e-mail address.

2.2 Pilot Process

Pilot testing was conducted using Health Insurance Portability and Accountability Act-compliant Zoom (Zoom Video Communications, San Jose, CA, USA). Using this platform, the respondent completed the online survey on their own device (a computer, tablet, or smartphone), while screen sharing and discussing the survey with a member of the research team (ATB). As they completed the survey, respondents were encouraged to “think aloud,” and asked to describe their understanding of the product attributes and levels, as well as their understanding of the survey and DCE instructions. All pilot interviews were recorded on Zoom, with both audio and video of the screen sharing.

After each respondent completed the pilot test, the researcher who conducted the pilot test (ATB) completed a qualitative assessment of the performance and comprehension of the respondent. This included a rating of whether and the extent to which the respondent required prompting to describe the choices in the DCE. Full details of this evaluation are included in the Appendix in the Electronic Supplementary Material (ESM).

2.3 Survey Instrument

The survey featured 88 questions, and was administered using SurveyEngine (SurveyEngine GmbH [7], Berlin, Germany), a web-based platform for administering DCE surveys. The first portion of the survey presented background information about the purpose of the study. We explained the details of the hypothetical modalities of LA-ART products, and the relevant attributes of these treatments. The presentation of this information featured comprehension questions to ensure respondent understanding of the content (see the ESM for the full text of these questions), and two “practice DCE” scenarios, where respondents viewed the information in the same format as the DCE. The survey also included a series of questions about the respondent’s history with HIV and HIV treatment; these characteristics are hypothesized to affect patient preferences for LA-ART.

The next section was the DCE, which initially presented 16 choice scenarios to each respondent (a 17th scenario was later added). Every choice featured three alternatives, which varied across the seven product attributes. Two of the alternatives were hypothetical LA-ART regimens, and one alternative was the respondent’s current treatment (daily oral tablets). Respondents were randomly assigned to one of four blocks. Each block was associated with 16 out of 64 possible choice scenarios. The 16 scenarios for the respondent’s block were shown to the respondent in a random order. The DCE used an unlabeled experimental design developed with Ngene software (ChoiceMetrics, Sydney, NSW, Australia), with a modified Fedorov algorithm and D-error minimization for at least 3 minutes. The final choice sets had a D-error of 0.053978.
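The blocking and ordering described above can be illustrated with a minimal sketch. It assumes scenarios are numbered 0 to 63 and that blocks are consecutive groups of 16; both are illustrative assumptions, as the actual allocation of scenarios to blocks came from the Ngene design, and the function names and seed are hypothetical.

```python
import random

N_SCENARIOS = 64  # total choice scenarios in the design (from the text)
N_BLOCKS = 4      # number of blocks (from the text)
BLOCK_SIZE = N_SCENARIOS // N_BLOCKS  # 16 scenarios per block


def build_blocks():
    """Partition scenario IDs 0..63 into four consecutive blocks of 16.

    The consecutive partition is an assumption for illustration only;
    the real block membership was determined by the experimental design.
    """
    ids = list(range(N_SCENARIOS))
    return [ids[b * BLOCK_SIZE:(b + 1) * BLOCK_SIZE] for b in range(N_BLOCKS)]


def assign_respondent(blocks, rng):
    """Randomly pick one block and return (block index, shuffled scenario order)."""
    block_index = rng.randrange(len(blocks))
    order = list(blocks[block_index])  # copy so the block itself is not reordered
    rng.shuffle(order)
    return block_index, order


rng = random.Random(2021)  # hypothetical seed, used only for reproducibility here
blocks = build_blocks()
block_index, order = assign_respondent(blocks, rng)
```

Each respondent sees every scenario in their block exactly once, and the shuffle varies only the presentation order, which spreads any ordering effects across scenarios.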

Following the DCE, respondents were asked a series of questions about other personal characteristics that were hypothesized to affect LA-ART preferences. This includes access to healthcare, internalized HIV stigma, sociodemographic characteristics, sexuality, employment, and the experience of making choices in the DCE. Institutional review board approval for the entire study (including key informant interviews, pilot testing, and the full survey) was granted by the University of Washington Human Subjects Division (STUDY00007390). Respondents consented to participate both verbally and electronically at the time of pilot testing.

2.4 Pilot Feedback and Improvements

Recordings of the pilot tests were shared with members of the research team in a Health Insurance Portability and Accountability Act-compliant cloud storage drive. The study team evaluated these recordings for points of confusion or any other potential area of improvement for the survey. Each week, the team discussed these potential improvements during a regular meeting, and iteratively incorporated changes into subsequent waves of the pilot. All changes to the survey were systematically logged. Successive waves had the following number of unique respondents: wave 1 (8 respondents), 2 (12), 3 (6), 4 (1), 5 (4), 6 (3), 7 (4), 8 (1), 9 (3), 10 (8). The number of respondents varied across waves owing to the iterative nature of the survey improvements and to differences in the time needed for survey edits and recruitment across waves. We ended with ten waves, as this was the number of iterations undertaken before completing the interview with the 50th respondent.

Upon completion of the pilot testing, every change to the survey from the pilot log was categorized according to its impact on the DCE and the quality of the resulting data. Substantive changes were those that we deemed to have probable effects on the DCE and survey data collection, and these changes converged into three major categories that were identified post-hoc: (1) changes that likely impacted the understanding of the attributes, (2) changes that likely impacted the underlying DCE design and understanding of the choice question, and (3) changes that likely impacted the collection of individual characteristics that may affect choice. Finally, there was a fourth category that included the changes unlikely to be substantive; these were changes that improved survey navigation and instructional clarity, clarified the meaning of questions and their options, or adjusted formatting. After all changes were made, the full survey (ESM) included 88 questions (five comprehension questions, two practice DCE scenarios, 64 covariates, and 17 DCE scenarios).

2.5 Data Analysis

Summary statistics were used to present characteristics of the pilot test respondent sample. We also used summary statistics to describe the respondents’ performance on the comprehension questions across waves (proportion correct on first try, and proportion ever correct), and the proportion of respondents who were able to describe the treatment choices in the practice DCEs without prompting.
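As an illustration of the per-wave summaries described above, the following sketch computes the proportion correct on the first try and the proportion ever correct for each wave. The record layout, function name, and example values are hypothetical and are not the study data.

```python
from collections import defaultdict


def proportions_by_wave(records):
    """Return {wave: (share correct on first try, share ever correct)}.

    Each record is a tuple (wave, correct_on_first_try, ever_correct),
    a hypothetical layout chosen for this sketch.
    """
    counts = defaultdict(lambda: [0, 0, 0])  # n, first-try correct, ever correct
    for wave, first_try, ever in records:
        tally = counts[wave]
        tally[0] += 1
        tally[1] += int(first_try)
        tally[2] += int(ever)
    return {w: (c[1] / c[0], c[2] / c[0]) for w, c in sorted(counts.items())}


# Made-up responses to one comprehension question, for illustration only
example = [
    (1, True, True),
    (1, False, True),
    (1, False, False),
    (1, True, True),
    (2, True, True),
    (2, False, True),
]
summary = proportions_by_wave(example)
```

With waves as small as one respondent, per-wave proportions are very coarse, so they are best read as descriptive rather than as evidence of a trend.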

3 Results

Characteristics of the pilot respondents in all waves are displayed in Table 1. From wave 1 to wave 10, the proportion of respondents who required prompting to describe the choices in the first practice DCE decreased from 63 to 0%, and the proportion who required prompting to describe the choices in the second practice DCE decreased from 38 to 0% (Table 2). However, the comprehension questions completed by respondents, which were true/false questions about each attribute, showed no meaningful change in performance over the course of the pilot waves (Table 2). Table 1 also shows that among scenarios with long-acting oral included as an option, 61% of choices were for long-acting oral (the same figure was 54% for injections, and 47% for implants). Current therapy was an option in every scenario, and 17% of choices were for this option.
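The conditional choice shares reported above (the share of choices for a modality among scenarios in which that modality was offered) could be computed along the following lines. This is a sketch under an assumed data layout, with hypothetical names and made-up tasks; it is not the authors' analysis code.

```python
from collections import Counter


def conditional_choice_shares(tasks):
    """Share of tasks in which each option was chosen, among tasks offering it.

    Each task is (options_shown, chosen_option). A modality that appears in
    two alternatives of the same task still counts that task only once.
    """
    shown = Counter()
    chosen = Counter()
    for options, choice in tasks:
        for option in set(options):
            shown[option] += 1
        chosen[choice] += 1
    return {option: chosen[option] / shown[option] for option in shown}


# Made-up choice tasks, for illustration only
tasks = [
    (("oral", "injection", "current"), "oral"),
    (("oral", "implant", "current"), "implant"),
    (("injection", "implant", "current"), "injection"),
    (("oral", "injection", "current"), "current"),
]
shares = conditional_choice_shares(tasks)
```

Because the denominators differ by modality, these shares do not sum to 100% across options, which is also true of the figures reported in the text.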

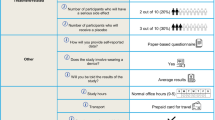

The full list of changes that occurred during the pilot testing, within their respective categories, is displayed in Fig. 1, with annotations to indicate the wave in which each change was made. Figure 1a shows the changes that were made to improve the respondents’ understanding of the attributes of the hypothetical LA-ART products. All attributes received specific changes, except frequency of dosing and pain, which were well understood by all respondents from the beginning. We added clickable icons within the DCE, which show the description of each attribute, using the same wording that was used in the section of the survey that first presented the attribute description. Another important change was the wording of the concept of “time undetectable,” which refers to the amount of time a respondent would need an undetectable viral load prior to initiating a hypothetical LA-ART product. Initially, this undetectability was referred to as having achieved “viral suppression,” but we found that not all respondents were familiar with this terminology. Being “undetectable” is more commonly discussed by respondents with their healthcare providers, and we therefore changed this wording to “pre-treatment time undetectable.” We also expanded the content that describes the “late-dose leeway” attribute, to add examples of short and long leeway time periods, and used precise wording to explain that if doses are received outside the leeway period, then the HIV viral load can become detectable.

Fig. 1 a Changes that likely impact the understanding of the attributes, across waves. b Changes that likely impact the underlying discrete-choice experiment design, and the understanding of the choice question, across waves. c Changes that likely impact the collection of individual characteristics that may affect choice, across waves. W wave

Figure 1b lists the changes that impacted the underlying DCE design and the understanding of the choice question. This included adding emphasis that each choice was between two hypothetical long-acting treatment options and current therapy (daily oral tablets). In the early waves of the pilot, some respondents struggled to understand how to interpret and complete the practice DCE scenarios that are shown early in the survey. To alleviate this problem, we added two videos that explained the elements of the DCE choice scenario (see Fig. 2 for a screenshot of the second video, and the ESM for the full videos). In the videos, a voiceover from a research team member described the details of all three alternatives (displayed in vertical columns), including the level of each attribute for that alternative, with red arrows to guide the respondent’s attention as each row within each column was described. The researcher who conducted the interviews (ATB) noted that the sequence of two separate videos was helpful: the first video featured a practice DCE with only four relatively simple attributes (mode, location, frequency, and pain), and allowed respondents to become oriented with the structure of the choice sets. Then, the second video added the more complex attributes. This sequence helped certain respondents to more fully appreciate the variations between choice sets. Another important change was the addition of a 17th scenario to the DCE, in which the respondent chose between long-acting oral options and their current treatment. This scenario was added in order to observe the trade-offs that people make among the other attributes (aside from treatment type), within the long-acting oral modality, which we thought might be more popular than other treatment modalities.

Figure 1c shows the changes that were related to the collection of personal characteristics that we hypothesize could be related to treatment choice preferences. In the first nine waves of the survey, we used the 12-item version of the Berger Stigma Scale [8], which was emotionally burdensome for many respondents. For the tenth wave, we switched to a shorter questionnaire [9], which reduced this burden. We also added two questions to the end of the survey, which ask about the respondent’s decision-making process in the DCE. These questions were intended to identify respondents who did not consider all attributes of the alternatives when making their choices.

In the figure in the ESM, we show the full list of all other changes that were made to the survey during the pilot testing. These changes were important for improving the clarity and usability of the survey, but did not directly affect the DCE attributes, choice question, or collection of individual characteristics. These changes were made in order to improve survey navigation and instructional clarity, adjust formatting, and clarify the meaning of questions and their options.

4 Discussion

This paper describes the pilot testing of a DCE that will examine patient preferences for LA-ART, which are important emerging therapies for the treatment of HIV. We used a systematic and standardized process for conducting and recording the details of each respondent’s experience, therefore allowing our research team to identify the parts of the survey that were not clear to respondents or could be improved. Our process included both qualitative and quantitative assessments of the respondents’ experience. In a relatively large sample of respondents (n = 50), we made iterative improvements to the survey over ten waves. These changes clarified points of confusion regarding the hypothetical LA-ART products, as well as confusion in the process of completing a DCE.

During our pilot testing, we found that respondents understood our instructions for the survey quite well, especially after we added videos that showed examples of how to review, consider, and complete the choice tasks. The respondents showed good understanding of most of the attributes. Pain, frequency, and product type were well understood by respondents throughout. Even pre-treatment negative reaction testing, which we assumed was a more complicated concept, was well understood from the start. The more challenging concepts were pre-treatment time undetectable and late-dose leeway, which are important attributes of the first LA-ART regimen on the market, Cabenuva (cabotegravir-rilpivirine). The two key clinical trials demonstrating efficacy of this regimen required participants to have an undetectable viral load (<50 copies/mL) on their current HIV regimen for at least 6 months [10], or after 16 weeks on an oral regimen of dolutegravir-abacavir-lamivudine [11]. Moreover, because of concerns about the emergence of antiretroviral resistance if doses are delayed, detailed guidelines have been developed for close patient management by providers and clinics [12].

The resulting alterations in survey content and clarity were intended to enhance accurate capture of patient preferences and characteristics, which can reduce ambiguity and potentially increase precision [13]. These changes increased our confidence that the survey is measuring what we intend to measure, and that it will ultimately establish how certain patient characteristics are associated with the acceptability of the tested attributes. This process should therefore maximize the usefulness of the data that result from our DCE, and ultimately improve understanding of product development needs with respect to LA-ART, with long-term implications for patient acceptability, adherence, and health.

Strengths of our study include the large sample of pilot respondents, the recordings and specific attention to each respondent’s experience with the survey, and systematic logging of all changes made to the survey. One limitation of the study is that despite our efforts, it is possible that some feedback from respondents was overlooked or missed because we did not conduct in-depth exit interviews. Additionally, there is not a direct link between every piece of information gained and specific changes to the survey. Another limitation is that because of a requirement for online pilot testing owing to COVID-19, all of our pilot respondents had sufficient computer literacy to complete the survey on Zoom while screen sharing with a member of our research team; this may have inadvertently led to the selection of respondents with higher education and better computer literacy, who may not be representative of the general population of people living with HIV. Similarly, our recruitment, which occurred strictly within western Washington, reduces the representativeness of the sample. Finally, while we believe that these changes improved the survey and will lead to more precise data collection, we do not have proof of the connection between the survey changes and the quality of the resulting data.

5 Conclusions

Reporting on the pilot testing of this DCE survey provides transparency in our methodology and processes for study design and execution, which we hope will improve the interpretability of our findings. While details of formative qualitative research to inform DCE design are sometimes published [14], the processes of pilot testing are not. We recommend that for all DCEs, the process of testing the survey instrument, and the details of changes that may directly impact the survey’s data collection, be reported somewhere easily accessible to readers of the results, either as supplementary material or in a stand-alone article. This is the first paper, to our knowledge, to fully report on these practices. This reporting demonstrates that the survey worked as intended, and measured what it intended to measure, which increases the credibility of the results.

References

1. Soekhai V, de Bekker-Grob EW, Ellis AR, et al. Discrete choice experiments in health economics: past, present and future. Pharmacoeconomics. 2019;37(2):201–26.

2. Cohen J, Beaubrun A, Bashyal R, et al. Real-world adherence and persistence for newly-prescribed HIV treatment: single versus multiple tablet regimen comparison among US Medicaid beneficiaries. AIDS Res Ther. 2020;17(1):1–12.

3. Hogg RS. Understanding the HIV care continuum. Lancet HIV. 2018;5(6):e269–70.

4. Kapadia SN, Grant RR, German SB, et al. HIV virologic response better with single-tablet once daily regimens compared to multiple-tablet daily regimens. SAGE Open Med. 2018;6:2050312118816919.

5. Brah AT, Barthold D, Hauber B, et al. The systematic development of attributes and levels for a discrete choice experiment of HIV patient preferences for long-acting antiretroviral therapies. Unpublished Research Square preprint. 2021. https://doi.org/10.21203/rs.3.rs-719332/v1.

6. Bridges JF, Hauber AB, Marshall D, et al. Conjoint analysis applications in health: a checklist. A report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14(4):403–13.

7. SurveyEngine GmbH. SurveyEngine. 2021. http://www.surveyengine.com. Accessed 27 Apr 2022.

8. Reinius M, Wettergren L, Wiklander M, et al. Development of a 12-item short version of the HIV stigma scale. Health Qual Life Outcomes. 2017;15(1):1–9.

9. Kalichman SC, Simbayi LC, Cloete LC, et al. Measuring AIDS stigmas in people living with HIV/AIDS: the Internalized AIDS-Related Stigma Scale. AIDS Care. 2009;21(1):87–93.

10. Swindells S, Andrade-Villanueva JF, Richmond GJ, et al. Long-acting cabotegravir and rilpivirine for maintenance of HIV-1 suppression. N Engl J Med. 2020;382(12):1112–23.

11. Orkin C, Arasteh K, Górgolas Hernández-Mora M, et al. Long-acting cabotegravir and rilpivirine after oral induction for HIV-1 infection. N Engl J Med. 2020;382(12):1124–35.

12. National Alliance of State and Territorial AIDS Directors and the HIV Medicine Association. Preparing for long-acting antiretroviral treatment. 2021. https://www.hivma.org/globalassets/idsa/public-health/covid-19/long-acting-arvs-_final-.pdf. Accessed 27 Apr 2022.

13. Johnson FR, Lancsar E, Marshall D, et al. Constructing experimental designs for discrete-choice experiments: report of the ISPOR Conjoint Analysis Experimental Design Good Research Practices Task Force. Value Health. 2013;16(1):3–13.

14. Hollin IL, Craig BM, Coast J, et al. Reporting formative qualitative research to support the development of quantitative preference study protocols and corresponding survey instruments: guidelines for authors and reviewers. Patient. 2020;13(1):121–36.

Author information

Contributions

Conceptualization: DB, ATB, SMG, JMS, BH. Methodology: DB, ATB, SMG, JMS, BH. Formal analysis and investigation: DB, ATB, SMG, JMS, BH. Writing, original draft preparation: DB. Writing, review and editing: DB, ATB, SMG, JMS, BH. Funding acquisition: SMG, JMS. Supervision: SMG, JMS, BH.

Ethics declarations

Funding

Financial support for this study was provided in part by a grant from the National Institutes of Health (NIH) [R01 MH121424], and by the University of Washington/Fred Hutch Center for AIDS Research, an NIH-funded program under award number AI027757, which is supported by the following NIH institutes and centers: NIAID, NCI, NIMH, NIDA, NICHD, NHLBI, NIA, NIGMS, NIDDK. The funding agreements ensured the authors’ independence in designing the study, interpreting the data, and writing and publishing the report.

Conflicts of interest/competing interests

Brett Hauber was an employee of RTI Health Solutions at the time this research was conducted. Susan M. Graham has received support from Gilead and Cepheid. Jane M. Simoni has received support from Pfizer. Douglas Barthold and Aaron T. Brah have no conflicts of interest directly relevant to the content of this article.

Ethics approval

Institutional review board approval for the entire study (including key informant interviews, pilot testing, and the full survey) was granted by the University of Washington Human Subjects Division (STUDY00007390).

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Availability of data and material

The data are confidential.

Code availability

Details of code and methods not specified in the article are available upon request.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file3 (MOV 10197 KB)

Supplementary file4 (MOV 16733 KB)

About this article

Cite this article

Barthold, D., Brah, A.T., Graham, S.M. et al. Improvements to Survey Design from Pilot Testing a Discrete-Choice Experiment of the Preferences of Persons Living with HIV for Long-Acting Antiretroviral Therapies. Patient 15, 513–520 (2022). https://doi.org/10.1007/s40271-022-00581-z

DOI: https://doi.org/10.1007/s40271-022-00581-z