Abstract

Objectives

The aims of the study were to evaluate usage rates of warfarin in stroke prophylaxis and the association with assessed stages of stroke and bleeding risk in long-term care (LTC) residents with atrial fibrillation (AFib).

Methods

A cross-sectional analysis of two LTC databases (the National Nursing Home Survey [NNHS] 2004 and an integrated LTC database: AnalytiCare) was conducted. The study involved LTC facilities across the USA (NNHS) and within 19 states (AnalytiCare). It included LTC residents diagnosed with AFib (International Classification of Diseases, Ninth Revision, Clinical Modification [ICD-9-CM] diagnostic code 427.3X). Consensus guideline algorithms were used to classify residents by stroke risk categories: low (none or 1+ weak stroke risk factors), moderate (1 moderate), high (2+ moderate or 1+ high). Residents were also classified by number of risk factors for bleeding (0–1, 2, 3, 4+). Current use of warfarin was assessed. A logistic regression model predicted odds of warfarin use associated with the stroke and bleeding risk categories.

Results

The NNHS and AnalytiCare databases had 1,454 and 3,757 residents with AFib, respectively. In all, 34 % and 45 % of residents with AFib in each respective database were receiving warfarin. Only 36 % and 45 % of high-stroke-risk residents were receiving warfarin, respectively. In the logistic regression model for the NNHS data, when compared with those residents having none or 1+ weak stroke risk and 0–1 bleeding risk factors, the odds of receiving warfarin increased with stroke risk (odds ratio [OR] = 1.93, p = 0.118 [1 moderate risk factor]; OR = 3.19, p = 0.005 [2+ moderate risk factors]; and OR = 8.18, p ≤ 0.001 [1+ high risk factors]) and decreased with bleeding risk (OR = 0.83, p = 0.366 [2 risk factors]; OR = 0.47, p ≤ 0.001 [3 risk factors]; OR = 0.17, p ≤ 0.001 [4+ risk factors]). A similar directional but more constrained trend was noted for the AnalytiCare data: only 3 and 4+ bleeding risk factors were significant.

Conclusions

The results from two LTC databases suggest that residents with AFib have a high risk of stroke. Warfarin use increased with greater stroke risk and declined with greater bleeding risk; however, only half of those classified as appropriate warfarin candidates were receiving guideline-recommended anticoagulant prophylaxis.

1 Introduction

Atrial fibrillation (AFib), a condition that becomes more prevalent with advancing age [1], is the most common sustained cardiac arrhythmia [1, 2]. Lifetime risks for developing AFib are 1 in 4 for men and women ≥40 years of age [3]. AFib is a major independent risk factor for stroke; patients with this condition have a nearly fivefold excess in age-adjusted incidence of stroke [4].

The potential benefit of stroke risk reduction from warfarin prophylaxis is substantial. In a meta-analysis of clinical trials, when compared with no antithrombotic, adjusted-dose warfarin reduced stroke in AFib by 64 % and death by 26 %, and compared with antiplatelet therapy, it reduced stroke in AFib by 39 % (all significant at 95 % confidence interval (CI); a 9 % reduction in death for warfarin vs. antiplatelets was not significant) [5]. Recent evidence suggests that net clinical benefit (annual rate of ischaemic strokes and systemic emboli prevented by warfarin minus intracranial haemorrhages attributable to warfarin, then multiplied by an impact weight) is clear among patients having a Cardiac Failure, Hypertension, Age, Diabetes, [and] Stroke [Doubled] [6] (CHADS2) score of ≥2 [7, 8].
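For readers unfamiliar with the score, the CHADS2 tally referred to above can be sketched as follows. The point values follow the acronym as expanded in the text (one point per factor, with stroke doubled). The age threshold of 75 years and the grouping of transient ischaemic attack with prior stroke follow the usual published definition [6]. The function and parameter names are illustrative assumptions, not part of the study.

```python
def chads2_score(chf: bool, hypertension: bool, age_years: int,
                 diabetes: bool, prior_stroke_or_tia: bool) -> int:
    """Return a CHADS2 score between 0 and 6 for one patient."""
    score = 0
    score += 1 if chf else 0                   # C: cardiac failure
    score += 1 if hypertension else 0          # H: hypertension
    score += 1 if age_years >= 75 else 0       # A: age 75 years or older
    score += 1 if diabetes else 0              # D: diabetes mellitus
    score += 2 if prior_stroke_or_tia else 0   # S2: prior stroke/TIA counts double
    return score
```

Under this sketch, a resident aged 76 with hypertension and no other factors scores 2, which is the threshold at which the text describes net clinical benefit as clear.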

Prescribing guidelines for antithrombotic (anticoagulant and antiplatelet) prophylaxis in patients with AFib were issued by the American College of Cardiology (ACC) and the American Heart Association (AHA) jointly with the European Society of Cardiology (ESC) in 2006 [1], by the ESC alone in 2010 [9], and by the American College of Chest Physicians (ACCP) in 2008 [10] and were updated by the ACCP in 2012 [11]. The American Medical Directors Association (AMDA) recently released an updated stroke management guideline that addresses, in part, the use of anticoagulant therapy in nursing home residents with AFib [12].

The guidelines above state that AFib patients with moderate or high risk factors for stroke are candidates for warfarin therapy. Although specific, listed stroke and bleeding risk factors vary somewhat among guidelines, ACC/AHA/ESC (2006), ACCP (2008) and ESC (2010) recommend long-term use of aspirin in patients with no stroke risk factors (ACCP 2012 recommends no use of antithrombotics), aspirin or oral anticoagulation in patients with 1 moderate risk factor (ACCP 2012 recommends oral anticoagulation as preferred), and oral anticoagulation as preferred in patients with 1+ high risk factor(s) or 2+ moderate risk factors. The AMDA 2011 guidelines recommend using CHADS2, but do not link specific scores with a recommendation for warfarin use.

All guidelines above recommend that oral anticoagulation prophylaxis be considered on the basis of degree of stroke risk, but also with consideration of the risk of bleeding. In both the ACC/AHA/ESC 2006 and the ACCP 2008 guidelines, studies regarding bleeding risk and warfarin use are discussed, but no systematic scoring algorithm is recommended. The ESC 2010, AMDA 2011 and ACCP 2012 guidelines specifically demonstrate the use of various algorithms for scoring bleeding risk. However, in contrast to the evaluation of stroke risk, none of these guidelines specifically suggests when to withhold warfarin on the basis of a particular assessment of bleeding risk.

Previous local and regional long-term care (LTC) studies have shown that warfarin was used in only 17–57 % of residents with AFib [13–17]. Lau et al. [16] further found that warfarin was often inconsistently prescribed in LTC when considering resident risk factors for stroke and bleeding, where many optimal candidates for warfarin therapy received suboptimal treatment and residents at high risk for bleeding received excessive treatment. In a recent study, Ghaswalla et al. [18] examined the US National Nursing Home Survey (NNHS) database and concluded that 54 % of US residents with AFib who had indications for, but no contraindications against, warfarin use were prescribed neither warfarin nor antiplatelet agents, suggesting underuse of antithrombotic therapy. In an earlier study, McCormick et al. [17] found that warfarin use increased with magnitude of overall stroke risk and decreased with overall bleeding risk in all residents with AFib, but that this relationship was significant only for high bleeding risk (having 2+ risk factors).

The aim of the current study was to expand the method used by McCormick et al. [17] to quantify, by assignment to stages of increasing severity, combined assessment of overall bleeding risk and stroke risk among all LTC residents with AFib (i.e. without removal of subjects from the analysis who had been screened as candidates for or against warfarin use). In this updated approach we examined the relationship of warfarin use with these risk stages during a period following publication of CHADS2 and release of formalized guidelines for assessing the risk and benefit of warfarin for stroke prevention in AFib. At the time of the current study, warfarin was the only prescribed oral anticoagulant in the USA. We assessed whether warfarin use increases and declines, respectively, across stages of increasing stroke and bleeding risk. We further evaluated rates of warfarin use among stroke risk and bleeding risk category combinations and compared overall usage rates with earlier studies.

2 Methods

2.1 Study Design

This study applied a retrospective cross-sectional analysis of residents across multiple LTC facilities. Two databases were analysed: the publicly available cross-sectional NNHS database and the proprietary longitudinal AnalytiCare database.

2.2 National Nursing Home Survey Database

The NNHS is currently administered by the US Centers for Disease Control and Prevention (CDC; http://www.cdc.gov/nchs/nnhs.htm). This database consists of a continuing series of national sample surveys of nursing homes, their residents and their staff. Eligible facilities consisted of those having three or more beds that are certified to provide reimbursable services by Medicare or Medicaid or that are licensed by an individual state. In the most recent survey year, calendar year 2004, 1,500 facilities were randomly drawn from 17,000 nursing homes listed in either the Centers for Medicare and Medicaid Services file of US nursing homes (skilled and other nursing facilities) or state nursing home licensing lists. De-identified, public-use, single-point-in-time data were obtained through computer-assisted personal interviews with facility administrators and designated staff. Interviewees used administrative records to answer questions about the facilities, staff, services and programmes, and used medical records to answer questions about the residents. Data for residents were drawn by simple random sampling in facilities that agreed to participate.

For the current study, eligible residents included those of the 13,507 sampled residents in the NNHS database who had an open-ended entry for AFib (International Classification of Diseases, Ninth Revision, Clinical Modification [ICD-9-CM] diagnostic code 427.3X) in any of the 15 available current diagnosis fields on the resident questionnaire. In the most recent 2004 release, current drug therapy was available and was used in this study. This included all agents taken the day before the resident questionnaire was completed or recorded as ‘regularly scheduled’. Resident demographics, comorbid conditions, activities of daily living (ADL) assessments, history of falling, and specific stroke and bleeding risk assessments were obtained from the resident data file.
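The eligibility screen described above (any current diagnosis field carrying an ICD-9-CM code of the form 427.3X) could be expressed roughly as below. This is a sketch only: the function names are hypothetical, and the assumption that codes may appear with or without the decimal point is ours, not something stated for the NNHS file.

```python
def is_afib_code(icd9_code: str) -> bool:
    """True if an ICD-9-CM code falls under 427.3X (e.g. '427.31' or '42731')."""
    digits = icd9_code.strip().replace(".", "")  # tolerate dotted and undotted forms
    return digits.startswith("4273")


def has_afib(diagnosis_fields: list[str]) -> bool:
    """True if any non-empty diagnosis field holds a 427.3X code."""
    return any(is_afib_code(code) for code in diagnosis_fields if code)
```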

2.3 AnalytiCare Long-Term Care Database

The AnalytiCare study database (http://www.analyticare.com) was drawn from a universe of ~100,000 LTC residents of ~200 nursing homes in 19 states over the study period 1 January 2007–30 June 2009. Available data included all elements from the Minimum Data Set (MDS) version 2.0 [19] and pharmacy dispensing records. The MDS, which is used in the USA, Canada and more than 20 other countries, is a detailed collection of measures and indicators (including assessments of physical and cognitive functioning, listings of current conditions, assessment of pain, among many others) that are completed by LTC staff to provide a comprehensive assessment of each resident’s functional capabilities and to identify health problems. In the USA, the MDS is mandated to be completed for all residents in federally certified facilities at least once quarterly, and also upon admission, discharge or a significant change in resident health status (version 3.0 was implemented in October 2010). Prior to their release for the study, data were de-identified by AnalytiCare according to Health Insurance Portability and Accountability Act–compliant safe harbour rules and were exempt from the requirement of review by an institutional review board.

The MDS was used to identify chronic resident conditions, including AFib. AFib is not one of the listed ‘checkbox’ conditions in MDS Sect. I1, but is identified in the section permitted for open-ended entry of conditions in Sect. I3 [19]. Eligible residents in the current study (1) had complete pharmacy data, ≥2 MDS assessments, and an entry for AFib (ICD-9-CM diagnostic code 427.3X) in any MDS assessment completed during the study period (these were the minimum criteria for production of the de-identified database released by AnalytiCare); (2) had an MDS assessment at least 1 year prior to the end of the study period (to ensure that follow-up was not constrained by the study database); (3) had a diagnosis for AFib within 1 year of the earliest MDS assessment; (4) had a complete admission or annual assessment within 1 year of the earliest MDS assessment (since these forms provide space for up to five open-ended ICD-9 code entries vs. only two for quarterly assessments); and (5) were ≥18 years of age on 1 January 2007 and had a recorded sex. For study residents, current drug therapy included all agents dispensed from 30 days before to 60 days after the earliest AFib entry described in item 3 above (to establish a limited date range to evaluate concurrent drug use with a single indexed notation of the AFib condition). Comorbid condition data were obtained from all MDS (checkbox) or open-ended entries during the 1-year period starting from the earliest MDS assessment.
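The 90-day drug therapy window around the index AFib entry can be illustrated with a short date check. This is a minimal sketch: the function name is hypothetical, and treating both endpoints as inclusive is an assumption, since the text does not say how boundary days were handled.

```python
from datetime import date, timedelta


def in_drug_window(dispensed_on: date, index_date: date,
                   days_before: int = 30, days_after: int = 60) -> bool:
    """True if a dispensing date lies within the window around the index AFib entry."""
    start = index_date - timedelta(days=days_before)
    end = index_date + timedelta(days=days_after)
    return start <= dispensed_on <= end  # inclusive endpoints (assumed)
```

A resident would then count as a current warfarin user if any warfarin dispensing record satisfies this check.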

2.4 Measurement of Stroke and Bleeding Risk

Stroke and bleeding risk factors for individual residents were identified from the single-point-in-time survey data (NNHS) and from a summary of the 90-day drug therapy period and the 1-year MDS assessment period noted above (AnalytiCare). Specific stroke risk factors (listed in Table 1), based primarily on CHADS2 [6], were obtained directly from the ACC/AHA/ESC [1] and ACCP [10] guidelines and were stratified into ‘high risk’ and ‘moderate risk’ for stroke. Fuster et al. [1] also listed some factors with ‘less validated’ or ‘weak association’ with stroke. In the current study, residents were assigned to one of the following stroke-risk categories: low (none or 1+ weak stroke risk factors), moderate (1 moderate), high (2+ moderate or 1+ high).
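The category assignment just described reduces to a small decision rule on the counts of high and moderate risk factors. The sketch below encodes that rule as stated; the function name and the use of plain counts as inputs are illustrative assumptions.

```python
def stroke_risk_category(n_high: int, n_moderate: int) -> str:
    """Assign a stroke-risk category from counts of high and moderate risk factors.

    Weak or less validated factors do not enter the rule: a resident with
    only weak factors (or none) falls into the low category.
    """
    if n_high >= 1 or n_moderate >= 2:
        return "high"
    if n_moderate == 1:
        return "moderate"
    return "low"
```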

At the time this study was designed, the AFib risk-specific HAS-BLED (Hypertension, Abnormal renal/liver function, Stroke, Bleeding history or predisposition, Labile international normalized ratio, Elderly [>65 years], Drugs/alcohol concomitantly) [20] and atherothrombotic risk-specific REACH (REduction of Atherothrombosis for Continued Health) [21] bleeding algorithms had not yet been published. Bleeding risk factors were identified from the Atrial Fibrillation Follow-up Investigation of Rhythm Management (AFFIRM) study [22] and from other studies [23, 24]. Bleeding risk factors (listed in Table 1) were tallied by count and assigned to one of four levels: 0–1, 2, 3 and 4+.
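The tally of bleeding risk factors into four levels can be sketched in the same way. The function name is hypothetical, and the input is assumed to be one boolean per bleeding risk factor listed in Table 1.

```python
def bleeding_risk_level(risk_factors_present: list[bool]) -> str:
    """Map a count of bleeding risk factors to one of four levels."""
    count = sum(risk_factors_present)  # True counts as 1
    if count <= 1:
        return "0-1"
    if count >= 4:
        return "4+"
    return str(count)  # "2" or "3"
```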

Available ADL measures were comparable between NNHS and AnalytiCare databases, because these were derived from the same source: the MDS 2.0. The method of Carpenter et al. [25] was used to derive a single ADL functioning score from seven physical function assessment items. Logistic regression models were used to predict the odds of being prescribed warfarin by including, for each resident, only stroke-risk and bleeding-risk category assignments (no other resident characteristics). NNHS-provided sampling weights were applied to derive all estimates from that database. Intercooled Stata version 8.0 (Stata Corporation, College Station, TX, USA) was used for all analyses.
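One way to write the model described above is given below. The notation is ours: coding each category as an indicator variable against a referent level is an assumption consistent with the odds ratios reported in the Results, not a specification given by the authors.

```latex
\operatorname{logit}\,\Pr(\text{warfarin}_i = 1)
  = \beta_0
  + \sum_{s} \beta_s \,\mathbb{1}[\text{stroke}_i = s]
  + \sum_{b} \beta_b \,\mathbb{1}[\text{bleed}_i = b],
\qquad
\mathrm{OR}_k = e^{\beta_k}
```

Here $s$ ranges over the non-referent stroke-risk categories and $b$ over the non-referent bleeding-risk levels, so each odds ratio compares a category with its referent while holding the other risk dimension fixed.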

3 Results

3.1 Resident Characteristics

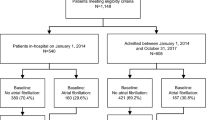

After applying inclusion criteria, NNHS had 1,454 eligible residents with AFib (Table 2), representing (after applying sampling weights) an LTC population of 162,061 having AFib in the year 2004 (55,061 warfarin users and 107,000 non-users). The AnalytiCare database had 3,757 eligible residents with AFib.
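The weighted counts above can be turned into a weighted proportion directly. The sketch below shows a generic weighted proportion (function name hypothetical) and then checks the share of warfarin users implied by the reported NNHS population totals. It ignores survey design effects on variance, which a full analysis would need.

```python
def weighted_proportion(flags, weights):
    """Share of total sampling weight carried by records whose flag is true."""
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    return sum(w for f, w in zip(flags, weights) if f) / total


# Weighted population totals reported for the NNHS.
users, non_users = 55_061, 107_000
share = users / (users + non_users)  # about 0.34
```

The resulting share of about 34 % matches the overall NNHS warfarin rate given in the abstract.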

Table 3 shows characteristics of residents with AFib. In the NNHS, the median age was 85 years; 70 % were female. In AnalytiCare, median age was 83 years; 63 % were female. In the NNHS, age and sex distributions were similar between residents having AFib who were receiving warfarin therapy and those who were not. In AnalytiCare, a smaller proportion of residents over 85 years of age (37 %) were receiving warfarin compared with those not receiving warfarin (50 %; p < 0.001 for age distribution); the sex distribution was similar between these groups.

In both the NNHS and AnalytiCare, residents receiving warfarin had a more favourable distribution of ADL functioning (less physical dependence; p = 0.050 and p < 0.001, respectively, Table 3) compared with non-users. In both databases, warfarin users had a higher mean CHADS2 score (indicating greater stroke risk) than non-users, although group differences in scores were small: 0.2 (NNHS; p = 0.001) and 0.1 (AnalytiCare; p = 0.003) points. Except for deep vein thrombosis, rates of common chronic conditions (excluding stroke or bleeding risk factors) were generally similar among warfarin users and non-users in both databases (Table 3).

3.2 Individual Stroke and Bleeding Risk Factors

Table 1 compares the proportion of residents having each of the individual stroke risk factors. The distribution of high risk factors was similar among warfarin users and non-users in AnalytiCare. In the NNHS, warfarin users had higher rates of previous stroke (p = 0.001) and mitral stenosis (p = 0.046) than non-users (previous stroke is also listed in combination with transient ischaemic attack [TIA] as a bleeding risk factor). For moderate stroke risk factors, warfarin users and non-users in the NNHS had similar distributions. However, in AnalytiCare, compared with non-users, a lower proportion of warfarin users were aged ≥75 years (p = 0.003), a larger proportion had diabetes mellitus (p = 0.026), and a larger proportion had congestive heart failure (CHF) (p < 0.001) (diabetes mellitus and CHF are also listed as bleeding risk factors). The distribution of less validated or weak association factors was similar between warfarin users and non-users in both databases, except for a higher proportion of residents in the 65–74 age group among warfarin users in AnalytiCare (p = 0.002).

Table 1 also compares the proportion of residents having each of the individual bleeding risk factors. In the NNHS, a lower proportion of warfarin users (11 %) were taking aspirin compared with non-warfarin users (48 %; p < 0.001). Similarly, in AnalytiCare, a lower proportion of warfarin users (5 %) were taking aspirin compared with non-warfarin users (8 %; p < 0.001), although under-reporting of over-the-counter aspirin use is noted as a limitation of the latter database. In both databases, compared with non-users, a significantly smaller proportion of warfarin users had dementia or cognitive impairment (p = 0.042 NNHS; p < 0.001 AnalytiCare) or were currently using antiplatelet therapy (p < 0.001 for both databases). In AnalytiCare only, a smaller percentage of warfarin users were anaemic when compared with non-users (p = 0.011).

3.3 Rate of Warfarin Use by Bleeding and Stroke Risk Category

Table 4 shows the rate of warfarin use for each combination of stroke risk and bleeding risk category for residents in each database. In the NNHS, 34 % of all residents with AFib were receiving warfarin (95 % CI 31.1–36.8). Thirty-six per cent of all AFib residents with high stroke risk (2+ moderate or 1+ high risk factors) were receiving warfarin. Findings were similar in the AnalytiCare database: a minority (45 % of all residents with AFib) were currently receiving warfarin (95 % CI 43.0–46.1) and 45 % of residents with high stroke risk were receiving warfarin. In both databases, warfarin use generally increased with higher stroke risk among residents in the same bleeding risk category.

3.4 Distribution of Resident Counts by Stroke and Bleeding Risk

Figure 1 shows the distribution of residents in each category of stroke and bleeding risk, without regard to warfarin use. Findings were similar in the two databases. A majority of all residents with AFib in the NNHS database (78 %) and in the AnalytiCare database (87 %) were classified as having high stroke risk. In both databases, approximately three out of every four of these high-stroke-risk residents had three or more bleeding risk factors.

3.5 Modelling Warfarin Use from Stroke and Bleeding Risk

Figure 2 shows, from the single logistic regression model within each database, the odds of a resident being prescribed warfarin according to stroke and bleeding risk category.

In the NNHS, compared with the referent categories of ‘none or 1+ weak’ stroke risk factor(s) and ‘0–1’ bleeding risk factors (odds ratio [OR] = 1), the odds of receiving warfarin consistently increased with greater stroke risk: 1 moderate, OR = 1.93 (p = 0.118, 95 % CI 0.85–4.38); 2+ moderate, OR = 3.19 (p = 0.005, 95 % CI 1.42–7.17); and 1+ high, OR = 8.18 (p ≤ 0.001, 95 % CI 3.49–19.16). The odds of receiving warfarin consistently decreased with greater bleeding risk: 2 risk factors, OR = 0.83 (p = 0.366, 95 % CI 0.56–1.24); 3 risk factors, OR = 0.47 (p ≤ 0.001, 95 % CI 0.31–0.70); and 4+ risk factors, OR = 0.17 (p ≤ 0.001, 95 % CI 0.11–0.26).
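The reported odds ratios and confidence intervals can be sanity-checked against one another, because a Wald interval is symmetric on the log-odds scale. The sketch below recovers an approximate standard error, z statistic and two-sided p value from a published OR and its 95 % CI. It assumes a normal approximation with a critical value of 1.96, so results will differ slightly from the survey-weighted p values in the text. The function name is hypothetical.

```python
import math


def wald_from_ci(odds_ratio, ci_low, ci_high, z_crit=1.96):
    """Approximate (SE of log OR, z, two-sided p) from an OR and its CI."""
    log_or = math.log(odds_ratio)
    # The CI spans 2 * z_crit standard errors on the log scale.
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = log_or / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return se, z, p
```

For example, `wald_from_ci(1.93, 0.85, 4.38)` gives a p value close to the reported 0.118. The geometric mean of the bounds 3.49 and 19.16 is also close to the reported OR of 8.18, as expected for an interval that is symmetric on the log scale.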

A similar, consistent trend was observed for AnalytiCare, although only the 3 and 4+ bleeding risk factor categories reached significance. For stroke risk: 1 moderate, OR = 0.99 (p = 0.973, 95 % CI 0.55–1.78); 2+ moderate, OR = 1.55 (p = 0.138, 95 % CI 0.87–2.75); and 1+ high, OR = 1.79 (p = 0.052, 95 % CI <1.0–3.23). For bleeding risk: 2 risk factors, OR = 0.91 (p = 0.479, 95 % CI 0.69–1.19); 3 risk factors, OR = 0.68 (p = 0.004, 95 % CI 0.52–0.88); and 4+ risk factors, OR = 0.54 (p ≤ 0.001, 95 % CI 0.41–0.70).

4 Discussion

Our study showed that of every ten LTC residents with AFib, eight (NNHS) and nine (AnalytiCare) were at a high risk for stroke (i.e. had 2+ moderate or 1+ high stroke risk factors). A majority of residents (six and seven of every ten, respectively) had high stroke risk combined with 3+ bleeding risk factors. This is not unexpected, since several key risk factors listed are common to both stroke and bleeding risk assessments (e.g. previous stroke or TIA, CHF and diabetes mellitus). An earlier study of Canadian LTC residents with AFib by Lau et al. [16], using ACCP guidelines [10] to define risk, found that nearly 97 % had high stroke risk. Thus our study affirms Lau et al.’s [16] finding of a high and continuing level of need for consideration for stroke risk reduction in the LTC population.

4.1 Rates of Warfarin Use

Findings from the present study also revealed that fewer than half of all residents with AFib (including fewer than half of all residents with high stroke risk) were receiving warfarin prophylaxis. Although warfarin usage increased from 34 % in the 2004 NNHS database to 45 % in the 2007–2009 AnalytiCare database, overall usage of warfarin in residents with AFib remains low. These findings are generally consistent with five earlier regional LTC studies that evaluated the use of warfarin in AFib among US and Canadian nursing home facilities prior to 2004 [13–17] and one that examined the same NNHS database used in the current study [18]. Abdel-Latif et al. [13] found that 46 % of residents with AFib were using warfarin, and Gurwitz et al. [14] found that 32 % were. Three of these studies also evaluated the use of warfarin when patients were stratified by stroke and bleeding risk. Lau et al. [16] found that warfarin was prescribed for 57 % of AFib residents. Among high-stroke-risk/low-bleeding-risk candidates, the warfarin prescribing rate remained similar at 60 %. Lackner and Battis [15] found that only 17 % of patients with non-valvular AFib received warfarin, whereas among residents with 1+ additional risk factor for stroke and no contraindication, 20 % received warfarin. McCormick et al. [17] reported that although 42 % of residents with AFib received warfarin, only 53 % of ‘ideal’ candidates for warfarin therapy (i.e. no bleeding risk factors) received oral anticoagulant prophylaxis. Ghaswalla et al. [18] found usage of warfarin among only 30 % of appropriate candidates in the NNHS database (residents who had an indication for warfarin and no contraindications against its use); these authors further found that of the remaining 70 % not receiving warfarin, only 23 % had been placed on antiplatelet therapy (aspirin or clopidogrel). Findings regarding low anticoagulant usage have also been noted within non-LTC community settings. In a recent systematic review, Ogilvie et al. [26] found suboptimal (<70 %) anticoagulant usage in seven of nine studies of patients who had both AFib and a CHADS2 score of ≥2.

4.2 Modelling of Stroke and Bleeding Risk

Findings from our logistic regression analysis showed that stroke and bleeding risk components, when evaluated together within the same resident with AFib, have a consistent, directional relationship with warfarin use. Increased stroke risk and reduced bleeding risk are associated with greater odds of receiving warfarin; the converse also applies. This finding contrasts with Lau et al.’s [16] earlier finding of discordance between antithrombotic use and relative risk of bleeding but agrees with McCormick et al.’s [17] finding of lower warfarin use among residents with 2+ bleeding risk factors. An unexpected finding in the NNHS data is that residents with high stroke risk classified as having 1+ high stroke risk factor(s) usually had greater odds of receiving warfarin than those with high stroke risk classified as 2+ moderate risk factors, despite the risk equivalence of these categories.

Despite consistency in the stroke–bleeding risk relationship with warfarin use, we found evidence of an upper limit or plateau in usage—a finding also noted in earlier studies [15–17] and in a recent systematic review that concluded that bleeding risk alone may not explain low rates of warfarin use for AFib in LTC [27]. Except for the small number of residents with a combination of 0–2 bleeding risk factors and 1+ high stroke risk factors in the NNHS database (who had a 78–80 % rate of warfarin use), warfarin use did not otherwise exceed 60 % among residents with AFib in either database, and was typically lower, regardless of which category of combined stroke and bleeding risk was evaluated (Table 4).

4.3 Potential Reasons for Limits in Warfarin Use

Addressing similar limits to warfarin use as observed among residents with higher stroke risk, McCormick et al. [17] and Lau et al. [16] cited the potential unavailability of patient preferences, care directives, or other data from the medical records they examined as possible explanations for the low rate of warfarin use in AFib. Additional reasons for warfarin underuse included difficulty in monitoring anticoagulation therapy [17], concerns about the risk of bleeding complications that outweigh concerns about the risk of stroke [17], knowledge deficits regarding risk factors for stroke and the effectiveness of warfarin for stroke prevention in older patients with AFib [16, 17], under-recognition of AFib [16], and past experiences with antithrombotics [16].

Two studies of physicians who responded to hypothetical AFib case studies in LTC settings showed that they were most concerned about the risk of falls [28, 29], dementia [28], limited life expectancy [28], a history of gastrointestinal (GI) bleeding and non-CNS bleeding [29], and a history of ischaemic stroke [29]. We found no effect on warfarin use from a history of falling (Table 1), but our findings support many of these physician-reported factors, including a strong effect of dementia or cognitive impairment (both databases), effects due to limited life expectancy (both databases), and GI bleeding (AnalytiCare).

Both databases revealed that LTC residents receiving warfarin appeared to have better physical functioning (i.e. lower ADL dependence). Although it has not been reported to be a bleeding risk factor, poor physical functioning can be added to the list of factors that might negatively influence the use of warfarin in the LTC facility.

4.4 Limitations

A primary source of data in both the NNHS and AnalytiCare databases was the MDS 2.0. Although the validity and reliability of the MDS can vary by a given indicator [30], the MDS 2.0 has been reported to generally have moderate, or moderate to high, validity and reliability [31]. In both study databases, current medication use was evaluated by temporal proximity to the AFib diagnosis (under-reporting of aspirin use in the AnalytiCare database was described above). Other non-AFib indications for warfarin use, such as post-myocardial infarction secondary prevention, were possible. No distinction was made by type of AFib (e.g. valvular/non-valvular, paroxysmal/persistent), although warfarin is used for stroke prophylaxis among AFib variants [9]. Specific stroke and bleeding risk factors identified in consensus guidelines have not been validated against stroke and bleeding outcomes in the LTC setting. Our model tested an amalgam of summary measures of stroke and bleeding risk taken from AFib guidelines and medical literature. Other risk factors considered in new models may have been relevant but were not included (e.g. bleeding risk factors: some items listed in HAS-BLED [20] [such as hypertension and poor International Normalised Ratio (INR) control] and some items listed in REACH [21] [e.g. smoking and hypercholesterolaemia]). As noted, several factors in our list are counted as both stroke and bleeding risks, and this remains a limitation even among newer models such as HAS-BLED and REACH. Although a difficult issue to reconcile, this degree of overlap limits the ability of models to discriminate among summary stroke and bleeding categories when these are considered together.

5 Conclusion

Although our two study databases differed in design, scope and time period of data collection, findings from the analysis of each appear similar. High-stroke-risk patients, who are known to have the greatest net clinical benefit from warfarin use [5, 29], comprised approximately 80 % or more of AFib residents in our two databases. Consistent with our findings and those from earlier research [15, 16, 21], warfarin use continues to appear low among residents with AFib in the LTC setting. Further research is needed to evaluate the degree to which this low usage rate represents appropriate balancing of stroke and bleeding risk or other concerns in these unique patients, or whether this represents a potentially large lost clinical benefit from otherwise preventable stroke.

References

Fuster V, Ryden LE, Cannom DS, Crijns HJ, Curtis AB, Ellenbogen KA, et al. ACC/AHA/ESC 2006 Guidelines for the Management of Patients with Atrial Fibrillation: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines and the European Society of Cardiology Committee for Practice Guidelines (Writing Committee to Revise the 2001 Guidelines for the Management of Patients With Atrial Fibrillation): developed in collaboration with the European Heart Rhythm Association and the Heart Rhythm Society. Circulation. 2006;114(7):e257–354.

Wyndham CR. Atrial fibrillation: the most common arrhythmia. Tex Heart Inst J. 2000;27(3):257–67.

Lloyd-Jones DM, Wang TJ, Leip EP, Larson MG, Levy D, Vasan RS, et al. Lifetime risk for development of atrial fibrillation: the Framingham Heart Study. Circulation. 2004;110(9):1042–6.

Wolf PA, Abbott RD, Kannel WB. Atrial fibrillation as an independent risk factor for stroke: the Framingham Study. Stroke. 1991;22(8):983–8.

Hart RG, Pearce LA, Aguilar MI. Meta-analysis: antithrombotic therapy to prevent stroke in patients who have nonvalvular atrial fibrillation. Ann Intern Med. 2007;146(12):857–67.

Gage BF, Waterman AD, Shannon W, Boechler M, Rich MW, Radford MJ. Validation of clinical classification schemes for predicting stroke: results from the National Registry of Atrial Fibrillation. JAMA. 2001;285(22):2864–70.

Singer DE, Chang Y, Fang MC, Borowsky LH, Pomernacki NK, Udaltsova N, et al. The net clinical benefit of warfarin anticoagulation in atrial fibrillation. Ann Intern Med. 2009;151(5):297–305.

Hart RG, Halperin JL. Do current guidelines result in overuse of warfarin anticoagulation in patients with atrial fibrillation? Ann Intern Med. 2009;151(5):355–6.

Camm AJ, Kirchhof P, Lip GY, Schotten U, Savelieva I, Ernst S, et al. Guidelines for the management of atrial fibrillation: the Task Force for the Management of Atrial Fibrillation of the European Society of Cardiology (ESC). Eur Heart J. 2010;31(19):2369–429.

Singer DE, Albers GW, Dalen JE, Fang MC, Go AS, Halperin JL, et al. Antithrombotic therapy in atrial fibrillation: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines (8th Edition). Chest. 2008;133(6 Suppl):546S–92S.

You JJ, Singer DE, Howard PA, Lane DA, Eckman MH, Fang MC, et al. Antithrombotic therapy for atrial fibrillation: antithrombotic therapy and prevention of thrombosis, 9th ed: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest. 2012;141(2 Suppl):e531S–75S.

American Medical Directors Association. Stroke management in the long-term care setting clinical practice guideline. Columbia (MD): AMDA; 2011.

Abdel-Latif AK, Peng X, Messinger-Rapport BJ. Predictors of anticoagulation prescription in nursing home residents with atrial fibrillation. J Am Med Dir Assoc. 2005;6(2):128–31.

Gurwitz JH, Monette J, Rochon PA, Eckler MA, Avorn J. Atrial fibrillation and stroke prevention with warfarin in the long-term care setting. Arch Intern Med. 1997;157(9):978–84.

Lackner TE, Battis GN. Use of warfarin for nonvalvular atrial fibrillation in nursing home patients. Arch Fam Med. 1995;4(12):1017–26.

Lau E, Bungard TJ, Tsuyuki RT. Stroke prophylaxis in institutionalized elderly patients with atrial fibrillation. J Am Geriatr Soc. 2004;52(3):428–33.

McCormick D, Gurwitz JH, Goldberg RJ, Becker R, Tate JP, Elwell A, et al. Prevalence and quality of warfarin use for patients with atrial fibrillation in the long-term care setting. Arch Intern Med. 2001;161(20):2458–63.

Ghaswalla PK, Harpe SE, Slattum PW. Warfarin use in nursing home residents: results from the 2004 national nursing home survey. Am J Geriatr Pharmacother. 2012;10(1):25–36.e2.

Centers for Medicare and Medicaid Services, Nursing Home Quality Initiative. 2012. http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/NursingHomeQualityInits/index.html. Accessed 15 Nov 2012.

Pisters R, Lane DA, Nieuwlaat R, de Vos CB, Crijns HJ, Lip GY. A novel user-friendly score (HAS-BLED) to assess 1-year risk of major bleeding in patients with atrial fibrillation: the Euro Heart Survey. Chest. 2010;138(5):1093–100.

Ducrocq G, Wallace JS, Baron G, Ravaud P, Alberts MJ, Wilson PW, et al. Risk score to predict serious bleeding in stable outpatients with or at risk of atherothrombosis. Eur Heart J. 2010;31(10):1257–65.

DiMarco JP, Flaker G, Waldo AL, Corley SD, Greene HL, Safford RE, et al. Factors affecting bleeding risk during anticoagulant therapy in patients with atrial fibrillation: observations from the Atrial Fibrillation Follow-up Investigation of Rhythm Management (AFFIRM) study. Am Heart J. 2005;149(4):650–6.

Beyth RJ, Quinn LM, Landefeld CS. Prospective evaluation of an index for predicting the risk of major bleeding in outpatients treated with warfarin. Am J Med. 1998;105(2):91–9.

Hylek EM, Evans-Molina C, Shea C, Henault LE, Regan S. Major hemorrhage and tolerability of warfarin in the first year of therapy among elderly patients with atrial fibrillation. Circulation. 2007;115(21):2689–96.

Carpenter GI, Hastie C, Morris J, Fries B, Ankri J. Measuring change in activities of daily living in nursing home residents with moderate to severe cognitive impairment. BMC Geriatr. 2006;6(1):7.

Ogilvie IM, Newton N, Welner SA, Cowell W, Lip GY. Underuse of oral anticoagulants in atrial fibrillation: a systematic review. Am J Med. 2010;123(7):638–45.e4.

Neidecker M, Patel AA, Nelson WW, Reardon G. Use of warfarin in long-term care: a systematic review. BMC Geriatr. 2012;12(1):14.

Dharmarajan TS, Varma S, Akkaladevi S, Lebelt AS, Norkus EP. To anticoagulate or not to anticoagulate? A common dilemma for the provider: physicians’ opinion poll based on a case study of an older long-term care facility resident with dementia and atrial fibrillation. J Am Med Dir Assoc. 2006;7(1):23–8.

Monette J, Gurwitz JH, Rochon PA, Avorn J. Physician attitudes concerning warfarin for stroke prevention in atrial fibrillation: results of a survey of long-term care practitioners. J Am Geriatr Soc. 1997;45(9):1060–5.

Poss JW, Jutan NM, Hirdes JP, Fries BE, Morris JN, Teare GF, et al. A review of evidence on the reliability and validity of Minimum Data Set data. Healthc Manage Forum. 2008;21(1):33–9.

Shin JH, Scherer Y. Advantages and disadvantages of using MDS data in nursing research. J Gerontol Nurs. 2009;35(1):7–17.

Acknowledgments

The study was sponsored by Janssen Scientific Affairs, LLC. The involvement of the sponsor in the study design, the collection, analysis and interpretation of data, the writing of the report and the decision to submit the paper for publication was limited to the working contribution of two coauthors (A.A.P. and W.W.N.) who were employees of the study sponsor at the time of this study.

Conflicts of interest and funding

At the time this research was conducted, authors G.R., T.P. and M.N. were consultants to the study sponsor. G.R. also received funding from the sponsor for manuscript writing. A.A.P. and W.W.N. were employees of the sponsor (a Johnson & Johnson company) and shareholders of Johnson & Johnson. G.R. has received funding for research and for manuscript writing from Pfizer Inc. T.P. previously received a research grant from Ortho-McNeil (a former Johnson & Johnson company) and served on the speakers bureau for products unrelated to this research. T.P. has also received other grants from the pharmaceutical industry (currently including Endo Pharmaceuticals), participates from time to time on advisory panels and is currently on the speakers bureau for Glaxo, Avanir, and Johnson & Johnson (for Nucynta).

Author Contributions

G.R. and M.N.: study design, article search and summarization, graphics production, statistical analysis, manuscript production; A.A.P.: initial study concept, study design, interpretation of findings, manuscript rewrite; W.W.N.: study design, interpretation of findings, manuscript rewrite; and T.P.: study design, interpretation of findings, manuscript rewrite. The authors would like to acknowledge Ruth Sussman, PhD, who provided editorial review of this author-prepared manuscript and whose work was supported with funding from the study sponsor.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Reardon, G., Nelson, W.W., Patel, A.A. et al. Warfarin for Prevention of Thrombosis Among Long-Term Care Residents with Atrial Fibrillation: Evidence of Continuing Low Use Despite Consideration of Stroke and Bleeding Risk. Drugs Aging 30, 417–428 (2013). https://doi.org/10.1007/s40266-013-0067-y

DOI: https://doi.org/10.1007/s40266-013-0067-y