Abstract

This paper introduces an approach based on a hybrid operational matrix to obtain a numerical scheme for solving fractional differential equations. The idea is to convert the given equations into a system of algebraic equations, using a basis built from block-pulse functions and Legendre polynomials. We also employ the Banach fixed-point theorem to analyze the problem on the Banach algebra C[0, b] for a class of fractional differential equations that includes many key functional differential equations arising in linear and nonlinear analysis.

Introduction

Fractional differential equations (FDEs) have received considerable interest and have found many applications in various scientific fields, such as continuum and statistical mechanics [10], dynamical systems [8], and optimal control problems [2, 7, 9]. Most FDEs cannot be solved analytically, and their many applications motivate the development of numerical schemes for their solution. For this purpose, several numerical methods have been proposed; see, for example, [9, 11, 12, 14, 15]. Another technique for solving FDEs is to use an operational matrix of fractional order [2, 6, 11, 17]. In this study, we present a numerical technique to solve FDEs

where \(m \in \mathbb {N}\) and \(^{C}\!D^{.}\) denotes the Caputo fractional derivative [3, 14]. Our method is based on piecewise continuous functions and Legendre polynomials, and relies on the operational matrices of fractional integration. The unknown function is expanded in the hybrid basis functions with unknown coefficients, and the properties of the hybrid functions and the operational matrix are then used to convert the FDE into a system of algebraic equations.

The article is organized as follows. In Sect. 2, some definitions and mathematical preliminaries of fractional calculus are briefly introduced. In Sect. 3, we consider existence and uniqueness theorems for the FDEs under study. Some properties of the hybrid basis consisting of block-pulse functions and Legendre polynomials, and the approximation of functions in this basis, are presented in Sect. 4. The relevant operational matrix is obtained in Sect. 5, and the end of that section is devoted to applying the hybrid functions method to FDEs. In Sect. 6, numerical results are reported for several examples, and the reliability and performance of the proposed scheme are demonstrated.

Preliminaries and basic definitions

We recall some basic notions of fractional calculus [3, 14]. Let \(g\in L_1[0,b]\) (the space of Lebesgue integrable real functions) and let \(\mu \in \mathbb {R}_+=(0,\infty )\) be a fixed number.

Definition 1

The operator \(J^\mu\), defined on \(L_1[0,b]\) by

\[ J^{\mu }g(s)=\frac{1}{\Gamma (\mu )}\int _0^s (s-t)^{\mu -1}g(t)\,\mathrm{d}t \]

for \(0\le s\le b\), is called the Riemann–Liouville fractional integral operator of order \(\mu\), where \(\Gamma (\cdot )\) denotes the Gamma function.

The operator \(J^{\mu }\) transforms the space \(L_1[0,b]\) into itself [14].

Definition 2

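The operator \(^{\mathrm{RL}}\!D^{\mu }\), defined by

\[ ^{\mathrm{RL}}\!D^{\mu }g(s)=D^{\left\lceil \mu \right\rceil }J^{\left\lceil \mu \right\rceil -\mu }g(s) \]

(where \(\left\lceil \cdot \right\rceil\) denotes the ceiling function, \(\left\lceil x \right\rceil = \mathrm{min} \left\{ z\in \mathbb Z: z\ge x \right\}\)), is called the Riemann–Liouville fractional differential operator of order \(\mu\).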

Definition 3

For \(g\in L_1[0,b]\),

is the Caputo fractional derivative.

Note that \(\; J^{\mu }\;^{C}\!D^{\mu }g\left( s\right) =g\left( s\right) -\sum ^{ m-1}_{ j=0}g^{\left( j\right) }\left( 0^+\right) \frac{ s^j}{ j!},\quad m-1<\mu \le m,\ \ m\in \mathbb {N}.\)

Lemma 1

[3] Let \(\mu \ge 0\). Assume that g is such that both \(^{C}\!D^{\mu }g\) and \(^{\mathrm{RL}}\!D^{\mu }g\) exist. Then,

\[ ^{C}\!D^{\mu }g(s)={}^{\mathrm{RL}}\!D^{\mu }g(s)-\sum ^{\left\lceil \mu \right\rceil -1}_{j=0}\frac{D^jg(0)}{\Gamma (j-\mu +1)}\,s^{j-\mu }. \]

Under the hypotheses of Lemma 1, \(^{C}\!D^{\mu }g\left( s\right) ={}^{\mathrm{RL}}\!D^{\mu }g\left( s\right)\) holds if and only if g has an \(\left\lceil \mu \right\rceil\)-fold zero at 0, i.e., if and only if \(D^jg\left( 0\right) =0\) for \(j=0, 1, \dots , \left\lceil \mu \right\rceil -1.\)

Existence and uniqueness

We study the solvability of problem (1) for \(g\in C[0,b]\). In what follows, we suppose that \(F:[0,b]\times \mathbb R\longrightarrow {\mathbb R}\) satisfies a Lipschitz condition with respect to its second argument, with Lipschitz constant l, and that there exist constants \(\lambda\) and \(\eta\) such that \(\left| F\left( s,g(s)\right) \right| \le \lambda +\eta \left| g(s)\right|\) (sublinear nonlinearity) for all \(s\in [0,b]\) and \(g(s)\in {\mathbb R}\).

Theorem 1

If \(0<\theta =\frac{l b^{\mu }}{\Gamma (\mu +1)} <1\), then problem (1a), (1b) has a unique solution.

Proof

To prove this result, we define operator \(\Lambda\) on the space C[0, b] with

We shall show that \(\Lambda :C[0,b]\longrightarrow C[0,b]\) is a contraction map. For \(g_1,g_2\in C[0,b]\) and \(s\in [0,b]\), we have

Therefore, since \(0<\theta <1,\) the mapping \(\Lambda\) is a contraction. By the Banach fixed-point principle, it has a unique fixed point, and hence problem (1) has a unique solution. \(\square\)
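The estimate behind the contraction property can be sketched as follows. This is a reconstruction under an assumption that is not recoverable from the text above: that \(\Lambda g(s)\) equals a polynomial fixed by the initial data plus \(J^{\mu }F(s,g(s))\), so that the polynomial cancels in the difference. Only the Lipschitz condition on F and the identity \(\mu \Gamma (\mu )=\Gamma (\mu +1)\) are used.

\[ \left| \Lambda g_1(s)-\Lambda g_2(s)\right| \le \frac{1}{\Gamma (\mu )}\int _0^s (s-t)^{\mu -1}\left| F(t,g_1(t))-F(t,g_2(t))\right| \mathrm{d}t \le \frac{l\,\Vert g_1-g_2\Vert _\infty }{\Gamma (\mu )}\int _0^s (s-t)^{\mu -1}\,\mathrm{d}t = \frac{l\,s^{\mu }}{\Gamma (\mu +1)}\Vert g_1-g_2\Vert _\infty \le \theta \,\Vert g_1-g_2\Vert _\infty . \]

Taking the supremum over \(s\in [0,b]\) would then give \(\Vert \Lambda g_1-\Lambda g_2\Vert _\infty \le \theta \Vert g_1-g_2\Vert _\infty\), which matches the constant \(\theta\) of Theorem 1.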

Theorem 2

\(\Lambda\) maps bounded sets into equicontinuous sets of C[0, b].

Proof

Let \(s_1, s_2\in [0,b],\) \(s_1<s_2,\) and g belong to a bounded set, then we have

As \(s_1\rightarrow s_2\), the last term tends to zero. Equicontinuity in the cases \(s_1<s_2\le 0\) and \(s_1\le 0\le s_2\) is evident. \(\square\)

Basis functions

A set of block-pulse functions \(b_p(s),p=1,2,\dots ,P\) for \(s\in [0,1)\) is defined as follows [11, 12]:

These functions are disjoint and have the property of orthogonality on [0, 1).

The hybrid functions \(h_{pq}(s), p=1,2,\ldots ,P,\ q=0,1,\ldots ,Q-1,\) on \([0,s_f)\) are defined as

where p is the order of the block-pulse functions and \(L_q(s)\) are the well-known Legendre polynomials of order q, which satisfy the following recursive formula:

It is obvious that the set of hybrid functions is orthogonal. A function g(s), defined on \([0,s_f)\) can be expanded as

where \(S=PQ\),

and
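The hybrid basis and the expansion of a function in it can be sketched numerically as follows. This is an illustrative sketch, not the authors' code. It assumes the commonly used form \(h_{pq}(s)=L_q(2Ps/s_f-2p+1)\) on the p-th of P equal subintervals of \([0,s_f)\) and zero elsewhere, and it computes the coefficients by orthogonal projection with Gauss–Legendre quadrature. The function names `hybrid`, `hybrid_coeffs` and `hybrid_eval` are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as npleg


def hybrid(p, q, s, P, sf=1.0):
    """Evaluate h_pq(s): Legendre polynomial L_q rescaled to the p-th of P
    equal subintervals of [0, sf), and zero outside that subinterval.
    (Assumed form of the hybrid functions.)"""
    s = np.asarray(s, dtype=float)
    lo, hi = (p - 1) * sf / P, p * sf / P
    x = 2.0 * P * s / sf - 2 * p + 1           # maps [lo, hi) onto [-1, 1)
    unit = np.zeros(q + 1)
    unit[q] = 1.0                               # coefficient vector selecting L_q
    return np.where((s >= lo) & (s < hi), npleg.legval(x, unit), 0.0)


def hybrid_coeffs(g, P, Q, sf=1.0, nquad=16):
    """Projection coefficients c_pq = <g, h_pq> / <h_pq, h_pq>,
    approximated with Gauss-Legendre quadrature on each subinterval."""
    nodes, weights = npleg.leggauss(nquad)
    C = np.zeros((P, Q))
    for p in range(1, P + 1):
        s = (nodes + 2 * p - 1) * sf / (2 * P)  # quadrature nodes in subinterval p
        gs = g(s)
        for q in range(Q):
            unit = np.zeros(q + 1)
            unit[q] = 1.0
            Lq = npleg.legval(nodes, unit)
            # <h_pq, h_pq> = sf / (P (2q + 1)); the interval length cancels
            C[p - 1, q] = 0.5 * (2 * q + 1) * np.sum(weights * gs * Lq)
    return C


def hybrid_eval(C, s, sf=1.0):
    """Evaluate the truncated expansion sum_pq c_pq h_pq(s)."""
    P, Q = C.shape
    return sum(C[p - 1, q] * hybrid(p, q, s, P, sf)
               for p in range(1, P + 1) for q in range(Q))


# g(s) = s^2 is piecewise quadratic, so Q = 3 should reproduce it
# up to rounding error.
C = hybrid_coeffs(lambda s: s**2, P=2, Q=3)
s_test = np.linspace(0.0, 0.99, 100)
max_err = np.max(np.abs(hybrid_eval(C, s_test) - s_test**2))
print(max_err)
```

Here the number of basis functions is \(S=PQ=6\), and the coefficient array `C` has one row per subinterval and one column per polynomial degree.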

Applying operational matrices

Let

where \(P^\mu _{S\times S}\) is obtained as the operational matrix of the fractional integration for hybrid functions, by the following formula:

Also, the matrix \(\Phi _{S\times S}\) is invertible and is defined using the vector \(H_{S}(s)\) at the collocation points \(s_p =\frac{2p-1}{2S},\ p=1,2,\dots ,S\), as follows:

and

with \(\varepsilon _j=(j+1)^{\mu +1} - 2j^{\mu +1}+(j-1)^{\mu +1},\) for \(j=1,2,\ldots ,S-1\).
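The block-pulse matrix that uses the weights \(\varepsilon _j\) can be sketched in code. The sketch below assumes the form commonly used in the block-pulse literature: an upper-triangular Toeplitz matrix with first row \((1,\varepsilon _1,\dots ,\varepsilon _{S-1})\), scaled by \(1/(S^{\mu }\Gamma (\mu +2))\), for S block-pulse functions on \([0,1)\). Both this layout and the function name are assumptions, since the displayed formula is not available here.

```python
import math
import numpy as np


def block_pulse_fractional_integration_matrix(S, mu):
    """Assumed block-pulse operational matrix F^mu of fractional integration
    of order mu on [0, 1) with S equal subintervals."""
    a = mu + 1.0
    first_row = np.ones(S)
    for j in range(1, S):
        # eps_j = (j+1)^(mu+1) - 2 j^(mu+1) + (j-1)^(mu+1)
        first_row[j] = (j + 1) ** a - 2 * j ** a + (j - 1) ** a
    F = np.zeros((S, S))
    for i in range(S):
        F[i, i:] = first_row[: S - i]           # upper-triangular Toeplitz fill
    return F / (S ** mu * math.gamma(mu + 2))


# For mu = 1 this reduces to the classical block-pulse integration matrix:
# 1/(2S) on the diagonal and 1/S above it.
F1 = block_pulse_fractional_integration_matrix(4, 1.0)
print(F1)
```

A quick consistency check on this form: the i-th column sum telescopes to \((i^{\mu +1}-(i-1)^{\mu +1})/(S^{\mu }\Gamma (\mu +2))\), which is the mean of \(J^{\mu }1=s^{\mu }/\Gamma (\mu +1)\) over the i-th subinterval.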

Furthermore, using Eq. (2) and taking \(B_{S}(s)=[b_1(s),b_2(s),\ldots ,b_S(s)]^\mathrm{T},\) the hybrid functions can be expanded in terms of S block-pulse functions as

and since \(F_{S\times S}^\mu\) is the operational matrix associated with the block-pulse functions, we get

Finally, from Eqs. (6)–(8), one can conclude that

Method implementation

Consider a nonlinear differential equation of fractional order. We approximate \(^{C}\!D^{\mu }g(s)\) by the hybrid functions as

where \(C_S=\left[ c_1,c_2,\dots ,c_{S}\right] ^\mathrm{T}\) is an unknown vector. From Eq. (10), we get

from Eq. (9), we have

Substituting \(^{C}\!D^{\mu }g(s)\) and g(s) from relations (10) and (11) into Eqs. (1a) and (1b), we obtain a system of algebraic equations. The implementation of the proposed method is illustrated in the next section through numerical experiments.

Numerical experiments

We present some examples to illustrate the described method and demonstrate its efficiency.

Example 1

Consider the following FDE [1]:

with \(g(0)=0\), where \(h(s)=s^2+\frac{2}{\Gamma (2.5)}s^{1.5}\) and the exact solution is \(g(s)=s^2\). To solve Eq. (12), let \(^{C}\!D^{0.5}g(s)={C_S}^\mathrm{T} H_{S}(s)\). Using Eqs. (3) and (11), we have

where \(h^\mathrm{T}_{S}\) is a known constant vector.

Substituting these equations into Eq. (12), we obtain

We solved the problem by applying the technique described in Sect. 4; the absolute errors for \(Q=3, P=2,4,6\) are listed in Table 1.

Table 1 shows that the method gives an acceptable approximation of the exact solution, and that increasing the number of basis functions improves the accuracy of the solution.
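The workflow of Example 1 can be sketched in a simplified setting. The sketch assumes that Eq. (12) reads \(^{C}\!D^{0.5}g(s)+g(s)=h(s)\), which is consistent with the stated h and exact solution because \(^{C}\!D^{0.5}s^2=\frac{2}{\Gamma (2.5)}s^{1.5}\). It also replaces the hybrid basis by pure block-pulse functions (the case \(Q=1\)) and uses an assumed standard form of the block-pulse operational matrix, so it does not reproduce Table 1. All function names are hypothetical.

```python
import math
import numpy as np


def bp_matrix(S, mu):
    """Assumed block-pulse operational matrix of fractional integration on [0, 1)."""
    a = mu + 1.0
    row = np.ones(S)
    for j in range(1, S):
        row[j] = (j + 1) ** a - 2 * j ** a + (j - 1) ** a
    F = np.zeros((S, S))
    for i in range(S):
        F[i, i:] = row[: S - i]
    return F / (S ** mu * math.gamma(mu + 2))


def solve_example(S, mu=0.5):
    """Solve D^mu g + g = h, g(0) = 0 (assumed form of Eq. (12))
    with S block-pulse functions."""
    F = bp_matrix(S, mu)
    s_mid = (2 * np.arange(1, S + 1) - 1) / (2 * S)       # collocation points
    h = s_mid**2 + 2.0 / math.gamma(2.5) * s_mid**1.5      # h sampled at midpoints
    # D^mu g ~ C^T B and g ~ C^T F B, hence C^T (I + F) = h^T.
    C = np.linalg.solve((np.eye(S) + F).T, h)
    g = F.T @ C                                            # block-pulse values of g
    return s_mid, g


s_mid, g_approx = solve_example(64)
max_err = np.max(np.abs(g_approx - s_mid**2))
print(max_err)
```

Because \(g(0)=0\), the relation \(g=J^{0.5}\,{}^{C}\!D^{0.5}g\) holds without a correction term, which is why a single linear solve suffices in this sketch.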

Example 2

has been solved by different methods. The exact solution is as follows [4]:

where

is the Mittag–Leffler function of order \(\mu\).

Since \(J^{\mu }\;^{C}\!D^{\mu }g(s)=g(s)-g(0)-sg'(0),\) we have the following algebraic system for Eq. (14):

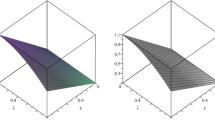

For \(\mu =1\) and \(\mu =2\), the exact solutions of Eq. (14) are \(g(s)=\mathrm{e}^{-s}\) and \(g(s)= \cos s\), respectively. Figure 1 displays the numerical results for g(s) with \(S=12\), \(\mu = 0.25, 0.5, 0.75, 0.95,1;\) and \(\mu = 1, 1.25, 1.5, 1.75, 1.95, 2\). It is evident that as \(\mu\) approaches 1 or 2, the numerical solution obtained by the hybrid method presented in the previous sections converges to the corresponding exact solution.
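The two limiting cases can be checked against the Mittag–Leffler function directly. The sketch below assumes the standard one-parameter series \(E_{\mu }(z)=\sum _{k\ge 0}z^k/\Gamma (\mu k+1)\) and an exact solution of the form \(g(s)=E_{\mu }(-s^{\mu })\); both are assumptions here, because the displayed formulas are not available. The truncated series is only suitable for moderate \(|z|\) and \(0<\mu \le 2\).

```python
import math


def mittag_leffler(mu, z, terms=60):
    """Truncated series for the one-parameter Mittag-Leffler function E_mu(z).
    Intended for moderate |z| and 0 < mu <= 2 only."""
    return sum(z**k / math.gamma(mu * k + 1) for k in range(terms))


s = 0.5
# mu = 1: E_1(-s) should agree with exp(-s)
err_exp = abs(mittag_leffler(1.0, -s) - math.exp(-s))
# mu = 2: E_2(-s^2) should agree with cos(s)
err_cos = abs(mittag_leffler(2.0, -s**2) - math.cos(s))
print(err_exp, err_cos)
```

For fractional \(\mu\) the same function gives reference values, for instance `mittag_leffler(0.5, -s**0.5)`.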

Table 2 shows the absolute errors for \(\mu = 0.85, 1.2, 1.5\) and \(S= 8, 10, 24\). Clearly, the approximations achieved through the hybrid scheme are in accordance with those established with other mentioned numerical schemes [16, 17].

Fig. 1 Numerical solutions of Example 2, for \(S =12,\) \(0<\mu \le 1\) (left) and \(1\le \mu \le 2\) (right)

Example 3

Consider the following fractional Riccati equation [1, 13, 18]:

Assume \(^{C}\!D^{\mu }g(s)= {C_S}^\mathrm{T}H_S(s)\). Using Eq. (11), we have

Let

using the properties of the block-pulse functions, we get

Substituting these equations into FDE (15), we have the following system of nonlinear equations:

For \(\mu =1\), the analytic solution of Eq. (15) is

Table 3 gives the results for Example 3 with \(\mu = 0.5, 1\) and \(S= 16,\) obtained by the hybrid method at selected points \(s\in [0,1]\). These results are also compared with those of Refs. [13, 18]. Moreover, the absolute errors of the approximate solutions of Example 3 for \(S=48\) are shown in Fig. 2.
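The block-pulse property that handles the quadratic term in Example 3 is presumably the disjointness of the supports. As a sketch, with hypothetical coefficients \(a_1,\dots ,a_S\) of a block-pulse expansion:

\[ b_i(s)\,b_j(s)=\delta _{ij}\,b_i(s),\qquad \Big (\sum _{i=1}^{S}a_i\,b_i(s)\Big )^2=\sum _{i=1}^{S}a_i^2\,b_i(s). \]

Squaring a block-pulse expansion therefore squares its coefficients componentwise, so a quadratic nonlinearity in g becomes a componentwise quadratic term in the algebraic system.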

Fig. 2 Absolute errors of Example 3 for \(S=48\)

Example 4

[5] We apply the hybrid method presented in this study to the nonlinear FDE

with the exact solution \(g(s)=s^2\). The behavior of the results with \(S=4,8,12\) is plotted in Fig. 3.

Fig. 3 Comparison of g(s) for \(S=4, 8, 12\) with exact solution of Example 4

Example 5

the absolute errors for \(\mu =0.2,0.4,\ldots ,1.8\) and \(S=4\) are reported in Table 4.

Example 6

Finally, consider the multi-order FDE

with the exact solution \(g(s)=\cos s\) and nonlocal boundary value conditions

where \({_pF_q}(\mu _1,\mu _2,\dots ,\mu _p;\nu _1,\nu _2,\dots ,\nu _q;s)\) denotes the generalized hypergeometric function. Applying our proposed approach with Eqs. (11), (18), we have [12],

therefore,

From boundary condition (18) and Eq. (19), one concludes that

Consequently, FDE (17) can be shown as the following algebraic system:

where \({A_S}^\mathrm{T}=[a^2_1, a^2_2, \dots , a^2_S]\), with

and

Using the hybrid method with \(Q=5, P=5,10,20\) for \(s\in (0,1)\), the maximum absolute errors of Example 6 are reported in Table 5. Also, the approximate error of our proposed scheme for this example is illustrated in Fig. 4.

Fig. 4 Absolute error of Eq. (17) with \(S=75\)

References

Arikoglu, A., Ozkol, I.: Solution of fractional differential equations by using differential transform method. Chaos Solitons Fractals 34, 1473–1481 (2007)

Bhrawy, A.H., Ezz-Eldien, S.S.: A new Legendre operational technique for delay fractional optimal control problems. Calcolo 53, 521–543 (2016)

Diethelm, K.: The Analysis of Fractional Differential Equations. Springer, Berlin (2010)

Diethelm, K., Ford, N.J., Freed, A.D.: A predictor–corrector approach for the numerical solution of fractional differential equation. Nonlinear Dyn. 29, 3–22 (2002)

El-Kalla, I.L.: Error estimate of the series solution to a class of nonlinear fractional differential equations. Commun. Nonlinear Sci. Numer. Simul. 16, 1408–1413 (2011)

Ezz-Eldien, S.S., Bhrawy, A.H., El-Kalaawy, A.A.: Direct numerical technique for isoperimetric fractional variational problems based on operational matrix. J. Vib. Control. https://doi.org/10.1177/1077546317700344 (2017)

Ezz-Eldien, S.S., El-Kalaawy, A.A.: Numerical simulation and convergence analysis of fractional optimization problems with right-sided Caputo fractional derivative. J. Comput. Nonlinear Dyn. https://doi.org/10.1115/1.4037597 (2017)

Lakshmikantham, V., Vatsala, A.S.: Theory of fractional differential inequalities and applications. Commun. Appl. Anal. 11, 395–402 (2007)

Liu, Z., Li, X.: A Crank–Nicolson difference scheme for the time variable fractional mobile-immobile advection–dispersion equation. J. Appl. Math. Comput. 56, 391–410 (2018)

Mainardi, F.: Fractional Calculus: Some Basic Problems in Continuum and Statistical Mechanics. Springer, Wien (1997)

Maleknejad, K., Nouri, K., Torkzadeh, L.: Study on multi-order fractional differential equations via operational matrix of hybrid basis functions. Bull. Iran. Math. Soc. 43, 307–318 (2017)

Nouri, K., Baleanu, D., Torkzadeh, L.: Study on application of hybrid functions to fractional differential equations. Iran. J. Sci. Technol. Trans. Sci. https://doi.org/10.1007/s40995-017-0224-y (2017)

Odibat, Z., Momani, S.: Modified homotopy perturbation method: application to quadratic Riccati differential equation of fractional order. Chaos Solitons Fractals 36, 167–174 (2008)

Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications. Academic Press, New York (1999)

Rawashdeh, E.A.: Numerical solution of fractional integro-differential equations by collocation method. Appl. Math. Comput. 176, 1–6 (2006)

Rehman, M., Khan, R.: The Legendre wavelet method for solving fractional differential equations. Commun. Nonlinear Sci. Numer. Simul. 16, 4163–4173 (2011)

Saadatmandi, A., Dehghan, M.: A new operational matrix for solving fractional-order differential equations. Comput. Math. Appl. 59, 1326–1336 (2010)

Yuanlu, L.: Solving a nonlinear fractional differential equation using Chebyshev wavelets. Commun. Nonlinear Sci. Numer. Simul. 15, 2284–2292 (2010)

Acknowledgements

This research was supported by the Research Council of Semnan University and in part by the Grant 94802181 from Iran National Science Foundation (INSF). The authors are grateful to the anonymous referees for their careful reading, insightful comments and helpful suggestions which have led to improvement of the paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Nouri, K., Torkzadeh, L. & Mohammadian, S. Hybrid Legendre functions to solve differential equations with fractional derivatives. Math Sci 12, 129–136 (2018). https://doi.org/10.1007/s40096-018-0251-7

DOI: https://doi.org/10.1007/s40096-018-0251-7

Keywords

- Fractional differential equations

- Operational matrix

- Legendre polynomials

- Block-pulse function

- Fixed-point theorem