Abstract

The time–cost tradeoff problem is one of the most important and applicable problems in project scheduling area. There are many factors that force the mangers to crash the time. This factor could be early utilization, early commissioning and operation, improving the project cash flow, avoiding unfavorable weather conditions, compensating the delays, and so on. Since there is a need to allocate extra resources to short the finishing time of project and the project managers are intended to spend the lowest possible amount of money and achieve the maximum crashing time, as a result, both direct and indirect costs will be influenced in the project, and here, we are facing into the time value of money. It means that when we crash the starting activities in a project, the extra investment will be tied in until the end date of the project; however, when we crash the final activities, the extra investment will be tied in for a much shorter period. This study is presenting a two-objective mathematical model for balancing compressing the project time with activities delay to prepare a suitable tool for decision makers caught in available facilities and due to the time of projects. Also drawing the scheduling problem to real world conditions by considering nonlinear objective function and the time value of money are considered. The presented problem was solved using NSGA-II, and the effect of time compressing reports on the non-dominant set.

Similar content being viewed by others

Introduction

Discrete time–cost tradeoff problem (DTCTP) has many applications, and a lot of research has been conducted on this area. In these studies, customer needs to get services in shorter time periods and necessity of reducing the project cost were considered together, and this approach raised the importance of DTCTP for business owners and researchers. In 1991, Hindelang and Muth presented the DTCTP for the first time (Hindelang and Muth 1979). Prabuddha et al. (1997) and Vladimir et al. (2001) showed that this problem belongs to NP-hard problem. One of the basic assumptions in this problem is that the activities cost is a function of the activities duration, which is a decision variable. The lower limit of duration is crash duration, and the upper limit of duration is normal duration. Kelley and Walker (1959), Fulkerson (1961), Kelly (1961), Ford and Fulkerson (1962), Siemens (1971), Goyal (1975), and Elmaghraby and Salem (1981) presented the linear mathematical models and Moder et al. (1983) considered continuous activities cost. DTCTP was solved using many different exact methods, such as dynamic programing (Hindelang and Muth 1979), enumeration algorithm (Patterson and Harvey 1979), and branch and bound (Demeulemeester et al. 1996, 1998; Erenguc et al. 2001), but none of the methods could solve the DTCTP in large scale, so many researchers decided to use heuristics and metaheuristic algorithms to solve this problem. Akkan (1998) used a heuristic algorithm based on Lagrange released, while Liu et al. (2000) used genetic algorithm (GA) for solving DTCTP and Elmaghraby and Kamburowski (1992) considered reward and penalty on objective function. Ann and Erenguc (1998) introduced activities compression, and Van Slyke was the first researcher who used the Monte Carlo simulation method for compressing activities. It has many advantages, such as longer projects time, flexibility of selecting distribution functions of activities time, and ability to calculate the value of the path critically (Van Slyke 1963), and DTCTP was considered with time switch in it (Vanhoucke et al. 2002). All the presented researchers have only one objective function, and the aim of all is decreasing the project cost or time. The researchers found that for determining the duration of activities, many things must be considered. For example, one may wish to finish the project in shortest time with minimum cost. To this effect, two finishing time and project cost objective functions can be considered in a scalar unique objective function (Sasaki and Gen 2003a, b), and can solve this multi-objective problem using the LP-metric method (Pasandideh et al. 2011). Mohammadreza Shahriari et al. introduced a mathematical model for time–cost tradeoff problem with budget limitation based on a mixed-integer programing, considering the time value of money (Mohammadreza Shahriari et al. 2010). Jaeho Son et al. (2013) presented a new formulation technique is introduced to merge the two independent scenarios mathematically, and it guarantees the optimal solution. Öncü Hazır (2014) presented a mathematical model to support project managers from a wide range of industries in scheduling activities to minimize deviations from project goals. Tiwari and Johari (2015) introduced an approach, which was experimented on several case studies that proved its usefulness. The intertwined approach using simple and popular Microsoft Office tools (Excel and MSP) is logical, fast, and provides a set of feasible project schedule meeting the deadline and that do not violate resource limits. Mario Vanhoucke extend the standard electromagnetic metaheuristic with problem specific features and investigate the influence of various EM parameters on the solution quality (Vanhoucke 2014).

Kaveh et al. (2015) showed that two new metaheuristic algorithms, charged system search (CSS) and colliding body optimization (CBO), are utilized for solving this problem. The results show that both of these algorithms find reasonable solutions; however, CBO could find the result in a less computational time having a better quality.

Ke et al. proposed a model to deal with an intelligent algorithm combining stochastic simulations and GA, where a stochastic simulation technique is employed to estimate random functions and GA is designed to search optimal schedules under different decision-making criteria (Ke et al. 2012).

In this study, we used NSGA-II for solving this two-objective problem.

The assumption of activities compressing in time–cost tradeoff problem was presented to achieve the specific solutions. In this solution, with spending money, the project time will decrease. Generally, delay in finishing the projects is inevitable. The World Commission on Dams (WCD) made a research on 99 large projects and reported that only 50 of these projects finished in due time, 30 % of them have 1–2 years delay, and 20 % have more than 2 years delay (4 projects have more than 10 years delay), and the main reasons of delays are financial problems, inefficiency of contractors and operation management, unreal scheduling, and employers dissatisfaction (WCD 2000). Unlike the studies in this area, we consider the “maximum duration” for the upper level of activities duration, whatever moving from normal time to compressed time caused more compressing cost, and whatever moving from normal time to presented upper bound of the time caused more saving of money. The final front of the solutions in NSGA-II presents a variety of solutions for decision makers, and they have opportunity to select a proper solution based on the available budget and appropriate time for the project. Another problem considered in this study is the time value of money. In large projects, it is important to know the proper time of spending or saving the money due to the time value of money. This study has five parts. The second part presents a mathematical model. Third part deals with solving algorithm. In part 4, a numerical example is presented, and the final part belongs to conclusion and further studies.

Mathematical model

Nomenclatures

- \(t_{i}\) :

-

Happening time of event \(i\)

- \(D_{{f\left( {ij} \right)}}\) :

-

Minimum allowed time of activity \(i - j\)

- \(D_{{n\left( {ij} \right)}}\) :

-

Normal time for activity \(i - j\)

- \(D_{{m\left( {ij} \right)}}\) :

-

Maximum allowed time of activity \(i - j\)

- \(d_{ij}\) :

-

Scheduled (actual) time of activity \(i - j\)

- \(H\) :

-

Indirect (overhead) project cost

- \(K_{n}\) :

-

Direct project cost

- \(C_{{f\left( {ij} \right)}}\) :

-

Compressing cost of activity \(i - j\)

- \(C_{{n\left( {ij} \right)}}\) :

-

Normal cost of activity \(i - j\)

- \(C_{{m\left( {ij} \right)}}\) :

-

The cost of delaying in activity \(i - j\)

- \(C_{ij}\) :

-

Compressing cost rate of activity \(i - j\)

- \(C^{\prime}_{ij}\) :

-

Saving rate of delaying for activity \(i - j\)

- \(t_{\text{Max}}\) :

-

Maximum allowed time for finishing the project

- \(C_{\text{Max}}\) :

-

Maximum available budget

- \(I_{0}\) :

-

Interest rate

Problem definitions

When the beginning activities of a project compressed, the compressing budget would be involved until the finishing project time, and this value of money would be involved for shorter time. This effect is obvious for the money that is involved with delaying in activities time, so the time value of money is an effective factor in this area. In the presented model, we used the time value of money to calculate the best time for compressing or delaying the activities. We added the compressing cost to the total cost function and subtract the venue of saving money of delaying on the activities from the total cost function, so the Pert network make better tradeoff between time and cost, considering the time value of money. The cost function contains direct cost, overhead cost, compressing cost, and delaying cost as follows:

For interpolation of the equations that contain the time value of money for compressing, we act as follows. Consider some of the activities have been compressed, and for each time unit of compression, \(C_{ij}\) is the unit of money spend, and therefore, \(\sum\nolimits_{i} {\sum\nolimits_{j} {C_{ij} \left( {t_{n} - t_{i} } \right)} }\) is the total money that spends for compression involved from day \(t_{i}\) to day \(t_{n}\) (finishing time of project). For calculating the saving money of delaying, we act the same, so we have:

Much research has been conducted on the effects of the project cost and the cost of compression. Ameen (1987) presented the definition of cost gradient and offered a new technique, “CAPERTSIM”, for decision making under uncertainty in time–cost tradeoff in compressing and the relation between them. In this research, a cost gradient index is defined as the ratio of money spend to compressing value and considering the time duration of each activity as a probabilistic variable presents a simple simulation-based model for time compressing.

In this study, we present a nonlinear relation between activities durations and \(C_{ij}\) and \(C^{\prime}_{ij}\), as shown in Fig. 1:

According to Fig. 1, if the relation between compressing time and compressing cost was an exponential distribution, then we would have:

If we had the coordination of \(D_{n}\) and \(D_{f}\), the values of \(\alpha\) and \(\beta\) could be calculated as follows:

Using the same approach for the saving coefficient, we have:

The second objective function is considered as the finishing time of the project as follows:

Mathematical model

Equation (10) is the first objective function that deals with minimizing project cost, considering the time value of money. Equation 11 is the second objective function that minimizes the finishing time of project. Considering this two objective function together moves the problem to balance the compression and delaying of the project activities.

Solving algorithm

Because the presented problem belongs to NP-hard problem, the metaheuristic algorithms were used to solve the problem. One of these algorithms is GA. Sou-Sen Leu (2000) used GA based on fuzzy theory and considered the effects of uncertainty of the parameters in time–cost tradeoff. Li and Cao (1999) created “MLGAS” technique by combining GA and Learning machines method and claimed that when the relation between activities and cost is nonlinear, the presented technique has better solutions. Heng et al. (Burns 1994) presented a new algorithm based on GA and prepared a computational program to evaluate the efficiency of the presented algorithm. This research is the most complete research in this area that used GA for time–cost tradeoff. Chau and Chan (1997) claimed that exact methods, such as DP and LP, have very long solving time and are not suitable for solving time–cost tradeoff, so he developed the GA for this problem and considered the resource constraints for each activity.

The single objective optimization algorithm could find the better solution for one objective function, and if more than one objective considered for a problem, these algorithms have no efficiency. When the problem has more than one objective function, the results can be shown as a Pareto front of non-dominant solutions. This Pareto front contains the solutions that are acceptable operation for all objective functions. When we have Pareto front of solutions, none of the solutions in Pareto frons has better result for all objective function comparing with other solutions in Pareto front, and we have not a single optimal solution (Tavakkoli-Moghaddam et al. 2008). Figure 2 shows the Pareto sets in multi-objective problems (Deb 2001).

Pareto sets in multi-objective problems (Deb 2001)

NSGA-II algorithm

Non-dominated sorting genetic algorithm (NSGA-II) is one of the most efficient and famous multi-objective algorithms, which was presented by Deb (2001) and Zade et al. (2014) and proved its usefulness in multi-objective problems (Deb et al. 2002). The NSGA-II can convergent with Pareto sets of solutions, and the results could spread to all sets. NSGA-II uses non-dominant sorting for convergent confidence and also crowding distance for cutting the bad solutions for earning better solutions (Gen and Cheng 1997; Amiri and Khajeh 2016). Totally, its higher competence makes this algorithm a good selection for multi-objective problems.

The steps of NSGA-II are as follows.

Initialization

The structure of the chromosome that used in this study is shown in Fig. 3. This chromosome is a \(1 \times n\) matrix, and each genome in this chromosome is duration of activities.

In addition, the initial information of this algorithm is:

- \(npop\) :

-

The population size represents the number of chromosomes in each iteration of algorithm.

- \(P_{C}\) :

-

Probability of crossover operator that represents the number of parents in the mating pool.

- \(P_{M}\) :

-

Probability of mutation operator that represents the number of chromosomes mutating in each iteration of algorithm.

- \(nIt\) :

-

Maximum algorithm iterations.

The values of these parameters are shown if Table 1.

Fast non-dominant sorting and crowding distance

In this step, all chromosomes ranked using fast non-dominant sorting and crowding distance concepts. In fast non-dominant, sorting the population is sorted based on domination concept. Each solution in this step is compared with all other chromosomes and determines which one is dominant or non-dominant. Finally, we have a set of non-dominant solutions that forms the first boundary of solutions. For determining the second boundary, the solutions that located in first boundary ignore and the procedure is repeated again. This procedure runs until all solutions are located in solution boundaries. In this procedure, the worst situation happened when each boundary contains one solution. In this situation, the complexity of algorithm is \(O\left( {MN^{2} } \right)\), where M is the number of objectives and N is the population size. The procedure on non-dominant sorting is shown in Fig. 4.

For determining the density of the solutions around a specific solution, the average distance between the specific solutions and two adjacent solutions is calculated and considered as a crowding distance. In other words, if we draw a rectangle that two adjacent solutions located on its vertex, sum of its length and width would be the crowding distance of the specific solution, as shown in Fig. 5. A specific solution with less crowding distance means less solution density around the specific solution, so for selecting the solutions for the next generation, the more crowding distance is better than less crowding distance (Deb 2001).

Crowding distance of a specific solution (Deb 2001)

Parents

After non-dominant sorting and calculating crowding distance of each solution, the parents are ready for crossover and mutation operators based on selecting strategy.

Selecting strategy

Parents are selected for crossover and mutation operators based on crowded tournament selection operator. In this operator, two solutions are compared with each other, and winner is selected. The ith solution has two properties:

-

1.

Has a rank or degree on non-domination that shows by \(r_{i}\),

-

2.

Has a crowding distance that shows by \(d_{i}\),

The ith solution is the winner of the competition comparing with jth solution if and only if, one of two below conditions is established (Deb 2001):

-

1.

The ith solution has better rank in a non-dominant sorting procedure (\(r_{i} < r_{j}\)) that means this solution has better non-domination degree comparing with jth solution,

-

2.

If ith solution has more crowding distance comparing with jth solution (\(d_{i} > d_{j}\)), when the rank of both solutions are equal.

Crossover operator

For crossover operator, two parents were randomly selected, and two offspring produced using a uniform crossover operator. In this operator, for each genome of the parent’s chromosome, a binary random variable is produced. If this variable is 1, the genome of the parents is changed with each other, and if this variable is 0, the genome stays in its place (Chau and Chan 1997; Deb et al. 2000). The crossover operator is shown in Fig. 6.

Mutation operator

The mutation operator is done in all four matrices of solution chromosome. For each genome, a uniform random variable between 0 and 1 is produced. If the value of this random variable is less than mutation rate, the genome mutates randomly, and if the value of the random variable is greater than mutation rate, the genome does not change (Chau and Chan 1997). The mutation operator is shown in Fig. 7.

Offsprings evaluation and combining with parents

In this section, we evaluate the offsprings that are created with crossover and mutation operators and assign a fitness quantity to each offspring. Then, we combine the parents and offsprings, and create a new population. This combination keeps the better solutions in new population. In multi-objective optimization problems, elithism has ambiguity. In these cases, we use a non-domination rank for each solution, so that each solution is rated based on non-domination.

After combination of the parents and offsprings, each solution is ranked based on the fast non-dominant sorting and crowding distances.

The mechanism of NSGA-II evolution is shown in Fig. 8.

Stop condition

The last step of NSGA-II is checking the stop condition. In multi-objective metaheuristic algorithms, there is no standard stop condition, so we consider a predefined algorithm iteration.

Numerical example

For illustrating the steps of presented algorithm, we used the numerical example of the paper entitled “Crashing PERT network using mathematical Programming” published in “International Journal of Project Management” in 2001. This example has been used in many studies as an authentic example for time compressing. In this example, all activities are assumed to be done in normal or compressed time. We extend this example for considering the delay in project activities. The values of example parameters are presented in Table 2.

The other parameters are \(K_{n} = 100,000,\,\,H = 2000,\,\,I_{0} = 0.1,\,\,C_{\text{Max}} = 1000,000,{\text{ and }}t_{\text{Max}} = 140\).

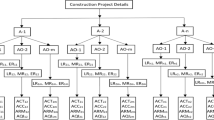

The AOA network of presented example is shown in Fig. 9.

The results of solving the example with NSGA-II are presented as a Pareto front in Fig. 10.

As it is shown in Fig. 10, for faster finishing of projects, we need to pay more money, and in this situation, we must compress the activities more than normal situations. On the other hand, if we want to finish the projects in maximum acceptable due time, we have more money saving. The result of the first and second objective functions for 50 runs of NSGA-II and the values of compressing and delaying on activities are presented in Table 3.

As it is shown in Table 3, only 20 results are unique in 50 obtained ones, and the other results are repetitive. In addition, from the result no. 1 to no. 50, the time of finishing project increases, the project cost increases, and the compression and delaying activities show increasing trends. The duration of activities in each solution presented in Tables 4 and 5 consequently.

Conclusion and further studies

In this study, we showed that adding some assumptions to DTCTP can draw the problem nearer to real-world situations. One of these assumptions is adding the time value of money, because in many projects scheduling, the time value of money has a very important effect on making decision about compressing of the activities. In addition, adding the ability of delaying on project activities is another important factor appended to time–cost tradeoff problems. Moreover, presenting a Pareto front of results to decision makers gives them the opportunity to select the better solution due to project limitations and make the decision making procedure more flexible.

References

Akkan C (1998) A Lagrangian heuristic for the discrete time–cost tradeoff problem for activity-on-arc project networks. Working Paper, Koc University, Istanbul

Ameen (1987) A computer assisted pert simulation. J Syst Manage

Amiri Maghsoud, Khajeh Mostafa (2016) Developing a bi-objective optimization model for solving the availability allocation problem in repairable series–parallel systems by NSGA II. J Indus Eng Int 12(1):61–69

Ann T, Erenguc SS (1998) The resource constrained project scheduling problem with multiple crashable modes: a heuristic procedure. Eur J Operat Res 107(2):250–259

Burns SA (1994) The LP/IP hybrid method for construction time–cost trade off analysis. Construct Manage Econ J

Chau DKH, Chan WT, Govindan K (1997) A time-cost trade-off model with resource consideration using genetic algorithm. Civil Eng Syst 14:291–311

Deb K (2001) Multi-objective optimization using evolutionary algorithms. Wiley, Chichester

Deb K, Agrawal S, Pratap A, Meyarivan T (2000) A fast elitist non-dominated sorting genetic algorithm for multi-objective optimization: NSGA-II. In: Proceedings of the parallel problem solving from nature VI (PPSN-VI) conference, pp 849–858

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197

Deineko VG, Woeginger GJ (2001) Hardness of approximation of the discrete time–cost tradeoff problem. Operat Res Lett 29(5):207–210

Demeulemeester E, Herroelen W, Elmaghraby SE (1996) Optimal procedures for the discrete time/cost trade-off problem in project networks. Eur J Operat Res 88(1):50–68

Demeulemeester E, De Reyck B, Foubert B et al (1998) New computational results on the discrete time/cost trade-off problem in project networks. J Operat Res Soc 49(6):1153–1163

Elmaghraby SE, Kamburowski J (1992) The analysis of activity network under generalized precedence relations. Manage Sci 38(9):1245–1263

Elmaghraby SE, Salem A (1981) Optimal linear approximation in project compression. OR Technical Report 171, North Carolina State University at Raleigh

Erenguc SS, Ahn T, Conway DG (2001) The resource constrained project scheduling problem with multiple crashable modes: an exact solution method. Naval Res Log 48(2):107–127

Ford LR, Fulkerson DR (1962) Flows in networks. Princeton University Press, Princeton

Fulkerson DR (1961) A network flow computation for project cost curves. Manage Sci 7:167–178

Gen M, Cheng R (1997) Genetic algorithms and engineering design. Wiley, New York

Goyal SK (1975) A note on the paper: a simple CPM time/cost trade-off algorithm. Manage Sci 21:718–722

Hazır O, Haouari M, Erel E (2014) Robust optimization for the discrete time-cost tradeoff problem with cost uncertainty. Handbook on project management and scheduling, vol 2, Part of the series International Handbooks on Information Systems pp 865–874

Hindelang TJ, Muth JF (1979) A dynamic programming algorithm for decision CPM networks. Operat Res 27(2):225–241

Kaveh A, Mahdavi VR (2015) Resource allocation and time-cost trade-off using colliding bodies optimization. Colliding Bodies optimization, pp 261–277

Ke H, Ma W, Chen X (2012) Modeling stochastic project time–cost trade-offs with time-dependent activity durations. Appl Math Comput 218:9462–9469

Kelley JE (1961) Critical path planning and scheduling: mathematical basis. Oper Res 9:296–320

Kelley JE, Walker MR (1959) Critical path planning and scheduling: an introduction. Mauchly Associates, Ambler

Leu SS (2000) A GA-based fuzzy optimal model for construction time- cost trade off. Int J Project Manage

Li H, Cao JN (1999) Using machine learning and GA to solve time-cost trade-off problems. J Project Manage

Liu SX, Wang MG, Tang LX et al (2000) Genetic algorithm for the discrete time/cost trade-off problem in project network. J Northeast Univ China 21(3):257–259

Moder JJ, Phillips CR, Davis EW (1983) Project management with CPM, PERT and precedence diagramming, 3rd edn. Van Nostrand Reinhold Company, New York

Pasandideh SHR, Niaki STA, Hajipour V (2011) A multi-objective facility location model with batch arrivals: two parameter-tuned meta-heuristic algorithms. J Intell Manuf. doi:10.1007/s10845-011-0592-7

Patterson JH, Harvey RT (1979) An implicit enumeration algorithm for the time/cost tradeoff problem in project network analysis. Found Control Eng 4(2):107–117

Prabuddha DE, Dunne EJ, Ghosh JB et al (1997) Complexity of the discrete time–cost tradeoff problem for project networks. Operat Res 45(2):302–306

Sasaki M, Gen M (2003a) A method of fuzzy multi-objective nonlinear programming with GUB structure by hybrid genetic algorithm. Int J Smart Eng Des 5(4):281–288

Sasaki M, Gen M (2003b) Fuzzy multiple objective optimal system design by hybrid genetic algorithm. Appl Softw Comput 2(3):189–196

Shahriari M et al (2010) A new mathematical model for time cost trade-off problem with budget limitation based on time value of money. Appl Math Sci 4(63):3107–3119

Siemens N (1971) A simple CPM time/cost trade-off algorithm. Manage Sci 17:B-354–B-363

Son J, Hong T, Lee S (2013) A mixed (continuous + discrete) time-cost trade-off model considering four different relationships with lag time. KSCE J Civil Eng 17(2):281–291

Tavakkoli-Moghaddam R, Safari J, Sassani F (2008) Reliability optimization of series-parallel systems with a choice of redundancy strategies using a genetic algorithm. Reliab Eng Syst Safe 93:550–556

The report of the world commission on Dams (WCD) (2000) Dams and development. Earthscan publications L + d, London and sterling

Tiwari S, Johari S (2015) Project scheduling by integration of time cost trade-off and constrained resource scheduling. J Inst Eng (India) Ser 96(1):37–46

Van Slyke RM (1963) Monte Carlo methods and the PERT problem. Operat Res. 33:141–143

Vanhoucke M (2014) Generalized Discrete Time-Cost Tradeoff Problems. Handbook on project management and scheduling, vol. 1 Part of the series International Handbooks on Information Systems pp 639–658

Vanhoucke M, Demeulemeester E, Herroelen W (2002) Discrete time/cost trade-offs in project scheduling with time-switch constraints. J Operat Res Soc 53(7):741–751

Zade AE, Sadegheih A, Lotfi M (2014) A modified NSGA-II solution for a new multi-objective hub maximal covering problem under uncertain shipments. J Indus Eng Int 10(4):185–197

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Shahriari, M. Multi-objective optimization of discrete time–cost tradeoff problem in project networks using non-dominated sorting genetic algorithm. J Ind Eng Int 12, 159–169 (2016). https://doi.org/10.1007/s40092-016-0148-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40092-016-0148-8