Abstract

The Video Browser Showdown addresses difficult video search challenges through an annual interactive evaluation campaign attracting research teams focusing on interactive video retrieval. The campaign aims to provide insights into the performance of participating interactive video retrieval systems, tested by selected search tasks on large video collections. For the first time in its ten-year history, the Video Browser Showdown 2021 was organized in a fully remote setting and hosted a record number of sixteen scoring systems. In this paper, we describe the competition setting, tasks and results and give an overview of state-of-the-art methods used by the competing systems. By looking at query result logs provided by ten systems, we analyze differences in retrieval model performances and browsing times before a correct submission. Through advances in data gathering methodology and tools, we provide a comprehensive analysis of ad-hoc video search tasks, discuss results, task design and methodological challenges. We highlight that almost all top performing systems utilize some form of joint embedding for text-image retrieval and enable specification of temporal context in queries for known-item search. Whereas a combination of these techniques drives the currently top performing systems, we identify several future challenges for interactive video search engines and the Video Browser Showdown competition itself.

1 Introduction

In the twenty-first century, digital cameras decorate almost every corner in city centers and most pedestrians carry a smartphone capable of high quality video. While humankind has reached the point where digital video data are so easily produced, stored and shared, a huge remaining challenge is effective and efficient access to these vast volumes of stored audio-visual information. So far, many commercial search engines have been established, allowing users to satisfy certain search needs over video collections with sufficient retrieval precision. Primarily, these search engines focus on returning matches to free-form text queries. However, high retrieval recall and interactive retrieval remain difficult challenges for current video search models.

The scientific community has reacted to the high recall challenge with evaluation campaigns attracting research teams focusing on video retrieval. TRECVID [38], Video Browser Showdown (VBS) [37] and Lifelog Search Challenge [13] define retrieval tasks where both high recall and precision are essential to achieve a good score. Every year, the results of these campaigns confirm that achieving high recall in arbitrary tasks over general videos remains a hard problem. So far, there is no clear solution to the problem, despite the limited scale of the competition datasets, compared to web-scale media collections. Nevertheless, one observation confirmed every year is that system-user interactions have a positive effect on effectiveness.

Two important task types for interactive retrieval evaluation are known-item search (KIS), where there is only a single correct item to be found, and ad-hoc video search (AVS), where the goal is to retrieve as many items as possible matching a description. This paper focuses on the Video Browser Showdown 2021, a virtual event (see Fig. 1) where a record number of participating teams tried to solve a large number of AVS and KIS tasks with their interactive video search systems. We emphasize that while user-centric evaluations of this kind and extent are rare and discrete events, they do provide invaluable insights into the performance of participating approaches. The key contributions of this paper can be summarized as:

- Description of VBS 2021, including an overview of participating systems and their rich set of tested approaches;
- Results of the first remote VBS 2021, where a record number of 16 scoring teams participated;
- Findings from the competition, comprehensive AVS task analysis, and result set log analysis;
- Critical analysis of current challenges with interactive AVS evaluations and suggestions for upcoming VBS evaluations.

The remainder of this paper is structured as follows: Sect. 2 gives an overview of VBS 2021 and its tasks, Sect. 3 introduces the participating systems and summarizes their approaches, Sect. 4 shows the results of the interactive evaluation with a particular focus on AVS analysis, and Sect. 5 gives an outlook toward the future and concludes the paper.

2 Video Browser Showdown

The Video Browser Showdown [37, 54], collocated with the International Conference on Multimedia Modeling (MMM), started its annual comparative live evaluations in 2012 and reached its tenth anniversary in 2021. Unlike other benchmark evaluations, VBS represents a unique evaluation platform where teams compete on a task at the same time, in the same environment, and with user-centric video search tools.

As in previous years, VBS 2021 used the V3C1 [60] dataset, which contains approximately 1000 hours of video. The task types were unchanged: visual known-item search (KIS) tasks, where the target sequence was shown to participants; textual KIS tasks, where the target sequence was described; and ad-hoc video search (AVS) tasks. Table 1 shows an example of how the description for a Textual KIS task gets progressively more detailed, and Fig. 2 shows keyframes of a Visual KIS task. For the sake of completeness, we briefly recap the scoring function, which was the same as in 2020 [37], albeit with minor adjustments. In KIS tasks, the goal is to reward quickly finding the correct item,Footnote 1 while punishing wrong submissions. Given a linearly decreasing function \(f_{TS}\) based on search time, the time of correct submission t and the number of wrong submissions ws, the score for a given KIS task is as follows:

\(f_{KIS}\) thus awards at least 50 points for a correct submission if no wrong submission was made, and penalizes each wrong submission with a malus of 10 points.
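The properties stated above can be illustrated with a small Python sketch. It is not the official scoring code: the exact linear shape of `f_ts`, the parameter `duration` (the task time limit) and the clamping of the score at zero are assumptions chosen only to reproduce the stated behavior (at least 50 points for a correct submission without wrong submissions, and a malus of 10 points per wrong submission).

```python
def f_ts(t: float, duration: float) -> float:
    """Linearly decreasing time score: 1.0 at t = 0, 0.0 at t = duration (assumed form)."""
    return max(0.0, min(1.0, (duration - t) / duration))


def f_kis(t: float, ws: int, duration: float) -> float:
    """Score of a correct KIS submission at time t after ws wrong submissions.

    50 base points plus up to 50 time-dependent points, minus 10 points per
    wrong submission. Clamping at zero is an assumption of this sketch.
    """
    return max(0.0, 50.0 + 50.0 * f_ts(t, duration) - 10.0 * ws)


if __name__ == "__main__":
    # Hypothetical 300-second task: correct after 150 s with two wrong submissions.
    print(f_kis(150, 2, 300))
```

Under these assumptions, an immediate correct submission yields the maximum score and a correct submission at the time limit still yields 50 points, provided no wrong submission was made.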

In AVS tasks, the goal is to reward both precision and recall. Given correct submissions C and incorrect submissions I of a team, all correct submissions of all teams for a task P and a quantization function q which merges temporally close correct shots into ranges,Footnote 2 the scoring function for AVS tasks is as follows:
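As a hedged illustration of a score with these ingredients, the following Python sketch multiplies a precision-like term by a recall-like term. The concrete form 100 · |C| / (|C| + |I|/2) · |q(C)| / |q(P)| is an assumption based on how AVS scoring has been described for earlier VBS editions, and the quantization `q` used here (fixed time buckets per video, with a hypothetical `bucket_seconds`) merely stands in for the actual merging of temporally close shots.

```python
from typing import Iterable, Set, Tuple

Shot = Tuple[str, float]  # (video id, shot start time in seconds)


def q(shots: Iterable[Shot], bucket_seconds: float = 180.0) -> Set[Tuple[str, int]]:
    """Merge temporally close shots of the same video into ranges.

    Assumption: fixed-size time buckets per video; the real rule may differ.
    """
    return {(video, int(start // bucket_seconds)) for video, start in shots}


def f_avs(correct, incorrect, pool, bucket_seconds: float = 180.0) -> float:
    """AVS score of one team for one task (assumed formula, see the text above).

    correct / incorrect: the team's submissions judged correct / incorrect.
    pool: all correct submissions of all teams for the task.
    """
    c, i = len(correct), len(incorrect)
    pool_ranges = q(pool, bucket_seconds)
    if c == 0 or not pool_ranges:
        return 0.0
    precision_like = c / (c + i / 2)  # wrong submissions count half
    recall_like = len(q(correct, bucket_seconds)) / len(pool_ranges)
    return 100.0 * precision_like * recall_like


if __name__ == "__main__":
    team_correct = [("v1", 10.0), ("v1", 20.0), ("v2", 5.0)]
    team_incorrect = [("v3", 0.0), ("v3", 400.0)]
    all_correct = team_correct + [("v4", 0.0)]
    print(f_avs(team_correct, team_incorrect, all_correct))
```

In this sketch, two correct shots from the same part of a video count only once toward recall, which is the effect the quantization is meant to have.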

While the overall setting was very similar to previous events, VBS 2021 introduced two major novelties. First, and most importantly, the competition took place fully remotely due to the COVID-19 pandemic. This setting was facilitated by adopting the new ‘Distributed Retrieval Evaluation Server’ (DRES)Footnote 3 [55], which has been explicitly designed for such a distributed and scalable setup. Teams, consisting of two active participants each, could access the main overview (displaying tasks and scores) via their browser and submit results via a REST service to the server. In addition, participants, judges and organizers were connected in a video conferencing session for communication. The participants were also asked to provide a camera view that shows the screen of their VBS tool so that everyone could see how the respective tools are operated (and to ensure that everyone adheres to the rules.Footnote 4). The public VBS session was live-streamed on Twitch. Figure 1 shows a screen-capture from the virtual event. While this setup relaxed the “same environment” setting, the teams nevertheless solved the tasks at the same time using the same dataset.

The second major novelty was a briefing session with the judges for AVS tasks before the competition, in which the task descriptions were discussed and clarifications added. The task selection procedure was the same as described in [37]. The aim of the briefing was to eliminate ambiguities and ensure that the assessment of the judges is more consistent than in previous years. This aim has not been fully reached, as some potential ambiguities only become apparent when seeing candidate results. Thus, a trial run involving stand-in participants might be useful for the judges to come to a common understanding of a task.

3 Participating systems

Tables 2 and 3 list the retrieval and interaction methods of the different systems at VBS 2021, respectively. In this section, we summarize the methods used and in doing so, also provide an extensive overview of state-of-the-art methods in multimedia retrieval. The categories used are similar to the ones from the 2020 review [37], with a new subsection added for interaction modalities, given that there were two virtual reality systems this year.

3.1 Text search

The trend toward textual queries from previous iterations of VBS [37, 54] continues this year. Embedding-based methods such as the W2VV++ model, used by last year’s highest scoring system, SOMHunter [26], have proven effective, as also shown in an evaluation of SOMHunter and vitrivr [53], which makes such models a valuable addition to retrieval systems. The W2VV++ model and its variants [31, 34, 40] were used by VIRET, SOMHunter, VBS2020 Winner and, in the form of features for image search, CollageHunter. vitrivr and vitrivr-VR used a similar approach [68]. VIRET used the CLIP model [46], VIREO the interpretable embeddings of the dual-task model [74], EOLAS a conventional textual embedding approach using autoencoders, VERGE an attention-based dual encoding model [12], and VISIONE the Transformer Encoder Reasoning Network (TERN) model [39].

Concept-based search was also used by several teams this year. vitrivr, vitrivr-VR and VideoGraph applied a combination of several neural networks [58] for concept detection. VideoGraph additionally contextualized and extended them by linking the extended concepts to Wikidata.Footnote 5 VERGE used a multitude of concept detection models, including EfficientNets trained on ImageNet1000 [11] and TRECVID SIN [38], EventNet [77], a style model [70], pre-trained models on MS COCO [32] and OpenImageV4 [27], a 3D-CNN model [14] pre-trained on the Kinetics-400 dataset [23] and VGG16 [65] trained on Places365 [79]. The last combination was also used by IVIST. Other concept detectors used include [3] by VISIONE, YOLO 9k [47] by NoShot and EnlightenGAN [21] combined with HTC [8] together with 3D ResNet-200 [14] by IVIST. VIREO utilized the decoded concept list of visual embedding [74]. HTW uses tagged image archives [18] to generate concepts, and Exquisitor uses pylucene to search the ResNeXt-101 visual concepts and their text descriptions [76] to provide positive examples to its relevance feedback process.

For ASR search, vitrivr, vitrivr-VR, VIREO, EOLAS, Exquisitor and VideoGraph all rely on the generated speech resource from the V3C1 dataset [60]. For OCR search, vitrivr, vitrivr-VR, and VideoGraph used the data from [58], VIREO used tesseractOCR [66] and IVIST used ASTER [64].

3.2 Image and sketch search

For image similarity, VIRET, SOMHunter, VBS2020 Winner and CollageHunter all used embedded W2VV++ model features [31, 34, 40]. VIREO uses visual embeddings of the dual-task model [74], VERGE the last pooling layer of a fine-tuned GoogleNet [45], HTW a CNN with DARAC-Pooling [61] and VISIONE Resnet101-GeM [50] and TERN [39]. For color or semantic sketches, vitrivr supports a plethora of features [51, 56], VERGE clusters to twelve predefined colors using the Color Layout MPEG-7 descriptor, and HTW uses a handcrafted low-level feature [18]. VIREO [42] and VISIONE [1] also support sketch search, with VISIONE extracting dominant colors with pretrained color hash tables [5, 72] and objects using pretrained neural networks [47, 48, 78]. CollageHunter allows image collages, which enable localization of example image queries on a canvas. In diveXplore, similar video summaries can be retrieved by image feature similarity [29]. EOLAS employs an image search mechanism using the positions of the user and the shots chosen in an embedded latent space.

3.3 Fusion approaches

Multiple teams offer the option to formulate a query with a temporal modality. In vitrivr, users can specify multiple temporally ordered queries which are independently evaluated and then aggregated with the scoring function rewarding videos which have matching segments for the individual queries in the correct order [17]. VIRET uses a context-aware ranking model [44] which requires that all independently formulated queries should be sufficiently answered by a segment of a video. Many teams allow users to specify two ordered queries, which are then executed independently. SOMHunter, VBS2020 Winner and CollageHunter all use the same algorithm as in 2020 [36], where the score for an item is determined by fusing its own score with the score of the best match for the second query within a specified time delta. HTW and VERGE used a similar algorithm for temporal queries. Similarly, for VISIONE, two independent queries describing two distinct keyframes of a target video can be submitted by the user; results from the same video which are within a specified time threshold are paired in the result visualization, and ranked using the normalized sum of the scores of the outcomes in the pair. In VIREO, no temporal distance is specified; the ranking algorithm looks for sequences with the highest combined rank, ignoring temporal distance [43].
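The two-query temporal fusion described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the implementation of any participating system: scores are assumed to lie in [0, 1], the fusion operator is assumed to be a product, only strictly later frames of the same video are considered for the second query, and all names (`temporal_fusion`, `max_delta`) are hypothetical.

```python
from typing import Dict, List, Tuple


def temporal_fusion(
    frames: Dict[str, Tuple[str, float]],  # frame id -> (video id, timestamp in s)
    scores_q1: Dict[str, float],           # frame id -> score for the first query
    scores_q2: Dict[str, float],           # frame id -> score for the second query
    max_delta: float,                      # allowed time gap between the two matches
) -> List[Tuple[str, float]]:
    """Rank frames by fusing their first-query score with the best second-query
    score among later frames of the same video within max_delta seconds."""
    fused = {}
    for fid, (video, ts) in frames.items():
        best_second = 0.0
        for other, (other_video, other_ts) in frames.items():
            if other_video == video and 0 < other_ts - ts <= max_delta:
                best_second = max(best_second, scores_q2.get(other, 0.0))
        # Assumed fusion operator: product of the two scores.
        fused[fid] = scores_q1.get(fid, 0.0) * best_second
    return sorted(fused.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    frames = {"a": ("v1", 0.0), "b": ("v1", 5.0), "c": ("v1", 50.0), "d": ("v2", 1.0)}
    q1 = {"a": 0.9, "b": 0.2, "c": 0.1, "d": 0.8}
    q2 = {"a": 0.1, "b": 0.7, "c": 0.9, "d": 0.3}
    print(temporal_fusion(frames, q1, q2, max_delta=10.0)[0])
```

The quadratic loop keeps the sketch short; a real system would typically index frames per video and by time.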

Besides the temporal context, there are also systems which offer different query modalities to the user. vitrivr and vitrivr-VR both score result items for each modality separately, and then offer a configurable choice of max- or average-pooling the score over the different modalities, with average-pooling being used in the competition. In VISIONE, all modalities are mapped to text, which allows the usage of Apache Lucene as a search backend. Each modality is a sub-query and the Lucene QueryRescorer combines their search results [1]. In contrast, vitrivr uses a specialized database allowing vector, text and Boolean retrieval [15]. VIREO uses a linear function to fuse ranking lists of concept-based search and embedding-based search [74], and VERGE provides the option to re-rank the results of a search modality, based on the results of any other modality. Exquisitor supports fusing the results of semantic classifiers, e.g., through intersection of classifiers, where videos are returned if they rank highly in both classifiers. This can be augmented by a temporal constraint, where a keyframe from one model must precede a keyframe from another by a specified minimum or maximum number of segments.
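A minimal Python sketch of max- versus average-pooling of per-modality scores is given below. It only illustrates the general idea; the function name, the data layout and the choice to treat a missing score as 0 are assumptions and do not describe the actual vitrivr implementation.

```python
from typing import Dict


def fuse_modalities(per_modality: Dict[str, Dict[str, float]], mode: str = "avg") -> Dict[str, float]:
    """Fuse per-modality scores (modality -> item id -> score) into one score per item.

    Assumption: an item without a score in some modality contributes 0 there.
    """
    if not per_modality:
        return {}
    items = set().union(*per_modality.values())
    fused = {}
    for item in items:
        scores = [m.get(item, 0.0) for m in per_modality.values()]
        if mode == "max":
            fused[item] = max(scores)
        elif mode == "avg":
            fused[item] = sum(scores) / len(scores)
        else:
            raise ValueError(f"unknown pooling mode: {mode}")
    return fused


if __name__ == "__main__":
    results = {
        "text": {"s1": 0.8, "s2": 0.4},
        "ocr": {"s1": 0.2, "s3": 0.6},
    }
    print(fuse_modalities(results, "avg"))
    print(fuse_modalities(results, "max"))
```

With average-pooling, items matched by only one modality are penalized relative to items supported by several, whereas max-pooling lets a single strong modality dominate.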

3.4 Relevance feedback

While some teams offered support for simple more-like-this queries, such as vitrivr using deep features based on MobileNet V1Footnote 6 [19], there were also more sophisticated approaches to relevance feedback.

The goal of the Exquisitor system is to study the role of interactive learning in large-scale multimedia analytics applications. To that end, Exquisitor relies on user relevance feedback as its main user interaction strategy. The general goal of interactive learning is to develop a semantic classifier that captures the information need of the user well [25]. At the search-oriented VBS competition, however, the goal of this interaction is to build a classifier that can identify the most likely solution candidates, allowing the user to then explore the candidates in more detail to determine their relevance to the task.

SOMHunter, VBS2020 Winner and CollageHunter all use the same approach [9] as in 2020, which is a “Bayesian-like update rule to maintain current relevance scores of frames based on selected positive and implicit negative examples” [37].

3.5 Result set visualization and browsing

Turning to the user interaction strategies presented in Table 3, the most common approach is still to present query results in an ordered list of small thumbnails representing keyframes (similar to previous iterations of VBS). The temporal context of results can then often be inspected based on user input, e.g., as a video preview or by browsing neighboring keyframes. This is also the approach used by the highest-scoring team, vitrivr. Some systems also offer a video player, or an option to view a summary of the entire video. Several teams have experimented with different browsing or visualization approaches.

Rather than displaying individual frames, VIRET focuses on displaying top-ranked video segments (i.e., fixed-length sequences of consecutive frames extracted from a video), where the best per-segment answers for each sub-query are visually highlighted. All three systems relying on the SOMHunter engine provide three result set visualization modes: ranked list of frames, ranked list of scenes (i.e., matched frame with its temporal context per row) and a self-organized map (SOM) evaluated dynamically over all scored database frames. The SOM-based display allows exploratory investigation of the result set, providing more diverse but semantically collocated items in the result set grid view.

HTW enables browsing of the whole video collection on keyframe or shot level by arranging the images on a hierarchical self-sorting map (SSM) [18]. Furthermore, the top-2000 results are presented in either a list, a hierarchical SSM or video summary consisting of five shots.

The diveXplore system introduces a new way of browsing video summaries. Search results for this mode contain lists of videos appearing in panels that contain all shots as thumbnails. These panels can be browsed horizontally by search concept ranking and vertically by video summary similarity to the entire database.

The two VR systems used different approaches, which we discuss in the next subsection.

3.6 Interaction modalities and paradigms

The user interface of a retrieval system has a large impact on its performance by enabling and restricting interaction modalities. In this iteration of the VBS, for the first time, not all systems used a conventional desktop-based user interface, as EOLAS and vitrivr-VR became the first two systems to participate in VBS with virtual reality-based user interfaces.

Virtual reality as a multimedia retrieval user interface offers both opportunities and challenges when compared to conventional desktop user interfaces. With the trend toward deep learning-assisted textual queries, VR interfaces require alternative text-entry methods in the absence of a physical keyboard. Both EOLAS and vitrivr-VR employ speech-to-text as the primary text entry method. vitrivr-VR additionally uses a direct interaction-based virtual keyboard as a backup text entry method.

The approaches of EOLAS and vitrivr-VR differ most from those of the other teams with regard to result visualization and interaction. EOLAS visualizes results as clusters in 3D space, laid out according to their feature similarities, which can be traversed to explore the result set. vitrivr-VR employs a more conventional approach to result set visualization, by displaying the result set in a sorted grid, wrapped cylindrically around the user. In addition to a standard video player in VR, vitrivr-VR makes use of virtual space by providing a video segment summary display resembling a file cabinet drawer, which allows quickly riffling through a temporally ordered box containing the segments of a video.

4 Results of VBS 2021

In this section, we present the results of the competition, and provide an analysis of submissions and retrieval models. Additionally, we are able to analyze AVS data for the first time since 2018, and provide insights into both system performance and task properties. The availability of AVS data is one of the reasons we focus on AVS tasks; KIS tasks are also analyzed in depth in previous papers [37, 54]. We exclude one participating system altogether [49], as the team experienced technical difficulties on both days of the evaluation. Analysis regarding result logs is only available for a subset of teams, since not all teams logged their results in the common format.

4.1 Overall results

Table 4 shows an overview of all teams and the scores achieved per category, highlighting the top two scores per category. Scores are normalized per category such that the best team receives 100 points in said category. Categories are scored independently; the overall score is calculated by summing up the individual categories.
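The normalization described above amounts to the following small Python sketch. The team and category names in the example are hypothetical, and the handling of a category in which no team scored is an assumption.

```python
from typing import Dict


def normalize_scores(raw: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """Scale scores per category (category -> team -> raw score) so that the best team gets 100."""
    normalized = {}
    for category, scores in raw.items():
        best = max(scores.values(), default=0.0)
        normalized[category] = {
            # Assumption: if no team scored in a category, everyone gets 0.
            team: (100.0 * score / best if best > 0 else 0.0)
            for team, score in scores.items()
        }
    return normalized


def overall_scores(normalized: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Sum the normalized category scores per team."""
    totals: Dict[str, float] = {}
    for scores in normalized.values():
        for team, score in scores.items():
            totals[team] = totals.get(team, 0.0) + score
    return totals


if __name__ == "__main__":
    raw = {
        "KIS-Visual": {"teamA": 800.0, "teamB": 400.0},
        "AVS": {"teamA": 300.0, "teamB": 600.0},
    }
    print(overall_scores(normalize_scores(raw)))
```

Because each category is scaled independently, a team that dominates a single category cannot gain more than 100 points from it.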

When looking at Table 4, the highest scoring team is different for every task category, and no team is among the two top-scoring systems of more than one category. This is an indication of well-designed tasks and meaningful differences between the top systems and their operators.

Comparing the scores of the two VR systems, EOLAS and vitrivr-VR, shows that while VR can be competitive, the approach used by EOLAS for the user interface was not very suitable for the competition format. EOLAS’s interface focused on exploring in a 3D environment involving VR locomotion, which caused difficulties in finding a sufficient number of shots in a limited time.

Most teams were able to solve a substantial number of Visual and Textual KIS tasks, as shown in Table 5. The easiest task was solved by 15 out of 16 teams, and the most challenging one was not solved by any team. Across all tasks, the mean number of teams which solved a task was approximately 9.4.

4.2 Result log analysis

In addition to the submissions, most teams logged the result sets of their queries, either storing the logs locally or sending them directly to the competition server. In this section, we take a closer look at the logs, giving insight into the retrieval models and the differences in systems and operators. One thing to note is that different teams have different units of retrieval, e.g., SOMHunter logs frames, while vitrivr and VIRET log shots and intervals. To check if an item in the result set would have been correct, we compare the logged unit of retrieval to the ground truth. Although different units of retrieval may affect chances of a correct segment hit (i.e., a chance for interval overlap), we did not discriminate between the units of retrieval in the following analysis involving ranks of correct items.
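The comparison of a logged unit of retrieval with the ground truth can be sketched as a simple interval-overlap test. The data layout below (a frame logged as an interval with equal start and end, and a single target interval per task) is an assumption for illustration and does not reflect the actual log format.

```python
from typing import List, Optional, Tuple

Segment = Tuple[str, float, float]  # (video id, start in s, end in s); a frame has start == end


def is_hit(logged: Segment, target: Segment) -> bool:
    """A logged item counts as correct if it lies in the target video and overlaps the target interval."""
    video, start, end = logged
    t_video, t_start, t_end = target
    return video == t_video and start <= t_end and t_start <= end


def first_correct_rank(result_list: List[Segment], target: Segment) -> Optional[int]:
    """Return the 1-based rank of the first correct item in a ranked result list, or None."""
    for rank, item in enumerate(result_list, start=1):
        if is_hit(item, target):
            return rank
    return None


if __name__ == "__main__":
    target = ("v7", 100.0, 120.0)
    results = [("v3", 10.0, 15.0), ("v7", 50.0, 60.0), ("v7", 118.0, 130.0), ("v7", 105.0, 105.0)]
    print(first_correct_rank(results, target))
```

The sketch also makes the caveat above concrete: a long logged interval has a higher chance of overlapping the target than a single frame.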

4.2.1 Browsing efficiency

One interesting question is how long it took operators to find an item once it was present in a result set. This is both dependent on the system, i.e., how good the browsing capabilities of a system are, and on the operator, since some operators prefer to browse a result set exhaustively, while others prefer to reformulate and execute new queries.

Figure 3 shows the elapsed time between the first and last appearance of the correct shot in the result set and submission time of the correct item. Note that it is possible that between one user receiving the correct result from their query and submitting it, the other user formulated a query which contained the correct result, and hence the time delta between last appearance and submission may not reflect the browsing time accurately. It is also possible that a correct item was found through the video and not the shot.
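The two time deltas can be computed from the logs roughly as in the following Python sketch, which assumes that for each task and team the timestamps at which the correct item appeared in any logged result set are already known. The function name and input format are hypothetical.

```python
from typing import List, Optional, Tuple


def browsing_times(appearances: List[float], submission_time: float) -> Optional[Tuple[float, float]]:
    """Return (time since first appearance, time since last appearance) of the
    correct item, both measured up to the correct submission.

    appearances: timestamps in seconds at which the correct item was present
    in a logged result set. Appearances after the submission are ignored.
    """
    before = [t for t in appearances if t <= submission_time]
    if not before:
        return None  # the item was never logged before it was submitted
    return submission_time - min(before), submission_time - max(before)


if __name__ == "__main__":
    print(browsing_times([12.0, 40.0, 95.0, 130.0], submission_time=100.0))
```

A `None` result corresponds to submissions where the item was found by other means, for example through a video-level hit.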

To visualize the dependency between the rank of a found item and the time until correct submission, we show in Fig. 4 each correct submission as a datapoint with the rank it was found at first, and the time it took until correct submission. Overall, the figure shows that, as expected, the time between the first appearance and a correct submission increases with the rank at which the item first appeared. However, the figure also demonstrates that variance increases as well, indicating that operator differences are indeed occurring: while some operators might have browsed for a long time, others reformulated their query or found the correct item through the correct video.

We have conducted several other analyses, such as only considering items below a certain cutoff (which could be considered browsable), or considering the appearance of the best rank. These analyses have not produced new insights, and hence are omitted from the paper. The absence of standardized interaction logging which could indicate scrolling and currently visible results makes this analysis challenging.

4.2.2 Comparison of retrieval models

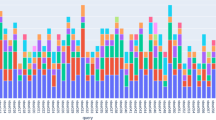

For the comparison of retrieval models, Fig. 5 shows where the best achieved rank of a correct item before submission was per system across tasks.

Figure 5 shows that the retrieval model and search strategy strengths of the top teams are somewhat matched, with VIRET and SOMHunter finding the desired items in the first ten results more consistently. vitrivr, VIREO and CollageHunter have a lower sample size, which is explained by the fact that they were often able to find the correct item through a video-level hit and subsequent browsing (see Fig. 6). Also of note is that in a previous evaluation of vitrivr and SOMHunter, results clearly showed that SOMHunter had a better retrieval approach [53]. In the meantime, vitrivr added a joint embedding and improved its temporal scoring, allowing it to be competitive again in the retrieval model and having to rely less on browsing. vitrivr-VR also used the joint embedding but lacked the ability to specify temporal context, which explains the lower ranks compared to vitrivr, even though both systems had access to the same features. This indicates that having an easy way for users to specify temporal context in a query is essential for successful interactive video retrieval.

4.2.3 Analysis of submissions

A more comprehensive overview of the result logs is shown in Fig. 6, which shows best logged rank of the correct shot and video, the time it took for the item to appear at the given rank and the time of the correct submission. It also shows browsing misses, meaning the correct item (cell colored in red) or video (cell colored in orange) was present in the result set, but not submitted. Note that the logs for some teams, such as VideoGraph, can be incomplete due to technical difficulties.

Fig. 6 Green cells show the best achieved logged rank \(r_s\) between 1 and 300 in time t of a correct scene frame in a task. The best rank \(r_v\) of a correct video frame from the same result log is included, while \(t_{cs}\) presents the time of the tool’s correct submission. Red values are for the best detected ranks of searched video frames if searched scene frames were not present in the logged result sets for a task. Red or orange cells show a browsing failure where the frame or video was retrieved but the team did not submit a correct result.

The data show that a substantial number of teams had video-level browsing misses, meaning the correct video was found, but not the correct segment. Shot-level misses were rarer, but still happened, e.g., for vitrivr and SOMHunter in three tasks, with the rank of the correct shot ranging from 1 (t-7, CollageHunter) to 9704 (t-7, vitrivr-VR). While missing the correct item at rank 1 is a browsing-level miss which can be attributed to the operator (and also the result visualization component), when an item is missed at higher ranks it cannot be determined, with the current logging specification, whether the operator browsed that far or whether they simply formulated another query after looking at a subset of high-ranked results.

Additionally, many correct submissions originated from a video-level hit, with operators subsequently exploring the video through neighboring frames, a video overview or with a video player. These cases are indicated by red numbers in Fig. 6 and show that the ability to inspect a video is key to good performance in KIS tasks.

4.3 AVS analysis

In the 2019 and 2020 iterations of VBS, there was no analysis of AVS tasks due to technical issues [37, 63]. This year, the new evaluation server [55] improved testing by teams before the competition, which helped improve data quality. In this section, we are therefore able to present insight into questions surrounding AVS tasks.

Both retrieval and judgment of AVS are done interactively at VBS. So far, this has resulted every year in different understandings, both between different judges and between judges and teams. Additionally, some tools, such as VISIONE, had issues with result submission, partially due to network overload and partially due to suboptimal implementations. We believe these issues did not significantly affect the results discussed in this section, which are presented in an aggregate form, as the number of submissions that were not submitted successfully by the affected teams is only a small fraction of the total number of submissions made by all teams.

Table 6 shows all AVS tasks and their description in the order in which they were solved in the competition. All plots going forward include the task identifiers.

4.3.1 Judgment and submissions during tasks

One area of interest is how the assessed correctness of submissions changes during the time allocated to a task. The hypothesis is that at the start of a task, there is some ambiguity between the task description and the judge and operator understanding of the description, which is resolved as teams see thumbnails of submissions judged as correct or incorrect.

In Fig. 7, we show the ratio of submissions judged as correct over time. What stands out is that there were two tasks with a large degree of difference in task understanding, a-3 (person skiing with their own skis in the picture) and a-11 (person skiing, camera looking into the sun). For a-3, the difference (the task intention was for point-of-view shots) was clarified with a comment from a judge; however, the ratio remains low since not all teams followed the discussion. For a-11 the different understandings persisted. Overall, no clear trend emerges. Some tasks exhibit consistently high agreement (e.g., a-6, looking for fish underwater and a-5, person with a flag), while most tasks have a high variance during the task.
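A ratio of this kind can be computed by binning submissions over the task duration, as in the following Python sketch. The bin width `bin_seconds` and the input format are assumptions for illustration and are not taken from the analysis behind Fig. 7.

```python
import math
from typing import Iterable, List, Optional, Tuple


def correct_ratio_over_time(
    submissions: Iterable[Tuple[float, bool]],  # (seconds since task start, judged correct)
    task_duration: float,
    bin_seconds: float = 60.0,                  # hypothetical bin width
) -> List[Optional[float]]:
    """Return, per time bin, the share of submissions judged correct (None for empty bins)."""
    n_bins = math.ceil(task_duration / bin_seconds)
    counts = [[0, 0] for _ in range(n_bins)]  # [correct, total] per bin
    for t, correct in submissions:
        idx = min(int(t // bin_seconds), n_bins - 1)  # clamp submissions at the time limit
        counts[idx][0] += int(correct)
        counts[idx][1] += 1
    return [c / n if n else None for c, n in counts]


if __name__ == "__main__":
    subs = [(5.0, True), (30.0, False), (70.0, True), (110.0, True), (299.0, False)]
    print(correct_ratio_over_time(subs, task_duration=300.0))
```

Returning `None` for empty bins avoids suggesting a ratio of zero where no submission was judged at all.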

In Fig. 8, we show how the number of submissions varies over time. It seems that some time is needed until a query suitable for the task at hand is found, and afterward the rate of submissions stays relatively steady over time. This raises the question of at which point in time there would be a drop-off in the number of submissions if the AVS tasks had a longer duration.

Figure 9 shows that in addition to the rate of submissions remaining steady, the number of unique correct videos that are found also continues to increase toward the end of the task, showing that even at the end of the time limit, new videos matching the description are still being found. This indicates that given a longer task duration, the number of unique correct submissions would probably still increase, as long as there exist relevant segments in the collection.

4.3.2 Differences between AVS tasks

Another interesting question is what differences, if any, there are between AVS tasks. For some tasks, looking at a thumbnail is sufficient (e.g., underwater shot of fish), while for tasks describing an action, the video needs to be inspected (e.g., shots of two women walking and talking). Additionally, some tasks might have a very wide range of acceptable results, while others are quite narrow.

Figure 10, with all submissions, and Fig. 11, with only correct submissions, show the difference between the AVS tasks in terms of selected metrics: the number of overall submissions (shown as bars), time until first (correct) submission, time to first (correct) submission by half the teams and time until first ten (correct) submissions by half the teams. The y-axis indicating the time, on the right, has been inverted, so that higher y-axis values indicate that a task is easier for all metrics. On the x-axis, tasks are ordered by their appearance in the competition.

Looking at these three graphs, the data indicate that there are relevant differences between the AVS tasks. For example, looking at a-1, it took almost five minutes for half of the teams to find 10 submissions which were judged as correct.

Additionally, we are interested in which kinds of strategies are rewarded by the current evaluation metrics. In Fig. 12, we show the performance of each team per task as a colored dot, with the color indicating the score in that task. The figure shows that the current scoring scheme seems to reward recall, in that teams which have a high share of overall submissions get a higher score, even at lower precision. The ability of a user interface to quickly submit many solutions thus seemed essential for success in AVS this year.

4.3.3 Judge and team agreement analysis

In Table 7, we show the number of submissions where several teams agreed or disagreed with the judgment for a shot. Each column represents the number of teams with the same opinion about a particular submission. The first column shows that there are many unique submissions by teams and that there are frequent one-to-one disagreements. Although teams might prefer risky submissions, there might also be uncertain cases depending on the interpretation of the task text. The second column shows that in 80% of tasks, two teams agree with a judge more often than two teams disagree with a judge.

Looking at extreme cases, in tasks a-2 and a-10 there was a correct submission provided by as many as eleven teams. This indicates that there might be a clear match between an AVS task text description and the visual content of an easy-to-find shot. This high level of agreement can be observed among multiple other tasks. In comparison, task a-3 represents an example of frequent disagreement between teams and the judge’s verdict. Some teams misunderstood task a-3 and did not realize that a first-person view of skis was required. In order to prevent this issue in future evaluations, there are many options [35]. The statistics presented in Table 7 could also be automatically reported by the evaluation server to indicate problems.
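Agreement statistics of this kind could be derived from the submission log along the following lines. This is a sketch under the assumptions that every submitting team implicitly considers its shot correct and that each shot receives a single verdict; the record layout and function name are hypothetical.

```python
from collections import Counter, defaultdict


def agreement_table(submissions):
    """submissions: iterable of (team, shot_id, judged_correct) for one task.

    Returns two counters keyed by the number of teams that submitted a shot:
    shots where that many teams agreed with the judge (judged correct) and
    shots where that many teams disagreed with the judge (judged wrong).
    """
    teams_per_shot = defaultdict(set)
    verdict = {}
    for team, shot, ok in submissions:
        teams_per_shot[shot].add(team)
        verdict[shot] = ok  # assumption: one verdict per shot

    agree, disagree = Counter(), Counter()
    for shot, teams in teams_per_shot.items():
        target = agree if verdict[shot] else disagree
        target[len(teams)] += 1
    return agree, disagree
```

A high count in `disagree` for large numbers of teams would be one possible signal that a task description is interpreted differently by teams and judges.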

4.3.4 Submission similarity analysis

After having analyzed the judge-team agreement, this section further investigates the inter-judge agreement by identifying similar images that are judged differently. Figure 13 shows a selection of keyframe pairs exhibiting high similarity to each other, while judges disagree on their correctness. The similarities are determined by computing the Euclidean distance between the last fully connected layer vectors of Inception Net v3 [69]. By analyzing the distances per task, we find that all tasks contain questionable judgments. Similar to the findings above, task a-3 is interpreted very diversely, i.e., sometimes only a first-person view of ski tips is accepted and other times also third-person views of skiers on a slope. Such disagreements can be observed for other tasks as well; oftentimes different judgments are given on scenes that are merely a few shots apart (cf. Figs. 13a–d). In other cases, the submitted scenes are not related but, nevertheless, the judges’ agreement on content correctness diverges (cf. Figs. 13e–f). Overall, when considering the lower 20% of all differently judged image distances per task, we identify an average of 109 similar items (excluding the outlier task a-3, which has 3508 such items). Although not all similar yet differently scored images necessarily include misjudgments, it appears that the pre-task judge briefing was not an effective way to avoid them. Some differences in task understanding seem to become apparent only when seeing actual examples arriving. Thus, a trial run with judges or using multiple judgments with voting could be better alternatives.
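The selection of similar but differently judged keyframes can be sketched as follows. The code assumes that feature vectors have already been extracted (feature extraction itself is not shown) and interprets "the lower 20%" as the 0.2 quantile of the distances between differently judged pairs within a task; the function name and parameters are illustrative assumptions, not the authors' analysis code.

```python
import numpy as np


def conflicting_similar_pairs(features, verdicts, quantile=0.2):
    """Return (i, j, distance) for differently judged pairs with small distance.

    features: array-like of shape (n_items, dim), one vector per keyframe.
    verdicts: array-like of n_items booleans (judged correct or not).
    """
    features = np.asarray(features, dtype=float)
    verdicts = np.asarray(verdicts, dtype=bool)

    # All index pairs i < j, restricted to pairs with differing verdicts.
    i, j = np.triu_indices(len(features), k=1)
    differ = verdicts[i] != verdicts[j]
    i, j = i[differ], j[differ]
    if len(i) == 0:
        return []

    # Euclidean distance between the feature vectors of each pair.
    dist = np.linalg.norm(features[i] - features[j], axis=1)
    keep = dist <= np.quantile(dist, quantile)
    order = np.argsort(dist[keep], kind="stable")
    return [(int(a), int(b), float(d))
            for a, b, d in zip(i[keep][order], j[keep][order], dist[keep][order])]
```

The returned pairs are candidates for manual inspection only, since a small feature distance does not by itself imply a misjudgment.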

5 Conclusion and outlook

Ten years after the first Video Browser Showdown, the recent iteration had the largest number of participating systems so far. Despite organizational challenges with the fully remote setting, this iteration was very successful. For future evaluation campaigns, we see multiple challenges, which we will outline here.

On-Site versus remote VBS event. While the remote setting has many advantages, such as lower barrier to participation and cost, the conference setting had multiple key advantages which cannot be fully replicated in the remote setting, such as the ability of VBS to double as an informal demo session, where participants can try out other systems and ask questions. Networking and collaboration effects were diminished in comparison to previous years. Also, the novice session—which is a unique and important part of VBS—could not take place in the virtual setting.

Barrier to participation. While the barrier to participation in VBS remains somewhat high, this year had the highest number of participants so far, indicating that efforts to lower the barrier helped. Several pre-extracted features are available for V3C1 [6, 58] and V3C2 [59], enabling teams to focus on particular aspects. For completely new participants, it might be beneficial to further encourage authors to open source their systems. Currently, vitrivr is fully open-source (footnote 7), and SOMHunter has an open-source release (footnote 8), but full reproducibility of the competition would require all used systems to be open-source.

Result robustness. As demonstrated in [54], the difference in performance between users of the same system is rather large, and increasing the number of users per system makes the results more statistically significant. In the current VBS format, two users operate the same system as a team. A larger number of users who solve tasks independently would increase confidence in the evaluation results and enable interesting analysis questions. This would, however, make it more difficult to consider systems which use explicitly collaborative retrieval strategies.

The optimal task format is still an open research question for both AVS and KIS tasks, and there are many options for future tasks [35]. While this was the fifth year in a row that included AVS tasks, this time they have caused extended discussions amongst teams and organizers. Although the queries for these tasks have been carefully selected and judges briefed in advance, we still encountered several difficulties that could be discussed in a dedicated paper and should only be briefly mentioned here:

Disagreement: As Table 7 has shown, there is a substantial number of submissions where multiple teams perceived a segment as correct, but the judge disagreed. In Sect. 4.3.4 we also showed that semantically identical shots are sometimes judged differently by different judges, further underlining the challenge of AVS task evaluation. In future iterations of VBS, we might consider a voting scheme to rectify this issue.

Evaluation Limits: Our analysis indicates that with a longer task duration, more unique items could be found. Due to the large number of submissions per task, however, extending the time would require more judges.

Resource Limits: While in previous years the old server software itself caused delays in submission processing, this year we faced severe network issues. Due to the fully virtual session and the high number of judges and participants—who were not only submitting many results, but also following the competition status via the server’s web interface—the LAN and WAN limits (10 Gbps) of the server’s location (Klagenfurt University) were reached. This unfortunately resulted in laggy behavior with packet losses and re-transmissions, slowing down the entire submission process.

Synchronous Submissions: In addition to the problems with the network load, some teams implemented their system such that submissions had to be confirmed by the server, which seriously limited their submission capacity due to the high network delay.

VBS 2021 successfully demonstrated that a fully virtual setting is feasible. In particular, for KIS tasks, the evaluation procedure went smoothly and almost all competing teams were able to solve some tasks, with most teams being able to solve more than 50% of KIS tasks.

There is still a large difference between the performance of the top teams, indicating no need to extensively modify task difficulty. With the move toward a larger dataset next year, we expect strong retrieval models to become more important, as approaches which rely on browsing must deal with twice as much data. At the same time, the evaluation procedure itself will become more challenging too, especially for AVS tasks which might have even more results (and need more judges) due to the larger dataset.

Even though VBS has been running for 10 years already, interactive video retrieval remains a hot topic with many challenges, which cannot be easily solved with only improved deep learning models. A strong focus on the retrieval efficiency, as well as the user interface, will be key to further push large-scale interactive video search.

Availability of data and materials

The raw data used for the analyses presented in this paper are available via https://zenodo.org/record/5566853.

Code availability

Where evaluated systems are open source, links are given in the paper references from Table 2. As mentioned, the source code for the evaluation server is provided on GitHub: https://github.com/dres-dev/DRES. The full analysis code used to produce all graphs and tables is also available on GitHub: https://github.com/vGsteiger/VBS-Analysis.

Notes

KIS tasks have a correct video sequence; a submission of any frame within the correct sequence counts as correct.

“Since VBS 2018, ranges are fixed static non-overlapping segments of 180s duration” [62]; in 2021, the ranges were dynamic and based on shot segmentation.

DRES v0.8.1 was used, see: https://github.com/dres-dev/DRES/releases/tag/0.8.1.

VBS has few rules, the most relevant being that screenshots are prohibited for Visual KIS tasks. Additionally, all server logs are made publicly available at https://zenodo.org/record/5566853.

References

Amato G, Bolettieri P, Carrara F, Debole F, Falchi F, Gennaro C, Vadicamo L, Vairo C (2021) The VISIONE video search system: exploiting off-the-shelf text search engines for large-scale video retrieval. J Imag 7(5). https://doi.org/10.3390/jimaging7050076

Amato G, Bolettieri P, Falchi F, Gennaro C, Messina N, Vadicamo L, Vairo C (2021) VISIONE at video browser showdown 2021. In: International conference on multimedia modeling. Springer, pp 473–478. https://doi.org/10.1007/978-3-030-67835-7_47

Amato G, Falchi F, Gennaro C, Rabitti F (2017) Searching and annotating 100M images with yfcc100m-hnfc6 and mi-file. In: Workshop on content-based multimedia indexing. ACM, pp 26:1–26:4. https://doi.org/10.1145/3095713.3095740

Andreadis S, Moumtzidou A, Gkountakos K, Pantelidis N, Apostolidis K, Galanopoulos D, Gialampoukidis I, Vrochidis S, Mezaris V, Kompatsiaris I (2021) VERGE in vbs 2021. In: International conference on multimedia modeling. Springer, pp 398–404. https://doi.org/10.1007/978-3-030-67835-7_35

Benavente R, Vanrell M, Baldrich R (2008) Parametric fuzzy sets for automatic color naming. JOSA A 25(10):2582–2593. https://doi.org/10.1364/JOSAA.25.002582

Berns F, Rossetto L, Schoeffmann K, Beecks C, Awad G (2019) V3C1 dataset: an evaluation of content characteristics. In: International conference on multimedia retrieval. ACM, pp 334–338. https://doi.org/10.1145/3323873.3325051

Bochkovskiy A, Wang CY, Liao HYM (2020) Yolov4: optimal speed and accuracy of object detection. CoRR. arXiv:2004.10934

Chen K, Pang J, Wang J, Xiong Y, Li X, Sun S, Feng W, Liu Z, Shi J, Ouyang W, Loy CC, Lin D (2019) Hybrid task cascade for instance segmentation. In: Conference on computer vision and pattern recognition, pp 4969–4978. https://doi.org/10.1109/CVPR.2019.00511

Cox I, Miller M, Omohundro S, Yianilos P (1996) Pichunter: Bayesian relevance feedback for image retrieval. In: International conference on pattern recognition, vol 3. IEEE, pp 361–369. https://doi.org/10.1109/ICPR.1996.546971

Deng D, Liu H, Li X, Cai D (2018) Pixellink: detecting scene text via instance segmentation. In: Proceedings of the thirty-second AAAI conference on artificial intelligence, (AAAI-18), the 30th innovative applications of artificial intelligence (IAAI-18), and the 8th AAAI symposium on educational advances in artificial intelligence (EAAI-18). AAAI, pp 6773–6780

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: Conference on computer vision and pattern recognition. IEEE, pp 248–255. https://doi.org/10.1109/CVPR.2009.5206848

Galanopoulos D, Mezaris V (2020) Attention mechanisms, signal encodings and fusion strategies for improved ad-hoc video search with dual encoding networks. In: International conference on multimedia retrieval. ACM, pp 336–340. https://doi.org/10.1145/3372278.3390737

Gurrin C, Jónsson BÞ, Schöffmann K, Dang-Nguyen D, Lokoc J, Tran M, Hürst W, Rossetto L, Healy G (2021) Introduction to the fourth annual lifelog search challenge, lsc’21. In: International conference on multimedia retrieval. ACM, pp 690–691. https://doi.org/10.1145/3460426.3470945

Hara K, Kataoka H, Satoh Y (2018) Can spatiotemporal 3d cnns retrace the history of 2d cnns and imagenet? https://doi.org/10.1109/CVPR.2018.00685

Gasser R, Rossetto L, Heller S, Schuldt H (2020) Cottontail DB: an open source database system for multimedia retrieval and analysis. In: The 28th ACM international conference on multimedia, virtual event. Seattle, WA, pp 4465–4468. https://doi.org/10.1145/3394171.3414538

Heller S, Gasser R, Illi C, Pasquinelli M, Sauter L, Spiess F, Schuldt H (2021) Towards explainable interactive multi-modal video retrieval with vitrivr. In: International conference on multimedia modeling. Springer, pp 435–440. https://doi.org/10.1007/978-3-030-67835-7_41

Heller S, Sauter L, Schuldt H, Rossetto L (2020) Multi-stage queries and temporal scoring in vitrivr. In: International conference on multimedia & expo workshops (ICMEW). IEEE, pp 1–5. https://doi.org/10.1109/ICMEW46912.2020.9105954

Hezel N, Schall K, Jung K, Barthel KU (2021) Video search with sub-image keyword transfer using existing image archives. In: International conference on multimedia modeling. Springer, pp 484–489. https://doi.org/10.1007/978-3-030-67835-7_49

Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, Andreetto M, Adam H (2017) Mobilenets: Efficient convolutional neural networks for mobile vision applications. CoRR. arXiv:1704.04861

Jégou H, Douze M, Schmid C (2010) Product quantization for nearest neighbor search. IEEE Trans Pattern Anal Mach Intell 33(1):117–128. https://doi.org/10.1109/TPAMI.2010.57

Jiang Y, Gong X, Liu D, Cheng Y, Fang C, Shen X, Yang J, Zhou P, Wang Z (2021) Enlightengan: deep light enhancement without paired supervision. IEEE Trans Image Process 30:2340–2349. https://doi.org/10.1109/TIP.2021.3051462

Karisch C, Leibetseder A, Schoeffmann K (2021) Noshot video browser at vbs2021. In: International conference on multimedia modeling. Springer, pp 405–409. https://doi.org/10.1007/978-3-030-67835-7_36

Kay W, Carreira J, Simonyan K, Zhang B, Hillier C, Vijayanarasimhan S, Viola F, Green T, Back T, Natsev P, Suleyman M, Zisserman A (2017) The kinetics human action video dataset. CoRR. arXiv:1705.06950

Khan OS, Jónsson BÞ, Larsen M, Poulsen L, Koelma DC, Rudinac S, Worring M, Zahálka J (2021) Exquisitor at the video browser showdown 2021: relationships between semantic classifiers. In: International conference on multimedia modeling. Springer, pp 410–416. https://doi.org/10.1007/978-3-030-67835-7_37

Khan OS, Jónsson BÞ, Rudinac S, Zahálka J, Ragnarsdóttir H, Þorleiksdóttir Þ, Guðmundsson GÞ, Amsaleg L, Worring M (2020) Interactive learning for multimedia at large. In: Proceedings of the European conference on information retrieval. Springer, pp 410–416. https://doi.org/10.1007/978-3-030-45439-5_33

Kratochvíl M, Veselý P, Mejzlík F, Lokoč J (2020) Som-hunter: video browsing with relevance-to-som feedback loop. In: International conference on multimedia modeling. Springer, pp 790–795. https://doi.org/10.1007/978-3-030-37734-2_71

Kuznetsova A, Rom H, Alldrin N, Uijlings JRR, Krasin I, Pont-Tuset J, Kamali S, Popov S, Malloci M, Duerig T, Ferrari V (2018) The open images dataset V4. Int J Comput Vis 128(7):1956–1981. https://doi.org/10.1007/s11263-020-01316-z

Lee Y, Choi H, Park S, Ro YM (2021) IVIST: interactive video search tool in VBS 2021. In: International conference on multimedia modeling. Springer, pp 423–428. https://doi.org/10.1007/978-3-030-67835-7_39

Leibetseder A, Schoeffmann K (2021) Less is more—divexplore 5.0 at VBS 2021. In: International conference on multimedia modeling. Springer, pp 455–460. https://doi.org/10.1007/978-3-030-67835-7_44

Leibetseder A, Schoeffmann K (2021) lifexplore at the lifelog search challenge 2021. In: Proceedings of the fourth annual workshop on lifelog search challenge. ACM, pp 23–28. https://doi.org/10.1145/3463948.3469060

Li X, Xu C, Yang G, Chen Z, Dong J (2019) W2VV++: fully deep learning for ad-hoc video search. In: International conference on multimedia. ACM, pp 1786–1794. https://doi.org/10.1145/3343031.3350906

Lin TY, Maire M, Belongie S, Hays J, Perona P, Ramanan D, Dollár P, Zitnick CL (2014) Microsoft COCO: common objects in context. In: Computer vision—ECCV. Springer, pp 740–755. https://doi.org/10.1007/978-3-319-10602-1_48

Lokoč J, Bátoryová J, Smrž D, Dobranský M (2021) Video search with collage queries. In: International conference on multimedia modeling. Springer, pp 429–434. https://doi.org/10.1007/978-3-030-67835-7_40

Lokoč J, Souček T, Veselý P, Mejzlík F, Ji J, Xu C, Li X (2020) A W2VV++ case study with automated and interactive text-to-video retrieval. In: International conference on multimedia. ACM. https://doi.org/10.1145/3394171.3414002

Lokoč J, Bailer W, Barthel KU, Gurrin C, Heller S, Jónsson BÞ, Peška L, Rossetto L, Schoeffmann K, Vadicamo L, Vrochidis S, Wu J (2022) A task category space for user-centric comparative multimedia search evaluations. In: International conference on multimedia modeling

Lokoč J, Kovalčík G, Souček T, Moravec J, Čech P (2019) A framework for effective known-item search in video. In: International conference on multimedia. ACM, pp 1777–1785. https://doi.org/10.1145/3343031.3351046

Lokoč J, Veselý P, Mejzlík F, Kovalčík G, Souček T, Rossetto L, Schoeffmann K, Bailer W, Gurrin C, Sauter L, Song J, Vrochidis S, Wu J, Jónsson BÞ (2021) Is the reign of interactive search eternal? findings from the video browser showdown 2020. ACM Trans Multim Comput Commun Appl 17(3). https://doi.org/10.1145/3445031

Markatopoulou F, Moumtzidou A, Galanopoulos D, Avgerinakis K, Andreadis S, Gialampoukidis I, Tachos S, Vrochidis S, Mezaris V, Kompatsiaris I, Patras I (2017) ITI-CERTH participation in TRECVID 2017. In: TREC video retrieval evaluation. NIST. https://doi.org/10.5281/zenodo.1183440

Messina N, Falchi F, Esuli A, Amato G (2020) Transformer reasoning network for image-text matching and retrieval. In: International conference on pattern recognition. IEEE. https://doi.org/10.1109/ICPR48806.2021.9413172

Mettes P, Koelma DC, Snoek CGM (2020) Shuffled imagenet banks for video event detection and search. ACM Trans Multim Comput Commun Appl 16(2):44:1–44:21. https://doi.org/10.1145/3377875

Monfort M, Vondrick C, Oliva A, Andonian A, Zhou B, Ramakrishnan K, Bargal SA, Yan T, Brown LM, Fan Q, Gutfreund D (2020) Moments in time dataset: one million videos for event understanding. IEEE Trans Pattern Anal Mach Intell 42(2):502–508. https://doi.org/10.1109/TPAMI.2019.2901464

Nguyen PA, Lu YJ, Zhang H, Ngo CW (2018) Enhanced VIREO KIS at VBS 2018. In: International conference on multimedia modeling. Springer, pp 407–412. https://doi.org/10.1007/978-3-319-73600-6_42

Nguyen PA, Wu J, Ngo CW, Francis D, Huet B (2020) VIREO @ video browser showdown 2020. In: International conference on multimedia modeling. Springer, pp 772–777. https://doi.org/10.1007/978-3-030-37734-2_68

Peška L, Kovalčík G, Souček T, Škrhák V, Lokoč J (2021) W2VV++ BERT model at VBS 2021. In: International conference on multimedia modeling. Springer, pp 467–472. https://doi.org/10.1007/978-3-030-67835-7_46

Pittaras N, Markatopoulou F, Mezaris V, Patras I (2017) Comparison of fine-tuning and extension strategies for deep convolutional neural networks. In: International conference on multimedia modeling. Springer, pp 102–114. https://doi.org/10.1007/978-3-319-51811-4_9

Radford A, Kim JW, Hallacy C, Ramesh A, Goh G, Agarwal S, Sastry G, Askell A, Mishkin P, Clark J, Krueger G, Sutskever I (2021) Learning transferable visual models from natural language supervision. CoRR. arXiv:2103.00020

Redmon J, Farhadi A (2017) YOLO9000: better, faster, stronger. In: Conference on computer vision and pattern recognition. IEEE, pp 7263–7271. https://doi.org/10.1109/CVPR.2017.690

Redmon J, Farhadi A (2018) YOLOv3 on the open images dataset. https://pjreddie.com/darknet/yolo/ (Online; Accessed 22 April 2021)

Ressmann A, Schoeffmann K (2021) Ivos-the itec interactive video object search system at vbs2021. In: International conference on multimedia modeling. Springer, pp 479–483

Revaud J, Almazan J, Rezende R, de Souza C (2019) Learning with average precision: training image retrieval with a listwise loss. In: International conference on computer vision. IEEE, pp 5106–5115. https://doi.org/10.1109/ICCV.2019.00521

Rossetto L (2018) Multi-modal video retrieval. Ph.D. thesis, University of Basel. https://doi.org/10.5451/unibas-006859522

Rossetto L, Baumgartner M, Ashena N, Ruosch F, Pernisch R, Heitz L, Bernstein A (2021) Videograph—towards using knowledge graphs for interactive video retrieval. In: International conference on multimedia modeling. Springer, pp 417–422. https://doi.org/10.1007/978-3-030-67835-7_38

Rossetto L, Gasser R, Heller S, Parian-Scherb M, Sauter L, Spiess F, Schuldt H, Peska L, Soucek T, Kratochvil M et al (2021) On the user-centric comparative remote evaluation of interactive video search systems. IEEE Multim. https://doi.org/10.1109/MMUL.2021.3066779

Rossetto L, Gasser R, Lokoč J, Bailer W, Schoeffmann K, Muenzer B, Souček T, Nguyen PA, Bolettieri P, Leibetseder A et al (2020) Interactive video retrieval in the age of deep learning-detailed evaluation of VBS 2019. IEEE Trans Multim 23:243–256. https://doi.org/10.1109/TMM.2020.2980944

Rossetto L, Gasser R, Sauter L, Bernstein A, Schuldt H (2021) A system for interactive multimedia retrieval evaluations. In: International conference on multimedia modeling. Springer. https://doi.org/10.1007/978-3-030-67835-7_33

Rossetto L, Gasser R, Schuldt H (2019) Query by semantic sketch. CoRR. arXiv:1909.12526

Rossetto L, Giangreco I, Schuldt H (2014) Cineast: A multi-feature sketch-based video retrieval engine. In: International symposium on multimedia. IEEE, pp 18–23. https://doi.org/10.1109/ISM.2014.38

Rossetto L, Parian MA, Gasser R, Giangreco I, Heller S, Schuldt H (2019) Deep learning-based concept detection in vitrivr. In: International conference on multimedia modeling. Springer, pp 616–621. https://doi.org/10.1007/978-3-030-05716-9_55

Rossetto L, Schoeffmann K, Bernstein A (2021) Insights on the V3C2 dataset. CoRR arXiv:2105.01475

Rossetto L, Schuldt H, Awad G, Butt AA (2019) V3C—a research video collection. In: International conference on multimedia modeling. Springer, pp 349–360. https://doi.org/10.1007/978-3-030-05710-7_29

Schall K, Barthel KU, Hezel N, Jung K (2019) Deep aggregation of regional convolutional activations for content based image retrieval. In: International workshop on multimedia signal processing. IEEE, pp 1–6. https://doi.org/10.1109/MMSP.2019.8901787

Schoeffmann K (2021) Vbs 2021 overview. https://www.youtube.com/watch?v=8Kg_5BQon9I&t=587s

Schoeffmann K (2019) Video browser showdown 2012-2019: a review. In: Conference on content-based multimedia indexing. IEEE, pp 1–4. https://doi.org/10.1109/CBMI.2019.8877397

Shi B, Yang M, Wang X, Lyu P, Yao C, Bai X (2019) ASTER: an attentional scene text recognizer with flexible rectification. IEEE Trans Pattern Anal Mach Intell 41(9):2035–2048. https://doi.org/10.1109/TPAMI.2018.2848939

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556

Smith R (2007) An overview of the tesseract OCR engine. In: International conference on document analysis and recognition. IEEE, pp 629–633. https://doi.org/10.1109/ICDAR.2007.4376991

Soucek T, Lokoc J (2020) Transnet V2: an effective deep network architecture for fast shot transition detection. CoRR arXiv:2008.04838

Spiess F, Gasser R, Heller S, Rossetto L, Sauter L, Schuldt H (2021) Competitive interactive video retrieval in virtual reality with vitrivr-vr. In: International conference on multimedia modeling. Springer, pp 441–447. https://doi.org/10.1007/978-3-030-67835-7_42

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: Conference on computer vision and pattern recognition. IEEE, pp 2818–2826. https://doi.org/10.1109/CVPR.2016.308

Tan WR, Chan CS, Aguirre HE, Tanaka K (2016) Ceci n’est pas une pipe: a deep convolutional network for fine-art paintings classification. In: International conference on image processing, pp 3703–3707. https://doi.org/10.1109/ICIP.2016.7533051

Tran L, Nguyen M, Nguyen T, Healy G, Caputo A, Nguyen BT, Gurrin C (2021) A VR interface for browsing visual spaces at VBS2021. In: International conference on multimedia modeling. Springer, pp 490–495. https://doi.org/10.1007/978-3-030-67835-7_50

Van De Weijer J, Schmid C, Verbeek J, Larlus D (2009) Learning color names for real-world applications. IEEE Trans Image Process 18(7):1512–1523. https://doi.org/10.1109/TIP.2009.2019809

Veselý P, Mejzlík F, Lokoč J (2021) Somhunter V2 at video browser showdown 2021. In: International conference on multimedia modeling. Springer, pp 461–466. https://doi.org/10.1007/978-3-030-67835-7_45

Wu J, Ngo CW (2020) Interpretable embedding for ad-hoc video search. In: International conference on multimedia. ACM, pp 3357–3366. https://doi.org/10.1145/3394171.3413916

Wu J, Nguyen PA, Ma Z, Ngo CW (2021) Sql-like interpretable interactive video search. In: International conference on multimedia modeling. Springer, pp 391–397. https://doi.org/10.1007/978-3-030-67835-7_34

Xie S, Girshick RB, Dollár P, Tu Z, He K (2017) Aggregated residual transformations for deep neural networks. In: Conference on computer vision and pattern recognition. IEEE, pp 5987–5995. https://doi.org/10.1109/CVPR.2017.634

Ye G, Li Y, Xu H, Liu D, Chang SF (2015) Eventnet: A large scale structured concept library for complex event detection in video. In: International conference on multimedia. ACM, pp 471–480. https://doi.org/10.1145/2733373.2806221

Zhang H, Wang Y, Dayoub F, Sunderhauf N (2021) Varifocalnet: an iou-aware dense object detector. In: Conference on computer vision and pattern recognition. IEEE, pp 8514–8523

Zhou B, Lapedriza A, Khosla A, Oliva A, Torralba A (2018) Places: a 10 million image database for scene recognition. IEEE Trans Pattern Anal Mach Intell 40(6):1452–1464. https://doi.org/10.1109/TPAMI.2017.2723009

Acknowledgements

This work was partially funded by the Czech Science Foundation (GAČR) under project 19-22071Y, by the EU’s Horizon 2020 research and innovation programme under the grant agreements no. 825079, MindSpaces, and no. 951911, AI4Media - A European Excellence Centre for Media, Society and Democracy, by the FWF Austrian Science Fund under grant P 32010-N38, by the Swiss National Science Foundation (project “Participatory Knowledge Practices in Analog and Digital Image Archives”, contract no. CRSII5_193788) and by Science Foundation Ireland under grant numbers 18/CRT/6223, 18/CRT/6224, SFI/12/RC/2289_2 and SFI/13/RC/2106.

Cite this article

Heller, S., Gsteiger, V., Bailer, W. et al. Interactive video retrieval evaluation at a distance: comparing sixteen interactive video search systems in a remote setting at the 10th Video Browser Showdown. Int J Multimed Info Retr 11, 1–18 (2022). https://doi.org/10.1007/s13735-021-00225-2

DOI: https://doi.org/10.1007/s13735-021-00225-2