Abstract

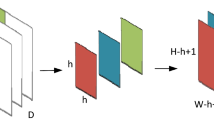

Pedestrian detection is one of the most fundamental challenges in computer vision, since it involves both classifying pedestrians and localizing them within an image. To achieve real-time pedestrian detection without sacrificing detection accuracy, an Optimized MobileNet + SSD network is proposed. Pedestrian detection has four important components: feature extraction, deformation handling, occlusion handling and classification. Existing methods design these components either independently or sequentially, and the interaction among them has not yet been explored. The proposed network lets the components work in coordination so that their strengths are enhanced, while using fewer parameters than recent detection architectures. We also propose a concatenation feature fusion module that adds contextual information to the Optimized MobileNet + SSD network to improve pedestrian detection accuracy. When tested on the Jetson Nano board, the proposed model achieved 80.4% average precision at a detection speed of 34.01 frames per second (fps), which exceeds the standard video frame rate of 30 fps. Experimental results show that the proposed network detects pedestrians better in low-light conditions and in darker images. Therefore, the proposed network is well suited for low-end edge devices.
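
The concatenation feature fusion module is only named here, so what follows is a generic sketch of the technique, not the authors' module. A deeper, coarser feature map is upsampled to the spatial size of a shallower map, and the two are stacked along the channel axis so that detections on the shallow map can draw on the deeper map's context. Assumptions: feature maps are plain nested lists in [channels][height][width] order, upsampling is nearest-neighbour, and the function names are hypothetical. Practical modules usually add learned layers (for example a deconvolution in place of nearest-neighbour upsampling, or a 1×1 convolution after concatenation), which this sketch omits.

```python
from typing import List

# Hypothetical layout: a feature map is stored as [channels][height][width].
FeatureMap = List[List[List[float]]]


def upsample_nearest(fmap: FeatureMap, factor: int) -> FeatureMap:
    """Nearest-neighbour upsampling: repeat each pixel `factor` times in both directions."""
    return [
        [
            [channel[i // factor][j // factor] for j in range(len(channel[0]) * factor)]
            for i in range(len(channel) * factor)
        ]
        for channel in fmap
    ]


def concat_fuse(shallow: FeatureMap, deep: FeatureMap) -> FeatureMap:
    """Fuse a deep (coarse, context-rich) map into a shallow (fine) map.

    The deep map is upsampled to the shallow map's spatial size, then the
    two maps are concatenated along the channel axis.
    """
    h_s, w_s = len(shallow[0]), len(shallow[0][0])
    h_d, w_d = len(deep[0]), len(deep[0][0])
    # Nearest-neighbour upsampling needs one integer factor shared by both axes.
    if h_s % h_d or w_s % w_d or h_s // h_d != w_s // w_d:
        raise ValueError("shallow size must be the same integer multiple of deep size on both axes")
    upsampled = upsample_nearest(deep, h_s // h_d)
    # Channel-axis concatenation: shallow channels first, then upsampled deep channels.
    return shallow + upsampled


if __name__ == "__main__":
    shallow = [[[0.0] * 4 for _ in range(4)] for _ in range(2)]  # 2 channels, 4x4
    deep = [[[1.0, 2.0], [3.0, 4.0]]]                            # 1 channel, 2x2
    fused = concat_fuse(shallow, deep)
    print(len(fused), len(fused[0]), len(fused[0][0]))          # 3 4 4
```

The fused map keeps the shallow map's resolution and gains the deep map's channels, which is what lets a detection head on the fine map see coarser context.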

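Two back-of-envelope checks help make the efficiency claims above concrete. MobileNet backbones replace standard convolutions with depthwise separable ones (a per-channel k×k depthwise convolution followed by a 1×1 pointwise convolution), which is a large part of why they need few parameters; and the measured 34.01 fps can be compared with the 30 fps video rate as a per-frame time budget. Assumptions: the layer size (3×3 kernel, 256 input and 256 output channels) is illustrative rather than the paper's configuration, biases are ignored, and the helper names are hypothetical.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k standard convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv (biases ignored)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixes channels
    return depthwise + pointwise


def frame_time_ms(fps: float) -> float:
    """Per-frame time budget in milliseconds at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Illustrative layer size (assumed, not taken from the paper).
    k, c_in, c_out = 3, 256, 256
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(std, sep, round(sep / std, 3))  # 589824 67840 0.115

    # Measured 34.01 fps vs. the 30 fps video rate quoted in the abstract.
    print(round(frame_time_ms(34.01), 2), round(frame_time_ms(30.0), 2))  # 29.4 33.33
```

For a k×k layer the weight ratio is 1/c_out + 1/k², about 0.115 here, or roughly 8.7 times fewer weights. Likewise, 34.01 fps leaves about 29.4 ms per frame against the 33.3 ms allowed at 30 fps, a margin of about 4 ms.
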
Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Murthy, C.B., Hashmi, M.F. & Keskar, A.G. Optimized MobileNet + SSD: a real-time pedestrian detection on a low-end edge device. Int J Multimed Info Retr 10, 171–184 (2021). https://doi.org/10.1007/s13735-021-00212-7

DOI: https://doi.org/10.1007/s13735-021-00212-7