Abstract

We provide a methodology by which an epidemiologist may arrive at an optimal design for a survey whose goal is to estimate the disease burden in a population. For serosurveys with a given budget of C rupees and a specified set of tests with known costs, sensitivities, and specificities, we show the existence of optimal designs in four different contexts, including the well-known c-optimal design. The usefulness of the results is illustrated via numerical examples. Our results are applicable to a wide range of epidemiological surveys under the assumption that the Fisher information matrix of the estimate satisfies a uniform positive-definiteness criterion.


1 Introduction

Total disease burden at a given time is the fraction of the population that was infected in the past or currently has an active infection. This key indicator provides a snapshot of the state of the pandemic at that time. An accurate estimate of total disease burden during a pandemic can enable evidence-based public health management, e.g., tracing, testing, treatment and triage, non-pharmaceutical interventions, and vaccination planning. One way to estimate the total disease burden and infer the state of the pandemic is to conduct surveys, which use multiple tests on participants to gather evidence of both past and active infection. For example, the recently concluded first round of the Karnataka state COVID-19 serosurvey (Babu et al. 2020) involved three kinds of tests on participants: the serological test on serum from venous blood for the detection of immunoglobulin G (IgG) antibodies, the rapid antigen detection test (RAT), and the quantitative reverse transcription polymerase chain reaction (RT-PCR) test for the detection of viral RNA. The tests provide different kinds of information, e.g., evidence of past infection or of active infection. They also vary remarkably in reliability: the RAT has a sensitivity (i.e., the probability of a positive test given that the patient has the disease) of about 50%, while the RT-PCR test has a sensitivity of 95% or higher (assuming no issues related to sample collection or transportation); there is variation, albeit less pronounced, in specificities (i.e., the probability of a negative test given that the patient is well). The test costs also vary, e.g., the RAT cost Rs. 450 while the RT-PCR test cost Rs. 1,600 on 10 December 2020. Conducting all three tests on each participant is ideal but can be costly.

Suppose J denotes the set of tests and \(T = 2^{J} \setminus \{\emptyset\}\) denotes the set of all nonempty subsets of tests. If a subset of tests t ∈ T is used on a participant, the cost \(c_t\) is the sum of the costs of the individual tests constituting t. A survey design is defined as \((w_t, t \in T)\), where \(w_t \ge 0\) is the number of participants who are administered the subset t of tests. We relax the integrality requirement on \(w_t\) and assume \(w_{t} \in \mathbb {R}_{+}\). The cost of this design is \({\sum }_{t \in T} w_{t} c_{t}\). The question of interest is:
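As a small illustration of this bookkeeping, the following Python sketch enumerates T for three tests and computes \(c_t\) and the total cost of a design. It assumes the per-test costs used in Section 5 (RAT Rs. 450, RT-PCR Rs. 1,600, antibody Rs. 300); the helper names are hypothetical, and test patterns are encoded as 0/1 tuples \((t_1, t_2, t_3)\) as in Section 2.

```python
from itertools import product

# Per-test costs in rupees, as used in Section 5: RAT (j=1), RT-PCR (j=2), antibody (j=3).
TEST_COSTS = (450, 1600, 300)


def all_patterns(n_tests=3):
    """T = 2^J minus the empty set: every nonempty subset of tests as a 0/1 tuple."""
    return [t for t in product((0, 1), repeat=n_tests) if any(t)]


def pattern_cost(t, costs=TEST_COSTS):
    """c_t: the sum of the costs of the individual tests switched on in pattern t."""
    return sum(c for tj, c in zip(t, costs) if tj)


def design_cost(design, costs=TEST_COSTS):
    """Total cost sum_t w_t c_t of a design given as {pattern: w_t}."""
    return sum(w * pattern_cost(t, costs) for t, w in design.items())


if __name__ == "__main__":
    for t in all_patterns():
        print(t, pattern_cost(t))
    # First row of Table 3: antibody only (0,0,1) and RAT + antibody (1,0,1).
    print(design_cost({(0, 0, 1): 521, (1, 0, 1): 13125}))
```

With these costs the first design in Table 3 (521 antibody-only participants and 13,125 RAT-plus-antibody participants) costs Rs. 1,00,00,050, i.e., the Rs. 1 crore budget up to rounding of the participant counts.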

Given a budget of C rupees, a set of tests, their costs, their sensitivities, specificities, and the goal of estimating the disease burden, what is a good survey design?

In this short note we address the above question for four specific optimisation criteria (c-optimal design, worst-case design, optimal design across strata, and optimal design with additional information such as observed symptoms). Motivated by the serosurvey discussed above, we propose a specific model in Section 2 and present our main results for each of the four criteria in Section 3. We discuss prior work and provide the context for our work in Section 4. Our results are not limited to the assumed setting and can be applied to more general epidemiological surveys as well; see Remark 1 in Section 4. We also present numerical examples illustrating the applicability of the theorems; see in particular Table 3 in Section 5. In Section 6, we provide the proofs of the main results.

2 Model

As discussed in the introduction, the primary motivation for this note was to find optimal designs when one needs to use multiple tests to estimate COVID-19 burden in a region. We shall formulate our results with the model used in the Karnataka serosurvey, see Babu et al. (2020). As we will see in Remark 1 our results have wider applicability to other models and surveys.

Each individual in the population is assumed to be in one of four states: (i) active infection but no antibodies, (ii) antibodies but no evidence of active infection, (iii) both antibodies and active infection, and (iv) neither active infection nor antibodies. The state of a survey participant is inferred from the outcomes of the RAT, the RT-PCR, and the antibody test, or a subset thereof. Because the tests have imperfect (but known) sensitivities and specificities, their outcomes are noisy observations of the (unknown) disease state of the individual.

The main focus is on estimating the total disease burden \(\wp := p_1 + p_2 + p_3\), where the \(p_i\) are the probabilities of the various states and are unknown to the epidemiologist (see Table 1). We assume the parameter space \(\mathcal {P}\) to be a convex and compact subset of \([0,1]^3\). Let the indices j = 1, 2, and 3 stand for the RAT, RT-PCR and antibody tests, respectively. The last three columns of Table 1 give the nominal responses on the RAT, RT-PCR and antibody tests for each of the four states. Write M(s, j) for the nominal outcome of test j for an individual in state s; M(s, j) = 1 indicates a nominal positive outcome, and M(s, j) = 0 a nominal negative outcome.

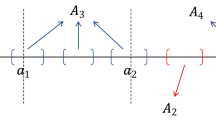

As alluded to earlier, the actual test outcomes may differ from the nominal outcomes. In Fig. 1, there are three channels, one for each test. Each channel consists of a deterministic binary-output channel followed by a noisy binary asymmetric channel. The deterministic output is M(s, j) for test j on input s. Let σ(0, j) denote the specificity and σ(1, j) the sensitivity of test j. The final output of test j is the output of the noisy binary asymmetric channel with this sensitivity and specificity; see Fig. 1. We can thus view each test outcome (RAT, RT-PCR, or antibody) as the output of two channels in tandem, with the disease state as input.

Fig. 1 Noise model for the RAT, RT-PCR, and antibody test outcomes. The left-most subfigure is for the RAT, j = 1; the middle subfigure is for the RT-PCR test, j = 2; the right-most subfigure is for the antibody test, j = 3. In each subfigure, the first connection is a “noiseless” channel indicated by M(s, j), and the second is a noisy binary asymmetric channel whose correct-outcome probabilities are given by the specificity σ(0, j) and the sensitivity σ(1, j). The values are indicated in Table 2. The cross-over probabilities are 1 minus these.

The specificities and sensitivities used for the estimations are given in Table 2. Let t = (t1, t2, t3) represent the subset of tests carried out, or test pattern: if \(t_j = 1\), test j was conducted; if \(t_j = 0\), it was not. Let y = (y1, y2, y3), where \(y_j \in \{0, 1, \mathrm{NA}\}\) denotes a negative outcome, a positive outcome, or test not conducted, respectively. Let \(\mathcal {Y}_{t}\) denote the set of possible outcomes for the subset of tests t.

We assume that the test outcomes of an individual are conditionally independent given the disease state of the individual. This is a strong assumption: for instance, a symptomatic person may get tested earlier, during the infectious state, leading to a higher predictive value for the test. For simplicity, we do not model these complications. Then, for an individual in state s with test pattern t = (t1, t2, t3), the conditional probability of the test outcome y = (y1, y2, y3) is given by

\[ P(y \mid s, t) = \prod_{j:\, t_j = 1} \sigma\big(M(s,j), j\big)^{\mathbb{1}\{M(s,j) = y_j\}} \Big(1 - \sigma\big(M(s,j), j\big)\Big)^{1 - \mathbb{1}\{M(s,j) = y_j\}}, \tag{2.1} \]

where M and σ are given in Tables 1 and 2, respectively, and \(\mathbb{1}\{M(s,j) = y_j\}\) is the indicator function that takes the value 1 when \(y_j\) equals the nominal response M(s, j) and 0 otherwise. Note that in Eq. 2.1, if the test pattern is t = 0 = (0,0,0), we have an empty product, which by convention is set to 1. In all other cases, when t ≠ 0, Eq. 2.1 gives the conditional probability of the outcome y given the tests in the test pattern t. If one of the test outcomes is inconclusive, we discard that test, set the corresponding \(t_j = 0\), and it does not enter the product in Eq. 2.1. Note that the individual effect does enter the conditional probability calculation, through the state s of the individual.
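Eq. 2.1 is straightforward to evaluate. The sketch below is a minimal Python version under two loudly stated assumptions: the nominal table `M` is our reading of the four state descriptions above (Table 1 itself is not reproduced here), and the specificity and sensitivity values in `SIGMA` are hypothetical placeholders, not the values of Table 2, chosen only to echo the RAT and RT-PCR sensitivities quoted in the introduction. States are 0-based in the code, a test that was not conducted is encoded as `None` (the NA outcome), and `outcomes(t)` enumerates \(\mathcal{Y}_t\).

```python
from itertools import product

# Nominal outcomes M(s, j), inferred from the four state descriptions (assumption).
# Rows: states (i)..(iv); columns: RAT, RT-PCR, antibody.
M = (
    (1, 1, 0),  # (i)   active infection, no antibodies
    (0, 0, 1),  # (ii)  antibodies, no active infection
    (1, 1, 1),  # (iii) both
    (0, 0, 0),  # (iv)  neither
)

# Hypothetical placeholders, NOT Table 2: SIGMA[j] = (specificity, sensitivity),
# i.e. SIGMA[j][0] = sigma(0, j) and SIGMA[j][1] = sigma(1, j).
SIGMA = ((0.98, 0.50), (0.99, 0.95), (0.97, 0.90))


def outcome_prob(y, s, t, M=M, sigma=SIGMA):
    """P(y | s, t) of Eq. 2.1: product over conducted tests of channel probabilities."""
    prob = 1.0  # empty product when t = (0, 0, 0)
    for j, tj in enumerate(t):
        if not tj:
            continue  # test j not conducted: it does not enter the product
        correct = sigma[j][M[s][j]]  # probability the channel reports M(s, j)
        prob *= correct if y[j] == M[s][j] else 1.0 - correct
    return prob


def outcomes(t):
    """The outcome set Y_t: 0/1 for conducted tests, None ('NA') otherwise."""
    choices = [(0, 1) if tj else (None,) for tj in t]
    return list(product(*choices))


if __name__ == "__main__":
    t = (1, 0, 1)  # RAT and antibody test
    for y in outcomes(t):
        print(y, [round(outcome_prob(y, s, t), 4) for s in range(4)])
```

For every state and pattern the probabilities over \(\mathcal{Y}_t\) sum to one, which is a quick sanity check on the indicator exponents.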

This leads to a parametric model for the probabilities of test outcomes (observations) given the disease-state probabilities (the parameters of the model) p = (p1, p2, p3): writing \(p_4 := 1 - p_1 - p_2 - p_3\) for the probability of state (iv), the probability of outcome y under test pattern t is \(P_p(y \mid t) = \sum_{s=1}^{4} p_s \, P(y \mid s, t)\). The total burden is then the linear combination \(u^{T} p\) with \(u = (1,1,1)^{T}\). While our focus is on the total disease burden \(p_1 + p_2 + p_3\), we continue to use the notation \(u^{T} p\) because our results apply for more general u; see Remark 1.

The Fisher information matrix, denoted \(I_t(p)\), captures how informative the outcomes are when the subset t of tests is conducted on a participant. For i = 1, 2, 3 and j = 1, 2, 3, the (i, j)th entry of \(I_t(p)\) for the above model can be expressed as

\[ \big(I_t(p)\big)_{i,j} = E_p\!\left[ \frac{\partial \log P_p(Y \mid t)}{\partial p_i}\, \frac{\partial \log P_p(Y \mid t)}{\partial p_j} \,\middle|\, t \right], \tag{2.2} \]

where \(E_p[\cdot \mid t]\) and \(P_p(\cdot \mid t)\) are expectation and probability, respectively, on the responses Y for a test pattern t ∈ T at parameter p. This matrix plays an important role in the definition of the optimality criteria.
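For the four-state mixture, Eq. 2.2 has a convenient closed form. Assuming, as the model implies, that \(P_p(y \mid t) = \sum_{s} p_s P(y \mid s, t)\) with \(p_4 = 1 - p_1 - p_2 - p_3\), the score for \(p_i\) at outcome y is \((P(y \mid i,t) - P(y \mid 4,t))/P_p(y \mid t)\), so \(I_t(p) = \sum_{y} g g^{T} / P_p(y \mid t)\) with \(g_i = P(y \mid i,t) - P(y \mid 4,t)\). The Python sketch below (hypothetical function names, standard library only) computes this sum from a table of conditional outcome probabilities.

```python
def mixture_prob(cond, p):
    """P_p(y | t) = sum_s p_s P(y | s, t), with p_4 = 1 - p_1 - p_2 - p_3."""
    weights = (p[0], p[1], p[2], 1.0 - p[0] - p[1] - p[2])
    return sum(w * c for w, c in zip(weights, cond))


def fisher_information(cond_table, p):
    """I_t(p) as a 3x3 list of rows.

    cond_table maps each outcome y in Y_t to the 4-tuple
    (P(y|1,t), P(y|2,t), P(y|3,t), P(y|4,t)).
    """
    info = [[0.0] * 3 for _ in range(3)]
    for cond in cond_table.values():
        prob = mixture_prob(cond, p)               # P_p(y | t), assumed > 0
        g = [cond[i] - cond[3] for i in range(3)]  # gradient of P_p(y | t) in p
        for i in range(3):
            for j in range(3):
                info[i][j] += g[i] * g[j] / prob
    return info


if __name__ == "__main__":
    sens, spec = 0.9, 0.9  # hypothetical antibody-test accuracy
    # Antibody test alone: nominally positive in states (ii) and (iii) only.
    table = {
        1: (1 - spec, sens, sens, 1 - spec),
        0: (spec, 1 - sens, 1 - sens, spec),
    }
    for row in fisher_information(table, (0.10, 0.30, 0.01)):
        print(row)
```

Run on the antibody test alone with a hypothetical sensitivity and specificity of 0.9, the first row and column of the result are zero and the remaining 2 × 2 block has identical entries, so the matrix has rank one: a single antibody test only informs \(p_2 + p_3\). This is why assumption (A1) in Section 4 asks for some test pattern with a positive-definite information matrix.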

For a given survey design \((w_t, t \in T)\), using the above likelihood function, the maximum likelihood estimate (MLE) \(\hat {p}\) can be obtained by solving the so-called likelihood equation; see Poor (1994, eqn. (IV.D.3)). Standard results (e.g., Poor 1994, Chapter 4) then assert the consistency and asymptotic normality of the MLE \(\hat {p}\) as the number of samples goes to infinity with the design proportions held fixed. We state our main results in the next section.

3 Main Results

We consider four designs and present results for each. In Theorem 1, we establish the existence of a c-optimal design. We then consider a worst-case design in Theorem 2, the optimal design of surveys conducted across different strata in Theorem 3, and the optimal design when additional information about the participants, such as observed symptoms, is available in Theorem 4.

3.1 The c-optimal Design

By the asymptotic normality of the MLE, the estimated total burden \(u^{T} \hat {p}\) is approximately Gaussian with mean \(u^{T} p\) and variance \(u^{T} \left ({\sum }_{t \in T} w_{t} I_{t}(p) \right )^{-1} u\). This suggests the use of the so-called local c-optimal design criterion. The adjective ‘local’ refers to optimality at the given p, and the criterion is called c-optimal because it minimises the variance of \(c^{T} \hat {p}\) for a given vector c (here c = u); see Pronzato and Pázman (2013, Chapter 5). Accuracy of the estimate is generally a combination of precision (narrow confidence intervals) and unbiasedness. Broadly speaking, given a budget, the c-optimal design resolves the tension between the accuracy of the estimate and cost efficiency. We shall refer to a survey design \((w^{*}_{t}, t \in T)\) as c-optimal if it minimises the variance

\[ u^{T} \Big( \sum_{t \in T} w_t I_t(p) \Big)^{-1} u \]

given the budget C, i.e., subject to \(\sum_{t \in T} w_t c_t \le C\) and \(w_t \ge 0\), t ∈ T. Our first result is on the c-optimal design.

Theorem 1.

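Let the budget C > 0 and the parameter p be fixed. Then the c-optimal survey design \((w^{*}_{t}, t \in T)\) is given by

\[ w^{*}_{t} = \frac{v^{*}_{t}\, C}{c_t}, \quad t \in T, \tag{3.1} \]

where \(v^*\) is a solution to the optimisation problem

\[ \min_{v} \; a(v;p) := u^{T} \Big( \sum_{t \in T} v_t \frac{I_t(p)}{c_t} \Big)^{-1} u \quad \text{subject to} \quad v_t \ge 0,\ t \in T, \quad \sum_{t \in T} v_t = 1. \tag{3.2} \]

Furthermore, the minimum variance is given by \(a(v^*;p)/C\).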
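The quantities in Theorem 1 can be checked numerically. The following sketch, which assumes small hypothetical information matrices rather than the model's actual \(I_t(p)\), evaluates \(a(v;p)\) from Eq. 3.2, converts budget shares into participant numbers with Eq. 3.1, and confirms that the resulting design variance equals \(a(v;p)/C\). It does not solve Eq. 3.2 itself; any convex solver over the probability simplex could be used for that. Function and pattern names are hypothetical, and `solve` is a plain Gaussian elimination so that the block needs only the standard library.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [[float(x) for x in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-15:
            raise ValueError("information matrix is (numerically) singular")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def weighted_sum(weights, infos):
    """sum_t weights[t] * infos[t] for square matrices stored as lists of rows."""
    n = len(next(iter(infos.values())))
    total = [[0.0] * n for _ in range(n)]
    for t, wt in weights.items():
        for i in range(n):
            for j in range(n):
                total[i][j] += wt * infos[t][i][j]
    return total


def quad_inv(A, u):
    """u^T A^{-1} u, computed by solving A x = u instead of inverting A."""
    return sum(ui * xi for ui, xi in zip(u, solve(A, u)))


def a_value(v, infos, costs, u):
    """a(v; p) = u^T (sum_t v_t I_t(p) / c_t)^{-1} u, the objective of Eq. 3.2."""
    return quad_inv(weighted_sum({t: v[t] / costs[t] for t in v}, infos), u)


def shares_to_design(v, costs, budget):
    """Eq. 3.1: w_t = v_t C / c_t participants for pattern t."""
    return {t: v[t] * budget / costs[t] for t in v}


def design_variance(w, infos, u):
    """u^T (sum_t w_t I_t(p))^{-1} u, the asymptotic variance of u^T p_hat."""
    return quad_inv(weighted_sum(w, infos), u)


if __name__ == "__main__":
    # Hypothetical diagonal information matrices for two test patterns.
    infos = {"A": [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.5]],
             "B": [[0.5, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 1.0]]}
    costs = {"A": 300.0, "B": 750.0}
    v = {"A": 0.4, "B": 0.6}  # budget shares, summing to 1
    u, budget = [1.0, 1.0, 1.0], 1e7
    a = a_value(v, infos, costs, u)
    w = shares_to_design(v, costs, budget)
    print(w, design_variance(w, infos, u), a / budget)
```

The identity checked here, variance \(= a(v;p)/C\), is exactly why \(v^*\) does not depend on the budget.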

In other words, for c-optimality, we must allocate a fraction \(v^{*}_{t}\) of the budget C to the subset of tests t; the number of participants \(w^{*}_{t}\) who should be administered the subset t of tests is therefore given by Eq. 3.1. Note that \(v^*\) does not depend on the budget C. A subset t may be highly informative, i.e., \(u^{T} I_t(p)^{-1} u\) may be small, yet t may be unattractive once its cost \(c_t\) is taken into account, because what ultimately matters is \(u^{T} (I_t(p)/c_t)^{-1} u\); see Eq. 3.2. Section 6 contains the proof, with additional structural results on the support of the solution. The last statement of the theorem gives, assuming that the bias is negligible, the level of accuracy that the budget can buy: the asymptotic variance \(a(v^*;p)/C\) of the estimate is inversely proportional to the budget. It also suggests how to solve a related problem: what budget is required to meet a given margin-of-error target with confidence 1 − α? The answer is to choose C large enough that \({\Phi }^{-1}(1-\alpha /2) \sqrt {a(v^{*}; p)/C}\) falls below the target margin of error, where Φ is the standard normal cumulative distribution function.
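For a target margin of error m, the budget calculation above amounts to \(C \ge \big(\Phi^{-1}(1-\alpha/2)\big)^{2}\, a(v^*;p)/m^{2}\). A minimal Python sketch using the standard library's `statistics.NormalDist`; the function names are hypothetical, and the value of \(a(v^*;p)\) in the example is a placeholder (in units of variance times rupees), not a survey result.

```python
from math import sqrt
from statistics import NormalDist


def margin_of_error(a_star, budget, alpha=0.05):
    """Half-width z_{1-alpha/2} * sqrt(a(v*; p) / C) of the asymptotic CI for u^T p."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return z * sqrt(a_star / budget)


def required_budget(a_star, target, alpha=0.05):
    """Smallest budget C whose margin of error does not exceed the target."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return z * z * a_star / (target * target)


if __name__ == "__main__":
    a_star = 400.0  # hypothetical a(v*; p)
    c_req = required_budget(a_star, 0.01)
    print(round(c_req), margin_of_error(a_star, c_req))
```

Because the margin scales as \(1/\sqrt{C}\), halving the target margin quadruples the required budget.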

3.2 Worst-Case Design

Theorem 1 gives a locally optimal design at parameter p, and the optimal design \(v^*\) may depend on p in general. Whenever we want to highlight this dependence, we write \(v^*(p)\). In practice, we often design for a guessed p; e.g., the Karnataka serosurvey (Babu et al. 2020) assumed a seroprevalence of 10% to arrive at the required number of antibody tests. One could also place a prior on p and extend Theorem 1 straightforwardly. Our next result, however, is a design for the worst case, i.e., a design \((w_t, t \in T)\) that minimises

\[ \sup_{p \in \mathcal{P}} \; u^{T} \Big( \sum_{t \in T} w_t I_t(p) \Big)^{-1} u, \]

where \(\mathcal {P}\) is a convex and compact uncertainty region for the parameter, subject to the budget constraint C. In our setting, p is a sub-probability vector, so the uncertainty region is bounded. Often the uncertainty region is a convex and compact confidence region obtained from a previous survey; see, for example, Babu et al. (2020). If it is not convex or closed, taking the convex hull and closure yields a relaxed uncertainty region \(\mathcal {P}\) that is convex and compact.

As is typical of worst-case designs, our solution involves the Nash equilibria of a two-player zero-sum game G defined as follows. The pay-off of the game G is

\[ a(v;p) = u^{T} \Big( \sum_{t \in T} v_t \frac{I_t(p)}{c_t} \Big)^{-1} u, \]

where the minimising player chooses v from the probability simplex on T and the maximising player simultaneously chooses p from the convex and compact set \(\mathcal {P}\). The design for the worst case is as follows.

Theorem 2.

Any design w∗ for the worst case is of the form \(w^{*}_{t} = v^{*}_{t} C/c_{t}\), t ∈ T, where v∗ is a Nash equilibrium strategy for the minimising player in the game G.

As we shall see, the strategy sets are convex and compact, the pay-off function is concave in p for a fixed v, convex in v for a fixed p, and by Glicksberg’s theorem (1952) there exists a Nash equilibrium. While the equilibrium may not be unique, it is well-known that this is not an issue for two-player zero-sum games. The minimising player may pick any Nash equilibrium and play his part of the equilibrium, i.e., there is no “communication problem” in a two-player zero-sum game.
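Theorem 2 calls for a Nash equilibrium of G. As a rough, hypothetical illustration only, the sketch below replaces both strategy sets by grids (in the spirit of the 0.01-increment grid search mentioned in Section 5) and computes \(\min_v \max_p a(v;p)\) for a toy problem with a scalar parameter p and two test patterns: an error-free test with Fisher information \(1/(p(1-p))\), and a noisy test with sensitivity and specificity 0.9, whose positive-outcome probability is \(q = 0.1 + 0.8p\) and whose information is \(0.64/(q(1-q))\). The costs and the range of p are assumptions, not survey values; a real implementation would use the matrix-valued \(I_t(p)\) of Eq. 2.2 and a proper saddle-point solver.

```python
def grid(lo, hi, step):
    """Points lo, lo + step, ..., hi (endpoints included up to rounding)."""
    n = int(round((hi - lo) / step))
    return [lo + k * step for k in range(n + 1)]


def payoff(v, p, infos, costs):
    """Scalar toy version of a(v; p) = (sum_t v_t I_t(p) / c_t)^{-1}."""
    return 1.0 / sum(vt * info(p) / c for vt, info, c in zip(v, infos, costs))


def worst_case_design(infos, costs, p_grid, v_step=0.01):
    """Grid approximation of min over v of max over p of a(v; p), two patterns."""
    best_v, best_value = None, float("inf")
    for v1 in grid(0.0, 1.0, v_step):
        v = (v1, 1.0 - v1)
        worst = max(payoff(v, p, infos, costs) for p in p_grid)  # maximiser's reply
        if worst < best_value:
            best_v, best_value = v, worst
    return best_v, best_value


# Hypothetical scalar Fisher informations: an error-free test and a noisy one.
INFOS = (
    lambda p: 1.0 / (p * (1.0 - p)),
    lambda p: 0.64 / ((0.1 + 0.8 * p) * (0.9 - 0.8 * p)),
)
COSTS = (2.0, 1.0)  # hypothetical relative costs

if __name__ == "__main__":
    p_grid = grid(0.09, 0.46, 0.01)
    print(worst_case_design(INFOS, COSTS, p_grid))
```

Because the grid over v contains both pure designs, the returned worst-case value can never exceed that of spending the whole budget on a single pattern.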

3.3 Allocation Across Strata

Surveys are also conducted across different strata or districts with differing disease burdens. For example, consider D districts, with p(d) being the disease spread parameter in district d, d ∈ [D] = {1,…, D}. If the population fraction of district d is nd, with \({\sum }_{d \in [D]} n_{d} = 1\), then the weighted overall disease burden in the D districts is \({\sum }_{d \in [D]} n_{d} (u^{T} \hat {p}(d))\) where \(\hat {p}(d)\) is the MLE for district d when the true parameter is p(d). The next result indicates how the total budget must be allocated across the D districts to minimise the variance of \({\sum }_{d \in [D]} n_{d} (u^{T} \hat {p}(d))\), subject to a total budget of C.

Theorem 3.

Let the parameters for the districts be p(d) and let the population fractions be \(n_d\), d = 1,…, D. The (local) c-optimal design for the weighted estimate \({\sum }_{d \in [D]} n_{d} (u^{T} \hat {p}(d))\) is as follows. The optimal budget allocation \(C_d\) for district d is proportional to

\[ n_d \sqrt{a\big(v^{*}(p(d)); p(d)\big)}, \]

i.e.,

\[ C_d = C\, \frac{n_d \sqrt{a\big(v^{*}(p(d)); p(d)\big)}}{\sum_{d' \in [D]} n_{d'} \sqrt{a\big(v^{*}(p(d')); p(d')\big)}}, \quad d \in [D], \]

where \(v^*(p(d))\) solves Eq. 3.2 for district d. The optimal design in each district is given by \(w_{t}^{*}(d) = v_{t}^{*}(p(d))C_{d} /c_{t}\) for each t and d.
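To see where the square root comes from, here is a short heuristic derivation, a sketch rather than the formal proof. It assumes that districts are surveyed independently and that district d, given budget \(C_d\) and the design of Theorem 1, attains the variance \(a_d / C_d\) with \(a_d := a(v^*(p(d)); p(d))\).

```latex
% Heuristic: district d with budget C_d has variance a_d / C_d (Theorem 1),
% districts are independent, and sum_d C_d = C.
\[
\operatorname{Var}\Big(\sum_{d \in [D]} n_d\, u^{T}\hat{p}(d)\Big)
  \approx \sum_{d \in [D]} \frac{n_d^{2}\, a_d}{C_d},
\qquad a_d := a\big(v^{*}(p(d)); p(d)\big).
\]
% Cauchy--Schwarz together with the constraint sum_d C_d = C:
\[
\Big(\sum_{d} n_d \sqrt{a_d}\Big)^{2}
  = \Big(\sum_{d} \frac{n_d \sqrt{a_d}}{\sqrt{C_d}}\,\sqrt{C_d}\Big)^{2}
  \le \Big(\sum_{d} \frac{n_d^{2}\, a_d}{C_d}\Big)\Big(\sum_{d} C_d\Big)
  = C \sum_{d} \frac{n_d^{2}\, a_d}{C_d}.
\]
% Equality holds iff n_d sqrt(a_d) / sqrt(C_d) is proportional to sqrt(C_d), i.e.
\[
C_d \propto n_d \sqrt{a_d},
\qquad
\min_{\sum_d C_d = C} \; \sum_{d} \frac{n_d^{2}\, a_d}{C_d}
  = \frac{\big(\sum_{d} n_d \sqrt{a_d}\big)^{2}}{C}.
\]
```

The same argument, with \(r_s\) and \(a_s\) in place of \(n_d\) and \(a_d\), gives the allocation in Theorem 4.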

Thus a larger fraction of the total budget should be allocated to districts with a larger population, and to districts with greater uncertainty in the estimate as measured by the standard error. The precise mathematical relation is the content of the above theorem. If there is no basis for a guess of p(d) in each district, then the worst-case design could be employed in each district; since the worst-case value of a is then the same in every district, Theorem 3 implies that the budget allocation is in proportion to the population. Theorem 3 also applies to other kinds of stratification, e.g., sex, age group, risk category, or pre-existing health conditions, so long as the fraction in each component of the stratification under consideration is known. For example, if we know that the prevalence differs between males and females in a population, one should consider an additional sex-based stratification and replace \(n_d\) in Theorem 3 by the appropriate fractions that sum to \(n_d\).
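The allocation rule of Theorem 3 is a one-liner once the values \(a(v^*(p(d)); p(d))\) are available. The Python sketch below (hypothetical names) assumes those values are given, with hypothetical numbers in the example, and that districts are surveyed independently, so that the variance of the weighted estimate is \(\sum_d n_d^2 a_d / C_d\).

```python
from math import sqrt


def allocate_budget(budget, fractions, a_values):
    """Theorem 3: C_d proportional to n_d * sqrt(a(v*(p(d)); p(d)))."""
    scores = [n * sqrt(a) for n, a in zip(fractions, a_values)]
    total = sum(scores)
    return [budget * s / total for s in scores]


def weighted_variance(allocation, fractions, a_values):
    """sum_d n_d^2 a_d / C_d: variance of sum_d n_d u^T p_hat(d) for independent districts."""
    return sum(n * n * a / c for n, a, c in zip(fractions, a_values, allocation))


if __name__ == "__main__":
    n = (0.5, 0.3, 0.2)        # population fractions
    a = (100.0, 400.0, 900.0)  # hypothetical a(v*(p(d)); p(d)) values
    budget = 1e7
    optimal = allocate_budget(budget, n, a)
    proportional = [budget * x for x in n]
    print(optimal)
    print(weighted_variance(optimal, n, a), weighted_variance(proportional, n, a))
```

With equal values of a in every district, as happens when the same worst-case design is used everywhere, the rule reduces to allocation in proportion to population. With the hypothetical values in the example, the optimal rule gives a variance of \(17^2/C\), against \(350/C\) for proportional allocation.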

3.4 Enabling On-the-Ground Decisions

Often the surveyors have access to additional information about the participants that might affect the quality of the test outcomes. Let us consider the example of participants presenting with or without symptoms. This is useful information that can inform what tests should be employed. The Karnataka serosurvey found that the RAT is more sensitive on participants with symptoms than on participants without symptoms (68% and 47%, respectively, see Babu et al. (2020)). Let s be the symptom-related observable with s = 0 denoting the absence of symptoms and s = 1 denoting the presence of symptoms. Let rs, s = 0,1 denote the asymptomatic and the symptomatic fractions of the population. Let p(s) be the model parameter for substratum s, s = 0,1. The goal is to estimate the total burden \({\sum }_{s=0,1} r_{s} (u^{T} p(s))\). Assuming MLE in each substratum, the objective continues to be the minimisation of the variance of \({\sum }_{s=0,1} r_{s} (u^{T} \hat {p}(s))\).

Since the tests’ reliability depends on whether a participant is symptomatic or asymptomatic, let \({I_{t}^{s}}(p(s))\) be the Fisher information matrix associated with group s when the subset of tests employed is t and the group-s parameter is p(s). Let \(v^*(p(s))\) denote the optimal allocation, across the subsets of tests, of the budget given to group s. Further, let \(a_s(v^*(p(s)); p(s))\) denote the value of Eq. 3.2 for group s when the parameter is p(s) and the Fisher information matrices are \({I_{t}^{s}}(p(s))\), t ∈ T. We then have the following result.

Theorem 4.

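The optimal budget allocation \(C_s\) for group s is proportional to

\[ r_s \sqrt{a_s\big(v^{*}(p(s)); p(s)\big)}, \]

i.e.,

\[ C_s = C\, \frac{r_s \sqrt{a_s\big(v^{*}(p(s)); p(s)\big)}}{\sum_{s'=0,1} r_{s'} \sqrt{a_{s'}\big(v^{*}(p(s')); p(s')\big)}}, \quad s = 0, 1, \]

where \(v^*(p(s))\) solves (3.2) for group s. The optimal design for each group s is given by \(w_{t}^{*}(s) = v_{t}^{*}(p(s))C_{s}/c_{t}\) for each t and s.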

Note that the optimal allocation in Theorem 4 is similar to that in Theorem 3, the difference being that the function \(a_s\) in Theorem 4 depends on the group s. The optimal budgets \(C_0\) and \(C_1\) indicate how many asymptomatic and symptomatic participants should be tested. Furthermore, the test prescription adapts to the presence or absence of symptoms in the participant. Finally, Theorem 4 also applies to other settings in which participant-level variables are observable and are known to affect the quality of testing, e.g., the severity of symptoms, which may indicate a high viral load and hence a better sensitivity of viral RNA detection tests.

4 Prior Work and Context for our Findings

The locally c-optimal design criterion is well-known (Pronzato and Pázman, 2013, Chapter 5). Elfving’s seminal work (1952) shows that, for a linear regression model, the c-optimal design is a convex combination of the extreme points in the design space. Dette and Holland-Letz (2009) extended the characterisation of the c-optimal design to nonlinear regression models with unequal noise variances. Our Theorem 1 could be recovered from the results of Dette and Holland-Letz (2009) with some additional work to characterise the solution. Chernoff (1953) considered the closely related criterion of minimising the trace of \(({\sum }_{t \in T} w_{t} I_{t}(p))^{-1}\) and obtained a convex-combination-of-extreme-points characterisation. This criterion, also called A-optimality, is useful in our setting if the surveyor wishes to estimate the individual p1, p2, and p3 accurately, not just their sum, and gives equal importance to the three variances. Other design criteria include an optimality criterion that takes into account the probability of observing a desired outcome in generalised linear models (McGree and Eccleston, 2008), variance minimisation for linear models but with non-Gaussian noise (Gao and Zhou, 2014), and maximisation of the determinant of the information matrix (D-optimal design). Since our interest is in estimating \(u^{T} p\), a linear combination of the entries of p, c-optimality is the natural criterion.

While the above works deal with locally optimal designs, we also consider the worst-case design problem, weighted design, and design for on-the-ground decisions in Theorems 2, 3, and 4, respectively. The worst-case design problem was considered in Pronzato and Pázman (2013, Chapter 8), where a necessary and sufficient condition for optimality is given. In principle it should be possible to derive Theorem 2 from that starting point; we found the game-theoretic characterisation more appealing. To the best of our knowledge, Theorem 4 and the applications of Theorems 1–4 to COVID-19 survey design are new. We hope that other survey designers will make use of our findings at this critical juncture of the pandemic, so that immunisation plans can be better optimised.

Remark 1.

Our focus in this note has been the optimal design of serosurveys for disease burden estimation. However, our results hold for more general epidemiological surveys. The dimension of the parameter p can be any natural number k, and the vector u defining the estimate \(u^{T} p\) can be any vector in \({\mathbb R}^{k}\). In any such model, our results in Theorems 1, 3, and 4 hold as long as the MLE exists and the corresponding Fisher information matrix \(I_t(p)\) for the parameter p satisfies assumption (A1) stated below, and our result in Theorem 2 holds as long as assumption (A2), also stated below, holds; see Remark 2 in Section 6.

For t ∈ T and \(p \in \mathcal {P}\), let \(\lambda_p(t)\) denote the smallest eigenvalue of \(I_t(p)\). We now make the assumptions precise.

- (A1) For a given \(p \in \mathcal {P}\), there exists \(t^* \in T\) such that the matrix \(I_{t^{*}}(p)\) is positive definite.
- (A2) There exists \(t^* \in T\) such that \(\inf _{p \in \mathcal {P}} \lambda _{p}(t^{*}) > 0\).

5 Numerical Examples

In this section we shall discuss several numerical examples illustrating the applications of our main results. An optimal design calculator is available at Athreya et al. (2020).

We begin with some examples connected to Theorem 1. Consider a local c-optimal design at p = (0.10,0.30,0.01), the fractions of those with active infection alone, those with antibodies alone, and those with both active infection and antibodies. Suppose that the budget is Rs. 1,00,00,000 = Rs. 1 Crore. Let us fix the RAT and antibody test costs at Rs. 450 and Rs. 300, respectively. In the first row of Table 3, the RT-PCR test costs Rs. 1,600. The optimal allocation is to run antibody tests alone on 521 participants (t = (0,0,1)) and the RAT and antibody tests on 13,125 participants (t = (1,0,1)); in particular, no RT-PCR tests are done. In the second row of Table 3, the RT-PCR test cost comes down to Rs. 1,000. The optimal allocation is then to run antibody tests alone (t = (0,0,1)) on a larger number of participants, namely 8,000, and the RT-PCR and antibody tests on 5,846 participants (t = (0,1,1)); RT-PCR is now sufficiently competitive that no RATs are done. In the third row of Table 3, the RT-PCR test costs only Rs. 100. The optimal allocation is to run exactly one test pattern, the RT-PCR and antibody tests (t = (0,1,1)), on 25,000 participants. The corresponding variances per rupee are given in the last column. The improvement in accuracy is remarkable in the third case, which relies more on RT-PCR tests.

Our next set of examples concerns Theorem 2. Consider the numerical example in the fourth row of Table 3, where the RT-PCR test costs only Rs. 100. The range for p2 comes from the Karnataka serosurvey (Babu et al. 2020), where the districts’ total burden varied from 8.7% to 45.6%. The ranges for p1 and p3 also come from Babu et al. (2020). The worst-case parameter (obtained via a grid search with 0.01 increments) is near p = (0.06,0.45,0.0). The optimal design is to spend 2.5% of the budget on antibody tests alone and 97.5% on the combination of RT-PCR and antibody tests. For the indicated costs, this comes to 838 participants getting only antibody tests and 24,371 participants getting the RT-PCR and antibody tests; see the fourth row of Table 3.

Since an overwhelming part of the budget goes to test pattern (0,1,1), for logistical reasons all participants will be administered RT-PCR and antibody tests in the second serosurvey for Karnataka (January 2021). Calculations (not presented here) indicate that the worst-case variance increases by a factor of 1.0023, so the number of samples must be increased by this factor to achieve the same margin of error and confidence. But the logistical simplicity is well worth the extra cost, considering the magnitude and decentralised nature of the survey.

We now discuss an insightful numerical example related to Theorem 4. Consider a setting where the RAT costs Rs. 450, the RT-PCR test costs Rs. 1,600 and the antibody test costs Rs. 300. Let us return to the local c-optimal design criterion for p = (0.1,0.3,0.01), and additionally let 10% of the population be symptomatic (r1 = 0.1). The optimal allocations under Theorem 4 indicate that roughly 91.2% of the budget should be spent on the asymptomatic population and 8.8% on the symptomatic population. The symptomatic share of the budget is below that group's 10% population share because its estimate is more reliable (given the better sensitivity of the RAT). As expected, the RT-PCR test is too expensive and is skipped entirely. More interestingly, all asymptomatic participants should be administered the RAT and the antibody test, whereas among the symptomatic participants a sizable fraction should be administered the antibody test alone. In other words, a smaller share of the symptomatic participants get the RAT. This might seem paradoxical at first glance, since one might expect an increased use of the RAT on participants for whom it is more effective. There is, however, an insightful resolution: given that the estimate of the active-infection fraction is likely to be better in the symptomatic population than in the asymptomatic population, we should invest a portion of the symptomatic group's budget in antibody tests alone to improve the estimate of the fraction with past infection. The last row of Table 3 gives the numbers to be tested according to the optimal design.

The above examples provide interpretations of our designs. We now give one example showing the advantage of our design methodology. The Karnataka COVID-19 serosurvey round 1 design (Babu et al. 2020), arrived at using only antibody-test considerations at 10% antibody prevalence, had 288 participants with test pattern (1,1,1) (all three tests) and 144 with test pattern (0,1,1) (no RAT). Assume a RAT cost of Rs. 450, a subsidised RT-PCR cost of Rs. 100, and an antibody test cost of Rs. 300. The total budget then comes to Rs. 3,02,400. The estimated parameter for one of the survey units was p = (0.050,0.149,0.007) (BBMP Yelahanka, see [2, Supplementary Appendix A]). We now compare the confidence intervals of the above design and the optimal design. For the above design, the variance evaluates to \(6.25 \times 10^{-4}\). The optimal design for the same cost has 50 participants undergoing test pattern (0,1,0) (only the RT-PCR test) and 743 participants undergoing test pattern (0,1,1) (no RAT). In particular, the optimal design does not use the RAT but increases the number of RT-PCR and antibody tests. The reduced variance is \(3.01 \times 10^{-4}\), which is a 50.4% reduction.

6 Proof of Theorems

Recall that C > 0 is the total budget, T is the set of all nonempty subsets of tests, \(c_t\) is the sum of the costs of the individual tests constituting t ∈ T, and the survey design is denoted by \((w_t, t \in T)\), where \(w_{t} \in \mathbb {R}_{+}\) is the number of participants (relaxed to a real value) who are administered the subset t of tests. The cost of this design is \({\sum }_{t \in T} w_{t} c_{t}\).

We begin with a specific observation with regard to our model.

Remark 2.

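Let \(t^* = (1,1,1)\). When the specificities and sensitivities are as given in Table 2, it is easy to verify that (A1) and (A2) hold. Indeed, from Eq. 2.2, defining

\[ v(y,t) = \big( P(y \mid 1,t) - P(y \mid 4,t),\; P(y \mid 2,t) - P(y \mid 4,t),\; P(y \mid 3,t) - P(y \mid 4,t) \big)^{T} \]

and the probability

\[ P_p(y \mid t) = \sum_{s=1}^{4} p_s \, P(y \mid s,t), \qquad p_4 = 1 - p_1 - p_2 - p_3, \]

we have for any nonzero \(x \in \mathbb {R}^{3}\)

\[ x^{T} I_{t^*}(p)\, x = \sum_{y \in \mathcal{Y}_{t^*}} \frac{\big(x^{T} v(y,t^*)\big)^{2}}{P_p(y \mid t^*)} \ge \sum_{y \in \mathcal{Y}_{t^*}} \big(x^{T} v(y,t^*)\big)^{2} > 0, \]

where the first inequality uses \(P_p(y \mid t^*) \le 1\), and the last strict inequality holds because the vectors \(\{ v(y,t^{*}), y \in \mathcal {Y}_{t^{*}} \}\) span \(\mathbb{R}^3\), a fact that can be verified for the given sensitivities and specificities. Since this lower bound does not depend on p, it verifies not only assumption (A1) for our example but also assumption (A2).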

We shall use the fact that the model satisfies assumption (A1) or (A2) in the proofs below.

Proof of Theorem 1.

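Let \(v_t = w_t c_t / C\), t ∈ T. Recall a(v;p) from Eq. 3.2. Since the variance of the design w equals \(a(v;p)/C\) and the budget constraint becomes \(\sum_{t \in T} v_t \le 1\), a minimiser of Eq. 3.2 yields a c-optimal design given by Eq. 3.1. To find a minimiser, first consider the following optimisation problem:

\[ \text{minimise } a(v;p) \tag{6.1} \]
\[ \text{subject to } v \in \Big\{ (v_t, t \in T) : v_t \ge 0,\ t \in T,\ \sum_{t \in T} v_t \le 1 \Big\}. \tag{6.2} \]

By assumption (A1), there exists a \(v^{(0)}\) (e.g., the design that spends the whole budget on \(t^*\)) such that \(a(v^{(0)}; p) < \infty \), and hence the minimisation can be restricted to the set \(\mathcal {C} = \{(v_{t}, t \in T): a(v;p) \leq a(v^{(0)}; p), v_{t} \geq 0, t \in T, {\sum }_{t \in T} v_{t} \leq 1\}\). With this restriction on the constraint set, the above problem is a convex optimisation problem. Indeed, the objective function is convex on \(\mathcal {C}\): for any λ ∈ (0,1) and any two designs \(v^{(a)}\) and \(v^{(b)}\) in \(\mathcal {C}\), the matrix

\[ \lambda \Big( \sum_{t \in T} \frac{v^{(a)}_t}{c_t} I_t(p) \Big)^{-1} + (1 - \lambda) \Big( \sum_{t \in T} \frac{v^{(b)}_t}{c_t} I_t(p) \Big)^{-1} - \Big( \sum_{t \in T} \frac{\lambda v^{(a)}_t + (1-\lambda) v^{(b)}_t}{c_t} I_t(p) \Big)^{-1} \]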
is positive semidefinite, and the constraint set \(\mathcal {C}\) is a convex subset of \(\mathbb {R}^{|T|}\). Moreover \(\mathcal {C}\) is also compact: for any sequence {v(n), n ≥ 1} in \(\mathcal {C}\), being a sequence in the compact set (6.2), we can extract a subsequence that converges to an element \(\tilde {v}\) of Eq. 6.2; from the definition of \(\mathcal {C}\), we must have \(a(\tilde {v};p) \leq a(v^{(0)};p)\) which implies that \(\tilde {v} \in \mathcal {C}\). Hence there exists a \(v^{*} = (v^{*}_{t}, t\in T)\) that solves the above problem, see Boyd and Vandenberghe (2004, Section 4.2.2). If \({\sum }_{t \in T} v_{t}^{*} < 1\), then we may scale up the v∗ by a factor to use the full budget, satisfy the sum-constraint with equality, and strictly reduce the objective function by the same factor, which is a contradiction to v∗’s optimality. Hence \({\sum }_{t \in T} v_{t}^{*} = 1\). Hence \(w^{*}_{t} = v^{*}_{t} C / c_{t}\) is the desired c-optimal design and the minimum variance is given by a(v∗;p)/C. This completes the proof. □

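For information on the structure of an optimal solution, write \(J(v) := \sum_{t \in T} v_t I_t(p)/c_t\), so that \(a(v;p) = u^{T} J(v)^{-1} u\), and consider the Lagrangian

\[ L(v, \lambda, \mu) = a(v;p) - \sum_{t \in T} \lambda_t v_t + \mu \Big( \sum_{t \in T} v_t - 1 \Big). \]

For each t ∈ T,

\[ \frac{\partial a(v;p)}{\partial v_t} = -\frac{1}{c_t}\, u^{T} J(v)^{-1} I_t(p) J(v)^{-1} u. \]

By the Karush–Kuhn–Tucker conditions (Boyd and Vandenberghe, 2004, Chapter 5), there exist non-negative numbers \((\lambda ^{*}_{t}, t \in T)\) and \(\mu^*\) such that

\[ -\frac{1}{c_t}\, u^{T} J(v^*)^{-1} I_t(p) J(v^*)^{-1} u - \lambda^*_t + \mu^* = 0, \quad t \in T, \tag{6.3} \]
\[ \lambda^*_t v^*_t = 0, \quad t \in T. \tag{6.4} \]

Clearly \(v^* \not\equiv 0\) and \(\mu^* > 0\); otherwise Eq. 6.3 is violated at the pattern \(t^*\) of assumption (A1), for which the first term in Eq. 6.3 is strictly negative. Conditions (6.3) and (6.4), together with the fact that \(w^{*}_{t} = v^{*}_{t} C/c_{t}\), imply that whenever \(w^{*}_{t} > 0\) for some t ∈ T, we must have

\[ \frac{1}{c_t}\, u^{T} J(v^*)^{-1} I_t(p) J(v^*)^{-1} u = \mu^*. \]

We can compute \(\mu^*\) easily: multiplying Eq. 6.3 by \(v_{t}^{*}\), using Eq. 6.4, summing over t, and using \({\sum }_{t \in T} v_{t}^{*} = 1\), we see that

\[ \mu^* = \sum_{t \in T} \frac{v^*_t}{c_t}\, u^{T} J(v^*)^{-1} I_t(p) J(v^*)^{-1} u = u^{T} J(v^*)^{-1} u = a(v^*; p). \]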
The proof of Theorem 2 requires a preliminary lemma. Fix K ≥ 3 and let \(v_{1}, \ldots , v_{K} \in \mathbb {R}^{3}\). For \(a \in \mathbb {R}^{K}\) such that \({\sum }_{i=1}^{K} a_{i} = 1\), define

We first prove the following lemma. A similar concavity property holds for parallel sums of positive definite matrices; see Bhatia (2007, Theorem 4.1.1). Let \(\mathbb {R}^{K}_{++}\) denote the set of K-vectors whose entries are strictly positive.

Lemma 1.

Assume I(a) is invertible for all \(a \in \mathbb {R}^{K}_{++}\). Then, for each fixed \(u \in \mathbb {R}^{3}\), the mapping \(a \mapsto u^{T} I(a)^{-1} u\) is concave on \(\mathbb {R}^{K}_{++}\).
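The statement of Lemma 1 can be sanity-checked numerically. The defining display for I(a) is not reproduced in this section; the sketch assumes \(I(a) = \sum_{i} v_i v_i^{T}/a_i\), which agrees with the identity \(I(a) = \tilde{V}\tilde{V}^{T}\), \(\tilde{v}_i = v_i/\sqrt{a_i}\), used in the proof. The vectors \(v_i\), u and the sampled points a, b are hypothetical random draws, and midpoint concavity on random pairs is only evidence, not a proof.

```python
import numpy as np


def f(a, vecs, u):
    """u^T I(a)^{-1} u, assuming I(a) = sum_i v_i v_i^T / a_i."""
    info = sum(np.outer(v, v) / ai for v, ai in zip(vecs, a))
    return float(u @ np.linalg.solve(info, u))


rng = np.random.default_rng(1)
K = 5
vecs = rng.normal(size=(K, 3))  # rows are hypothetical v_1, ..., v_K in R^3
u = rng.normal(size=3)

# Midpoint concavity: f((a + b)/2) >= (f(a) + f(b))/2 for a, b in R^K_{++}.
violations = 0
for _ in range(1000):
    a = rng.uniform(0.05, 1.0, size=K)
    b = rng.uniform(0.05, 1.0, size=K)
    avg = 0.5 * (f(a, vecs, u) + f(b, vecs, u))
    if f(0.5 * (a + b), vecs, u) < avg - 1e-9 * abs(avg):
        violations += 1
print(violations)  # 0 when the lemma holds
```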

Proof.

Let \(f(a) = u^{T} I(a)^{-1} u\). We have, for i = 1,2,…, K,

We also have, for i, j = 1,2,…, K,

Hence

Let \(\beta _{i} = u^{T} I(a)^{-1} v_{i}\) and let \(B_{i,j} = {v_{i}^{T}} I(a)^{-1} v_{j}\). Then

For negative semidefiniteness of the Hessian of f (that is, concavity of f), it suffices to show that

for all \(x \in \mathbb {R}^{K}\). With \(\alpha _{i} = \frac {x_{i} \beta _{i}}{a_{i} \sqrt {a_{i}}}\), the above condition becomes

which is the same as asking for the largest eigenvalue of the matrix with entries

to be at most 1. Note that

Let \(\tilde {v}_{i} = \frac {v_{i}}{\sqrt {a_{i}}}\) and \(\tilde {V} = [\tilde {v}_{1}, \ldots , \tilde {v}_{K}]\). Then the above entry is the (i, j) entry of the matrix \(\tilde {V}^{T} (\tilde {V} \tilde {V}^{T})^{-1} \tilde {V}\). It therefore suffices to show that, in positive semidefinite ordering,

that is, for each \(z \in \mathbb {R}^{K}\), we must have

Let \(\tilde {V}\) have the singular value decomposition \(U {\Sigma } V^{T}\), where U and V are orthogonal matrices of appropriate sizes. Since I(a) is invertible, it follows that \((\tilde {V}\tilde {V}^{T})^{-1} = U {\Lambda }^{-1} U^{T}\), where \({\Lambda } = {\Sigma } {\Sigma }^{T}\). Also, \({\Sigma }^{T} ({\Sigma } {\Sigma }^{T})^{-1} {\Sigma }\) is a block diagonal matrix with diagonal blocks \(I_{3}\) and \(O_{K-3}\) (the (K − 3) × (K − 3) zero matrix). Therefore, the left-hand side of the above display becomes

where Om, n is the all zero matrix of dimensions m × n. Since V is orthonormal, the lemma follows. □
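The key step above is that \(P = \tilde{V}^{T}(\tilde{V}\tilde{V}^{T})^{-1}\tilde{V}\) is an orthogonal projection of rank 3, so its eigenvalues are three ones and K − 3 zeros, and hence \(P \preceq I_K\). A short numerical check, with hypothetical random \(v_i\) and \(a_i\) and K = 6:

```python
import numpy as np

rng = np.random.default_rng(2)
K = 6
V = rng.normal(size=(3, K))           # hypothetical columns v_1, ..., v_K
a = rng.uniform(0.1, 1.0, size=K)
V_tilde = V / np.sqrt(a)              # column i becomes v_i / sqrt(a_i)
gram = V_tilde @ V_tilde.T            # equals sum_i v_i v_i^T / a_i
proj = V_tilde.T @ np.linalg.solve(gram, V_tilde)  # V~^T (V~ V~^T)^{-1} V~

eigs = np.linalg.eigvalsh(proj)       # ascending order
print(np.round(eigs, 10))             # three eigenvalues equal 1, K - 3 equal 0
```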

Proof of Theorem 2.

Consider the zero-sum game G. By Assumption 1, there exists a design \(v^{(0)}\) such that \(\sup _{p \in \mathcal {P}} a(v^{(0)};p) < \infty \); hence, we may restrict the set of designs to

Recall that, for a given pair of strategies (v, p), the pay-off of the maximising player is \(a(v; p) = u^{T} \left ({\sum }_{t \in T} v_{t} I_{t}(p)/c_{t} \right )^{-1} u\). For each \(p \in \mathcal {P}\), as argued in the proof of Theorem 1, the mapping v↦a(v;p) is convex on \(\mathcal {C}\), and \(\mathcal {C}\) is a convex and compact subset of the design space. Recall q from Eq. 2.1 and the Fisher information matrix from Eq. 2.2. The mapping

is linear. So by Lemma 1, for each fixed \(v \in \mathcal {C}\), the mapping p↦a(v;p) is concave. Also, \(\mathcal {P}\) is convex and compact. Hence, by Glicksberg’s fixed point theorem (Glicksberg, 1952), there exists a Nash equilibrium for the game G. The result now follows from Theorem 1 and the interchangeability property of Nash equilibria in two-player zero-sum games (see Tijs, 2003, Theorem 3.1). □
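A worst-case design can be approximated by discretising both players' strategy sets. The sketch below is only an illustration under assumptions: it replaces \(\mathcal{P}\) by four hypothetical candidate parameter values, uses random positive definite matrices as stand-ins for \(I_t(p)\) (not the Fisher information of Eq. 2.2), and searches a grid over the simplex of budget fractions. For any finite pay-off table, min over v of max over p is at least max over p of min over v; Theorem 2 asserts equality only for a convex, compact \(\mathcal{P}\) with a pay-off concave in p, so a gap may remain on a finite, non-convex candidate set.

```python
import numpy as np


def variance_factor(v, info, costs, u):
    """a(v; p) = u^T (sum_t v_t I_t(p) / c_t)^{-1} u for one parameter value p."""
    m = sum(vt * it / ct for vt, it, ct in zip(v, info, costs))
    return float(u @ np.linalg.solve(m, u))


def simplex_grid(steps):
    """All budget-fraction vectors (v_1, v_2, v_3) with entries in {0, 1/steps, ...}."""
    for i in range(steps + 1):
        for j in range(steps + 1 - i):
            yield np.array([i, j, steps - i - j]) / steps


# Hypothetical: I_t(p) for three test patterns at four candidate values of p.
rng = np.random.default_rng(3)
candidates = []
for _ in range(4):
    mats = []
    for _ in range(3):
        g = rng.normal(size=(3, 3))
        mats.append(g @ g.T + 0.1 * np.eye(3))
    candidates.append(mats)
costs = [1.0, 2.0, 0.5]
u = np.array([1.0, 1.0, 0.0])

designs = list(simplex_grid(100))
# payoff[k, j] = a(v_k; p_j): the designer picks k, the maximising player picks j.
payoff = np.array([[variance_factor(v, info, costs, u) for info in candidates]
                   for v in designs])
k_star = int(np.argmin(payoff.max(axis=1)))
v_worst_case = designs[k_star]
minimax = payoff.max(axis=1).min()   # min over v of the worst-case factor
maximin = payoff.min(axis=0).max()   # max over p of the best achievable factor
print(v_worst_case, minimax, maximin)
```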

Proof of Theorem 3.

A local c-optimal design that minimises the variance of the estimator \({\sum }_{d \in [D]}n_{d} (u^{T}\hat {p}(d))\) is obtained by finding a solution \(v_{t,d}^{*}, t \in T, d = 1, \ldots , D\), to the following optimisation problem:

With additional variables \((m_{d}, d = 1, \ldots , D)\), which can be interpreted as the fractions of the budget allocated to the districts, the value of the above optimisation problem is equal to the value of the following problem:

For a given \((m_{d}, d = 1, \ldots , D)\), the optimisation in Eq. 6.7 over the variables \((v_{t,d}, t \in T, d = 1, \ldots , D)\) can be performed separately for each d. As argued in the proof of Theorem 1, using Assumption 1 for each p(d), d = 1,…, D, there exists a solution to the problem (3.2) with p(d) in place of p; we denote it by v∗(p(d)) and the corresponding value by a(v∗(p(d));p(d)). Hence the above problem reduces to

Note that Eq. 6.8 is a convex problem in the variables \((m_{d}, d=1,\ldots , D)\); indeed, the mapping \((m_{d}, d=1,\ldots , D) \mapsto {\sum }_{d \in [D]} \frac {{n_{d}^{2}}}{m_{d}} a(v^{*}(p(d));p(d))\) is lower semicontinuous and convex, and the constraint set is a compact and convex subset of \(\mathbb {R}_{+}^{D}\). Hence, there exists a solution \((m_{d}^{*}, d=1, \ldots , D)\) to the above problem. Consider the Lagrangian

By the Karush-Kuhn-Tucker conditions (Boyd and Vandenberghe, 2004, Chapter 5), there exist non-negative numbers \((\lambda _{d}^{*}, d=1,\ldots , D)\) and μ∗ such that the following conditions hold:

If μ∗ = 0, then Eq. 6.9 is violated for all d; hence μ∗ > 0 and by Eq. 6.11, \({\sum }_{d \in [D]} m^{*}_{d} = 1\). Whenever \(m_{d}^{*} >0\), we must have \(\lambda _{d}^{*} =0\), and by Eq. 6.9, \(m_{d}^{*} = n_{d}\sqrt {\mu ^{*} a(v^{*}(p(d)); p(d))}\). Using \({\sum }_{d=1}^{D} m_{d}^{*} = 1\), we can solve for μ∗ to get

This solves problem Eq. 6.8. By setting

where \(m_{d}^{*}\) is as given in Eq. 6.12, we also solve problem Eq. 6.7. By the equivalence of the problems Eq. 6.6 and Eq. 6.7, we have a solution to problem Eq. 6.6, which can be described as follows: Allocate \(C_{d} = C m_{d}^{*}\) to district d, and by Theorem 1, allocate \(w^{*}_{t}(d) = v^{*}_{t}(p(d)) C_{d}/c_{t}\) for test pattern t in district d. This completes the proof. □
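Carrying the allocation through explicitly: the proof gives \(m_d^{*} \propto n_d\sqrt{a_d}\) with \(a_d = a(v^{*}(p(d));p(d))\), so normalising to sum to 1 yields \(m_d^{*} = n_d\sqrt{a_d}/\sum_{d'} n_{d'}\sqrt{a_{d'}}\). By the Cauchy–Schwarz inequality, \((\sum_d n_d\sqrt{a_d})^2 \leq (\sum_d n_d^2 a_d/m_d)(\sum_d m_d)\), so the minimum of \(\sum_d n_d^2 a_d/m_d\) over the simplex is \((\sum_d n_d\sqrt{a_d})^2\), and the minimum variance is this quantity divided by C. The sketch below uses hypothetical weights \(n_d\) and values \(a_d\); the function names are illustrative.

```python
import numpy as np


def allocate_budget(n, a, budget):
    """Split budget C across districts with m_d* proportional to n_d * sqrt(a_d).

    n[d]: weight n_d of district d; a[d]: a(v*(p(d)); p(d)) from Theorem 1.
    """
    n = np.asarray(n, dtype=float)
    a = np.asarray(a, dtype=float)
    s = n * np.sqrt(a)
    m = s / s.sum()                   # budget fractions m_d*, summing to 1
    variance = s.sum() ** 2 / budget  # (sum_d n_d sqrt(a_d))^2 / C
    return budget * m, variance       # district budgets C_d = C m_d*


def total_variance(m, n, a, budget):
    """Variance of sum_d n_d u^T p_hat(d) when district d receives C * m_d."""
    m, n, a = (np.asarray(x, dtype=float) for x in (m, n, a))
    return float(np.sum(n ** 2 * a / (budget * m)))


# Hypothetical district weights and per-district optimal values a_d.
n = [0.5, 0.3, 0.2]
a = [4.0, 9.0, 1.0]
C = 100_000
budgets, var_opt = allocate_budget(n, a, C)
# budgets ≈ [47619.05, 42857.14, 9523.81]; var_opt = 2.1 ** 2 / C
```

Within district d, the test counts would then be \(w^{*}_t(d) = v^{*}_t(p(d))\, C_d/c_t\), as in Theorem 1.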

Proof of Theorem 4.

The proof is straightforward and follows the same arguments used in the proof of Theorem 3; we only have to use s in place of d and recognise that the Fisher information matrices may depend on s. □

Notes

Integrality of the numbers of tests can be met by randomising the allocation and constraining its expectation.
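One simple way to randomise, assumed here since the note does not specify a scheme, is independent randomised rounding: each fractional count \(w_t\) becomes \(\lfloor w_t \rfloor + 1\) with probability \(w_t - \lfloor w_t \rfloor\) and \(\lfloor w_t \rfloor\) otherwise, so that \(E[W_t] = w_t\) and the expected cost \(\sum_t c_t E[W_t]\) equals the planned spend. The realised cost can still exceed C in a given draw. The function name and the fractional design below are hypothetical.

```python
import numpy as np


def randomised_rounding(w, rng):
    """Round each w_t to floor(w_t) or floor(w_t) + 1 so that E[W_t] = w_t."""
    w = np.asarray(w, dtype=float)
    base = np.floor(w)
    frac = w - base                     # probability of rounding up
    return (base + (rng.random(w.shape) < frac)).astype(int)


rng = np.random.default_rng(5)
w_star = np.array([123.4, 56.75, 0.2])  # hypothetical fractional design
draws = np.array([randomised_rounding(w_star, rng) for _ in range(20_000)])
print(draws.mean(axis=0))               # close to w_star
```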

The source code (in R and Python) that was used to generate the results corresponding to Theorems 1 and 2 in Table 3 can be found at the following URL: https://github.com/cni-iisc/optimal-sero-survey-design

The reliability indicators for the antibody test were measured on the same lot and batch used for the Karnataka serosurvey (Babu et al. 2020), and those for the other tests are the same as those used therein.

A more precise notation would be v∗(p(s); s), but it is cumbersome; we omit the dependence of v∗ on s for notational convenience.

Such a large reduction in cost may come from pooled testing strategies.

References

Athreya, S., Babu, G.R., Iyer, A., Mohammed Minhaas, B.S., Rathod, N., Shriram, S., Sundaresan, R., Vaidhiyan, N.K. and Yasodharan, S. (2020). Optimal design of serosurveys for disease burden estimation. https://optimaldesign.readiness.in/.

Babu, G. R. et al. (2020). The burden of active infection and anti-SARS-CoV-2 IgG antibodies in the general population: Results from a statewide survey in Karnataka, India. medRxiv 2020.12.04.20243949.

Bhatia, R. (2007). Positive definite matrices. Hindustan Book Agency, India.

Boyd, S. and Vandenberghe, L. (2004). Convex optimization. Cambridge University Press, Cambridge.

Chernoff, H. (1953). Locally optimal designs for estimating parameters. Ann. Math. Stat. 24, 586–602.

Dette, H. and Holland-Letz, T. (2009). A geometric characterization of c-optimal designs for heteroscedastic regression. Ann. Stat. 37, 4088–4103.

Elfving, G. (1952). Optimum allocation in linear regression theory. Ann. Math. Stat. 23, 255–262.

Gao, L. L. and Zhou, J. (2014). New optimal design criteria for regression models with asymmetric errors. J. Stat. Plan. Inference 149, 140–151.

Glicksberg, I. L. (1952). A further generalization of the Kakutani fixed point theorem, with application to Nash equilibrium points. Proc. Am. Math. Soc. 3, 170–174.

McGree, J. M. and Eccleston, J. A. (2008). Probability-based optimal design. Aust. N. Z. J. Stat. 50, 13–28.

Poor, H.V. (1994). Introduction to Signal Detection and Estimation, 2nd edn. Springer, Berlin.

Pronzato, L. and Pázman, A. (2013). Design of experiments in nonlinear models. Lecture Notes in Statistics, vol. 212. Springer, New York.

Tijs, S. (2003). Introduction to game theory. Hindustan Book Agency, India.

Acknowledgments

SA’s research was supported in part by MATRICS. GRB’s research is funded by an Intermediate Fellowship by the Wellcome Trust DBT India Alliance (Clinical and Public Health Research Fellowship); grant number: IA/CPHI/14/1/501499. AI and NKV were supported by the IISc-Cisco Centre for Networked Intelligence (CNI), Indian Institute of Science, through a Google grant. MM and SS were supported by the CNI through a Hitachi grant. NR’s and SY’s work was supported by the CNI through a Cisco-IISc PhD Research Fellowship. RS’s work was supported by the Google Gift Grant. RS’s work was done while on sabbatical leave at Strand Life Sciences, and RS gratefully acknowledges discussions with and feedback from Vamsi Veeramachaneni and Ramesh Hariharan.

Ethics declarations

Conflict of Interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Cite this article

Athreya, S., Babu, G.R., Iyer, A. et al. COVID-19: Optimal Design of Serosurveys for Disease Burden Estimation. Sankhya B 84, 472–494 (2022). https://doi.org/10.1007/s13571-021-00267-w

DOI: https://doi.org/10.1007/s13571-021-00267-w

Keywords

- c-optimal design

- serosurvey

- COVID-19

- worst-case design

- Fisher information

- weighted estimate

- adjusted estimate