Abstract

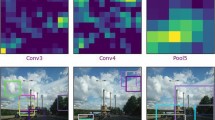

This paper presents a new visual place recognition (VPR) method based on dynamic time warping (DTW) and deep convolutional neural networks (CNNs). The method targets environments whose visual conditions vary, including changes in appearance and viewpoint. It belongs to the sequence matching category: it uses sequence-to-sequence image matching to find the best match for the current test image. Image features are extracted from a deep CNN; different layers of two selected CNNs are investigated, and the layer that performs best in combination with DTW is identified. The performance of the deep features is also compared with that of classical handcrafted features such as SIFT, HOG and LDB. Finally, our experiments compare the method with other state-of-the-art visual place recognition algorithms, in particular Holistic, Only Look Once, NetVLAD and SeqSLAM.
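
As an illustration of the sequence-to-sequence matching idea, the following is a minimal sketch of DTW over per-image feature vectors, not the authors' implementation. It assumes the CNN features have already been extracted into NumPy arrays with one row per image. The cosine distance, the sliding-window search over the reference sequence, and all function names are illustrative assumptions that the abstract does not specify.

```python
import numpy as np


def cosine_distance_matrix(query, reference):
    """Pairwise cosine distances between two sequences of feature vectors."""
    q = query / np.linalg.norm(query, axis=1, keepdims=True)
    r = reference / np.linalg.norm(reference, axis=1, keepdims=True)
    return 1.0 - q @ r.T


def dtw_cost(query, reference):
    """Accumulated cost of the classic DTW alignment of two feature sequences."""
    d = cosine_distance_matrix(query, reference)
    n, m = d.shape
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Standard step pattern: insertion, deletion, or diagonal match.
            acc[i, j] = d[i - 1, j - 1] + min(
                acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1]
            )
    return acc[n, m]


def best_matching_place(query_seq, reference_features, seq_len):
    """Slide a window over the reference sequence (an assumed search strategy)
    and return the start index with the lowest DTW cost, plus all costs."""
    n_windows = len(reference_features) - seq_len + 1
    costs = [
        dtw_cost(query_seq, reference_features[s:s + seq_len])
        for s in range(n_windows)
    ]
    return int(np.argmin(costs)), costs
```

In this sketch, `reference_features` stands for the descriptors of a previously traversed route and `query_seq` for the descriptors of the most recent test images. The start index that is returned identifies the reference subsequence that aligns best with the query.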

Acknowledgements

The Titan Xp used for this research was donated by the NVIDIA Corporation. This work is partially supported by TUBITAK under project number 117E173.

Cite this article

Alqaraleh, S., Hafez, A.H.A. & Tello, A. Dynamic Time Warping of Deep Features for Place Recognition in Visually Varying Conditions. Arab J Sci Eng 46, 3675–3689 (2021). https://doi.org/10.1007/s13369-020-05146-6

DOI: https://doi.org/10.1007/s13369-020-05146-6