Abstract

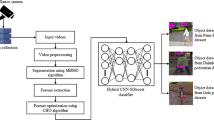

Intelligent video surveillance systems require techniques that detect objects both accurately and rapidly, and the most suitable algorithms for this task are based on convolutional neural networks. Existing approaches, however, face a wide range of difficulties when dealing with images of different sizes, high-definition images, or color images, which makes them slower and less precise. Real-time surveillance applications therefore pose the challenge of optimizing previous approaches in terms of both quality and quantity in order to obtain a system that is efficient in surveillance environments. This paper presents a novel, fast, and precise technique for advanced object detection in intelligent video surveillance systems. We apply transfer learning to adapt an efficient pre-trained network to datasets suited to our application and integrate it into the architecture of our algorithm. Specifically, we fine-tune this pre-trained model by replacing its softmax layer and running backpropagation. We then compare our results with those of previous algorithms using common evaluation metrics. The experimental results show that this technique raises the precision and accuracy of object detection in video surveillance scenes to more than \(90\%\). Furthermore, the detector handles inputs of different dimensions and runs in real time. In conclusion, our application of machine learning to intelligent video surveillance systems maximizes their efficiency in highly difficult situations.
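The abstract gives no implementation details, so the following is only a minimal, self-contained sketch of the general idea of fine-tuning by replacing the softmax layer, not the authors' network or code. A hypothetical frozen "backbone" (a fixed linear map with ReLU standing in for the pre-trained CNN) feeds a newly initialised softmax layer, which is trained by backpropagating the cross-entropy gradient with plain SGD; precision and accuracy are then computed from their usual definitions. All function names, the toy 2-D data, and the hyperparameters (`epochs`, `lr`, `seed`) are illustrative assumptions, and only the standard library is used.

```python
import math
import random


def softmax(logits):
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def frozen_backbone(x, weights):
    # Hypothetical stand-in for the pre-trained CNN: a fixed linear map
    # followed by ReLU. These weights are never modified below.
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def head_probs(feats, W, b):
    # New softmax layer: one logit per target class, then softmax.
    logits = [sum(w * f for w, f in zip(row, feats)) + bk for row, bk in zip(W, b)]
    return softmax(logits)


def fine_tune(data, backbone_w, num_classes, epochs=200, lr=0.1, seed=0):
    rng = random.Random(seed)
    # The backbone is frozen, so its features can be computed once.
    feats_all = [frozen_backbone(x, backbone_w) for x, _ in data]
    n_feat = len(feats_all[0])
    # Replace the original softmax layer with a freshly initialised one
    # sized for the new dataset's classes.
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_feat)] for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        for feats, (_, y) in zip(feats_all, data):
            probs = head_probs(feats, W, b)
            for k in range(num_classes):
                # Cross-entropy gradient w.r.t. logit k is p_k - [k == y];
                # backpropagate it to the new layer's weights and bias (SGD step).
                g = probs[k] - (1.0 if k == y else 0.0)
                for j, f in enumerate(feats):
                    W[k][j] -= lr * g * f
                b[k] -= lr * g
    return W, b


def predict(x, backbone_w, W, b):
    probs = head_probs(frozen_backbone(x, backbone_w), W, b)
    return max(range(len(probs)), key=probs.__getitem__)


def precision_and_accuracy(y_true, y_pred, positive=1):
    # Precision = TP / (TP + FP); accuracy = correct predictions / all predictions.
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    return precision, correct / len(y_true)


# Toy usage: class 1 = "object" near (2, 2), class 0 = "background" near (-2, -2).
backbone_w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
train = [((2.0, 1.5), 1), ((1.5, 2.5), 1), ((2.5, 2.0), 1),
         ((-2.0, -1.5), 0), ((-1.5, -2.5), 0), ((-2.5, -2.0), 0)]
W, b = fine_tune(train, backbone_w, num_classes=2)
test = [((2.0, 2.0), 1), ((-2.0, -2.0), 0), ((1.8, 1.6), 1), ((-1.6, -1.8), 0)]
preds = [predict(x, backbone_w, W, b) for x, _ in test]
print(precision_and_accuracy([y for _, y in test], preds))
```

In this sketch only the new layer is updated, which corresponds to using the pre-trained network as a fixed feature extractor; fuller fine-tuning would also propagate the gradient into some or all of the backbone's layers, typically with a smaller learning rate.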

Notes

We are the first researchers to test a detector on the Daimler pedestrian segmentation benchmark.

Acknowledgements

This project was funded by the European Union via PASRI/MOBIDOC [Session 2013/N38] in partnership with the industrial partner Innov4All. The research leading to these results received funding from the Ministry of Higher Education and Scientific Research of Tunisia under Grant Agreement Number LR11ES48.

About this article

Cite this article

Ahmadi, M., Ouarda, W. & Alimi, A.M. Efficient and Fast Objects Detection Technique for Intelligent Video Surveillance Using Transfer Learning and Fine-Tuning. Arab J Sci Eng 45, 1421–1433 (2020). https://doi.org/10.1007/s13369-019-03969-6

DOI: https://doi.org/10.1007/s13369-019-03969-6