Abstract

This paper presents a new approach that selects knots while estimating the B-spline regression model. Such simultaneous selection of knots and model is not trivial, but our strategy makes it possible by adding a nonconvex regularization to the usual least squares method. More specifically, motivated by a constraint that directly designates (an upper bound on) the number of knots to be used, we present an (unconstrained) regularized least squares reformulation, which is later shown to be equivalent to the motivating cardinality-constrained formulation. The obtained formulation is further modified so that we can employ a proximal gradient-type algorithm, known as GIST, for a class of nonconvex nonsmooth optimization problems. We show that, under a mild technical assumption, the algorithm reaches a local minimum of the problem. Since any local minimum of the problem is shown to satisfy the cardinality constraint, the proposed algorithm can be used to obtain a spline regression model that depends on at most a designated number of knots. Numerical experiments demonstrate how our approach performs on synthetic and real data sets.


Data Availability

Data sets generated in this paper and MATLAB codes are available from the corresponding author on reasonable request.

References

De Boor, C.: A Practical Guide to Splines. Springer, New York (1978)

O’Sullivan, F.: A statistical perspective on ill-posed inverse problems. Stat. Sci. 502–518 (1986)

Eilers, P.H., Marx, B.D.: Flexible smoothing with B-splines and penalties. Stat. Sci. 11(2), 89–121 (1996)

Goepp, V., Bouaziz, O., Nuel, G.: Spline regression with automatic knot selection. arXiv preprint arXiv:1808.01770 (2018)

Yagishita, S., Gotoh, J.: Exact penalization at d-stationary points of cardinality- or rank-constrained problem. arXiv preprint arXiv:2209.02315 (2022)

Wright, S.J., Nowak, R.D., Figueiredo, M.A.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 2479–2493 (2009)

Gong, P., Zhang, C., Lu, Z., Huang, J., Ye, J.: A general iterative shrinkage and thresholding algorithm for non-convex regularized optimization problems. In: International Conference on Machine Learning, pp. 37–45. PMLR (2013)

Gotoh, J., Takeda, A., Tono, K.: DC formulations and algorithms for sparse optimization problems. Math. Program. 169(1), 141–176 (2018)

Lu, Z., Li, X.: Sparse recovery via partial regularization: models, theory, and algorithms. Math. Oper. Res. 43(4), 1290–1316 (2018)

Bertsimas, D., Copenhaver, M.S., Mazumder, R.: The trimmed lasso: sparsity and robustness. arXiv preprint arXiv:1708.04527 (2017)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Yagishita, S., Gotoh, J.: Pursuit of the cluster structure of network lasso: recovery condition and non-convex extension. arXiv preprint arXiv:2012.07491 (2020)

Kim, S.-J., Koh, K., Boyd, S., Gorinevsky, D.: \(\ell _1\) trend filtering. SIAM Rev. 51(2), 339–360 (2009)

Tibshirani, R.J., Taylor, J.: The solution path of the generalized lasso. Ann. Stat. 39(3), 1335–1371 (2011)

Tibshirani, R.J.: Adaptive piecewise polynomial estimation via trend filtering. Ann. Stat. 42(1), 285–323 (2014)

Amir, T., Basri, R., Nadler, B.: The trimmed lasso: sparse recovery guarantees and practical optimization by the generalized soft-min penalty. SIAM J. Math. Data Sci. 3(3), 900–929 (2021)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23(4), 707–716 (1986)

Grippo, L., Sciandrone, M.: Nonmonotone globalization techniques for the Barzilai–Borwein gradient method. Comput. Optim. Appl. 23, 143–169 (2002)

Nakayama, S., Gotoh, J.: On the superiority of PGMs to PDCAs in nonsmooth nonconvex sparse regression. Optim. Lett. 15(8), 2831–2860 (2021)

Rippe, R.C., Meulman, J.J., Eilers, P.H.: Visualization of genomic changes by segmented smoothing using an L0 penalty. PLoS One 7(6), e38230 (2012)

Frommlet, F., Nuel, G.: An adaptive ridge procedure for L0 regularization. PLoS One 11(2), e0148620 (2016)

Hastie, T., Tibshirani, R.: Generalized additive models. Stat. Sci. 1(3), 297–310 (1986)

Alizadeh, F., Eckstein, J., Noyan, N., Rudolf, G.: Arrival rate approximation by nonnegative cubic splines. Oper. Res. 56(1), 140–156 (2008)

Acknowledgements

The authors would like to thank the anonymous reviewer for many insightful suggestions. J. Gotoh is supported in part by JSPS KAKENHI Grant 20H00285.



Appendices

Appendix A: Proofs of propositions

This appendix collects the proofs omitted from the main body of the paper.

A.1 Proof of Theorem 2.1

To prove Theorem 2.1, we start with the following lemma.

Lemma A.1

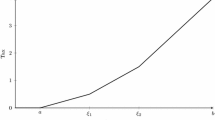

For \(p\ge 0\), let \(s=\sum _{j=-p}^{l-1}\alpha _{j+p+1}B^{(p)}_j\) with \(\varvec{\alpha }=(\alpha _1,\dots ,\alpha _{p+l})^\top \), and let \(i\in \{1,\ldots ,l-1\}\). Then the coefficient of the pth order term of s over the interval \((t_i,t_{i+1})\) is given by the ith element of the vector \(\varvec{D}^{(p+1)}_+\varvec{\alpha }\), and that over the interval \((t_{i-1},t_{i})\) is given by the ith element of the vector \(\varvec{D}^{(p+1)}_-\varvec{\alpha }\).

Proof

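We proceed by induction on p. For \(p=0\), s is piecewise constant, equal to \(\alpha _{i+1}\) on \((t_i,t_{i+1})\) and to \(\alpha _i\) on \((t_{i-1},t_{i})\), and we see that \((\varvec{D}^{(1)}_+\varvec{\alpha })_i=\alpha _{i+1}\) and \((\varvec{D}^{(1)}_-\varvec{\alpha })_i=\alpha _i\). Thus the statement holds for \(p=0\).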

Next, we assume that the statement holds for \(p-1\). We obtain from the definition of \(B^{(p)}_j\) that

for all \(x\in [t_0,t_l)\), where the third equality follows from \(B^{(q-1)}_{-q}=B^{(q-1)}_l=0\) on \([t_0,t_l)\). Using the induction hypothesis, we see that the coefficient of the \((p-1)\)th order term of s on the interval \((t_i,t_{i+1})\) is \((\varvec{D}^{(p)}_+\varvec{\Delta }^{(p+1)}\varvec{\alpha })_i=(\varvec{D}^{(p+1)}_+\varvec{\alpha })_i\), and that on the interval \((t_{i-1},t_{i})\) is \((\varvec{D}^{(p)}_-\varvec{\Delta }^{(p+1)}\varvec{\alpha })_i=(\varvec{D}^{(p+1)}_-\varvec{\alpha })_i\). This completes the proof. \(\square \)

Proof of Theorem 2.1

Note that \(B^{(p)}_{-p},\ldots ,B^{(p)}_{l-1}\), restricted to \([t_0,t_l)\), form a basis of the linear space of piecewise polynomials of order p on \([t_0,t_l)\) with breakpoints \(t_1,\ldots ,t_{l-1}\) whose derivatives coincide up to order \(p-1\) at all the breakpoints [1, pp. 97–98]. The two polynomial pieces of s adjacent to \(t_i\) therefore agree in value and in all derivatives up to order \(p-1\) at \(t_i\), so they coincide exactly when their pth order coefficients coincide. Thus the function s does not use the ith knot if and only if the coefficient of the pth order term of s over the interval \((t_i,t_{i+1})\) coincides with that over the interval \((t_{i-1},t_{i})\). Since \(\varvec{D}^{(p+1)}=\varvec{D}^{(p+1)}_+-\varvec{D}^{(p+1)}_-\), the condition \((\varvec{D}^{(p+1)}\varvec{\alpha })_{i}=0\) is equivalent to \((\varvec{D}^{(p+1)}_+\varvec{\alpha })_i=(\varvec{D}^{(p+1)}_-\varvec{\alpha })_i\). Therefore, the desired result follows from Lemma A.1. \(\square \)
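For equally spaced knots, Lemma A.1 and Theorem 2.1 can be checked numerically. The sketch below is an illustration under stated assumptions rather than the authors' MATLAB code: it assumes knots \(t_j=jh\), evaluates \(B^{(p)}_j\) with the Cox–de Boor recursion, recovers the coefficient of the pth order term of s on each interval \([t_i,t_{i+1})\) by interpolating p+1 points, and compares its jumps at the interior knots with the ordinary (p+1)th order difference of \(\varvec{\alpha }\) divided by \(p!\,h^p\). That scaled difference is only a uniform-knot stand-in for \(\varvec{D}^{(p+1)}\varvec{\alpha }\); it is not meant to reproduce the paper's general definition. The helper names are hypothetical.

```python
import math

import numpy as np


def bspline(j, p, t, x):
    """Cox-de Boor recursion: degree-p B-spline supported on [t[j], t[j+p+1])."""
    if p == 0:
        return np.where((t[j] <= x) & (x < t[j + 1]), 1.0, 0.0)
    left = (x - t[j]) / (t[j + p] - t[j]) * bspline(j, p - 1, t, x)
    right = (t[j + p + 1] - x) / (t[j + p + 1] - t[j + 1]) * bspline(j + 1, p - 1, t, x)
    return left + right


def leading_coefficients(alpha, p, h=1.0):
    """Coefficient of the p-th order term of s on [t_i, t_{i+1}), i = 0, ..., l-1.

    Assumption: equally spaced knots t_j = j*h for j = -p, ..., l+p, so that
    alpha has length p + l and alpha[k] multiplies B^{(p)}_{k-p}.
    """
    alpha = np.asarray(alpha, dtype=float)
    l = alpha.size - p
    t = h * np.arange(-p, l + p + 1)  # t[k] stores the knot t_{k-p}
    lead = np.empty(l)
    for i in range(l):
        # p + 1 interior points determine the degree-p polynomial piece exactly
        xs = i * h + h * np.arange(1, p + 2) / (p + 2)
        ys = sum(a * bspline(k, p, t, xs) for k, a in enumerate(alpha))
        # shifting x to the left endpoint does not change the leading coefficient
        lead[i] = np.polyfit(xs - i * h, ys, p)[0]
    return lead


def knot_jumps(alpha, p, h=1.0):
    """Jumps of the leading coefficient at t_1..t_{l-1} and the scaled (p+1)th difference."""
    jumps = np.diff(leading_coefficients(alpha, p, h))
    scaled_diff = np.diff(np.asarray(alpha, dtype=float), p + 1) / (math.factorial(p) * h**p)
    return jumps, scaled_diff
```

For example, `knot_jumps(np.arange(9.0) ** 3, p=3, h=0.5)` returns jumps that vanish up to rounding: coefficients that are a cubic in the index have zero fourth differences, so s is a single cubic on \([t_0,t_6]\) and uses none of the interior knots, in line with Theorem 2.1.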

A.2 Proof of Proposition 3.1

Proof

Let \(\varvec{\beta }^*\) be a local minimum of (10), that is, there exists a neighborhood \({\mathcal {N}}\) of \(\varvec{\beta }^*\) such that \(F(\varvec{\beta }^*)\le F(\varvec{\beta })\) holds for any \(\varvec{\beta }\in {\mathcal {N}}\). Noting that \(F(\varvec{\beta })=G(\varvec{\beta },g(\varvec{\beta }))\) holds for any \(\varvec{\beta }\in {\mathbb {R}}^{l-1}\), we have

for any \(\varvec{\beta }\in {\mathcal {N}}\) and \(\varvec{\beta }'\in {\mathbb {R}}^{p+1}\), which implies that \((\varvec{\beta }^*,g(\varvec{\beta }^*))\) is locally optimal for (9). Since \(\varvec{\Sigma }^{(p+1)}\) is a non-singular linear change of variables, the local optimality of \((\varvec{\beta }^*,g(\varvec{\beta }^*))\) for (9) is equivalent to that of \(\varvec{\Sigma }^{(p+1)}(\varvec{\beta }^{*\top },g(\varvec{\beta }^*)^\top )^\top \) for (8). This proves the first claim; the second claim can be proved in the same way. \(\square \)
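In the argument above, \(g(\varvec{\beta })\) plays the role of a minimizer of \(G(\varvec{\beta },\cdot )\) over the unpenalized block \(\varvec{\beta }'\). As a hedged illustration, the sketch below assumes a plain block least-squares form \(G(\varvec{\beta },\varvec{\beta }')=\frac{1}{2}\Vert \varvec{y}-\varvec{M}_1\varvec{\beta }-\varvec{M}_2\varvec{\beta }'\Vert _2^2\) with hypothetical matrices \(\varvec{M}_1,\varvec{M}_2\) and data \(\varvec{y}\); the paper's G in (9) may contain further terms. Then \(g(\varvec{\beta })\) is a least-squares solution in \(\varvec{\beta }'\), and \(G(\varvec{\beta },g(\varvec{\beta }))\le G(\varvec{\beta },\varvec{\beta }')\) holds for every \(\varvec{\beta }'\), which is the kind of inequality the local-optimality argument relies on.

```python
import numpy as np


def make_problem(n=40, m1=8, m2=4, seed=0):
    """Hypothetical data for G(b, b2) = 0.5 * ||y - M1 b - M2 b2||^2."""
    rng = np.random.default_rng(seed)
    return rng.normal(size=(n, m1)), rng.normal(size=(n, m2)), rng.normal(size=n)


def G(M1, M2, y, b, b2):
    """Joint objective in the penalized block b and the unpenalized block b2."""
    r = y - M1 @ b - M2 @ b2
    return 0.5 * float(r @ r)


def g(M1, M2, y, b):
    """Minimizer of G(b, .) for fixed b: least squares in the unpenalized block."""
    return np.linalg.lstsq(M2, y - M1 @ b, rcond=None)[0]


def F(M1, M2, y, b):
    """Reduced objective F(b) = G(b, g(b))."""
    return G(M1, M2, y, b, g(M1, M2, y, b))
```

In this form, \(F(\varvec{\beta })=\frac{1}{2}\Vert (\varvec{I}-\varvec{P})(\varvec{y}-\varvec{M}_1\varvec{\beta })\Vert _2^2\), where \(\varvec{P}\) is the orthogonal projector onto the range of \(\varvec{M}_2\), so the reduced problem is again a least-squares problem in \(\varvec{\beta }\) alone.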

A.3 Proof of Theorem 3.1

Proof

Let \(h(\varvec{\beta }):=\frac{1}{2}\big \Vert \varvec{z}_1-\varvec{L}_1\varvec{\beta }\big \Vert _2^2+\frac{c}{2}\big \Vert \varvec{z}_2-\varvec{L}_2\varvec{\beta }\big \Vert _2^2\). We see from the d-stationarity of \(\varvec{\beta }^*\) that

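Since \(T_K\) is positively homogeneous (it is the difference between the \(\ell _1\) norm and the largest-K norm), it holds that \(T_K'(\varvec{\beta }^*;-\varvec{\beta }^*)=-T_K(\varvec{\beta }^*)\). Hence we have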

from (A1), the convexity of h, and the nonnegativity of \(T_K\). This leads to

and hence we obtain

for all \(d\in \{-1,0,1\}^{l-1}\) with \(\Vert d\Vert _1=1\), that is, for every signed unit coordinate vector d. From Lemma 4 of Yagishita and Gotoh [5], we obtain \(\Vert \varvec{\beta }^*\Vert _0\le K\). Noting that

and that \(\varvec{\Sigma }^{(p+1)}(\varvec{\beta }^{*\top },g(\varvec{\beta }^*)^\top )^\top \) is a local minimum of (8) by Proposition 3.1, we obtain the desired result. \(\square \)
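The two properties of \(T_K\) used in this proof are easy to check numerically. The sketch assumes, as stated in the proof of Theorem 3.2, that \(T_K\) is the \(\ell _1\) norm minus the largest-K norm (the sum of the K largest \(|\beta _i|\)), so \(T_K(\varvec{\beta })\) is the sum of the \(l-1-K\) smallest magnitudes. It illustrates that \(T_K(\varvec{\beta })=0\) exactly when \(\Vert \varvec{\beta }\Vert _0\le K\), and that the one-sided directional derivative along \(-\varvec{\beta }\) equals \(-T_K(\varvec{\beta })\) because \(T_K(c\varvec{\beta })=cT_K(\varvec{\beta })\) for \(c\ge 0\). The function names are hypothetical.

```python
import numpy as np


def trimmed_l1(beta, K):
    """T_K(beta) = ||beta||_1 - (sum of the K largest |beta_i|)."""
    mags = np.sort(np.abs(np.asarray(beta, dtype=float)))  # ascending order
    return float(mags[: max(mags.size - K, 0)].sum())      # smallest n - K magnitudes


def directional_derivative(f, x, d, tau=1e-7):
    """One-sided difference quotient (f(x + tau*d) - f(x)) / tau with small tau > 0."""
    return (f(x + tau * d) - f(x)) / tau
```

For instance, with K = 2 and \(\varvec{\beta }=(0.5,-3,0,1.2,-0.1)^\top \), the three smallest magnitudes give \(T_2(\varvec{\beta })=0.6\), while zeroing all but two entries gives \(T_2=0\); the difference quotient along \(-\varvec{\beta }\) is then approximately \(-0.6\).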

A.4 Proof of Theorem 3.2

Proof

Let \(\Omega :=\{\varvec{\beta }\mid F(\varvec{\beta })\le F(\varvec{\beta }_0)\}\). Note that \(\{\varvec{\beta }_t\}\subset \Omega \). From the nonnegativity of \(T_K\), it holds that \(h(\varvec{\beta })\le F(\varvec{\beta }_0)\) for any \(\varvec{\beta }\in \Omega \), which leads to

Thus, we have

for any \(\varvec{\beta }\in \Omega \), where \(\Vert \cdot \Vert \) denotes the operator norm, which implies that h is Lipschitz continuous on \(\Omega \). Furthermore, \(T_K\) is also Lipschitz continuous because it is the difference between the \(\ell _1\) norm and the largest-K norm. As a result, F is Lipschitz continuous, and hence uniformly continuous, on \(\Omega \). Combining the uniform continuity and nonnegativity of F with the Lipschitz continuity of \(\nabla h\) yields \(\Vert \varvec{\beta }_{t+1}-\varvec{\beta }_t\Vert _2\rightarrow 0\), as in the proof of Lemma 4 of Wright et al. [6]. Let \(\varvec{\beta }^*\) be an accumulation point of \(\{\varvec{\beta }_t\}\) and let \(\{\varvec{\beta }_{t_i}\}\) be a subsequence that converges to \(\varvec{\beta }^*\). Since \(\{\eta _t\}\) is bounded (see, for example, Lu and Li [9, Theorem 5.1]), for any \(\varvec{d}\in {\mathbb {R}}^{l-1}\), it follows from the optimality of \(\varvec{\beta }_{t_i+1}\) that

for \(\xi >0\), where \(\eta _{\max }:=\sup _t\eta _t\). We obtain from the continuity of \(\nabla h\) and \(T_K\) that

Dividing both sides by \(\xi \) and letting \(\xi \downarrow 0\) gives

which implies that \(\varvec{\beta }^*\) is a d-stationary point of (10), that is, a local minimum of (10).

Next, let \(\varvec{\alpha }^*\) be an accumulation point of \(\big \{\varvec{\Sigma }^{(p+1)}(\varvec{\beta }_t^\top ,g(\varvec{\beta }_t)^\top )^\top \big \}\) and \(\big \{\varvec{\Sigma }^{(p+1)}(\varvec{\beta }_{t_i}^\top ,g(\varvec{\beta }_{t_i})^\top )^\top \big \}\) be a subsequence that converges to \(\varvec{\alpha }^*\). We see that

which implies that \(\varvec{D}^{(p+1)}\varvec{\alpha }^*\) is a local minimum of (10) because it is an accumulation point of \(\{\varvec{\beta }_t\}\). It follows from (A2) and the continuity of g that \(g(\varvec{D}^{(p+1)}\varvec{\alpha }^*)=\varvec{A}\varvec{\alpha }^*\) and hence we obtain from Proposition 3.1 that

is locally optimal for (8). \(\square \)
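The proof analyzes a GIST-type proximal gradient iteration with a Barzilai–Borwein trial step and a nonmonotone line search. The sketch below is a simplified illustration, not the authors' MATLAB implementation. It assumes \(F(\varvec{\beta })=h(\varvec{\beta })+\lambda T_K(\varvec{\beta })\) with a penalty parameter \(\lambda >0\) and a single least-squares term \(h(\varvec{\beta })=\frac{1}{2}\Vert \varvec{z}-\varvec{L}\varvec{\beta }\Vert _2^2\); the two-term h of the proof of Theorem 3.1 fits this form after stacking \(\varvec{z}=(\varvec{z}_1^\top ,\sqrt{c}\,\varvec{z}_2^\top )^\top \) and \(\varvec{L}=(\varvec{L}_1^\top ,\sqrt{c}\,\varvec{L}_2^\top )^\top \). The parameter names (lam, m, sigma, eta_min, eta_max, tol) are hypothetical. The proximal step uses the fact that the proximal map of a multiple of \(T_K\) keeps the K entries of largest magnitude and soft-thresholds the others.

```python
import numpy as np


def trimmed_l1(beta, K):
    """T_K(beta): l1 norm minus the sum of the K largest magnitudes."""
    mags = np.sort(np.abs(beta))
    return float(mags[: max(mags.size - K, 0)].sum())


def prox_trimmed_l1(v, thresh, K):
    """argmin_x 0.5*||x - v||^2 + thresh*T_K(x) (ties broken arbitrarily):
    keep the K entries of v with largest magnitude, soft-threshold the others."""
    x = np.sign(v) * np.maximum(np.abs(v) - thresh, 0.0)
    keep = np.argsort(-np.abs(v))[:K]
    x[keep] = v[keep]
    return x


def gist_trimmed_lasso(L, z, K, lam, max_iter=1000, m=5, sigma=1e-5,
                       eta_min=1e-8, eta_max=1e8, tol=1e-9):
    """Sketch of a GIST-type method for F(b) = 0.5*||z - L b||^2 + lam*T_K(b)."""

    def objective(b):
        r = z - L @ b
        return 0.5 * float(r @ r) + lam * trimmed_l1(b, K)

    def grad_h(b):
        return L.T @ (L @ b - z)

    beta = np.zeros(L.shape[1])
    grad = grad_h(beta)
    eta = 1.0                        # the step size is 1/eta
    history = [objective(beta)]
    for _ in range(max_iter):
        ref = max(history[-m:])      # nonmonotone reference: max of the last m values
        while True:
            cand = prox_trimmed_l1(beta - grad / eta, lam / eta, K)
            step = cand - beta
            decrease = 0.5 * sigma * eta * float(step @ step)
            if objective(cand) <= ref - decrease or eta >= eta_max:
                break
            eta *= 2.0               # halve the step size and retry
        new_grad = grad_h(cand)
        s, y = step, new_grad - grad
        beta, grad = cand, new_grad
        history.append(objective(beta))
        if np.linalg.norm(s) <= tol * max(1.0, np.linalg.norm(beta)):
            break
        # Barzilai-Borwein curvature estimate gives the next trial eta
        eta = min(max(float(s @ y) / float(s @ s), eta_min), eta_max)
    return beta
```

In this sketch, an entry outside the K largest is set exactly to zero whenever its magnitude after the gradient step is at most lam/eta, which is how iterates with at most K nonzero entries arise; when this is guaranteed at the limit is the subject of Theorem 3.1.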

Appendix B: Construction of the expanded difference matrix and its inverse

In this appendix, we give explicit constructions of \(\hat{\varvec{D}}^{(p+1)}\) and \(\varvec{\Sigma }^{(p+1)}\). Let us define

by expanding \(\varvec{D}^{(1)}\) with \(s_1\ne 0\) and

by expanding \(\varvec{\Delta }^{(q+1)}\) with \(s_{q+1}\ne 0\) for \(1\le q\le p\). Define recursively

Then there exists a \((p+1)\times (l+p)\) matrix \(\varvec{A}\) such that

By construction, \(\hat{\varvec{D}}^{(1)}\) and \(\hat{\varvec{\Delta }}^{(q+1)}\) are non-singular, and hence \(\hat{\varvec{D}}^{(p+1)}\) is also non-singular. It is not hard to see that

and

for \(1\le q\le p\), where \(\varvec{U}\) is the upper triangular matrix of size \((l-1)\times (l-1)\) such that all non-zero elements equal 1 and

As a result, the inverse \(\varvec{\Sigma }^{(p+1)}\) of \(\hat{\varvec{D}}^{(p+1)}\) can be computed as
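For the lowest order \(p=0\), the construction can be checked by hand. The sketch below is a hypothetical instance, not necessarily the paper's choice: it takes \(\varvec{D}^{(1)}\) to be the \((l-1)\times l\) matrix with \((\varvec{D}^{(1)}\varvec{\alpha })_i=\alpha _{i+1}-\alpha _i\), which is what \(\varvec{D}^{(1)}=\varvec{D}^{(1)}_+-\varvec{D}^{(1)}_-\) gives in the base case of Lemma A.1, and it assumes that the added row is \(s_1\) times the last unit vector, appended at the bottom. Under these assumptions, the inverse has \(-\varvec{U}\) in its upper-left \((l-1)\times (l-1)\) block, every entry of its last column equal to \(1/s_1\), and zeros elsewhere, where \(\varvec{U}\) is the upper triangular all-ones matrix. The function names are illustrative only.

```python
import numpy as np


def expanded_first_difference(l, s1):
    """Hypothetical expansion of D^(1): rows alpha_{i+1} - alpha_i, plus a last row s1 * e_l."""
    if s1 == 0:
        raise ValueError("s1 must be nonzero for the expanded matrix to be non-singular")
    D = np.zeros((l, l))
    idx = np.arange(l - 1)
    D[idx, idx] = -1.0
    D[idx, idx + 1] = 1.0
    D[l - 1, l - 1] = s1
    return D


def expanded_inverse(l, s1):
    """Closed-form inverse [[-U, (1/s1) 1], [0, 1/s1]] with U upper triangular all-ones."""
    Sigma = np.zeros((l, l))
    Sigma[: l - 1, : l - 1] = -np.triu(np.ones((l - 1, l - 1)))
    Sigma[:, l - 1] = 1.0 / s1  # whole last column, including the bottom-right entry
    return Sigma
```

In this instance, applying the inverse to a vector only requires a reversed cumulative sum, so the matrix never needs to be formed explicitly.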

About this article

Cite this article

Yagishita, S., Gotoh, Jy. Exact penalty method for knot selection of B-spline regression. Japan J. Indust. Appl. Math. 41, 1033–1059 (2024). https://doi.org/10.1007/s13160-023-00631-5


DOI: https://doi.org/10.1007/s13160-023-00631-5

Keywords

- B-spline regression

- Automatic knot selection

- Sparse optimization

- Cardinality constraint

- Exact penalty

- Nonconvex nonsmooth optimization