Abstract

Educators have increasingly adopted formalized approaches for teaching literacy skills in early childhood education. In line with an emergent critique of this approach, the present study investigated the design and effectiveness of a literacy intervention that blended Gagné’s nine events of instructional design with storytelling. Three classes in a public preschool in Indonesia participated in an experimental study involving 45 children, aged 5–6 years. Across 3 weeks, one experimental condition received storytelling activities and a second experimental condition received digital storytelling activities. The control condition received regular literacy classroom activities. Before, and after, the 3-week storytelling intervention, measures of literacy and digital literacy skills were administered to all groups. In the digital storytelling condition, children’s literacy skills increased significantly compared to children in the control condition. Other exploratory data analyses suggested that both types of storytelling activities enhanced digital literacy skills. The findings need to be replicated with an extended series of storytelling activities that involve larger groups of participants.

Résumé

Les éducateurs adoptent de plus en plus des approches formelles d’enseignement des habiletés en alphabétisation en éducation de la petite enfance. Conformément à une critique émergente de cette approche, la présente étude a examiné la conception et l’efficacité d’une intervention en alphabétisation combinant les neuf événements du modèle d’enseignement de Gagné avec la narration (numérique) d’histoires. Trois classes d’une maternelle publique en Indonésie ont participé à une étude expérimentale impliquant 45 enfants, âgés de 5 à 6 ans. La condition témoin était constituée des activités de classe habituelles. Pendant trois semaines, une condition expérimentale consistait à raconter des histoires, une autre condition expérimentale consistait à raconter des histoires numériques. Avant et après les trois semaines d’intervention en alphabétisation par narration d’histoires, des mesures de compétences en alphabétisation numérique ont été effectuées. Dans la condition de narration numérique, les capacités en alphabétisation des enfants ont augmenté de façon significative comparativement à celles des enfants dans la condition témoin. D’autres analyses exploratoires des données suggèrent que les deux types d’activités de narration ont renforcé la compétence en alphabétisation numérique plus que les activités habituelles d’alphabétisation. Les résultats doivent être reproduits avec une série élargie d’activités de narration auprès de plus grands groupes de participants.

Resumen

Cada día más, los educadores adoptan métodos formales en la enseñanza de lectura y escritura en la educación temprana. De acuerdo con una reciente crítica de este método, este estudio investiga el diseño y eficacia de una intervención en lectoescritura que combinó los nueve eventos de diseño instructivo de Gagné, con la narración de historias (digitales). Tres clases de un jardín infantil público en Indonesia participaron en un estudio experimental que incluyó a 45 niños entre 5 y 6 años de edad. El grupo de control recibió actividades curriculares en forma regular. Durante tres semanas, un grupo experimental recibió actividades de narración de historias y un segundo grupo experimental recibió actividades de narración de historias en formato digital. Antes y después de la intervención de lectoescritura por tres semanas, se midieron las habilidades digitales de lectoescritura. En la condición de narración de historias en formato digital, las habilidades de lectoescritura de los niños aumentaron en forma significativa en comparación con los niños del grupo control. Otros análisis exploratorios de los datos sugieren que ambos tipos de actividades de narración de historias mejoraron las habilidades de lectoescritura digital más que las actividades de lectoescritura regular. Los hallazgos deben ser replicados con una serie extensa de actividades de narración de historias que involucren grupos con mayor número de participantes.

Introduction

Success in school is dependent, to a great extent, upon the development of skills in reading and writing gained during the early childhood years (NAEYC 1998). The development of literacy skills should therefore be nurtured from an early age. Accordingly, an increasing number of parents and other educators now hold the opinion that a child should be able to learn to read and write in kindergarten. In response, many kindergartens have adopted formalized methods of instruction for teaching literacy. However, this approach has left little room for young children’s natural and playful way of learning (Bassok et al. 2016). This has led to a call for a design approach that supports literacy development and greater child engagement in the learning processes. One response to this call is a focus on storytelling. Storytelling is a natural way of communicating with young children.

Digital technology has also become a part of most children’s everyday experiences. By the time children reach kindergarten, they are likely to have had countless encounters with various digital forms of communication. Therefore, for today’s young children, to be literate should also include developing a range of digital skills and knowledge. Digital literacy development has received far less attention than traditional forms of literacy. The increasing integration of digital forms of reading and writing into everyday life suggests that the role of technology for (digital) literacy development of kindergarten children is also a timely and important topic.

The main aim of the present study is to describe a series of activities to support literacy and digital literacy skills in which storytelling is infused within an instructional design framework. In addition, a set of literacy and digital literacy measures were developed to assess the effects of these activities. To our knowledge, the effort to create a series of activities that blend a more formal type of instruction with storytelling and to assess the effects on digital literacy skills in early childhood education presents a relatively novel approach.

Storytelling and Digital Storytelling

Storytelling is a process in which someone (the storyteller) uses vocalization, narrative structure, and mental imagery to communicate with an audience (the listener) (Peck 1989). The listener also uses mental imagery and communicates back to the storyteller primarily through body language and facial expressions (Roney 1996). This interaction is repeated in multiple communication cycles during any storytelling event. Storytelling thus becomes an act of mutual meaning-making and learning by all participants (Katuscáková and Katuscák 2013). Storytelling supports literacy development because it allows children to also hear models about how language can be used. Storytelling can also promote writing skills by encouraging children to create their own stories, modify stories that they have heard, and even write plays based on familiar tales (Cassell 2004; Nicolopoulou et al. 2015).

For a long time, storytelling was employed spontaneously rather than as a deliberate and planned instructional approach in early childhood education (Coskie et al. 2010; Phillips 2013). The use of storytelling as an engaging and meaningful teaching approach in early literacy education began with the work of Kieran Egan (1985, 1986). Egan proposed that teaching is best shaped in story formats because storytelling stimulates children’s imagination which is a very powerful learning tool. Agosto (2016) noted that storytelling can nurture cognitive engagement, critical thinking, and story sequencing. Her research also showed that follow-up activities such as discussion, retelling, and topic-related activities (written, drama, oral) can further enhance literacy development.

Storytelling has always been at the core of human activity (Lambert 2010). Individuals and societies have continuously explored new ways to make stories compelling, moving, empowering, and everlasting. Recently, this has occurred by integrating information and communication technologies (ICT), yielding a form of digital storytelling (Brígido-Corachán and Gregori-Signes 2014). Generally, digital storytelling revolves around presenting short, personal narratives (Meadows 2003) that combine images with text, narration, voice, and music (Robin 2008). In other words, digital storytelling offers children story content in digital, technology-based formats.

Porter (2005) explained digital storytelling as integrating the ancient art of oral storytelling with an array of technical tools to present personal tales with images, graphics, music, and sound, including the storyteller’s voice. This definition resembles that of Robin (2008) and Psomos and Kordaki (2015), who characterized digital storytelling as traditional storytelling with multimedia. Similarly, Kearney et al. (2011) describe digital storytelling as a short narrative captured in video format. Several studies with older children have shown that digital storytelling can generate children’s interest and learning motivation and facilitate their understanding of complex subject matter (Robin 2008; Sadik 2008).

Empirical studies on digital storytelling in early childhood education have concentrated on the teacher (e.g., Kildan and Incikabi 2015; Yuksel-Arslan et al. 2016). Effects of digital storytelling on children have, to our knowledge, been investigated for math or ICT, but not literacy (e.g., Preradovic et al. 2016). The relationship of digital storytelling to children’s overall literacy development in early childhood education is therefore yet to be explored.

Measuring Literacy and Digital Literacy Development

Literacy is the use of social practices of creating and interpreting meaning through text (Kern 2000). A person who focuses on text comprehension is reading to learn, and while doing so becomes literate. To be able to read to learn, children should learn to read (Robinson et al. 2013). There are considerable debates about when children should develop their reading skills and how the acquisition of literacy should manifest itself in the early years (Fletcher-Flinn 2015).

In early literacy development, a distinction is often made between code- and meaning-related skills (Lonigan et al. 2011). Code-related skills include print knowledge, alphabet knowledge, and phonological awareness, among other things (Owodally 2015). Meaning-related skills include vocabulary, grammatical ability, and oral narrative ability (Westerveld et al. 2015). Knowledge about causes, correlates, and predictors of children’s reading successes and failures in primary and secondary education has expanded greatly in recent decades. This knowledge has led to the development of standardized and nonstandardized methods of measuring literacy development in early childhood (Lonigan et al. 2011).

A common form of nonstandardized methods for measuring literacy development revolves around teacher observations and related assessments such as checklists, rating scales, and portfolios of children’s products. Since the procedures are not standardized, the demonstration of children’s skills may not be uniformly measured across all children. A common critique of nonstandardized methods is that teacher observations are often informal rather than well-structured, resulting in measurement of skills that only reflect the teacher’s judgment of the child (Brown and Rolfe 2005).

A popular standardized method for literacy development assessment is the dynamic indicators of basic early literacy skills test (DIBELS; Kamii and Manning 2005). The DIBELS mainly focuses on code-related skills. It consists of the following subtests: initial sounds fluency, letter naming fluency, phonemic segmentation fluency, nonsense word fluency, and word use fluency. The suitability of the DIBELS, and other standardized instruments, for measurement of early childhood literacy development, has generated considerable debate (Myers et al. 1996; National Research Council 2000). Concerns have been voiced about time required for such assessment, distractions that interfere with accurate measurement of capabilities, and young children’s limited test-taking abilities to assess competence. In view of these concerns, this study has adapted content from standardized tests to align with the directions of the local curriculum. The measurement of skills in this study focused on just three key skills of literacy development: alphabet recognition, phonological awareness, and print awareness. Meaningful items were created for authenticity of the child assessments.

The increasing use of technology in children’s everyday life has stimulated the emergence of digital literacy, which has also been referred to as computer, technology, information, media, and communication literacies (Martin and Grudziecki 2006). All these terms share the view that they involve technology other than text. Throughout this paper, we use the term digital literacy to refer to the use of social practices of creating and interpreting meaning through texts with technology (e.g., Kern 2000). According to Ng (2015), a digitally literate person is a competent user of three dimensions of digital technology: technical, cognitive, and socio-emotional. The dimension of technical skills is the most developed area of digital skills measurement. It includes knowledge of devices and operating skills with a focus on technical usage of computers (Ba et al. 2002), online abilities (Sonck et al. 2011), exploring tablets (Marsh 2016), and operational use of digital devices (Eshet-Alkalai and Chajut 2009; Eshet-Alkali and Amichai-Hamburger 2004; Eshet 2012). However, there is little research related to the assessment of cognitive or socio-emotional dimensions of digital literacy.

The present study considers cognitive and socio-emotional skills dimensions because they are important prerequisites of digital literacy. This choice is also in line with a definition of digital literacy in early childhood education that has emphasized the use of digital and non-digital practices in using different digital technologies (Burnett et al. 2014; Sefton-Green et al. 2016). The cognitive skills dimension of digital literacy includes critical thinking and multimodality, which implies that communication and representation are about more than language alone. The socio-emotional skills dimension includes communicative and social skills. We did not assess technical skills development in this study because available tests are too complex for young children, and we wanted to stay away from highly specific facets of technical skills and investigate more general cognitive and socio-emotional dimensions.

Measurement for both literacy and digital literacy development in the present study has taken into account two critical considerations: (1) a focus on content and determining what should be measured, in order to be able to assess the effectiveness of designed activities; (2) establishing a procedure which was not time-limited in order not to put children in stressful situations.

The Current Study

The main research question addressed in this research is: Do oral or digital storytelling activities increase literacy and digital literacy development? In order to answer this question, an experimental design was adopted that included three different conditions: a control condition with usual literacy practices implemented in one classroom and two experimental conditions implemented in two other classrooms. One condition focused on literacy through oral storytelling, and the other condition focused on digital literacy using technology-based storytelling. No differences in children’s outcomes for literacy were expected between the two experimental conditions.

Method

Participants

The study was conducted in a public preschool in Indonesia. The study involved three classrooms that accommodated 45 children (25 girls and 20 boys), with an average age of 5.39 years (SD = 0.41). All classrooms used the same curriculum.

Research Design

The research design was quasi-experimental with three conditions. Classrooms were randomly assigned to conditions: control (C); oral storytelling (S); and digital storytelling (DS). In the control condition (C), the children received their regular classroom literacy enhancement activities. This condition yielded baseline data and information on maturation of literacy and digital literacy development across the time of the study. In one experimental condition, the children received oral storytelling activities (S), in which a story was read aloud and presented with some visual clues. In the other experimental condition, the children received digital storytelling activities (DS) and the children watched and listened to a recorded story.

Instructional Materials

There were three storytelling sessions for each of the experimental conditions. The theme for the three storytelling sessions was “Me.” This is a common theme for classroom activities at the beginning of the school year. The sessions focused on “My Name,” “My Birthday,” and “My Hobby.” The content of the sessions had an identical story line across the experimental conditions. The didactic approach in each activity session followed Gagné’s events of instructional design (Smith and Ragan 2005). For example, the theme of the first activity was “My Name” (see Table 1). The main objective was to enhance the children’s understanding of their own identity as presented in oral and printed form. More specifically, the activities supported the development of the recognition of letters and the sounds associated with letters in children’s full name and nickname. The story line revolves around a boy who likes his name, recognizes his name written on his belongings, and also recognizes the sounds of his name.

Assessment Instruments

Literacy Measures

The literacy measures assessed three core skills of early reading and writing, namely alphabet knowledge, phonological awareness, and print awareness. Alphabet knowledge and phonological awareness play different roles in literacy acquisition and development. These two sets of knowledge are both necessary for the acquisition of literacy (Muter 1994). The same literacy measures were used before and after the children had participated in the three intervention activities.

Alphabet knowledge is one important aspect in the acquisition of literacy during the early childhood years (Foulin 2005; Wood and McLemore 2001). It is defined as the ability to name, distinguish shapes, write, and identify the sounds of the alphabet (Piasta and Wagner 2010).

Phonological awareness is another important skill linked to the acquisition and development of reading skills in school (McLachlan and Arrow 2010). It is an awareness of sounds in spoken words that is revealed by abilities such as rhyming, matching initial consonants, and counting the number of phonemes in spoken words. Phonological awareness has been measured, and consequently defined, by many different tasks. One of them is isolating single sounds from words (Murray et al. 1996).

Print awareness refers to children’s ability to recognize the function and form of print and the relationship between oral and written language (Pullen and Justice 2003). Important reading prerequisites include children’s ability to recognize environmentally embedded and contextualized print, to understand the form and function of print, and to perceive the relationship between speech and print (Kassow 2006). In their study, Justice and Ezell (2001) highlight the importance of measuring this ability by using storybooks in order to provide a meaningful context for the words.

Literacy Assessment

This involved assessment of five skills: (1) identify the initial sound of words (phonological awareness), (2) know your own name (print awareness), (3) recognize letters (alphabet knowledge), (4) recognize everyday words (print awareness), and (5) recognize names in written form (print awareness). The child’s skill level was measured by asking increasingly more complex questions for each feature. Assessment of any of the literacy skills was stopped if a child failed to correctly answer a question. The children’s responses were scored with a rubric using a 4-point scale for each task (See “Appendix A”). Before beginning each part of the literacy assessment, the child was given two practice items.

For example, the process for the literacy assessment item of “identify the initial sound of words” involved stimulus material consisting of 12 words in everyday use, and each word consisted of two to three phonemes. After hearing the word, the child was asked to say the first sound in the word. Because many children would identify the name of the letter rather than the sound, they were given feedback on their responses to the practice items to make sure that they understood the task. If a child failed to identify the initial sound of the first word given, he/she would receive no points for that part of the assessment. If a child answered the first item correctly, a new word was then presented. Reliability analyses using Cronbach’s alpha showed that there were satisfactory results for the overall literacy pretest (α = 0.63) and posttest (α = 0.79).
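As an illustration of the reliability statistic reported above, the following is a minimal sketch of how Cronbach's alpha is computed from an item-score matrix. The scores are hypothetical (item-level data are not reported in this section), and the function name `cronbach_alpha` is an illustrative choice rather than the authors' analysis code.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows (one row per child, one column per item)."""
    k = len(scores[0])                      # number of items
    items = list(zip(*scores))              # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical rubric scores (1-4) for six children on five literacy tasks.
hypothetical_scores = [
    [1, 2, 1, 2, 1],
    [2, 2, 2, 3, 2],
    [1, 1, 1, 2, 1],
    [3, 3, 2, 3, 3],
    [2, 3, 2, 2, 2],
    [4, 3, 3, 4, 3],
]

print(f"alpha = {cronbach_alpha(hypothetical_scores):.2f}")
```

Alpha approaches 1 when the items rise and fall together across children and drops toward 0 when the items are unrelated.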

Digital Literacy Measures

Measurement involved an assessment of cognitive and socio-emotional skills that are considered prerequisites of digital literacy. Since this procedure was supplemental to, and independent of, the general classroom literacy assessment, data on these measures were gathered from five randomly chosen participants from each classroom (i.e., five children per condition). The average age of these 15 children (eight girls and seven boys) was 5.47 years (SD = 0.33) which was similar to the average age for the whole sample.

Cognitive skills items assessed early expressions of multimodality and critical thinking, abstract and reflective thinking (Kazakoff 2015; Wenner et al. 2008). The measure linked the items to storytelling and operationalized these items as recall of a past event, planning for a new event and picture reading. For instance, recall of a past experience is important for story comprehension. It hinges on reflection about the context of an event (who, where, and when) and on the ability to explain an unfolding story (how and why). The ability to “read” a picture is an important skill in digital literacy. This facet in the digital literacy measure was the counterpart of the purely text-oriented items in the literacy assessment.

Socio-emotional skills were assessed by communication about the self with others through digital platforms (Ng 2015). An important aspect of this skill in early childhood is having a sense of self-identity (Marsh et al. 2005). The development of self-identity includes knowing one’s name, age, and gender. In addition, it concerns how the child understands his/her place in the world (Raburu 2015).

The digital literacy measure consisted of a rubric with the following items: (1) recall a past event (cognitive skills); (2) plan an event (cognitive skills); (3) read a picture (multimodality—cognitive skills); (4) understand one’s own identity (socio-emotional skills); and (5) engage in a conversation (socio-emotional skills).

Digital Literacy Assessment

Administration of the digital literacy measures followed the same procedure as for the literacy test (see “Appendix B” for all items and the scoring rubric). For the measure “recall a past event,” the items were focused on children’s ability to identify details from a single, personal experience. Each child was asked to recall a recent holiday experience. If a child could not recall a single experience, the score for this test item would be zero points. If a child recalled an event, he/she was asked to provide details about that experience. The allotted points would then depend on the number of details that were given (i.e., what, where, when, with whom, and how). If a child started to mention another event, the experimenter would redirect the discussion to the original event. If the child could not do this or could not give details, the questions for this item ended. Reliability analyses using Cronbach’s alpha showed that there were satisfactory results for the digital literacy pretest (α = 0.65) and posttest (α = 0.87).

Procedure

The intervention study consisted of three phases: pretest (Week 1), implementation (Weeks 2–4), and posttest (Week 5). In the pretest, a group of three to five children gathered in the reading room of the school where the experimenter and a research assistant were present. The experimenter would then engage with each child, in turn, for the assessments. Administration of the literacy assessment took 10–15 min for each child, while the digital literacy assessment took 25–35 min for each child. During the assessment process for each child, the other children in the room engaged in playful activities led by the research assistant.

During the implementation phase, in the control condition, the children participated in the regular literacy-focused classroom activities led by the teacher and with the experimenter present. In the experimental conditions (storytelling and digital storytelling), the activities were given by the experimenter while the regular classroom teacher was present to assist with the children. The overall structure of these activities was the same in both conditions (see also Table 1). First, there was storytelling. Next, there was a whole-class discussion about the story, and finally, there were follow-up activities. Each storytelling session began with the experimenter explaining the rules for the session and preparing the children for the story by informing them about the title, characters, and main idea.

In the oral storytelling condition, the experimenter then read the story while presenting some visual clues to the children. In the digital storytelling condition, the story was prerecorded, included texts, pictures, voices, and sounds, and was presented digitally using a projection device. The story in the first storytelling session was about a boy who likes his name. It was followed by a whole-class discussion about the story and then by two story-related activities. One of these was an individual project with a focus on children’s creativity; for example, children designed a book label using the letters of their own name. The second follow-up activity was a group project, such as dramatic play involving an exchange of the children’s name cards.

The posttest was administered in the week following the three activity sessions with a similar procedure to the pretest sessions.

Data Analysis

Eight of the 45 children participating in the intervention study did not complete both the literacy pretest and posttest because of absence (five children) or because they were special needs students (three children). Only complete data for the 37 children who completed all assessment tasks were used in the final analyses.

Because the distribution of scores on some measures in the pretest and posttest violated measurement assumptions for normality and homogeneity of variance, we report the findings using nonparametric tests (i.e., Kruskal–Wallis H test). Significant findings were followed by post hoc tests (i.e., Mann–Whitney U test); two-tailed tests were used with alpha set at 0.017 (0.05/3), using Bonferroni corrections for multiple tests. For effect size, we report the r-statistic (Field 2005). This statistic is qualified as small, medium, and large effects for the values r = 0.10, r = 0.30, and r = 0.50, respectively. For change in assessment from pre to post, key assumptions for gain scores (i.e., posttest score minus the pretest score) were met to assess change using analysis of variance (ANOVA), followed by Tukey HSD post hoc analysis after significance. For these effect sizes, we report the d-statistic (Cohen 1988). The findings are classified as follows: small for d = 0.20, medium for d = 0.50, and large for d = 0.80, respectively.
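The decision rules in this paragraph can be sketched in a few lines. The helper names below are hypothetical, and treating each benchmark as the lower bound of a band (so that any value at or above 0.50 counts as a large r) is an assumption made for illustration; the text only names the benchmark values themselves.

```python
R_BENCHMARKS = (0.10, 0.30, 0.50)   # small, medium, large for r
D_BENCHMARKS = (0.20, 0.50, 0.80)   # small, medium, large for d


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison alpha after a Bonferroni correction."""
    return alpha / n_comparisons


def gain_scores(pretest, posttest):
    """Gain score per child: posttest score minus pretest score."""
    return [post - pre for pre, post in zip(pretest, posttest)]


def label_effect(value, benchmarks):
    """Label an effect size, treating benchmarks as lower bounds (an assumption)."""
    small, medium, large = benchmarks
    size = abs(value)
    if size >= large:
        return "large"
    if size >= medium:
        return "medium"
    if size >= small:
        return "small"
    return "below small"


print(round(bonferroni_alpha(0.05, 3), 3))   # three pairwise comparisons
print(label_effect(0.56, R_BENCHMARKS))
print(label_effect(0.97, D_BENCHMARKS))
```

With three conditions there are three pairwise comparisons, which is where the divisor of 3 comes from.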

Results

Development of Literacy Skills

Table 2 presents the mean item scores and standard deviations for the literacy and digital literacy assessments for each group (control condition; oral storytelling condition; and digital storytelling conditions). These mean scores showed that the overall scores of the participants in all three conditions at pretest and posttest were below the mid-scale value of 2. In addition, there were moderate levels of variance, as indicated by the standard deviations.

The scores on the literacy pretest did not differ between conditions, H (36) = 0.648, p = 0.723. In contrast, there was a statistically significant difference on the literacy posttest between conditions, H (36) = 8.26, p = 0.016. Post hoc tests showed that there was a statistically significant and large effect for the comparison between the control condition and digital storytelling condition, U (25) = 128.50, z = 2.82, p = 0.005, r = 0.56. There was no difference between the control and storytelling condition or between the storytelling and digital storytelling condition, respectively, U (25) = 81.50, z = 0.193, p = 0.847; U (25) = 90.50, z = 0.485, p = 0.485.
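The statistics in this paragraph can be cross-checked with a short sketch. It rests on two assumptions that the text does not state explicitly: that the Kruskal–Wallis p values come from the chi-square approximation with 2 degrees of freedom (three groups), and that r was obtained with the conventional conversion r = z / √N, with N read from the value in parentheses after U. The function names are illustrative.

```python
import math


def kw_p_three_groups(h):
    """Chi-square upper-tail p for H with df = 2 (three groups): exp(-H / 2)."""
    return math.exp(-h / 2)


def effect_size_r(z, n):
    """Effect size r from a z statistic and the number of observations compared."""
    return z / math.sqrt(n)


print(round(kw_p_three_groups(0.648), 3))   # literacy pretest
print(round(kw_p_three_groups(8.26), 3))    # literacy posttest
print(round(effect_size_r(2.82, 25), 2))    # control vs. digital storytelling
```

Under these assumptions the sketch gives 0.723, 0.016, and 0.56, matching the values reported above.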

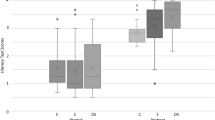

The boxplots for the literacy posttest, shown in Fig. 1, provide a visual presentation of differences in outcomes by condition. The shaded areas in each box represent the mid 50% of the scores. The horizontal line in the box is the median score in each condition. The top (or bottom) 25% of scores are shown in the distance between the highest (or lowest) horizontal line and the highest (or lowest) edge of the shaded box. The shaded boxes of the control and digital storytelling condition do not overlap (indicating significant difference between these two groups as identified by the statistical tests). In contrast, there is some overlap between the oral storytelling and digital storytelling conditions. The median scores of the two experimental conditions are also similar.

Data analyses for the learning gain (Lgain) scores showed a mean overall improvement for literacy skills of 0.21 (SD = 0.21). For the control, storytelling, and digital storytelling condition, the mean Lgain score was, respectively, for each condition, M = 0.03 (SD = 0.08), M = 0.20 (SD = 0.19), and M = 0.38 (SD = 0.18). The ANOVA for Lgain scores showed that there was a statistically significant difference between conditions, F (2, 33) = 14.74, p < 0.001. Post hoc comparisons indicated that the control had a lower Lgain score than the storytelling condition, p = 0.014, d = 1.17, and also a lower Lgain score than the digital storytelling condition, p < 0.001, d = 2.53. For both comparisons, a large effect size was obtained. In addition, the analysis showed that there was a significantly higher Lgain score in the digital storytelling compared to the storytelling condition, p = 0.008, d = 0.97, and with a large effect size.
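The d values above can be approximated from the reported means and standard deviations. The sketch below assumes equal group sizes, so that the pooled standard deviation is the root mean square of the two group standard deviations; the section does not state which pooling formula was used, and `cohens_d` is an illustrative name.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d with an equal-n pooled standard deviation (an assumption)."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_b - mean_a) / pooled_sd


# Reported Lgain summary statistics as (M, SD) per condition.
control = (0.03, 0.08)
storytelling = (0.20, 0.19)
digital = (0.38, 0.18)

print(round(cohens_d(*control, *storytelling), 2))   # control vs. storytelling
print(round(cohens_d(*control, *digital), 2))        # control vs. digital storytelling
print(round(cohens_d(*storytelling, *digital), 2))   # storytelling vs. digital storytelling
```

Under this assumption the sketch gives 1.17, 2.51, and 0.97. The first and third match the reported values; the second is close to the reported 2.53, a gap that may reflect rounding of the summary statistics or unequal group sizes.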

Development of Digital Literacy Skills

The pretest results showed that all participants (n = 15) in the three conditions (control, oral storytelling, digital storytelling) had comparable digital skills at the start of the activities, M = 1.88 (SD = 0.23), M = 1.92 (SD = 0.18), and M = 1.88 (SD = 0.23). After the activities, the posttest results were M = 2.04 (SD = 0.22), M = 2.80 (SD = 0.28), and M = 3.00 (SD = 0.42). The results also showed that the median score and the distribution of the digital literacy posttest scores in the control condition did not overlap with those of the experimental conditions. In contrast, there was an overlap of the distribution of the scores between the two experimental conditions and also the median scores were close to each other.

The Kruskal–Wallis test showed that there was no statistically significant difference between conditions on the digital literacy pretest, H (15) = 0.153, p = 0.926. In contrast, a statistically significant difference was found on the posttest, H (15) = 9.40, p = 0.009. Post hoc tests showed a statistically significant and large difference for the comparison between the control and storytelling conditions, U (10) = 24.50, z = 2.58, p = 0.008, r = 0.82, and for the comparison between the control and digital storytelling conditions, U (10) = 25, z = 2.65, p = 0.008, r = 0.84. The two experimental conditions did not differ significantly, U (10) = 15.50, z = 0.64, p = 0.523.
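The p values and effect sizes in this paragraph can be checked by hand. The sketch below assumes that the Kruskal–Wallis H statistic was referred to a chi-square distribution with k − 1 = 2 degrees of freedom (three conditions), for which the upper-tail probability is exactly exp(−H/2). It also assumes that r was computed as z divided by the square root of the number of children in the pairwise comparison (taken here to be 10). The function names are illustrative.

```python
import math


def kruskal_p_three_groups(h):
    """Upper-tail chi-square probability for df = 2, which equals exp(-H / 2)."""
    return math.exp(-h / 2)


def effect_size_r(z, n):
    """Effect size r = z / sqrt(N) for a rank-based pairwise comparison."""
    return z / math.sqrt(n)


# H and z values as reported in the text
p_pretest = kruskal_p_three_groups(0.153)
p_posttest = kruskal_p_three_groups(9.40)
r_control_story = effect_size_r(2.58, 10)
r_control_digital = effect_size_r(2.65, 10)

print(round(p_pretest, 3), round(p_posttest, 3))
print(round(r_control_story, 2), round(r_control_digital, 2))
```

Under these assumptions the pretest probability comes out near 0.926 and the posttest probability near 0.009, and the two effect sizes round to 0.82 and 0.84.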

Data analyses for the digital learning gain (DLgain) scores showed a mean overall improvement in digital literacy skills of 0.72 (SD = 0.45). The mean DLgain scores for the control, storytelling, and digital storytelling conditions were, respectively, M = 0.16 (SD = 0.09), M = 0.88 (SD = 0.18), and M = 1.12 (SD = 0.23). The ANOVA for DLgain showed a statistically significant difference between conditions, F (2, 14) = 40.70, p < 0.001. Post hoc comparisons indicated that the control condition had a lower DLgain than the storytelling condition, p < 0.001, d = 5.06, and also a lower DLgain than the digital storytelling condition, p < 0.001, d = 5.50. Both comparisons yielded a large effect size. In addition, there was no significant difference in DLgain between the digital storytelling and storytelling conditions, p = 0.051.
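The reported DLgain means are consistent with a simple difference between the posttest and pretest means of each condition. A minimal sketch, assuming that the gain is defined as posttest minus pretest and that the three groups are equal in size (neither is stated explicitly in this section):

```python
# Digital literacy means per condition, as reported in the text
pretest = {"control": 1.88, "storytelling": 1.92, "digital storytelling": 1.88}
posttest = {"control": 2.04, "storytelling": 2.80, "digital storytelling": 3.00}

# Gain per condition: posttest mean minus pretest mean (assumed definition)
gains = {cond: round(posttest[cond] - pretest[cond], 2) for cond in pretest}

# Unweighted mean of the three condition gains (valid only for equal group sizes)
overall_gain = round(sum(gains.values()) / len(gains), 2)

print(gains, overall_gain)
```

With these inputs the per-condition gains match the reported 0.16, 0.88, and 1.12, and their unweighted mean matches the reported overall improvement of 0.72.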

Discussion

Increased attention to (digital) literacy goals in early childhood education has been accompanied by more formalized educational practices. These endeavors have been criticized as being out of tune with how young children (like to) learn (Miller and Almon 2009). The present study investigated the design and effectiveness of a storytelling approach to achieving digital literacy goals. The storytelling activities were systematically structured by adopting the events of instructional design proposed by Gagné (Smith and Ragan 2005).

The core content of these activities involved storytelling to enable a natural, playful way of learning. This is also in accord with the view that storytelling is a cornerstone of digital literacy development. Storytelling provided the objectives, models, and motivation for literacy skills application. For example, storytelling in the first activity revolved around a child’s name and included how to say, spell, and write that name. Follow-up activities provided opportunities to practice the presented skills. These activities stimulated the children to apply what they had learned to their own situations. This format was the same across the two experimental conditions, although in the digital storytelling condition the story was prerecorded, using multimedia including texts, pictures, voices, and sounds. In the design of the stories, special attention was given to considerations from multimedia learning theory (Mayer and Moreno 2003). That is, the design aimed for a strong match between the verbal and nonverbal information in the stories (Takacs et al. 2015). In the digital storytelling activities, the digital content aimed to support the children’s activities, and engagement with the real world was highlighted, in accord with the position statement published by NAEYC and the Fred Rogers Center (2012).

The measures assessed a broad spectrum of literacy skills in early childhood. The use of rubrics to implement authentic assessment tasks (Bagnato et al. 2014) also helped to avoid subjectivity in the measurement process.

The administration of a pretest afforded an assessment of learning gains and enabled us to test the comparability of the children’s starting levels of (digital) literacy skills across classrooms. Pretest scores indicated no significant differences in mean skill levels between the classrooms/conditions.

The digital storytelling activities had a significant effect on the children’s literacy skills development. Whereas the children in the control condition showed little improvement across the intervention period, the children in the experimental conditions achieved significant gains in literacy skills. Findings suggested that the digital storytelling activities also enhanced the children’s digital literacy skills. For digital literacy development, significant gains were found only for the experimental conditions. Storytelling activities seemed to be equally effective, across the experimental conditions, for developing the children’s digital literacy skills.

The findings showed that the effects of the storytelling activities on digital literacy development were promising. However, this part of the research was exploratory and involved only a small sample of children in the assessments. Future research should include larger samples to allow more definitive conclusions about the effects of storytelling sessions on digital literacy development. A second methodological limitation was that the storytelling activities were led by the experimenter, whereas the regular activities in the control condition were taught by the classroom teacher. During the activities, whoever was not leading (the teacher or the experimenter) served as a teaching assistant. We adopted this course of action to disrupt the normal classroom routine in the control classroom as little as possible.

Conclusions

Overall, the research indicated that integrating storytelling with the didactic structure of Gagné’s nine events of instruction is a promising approach for enhancing literacy and digital literacy development in early childhood classrooms. Nevertheless, to move beyond the entertainment or novelty effect that digital storytelling might bring (Campbell 2012), future studies might consider designing a more extensive series of activities, so that any novelty effect is reduced and the possible benefits of digital storytelling activities are maximized.

References

Agosto, D. (2016). Why storytelling matters. Children and Libraries, 14(2), 21–26. https://doi.org/10.5860/cal.14n2.

Ba, H., Tally, W., & Tsikalas, K. (2002). Investigating children’s emerging digital literacies. The Journal of Technology, Learning, and Assessment, 1(4), 1–47.

Bagnato, S. J., Goins, D. D., Pretti-Frontczak, K., & Neisworth, J. T. (2014). Authentic assessment as “best practice” for early childhood intervention: National consumer social validity research. Topics in Early Childhood Special Education, 34(2), 116–127. https://doi.org/10.1177/0271121414523652.

Bassok, D., Latham, S., & Rorem, A. (2016). Is kindergarten the new first grade? AERA Open, 2(1), 1–31. https://doi.org/10.1177/2332858415616358.

Brígido-Corachán, A. M., & Gregori-Signes, C. (2014). Digital storytelling and its expansion across educational context. In A. M. Brígido-Corachán & C. Gregori-Signes (Eds.), Appraising digital storytelling across educational contexts (pp. 13–29). València: Universitat de València.

Brown, J., & Rolfe, S. A. (2005). Use of child development assessment in early childhood education: Early childhood practitioner and student attitudes toward formal and informal testing. Early Child Development and Care, 175(3), 193–202. https://doi.org/10.1080/0300443042000266240.

Burnett, C., Merchant, G., Pahl, K., & Rowsell, J. (2014). The (im)materiality of literacy: The significance of subjectivity to new literacies research. Discourse: Studies in the Cultural Politics of Education, 35(1), 90–103. https://doi.org/10.1080/01596306.2012.739469.

Campbell, T. A. (2012). Digital storytelling in an elementary classroom: Going beyond entertainment. Paper presented at the ICEEPSY 2012, Turkey.

Cassell, J. (2004). Towards a model of technology and literacy development: Story listening systems. Journal of Applied Developmental Psychology, 25(1), 75–105. https://doi.org/10.1016/j.appdev.2003.11.003.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Erlbaum.

Coskie, T., Trudel, H., & Vohs, R. (2010). Creating community through storytelling. Talking Points, 22(1), 2–9.

Egan, K. (1985). Teaching as storytelling: A non-mechanistic approach to planning teaching. Journal of Curriculum Studies, 17(4), 397–406. https://doi.org/10.1080/0022027850170405.

Egan, K. (1986). Teaching as storytelling: An alternate approach to teaching and curriculum in the elementary school. Chicago: University of Chicago Press.

Eshet, Y. (2012). Thinking in the digital era: A revised model for digital literacy. Issues in Informing Science and Information Technology, 9, 267–276. https://doi.org/10.28945/1621.

Eshet-Alkalai, Y., & Chajut, E. (2009). Changes over time in digital literacy. Cyberpsychology & Behavior, 12(X), 1–3. https://doi.org/10.1089/cpb.2008.0264.

Eshet-Alkali, Y., & Amichai-Hamburger, Y. (2004). Experiments in digital literacy. Cyberpsychology & Behavior, 7(4), 421–429.

Field, A. (2005). Discovering statistics using IBM SPSS statistics (2nd ed.). London: Sage.

Fletcher-Flinn, C. M. (2015). Editorial: Frontiers in the acquisition of literacy. Frontiers in Psychology, 6, 1–2. https://doi.org/10.3389/fpsyg.2015.01019.

Foulin, J. N. (2005). Why is letter-name knowledge such a good predictor of learning to read? Reading and Writing, 18(2), 129–155. https://doi.org/10.1007/s11145-004-5892-2.

Justice, L. M., & Ezell, H. K. (2001). Word and print awareness in 4-year-old children. Child Language Teaching and Therapy, 17(3), 20. https://doi.org/10.1177/026565900101700303.

Kamii, C., & Manning, M. (2005). Dynamic indicators of basic early literacy skills (DIBELS): A tool for evaluating student learning? Journal of Research in Childhood Education, 20(2), 75–90. https://doi.org/10.1080/02568540509594553.

Kassow, D. Z. (2006). Environmental print awareness in young children. Talaris Research Institute, 1(3), 1–8.

Katuscáková, M., & Katuscák, M. (2013). The effectiveness of storytelling in transferring different types of knowledge. Paper presented at the European Conference on Knowledge Management, Kidmore End.

Kazakoff, E. R. (2015). Technology-based literacies for young children: Digital literacy through learning to code. In K. L. Heider & M. R. Jalongo (Eds.), Young children and families in the information age, educating the young child (pp. 43–60). Dordrecht: Springer.

Kearney, M., Jones, G., & Roberts, L. (2011). An emerging learning design for student-generated ‘iVideos’. Paper presented at the 6th International LAMS & Learning Design Conference, Sydney: LAMS Foundation.

Kern, R. (2000). Literacy and language teaching. UK: Oxford University Press.

Kildan, A. O., & Incikabi, L. (2015). Effects on the technological pedagogical content knowledge of early childhood teacher candidates using digital storytelling to teach mathematics. Education 3-13, 43(3), 238–248. https://doi.org/10.1080/03004279.2013.804852.

Lambert, J. (2010). Digital storytelling cookbook (pp. 1–35). Retrieved from https://wrd.as.uky.edu/sites/default/files/cookbook.pdf.

Lonigan, C. J., Allan, N. P., & Lerner, M. D. (2011). Assessment of preschool early literacy skills: Linking children’s educational needs with empirically supported instructional activities. Psychology in the Schools, 48(5), 488–501. https://doi.org/10.1002/pits.20569.

Marsh, J. (2016). The digital literacy skills and competences of children of pre-school age. Media Education: Studies and Research, 7(2), 197–214. https://doi.org/10.14605/MED721603.

Marsh, J., Brooks, G., Hughes, J., Ritchie, L., Roberts, S., & Wright, K. (2005). Digital beginnings: Young children’s use of popular culture, media and new technologies. Retrieved from http://www.digitalbeginnings.shef.ac.uk/DigitalBeginningsReport.pdf.

Martin, A., & Grudziecki, J. (2006). DigEuLit: Concepts and tools for digital literacy development. Innovation in Teaching and Learning in Information and Computer Sciences, 5(4), 1–19. https://doi.org/10.11120/ital.2006.05040249.

Mayer, R. E., & Moreno, R. (2003). Nine ways to reduce cognitive load in multimedia learning. Educational Psychologist, 38(1), 43–52. https://doi.org/10.1207/S15326985EP3801_6.

McLachlan, C., & Arrow, A. (2010). Alphabet and phonological awareness: Can it be enhanced in the early childhood setting? International Research in Early Childhood Education, 1(1), 84–94.

Meadows, D. (2003). Digital storytelling: Research-based practice in new media. Visual Communication, 2(2), 189–193. https://doi.org/10.1177/1470357203002002004.

Miller, E., & Almon, J. (2009). Crisis in the kindergarten: Why children need to play in school. College Park, MD: Alliance for Childhood.

Murray, B. A., Stahl, S. A., & Ivey, M. G. (1996). Developing phoneme awareness through alphabet books. Reading and Writing, 8(4), 307–322. https://doi.org/10.1007/bf00395111.

Muter, V. (1994). Influence of phonological awareness and letter knowledge on beginning reading and spelling development. In C. Hulme & M. Snowling (Eds.), Reading development and dyslexia (pp. 45–62). London: Whurr.

Myers, C. L., McBride, S. L., & Peterson, C. A. (1996). Transdisciplinary, play-based assessment in early childhood special education: An examination of social validity. Topics in Early Childhood Special Education, 16(1), 102–126. https://doi.org/10.1177/027112149601600109.

NAEYC. (1998). Learning to read and write: Developmentally appropriate practices for young children. Young Children, 53(4), 30–46.

NAEYC, & Fred Rogers Center for Early Learning and Children’s Media. (2012). [Position Statement] Technology and interactive media as tools in early childhood programs serving children from birth through age 8. Retrieved from https://www.naeyc.org/resources/topics/technology-and-media.

National Research Council. (2000). Assessment in early childhood education. In Eager to learn: Educating our preschoolers (pp. 233–260). Washington: The National Academies Press. https://doi.org/10.17226/9745.

Ng, W. (2015). Digital literacy: The overarching element for successful technology integration. In New digital technology in education: Conceptualizing professional learning for educators (pp. 125–145). Switzerland: Springer.

Nicolopoulou, A., Cortina, K. S., Ilgaz, H., Cates, C. B., & de Sa, A. B. (2015). Using a narrative- and play-based activity to promote low-income preschoolers’ oral language, emergent literacy, and social competence. Early Childhood Research Quarterly, 31, 147–162. https://doi.org/10.1016/j.ecresq.2015.01.006.

Owodally, A. M. A. (2015). Code-related aspects of emergent literacy: How prepared are preschoolers for the challenges of literacy in an EFL context? Early Child Development and Care, 185(4), 509–527. https://doi.org/10.1080/03004430.2014.936429.

Peck, J. (1989). Using storytelling to promote language and literacy development. The Reading Teacher, 43(2), 138–141.

Phillips, L. (2013). Storytelling as pedagogy. Literacy Learning: The Middle Years, 21(2), ii–iv.

Piasta, S. B., & Wagner, R. K. (2010). Developing early literacy skills: A meta-analysis of alphabet learning and instruction. Reading Research Quarterly, 45(1), 8–38. https://doi.org/10.1598/RRQ.45.1.2.

Porter, B. (2005). Digitales: The art of telling digital stories. Denver: Bernajean Porter Consulting.

Preradovic, N. M., Lesin, G., & Boras, D. (2016). Introduction of digital storytelling in preschool education: A case study from Croatia. Digital Education Review, 30, 94–105.

Psomos, P., & Kordaki, M. (2015). A novel educational digital storytelling tool focusing on students misconceptions. Procedia - Social and Behavioral Sciences, 191, 82–86. https://doi.org/10.1016/j.sbspro.2015.04.476.

Pullen, P. C., & Justice, L. M. (2003). Enhancing phonological awareness, print awareness, and oral language skills in preschool children. Intervention in School and Clinic, 39(2), 87–98.

Raburu, P. A. (2015). The self-who am I?: Children’s identity and development through early childhood education. Journal of Educational and Social Research, 5(1), 95–102. https://doi.org/10.5901/jesr.2015.v5n1p95.

Robin, B. R. (2008). Digital storytelling: A powerful technology tool for the 21st century classroom. Theory Into Practice, 47(3), 220–228. https://doi.org/10.1080/00405840802153916.

Robinson, E. J., Einav, S., & Fox, A. (2013). Reading to learn: Prereaders’ and early readers’ trust in text as a source of knowledge. Developmental Psychology, 49(3), 505–513. https://doi.org/10.1037/a0029494.

Roney, R. C. (1996). Storytelling in the classroom: Some theoretical thoughts. Storytelling World, 9(Winter/Spring), 7–9.

Sadik, A. (2008). Digital storytelling: A meaningful technology-integrated approach for engaged student learning. Educational Technology Research and Development, 56(4), 487–506. https://doi.org/10.1007/s11423-008-9091-8.

Sefton-Green, J., Marsh, J., Erstad, O., & Flewitt, R. (2016). Establishing a research agenda for the digital literacy practices of young children: A white paper for COST Action IS1410. Retrieved from http://digilitey.eu/wp-content/uploads/2015/09/DigiLitEYWP.pdf.

Smith, P. L., & Ragan, T. J. (2005). Instructional design (3rd ed.). Danvers: Wiley.

Sonck, N., Livingstone, S., Kuiper, E., & de Haan, J. (2011). Digital literacy and safety skills. Retrieved from http://eprints.lse.ac.uk/33733/1/Digital%20literacy%20and%20safety%20skills%20(lsero).pdf.

Takacs, Z. K., Swart, E. K., & Bus, A. G. (2015). Benefits and pitfalls of multimedia and interactive features in technology-enhanced storybooks: A meta-analysis. Review of Educational Research, 85(4), 698–739. https://doi.org/10.3102/0034654314566989.

Wenner, J. A., Burch, M. M., Lynch, J. S., & Bauer, P. J. (2008). Becoming a teller of tales: Associations between children’s fictional narratives and parent-child reminiscence narratives. Journal of Experimental Child Psychology, 101(1), 1–19. https://doi.org/10.1016/j.jecp.2007.10.006.

Westerveld, M. F., Trembath, D., Shellshear, L., & Paynter, J. (2015). A systematic review of the literature on emergent literacy skills of preschool children with autism spectrum disorder. The Journal of Special Education, 50(1), 37–48. https://doi.org/10.1177/0022466915613593.

Wood, J., & McLemore, B. (2001). Critical components in early literacy—knowledge of the letters of the alphabet and phonics instruction. The Florida Reading Quarterly, 38(2), 1–8.

Yuksel-Arslan, P., Yildirim, S., & Robin, B. R. (2016). A phenomenological study: Teachers’ experiences of using digital storytelling in early childhood education. Educational Studies, 42(5), 427–445. https://doi.org/10.1080/03055698.2016.1195717.

Acknowledgements

The authors acknowledge the funding by DIKTI scholarship from Directorate General of Higher Education, Ministry of Research, Technology and Higher Education of the Republic of Indonesia.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in this study involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. The study has been approved by the Ethical Committee of University of Twente.

Informed Consent

Informed consent was obtained from the parents/guardians of all individual participants included in the study.

Appendices

Appendix A: Scoring Rubric for the Literacy Assessment

| # | Key skills assessed | 0 points | 1 point | 2 points | 3 points | 4 points |
|---|---|---|---|---|---|---|
| 1 | Phonological awareness: identifies initial sound of words; oral presentation | Needs help to identify initial sound | 1–4 words identified | 5–6 words identified | 7–8 words identified | 9–10 words identified |
| 2 | Print awareness and early comprehension: knows own name; oral presentation | Needs help to say own name | Own name said clearly | Own name said clearly; spells it correctly | Own name said clearly; spells it correctly; writes name from example | Own name said clearly; spells it correctly; writes name without example |
| 3 | Alphabet recognition: recognizes letters; written (storybook) | Needs cues to recognize any letters in a storybook | 1–4 letters recognized in a storybook | 5–6 letters recognized in a storybook | 7–8 letters recognized in a storybook | 9–10 letters recognized in a storybook |
| 4 | Print awareness: recognizes daily words; written presentation (storybook) | Needs help to recognize any words in a storybook | 1 word recognized in a storybook | 2–3 words recognized in a storybook | 4–5 words recognized in a storybook | 6 words recognized in a storybook |
| 5 | Print awareness: recognizes names in written form; written presentation | Needs help to recognize friend’s name on attendance list | Friend’s name recognized on attendance list | 2–3 names recognized on attendance list | 4–5 names recognized on attendance list | 6 names recognized on attendance list |

Appendix B: Scoring Rubric for the Digital Literacy Test

| # | Item (skill) | 0 points | 1 point | 2 points | 3 points | 4 points |
|---|---|---|---|---|---|---|
| 1 | Recall a past event (cognitive) | No response given | Contains information about: “what” | Contains information about: what and where, when, who | Contains information on: what, where, when, who (three or four pieces of information) | Contains information on: what, where, when, who, how, why |
| 2 | Plan an event (cognitive) | No response given | Contains information about “what” | Contains information on what and where/when/who | Contains some information on: what, who, where, when (3 or 4) | Contains information on: what, who, where, when, how, why |
| 3 | Read a picture (cognitive) | Needs help to identify features observed in a picture | Identifies 2 features observed in a picture(s) | Mentions 2 features observed in picture; infers story of picture | Mentions 3–4 features observed in pictures; infers story of two pictures | Mentions 3–4 features observed in pictures; infers story of three pictures |
| 4 | Recognize own identity (socio-emotional) | Needs help to mention own full name | Mentions own full name | Mentions own full name and age | Mentions own full name clearly; age and own birthday (incomplete) | Mentions own full name clearly; age; own birthday (complete: date, month, and year) |
| 5 | Engage in conversation (socio-emotional) | Passively responds to questions | Needs reminders to follow etiquette of conversation | Needs reminders to follow some etiquette rules of conversation | Needs reminders to follow a few etiquette rules of conversation | Follows all rules of conversation (e.g., maintains eye contact; waits for his/her turn to speak; asks correctly; and responds correctly) |

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Maureen, I.Y., van der Meij, H. & de Jong, T. Supporting Literacy and Digital Literacy Development in Early Childhood Education Using Storytelling Activities. IJEC 50, 371–389 (2018). https://doi.org/10.1007/s13158-018-0230-z

DOI: https://doi.org/10.1007/s13158-018-0230-z