Abstract

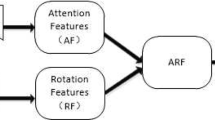

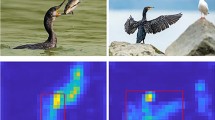

Aggregating deep features for image retrieval has achieved excellent accuracy. However, little attention has been paid to exploiting visual perception properties to activate the dormant discriminative cues in deep convolutional feature maps. To address this issue, we present a novel representation for image retrieval, namely the deep orientation aggregation histogram, which aggregates deep orientation structure features. Its main highlights are: (1) A statistical orientation computation model is proposed to detect candidate directions. It helps exploit the various orientations present in the feature maps to provide a robust representation. (2) A computational module is proposed to activate the discriminative orientation cues hidden in the deep convolutional feature maps. It boosts the representation of deep features with the aid of the statistical orientations and their orientation structures. (3) The proposed method can simulate the orientation-selectivity mechanism to provide a strongly discriminative yet compact representation. Experimental results on five popular benchmark datasets demonstrate that the proposed method improves retrieval performance in terms of mAP. Furthermore, it outperforms several existing state-of-the-art methods without complex fine-tuning. The proposed method is particularly beneficial for retrieving scene images with diverse color and orientation details.
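The abstract does not specify how orientations are computed from the feature maps. As rough intuition only, the following NumPy sketch shows one generic, HOG-style way to aggregate orientation information from a C × H × W convolutional activation tensor into a histogram descriptor and rank a database against it. It is not the deep orientation aggregation histogram proposed in this paper: the finite-difference gradients, the unsigned orientation bins, the gradient-magnitude weighting, L2 normalization, and the function names `orientation_histogram` and `rank_database` are all hypothetical choices made for illustration.

```python
import numpy as np


def orientation_histogram(feature_maps: np.ndarray, num_bins: int = 8) -> np.ndarray:
    """Hypothetical HOG-style aggregation of a C x H x W activation tensor.

    Each spatial position of each channel votes for one unsigned orientation
    bin, weighted by its gradient magnitude. The result is a C * num_bins
    vector, L2-normalized (left as zeros if every gradient is zero).
    """
    if feature_maps.ndim != 3:
        raise ValueError("expected a C x H x W array")
    c, h, w = feature_maps.shape
    if h < 2 or w < 2:
        raise ValueError("H and W must be at least 2 to compute gradients")

    # Finite-difference gradients along rows (y, axis 1) and columns (x, axis 2).
    gy, gx = np.gradient(feature_maps.astype(np.float64), axis=(1, 2))
    magnitude = np.hypot(gx, gy)

    # Unsigned orientation in [0, pi): opposite directions share a bin.
    theta = np.mod(np.arctan2(gy, gx), np.pi)
    bins = np.minimum((theta / np.pi * num_bins).astype(int), num_bins - 1)

    # Per-channel histogram: sum the magnitudes that fall into each bin.
    hist = np.zeros((c, num_bins))
    for k in range(num_bins):
        hist[:, k] = np.where(bins == k, magnitude, 0.0).sum(axis=(1, 2))

    desc = hist.reshape(-1)
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc


def rank_database(query: np.ndarray, database: np.ndarray) -> np.ndarray:
    """Return database row indices sorted by descending cosine similarity.

    Assumes the query and every database row are already L2-normalized,
    so the dot product equals cosine similarity.
    """
    scores = database @ query
    return np.argsort(-scores)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    db = np.stack([orientation_histogram(rng.random((4, 7, 7))) for _ in range(5)])
    q = db[2]
    print(db.shape)  # 5 images, 4 channels x 8 bins each
    print(rank_database(q, db)[0])  # the query itself ranks first
```

Binning unsigned orientations mirrors the classic HOG descriptor; a real pipeline would apply this to activations from a pretrained backbone and would likely add power normalization or dimensionality reduction to keep the descriptor compact.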

Funding

This study is supported by the National Natural Science Foundation of China (grant no. 62266008), the Foundation of Development Research Centre of Guangxi in Humanities and Social Sciences (grant no. ZXZJ202201), and the Foundation of Guangxi Normal University (grant no. 2021JC007).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Data availability

The authors used third-party data and therefore do not own the data; the benchmark datasets are publicly available. The code of the proposed method is available on request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lu, F., Liu, GH. Image retrieval by aggregating deep orientation structure features. Int. J. Mach. Learn. & Cyber. (2024). https://doi.org/10.1007/s13042-024-02172-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s13042-024-02172-w