Abstract

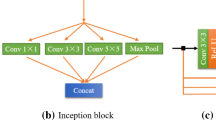

With the development of convolutional neural networks (CNNs), CNN-based No-reference Image Quality Assessment (NR-IQA) has attracted the attention of many researchers. However, most previous methods improve evaluation performance by increasing network depth and adding various feature extraction mechanisms. Because existing databases contain only a limited number of labeled samples, this may cause problems such as insufficient feature extraction, loss of detail, and vanishing gradients. To learn feature representations more effectively, this paper proposes a Two-channel Deep Recursive Multi-Scale Network Based on Multi-Attention (ATDRMN), which accurately evaluates image quality without relying on reference images. The network is a two-channel convolutional network that takes the original image and its gradient image as inputs. In the two sub-branch networks, a Multi-scale Feature Extraction Block based on Attention (AMFEB) and an Improved Atrous Space Pyramid Pooling Network (IASPP-Net) are proposed to extend the feature information that requires attention and to obtain hierarchical feature information at different levels. Specifically, each AMFEB makes full use of image features from convolution kernels of different sizes to expand the feature information, and then feeds these features into an attention mechanism that learns their corresponding weights. The output of each AMFEB is further extended by atrous (dilated) convolution in IASPP-Net to capture more context information and learn its hierarchical features. Finally, multiple AMFEBs and IASPP-Nets are deeply and recursively fused to obtain the most effective feature information, and the output features are fed into a regression network for the final quality evaluation. Experimental results on seven databases show that the proposed method is robust and outperforms state-of-the-art NR-IQA methods.
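The abstract names the building blocks without giving their exact definitions. The NumPy sketch that follows illustrates the general ideas under loudly stated assumptions, and it is not the authors' implementation. It uses a Sobel gradient magnitude for the gradient-image channel, parallel kernels of sizes 3, 5 and 7 with squeeze-and-excitation-style sigmoid gates standing in for the attention in AMFEB, and three 3x3 atrous convolutions (dilation rates 1, 2, 4) standing in for IASPP-Net. Weights are random and untrained, and the recursive fusion of multiple blocks is omitted. All function names (`conv2d`, `gradient_image`, `multiscale_attention_block`, `atrous_pyramid`, `predict_quality`) are hypothetical.

```python
import numpy as np

def conv2d(x, k, dilation=1):
    """'Same'-size 2D cross-correlation of one channel, zero padding, optional dilation."""
    kh, kw = k.shape
    eh, ew = (kh - 1) * dilation + 1, (kw - 1) * dilation + 1  # effective (dilated) kernel size
    ph, pw = eh // 2, ew // 2
    xp = np.pad(x, ((ph, eh - 1 - ph), (pw, ew - 1 - pw)))
    h, w = x.shape
    out = np.zeros((h, w))
    for i in range(kh):
        for j in range(kw):
            # Tap (i, j) of a dilated kernel reads the input shifted by (i*d, j*d).
            out += k[i, j] * xp[i * dilation:i * dilation + h, j * dilation:j * dilation + w]
    return out

SOBEL_X = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])

def gradient_image(img):
    """Gradient-magnitude map for the second input channel (Sobel is an assumption)."""
    gx = conv2d(img, SOBEL_X)
    gy = conv2d(img, SOBEL_X.T)
    return np.sqrt(gx ** 2 + gy ** 2)

def multiscale_attention_block(x, rng, sizes=(3, 5, 7), hidden=2):
    """AMFEB-like sketch: kernels of different sizes, then SE-style channel gates (assumed)."""
    feats = np.stack([np.maximum(conv2d(x, rng.standard_normal((s, s)) / s), 0.0) for s in sizes])
    squeeze = feats.mean(axis=(1, 2))                 # one global descriptor per scale
    w1 = rng.standard_normal((hidden, len(sizes)))
    w2 = rng.standard_normal((len(sizes), hidden))
    gates = 1.0 / (1.0 + np.exp(-(w2 @ np.maximum(w1 @ squeeze, 0.0))))  # sigmoid weights
    return feats * gates[:, None, None], gates

def atrous_pyramid(feats, rng, rates=(1, 2, 4)):
    """IASPP-like sketch: 3x3 atrous convolutions at several rates, mixed by a 1x1-style sum."""
    fused = feats.sum(axis=0)                         # collapse input channels (simplification)
    branches = np.stack([conv2d(fused, rng.standard_normal((3, 3)) / 3, dilation=r) for r in rates])
    mix = rng.standard_normal(len(rates))             # stands in for a learned 1x1 convolution
    return np.tensordot(mix, branches, axes=1)        # (rates,) . (rates, H, W) -> (H, W)

def predict_quality(img, seed=0):
    """Two-channel forward pass with random, untrained weights; returns a scalar score."""
    rng = np.random.default_rng(seed)
    descriptors = []
    for channel in (img, gradient_image(img)):        # original image and gradient image
        feats, _ = multiscale_attention_block(channel, rng)
        descriptors.append(atrous_pyramid(feats, rng).mean())  # global average pooling
    w = rng.standard_normal(len(descriptors))         # linear regression head
    return float(w @ np.array(descriptors))
```

Dilation is the key design point here: a 3x3 kernel at rate 4 covers a 9x9 window with only nine weights, which is how atrous pyramids gather wider context without adding parameters or downsampling.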

Funding

This work was supported by the National Natural Science Foundation of China under Grants 61976027 and 61572082, and by the Liaoning Revitalization Talents Program (XLYC2008002).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, C., Lv, X., Fan, X. et al. Two-channel deep recursive multi-scale network based on multi-attention for no-reference image quality assessment. Int. J. Mach. Learn. & Cyber. 14, 2421–2437 (2023). https://doi.org/10.1007/s13042-023-01773-1

DOI: https://doi.org/10.1007/s13042-023-01773-1