Abstract

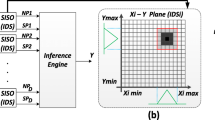

In artificial intelligence (AI), proposing an efficient algorithm with an appropriate hardware implementation has always been a challenge, because AI hardware implementations should ideally be comparable to biological systems in terms of hardware area. The active learning method (ALM) is a fuzzy learning algorithm inspired by human brain computations. Unlike traditional algorithms, which employ complicated computations, ALM tries to model human brain computations using qualitative and behavioral descriptions of the problem. The main computational engine in ALM is the ink drop spread (IDS) operator, but this operator imposes high memory requirements and computational costs, making the ALM algorithm and its hardware implementation unsuitable for some applications. This paper proposes an adaptive alternative method for implementing the IDS operator that markedly reduces the algorithm’s computational complexity, the amount of memory required, and the hardware cost. To check its validity and performance, the method was applied to modeling and pattern classification tasks on challenging real-world datasets and compared with well-known algorithms (adaptive neuro-fuzzy inference system and multi-layer perceptron) in both software simulation and hardware implementation. Compared to traditional implementations of the ALM algorithm and to other learning algorithms, the proposed FPGA implementation offers higher speed, less hardware, and improved performance, thus facilitating real-time application. The ultimate goal of this paper was to present a hardware implementation with on-chip training (on-chip learning), which allows it to adapt to its environment without depending on a host system.

Acknowledgements

The authors would like to thank Mohsen Firouzi and Menoua Keshishian for their generous contribution to our analysis. This work was partially supported by the INSF (Iran National Science Foundation) Grant number 96000943.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix A: Proof of convergence

As discussed earlier, the original ALM algorithm is trained in batch mode, whereas NAALM is trained in sample mode. To prove the convergence of the \({{\text{v}}_{{\text{NP}}}}\) vector, we first show how one element of this vector changes as training samples are presented:

where \(({{\text{x}}_{\text{t}}},{{\text{y}}_{\text{t}}})\) is a training sample, \({\text{I}}\) is the index of the vector element being updated, \({{\text{K}}_{\text{I}}}\) is the total number of training samples in element \({\text{I}}\), \(\omega\) is the learning rate, and \({{\text{x}}_{\text{t}}}\) is the input, which varies between one and the quantization level of the \(X\) axis (\({{\text{L}}_x}\)). For each training sample associated with each quantization level, the following equation holds:

Here \(K\) is the total number of training samples (number of iterations) in the IDS plane. For simplicity and to reduce computation, if \({{\text{x}}_{\text{t}}}={\text{I}}\), the previous equation simplifies to:

If we expand the summation, a recursive equation can be obtained as follows:

If \({{\text{K}}_{\text{I}}} \gg 1\) in the previous equation, the first term tends to zero, and by setting \({\text{m}}={{\text{K}}_{\text{I}}} - {\text{i}}\), the following equation is obtained:

This equation shows that the final output of the algorithm converges to a weighted sum of the outputs. Because of the forgetting property introduced in the proposed method (NAALM), training samples presented later in the training phase have a greater impact than samples presented earlier. Because the NAALM algorithm partitions the input domains, the standard deviation of \({{\text{v}}_{{\text{NP}}}}\) is small for this recursive algorithm. In addition, because the computations are conducted in the fuzzy space (a space with uncertainty), the lack of equivalence between batch-mode and sample-mode training does not significantly affect the final result.
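The equivalence between the recursion and its expanded weighted sum can be checked numerically. The following Python sketch assumes the sample-mode update has the exponential-forgetting form v ← (1 − ω)·v + ω·y, which matches the behaviour described above but is not taken verbatim from the paper's equations. The function names, the sample values, and the initial value `v0` are hypothetical.

```python
def sample_mode_update(outputs, omega, v0=0.0):
    """Apply the ASSUMED rule v <- (1 - omega) * v + omega * y once per sample."""
    v = v0
    for y in outputs:
        v = (1.0 - omega) * v + omega * y
    return v


def expanded_sum(outputs, omega, v0=0.0):
    """Expanded form of the same recursion, indexed by m = K_I - i."""
    k = len(outputs)
    # First term: contribution of the initial value, which decays with k.
    head = (1.0 - omega) ** k * v0
    # m = 0 is the most recent sample, m = k - 1 the oldest one.
    tail = sum(omega * (1.0 - omega) ** m * outputs[k - 1 - m] for m in range(k))
    return head + tail


# Hypothetical training outputs that all fall into one element I.
outputs = [0.2, 0.9, 0.4, 0.7, 0.5] * 40   # K_I = 200 samples
omega = 0.1                                 # learning rate

recursive = sample_mode_update(outputs, omega, v0=5.0)
expanded = expanded_sum(outputs, omega, v0=5.0)

# Weight left on the initial value after K_I updates.
initial_weight = (1.0 - omega) ** len(outputs)
# Weights given to the newest and the oldest sample.
newest_weight = omega
oldest_weight = omega * (1.0 - omega) ** (len(outputs) - 1)

print(recursive, expanded, initial_weight)
```

Under this assumed rule, the weight left on the initial value shrinks geometrically with the number of samples, which corresponds to the first term tending to zero for large K_I. The newest sample carries weight ω while older samples are discounted by powers of (1 − ω), which is the forgetting behaviour described in the text.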

Given the assumptions made about the convergence of the describing vectors, the degree of Belief is:

If we expand the summation, a recursive equation can be obtained as follows:

If \({{\text{K}}_{\text{I}}} \gg 1\) in the previous equation, the following equation is obtained:

This equation shows that as the number of training samples (or the number of iterations) increases, the value of Belief increases, and our belief in the occurrence of that event eventually converges to a constant value (\({{\text{K}}_{\text{I}}}<\infty\) and \(\alpha<1\)).
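A small numerical sketch can illustrate how a belief value might grow and then saturate. The recursion used here, B ← α·B + 1 with 0 < α < 1, is only an assumption chosen to be consistent with the description above (increasing with each sample, bounded when α < 1); the paper's actual Belief equation may differ. The names `belief_trace` and `increment` are hypothetical.

```python
def belief_trace(num_samples, alpha, increment=1.0):
    """Return the belief after each sample under the ASSUMED rule B <- alpha*B + increment."""
    belief = 0.0
    trace = []
    for _ in range(num_samples):
        belief = alpha * belief + increment
        trace.append(belief)
    return trace


alpha = 0.9                      # hypothetical forgetting factor, must be < 1
trace = belief_trace(200, alpha)

# Geometric-series limit of the assumed recursion: increment / (1 - alpha).
limit = 1.0 / (1.0 - alpha)

# Belief never decreases from one sample to the next.
is_monotone = all(b2 >= b1 for b1, b2 in zip(trace, trace[1:]))

print(trace[0], trace[-1], limit)
```

Under this assumption the belief is a partial geometric sum, so it increases with every sample and approaches increment / (1 − α), a finite constant only when α < 1.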

Cite this article

Klidbary, S.H., Shouraki, S.B. & Linares-Barranco, B. Digital hardware realization of a novel adaptive ink drop spread operator and its application in modeling and classification and on-chip training. Int. J. Mach. Learn. & Cyber. 10, 2541–2561 (2019). https://doi.org/10.1007/s13042-018-0890-x

DOI: https://doi.org/10.1007/s13042-018-0890-x