Abstract

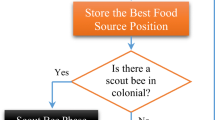

During the past decade, the dramatic increase in the computational capabilities of processing chips and the falling cost of computing hardware have led to the emergence of deep learning, a sub-field of machine learning that learns features from data and classifies them through the multiple layers of hierarchical neural-network architectures. Convolutional neural networks (CNNs) are among the most promising deep learning methods for pattern recognition tasks. However, like most artificial neural networks, CNNs are prone to becoming trapped in local optima, so an improvement to how CNNs are trained is required to avoid them. Metaheuristic optimization methods are powerful tools for solving optimization problems, yet they have rarely been applied to optimizing CNNs. In this work, the artificial bee colony (ABC) method, one of the most popular metaheuristics, is proposed as an alternative approach to optimizing the performance of a CNN. Specifically, we aim to minimize classification errors by initializing the weights of the CNN classifier from solutions generated by the ABC method. A distributed ABC is also presented to keep the execution time manageable when working with large training datasets. Experimental results demonstrate that the proposed method improves on ordinary CNNs in both recognition accuracy and computing time.
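The abstract only outlines the idea, so the following is a minimal sketch under stated assumptions, not the paper's implementation. A linear threshold unit on a synthetic 2-D dataset stands in for the CNN classifier, the cost of a food source is that unit's training classification error, and the search follows the standard ABC cycle (employed, onlooker and scout bees). The function names `classification_error`, `make_toy_data` and `abc_minimize`, and all parameter values, are hypothetical.

```python
import random


def classification_error(weights, data):
    """Fraction of samples misclassified by a linear threshold unit.

    Hypothetical stand-in for the CNN classifier: weights = [w0, w1, bias].
    """
    wrong = 0
    for (x0, x1), label in data:
        pred = 1 if weights[0] * x0 + weights[1] * x1 + weights[2] > 0 else 0
        wrong += pred != label
    return wrong / len(data)


def make_toy_data(n=200, seed=1):
    """Synthetic, linearly separable 2-D dataset (hypothetical)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        data.append((x, 1 if x[0] + x[1] > 0 else 0))
    return data


def abc_minimize(objective, dim, n_sources=20, cycles=100, limit=30,
                 low=-1.0, high=1.0, seed=0):
    """Standard ABC loop; returns (best weights, best cost, best-cost history)."""
    rng = random.Random(seed)

    def random_source():
        return [rng.uniform(low, high) for _ in range(dim)]

    sources = [random_source() for _ in range(n_sources)]
    costs = [objective(s) for s in sources]
    trials = [0] * n_sources  # cycles without improvement, per food source
    best_i = min(range(n_sources), key=costs.__getitem__)
    best, best_cost = sources[best_i][:], costs[best_i]
    history = [best_cost]

    def try_neighbour(i):
        nonlocal best, best_cost
        # v_id = x_id + phi * (x_id - x_kd) for one random dimension d, partner k != i
        k = rng.choice([j for j in range(n_sources) if j != i])
        d = rng.randrange(dim)
        candidate = sources[i][:]
        candidate[d] += rng.uniform(-1.0, 1.0) * (sources[i][d] - sources[k][d])
        candidate[d] = min(high, max(low, candidate[d]))
        cost = objective(candidate)
        if cost < costs[i]:  # greedy selection: keep the better of the two
            sources[i], costs[i], trials[i] = candidate, cost, 0
        else:
            trials[i] += 1
        if cost < best_cost:
            best, best_cost = candidate[:], cost

    for _ in range(cycles):
        for i in range(n_sources):  # employed bees: one trial per source
            try_neighbour(i)
        # onlooker bees: pick sources with probability proportional to 1 / (1 + cost)
        fits = [1.0 / (1.0 + c) for c in costs]
        for i in rng.choices(range(n_sources), weights=fits, k=n_sources):
            try_neighbour(i)
        # scout bee: abandon the most-exhausted source if it exceeded the limit
        worst = max(range(n_sources), key=trials.__getitem__)
        if trials[worst] > limit:
            sources[worst] = random_source()
            costs[worst] = objective(sources[worst])
            trials[worst] = 0
            if costs[worst] < best_cost:
                best, best_cost = sources[worst][:], costs[worst]
        history.append(best_cost)
    return best, best_cost, history


if __name__ == "__main__":
    data = make_toy_data()
    w, err, hist = abc_minimize(lambda w: classification_error(w, data), dim=3)
    print(f"best error after init: {hist[0]:.3f}, after ABC: {err:.3f}")
```

In the scheme the abstract describes, the best food source would then serve as the initial weights for ordinary gradient-based training of the network. Within one cycle, the employed-bee evaluations could run in parallel if each worker reads a snapshot of the food sources, which is what makes distributing ABC attractive for large training sets; this sketch runs them serially.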




Cite this article

Banharnsakun, A. Towards improving the convolutional neural networks for deep learning using the distributed artificial bee colony method. Int. J. Mach. Learn. & Cyber. 10, 1301–1311 (2019). https://doi.org/10.1007/s13042-018-0811-z


DOI: https://doi.org/10.1007/s13042-018-0811-z