Abstract

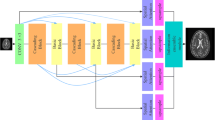

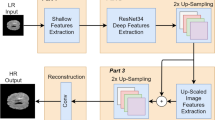

In recent decades, computer-aided medical image analysis has become a popular technique for disease detection and diagnosis. Compared to conventional techniques, deep learning-based image processing techniques have gained popularity in areas such as remote sensing, computer vision, and healthcare. However, hardware limitations, acquisition time, low radiation dose, and patient motion can limit the quality of medical images and result in low-resolution (LR) images, whereas high-resolution (HR) medical images localize disease regions more accurately than LR images. To enhance the quality of LR medical images, we propose a multi-image super-resolution architecture using a generative adversarial network (GAN). The generator employs multi-stage feature extraction incorporating both residual blocks and an attention network, and the discriminator has fewer convolutional layers to reduce computational complexity. The method enhances the resolution of LR prostate cancer MRI images by combining multiple MRI slices with slight spatial shifts, using shared weights for feature extraction from each MRI image. Unlike super-resolution techniques in the literature, the network uses a perceptual loss computed from a VGG19 network fine-tuned with sparse categorical cross-entropy loss. The features used to compute the perceptual loss are extracted from the final dense layer, instead of from a convolutional block of VGG19 as in the literature. Our experiments were conducted on MRI images with a resolution of \(80\times 80\) for low resolution and \(320\times 320\) for high resolution, achieving an upscaling factor of \(\times 4\). The experimental analysis shows that the proposed model outperforms existing deep learning architectures for super-resolution, with an average peak signal-to-noise ratio (PSNR) of 30.58 ± 0.76 dB and an average structural similarity index measure (SSIM) of 0.8105 ± 0.0656 for prostate MRI images. The application of a CNN-based SVM classifier confirmed that enhancing the resolution of normal LR brain MRI images using super-resolution techniques did not result in any false positive cases. The same architecture has the potential to be extended to other medical imaging modalities.
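To make the \(\times 4\) setting and the PSNR metric concrete, the following is a minimal NumPy sketch, not the authors' pipeline. It assumes a simple degradation model (circular shift followed by \(4\times 4\) average pooling) to produce several slightly shifted \(80\times 80\) frames from one \(320\times 320\) image, and it scores a naive nearest-neighbour upsampling with PSNR. The function names `simulate_lr_frames` and `psnr`, the shift values, and the random stand-in image are all illustrative assumptions.

```python
import numpy as np

SCALE = 4  # upscaling factor from the abstract (80x80 -> 320x320)


def simulate_lr_frames(hr, shifts, scale=SCALE):
    """Create shifted low-resolution frames from one high-resolution image.

    Each frame is the HR image circularly shifted by (dy, dx) HR pixels and
    then average-pooled by `scale`. This degradation model is an assumption
    made for illustration, not the acquisition model used in the paper.
    """
    h, w = hr.shape
    assert h % scale == 0 and w % scale == 0, "HR size must be divisible by scale"
    frames = []
    for dy, dx in shifts:
        shifted = np.roll(hr, shift=(dy, dx), axis=(0, 1))
        # Average over non-overlapping scale x scale blocks.
        pooled = shifted.reshape(h // scale, scale, w // scale, scale).mean(axis=(1, 3))
        frames.append(pooled)
    return np.stack(frames)  # shape: (num_frames, h // scale, w // scale)


def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(data_range ** 2 / mse)


rng = np.random.default_rng(0)
hr = rng.random((320, 320))  # random stand-in for an HR MRI slice scaled to [0, 1]
lr_stack = simulate_lr_frames(hr, [(0, 0), (1, 0), (0, 1), (1, 1)])

# Naive baseline: nearest-neighbour upsampling of the unshifted frame.
baseline = np.kron(lr_stack[0], np.ones((SCALE, SCALE)))
print(lr_stack.shape, baseline.shape, psnr(hr, baseline))
```

A multi-image super-resolution model would take the whole `lr_stack` as input rather than a single frame. The nearest-neighbour baseline here only shows how a reconstruction is scored against the HR reference.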

Data availability

The datasets used for this research are available within the PROSTATE-DIAGNOSIS dataset, accessible at https://doi.org/10.7937/K9/TCIA.2015.FOQEUJVT.

About this article

Cite this article

Nimitha, U., Ameer, P.M. Multi image super resolution of MRI images using generative adversarial network. J Ambient Intell Human Comput 15, 2241–2253 (2024). https://doi.org/10.1007/s12652-024-04751-9

DOI: https://doi.org/10.1007/s12652-024-04751-9