Abstract

Purpose

We systematically reviewed existing critical care electroencephalography (EEG) educational programs for non-neurologists, with the primary goal of reporting the content covered, methods of instruction, overall duration, and participant experience. Our secondary goals were to assess the impact of EEG programs on participants’ core knowledge, and the agreement between non-experts and experts for seizure identification.

Source

Major databases were searched from inception to 30 August 2020. Randomized controlled trials, cohort studies, and descriptive studies were all considered if they reported an EEG curriculum for non-neurologists in a critical care setting. Data were presented thematically for the qualitative primary outcome and a meta-analysis using a random effects model was performed for the quantitative secondary outcomes.

Principal findings

Twenty-nine studies were included after reviewing 7,486 citations. Twenty-two studies were single centre, 17 were from North America, and 16 were published after 2016. Most EEG studies were targeted to critical care nurses (17 studies), focused on processed forms of EEG with amplitude-integrated EEG being the most common (15 studies), and were shorter than one day in duration (24 studies). In pre-post studies, EEG programs significantly improved participants’ knowledge of tested material (standardized mean change, 1.79; 95% confidence interval [CI], 0.86 to 2.73). Agreement for seizure identification between non-experts and experts was moderate (Cohen’s kappa = 0.44; 95% CI, 0.27 to 0.60).

Conclusions

It is feasible to teach basic EEG to participants in critical care settings from different clinical backgrounds, including physicians and nurses. Brief training programs can enable bedside providers to recognize high-yield abnormalities such as non-convulsive seizures.

Résumé

Objectif

Nous avons réalisé une revue systématique des programmes éducatifs d’électroencéphalographie (EEG) en soins intensifs s’adressant aux non-neurologues, avec pour but principal de rapporter le contenu couvert, les méthodes d’enseignement, la durée globale et l’expérience des participants. Nos objectifs secondaires étaient d’évaluer l’impact des programmes d’EEG sur les connaissances de base des participants, et l’accord entre non-experts et experts pour l’identification des convulsions.

Méthode

Les principales bases de données ont été consultées depuis leur création jusqu’au 30 août 2020. Les études randomisées contrôlées, les études de cohorte et les études descriptives ont toutes été prises en compte si elles décrivaient un programme de formation en EEG pour les non-neurologues en milieu de soins intensifs. Les données ont été présentées thématiquement en ce qui touchait notre critère d’évaluation principal qualitatif, et une méta-analyse utilisant un modèle à effets aléatoires a été exécutée pour les critères secondaires quantitatifs.

Constatations principales

Vingt-neuf études ont été incluses après avoir examiné 7486 citations. Vingt-deux études étaient monocentriques, 17 provenaient d’Amérique du Nord et 16 avaient été publiées après 2016. La plupart des études sur l’EEG visaient le personnel infirmier en soins intensifs (17 études); elles se concentraient sur les formes analysées d’EEG; l’EEG à amplitude intégrée était le thème le plus fréquemment abordé (15 études), et la plupart duraient moins d’un jour (24 études). Dans les études avant-après, les programmes d’EEG ont considérablement amélioré les connaissances des participants du matériel testé (changement moyen normalisé, 1,79; intervalle de confiance [IC] à 95 %, 0,86 à 2,73). L’accord en matière d’identification des convulsions entre non-experts et experts était modéré (kappa de Cohen = 0,44; IC 95 %, 0,27 à 0,60).

Conclusion

Il est possible d’enseigner l’EEG de base dans des milieux de soins intensifs à des participants provenant de différents milieux cliniques, y compris les médecins et le personnel infirmier. De brefs programmes de formation peuvent permettre aux fournisseurs de soins au chevet de reconnaître les anomalies à haut impact comme par exemple des crises épileptiques non convulsives.

Use of electroencephalography (EEG) in critical care settings is recommended in multiple guidelines for a variety of indications.1,2,3,4 Owing partly to the expansion of evidence-based indications for EEG, there has been a rapid and sustained uptake in both intermittent and continuous EEG (cEEG) use in critical care environments.5 For cEEG alone, the rate of growth across adult and pediatric settings has been estimated at 30% per year.5,6 Nevertheless, even as monitoring capabilities and personnel are mobilized to meet the increase in need for EEG, current demands far outstrip availability. In a survey of neurophysiologists across 97 adult intensive care units (ICUs) in the United States, most respondents stated that if given additional resources they would monitor between 10 and 30% more patients with cEEG, and 18% would increase the duration of cEEG recordings.7

Among other logistical challenges, the expansion of EEG across ICUs has led to a substantial increase in the workload of epileptologists, neurophysiologists, and other trained experts who interpret raw EEG recordings. Interpretation of a 24- or 48-hr cEEG can be lengthy and burdensome. Moreover, while rates of EEG acquisition have risen dramatically, the number of trained experts has remained largely constant.8 Finally, even in large tertiary and quaternary centres, there are often delays of up to several hours between seizure occurrence and time to EEG interpretation.9 This can lead to therapeutic delays, which may increase patient morbidity and mortality in the case of subclinical seizures or non-convulsive status epilepticus.10,11,12,13

One potential method to expedite diagnosis and management is to train non-experts to interpret EEGs at the bedside. Multiple studies have described EEG educational programs for non-experts in adult and pediatric/neonatal acute care settings.14,15,16 Nevertheless, the overall structure, content, and efficacy of these programs remain unknown. We conducted a systematic review and meta-analysis of educational programs for EEG interpretation in adult and pediatric/neonatal critical care settings (emergency departments, ICUs, and postanesthetic care units), with the goal of describing important educational elements and outcomes across programs.

Methods

This systematic review was performed according to a published protocol17 and followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) recommendations.18 The protocol was also registered with PROSPERO: International prospective register of systematic reviews (CRD42020171208).

Literature search

We searched for relevant studies published on MEDLINE, Embase, Cochrane Central (OVID), CINAHL (EbscoHost), and Web of Science (Clarivate Analytics) from inception to 30 August 2020, with an update performed before submission for publication. The search strategy was created by a health information specialist (ME) using a combination of free-text keywords and medical subject headings. A sample of the MEDLINE search is provided in eAppendix 1 in the Electronic Supplemental Material (ESM). We also searched the grey literature, including http://clinicaltrials.gov, to identify unpublished material. The reference lists of studies selected for inclusion were scanned to identify any remaining studies. Lastly, cited reference searching via the Web of Science was conducted on ten seminal papers. No language restrictions were applied.

Eligibility criteria

We searched for studies reporting educational methods to teach EEG to non-experts in the ICU, emergency department, or postanesthesia care unit. To be eligible for inclusion, studies were required to present sufficient details of their educational program. Randomized controlled trials, cohort studies, and descriptive studies were all considered. Studies from both adult and pediatric/neonatal settings were included with the goal of being broadly inclusive. Studies were excluded if participants had prior advanced expertise interpreting and reporting EEGs (e.g., neurophysiologists or epileptologists). Medical trainees, EEG technicians, neurocritical care nurses, and other individuals with exposure to EEG but without dedicated EEG specialization were all considered non-experts for the purpose of this review.

Finally, we considered studies describing multiple forms of EEG, including intermittent EEG, raw EEG (cEEG), and processed forms of cEEG with data displayed as compressed tracings (e.g., amplitude-integrated EEG [aEEG], colour density spectral array [CDSA]) or as a numerical output (e.g., bispectral index). Figure 1 presents a simplified schematic used to classify common forms of EEG for the purposes of this study. We decided a priori to include various forms of EEG (including both raw and processed), with the rationale that excluding certain modalities would potentially misrepresent important findings on the overall scope and landscape of existing EEG programs.

Study selection and data extraction

Studies were initially screened by title and abstract by one reviewer (S.T.). Articles selected for further consideration were reviewed independently and in full by two reviewers (S.T., W.A.). Data reported in different publications (e.g., conference abstract and full manuscript) but originating from the same study were included only once in the analysis, with the most comprehensive source being represented. Both reviewers (S.T., W.A.) compared their study lists at the end of the selection process to ensure concordance. Differences were resolved by consensus to generate the final list of studies.

Two reviewers (S.T. and W.A.) independently extracted data on study design, methods, and outcomes into a standardized data collection form. The full data form (available as ESM, eAppendix 2) was pre-piloted on five studies and iteratively modified to ensure completeness. We collected information on participant background, study setting, and details of the EEG learning program. Quantitative data were also extracted for the subset of studies reporting our secondary outcomes of interest. Study authors were contacted up to three times to obtain any missing data. Author responses are reported in eAppendix 3 (available as ESM). Differences in extracted data were resolved by consensus between the two primary reviewers.

Funnel plots were considered for assessing publication bias via inspection for asymmetry. Nevertheless, the small number of studies (< ten) in each meta-analysis precluded a formal assessment of publication bias and funnel plots were instead used to show an overall estimate of small-study effects, as per the Cochrane Handbook.19

Outcomes

Our primary outcome was to characterize educational methods to teach EEG to non-experts in critical care settings. For the assessment of this qualitative outcome, results were reported according to the following themes: 1) clinical background of the participants, 2) content of the training program, 3) teaching methods, 4) assessment methods, 5) duration of the program, and 6) participant feedback. Our secondary outcomes included 1) comparison of learners’ performance on written or bedside assessments before and after receiving the training program and 2) inter-rater agreement for seizure identification between non-experts receiving the EEG program and experts (i.e., neurophysiologists or epileptologists). For the assessment of secondary outcomes, we analyzed only the subset of studies reporting the relevant data.

Quality assessment of observational studies and risk of bias of randomized-controlled trials

Two reviewers (S.T. and W.A.) independently assessed the quality of observational studies using the National Institutes of Health (NIH) study quality assessment tools (available from https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools and also presented in the ESM, eAppendix 4). The optimal quality assessment tool is transparent, offers a comprehensive analysis of a study’s internal validity, is specific to the study design being evaluated, and avoids representing the study’s overall quality with a single numeric score.20 The NIH tools were chosen because they met all of these recommendations. Risk of bias was assessed at the study level and studies were assigned a quality of “good,” “fair,” or “poor” based on items considered essential for internal validity (full adjudication details are presented as ESM, eAppendix 4). One randomized-controlled trial was included in this systematic review and its risk of bias was evaluated with the Cochrane Collaboration’s Risk of Bias 2 (RoB 2) tool.21

Assessing the certainty of evidence

As discussed in our protocol, we anticipated difficulty providing Grading of Recommendations Assessment, Development and Evaluation (GRADE) summary statements for our chosen outcomes because of heterogeneity between studies. Our final review of the included studies confirmed this to be the case, so we did not generate GRADE recommendations for this systematic review.

Data analysis

The qualitative primary outcome was broken down by theme and described in the form of written summaries, graphs, and tables. Synthesized data for each of the two secondary outcomes were presented as written summaries and forest plots. A meta-analysis of studies contributing to our secondary outcomes was not initially planned because of anticipated clinical heterogeneity between studies.17 However, after finalizing our study list and reviewing their clinical methods, we felt there was sufficient similarity between studies to undertake a standard meta-analysis following the Cochrane Handbook’s recommendations.19

We used the standardized mean change to compare evaluation scores before and after the EEG training program. The standard deviation (SD) of the mean change was calculated from patient-level data when available;22,23,24 otherwise, it was derived from the mean (SD) of the overall cohort, assuming a correlation of 0.5 between pre-post scores.25,26,27 The standardized mean change is a measure that allows meta-analysis of pre-post studies that report scores on different scales.28 Standardized mean changes were then pooled using the inverse variance method with DerSimonian–Laird random effects. We performed two sensitivity analyses to estimate the SD of the change using correlations of 0.3 and 0.7 between pre-post scores. Cohen’s kappa coefficients were pooled using similar methods. Cohen’s kappa assumes that subjects being rated are independent of each other, the categories of ratings are independent and mutually exclusive, and the two raters operate independently.29 The data available in some papers had the same patients’ EEGs evaluated by multiple non-experts (i.e., the subject independence assumption was incompletely met), leading to estimates of kappa’s confidence intervals that were potentially narrower than they should have been.
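The pooling steps described above can be illustrated with a minimal Python sketch. It is not the analysis code used in this review. The function names, the large-sample variance approximation for the standardized mean change, and all numerical inputs are assumptions chosen only for illustration.

```python
import math

def sd_of_change(sd_pre, sd_post, r=0.5):
    """SD of the pre-post change, given both SDs and an assumed correlation r."""
    return math.sqrt(sd_pre**2 + sd_post**2 - 2.0 * r * sd_pre * sd_post)

def standardized_mean_change(mean_pre, mean_post, sd_pre, sd_post, n, r=0.5):
    """Return (effect, variance), standardizing the mean change by the SD of the change.

    The variance uses a common large-sample approximation (an assumption here).
    """
    d = (mean_post - mean_pre) / sd_of_change(sd_pre, sd_post, r)
    var = 1.0 / n + d**2 / (2.0 * n)
    return d, var

def dersimonian_laird(effects, variances):
    """Inverse-variance pooling with the DerSimonian-Laird estimate of tau^2."""
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_star = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), tau2

# Hypothetical studies: (mean_pre, mean_post, sd_pre, sd_post, n). Not review data.
studies = [(50.0, 70.0, 12.0, 10.0, 9), (40.0, 55.0, 15.0, 14.0, 30), (60.0, 66.0, 8.0, 9.0, 57)]

# Base case (r = 0.5) plus the two sensitivity correlations named in the text.
for r in (0.3, 0.5, 0.7):
    pairs = [standardized_mean_change(*s, r=r) for s in studies]
    pooled, ci, tau2 = dersimonian_laird([p[0] for p in pairs], [p[1] for p in pairs])
    print(f"r={r}: pooled={pooled:.2f}, 95% CI=({ci[0]:.2f}, {ci[1]:.2f}), tau2={tau2:.2f}")
```

A higher assumed correlation shrinks the SD of the change and therefore inflates the standardized mean change, which is why the choice of correlation is worth testing in sensitivity analyses.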

Results

Literature search

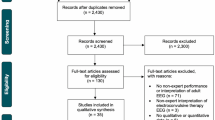

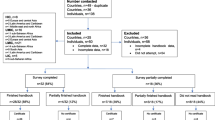

The search yielded 7,486 studies, of which 6,035 were screened and 29 were included (Fig. 2). Table 1 presents the characteristics of included studies. Studies were all published in English and included 26 cohort studies,14,15,16,22,23,24,25,26,27,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46 two descriptive studies,47,48 and one randomized-controlled trial.49

Study characteristics

All studies described a program for educating non-experts in EEG in the acute care environment. Twenty-two studies were single centre in design, 17 originated in North America, and 16 were published after 2016. Sample sizes ranged from four educational participants in the smallest studies16,32,41 to 250 in the largest.46 Only seven studies had more than 50 participants.14,25,31,33,35,36,46 One study was performed in the ED,49 18 in adult ICUs (either general medical-surgical ICU or neuro-ICU),14,22,23,24,25,26,30,32,33,38,39,40,41,42,43,44,45,46 four in pediatric ICUs,15,31,34,37 and six in neonatal ICUs.16,27,35,36,47,48 In three studies, participants learned intraoperative EEG from trained experts during various neurosurgical procedures, although this was in the context of a broader educational curriculum that largely occurred within the neuro-ICU.22,23,33 None of the studies were conducted in the postanesthesia care unit. Additional study characteristics are presented as ESM (eAppendix 5).

Four studies were conducted by the same primary author,22,23,24,33 but because the educational program and participants were different between the studies (confirmed by correspondence with the study author), all four were included and analyzed separately. Two studies outlined programs for EEG training of non-experts but did not pilot them in a group of participants.47,48 Both were classified as descriptive studies and included only in the assessment of the qualitative primary outcome.

Outcome analysis

Primary outcome

Clinical background of participants

Most EEG training programs were geared towards nurses. Overall, critical care nurses were the most common participants (17 studies),15,25,27,30,31,32,34,35,36,37,38,39,42,43,44,46,47 followed by critical care physicians (11 studies)14,15,16,27,36,37,40,41,44,46,48 and critical care fellows (seven studies).15,24,25,26,34,44,46 Medical residents comprised a minority of participants in EEG educational programs. Only one study included ED physicians.49

Content of EEG training

The most common learning objective among programs was seizure identification (Table 1). Few studies focused on recognition of other EEG patterns, such as normal background, sleep/wake patterns, and artefacts. In several studies, the learning program involved a mix of EEG theory, practical considerations (e.g., how to set up the recording system or apply electrodes), and a general approach to waveform interpretation.

Processed forms of EEG were more common in learning programs than raw cEEG or intermittent EEG. As shown in Table 1, although raw data were collected by cEEG in most studies, participants rarely reviewed these tracings and the educational program was mostly performed using simplified outputs (e.g., aEEG and CDSA). By far, the most common training program involved aEEG (15 studies);15,16,27,31,32,34,35,36,37,38,39,40,41,43,47 whereas raw cEEG14,26,35,40,42,46,48 and intermittent EEG22,23,24,25,33,45,49 were evaluated in seven studies each.

Teaching methods

EEG education programs involved a mix of large group didactic lectures, small group sessions, and self-learning or one-on-one review with EEG experts. Didactic lectures were common whereas small group simulations, case-based learning, and textbook readings were used in a smaller proportion of programs. Full details of the teaching methods for each study, including the content of the training program and teaching methods, are presented as ESM eAppendices 6 and 7.

Assessment methods

Assessments occurred in the form of written quizzes or bedside/real-time EEG interpretation. Among studies reporting assessment methods, 18 used written quizzes15,22,23,24,25,26,27,30,33,34,36,38,42,44,46,47,48,49 and nine used bedside interpretations.16,31,32,37,39,40,41,43,45 Only four studies assessed participants’ long-term retention of EEG concepts.33,42,46,49 Knowledge was assessed between two and 11 months in these studies and each indicated statistically significant improvement in EEG test scores compared with the baseline test administered before the start of the education program (data could not be meta-analyzed). Additional details are presented as ESM, eAppendix 6.

Duration of the learning program

In most studies, the overall length of the training program was one day or shorter. Among studies reporting training length, program duration was fewer than three hours in eight studies,15,30,34,38,39,41,43,46 between three and six hours in six studies,16,26,27,31,32,36 and between seven hours and one day in three studies.40,47,48 Only in four studies was the overall duration of the training program longer than one day.22,23,33,37

Participant feedback

Participant feedback was available in three studies.24,27,47 Results were reported using different measures and could not be meta-analyzed. In the first study, 70.4% of participants felt that the program allowed them to interpret a normal aEEG with confidence and 77.8% believed the duration of the teaching program was optimal.27 In the second study, satisfaction with the EEG curriculum was moderately high [mean (SD) score, 3.7 (0.9)] on a Likert scale of 1–5.24 In the final study, feedback was assessed qualitatively and indicated that participants felt more comfortable with EEG equipment and tracings.47

Secondary outcomes

Pre-post performance on structured assessments

Meta-analysis was performed for six pre-post studies.22,23,24,25,26,27 Each study compared trainee performance on a written assessment before and after receiving the EEG training program. There was a significant improvement in trainees’ test scores following the EEG program (standardized mean change, 1.79; 95% CI, 0.86 to 2.73), although statistical heterogeneity was considerable (I² = 85.5%). For interpretability, a standardized mean difference (SMD) of 0.2 represents a “small” effect, an SMD of 0.5 represents a “medium” effect, and an SMD of 0.8 represents a “large” effect.50 The forest plot of pre-post studies is shown in Fig. 3. Subgroup analysis was not performed owing to the small number of available studies.
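For readers less familiar with the heterogeneity statistic reported above, the following Python sketch shows how I² is conventionally derived from Cochran's Q. The function name and the input values are hypothetical and do not reproduce the data from the included studies.

```python
def i_squared(effects, variances):
    """I^2 (in percent): share of total variability attributed to between-study heterogeneity."""
    w = [1.0 / v for v in variances]                       # inverse-variance weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0

# Hypothetical effect sizes and variances for three studies (illustration only).
print(round(i_squared([0.9, 1.8, 3.1], [0.10, 0.08, 0.20]), 1))
```

I² is truncated at zero when Q is smaller than its degrees of freedom, so identical study estimates yield a value of 0%.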

Comparison between non-experts and experts for seizure identification

Six studies assessed agreement for seizure identification between experts and non-experts who had received brief EEG training.14,30,31,39,44,45 Meta-analysis indicated moderate agreement for seizure identification (Cohen’s kappa = 0.44; 95% CI, 0.27 to 0.60) although statistical heterogeneity was considerable (I² = 98.0%). The forest plot is shown in Fig. 4. Meta-analysis could not be performed for agreement in other aspects of EEG interpretation (e.g., artefact, burst suppression), given that these were not assessed in most studies.
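As an illustration of the agreement statistic pooled above, the following Python sketch computes Cohen's kappa for a single hypothetical study from a cross-tabulation of non-expert and expert ratings. The function name and the counts are assumptions for illustration and are not taken from any included study.

```python
def cohens_kappa(table):
    """Cohen's kappa from a square table: table[i][j] counts EEGs that the
    non-expert placed in category i and the expert placed in category j."""
    n = sum(sum(row) for row in table)
    k = len(table)
    p_observed = sum(table[i][i] for i in range(k)) / n
    row_marg = [sum(table[i]) / n for i in range(k)]
    col_marg = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    p_expected = sum(row_marg[i] * col_marg[i] for i in range(k))  # chance agreement
    # Undefined when chance agreement equals 1 (all ratings in one category).
    return (p_observed - p_expected) / (1.0 - p_expected)

# Hypothetical 2x2 table; categories are [seizure, no seizure].
ratings = [[20, 5],
           [10, 15]]
print(round(cohens_kappa(ratings), 2))  # 0.4
```

In this example, raw agreement is 70%, but half of that agreement would be expected by chance alone, which is why kappa is considerably lower than the raw percentage.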

Funnel plots for both secondary outcomes are displayed as ESM, eAppendices 8 and 9. There do not appear to be any small studies reporting large effect estimates in either meta-analysis. Assessment for publication bias was deferred because the number of studies was low.

Quality of reported studies

Summary results from the NIH quality assessment for non-descriptive observational studies are presented in Table 2. Study quality was assigned as “good”, “fair”, or “poor” based on items deemed crucial for strong internal validity, a qualitative approach that is in line with the broader recommendation to avoid assigning global numeric scores during quality assessment.20 The majority of studies in this review ranked as fair to good in quality. One randomized-controlled trial was included,49 and its overall risk of bias was judged to be low (ESM, eAppendix 10).

Discussion

Our systematic review summarizes the published literature on EEG training programs for non-experts in critical care settings. We identified 29 studies spanning multiple countries. Most programs were targeted to critical care nurses, focused on processed EEG (e.g., aEEG and CDSA), and were shorter than one day in duration. Participants’ short-term knowledge of EEG theory significantly improved after the program, and analysis of studies assessing learners against experts showed moderate agreement for seizure identification. The overall quality of published studies was fair to good as measured by the NIH quality assessment scales.

Our study has several important implications. First, by systematically reviewing the existing content, structure, and design of EEG programs for critical care clinicians, our study provides comprehensive information to guide the development of future EEG programs. Educators will find the answers to important logistical questions in our review, such as who could deliver EEG content, which teaching methods should be considered, and how knowledge could be evaluated. Although we were unable to assess these concepts quantitatively, our study nevertheless provides curriculum designers with a framework to aid in the development of institution-specific programs.

Second, our study highlights the typically condensed and limited nature of EEG programs for non-experts. We found that most studies focused on seizure identification in critically ill patients but did not emphasize other indications for which EEG may be requested. This may reflect the specific nature of critical care environments, where an overarching priority is the diagnosis of non-convulsive seizures and non-convulsive status epilepticus (NCSE). Nevertheless, it also highlights the important point that EEG interpretation is a complex skill that cannot be mastered from a short educational program. In Canada and the United States, for example, the average length of neurophysiology fellowship is six months to one year and follows a four- or five-year neurology residency.51,52 Approximately 50% of overall training time in most fellowships is devoted to EEG or the epilepsy monitoring unit.52 Conversely, EEG training programs for non-experts cannot be nearly as comprehensive, leading to emphasis on topics that are easily teachable. Our study supported this notion with the observation that most programs focused on seizure identification with processed forms of EEG rather than raw cEEG. Processed EEG, such as CDSA or aEEG, allows for rapid detection of pathologies and may be particularly suited for non-experts owing to its condensed nature and more obvious demonstration of seizure activity.53 This observation may help educators maximize the yield of a brief EEG curriculum.

Third, our study draws attention to a wider and more recent trend in neurocritical care, in which non-neurologists are playing a larger role in EEG interpretation. In a recent survey of members of the American Clinical Neurophysiology Society, 21% of respondents indicated that non-experts took part in EEG interpretation at their hospitals.54 Another survey found that EEG technologists reviewed full cEEGs at 26% of respondents’ home institutions.7 With increasing participation of non-neurologists in EEG review, an important concern is that clinicians may interpret EEGs incorrectly and make clinical decisions based on erroneous information. This is compounded by the issue of overconfidence, as highlighted by the study from Poon et al. in which 70.4% of participants felt comfortable in their ability to diagnose seizures after receiving only a brief EEG curriculum.27 Nevertheless, as EEG programs become more standardized, it is foreseeable that non-experts will acquire a more robust and dependable skillset to recognize and act upon basic patterns. Such a transition might follow the example of critical care echocardiography. Whereas ten years ago echocardiography was a cardiology-led initiative, it is now recognized as a critical care competency that plays an important role in bedside patient management.55 A final advantage of EEG training programs is their potential to reduce the time to an initial diagnosis. Currently, even in large academic centres with specialized EEG expertise, there may be delays of several hours for EEG interpretation.9,56,57 For non-convulsive seizures and NCSE, delayed diagnosis is associated with increased morbidity and mortality.10,11,13 Bedside interpretation of EEG by non-experts could substantially shorten these delays and expedite patient management.

The strengths of our review lie in its transparent reporting process and rigorous methodology. As per best practices,18 our study was registered in PROSPERO and adhered to a pre-specified study protocol.17 Meta-analysis was performed following the Cochrane Handbook recommendations.19 Finally, our study was performed by a diverse team of clinicians (including intensivists, neurologists and neuro-intensivists), research scientists, and health information specialists who had specific subject matter and methodologic expertise.

This review also has important limitations. First, our quantitative analysis was limited to seizure identification. Only a few studies described other important EEG tracings such as normal background, burst suppression, sleep/wake cycle, or artefact.31,38 In the ICU, artefacts are common and may result from equipment (e.g., mechanical ventilator, hemodialysis machine), patient physiology (e.g., excessive sweating, cardiac arrhythmias), and monitoring devices (e.g., electrocardiogram).58 These are worth noting because non-experts might overcall seizures if they cannot distinguish true seizures from artefacts or other abnormalities.30 Second, the studies in our review implemented a wide range of teaching methods and multiple EEG modalities. Since we were unable to explore possible associations between outcomes and specific teaching methods or EEG modality, important differences in efficacy between various approaches remain unknown. Nevertheless, the fact that short-term knowledge still improved across a diverse set of EEG programs targeted to different participants is hypothesis generating and supports the need for further research into the best methods to teach EEG to non-experts. Third, our meta-analysis of pre-post studies was limited to short-term knowledge outcomes. We could not draw robust conclusions on long-term content retention since only a minority of studies assessed this variable. Fourth, the large effect (> 0.8) observed in the standardized mean change in pre-post studies may over-estimate the true effect of the EEG intervention as the meta-analysis included four small studies with fewer than ten participants and only two studies with 30 and 57 participants. Therefore, the SDs may not be representative of the true variability existing in the population.50 Finally, our meta-analysis of Cohen’s kappa may have been affected by the fact that not all studies met the subject independence assumption, leading to potentially narrower confidence intervals from each study. We proceeded with meta-analysis because the summary estimate for kappa would be unaffected (i.e., our kappa estimate of 0.44 is unlikely to be substantially changed); furthermore, in a foundational paper describing reporting methods for Cohen’s kappa, a meta-analysis was reported for a representative group of studies despite incomplete adherence to the subject independence assumption, similar to our case.29

Conclusions

Our systematic review and meta-analysis offers a comprehensive summary of existing EEG training programs for non-experts. It shows that EEG programs may improve short-term knowledge in mixed groups of participants and suggests that non-experts can be trained to detect seizures on EEG after receiving brief training. Finally, our review offers educators and curriculum developers a framework to develop institution-specific EEG programs.

References

Claassen J, Taccone FS, Horn P, et al. Recommendations on the use of EEG monitoring in critically ill patients: consensus statement from the neurointensive care section of the ESICM. Intensive Care Med 2013; 39: 1337-51.

Brophy GM, Bell R, Claassen J, et al. Guidelines for the evaluation and management of status epilepticus. Neurocrit Care 2012; 17: 3-23.

Herman ST, Abend NS, Bleck TP, et al. Consensus statement on continuous EEG in critically ill adults and children, part I: indications. J Clin Neurophysiol 2015; 32: 87-95.

Shellhaas RA, Chang T, Tsuchida T, et al. The American Clinical Neurophysiology Society’s guideline on continuous electroencephalography monitoring in neonates. J Clin Neurophysiol 2011; 28: 611-7.

Ney JP, van der Goes DN, Nuwer MR, Nelson L, Eccher MA. Continuous and routine EEG in intensive care: utilization and outcomes, United States 2005-2009. Neurology 2013; 81: 2002-8.

Sanchez SM, Carpenter J, Chapman KE, et al. Pediatric ICU EEG monitoring: current resources and practice in the United States and Canada. J Clin Neurophysiol 2013; 30: 156-60.

Gavvala J, Abend N, LaRoche S, et al. Continuous EEG monitoring: a survey of neurophysiologists and neurointensivists. Epilepsia 2014; 55: 1864-71.

Powers L, Shepard KM, Craft K. Payment reform and the changing landscape in medical practice: implications for neurologists. Neurol Clin Pract 2012; 2: 224-30.

Gururangan K, Razavi B, Parvizi J. Utility of electroencephalography: experience from a U.S. tertiary care medical center. Clin Neurophysiol 2016; 127: 3335-40.

Abend NS, Topjian AA, Gutierrez-Colina AM, Donnelly M, Clancy RR, Dlugos DJ. Impact of continuous EEG monitoring on clinical management in critically ill children. Neurocrit Care 2011; 15: 70-5.

Claassen J, Mayer SA, Kowalski RG, Emerson RG, Hirsch LJ. Detection of electrographic seizures with continuous EEG monitoring in critically ill patients. Neurology 2004; 62: 1743-8.

Abend NS, Gutierrez-Colina AM, Topjian AA, et al. Nonconvulsive seizures are common in critically ill children. Neurology 2011; 76: 1071-7.

Shneker BF, Fountain NB. Assessment of acute morbidity and mortality in nonconvulsive status epilepticus. Neurology 2003; 61: 1066-73.

Citerio G, Patruno A, Beretta S, et al. Implementation of continuous qEEG in two neurointensive care units by intensivists: a feasibility study. Intensive Care Med 2017; 43: 1067-8.

Du Pont-Thibodeau G, Sanchez SM, Jawad AF, et al. Seizure detection by critical care providers using amplitude-integrated electroencephalography and color density spectral array in pediatric cardiac arrest patients. Pediatr Crit Care Med 2017; 18: 363-9.

Rennie JM, Chorley G, Boylan GB, Pressler R, Nguyen Y, Hooper R. Non-expert use of the cerebral function monitor for neonatal seizure detection. Arch Dis Child Fetal Neonatal Ed 2004; 89: F37-40.

Taran S, Ahmed W, Bui E, Prisco L, Hahn CD, McCredie VA. Educational initiatives and implementation of electroencephalography into the acute care environment: a protocol of a systematic review. Syst Rev 2020; DOI: https://doi.org/10.1186/s13643-020-01439-x.

Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. J Clin Epidemiol 2009; 62: 1006-12.

Higgins JP, Thomas J, Chandler J, et al. Cochrane Handbook for Systematic Reviews of Interventions version 6.0. Chichester (UK): John Wiley & Sons; 2019.

Viswanathan M, Ansari MT, Berkman ND, et al. Assessing the Risk of Bias of Individual Studies in Systematic Reviews of Health Care Interventions. Methods Guide for Effectiveness and Comparative Effectiveness Reviews. AHRQ Methods for Effective Health Care. Rockville (MD): Agency for Healthcare Research and Quality (US); 2008.

Sterne JA, Savović J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ 2019; DOI: https://doi.org/10.1136/bmj.l4898.

Fahy BG, Chau DF, Bensalem-Owen M. Evaluating the requirements of electroencephalograph instruction for anesthesiology residents. Anesth Analg 2009; 109: 535-8.

Fahy BG, Chau DF, Owen MB. The effectiveness of a simple novel approach on electroencephalograph instruction for anesthesiology residents. Anesth Analg 2008; 106: 210-4.

Fahy BG, Vasilopoulos T, Chau DF. Use of flipped classroom and screen-based simulation for interdisciplinary critical care fellow teaching of electroencephalogram interpretation. Neurocrit Care 2020; 33: 298-302.

Leira EC, Bertrand ME, Hogan RE, et al. Continuous or emergent EEG: can bedside caregivers recognize epileptiform discharges? Intensive Care Med 2004; 30: 207-12.

Chau D, Bensalem-Owen M, Fahy BG. The impact of an interdisciplinary electroencephalogram educational initiative for critical care trainees. J Crit Care 2014; 29: 1107-10.

Poon WB, Tagamolila V, Toh YP, Cheng ZR. Integrated approach to e-learning enhanced both subjective and objective knowledge of aEEG in a neonatal intensive care unit. Singapore Med J 2015; 56: 150-6.

Becker BJ. Synthesizing standardized mean-change measures. Br J Math Stat Psychol 1988; 41: 257-78.

Sun S. Meta-analysis of Cohen’s kappa. Health Serv Outcomes Res Method 2011; 11: 145-63.

Amorim E, Williamson CA, Moura LM, et al. Performance of spectrogram-based seizure identification of adult EEGs by critical care nurses and neurophysiologists. J Clin Neurophysiol 2017; 34: 359-64.

Bourgoin P, Barrault V, Loron G, et al. Interrater agreement between critical care providers for background classification and seizure detection after implementation of amplitude-integrated electroencephalography in neonates, infants, and children. J Clin Neurophysiol 2020; 37: 259-62.

Dericioglu N, Yetim E, Bas DF, et al. Non-expert use of quantitative EEG displays for seizure identification in the adult neuro-intensive care unit. Epilepsy Res 2015; 109: 48-56.

Fahy BG, Vasilopoulos T, Bensalem-Owen M, Chau DF. Evaluating an interdisciplinary EEG initiative on in-training examination EEG-related item scores for anesthesiology residents. J Clin Neurophysiol 2019; 36: 127-34.

Lalgudi Ganesan S, Stewart CP, Atenafu EG, et al. Seizure identification by critical care providers using quantitative electroencephalography. Crit Care Med 2018; 46: e1105-11.

Goswami I, Bello-Espinosa L, Buchhalter J, et al. Introduction of continuous video EEG monitoring into 2 different NICU models by training neonatal nurses. Adv Neonat Care 2018; 18: 250-9.

Griesmaier E, Neubauer V, Ralser E, Trawoger R, Kiechl-Kohlendorfer U, Keller M. Need for quality control for aEEG monitoring of the preterm infant: a 2-year experience. Acta Paediatr 2011; 100: 1079-83.

Guan Q, Li S, Li X, Yang HP, Wang Y, Liu XY. Feasibility of using amplitude-integrated electroencephalogram to identify epileptic seizures by pediatric intensive care unit medical staff independently (Chinese). Zhonghua Er Ke Za Zhi 2016; 54: 823-8.

Herta J, Koren J, Fürbass F, et al. Applicability of NeuroTrend as a bedside monitor in the neuro ICU. Clin Neurophysiol 2017; 128: 1000-7.

Kang JH, Sherill GC, Sinha SR, Swisher CB. A trial of real-time electrographic seizure detection by neuro-ICU nurses using a panel of quantitative EEG trends. Neurocrit Care 2019; 31: 312-20.

Lybeck A, Cronberg T, Borgquist O, et al. Bedside interpretation of simplified continuous EEG after cardiac arrest. Acta Anaesthesiol Scand 2020; 64: 85-92.

Nitzschke R, Muller J, Engelhardt R, Schmidt GN. Single-channel amplitude integrated EEG recording for the identification of epileptic seizures by nonexpert physicians in the adult acute care setting. J Clin Monit Comput 2011; 25: 329-37.

Seiler L, Fields J, Peach E, Zwerin S, Savage C. The effectiveness of a staff education program on the use of continuous EEG with patients in neuroscience intensive care units. J Neurosci Nurs 2012; 44: E1-5.

Swisher CB, White CR, Mace BE, et al. Diagnostic accuracy of electrographic seizure detection by neurophysiologists and non-neurophysiologists in the adult ICU using a panel of quantitative EEG trends. J Clin Neurophysiol 2015; 32: 324-30.

Topjian AA, Fry M, Jawad AF, et al. Detection of electrographic seizures by critical care providers using color density spectral array after cardiac arrest is feasible. Pediatr Crit Care Med 2015; 16: 461-7.

Kyriakopoulos P, Ding JZ, Niznick N, et al. Resident use of EEG cap system to rule out nonconvulsive status epilepticus. J Clin Neurophysiol 2020; DOI: https://doi.org/10.1097/WNP.0000000000000702.

Legriel S, Jacq G, Lalloz A, et al. Teaching important basic EEG patterns of bedside electroencephalography to critical care staffs: a prospective multicenter study. Neurocrit Care 2020; DOI: https://doi.org/10.1007/s12028-020-01010-5.

Sacco L. Amplitude-integrated electroencephalography interpretation during therapeutic hypothermia: an educational program and novel teaching tool. Neonat Netw 2016; 35: 78-86.

Whitelaw A, White RD. Training neonatal staff in recording and reporting continuous electroencephalography. Clin Perinatol 2006; 33: 667-77.

Chari G, Yadav K, Nishijima D, Omurtag A, Zehtabchi S. Improving the ability of ED physicians to identify subclinical/electrographic seizures on EEG after a brief training module. Int J Emerg Med 2019; DOI: https://doi.org/10.1186/s12245-019-0228-9.

Cohen J. Statistical Power Analysis for the Behavioral Sciences. Hillsdale, NJ: Lawrence Erlbaum Associates; 1988.

Task Force of the Canadian Society of Clinical Neurophysiologists. Minimal standards for electroencephalography in Canada. Can J Neurol Sci 2002; 29: 216-20.

Haneef Z, Chiang S, Rutherford HC, Antony AR. A survey of neurophysiology fellows in the United States. J Clin Neurophysiol 2017; 34: 179-86.

Kim JA, Moura LM, Williamson C, et al. Seizures and quantitative EEG. In: Varelas PN, Claassen J, editors. Seizures in Critical Care: A Guide to Diagnosis and Therapeutics. Cham: Springer International Publishing; 2017. p. 51-75.

Swisher CB, Sinha SR. Utilization of quantitative EEG trends for critical care continuous EEG monitoring: a survey of neurophysiologists. J Clin Neurophysiol 2016; 33: 538-44.

Vieillard-Baron A, Millington SJ, Sanfilippo F, et al. A decade of progress in critical care echocardiography: a narrative review. Intensive Care Med 2019; 45: 770-88.

Quigg M, Shneker B, Domer P. Current practice in administration and clinical criteria of emergent EEG. J Clin Neurophysiol 2001; 18: 162-5.

Vespa PM, Olson DM, John S, et al. Evaluating the clinical impact of rapid response electroencephalography: the DECIDE multicenter prospective observational clinical study. Crit Care Med 2020; 48: 1249-57.

White DM, Van Cott AC. EEG artifacts in the intensive care unit setting. Am J Electroneurodiagnostic Technol 2010; 50: 8-25.

Author contributions

Shaurya Taran was responsible for project conception, performing the title and abstract screening, reviewing the full texts of eligible articles, extracting data, performing the NIH quality assessment on included studies, compiling the figures and tables, and writing the final manuscript. Wael Ahmed performed the literature search, data extraction, and NIH quality assessment. Ruxandra Pinto performed the statistical analysis and meta-analysis and created the forest and funnel plots. Esther Bui was responsible for project conception, critical revisions, and manuscript preparation. Lara Prisco was responsible for project conception, critical revisions, finalizing the data extraction sheet, and manuscript preparation. Cecil D. Hahn was responsible for project conception, critical revisions, and manuscript preparation. Marina Englesakis was responsible for the creation of the search strategies, critical revisions, and write-up of the search details. Victoria A. McCredie was responsible for project conception, methodologic guidance, critical revisions, statistical interpretation, and manuscript preparation.

Acknowledgements

The authors would like to thank Dr. Edilberto Amorim, Dr. Jennifer Kang, Dr. Enrique Leira, Dr. Woei Bing Poon, Ms. Lisa Seiler, and Dr. Alexis Topjian for providing additional data from their studies. None were paid for their contributions.

Disclosures

Dr. Cecil Hahn reported receiving grants from Takeda Pharmaceuticals and UCB Pharma outside the submitted work. No other authors have any affiliations that may be perceived as conflicts of interest.

Funding statement

None.

Editorial responsibility

This submission was handled by Dr. Hilary P. Grocott, former Editor-in-Chief, Canadian Journal of Anesthesia.

Data availability statement

Additional data and materials not in the manuscript or supplemental appendices can be made available upon reasonable request.

About this article

Cite this article

Taran, S., Ahmed, W., Pinto, R. et al. Educational initiatives for electroencephalography in the critical care setting: a systematic review and meta-analysis. Can J Anesth/J Can Anesth 68, 1214–1230 (2021). https://doi.org/10.1007/s12630-021-01962-y

DOI: https://doi.org/10.1007/s12630-021-01962-y