Abstract

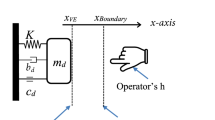

Haptic augmented virtuality systems can give users a highly immersive haptic experience through visuo-haptic collocation, that is, by placing physical objects at the same locations as their corresponding virtual objects. Collocation can be achieved with an encounter-type haptic display, in which a robotic device presents, in real time, a physical prop that represents all or part of a virtual object at the location that object occupies in the virtual environment. To achieve such collocation efficiently and accurately, this paper proposes a novel yet simple calibration method. The method employs a high-accuracy positioning device, such as an industrial robot, that can place physical objects quickly and accurately. The transformation between the reference frames of the real (robot) world and the virtual world is obtained from a simple motion of the robot's end-effector, which carries a real object that is tracked by a VR tracking system such as the HTC VIVE Tracker. An evaluation of the proposed method indicates that acceptable accuracy can be achieved with only a few data points, whereas existing calibration methods require a large number of data points.
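The abstract does not spell out how the robot-to-virtual-world transformation is computed from the recorded motion. One standard way to recover a rigid transformation from paired points (end-effector positions reported by the robot and the same positions measured by the VR tracker) is the Kabsch (SVD-based) least-squares fit, sketched below. This is a generic technique shown for illustration, not necessarily the authors' formulation. The function names and the synthetic data are hypothetical, and the sketch assumes NumPy and that the tracker's offset from the robot flange is already known, so both point sets describe the same physical point. Because a rigid transformation has six degrees of freedom, three non-collinear correspondences already determine it; additional points mainly average out tracker noise.

```python
import numpy as np


def estimate_rigid_transform(p_robot, p_tracker):
    """Least-squares rigid transform (R, t) with p_tracker ~= R @ p_robot + t.

    p_robot, p_tracker: (N, 3) arrays of corresponding points, N >= 3,
    not all collinear. Generic Kabsch/SVD fit (hypothetical helper),
    not the paper's own implementation.
    """
    P = np.asarray(p_robot, dtype=float)
    Q = np.asarray(p_tracker, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets
    H = (P - p_mean).T @ (Q - q_mean)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t


def rms_residual(R, t, p_robot, p_tracker):
    """Root-mean-square distance between mapped robot points and tracker points."""
    mapped = np.asarray(p_robot, dtype=float) @ R.T + t
    err = np.linalg.norm(mapped - np.asarray(p_tracker, dtype=float), axis=1)
    return float(np.sqrt(np.mean(err ** 2)))


# Synthetic example (hypothetical values, not data from the paper):
# four end-effector positions in the robot frame, in metres.
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.5, -0.2, 1.0])
p_robot = np.array([[0.3, 0.0, 0.2],
                    [0.3, 0.2, 0.2],
                    [0.5, 0.2, 0.4],
                    [0.4, -0.1, 0.5]])
# Positions the VR tracker would report for the same physical points (noise-free here)
p_tracker = p_robot @ R_true.T + t_true

R_est, t_est = estimate_rigid_transform(p_robot, p_tracker)
print("RMS residual [m]:", rms_residual(R_est, t_est, p_robot, p_tracker))
```

With real measurements, `p_tracker` would hold averaged tracker samples and contain noise; the RMS residual then gives a rough indication of calibration accuracy, and checking it on points not used for the fit is a fairer test.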

Additional information

Publisher’s Note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Recommended by Associate Editor Vu Nguyen under the direction of Editor Doo Yong Lee. This work was supported by a grant (14IFIP-B085984-02) from the Plant Research Program funded by the Ministry of Land, Infrastructure and Transport (MOLIT) of the Korean government and the Korea Agency for Infrastructure Technology Advancement (KAIA). The first and second authors contributed equally.

Yoosung Bae received his B.S. degree in Electrical Engineering and Computer Science from GIST (Gwangju Institute of Science and Technology), Gwangju, Korea, in 2017. He is currently a student in the integrated M.S./Ph.D. program in the School of Integrated Technology at GIST, Gwangju, Korea. His research interests are VR/AR applications and haptic interaction technology.

Baekdong Cha received his B.S. degree in Biomedical Engineering from Yonsei University, Wonju, Korea, in 2015, and his M.S. degree in Biomedical Science and Engineering from GIST (Gwangju Institute of Science and Technology), Gwangju, Korea, in 2017. He is currently a Ph.D. student in the School of Integrated Technology at GIST, Gwangju, Korea. His research interests are VR/AR applications and haptic interaction technology.

Jeha Ryu received his B.S., M.S., and Ph.D. degrees in Mechanical Engineering from Seoul National University, Seoul, Korea, in 1982, from KAIST (Korea Advanced Institute of Science and Technology), Daejeon, Korea, in 1984, and from the University of Iowa, United States of America, in 1991, respectively. He was previously a professor in the Department of Mechatronics and is now a professor in the School of Integrated Technology at GIST, Gwangju, Korea. Prof. Ryu is a member of ICASE, KSME, and IEEE. He has published more than 300 research articles and reports. His research interests include VR, AR, haptic interaction control, haptic modeling and rendering, haptic applications for various multimedia systems, and teleoperation.

About this article

Cite this article

Bae, Y., Cha, B. & Ryu, J. Calibration and Evaluation for Visuo-haptic Collocation in Haptic Augmented Virtuality Systems. Int. J. Control Autom. Syst. 18, 1335–1342 (2020). https://doi.org/10.1007/s12555-018-0882-3

