Abstract

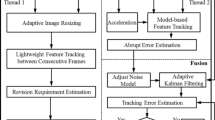

Virtual reality tracking devices are being investigated for motion tracking of robots, human bodies, indoor drones, mobile systems and similar platforms, but most studies so far have been limited to analysing the performance of commercial tracking devices under static conditions. This paper investigates methods for improving the accuracy of dynamic position and orientation measurements from the HTC Vive tracker. The signals from the tracker's photodiodes were extracted and fused with one another, and with signals from external Inertial Measurement Units (IMUs), using the Extended Kalman Filter (EKF) and the Unscented Kalman Filter (UKF). Configurations with multiple base stations, trackers and IMUs were used to evaluate measurement accuracy, and multiple paths were used to test different dynamic operating conditions. The results show that the proposed tracking system can improve accuracy by up to several millimetres by using the UKF instead of the EKF, increasing the number of base stations, increasing the number of trackers and fusing IMU data.
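The abstract credits part of the improvement to using the UKF rather than the EKF. As a generic illustration only (not the authors' filter, state model or data), the sketch below propagates a scalar Gaussian through the nonlinear function f(x) = x² in two ways: with the unscented transform introduced by Julier and Uhlmann, which a UKF uses, and with the first-order linearization an EKF uses. The function names and the choice κ = 2 (so that n + κ = 3 for a one-dimensional state) are assumptions made for this example.

```python
import math


def unscented_transform_1d(mean, var, f, kappa=2.0):
    """Propagate a scalar Gaussian N(mean, var) through f with the unscented transform.

    Uses 2n + 1 = 3 sigma points for n = 1. kappa = 2 is an assumed choice
    (n + kappa = 3, a common heuristic for Gaussian inputs).
    """
    n = 1
    spread = math.sqrt((n + kappa) * var)
    sigma_points = [mean, mean + spread, mean - spread]
    weights = [kappa / (n + kappa), 0.5 / (n + kappa), 0.5 / (n + kappa)]

    # Push each sigma point through the nonlinear function, then
    # recover the output mean and variance as weighted sample moments.
    ys = [f(x) for x in sigma_points]
    y_mean = sum(w * y for w, y in zip(weights, ys))
    y_var = sum(w * (y - y_mean) ** 2 for w, y in zip(weights, ys))
    return y_mean, y_var


def linearized_transform_1d(mean, var, f, df):
    """EKF-style first-order propagation: f at the mean, variance scaled by df(mean)^2."""
    return f(mean), df(mean) ** 2 * var


def square(x):
    return x * x


def d_square(x):
    return 2.0 * x


if __name__ == "__main__":
    m, p = 1.0, 0.5
    # Exact moments of x^2 for x ~ N(m, p): mean m^2 + p, variance 4 m^2 p + 2 p^2.
    exact = (m * m + p, 4 * m * m * p + 2 * p * p)
    print("exact     :", exact)
    print("unscented :", unscented_transform_1d(m, p, square))
    print("linearized:", linearized_transform_1d(m, p, square, d_square))
```

For this quadratic example the unscented transform recovers the exact mean (1.5) and variance (2.5), while the linearization returns 1.0 and 2.0 because it ignores the curvature of f. This is one commonly cited reason a UKF can outperform an EKF on strongly nonlinear measurement models; it is not a reproduction of the paper's results.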

Acknowledgements

The authors would like to acknowledge the Natural Sciences and Engineering Research Council of Canada (NSERC) and Alberta Innovates (AI) for supporting this work.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Weber, M., Hartl, R., Zäh, M.F. et al. Dynamic Pose Tracking Accuracy Improvement via Fusing HTC Vive Trackers and Inertia Measurement Units. Int. J. Precis. Eng. Manuf. 24, 1661–1674 (2023). https://doi.org/10.1007/s12541-023-00891-8

DOI: https://doi.org/10.1007/s12541-023-00891-8