Abstract

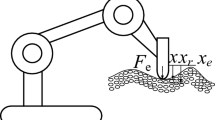

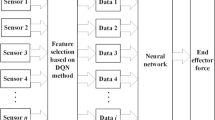

To address the difficulty of keeping the contact force at a robot end effector constant while it tracks an unknown curved-surface workpiece, a robot force control algorithm based on reinforcement learning is proposed. In this paper, a contact model and a force mapping relationship are established between the robot end effector and the surface. Because the tangential angle of the workpiece surface required by this mapping is difficult to obtain, a neural network is used to identify the tangential angle of the unknown curved surface. To keep the normal force at the end effector constant, a compensation term is added to a traditional explicit force controller so that it suits constant-force tracking. Because the parameters of the compensation term are difficult to select, the reinforcement learning algorithm A2C (advantage actor-critic) is used to find their optimal values, with the return function and state values of A2C modified to fit the robot tracking scenario. The results show that the neural network identifies the tangential angle of the curved surface well. After 60 iterations of the A2C-based force control algorithm, the error between the normal force and the expected force stays essentially within ±2 N. Moreover, compared with the force signals obtained by a fuzzy iterative algorithm and by explicit force control with two sets of fixed control parameters, the variance of the force error decreases by 50.7%, 34.05% and 79.41%, respectively.
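The force mapping and the explicit force loop summarized above can be illustrated with a minimal Python sketch. It is not the authors' implementation. It assumes a planar contact in the sensor x-z plane, a surface tangent at angle `theta` to the sensor x-axis (the quantity the neural network is said to estimate), a linear-spring contact of assumed stiffness `k_env`, and an incremental explicit force law. The names `normal_tangential_force`, `simulate_normal_force`, `kp` and `kc` are hypothetical, and the `kc` accumulated-error term only stands in for a compensation term whose actual form is not given in the abstract.

```python
import math


def normal_tangential_force(fx, fz, theta):
    """Project a planar contact force (fx, fz) onto the local surface frame.

    Assumption: the surface tangent makes angle theta (rad) with the sensor
    x-axis, so the unit tangent is (cos theta, sin theta) and the unit normal
    is (-sin theta, cos theta). Returns (normal force, tangential force).
    """
    f_n = -fx * math.sin(theta) + fz * math.cos(theta)
    f_t = fx * math.cos(theta) + fz * math.sin(theta)
    return f_n, f_t


def simulate_normal_force(f_desired, surface, kp=5e-5, kc=0.0, k_env=1e4):
    """Incremental explicit force control along the surface normal (sketch).

    surface[k]: surface position along the normal at step k (m).
    Contact model (assumed): linear spring, f = k_env * max(0, x - surface[k]),
    with k_env in N/m. Control law: the tool moves by dx = kp * e + kc * sum(e)
    each step (gains in m/N), with e = f_desired - f. The kc term is a
    hypothetical compensation term. Returns the normal force at every step (N).
    """
    x = surface[0]          # start exactly touching the surface (zero force)
    err_sum = 0.0
    forces = []
    for s in surface:
        f = k_env * max(0.0, x - s)
        forces.append(f)
        e = f_desired - f
        err_sum += e
        x += kp * e + kc * err_sum  # position correction along the normal
    return forces


if __name__ == "__main__":
    print(normal_tangential_force(3.0, 4.0, math.radians(30)))
    ramp = [1e-5 * k for k in range(200)]  # surface recedes 10 micrometres per step
    print(simulate_normal_force(20.0, ramp)[-1])           # about 19.8 N
    print(simulate_normal_force(20.0, ramp, kc=8e-6)[-1])  # about 20.0 N
```

On the receding ramp surface, the proportional law alone settles about 0.2 N below the 20 N target (the offset equals the per-step surface motion divided by `kp`), while the hypothetical accumulated-error term removes that offset. Picking gains such as `kp` and `kc` is the kind of parameter selection the abstract assigns to A2C.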
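The advantage estimate at the core of A2C can likewise be sketched in Python. This is the generic n-step form from the actor-critic literature, not the modified return function or state definition used in the paper. The reward `force_tracking_reward` (negative absolute force error) and all function names are hypothetical, and in practice the log-probabilities and values would come from actor and critic networks rather than plain lists.

```python
def force_tracking_reward(f_normal, f_desired):
    """Hypothetical per-step reward: the negative absolute normal-force error (N)."""
    return -abs(f_normal - f_desired)


def n_step_returns(rewards, last_value, gamma=0.99, done=False):
    """Discounted returns R_t = r_t + gamma * R_{t+1}.

    The rollout is bootstrapped with the critic's estimate V(s_T) = last_value
    unless the episode ended (done=True), in which case the tail value is 0.
    """
    ret = 0.0 if done else last_value
    returns = []
    for r in reversed(rewards):
        ret = r + gamma * ret
        returns.append(ret)
    returns.reverse()
    return returns


def advantages(returns, values):
    """Advantage estimates A_t = R_t - V(s_t) that weight the policy gradient."""
    return [R - v for R, v in zip(returns, values)]


def a2c_losses(log_probs, returns, values):
    """Actor loss -mean(log pi(a_t|s_t) * A_t) and critic loss mean((R_t - V(s_t))^2).

    In an autodiff framework the advantages would be detached in the actor
    loss; an entropy bonus is omitted here for brevity.
    """
    adv = advantages(returns, values)
    n = len(adv)
    actor_loss = -sum(lp * a for lp, a in zip(log_probs, adv)) / n
    critic_loss = sum(a * a for a in adv) / n
    return actor_loss, critic_loss


if __name__ == "__main__":
    forces = [17.0, 19.0, 20.5]  # illustrative normal-force readings (N)
    rewards = [force_tracking_reward(f, 20.0) for f in forces]
    rets = n_step_returns(rewards, last_value=-0.5, gamma=0.9)
    print(a2c_losses([-1.2, -0.8, -1.0], rets, values=[-3.0, -1.5, -1.0]))
```

Subtracting the critic's value from the return keeps the policy-gradient estimate unbiased while reducing its variance, which is the main reason A2C uses advantages instead of raw returns.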

Acknowledgements

This research was supported by the National Science and Technology Major Project of China (2015ZX04005006), the Science and Technology Planning Project of Guangdong Province, China (2014B090921004, 2015B010918002), and the Science and Technology Planning Project of Zhongshan City (2016F2FC0006).

Cite this article

Zhang, T., Xiao, M., Zou, Yb. et al. Robotic Curved Surface Tracking with a Neural Network for Angle Identification and Constant Force Control based on Reinforcement Learning. Int. J. Precis. Eng. Manuf. 21, 869–882 (2020). https://doi.org/10.1007/s12541-020-00315-x

DOI: https://doi.org/10.1007/s12541-020-00315-x