Abstract

Marine infrastructures such as harbours, bridges, and locks are particularly exposed to salt water and are therefore subject to increasing deterioration. This makes regular inspection of the structures necessary. Currently, the inspection is carried out manually, with divers examining the underwater parts. To improve this costly and time-consuming process, we propose to scan the above-water and underwater structure of the port with a multi-sensor system (MSS) and to classify the obtained point cloud into damaged and undamaged areas fully automatically. The MSS consists of a high-resolution hydro-acoustic underwater multi-beam echo-sounder, an above-water profile laser scanner, and five HDR cameras. In addition to the IMU-GPS/GNSS method known from various applications, hybrid referencing with automatically tracking total stations is used for positioning. The key research idea relies on 3D data from TLS, multi-beam or dense image matching. For this purpose, we build a rasterised heightfield of the point cloud of a harbour structure by subtracting the CAD-based geometry from the measured 3D point cloud. To do this, we fit regular shapes into the point cloud and determine the distance of the points to the geometry. To detect anomalies in the data, we combine two methods: the VGG19 deep neural network (DNN) and the local outlier factor (LOF) method. To test and validate the developed methods, training data was simulated. Afterwards, the developed methods were evaluated on a real data set from Lübeck, Germany, which was acquired with the developed MSS. In contrast to the traditional, manual method by divers, the presented approach allows for automated, consistent, and complete damage detection. We achieved an accuracy of 90.5% on the real data. The approach can also be applied to other infrastructures such as tunnels and bridges.

Introduction

The infrastructure at ports in Germany, both on the sea and inland, is aging and in need of new technologies and methods for managing its lifespan. Traditional processes, which are labour-intensive and time-consuming, need to be replaced with automated, smart, and innovative measurement and analysis processes to improve transparency, efficiency, and reliability for more accurate lifetime predictions.

The infrastructure at ports is prone to degradation over time due to human activities and environmental factors, particularly the impact of saltwater on the material at seaports. This can lead to damage to concrete structures, sheet pile walls, and wooden construction. It is important to identify and assess the severity of this damage in order to take timely maintenance measures and prevent costly repairs or even infrastructure collapses.

Testing and monitoring of port infrastructural buildings typically involves both above and underwater parts. The above water portion is usually tested through manual and visual inspections, while the underwater portion is more difficult to assess. Divers must manually inspect the structure by touching it with their hands, but this method is unreliable and subjective, making it difficult to accurately classify and track damages. Additionally, the underwater inspection process is typically limited in scope, meaning that only a small percentage of the structure is actually examined. As a result, damage is often not detected until it has become severe or is not discovered at all.

Structural health monitoring with kinematic multi-sensor system

Sea and inland ports need to be inspected comprehensively on a regular basis. However, it is nearly impossible to visually inspect underwater areas, especially in river regions due to the high sediment content.

For this reason, we use a kinematic multi-sensor system (k-MSS) to record the object’s surface both above and below water.

Only in this way is it possible to scan the entire structure, reliably detect damage, and subsequently assess the current condition of the building in detail. In order to accurately record the building’s geometry and condition, high-resolution 3D data is required for both the underwater and above-water parts of the building.

The carrier platform, which functions as a boat-like vehicle, plays a central role in recording measurement data. However, it is also exposed to variables such as wind, waves, and currents. The carrier platform also serves to hold the sensor platform, and its design aims to prevent flow-related influences from causing quality-reducing deformation and constraining forces on the sensor platform. For this purpose, a drift and torsion compensation is carried out.

In addition to recording data, structural analyses are conducted to assess the service life and lifecycle of the infrastructures.

Port operators can use the results of these analyses to implement maintenance concepts and construction measures in accordance with the structure inspection as transparently as possible. Cost-intensive maintenance measures and long downtimes are significantly reduced by this procedure.

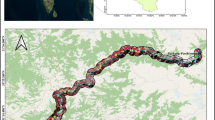

The k-MSS integrates various sensors for object recording, including a high-resolution hydroacoustic underwater multibeam echo sounder, a surface profile laser scanner, and five HDR cameras. Positioning is achieved through a combination of an IMU and GNSS, with the option of hybrid positioning using an automatically measuring total station on the shore (see Fig. 1).

Fig. 1 A 3D recording of a port facility above and below water (Hesse et al. 2019)

The underwater multibeam echo sounder and the profile laser scanner measure 2D profiles, and the movement of the carrier platform creates an unstructured point cloud. The expected noise of the point clouds is in the millimetre range above water and in the centimetre range underwater.

In modern data processing, damage detection is often done using pattern recognition methods (see Hesse et al. 2019). This work focuses on ensuring the quality, completeness, reproducibility, and automation of damage detection from 3D point clouds of port infrastructures above and under water.

The main focus of this study is the detection of geometric damage in point clouds. In order to fully investigate this topic, we decided to only use point cloud data in our analysis. This decision was made in order to provide a more focused and in-depth examination of the subject and to avoid any potential complications or distractions that might arise from using additional data sources. As a result, the captured images from the installed cameras were not included in our analysis. The selection of damage types is highly dependent on the intended use and the specific application. In this study, we tested a methodology using two different data sets: a synthetic set and a real set from the harbour of Lübeck, Germany. The results of this testing will help us better understand the effectiveness of the methodology. The following state of the art is limited to damage detection and does not include data collection and mapping (for more details on mapping and data collection, see Hesse et al. 2019).

Several publications address structural monitoring with detection methods, but mostly above water or in clear offshore areas.

Damage detection is mostly divided into two approaches: classification based on images or based on point clouds. In the case of point clouds, as in the present work, a distinction is made between point-based, area-based, and geometry-based methods (Neuner et al. 2016). Kalenjuk et al. (2021) presented deformation monitoring and damage detection for large retaining structures using motor-vehicle-based mobile mapping systems. Hadavandsiri et al. (2019) introduced a new point-based approach for the automatic, preliminary detection of damage in concrete structures with terrestrial laser scanners and a systematic threshold. Zhang et al. (2013) implemented a geometry-based approach (using quadrics) for the segmentation of point clouds.

Image-based methods are divided into various approaches and methods. In recent years, neural networks have been increasingly used for classification and segmentation tasks. Tung et al. (2013) take repeated images of a retaining wall with a standard camera to perform digital image correlation. O’Byrne et al. (2013) detect disturbances by texture segmentation of colour images, while Gatys et al. (2015) showed that neural networks trained on natural images learn to represent textures in a way that enables synthesising realistic textures and even whole scenes. Neural networks are thus preferred as feature extractors over hand-crafted features (Yosinski et al. 2014; Carvalho et al. 2017; Abati et al. 2019).

When detecting damage in point clouds or images, one very often has to deal with the problem of having very little damage and very many undamaged areas. This leads to a great imbalance of classes. One possible way around this problem is to look for instances that are most different from the majority of the data. Such methods that distinguish non-normal instances from the majority are often called anomaly detection (AD).

Anomaly detection with transfer learning

Anomaly detection is a technique used to identify unusual or suspicious events or data sets. It was first mentioned by Grubbs in 1969 for identifying outlying data points, but the definition has since evolved to include a wider range of applications. Anomalies are now understood as data that is different from the norm and is rare compared to other instances in a data set. Two commonly used methods for anomaly detection are transfer learning and local outlier factors (LOF). Transfer Learning involves using pre-trained neural networks to create models more quickly and efficiently (see, e.g. Andrews et al. 2016), while LOF evaluates an object based on its isolation from other data in its local environment (Breunig et al. 2000).
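For reference, the LOF of Breunig et al. (2000) can be written as follows. Here \(N_{k}(p)\) denotes the \(k\) nearest neighbours of a point \(p\), \(d(p,o)\) is a distance measure, and \(k\text{-dist}(o)\) is the distance from \(o\) to its \(k\)-th nearest neighbour. The symbols are chosen for illustration only and are not used elsewhere in this paper.

\[\mathrm{reach\text{-}dist}_{k}(p,o)=\max \left\{k\text{-dist}(o),\, d(p,o)\right\}\]

\[\mathrm{lrd}_{k}(p)=\left(\frac{1}{\left|N_{k}(p)\right|}\sum_{o\in N_{k}(p)}\mathrm{reach\text{-}dist}_{k}(p,o)\right)^{-1}\]

\[\mathrm{LOF}_{k}(p)=\frac{1}{\left|N_{k}(p)\right|}\sum_{o\in N_{k}(p)}\frac{\mathrm{lrd}_{k}(o)}{\mathrm{lrd}_{k}(p)}\]

Values close to 1 indicate that a point lies in a region of similar density to its neighbours, while values well above 1 indicate an isolated point.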

Nowadays, anomaly detection algorithms are used in many application domains. García-Teodoro et al. (2009) describe a method that uses anomaly detection algorithms to identify network-based intrusion. In this context, anomaly detection is also often called behavioural analysis, and these systems typically use simple but fast algorithms. Other possible scenarios are fraud detection (Li et al. 2012), medical applications like patient monitoring during electrocardiography (Lin et al. 2005), data leakage prevention (Sigholm and Raciti 2012), and other more specialised applications like movement detection in surveillance cameras (Basharat et al. 2008) or document authentication in forensic applications (Gebhardt et al. 2013).

Contribution

This work focuses on using anomaly detection methods to segment point clouds into two classes: damaged and not damaged. Because there are significantly more areas without damage, the classes are unequal in size. Additionally, the amount of available training data with true labels is limited, so we use pre-trained networks as generic feature generators. Before applying the anomaly detection algorithms, the point clouds must be pre-processed to make them suitable for analysis. We create a height field, also known as a digital elevation model (DEM) (Skidmore 1989), from the point clouds. Unlike natural images, the statistics of height fields depend on the scan resolution of the sensor, which makes it difficult to transfer pre-trained networks. The anomaly detection method we present can successfully differentiate between damaged and undamaged areas in point cloud-derived height fields. This is a novel approach to automated damage detection in structural monitoring, and it provides a foundation for further research in this field.

Methodology

In this paper, we convert point clouds into heightfields and use them as input for a deep neural network (DNN). The features extracted by the DNN are then used to calculate the local outlier factor (LOF) for individual sections (or "tiles") of the heightfield. By setting a threshold, we can classify each tile as either a damaged or undamaged region. This allows us to identify damage in the heightfield using the LOF and DNN.

Pre-processing of the point clouds

To accurately evaluate the results, we need to manually label the damage (concrete spalling) in the existing data. Additionally, objects such as fenders or ladders appear in the data and should not be included in the calculation of the heightfield. These objects are also manually labelled and assigned an index. This results in a point cloud with different indices for the quay wall, damage, and other objects. An example can be seen in the Data section. This labelling process is necessary for accurate and reliable analysis.

Generation of the heightfield

To create the heightfield, regular shapes are fitted into the point cloud, and the distance of the points to the geometry is calculated. In this study, we use a simple plane according to Drixler (1993) as the reference geometry. We first rotate the point clouds in a consistent direction using principal component analysis and then cut regular square sections from the point cloud. These sections overlap by 50% in the X and Y directions. This allows us to more easily fit geometries into the point cloud. After cutting, a plane is estimated in each section.
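The alignment and sectioning steps can be sketched as follows. This is a minimal illustration, not the implementation used in this work: the function names `align_with_pca` and `cut_sections` are hypothetical, the section edge length is a free parameter, and the PCA is computed with a singular value decomposition.

```python
import numpy as np

def align_with_pca(points):
    """Rotate an (N, 3) point cloud so that its principal axes match X, Y, Z.

    For a roughly planar wall, X and Y then span the wall and Z is its normal.
    """
    centered = points - points.mean(axis=0)
    # Rows of vt are the principal directions, sorted by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt.T

def cut_sections(points, size, overlap=0.5):
    """Yield ((x0, y0), section_points) for square sections in the XY plane.

    Neighbouring sections overlap by `overlap` (0.5 = 50%) in X and Y.
    """
    step = size * (1.0 - overlap)
    mins = points[:, :2].min(axis=0)
    maxs = points[:, :2].max(axis=0)
    for x0 in np.arange(mins[0], maxs[0], step):
        for y0 in np.arange(mins[1], maxs[1], step):
            inside = (
                (points[:, 0] >= x0) & (points[:, 0] < x0 + size)
                & (points[:, 1] >= y0) & (points[:, 1] < y0 + size)
            )
            if inside.any():  # skip empty sections
                yield (x0, y0), points[inside]
```

With a 50% overlap, every interior point belongs to four sections, which is what later allows values in overlapping areas to be averaged.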

When estimating the plane, we use only the points belonging to the quay wall and the damaged areas, which are identified by their indices. We then calculate the distance of each point to the estimated plane using the formula:

\[\mathrm{dist}=\frac{{n}_{x}{p}_{x}+{n}_{y}{p}_{y}+{n}_{z}{p}_{z}-d}{\left|\overrightarrow{{\varvec{n}}}\right|}\]

The vector \(\overrightarrow{{\varvec{n}}}\) is the normal vector of the plane with the entries \({n}_{x}\), \({n}_{y},\) and \({n}_{z}\). \(d\) is the distance to the origin, and \({p}_{x}\), \({p}_{y}\), and \({p}_{z}\) are the coordinates of the point. An example of the determined distances is shown in Fig. 2. Blue represents a small distance to the plane, while green, yellow, and red represent increasing distances. These distances are the basis for generating the heightfield from the point cloud data.
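A minimal sketch of the plane fit and the point-to-plane distance, assuming the plane is written as \(\overrightarrow{{\varvec{n}}}\cdot p=d\). The plane is estimated here with a plain least-squares fit via singular value decomposition, which stands in for the estimation according to Drixler (1993) and is not necessarily identical to it. The label values `WALL`, `DAMAGE`, and `OTHER` are hypothetical.

```python
import numpy as np

# Hypothetical label indices; the actual index values are not stated in the text.
WALL, DAMAGE, OTHER = 0, 1, 2

def fit_plane(points):
    """Least-squares plane through an (N, 3) array.

    Returns a unit normal n and an offset d such that n . p = d on the plane.
    The sign of n (and therefore of d) is arbitrary.
    """
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]  # direction of smallest variance
    return normal, float(normal @ centroid)

def plane_distance(points, normal, d):
    """Signed distance (n . p - d) / |n| for every row of `points`."""
    return (points @ normal - d) / np.linalg.norm(normal)

def section_distances(points, labels):
    """Fit the plane to wall and damage points only, then measure all points."""
    fit_mask = np.isin(labels, (WALL, DAMAGE))
    normal, d = fit_plane(points[fit_mask])
    return plane_distance(points, normal, d)
```

Because the orientation of the fitted normal is arbitrary, a consistent sign convention (for example, pointing the normal towards the sensor) would have to be enforced before interpreting positive and negative distances.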

To make deviations due to damage more noticeable, the distance to the plane is manually set to small values for points that indicate the presence of additional objects. The point clouds are then converted into a heightfield by rasterizing them. The raster size should be selected based on the resolution of the point cloud, and distances in overlapping areas are averaged during the rasterization process. The resulting heightfield is represented as a grayscale image, with black indicating a distance of zero and lighter shades indicating increasing distance. A corresponding label image, which is a binary image showing points with damage indices in white and all other points in black, is also created. Examples of the heightfield and label images are shown in Figs. 3 and 4.
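The rasterization step might look like the following sketch. It assumes that the points have already been aligned so that X and Y span the wall, that only the magnitude of the distance is encoded, and that the grey values are scaled linearly to the largest mean distance. The function name `rasterize` and its parameters are hypothetical.

```python
import numpy as np

def rasterize(xy, dist, cell, damaged=None):
    """Rasterize point-to-plane distances into a heightfield.

    xy      : (N, 2) in-plane coordinates of the points
    dist    : (N,) distances to the reference plane
    cell    : raster cell size, in the same unit as xy
    damaged : optional (N,) boolean mask of points labelled as damage

    Returns the mean distance per cell, an 8-bit grayscale image
    (black = zero distance), and a binary label image (white = damage).
    """
    xy = np.asarray(xy, dtype=float)
    dist = np.abs(np.asarray(dist, dtype=float))  # assumption: magnitude only
    idx = np.floor((xy - xy.min(axis=0)) / cell).astype(int)
    cols, rows = idx[:, 0], idx[:, 1]
    shape = (rows.max() + 1, cols.max() + 1)

    # Accumulate sums and counts so that points sharing a cell are averaged.
    total = np.zeros(shape)
    count = np.zeros(shape)
    np.add.at(total, (rows, cols), dist)
    np.add.at(count, (rows, cols), 1.0)
    mean = np.divide(total, count, out=np.zeros(shape), where=count > 0)

    peak = mean.max()
    scale = 255.0 / peak if peak > 0 else 0.0
    gray = np.round(mean * scale).astype(np.uint8)

    label = np.zeros(shape, dtype=np.uint8)
    if damaged is not None:
        damaged = np.asarray(damaged, dtype=bool)
        label[rows[damaged], cols[damaged]] = 255
    return mean, gray, label
```

Cells that contain no points are left at zero (black) in this sketch; whether such gaps should instead be interpolated depends on the point density and is not addressed here.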

Convolutional neural network

The features are extracted from the heightfield using the VGG19 DNN (Simonyan & Zisserman 2014). Afterwards, the local outlier factor (LOF) is calculated from the resulting feature maps.

The VGG19 DNN used here is a variant of the VGG network, which achieved very good results at the ImageNet Large Scale Visual Recognition Challenge in 2014. It consists of 19 weight layers, of which 16 are convolutional and 3 are fully connected. Because of these good results, the VGG19 DNN is used with weights pre-trained on the ImageNet data set (Deng et al. 2009). The weights are not adjusted. For the extension with LOF, the DNN is truncated after layer pool_4, where the feature maps are available. Small sections or “tiles” of the original depth image are used as input to the DNN, so that one output per tile is obtained. The tile size is a variable parameter of the method, and the tiles overlap by 50%.
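The tiling can be sketched independently of the network. In the example below, `extractor` is a placeholder for any callable that maps a tile to a feature vector. In this work that role is played by the truncated, pre-trained VGG19, which is not reproduced here. The L2 normalization is an assumption, since the exact normalization of the features is not specified.

```python
import numpy as np

def iter_tiles(image, tile=32, overlap=0.5):
    """Yield ((row, col), patch) for square tiles that overlap by `overlap`."""
    step = max(1, int(round(tile * (1.0 - overlap))))
    rows, cols = image.shape
    for r in range(0, rows - tile + 1, step):
        for c in range(0, cols - tile + 1, step):
            yield (r, c), image[r:r + tile, c:c + tile]

def tile_features(image, extractor, tile=32, overlap=0.5, normalize=True):
    """Apply `extractor` to every tile and stack the feature vectors.

    Returns the tile positions and an (n_tiles, n_features) array.
    """
    positions, features = [], []
    for pos, patch in iter_tiles(image, tile, overlap):
        vec = np.asarray(extractor(patch), dtype=float).ravel()
        if normalize:
            norm = np.linalg.norm(vec)
            if norm > 0:
                vec = vec / norm  # assumption: L2 normalization
        positions.append(pos)
        features.append(vec)
    return positions, np.vstack(features)
```

A simple stand-in such as `lambda patch: [patch.mean(), patch.std()]` is enough to exercise the tiling logic before a real network is plugged in.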

The LOF is then calculated from these different feature maps for each tile. Each of the individual tiles is assigned its value. In the overlapping areas, the average value of the calculated LOF is determined. Using a threshold for the resulting LOF of the sections of the point cloud, the actual binary classification is carried out.
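The following sketch illustrates the scoring, the averaging in overlapping areas, and the thresholding. It implements the LOF of Breunig et al. (2000) directly in NumPy, simplified to exactly `k` neighbours per point. The sign convention is an assumption: the thresholds reported in the experiments are negative, which is consistent with thresholding the negated LOF (as, for example, in the `negative_outlier_factor_` attribute of scikit-learn), so the LOF is negated before comparison. All function names are hypothetical.

```python
import numpy as np

def local_outlier_factor(features, k=5):
    """LOF for each row of an (N, D) feature array (N must exceed k)."""
    sq = (features ** 2).sum(axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * features @ features.T
    dists = np.sqrt(np.maximum(d2, 0.0))
    np.fill_diagonal(dists, np.inf)             # a point is not its own neighbour
    nn = np.argsort(dists, axis=1)[:, :k]       # indices of the k nearest neighbours
    nn_dist = np.take_along_axis(dists, nn, axis=1)
    k_dist = nn_dist[:, -1]                     # distance to the k-th neighbour
    reach = np.maximum(nn_dist, k_dist[nn])     # reachability distances
    lrd = 1.0 / np.maximum(reach.mean(axis=1), 1e-12)  # local reachability density
    return lrd[nn].mean(axis=1) / lrd

def average_overlaps(shape, positions, scores, tile):
    """Write each tile score into its pixels and average where tiles overlap."""
    total = np.zeros(shape)
    count = np.zeros(shape)
    for (r, c), score in zip(positions, scores):
        total[r:r + tile, c:c + tile] += score
        count[r:r + tile, c:c + tile] += 1.0
    # Pixels not covered by any tile are marked as NaN.
    return np.divide(total, count, out=np.full(shape, np.nan), where=count > 0)

def classify(lof_map, threshold=-1.5):
    """Mark pixels as damaged where the negated LOF falls below the threshold."""
    return -lof_map < threshold
```

The pairwise distance matrix grows quadratically with the number of tiles, so for large heightfields a neighbour search structure would be preferable to this dense formulation.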

Data

In this work, we will use two data sets for analysis: one consisting of simulated sheet pile walls with randomly distributed damage and another consisting of terrestrially surveyed quay walls from the port in Lübeck, Germany. The simulated data set will be used to demonstrate the suitability of our chosen methodology, while the real data set will be used to confirm that our method can be applied in practice.

In Fig. 3, there is a point cloud that has been simulated to depict quay walls above the water’s surface. This point cloud has been divided into three categories: quay walls (coloured blue), concrete spalling (coloured green), and additional objects (coloured red). These categories can be seen in the label image that accompanies the point cloud. The distance between points in the point cloud can vary, but is generally about 1 cm. There is also some noise present, with a range of 1–2 mm. Figure 4 displays the classified point cloud along with its corresponding depth and label image.

Experiments

In the following section, the results of various experiments are presented. The experiments were conducted on both simulated and real data. There were a total of 500 simulated images and 395 created heightfield and label images used as the basis for the experiments. The purpose of the experiments is to determine whether the proposed method, which utilizes DNN and LOF, is effective at detecting damage on quay walls. There are two primary criteria that must be considered when assessing the success of damage detection. The first is precision, which refers to the proportion of damage regions identified by the network that are actually damaged in reality. The second is recall, which refers to the proportion of actual damage that is identified by the network. In addition to precision and recall, other measures such as accuracy (the percentage of correctly classified regions in the entire data set), the F1 score (the harmonic mean of precision and recall), and the Matthews correlation coefficient (MCC) are also reported. The MCC takes all four categories of the confusion matrix into account and ranges from −1 (strong misclassification) to +1 (excellent classification). A value of 0 indicates random classification (Chicco and Jurman 2020).
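The measures above follow directly from the four entries of the confusion matrix. A minimal sketch, with a hypothetical function name and with zero returned whenever a denominator vanishes:

```python
import math

def classification_metrics(tp, fp, fn, tn):
    """Compute precision, recall, accuracy, F1 and MCC from confusion counts."""
    def safe_div(num, den):
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    accuracy = safe_div(tp + tn, tp + fp + fn + tn)
    f1 = safe_div(2.0 * precision * recall, precision + recall)
    mcc = safe_div(
        tp * tn - fp * fn,
        math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)),
    )
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": accuracy,
        "f1": f1,
        "mcc": mcc,
    }

# Made-up counts for illustration only; they are not taken from Table 1 or 2.
example = classification_metrics(tp=8, fp=2, fn=2, tn=88)
```

With strongly imbalanced classes, accuracy is dominated by the many true negatives, which is why the F1 score and the MCC are the more informative measures here.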

Simulated data

The best results were obtained when using tile sizes of 32 × 32 pixels and normalized features as output from the DNN. Figure 5 shows the results for different threshold values. Using a threshold of −1.5, the confusion matrix in Table 1 was obtained, resulting in an accuracy of 99.9%, a precision of 96.3%, and a recall of 96.2%. This leads to an F1 score of 96.3%. The MCC was found to be 0.96, indicating a good classification. Figure 6 shows the results for two of the simulated images, with green rectangles indicating true positive (TP) classification, red indicating false negative (FN), and yellow indicating false positive (FP). Overall, the results using simulated data were very good, and the LOF appears to be effective for the automated classification of quay walls.

Real data

The method has shown promise in identifying potential damage areas, so the next step is to test it on a real data set using various tile sizes. The normalized features from the DNN are used as the output. The best result was achieved using a tile size of 32 × 32 pixels, as shown in Fig. 7.

A threshold of −1.55 was selected, and the resulting confusion matrix is shown in Table 2. At this threshold, the accuracy is 90.5%, precision is 72.2%, recall is 72.6%, and the F1 score is 72.4%. The MCC is 0.66, indicating good classification, although lower than for the simulated data.
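As a consistency check, the reported F1 score follows from the reported precision \(P\) and recall \(R\) (symbols introduced here for illustration):

\[{F}_{1}=\frac{2PR}{P+R}=\frac{2\cdot 0.722\cdot 0.726}{0.722+0.726}=\frac{1.048344}{1.448}\approx 0.724\]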

The classification result for two images is shown in Fig. 8. Green, red, and yellow again indicate TP, FN, and FP. Here, the original heightfield is again shown with higher contrast in the middle to make grey value differences in the heightfield more visible to the human eye.

Although the results were not as good as those obtained using simulated data, the method still appears to be effective when applied to real data.

The presented methodology is supposed to be used to automatically create a suspicion plan with suspected damage regions from point clouds. To be able to use the methodology in reality, all damage regions must be found as far as possible. In addition, only the damaged regions as such should be detected. This means that precision and recall together should be as high as possible. The methodology was first tested on simulated data and then applied to real data.

The method demonstrated strong performance on simulated data, with an F1 score of 96.3% and an MCC of 0.96. These results indicate that the method is suitable for generating suspicion maps of damage on quay walls, meeting the desired criteria for accuracy and completeness.

The results from the real data were worse than those from the simulated data, with an F1 score of 72.4% and an MCC of 0.66. There are several potential reasons for this discrepancy. One factor could be the presence of additional objects such as ladders, fenders, plants, and ropes in the real data, which can affect the measurements. Additionally, the data has not been cleaned or filtered, potentially leading to outliers and sensor artefacts that impact the results. The noise in the real data may also not be normally distributed and could contain systematic components, in contrast to the simulated data. Finally, we used a pre-existing CAD model for the simulated data to determine distances, while we created our own model for the real data. Despite these differences, the results still have value in terms of automated damage detection and digitally assisted building inspections.

Conclusion and outlook

Identifying damaged areas is important for maintaining aging port infrastructure. In this study, point clouds collected using multi-beam echo sounder and profile laser scanners were converted into depth images and processed using a pre-trained CNN with LOF extension. The automated method of creating heightfields from point clouds and then classifying them using a DNN in combination with LOF was found to be effective. The experiments yielded very good results for the simulated data, and while the classification of the real data was slightly worse, it was still sufficient. Overall, the method is useful for detecting damage and can be applied to improve inspection procedures. In the future, it may be possible to improve classification by more effectively removing additional objects from the data. Another area of focus for future research will be combining 3D point clouds and colour images to detect small cracks and non-geometric damage such as rust or efflorescence, as well as developing more advanced and knowledge-based data cleansing techniques.

References

Abati D, Porrello A, Calderara S, Cucchiara R (2019) Latent space autoregression for novelty detection. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, pp 481–490. https://doi.org/10.1109/CVPR.2019.00057

Andrews J, Tanay T, Morton EJ, Griffin LD (2016) Transfer representation-learning for anomaly detection. In: Proceedings of the 33rd International Conference on Machine Learning. JMLR, New York

Basharat A, Gritai A, Shah M (2008) Learning object motion patterns for anomaly detection and improved object detection. In: 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, pp 1–8. https://doi.org/10.1109/CVPR.2008.4587510

Breunig MM, Kriegel HP, Ng RT, Sander J (2000) LOF: identifying density-based local outliers. SIGMOD Rec 29(2):93–104. https://doi.org/10.1145/335191.335388

Carvalho T, de Rezende ERS, Alves MTP, Balieiro FKC, Sovat RB (2017) Exposing computer generated images by eye’s region classification via transfer learning of VGG19 CNN. In: 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, pp 866–870. https://doi.org/10.1109/ICMLA.2017.00-47

Chicco D, Jurman G (2020) The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom 21(1):1–13

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) ImageNet: A large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, pp 248–255. https://doi.org/10.1109/CVPR.2009.5206848

Drixler E (1993) Analyse der Form und Lage von Objekten im Raum (German). München, DGK Series C, Volume 409, Dissertation

Feng C, Zhang H, Wang S, Li Y, Wang H, Yan F (2019) Structural damage detection using deep convolutional neural network and transfer learning. KSCE J Civ Eng 23(10):4493–4502

García-Teodoro P, Díaz-Verdejo J, Maciá-Fernández G, Vázquez E (2009) Anomaly-based network intrusion detection: Techniques systems and challenges. Comput Secur 28:18–28

Gatys L, Ecker AS, Bethge M (2015) Texture synthesis using convolutional neural networks. In: Cortes C, Lawrence N, Lee D, Sugiyama M, Garnett R (eds) Advances in Neural Information Processing Systems, vol 28. Curran Associates, Inc. https://proceedings.neurips.cc/paper/2015/file/a5e00132373a7031000fd987a3c9f87b-Paper.pdf

Gebhardt J, Goldstein M, Shafait F, Dengel A (2013) Document authentication using printing technique features and unsupervised anomaly detection. In: 2013 12th International Conference on Document Analysis and Recognition, Washington, pp 479–483. https://doi.org/10.1109/ICDAR.2013.102

Goldstein M, Uchida S (2016) A comparative evaluation of unsupervised anomaly detection algorithms for multivariate data. Plos One 11:1–31. https://doi.org/10.1371/journal.pone.0152173

Gopalakrishnan K, Khaitan SK, Choudhary A, Agrawal A (2017) Deep convolutional neural networks with transfer learning for computer vision-based data-driven pavement distress detection. Constr Build Mater 157:322–330

Grubbs FE (1969) Procedures for detecting outlying observations in samples. Technometrics 11:1–21

Hadavandsiri Z, Lichti DD, Jahraus A, Jarron D (2019) Concrete preliminary damage inspection by classification of terrestrial laser scanner point clouds through systematic threshold definition. ISPRS Int J Geo-Inf 8:585. https://doi.org/10.3390/ijgi8120585

Hake F, Göttert L, Neumann I, Alkhatib H (2022) Using machine-learning for the damage detection of harbour structures. Remote Sens 14:2518. https://doi.org/10.3390/rs14112518

Hake F, Hermann M, Alkhatib H, Hesse C, Holste K, Umlauf G, Kermarrec G, Neumann I (2020) Damage detection for port infrastructure by means of machine-learning-algorithms. In: FIG Working Week 2020, Amsterdam. https://www.fig.net/resources/proceedings/fig_proceedings/fig2020/papers/ts08b/TS08B_hake_alkhatib_et_al_10441.pdf

Hesse C, Holste K, Neumann I, Hake F, Alkhatib H, Geist M, Knaack L, Scharr C (2019) 3D HydroMapper: Automatisierte 3D-Bauwerksaufnahme und Schadenserkennung unter Wasser für die Bauwerksinspektion und das Building Information Modelling. Hydrographische Nachr-J Appl Hydrography 113:26–29

Kalenjuk S, Lienhart W, Rebhan MJ (2021) Processing of mobile laser scanning data for large-scale deformation monitoring of anchored retaining structures along highways. Comput Aided Civ Inf 36:678–694. https://doi.org/10.1111/mice.12656

Li SH, Yen DC, Lu WH, Wang C (2012) Identifying the signs of fraudulent accounts using data mining techniques. Comput Hum Behav 28:1002–1013

Lin J, Keogh E, Fu A, Van Herle H (2005) Approximations to magic: finding unusual medical time series. In: 18th IEEE Symposium on Computer-Based Medical Systems (CBMS'05), Dublin, pp 329–334. https://doi.org/10.1109/CBMS.2005.34

Neuner H, Holst C, Kuhlmann H (2016) Overview on current modelling strategies of point clouds for deformation analysis. Allg Vermessungs-Nachr (AVN) 123:328–339

O’Byrne M, Schoefs F, Ghosh B, Pakrashi V (2013) Texture analysis based damage detection of ageing infrastructural elements. Computer-Aided Civil and Infrastructure Engineering 28:162–177. https://doi.org/10.1111/j.1467-8667.2012.00790.x

Sigholm J, Raciti M (2012) Best-effort Data Leakage Prevention in inter-organizational tactical MANETs. In: MILCOM 2012 - 2012 IEEE Military Communications Conference, Orlando, pp 1–7. https://doi.org/10.1109/MILCOM.2012.6415755

Simon M, Milz S, Amende K, Gross HM (2018) Complex-YOLO: an Euler-region-proposal for real-time 3D object detection on point clouds. In: European Conference on Computer Vision (ECCV) Workshops

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. Preprint at https://arxiv.org/abs/1409.1556

Skidmore AK (1989) A comparison of techniques for calculating gradient and aspect from a gridded digital elevation model. Int J Geogr Inf Syst 3:323–333

Tung SH, Weng MC, Shih MH (2013) Measuring the in situ deformation of retaining walls by the digital image correlation method. Eng Geol 166:116–126

Yosinski J, Clune J, Bengio Y, Lipson H (2014) How transferable are features in deep neural networks? In: Ghahramani Z, Welling M, Cortes C, Lawrence ND, Weinberger KQ (eds) Advances in Neural Information Processing Systems, vol 27. Curran Associates, Inc, pp 3320–3328

Zhang G, Vela PA, Brilakis I (2013) Detecting, fitting, and classifying surface primitives for infrastructure point cloud data. In: Computing in Civil Engineering, pp. 589–596. https://doi.org/10.1061/9780784413029.074

Acknowledgements

This work was carried out as part of the joint research project “3DHydroMapper–Bestandsdatenerfassung und modellgestützte Prüfung von Verkehrswasserbauwerken.” It consists of five partners and one associated partner: Hesse und Partner Ingenieure (multisensor system and kinematic laser scanning), WK Consult (structural inspection, BIM, and maintenance planning), Niedersachsen Ports (sea and inland port operation), Fraunhofer IGP (automatic modelling and BIM), Leibniz University Hannover (route planning and damage detection), and Wasserstraßen- und Schifffahrtsverwaltung des Bundes (management of federal waterways).

Funding

Open Access funding enabled and organized by Projekt DEAL. This research was funded by the German Federal Ministry of Transport and Digital Infrastructure, grant number 19H18011C.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hake, F., Lippmann, P., Alkhatib, H. et al. Automated damage detection for port structures using machine learning algorithms in heightfields. Appl Geomat 15, 349–357 (2023). https://doi.org/10.1007/s12518-023-00493-z
