Abstract

In recent years, rapid developments in social robotics have raised the prospect of applying social robots as persuasive robots to support behavior change. To guide related developments and pave the way for their adoption, it is important to understand the factors that influence the acceptance of social robots as persuasive agents. This study extends the technology acceptance model by including measures of social responses: trusting beliefs, compliance, liking, and psychological reactance. Using the Wizard of Oz method, a laboratory experiment was conducted to evaluate user acceptance of, and social responses towards, a social robot called SociBot. The robot was used as a persuasive agent that advised participants on decisions about donating to charities. Using the partial least squares method, results showed that trusting beliefs and liking towards the robot significantly add to the predictive power of the acceptance model of persuasive robots. However, due to the limitations of the study design, psychological reactance and compliance were not found to contribute to the prediction of persuasive robots’ acceptance. Implications for the development of persuasive robots are discussed.

1 Introduction

Social robotics is a domain that focuses on the design of robots for social communication with humans using verbal and non-verbal cues [1, 2]. Nowadays, social robots can be used to assist humans in daily life and might spend most of their time in the vicinity of humans, comparable to smartphones [3, 4]. The growing interest in social robotics makes it relevant to examine the potential of robots as persuasive technologies that can support people in changing their attitudes and behaviours, which for brevity we refer to as persuasive robots. Earlier studies demonstrated that social robots could play an important role in persuading people [5,6,7]. Persuasive robots have been employed in a broad range of applications, for instance as assistants to the elderly community [6] and as providers of recommendations in decision-making tasks such as choosing food [5] and movies [8].

Research in persuasive robots draws heavily from the study of persuasion in the context of human–human interaction. According to the Media Equation [9], people engage with non-living things such as robots just like they behave and respond to other people in real life. Thus, the interaction with robots is more in line with human–human interaction rather than human-technology interaction [10, 11]. Research in social robotics has elaborated on this theme, producing a wealth of knowledge regarding social responses to robotics. More recently, special attention has been paid to the social responses to persuasive robots. Related research informs us regarding social responses to persuasive robots such as engagement, psychological reactance, trust and liking [12,13,14].

Recent versions of the technology acceptance model (TAM) have shortcomings when used as an acceptance model for persuasive robots. Earlier research has claimed that interaction with robots differs from interaction with other technological artefacts like laptops or smartphones due to the robots’ embodiment and the explicitly designed social features in the interaction with humans [15, 16]. This claim leads to the question of how such social features and social responses to technologies can influence the acceptance of robots as persuasive agents. Bartneck et al. [17] argued that the biggest challenge in designing social robots is to ensure that people are willing to interact with and use these robots in everyday life.

Luoho et al. [18] defined technology acceptance as the way people come to adopt a specific technology for use. Based on the theory of reasoned action (TRA), Davis [19] designed the first technology acceptance model (original TAM) [20] in 1989 to explain people’s acceptance of information systems and technology adoption. The original TAM predicts individuals’ intention to use technology based on several key determinants such as perceived usefulness and attitude toward using [20]. Subsequently, emphasising social and cognitive factors such as subjective norms, demonstrability, voluntariness and experience as key determinants, TAM 2 [21] aimed to predict user adoption behaviour towards systems used in organisations over time. Later, the unified theory of acceptance and use of technology (UTAUT) [22] was introduced to explain users’ intentions to use a technology or information system and their subsequent usage behaviour. The key determinants in the UTAUT include performance and effort expectancies, social influence, and facilitating conditions. In 2008, TAM 3 [23] was developed to support decision making in organisations by combining the key determinants from TAM 2 and introducing new determinants for perceived ease of use such as perceived enjoyment and computer anxiety. Eventually, UTAUT 2 [24] was introduced by adding hedonic motivation, price value and habit as determinants of acceptance and use, especially when predicting consumer behaviour.

We argue that social responses that might be invoked by a robot in persuasive attempts are also key determinants for people to accept social technology. In this paper, we investigate the roles of social responses (e.g., compliance and reactance) in shaping positive experiences and inducing users’ satisfaction with persuasive interactions with a robot. On the one hand, we can expect that users may refuse to use a robot that annoys them. On the other hand, we can expect that users may be interested in touching a robot, or may show affective responses (such as high engagement) towards a robot that makes them happy. Related studies [13, 14, 25] demonstrated how social cues displayed by robots could influence the affective responses toward persuasive robots. Persuasive robots with minimal social cues, for example, evoked less reactance than persuasive robots with enhanced social cues in a decision-making game [26]. Siegel et al. [12] found that persuasive robots of the opposite gender to the user were rated as more trustworthy and engaging than same-gender robots in a donation task. Ham and Midden [27] provided evidence that people complied more with persuasive robots that provided negative feedback when promoting energy-saving behaviour than with the same robots providing positive feedback. However, these studies do not yet help us to understand whether social responses like reactance and compliance are key determinants for people to accept robots as persuasive agents.

This study investigates the acceptance of persuasive robots using all key determinants from the original TAM [20] and one key determinant from TAM 3, namely perceived enjoyment [23]. TAM is considered the most popular acceptance model and is widely used in several fields due to its parsimony and specificity in predicting acceptance for diverse populations of users, its strong theoretical base, and the substantial empirical support for its explanatory power [28, 29]. We used TAM rather than other acceptance models such as UTAUT as the basis for our model because UTAUT models [22, 24] require a large number of key determinants and moderators (e.g., up to forty-one variables to predict intentions) [30] to attain high reliability of prediction [31], and testing a model with that many determinants in turn requires a large sample size. To retain the simplicity of our proposed model, we selected only the key determinants of the original TAM [20] and one key determinant from TAM 3 [23], because several key determinants representing social responses are integrated into the model later. More importantly, most of the additional key determinants in TAM 2 [21], TAM 3 [23], and the UTAUT models [22, 24] (compared to the original TAM [20]) are not very relevant for the acceptance and use of persuasive robots. Measuring output quality and self-efficacy (examples of key determinants in TAM 3 [23]) is therefore outside our research interests. However, we included perceived enjoyment from TAM 3 [23] in our model since enjoyment is a type of social response. Similarly, key determinants proposed in other acceptance models, such as confirmation in the Confirmation-Expectation Model [32] and interactivity in Uses and Gratifications Theory [33], do not apply to our experimental context.

The original TAM [20] consists of several key determinants: perceived usefulness (Usefulness), perceived ease of use (Ease), attitude towards using (Attitude) and behavioral intentions (Intentions). As mentioned earlier, we also used perceived enjoyment (Enjoy), adopted from TAM 3 [23], as a key determinant in our model. Within the context of our experiment, Usefulness is defined as the degree to which people believe that the persuasive robot would be of assistance in making decisions [19]. Ease refers to the degree to which people believe that using the technology (i.e., the persuasive robot) would be free of effort [19]. Attitude covers the user’s feelings (evaluative affect) about the technology (in this study, the persuasive robot) [19], while Intentions refers to the strength of people’s intention to use the persuasive robot [19]. Enjoy can be defined as the pleasant feelings associated with the use of persuasive robots, apart from the (positive) performance consequences [34].

TAM has been extended to evaluate technology acceptance in other domains such as e-learning systems [35], smart in-store technology [36] and internet banking adoption [37]. To date, TAM has been applied to predict the acceptance of social robots [29] in frontline service [38], education [39] and healthcare [40]. Earlier research pointed out that social presence was one of the predictors in modelling the acceptance of robots in human–robot interaction [41]. Others, like Heerink et al. [42], claimed that social influence [22] and trust were the key features for the acceptance of assistive social agents by the elderly.

We propose to extend the existing TAM with the evaluation of social responses towards the robot by measuring users’ trust (trusting beliefs (Beliefs) and trusting behaviours (Compliance)), psychological reactance (Reactance), and liking (Liking). Scholars like McKnight et al. [43] explained that the concept of trust consists of several elements, including trusting beliefs and trusting behaviours. Vidotto et al. [44] elaborated on trusting beliefs as a modulator in inducing people to believe that the persuasive robot is a trustworthy advisor. Trusting behaviour is defined as “actions that demonstrate how a trustor relies upon a trustee, thereby avoiding any control” [43]. Compliance, in turn, is a key measure of successful persuasive attempts. When someone complies with an attempt, it can be seen in his/her behaviour that he/she acts in accordance with the advice given. In this study, we measured trusting behaviours using the compliance score, reflecting how many times the user complied with the order or advice given by the persuasive robot, as in the earlier study [14]. Liking describes the perception of the robot as friendly, kind and nice [45].

The concept of Reactance was introduced by Brehm [46] and elaborated in later studies [47, 48]. Psychological reactance can be triggered in persuasive attempts, especially when someone’s freedom in making decisions is limited. Reactance may be manifested in people’s behavior, causing them not to comply or even to do the opposite of what is requested by the persuader (in our case, the persuasive robot). Indeed, psychological reactance may also lead to irrational behaviors and thoughts aimed at re-establishing individual freedom [49, 50]. Earlier research shows that reactance can be assessed using the intertwined model of reactance, consisting of feelings of anger and negative cognitions [51], measured with questionnaires [47, 52, 53].

In this paper, we report an experiment that used SociBot as a persuasive robot in a decision-making game. The following sections describe the methods used and present the results of our study. The paper concludes with the implications of social responses for the development of TAM for persuasive robots.

1.1 The Current Study

Earlier works [54,55,56] reported both positive and negative responses to social cues in robots. Perhaps counter-intuitively, the studies by Ghazali et al. [14, 25, 57] suggested that persuasive robots were more effective when endowed with only minimal social cues such as eye-blinking rather than several cues at once, e.g., combining head movement, eye expression and intonation in the voice. Further, it has been shown that persuasive robots should be designed with likable social features such as a neutral (less expressive) face and facial characteristics that are known to evoke trust (see [58]). Additionally, the robot should mimic the human’s head movements and praise humans only at appropriate times during the interaction [59]. Such social cues contributed to positive social responses toward persuasive robots, namely low psychological reactance, high trusting beliefs, high trusting behaviours (compliance) and/or high liking. While such social responses have been demonstrated experimentally, it is not yet clear how important they are for whether people will be prepared to adopt social robots as persuasive agents. For example, we do not yet know whether people will be more likely to use, as a persuasive agent, a robot that they trust more, like more, and that evokes less reactance.

Using the social cues that were found to be positively perceived by humans in the earlier studies [14, 25, 57], this study mainly aims to extend the technology acceptance model (TAM) to account for the influence of social responses towards the persuasive robot. This study is designed to:

Obj1

Propose an explanation of the acceptance of persuasive robots using TAM.

We used the framework of TAM as a baseline in this study to explain the acceptance of persuasive robots. Earlier research (see [60]) in social robotics [40, 42, 61] utilized measurements from TAM to understand the acceptance of robots for daily usage. The key determinants taken from TAM are Usefulness, Ease, Attitude, and Intentions, which originate from the original TAM [20], and Enjoy from TAM 3 [23]. Based on the original TAM [19, 20], we expect that Usefulness is a determinant of Attitude and Intentions, Ease is a determinant of Attitude, and Attitude is a determinant of Intentions. Based on the prediction in TAM 3 [23], Enjoy is a determinant of Ease.

Obj2

Extend TAM to account for social responses to persuasive robots.

We suggest adding four key determinants to represent social responses within the persuasive robots acceptance model (PRAM) to increase the power of prediction for the persuasive robots’ acceptance. These key determinants are Compliance, Beliefs, Reactance, and Liking, for the reasons presented in the remainder of this section.

Although earlier studies, especially those on the acceptance of persuasive robots, showed no clear relation between Compliance and the key determinants of TAM, Kelman [62] highlighted that Compliance could be predicted by Attitude in the process of adopting induced behaviour, albeit in a different domain (desegregation in public schools). Additionally, the Theory of Reasoned Action (TRA) [63] claimed that motivation to comply (known as Compliance) is a predictor of subjective norms, and that subjective norms are a predictor of Intentions. We did not include subjective norms in our analysis, since we are not interested in investigating whether perceived social pressure influenced the way people behave. Having dropped subjective norms from our analysis, we expect that Compliance is a direct predictor of Intentions. Furthermore, in this paper we investigated whether social responses like Compliance are key determinants for people to accept robots as persuasive agents. To explore the role of Compliance in determining Attitude and Intentions, our first hypothesis in PRAM is that the higher a user’s Compliance, the more positive his or her Attitude and the stronger his or her Intentions [64] to use the system in the future.

Beliefs have been empirically established as a determinant of Usefulness and Attitude in earlier studies [65,66,67]. Also, previous research by Ghazali et al. [14] demonstrated a negative correlation between Beliefs and Reactance. That is, participants who have more trust in the robot will experience less reactance in following the advice given by the robot. Therefore, our second hypothesis predicts that higher Beliefs in the persuasive robot will cause people to think that the robot can provide the best advice in selecting charity organisations (Usefulness), causing them to comply more with the advice given (Compliance), with a more positive Attitude and less reactance (Reactance).

Although no study has directly investigated the effect of Liking on Intentions, earlier work [22] illustrated that Liking is an example of intrinsic motivation associated with technology usage based on Cognitive Evaluation Theory [68]. A more recent study by Rogers [69] showed that behavioural intentions (Intentions) in using mobile internet are influenced by intrinsic motivation. Thus, in our third hypothesis, we expect that Liking is one of the determinants of Intentions. Other researchers [70, 71] reported that Liking towards a persuasive robot is positively correlated with Beliefs and negatively correlated with Reactance. That is, participants who like the robot more will have more trust in it, experience less reactance in following the advice given, and have stronger intentions to use the robot again in the future. Earlier research [14] investigating psychological reactance showed that Liking fully mediates the effect of the facial characteristics of a social robot (an independent variable) on Beliefs and Reactance. Thus, it is anticipated that Liking influences the level of Beliefs and Reactance.

In a model of sustainable energy technology acceptance, Huijts et al. [72] highlighted that negative feelings like anger, fear and worry influence the level of Attitude towards using novel technologies. A similar concept of negative attitudes, psychological reactance (Reactance), was used in this study. Based on the implication of negative feelings for Attitude [72], we expect that participants who experience less reactance will have a more positive attitude towards using the robot (as they like the robot [14]). Thus, our fourth hypothesis predicts that Reactance determines Attitude in the technology acceptance model for the persuasive robot.

As described in an earlier study [73], people are expected to like (Liking) a persuasive robot more if it is easy to use than if it is cumbersome to use or even causes frustration. Thus, our fifth hypothesis predicts that Ease is a determinant of Liking towards the persuasive robot.

As proposed by the Cognitive Evaluation Theory [68], perceived enjoyment (Enjoy) is one of the intrinsic motivations next to Liking. Thus, similar to Liking, we expect that Enjoy is also a determinant of Intentions [69]. This expectation is in line with the flow theory [74]. Also, based on earlier research on the acceptance of instant messaging technology [75], which combined the theory of planned behavior [76], TAM [20], and flow theory [74], we propose that Enjoy is a determinant of Attitude and Intentions. Thus, in our sixth hypothesis, we expect that participants who enjoy using the robot more will like it more, have a more positive attitude towards using the robot, and have stronger intentions to use the robot again in the future.

In summary (refer to Fig. 1), when developing the PRAM, we hypothesized that:

- H1. Compliance.
  - H1(a). There is a significant difference in attitude towards using the robot between participants who comply more with the request made by the robot and those who comply less with the request made by the robot.
  - H1(b). There is a significant difference in intentions to use the robot again in the future between participants who comply more with the request made by the robot and those who comply less with the request made by the robot.
- H2. Trusting beliefs.
  - H2(a). There is a significant difference in reactance score between participants who have more trust in the robot and those who have less trust in the robot.
  - H2(b). There is a significant difference in perceived usefulness score between participants who have more trust in the robot and those who have less trust in the robot.
  - H2(c). There is a significant difference in attitude towards using the robot between participants who have more trust in the robot and those who have less trust in the robot.
  - H2(d). There is a significant difference in compliance score between participants who have more trust in the robot and those who have less trust in the robot.
- H3. Liking.
  - H3(a). There is a significant difference in trusting beliefs score between participants who like the robot more and those who like the robot less.
  - H3(b). There is a significant difference in reactance score between participants who like the robot more and those who like the robot less.
  - H3(c). There is a significant difference in intentions to use the robot again in the future between participants who like the robot more and those who like the robot less.
- H4. Psychological reactance.
  - H4(a). There is a significant difference in attitude towards using the robot between participants who experience less reactance and those who experience more reactance.
- H5. Ease of use.
  - H5(a). There is a significant difference in liking score between participants who find the robot easy to use and those who find the robot hard to use.
- H6. Enjoyment.
  - H6(a). There is a significant difference in liking score between participants who enjoy using the robot more and those who enjoy using the robot less.
  - H6(b). There is a significant difference in attitude towards using the robot between participants who enjoy using the robot more and those who enjoy using the robot less.
  - H6(c). There is a significant difference in intentions to use the robot again in the future between participants who enjoy using the robot more and those who enjoy using the robot less.
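The hypothesised structure can also be read as a set of directed paths between determinants. The following is a minimal sketch of that reading, not the model specification used in the study: the names `BASELINE_TAM`, `SOCIAL_PATHS` and `predictors_of` are hypothetical, and each pair simply restates a baseline TAM relation or one of the hypotheses H1 to H6 as (predictor, outcome).

```python
# Baseline TAM paths described under Obj1, as (predictor, outcome) pairs.
BASELINE_TAM = [
    ("Usefulness", "Attitude"),
    ("Usefulness", "Intentions"),
    ("Ease", "Attitude"),
    ("Attitude", "Intentions"),
    ("Enjoy", "Ease"),
]

# Additional paths hypothesised for PRAM, keyed by hypothesis label.
SOCIAL_PATHS = {
    "H1(a)": ("Compliance", "Attitude"),
    "H1(b)": ("Compliance", "Intentions"),
    "H2(a)": ("Beliefs", "Reactance"),
    "H2(b)": ("Beliefs", "Usefulness"),
    "H2(c)": ("Beliefs", "Attitude"),
    "H2(d)": ("Beliefs", "Compliance"),
    "H3(a)": ("Liking", "Beliefs"),
    "H3(b)": ("Liking", "Reactance"),
    "H3(c)": ("Liking", "Intentions"),
    "H4(a)": ("Reactance", "Attitude"),
    "H5(a)": ("Ease", "Liking"),
    "H6(a)": ("Enjoy", "Liking"),
    "H6(b)": ("Enjoy", "Attitude"),
    "H6(c)": ("Enjoy", "Intentions"),
}


def predictors_of(outcome):
    """Return the sorted names of all determinants hypothesised to predict `outcome`."""
    paths = BASELINE_TAM + list(SOCIAL_PATHS.values())
    return sorted({src for src, dst in paths if dst == outcome})
```

For instance, `predictors_of("Intentions")` lists the baseline predictors (Attitude, Usefulness) together with the hypothesised additions (Compliance, Enjoy, Liking).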

Obj3

Compare the predictive power in explaining the acceptance of the persuasive robots using a technology acceptance model and the PRAM.

Here, we aim to determine whether the prediction of behavioural intentions (Intentions) to use the persuasive robots is improved by the inclusion of social responses (Reactance, Beliefs, Compliance, and Liking).
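One common way to express such a comparison in PLS path modelling is through the change in explained variance of Intentions and Cohen's effect size. The sketch below is illustrative only: the text here does not state which statistic was used, and the symbols $R^2_{\mathrm{TAM}}$ and $R^2_{\mathrm{PRAM}}$ (the variance in Intentions explained by the baseline and the extended model, respectively) are notation assumed for this example.

```latex
\Delta R^2 = R^2_{\mathrm{PRAM}} - R^2_{\mathrm{TAM}},
\qquad
f^2 = \frac{R^2_{\mathrm{PRAM}} - R^2_{\mathrm{TAM}}}{1 - R^2_{\mathrm{PRAM}}}
```

By Cohen's conventional guidelines, $f^2$ values of about 0.02, 0.15 and 0.35 indicate small, medium and large effects of the added determinants.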

2 Methods and Materials

This study was carried out following the recommendations of the Code of Ethics of the NIP (Nederlands Instituut voor Psychologen—Dutch Institute for Psychologists) and the research group on Human-Technology Interaction at Eindhoven University of Technology. All subjects gave written informed consent following the Declaration of Helsinki. This study was reviewed and approved by the Human-Technology Interaction ethics board at the Eindhoven University of Technology.

2.1 Participants

Seventy-eight participants (41 males and 37 females) were recruited, with ages ranging between 19 and 52 (M = 26.949, SD = 6.524). The experiment lasted 45 min. As a token of appreciation, participants who were university students or staff received a €7.5 voucher, and external participants received an extra €2. Participants were required to meet the inclusion criteria: normal colour vision and fitness for a simple exercise. Participants were randomly selected from a local participant database with no restriction on age, gender, or nationality.

2.2 Persuasive Robot

As in the earlier experiments by Ghazali et al. [14, 25, 57], SociBot, a fully programmable robot developed by Engineered Arts Limited, was used as a persuasive agent. It is a half-body (from head to waist) humanoid robot which can display realistic back-projected faces with animated facial expressions, mouth movements and a fully articulated neck. This robot is capable of detecting and tracking human faces using a high-definition RGB camera embedded in its forehead and of maintaining eye contact by moving its eyes and head. It can also show emotional expressions through programmable face parameters and speech intonation. The robot can have different animated faces and is equipped with lip-synched speech output with high-quality audio.

In this experiment, the SociBot was given a facial image of a man with hazel eyes and a light brown skin tone, as used in the earlier studies [14, 25, 57], to minimise reactance towards the persuasive robot [14]. We used the Wizard of Oz (WoZ) method [77, 78] in this study to create a user-friendly human–robot interactive system [79]. Using this method, the advice given by the SociBot was controlled by the experimenter in a control area adjacent to the experiment locale. This method was used only to close the loop between the participant’s responses and the triggering of the robot’s responses. That is, the experimenter controlled the sequence of dialogues delivered by the SociBot based on the actions of the participants. For example, the SociBot acknowledged the decision made by the participant (e.g., ‘Thank you for your selection’) only after the participant showed the selected envelope in front of the robot’s camera (with two to four seconds of delay). All participants were debriefed about the true purpose of this study after finishing the experiment.

2.3 Task

SociBot was used as a robotic advisor to guide the participants in performing two activities. In the first activity, the participants were required to do a simple one-minute weight-shifting exercise. Instructed by the robot, the participants were asked to move their body left and right two times, for approximately three seconds on each side. A manual for this exercise was placed on the right-hand side of the experimental table. This first activity was designed to increase the participants’ awareness of the robot’s capability to mimic the participants’ head movements.

Persuasive attempts by the robot started only in the second activity, in which the participants were asked to make several donation decisions. Five €1 coins were placed in front of the participants, who had to allocate them to charity organisations. In five tasks, the participants were presented with different charity organisations in folders of five different colours. For example, in the first task, several animal charity organisations were presented in the red folder. The participants had to choose one of these animal charity organisations and give it €1.

Thus, in each of the five tasks, the participants chose one charity organisation to which they gave €1. In each task, the robot first introduced the charity organisations and then advised the participants on which of these should receive the €1. As emphasised by the experimenter and the robot, the participants could choose either to follow the robot’s advice (donate the €1 to the charity suggested) or to make their own selection by choosing one of the other charities listed (ignore the advice). Before the experiment started, the experimenter and the robot emphasised that there was no right or wrong answer in this donation task. The folder for each task contained envelopes representing the charity organisations. The participants selected a charity organisation by putting the €1 coin into that organisation’s envelope.

The participants were told that this experiment involved real money and real choices. That is, the five €1 coins donated by them across the five tasks would be paid afterwards to the selected charity organisations by the experimenter. This was done to increase the ecological validity of the choice behaviour and to ensure involvement by participants. The latter has been found to be important in earlier studies [25, 80]. To prevent participants from donating money based on their personal preferences, we kept the identity of the charity organisations as ambiguous as possible by putting only the initials of the charity organisations on the envelopes.

2.4 Procedure

Each participant was greeted upon arrival and sat on a chair facing the robot (see Fig. 2). A laptop was placed near the participant for filling in the pre- and post-experiment questionnaires. An Internet Protocol (IP) camera was attached near the robot to record the activities during the experimental session. This experiment consisted of three phases: (1) Introduction [10 min], (2) Experiment [20 min], and (3) Closing [15 min].

In the first phase, the participant read and signed a consent form. After that, the experimenter summarised the experimental procedure and demonstrated how to do the exercise and donate the money. The experimenter left the experimental room after asking the participant to fill in their demographic information using the laptop provided.

The second phase started when the robot introduced itself after detecting the participant’s face. During this phase, the participant was first asked to do the simple exercise explained earlier. At the end of this first activity, the robot praised the participant by saying ‘Good job!’ only if the participant had done the instructed exercise. Next, the robot introduced the first task of the donation activity, for the first category of charity organisation (e.g., animal charity organisations). The participant was asked to take a folder of a specific colour (e.g., the green folder) and look at the envelopes with the names of animal charity organisations inside that folder. After that, the robot provided advice to the participant using high controlling language, asking him/her to donate the €1 coin to a specific charity. After deciding to which charity he/she wished to donate the money, the participant was required to put the €1 coin in the selected charity’s envelope and show the envelope to the robot for the record. The robot praised the participant’s selection if the participant chose to donate the money to the charity suggested by the robot. Examples of social praise are ‘Thank you, it is a wise selection’ and ‘Nice, I like your choice.’ Otherwise, the robot acknowledged the selection by saying ‘I acknowledge your decision.’ High controlling language was used during the persuasive attempts to increase the chances of compliance, as demonstrated in the earlier study [57], e.g., ‘You have to select the <charity A> to donate the €1 for the animal charity organizations’ and ‘Definitely, you need to donate the €1 to the <charity A>’.

In the third phase of the experiment, after the participant had completed all donation activities, the robot asked the participant to fill in the questionnaire on the laptop provided. At the end of the experiment, the participant was debriefed orally by the experimenter and received a small monetary reward or research credits for the participation.

2.5 Measures

Each questionnaire was constructed based on the questions and methods used in earlier technology acceptance studies. The question items were taken from such earlier research (for details see below), while the wording of some questions had to be adapted to the technology under investigation here (persuasive robots). Specifically for the TAM constructs, the questionnaire used in this paper was mainly from the Almere model [42] which was developed to investigate the acceptance of social robots by the elderly (see also [29]). For social response constructs, the questionnaire was taken from the latest studies [14, 26] which relate to persuasion activity in human–robot interaction applications.

The phrasing of the questionnaires was adapted to the content of our study without changing the original content of the questions—see Table 1. Each measure that overlaps between the constructs was asked only once. The question items assessing the psychological constructs measured using our questionnaires showed high internal reliability (see high Cronbach’s α values reported below).

Unlike the other constructs, compliance was measured as the number of times participants changed their initial decision to comply with the agent’s advice. Participants had five choice moments during the experimental session. If the initial choice was the same as the final choice, the participant received no compliance point for that task. In contrast, if the initial and final choices differed, this showed that the participant had been persuaded by the advisor to change his/her choice, and 1 point was awarded for that task. For example, a participant who followed the social agent’s advice and changed his/her final choice as instructed for tasks 1, 3, and 4, and did not comply in the other two tasks, would receive a compliance score of 3.
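The scoring rule described above can be illustrated with a short sketch. It assumes that each participant's initial and final choices are stored as two lists of equal length (one entry per task); the function name and the example data are hypothetical and only mirror the worked example in the text.

```python
def compliance_score(initial_choices, final_choices):
    """Count the tasks in which the final choice differs from the initial one.

    Assumption: a changed choice is treated as compliance with the advice,
    as described in the text; unchanged choices earn no point.
    """
    if len(initial_choices) != len(final_choices):
        raise ValueError("Both lists must cover the same tasks.")
    return sum(
        1 for first, last in zip(initial_choices, final_choices) if first != last
    )


# Hypothetical participant: changes the choice in tasks 1, 3 and 4,
# and keeps the initial choice in tasks 2 and 5.
initial = ["A", "B", "A", "C", "B"]
final = ["B", "B", "C", "A", "B"]
print(compliance_score(initial, final))  # 3
```

With five choice moments, the score ranges from 0 (never changed) to 5 (changed in every task).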

We used SmartPLS version 3.2.7 to estimate the validity of the PRAM using the partial least squares (PLS) path modelling method [81]. Instead of evaluating the covariance of the variables (as in AMOS, Stata, etc.), SmartPLS uses variance values to identify the relationships between key determinants (known as latent variables in PLS terms) [82, 83]. SmartPLS was chosen because it is suitable for non-normally distributed data (some of our latent variables are skewed and show kurtosis: see Table 1) and for small sample sizes (fewer than 200). Additionally, SmartPLS handles a large number of indicators well [84].

3 Findings

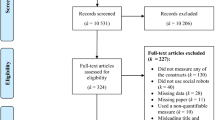

3.1 Preliminary Analysis

Preliminary analysis searched for outliers in the data and showed that there were none. The descriptive statistics for the latent variables used in this study are shown in Table 2.

According to George and Mallery [85], the acceptable range for skewness and kurtosis is ±1.96. Table 2 shows that Reactance, Ease, and Attitude deviated from a normal distribution.
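As an illustration of this normality screen, the following minimal sketch computes simple moment-based skewness and excess kurtosis and compares them with the ±1.96 range. The formulas are the plain population-moment versions, which is an assumption on our part: statistical packages often apply small-sample corrections, so their values can differ slightly. The function names and data are hypothetical.

```python
from statistics import fmean


def skewness_and_kurtosis(values):
    """Return (skewness, excess kurtosis) from population central moments."""
    n = len(values)
    mean = fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skew = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    return skew, excess_kurtosis


def within_range(values, limit=1.96):
    """True if both statistics fall inside the +/- limit range."""
    skew, kurt = skewness_and_kurtosis(values)
    return abs(skew) <= limit and abs(kurt) <= limit


# Hypothetical scores: a symmetric scale and a strongly right-skewed one.
symmetric_scores = [1, 2, 3, 4, 5]
skewed_scores = [1] * 19 + [5]
print(within_range(symmetric_scores), within_range(skewed_scores))
```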

To check for potential effects of participants’ age and gender on the constructs, two Multivariate Analysis of Variance (MANOVA) tests were conducted on Usefulness, Enjoy, Intentions, Beliefs, Liking and Compliance. Results showed (1) no significant effect of age (Footnote 2) and (2) no significant effect of gender (Footnote 3) on these dependent variables. For the non-normally distributed constructs, the Kruskal–Wallis H test showed no statistically significant effect of age (Footnote 4) or gender (Footnote 5) on Ease and Attitude. Additionally, the Kruskal–Wallis H test was conducted to check the main effects of participants’ age and gender on Reactance (feelings of anger and negative cognitions). As expected, we found (1) no significant effect of age, χ2(2) = 17.84, p = 0.72, and (2) no significant effect of gender, χ2(2) = 2.41, p = 0.12, on Reactance.

The 2-tailed Pearson correlation between the two components of Reactance, that is feelings of anger and negative cognitions, was 0.28, p = 0.02. In line with the proposed conceptualisation of reactance [47], our results showed that feelings of anger and negative cognitions were correlated. Results implied an overlap between the Reactance constructs. Thus, to test the hypotheses in the PRAM, the reactance score (Reactance) for each participant was calculated by averaging the participant’s score on feelings of anger and negative cognitions.

3.2 Objective 1

The first aim of this study was to verify that the technology acceptance model (TAM) can be employed to explain and predict the acceptance of persuasive robots. This model comprised five latent variables: Usefulness, Ease, Enjoy, Attitude and Intentions.

3.2.1 Psychometric Properties of the TAM Model

We ran a confirmatory factor analysis to observe the reliability and validity of the data by examining how well the measured observed variables represent the latent variables [86]. The analysis included measuring Average Variance Extracted (AVE), reliability (Cronbach’s α), Composite Reliability (CR), Discriminant Validity (DV), and collinearity [87].

3.2.1.1 Average Variance Extracted (AVE)

AVE reflects the amount of variance in the observed variables that is captured by their respective latent variable, relative to the amount due to measurement error [88]. To establish convergent validity, the AVE for each latent variable, which is the mean of the squared factor loadings, should be greater than 0.50 (AVE > 0.50) [83, 89]. Results showed that the AVE for all latent variables was higher than 0.50. Thus, convergent validity was established.
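A minimal sketch of this computation, taking AVE as the mean of the squared standardised factor loadings. The loadings below are hypothetical and are not values from this study.

```python
def average_variance_extracted(loadings):
    """AVE as the mean of the squared standardised factor loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


# Hypothetical loadings for one latent variable with three observed variables.
hypothetical_loadings = [0.80, 0.75, 0.70]
ave = average_variance_extracted(hypothetical_loadings)
print(round(ave, 3), ave > 0.50)  # 0.564 True
```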

3.2.1.2 Reliability

We conducted the reliability analysis by observing the internal consistency values (Cronbach’s α) and the overall reliability of the latent variables (CR), assessed from the standardised loading, error variance, and R2 value of each observed variable [88,89,90]. The values for both Cronbach’s α and CR should be equal to or greater than 0.70 to be considered adequate [89, 91]. Without eliminating any observed variables, the Cronbach’s α and CR for all latent variables were above 0.70.
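Both reliability indices can be sketched in a few lines. The code below uses the textbook formulas, Cronbach's α from item and total-score variances and CR from standardised loadings with error variance taken as 1 minus the squared loading; whether the software used in the study computes them in exactly this way is an assumption. All data and names are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """items: one list of scores per observed variable, same respondents in each."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # total score per respondent
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def composite_reliability(loadings):
    """CR from standardised loadings; error variance assumed to be 1 - loading**2."""
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - loading ** 2 for loading in loadings)
    return squared_sum / (squared_sum + error_sum)


# Hypothetical responses of five participants to three items of one construct.
items = [[1, 2, 3, 4, 5], [2, 3, 4, 5, 6], [1, 3, 3, 5, 5]]
alpha = cronbach_alpha(items)
cr = composite_reliability([0.80, 0.75, 0.70])
print(alpha >= 0.70, cr >= 0.70)
```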

3.2.1.3 Discriminant Validity

We used discriminant validity as an indicator to ensure that all latent variables are independent of one another [92]. That is, the factorial loads of the observed variables on their own latent variable must be greater than their loads on the other latent variables [88]. According to the Fornell–Larcker criterion [89], the validity of the measurement model can be assessed using the AVE and CR. Applying this criterion, we confirmed the discriminant validity of our data.
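The Fornell–Larcker criterion is commonly stated as follows: the square root of each latent variable's AVE should exceed its correlations with every other latent variable. A minimal sketch of that check is given below; the AVE values and the correlation are hypothetical, and the dictionary layout is only one possible way to organise the inputs.

```python
from math import sqrt


def fornell_larcker_ok(ave, correlations):
    """ave: construct -> AVE; correlations: (construct_a, construct_b) -> r.

    Returns True when sqrt(AVE) of both constructs in every pair exceeds
    the absolute correlation between them.
    """
    for (first, second), r in correlations.items():
        if abs(r) >= sqrt(ave[first]) or abs(r) >= sqrt(ave[second]):
            return False
    return True


# Hypothetical values, not taken from the study.
ave = {"Usefulness": 0.60, "Ease": 0.55}
print(fornell_larcker_ok(ave, {("Usefulness", "Ease"): 0.50}))  # True
print(fornell_larcker_ok(ave, {("Usefulness", "Ease"): 0.80}))  # False
```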

3.2.1.4 Collinearity Statistics (VIF)

Collinearity of the latent variables was examined using variance inflation factors (VIF). Ringle et al. [81] stated that the VIF should not exceed 5.00 to avoid multicollinearity issues. The VIF values for our data were well below this limit, with all values lower than 2.00.
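For reference, the VIF of a predictor is defined as 1 / (1 − R2), where R2 comes from regressing that predictor on the remaining predictors. The sketch below only applies this definition to a given R2; the function name and the example values are hypothetical.

```python
def variance_inflation_factor(r_squared):
    """VIF for a predictor whose regression on the other predictors yields r_squared."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("r_squared must lie in [0, 1).")
    return 1.0 / (1.0 - r_squared)


# Hypothetical R2 values: 0.50 gives a VIF of 2, 0.80 reaches the limit of 5.
for r2 in (0.0, 0.50, 0.80):
    print(r2, round(variance_inflation_factor(r2), 2))
```

Read this way, a VIF below 2.00 means that less than half of a predictor's variance is shared with the other predictors.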

3.2.2 Evaluation of the TAM Model

The acceptance of persuasive robots using TAM was tested by examining the significance level (t test) [93] using bootstrapping [94] with 1000 subsamples (as recommended by Hair et al. [95]). The f2 value (Cohen’s indicator) was used to reflect the effect size of each predictor in explaining the predicted variable [96]. Hair et al. [95] suggested that f2 values of 0.02, 0.15, and 0.35 indicate small, medium, and large effects, respectively.
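As a worked illustration, f2 is usually computed from the R2 of the predicted variable with and without the predictor of interest, f2 = (R2 included − R2 excluded) / (1 − R2 included), and then compared with the thresholds above. The sketch below follows that formula; treating each threshold as an inclusive lower bound is our assumption, and the function names are hypothetical.

```python
def cohens_f2(r2_included, r2_excluded):
    """Effect size of one predictor from R2 with and without that predictor."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def effect_label(f2):
    """Map f2 to a label using the 0.02 / 0.15 / 0.35 thresholds.

    Assumption: each threshold is an inclusive lower bound.
    """
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "none"


# Hypothetical R2 values: dropping the predictor lowers R2 from 0.60 to 0.50.
f2 = cohens_f2(0.60, 0.50)
print(round(f2, 2), effect_label(f2))  # 0.25 medium
```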

In our results, we found a medium effect of Ease in predicting Attitude (f2 = 0.21) and Usefulness (f2 = 0.22), and of Enjoy in predicting Ease (f2 = 0.30). Results also showed a large effect of Attitude in predicting Intentions (f2 = 1.36) and of Usefulness in predicting Attitude (f2 = 0.43). Results presented in Fig. 3 show that almost all paths (except for the prediction of Intentions by Usefulness) were statistically significant. The path coefficient for each prediction was also observed in this analysis.

For a global view of the TAM, results demonstrated a satisfactory R2 of 0.52 for Attitude, and high R2 of 0.73 for Intentions [42].

Consistent with the non-significant path from Usefulness to Intentions, no effect (f2 = 0.02) was found between these latent variables. We used regression analysis to investigate whether Attitude mediates the effect of Usefulness on Intentions. First, this analysis showed that Usefulness was a significant predictor of Intentions (B = 0.68, SD = 0.96), t = 6.24, F(1, 76) = 38.93 (path c). Second, we checked for a positive relationship between Usefulness and Attitude. Results confirmed that Usefulness was a significant predictor of Attitude (B = 0.62, SD = 0.80), t = 6.92, F(1, 76) = 47.90 (path a). Third, we checked whether the suspected mediator (Attitude) affects the outcome (Intentions). Indeed, Attitude was a significant predictor of Intentions (B = 0.85, SD = 0.63), t = 13.86, F(1, 76) = 191.99 (path b). Finally, this analysis showed that the effect of Usefulness on Intentions became non-significant when Attitude was taken into account in the regression analysis (B = 0.09, SD = 0.80), t = 1.17, F(2, 75) = 97.14 (path c’). These results supported the hypothesis that Attitude was a full mediator of the relationship between Usefulness and Intentions.
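The four regression steps above follow a common stepwise logic for testing mediation. A minimal sketch of the decision rule is shown below, assuming each path has already been tested for significance elsewhere; the function name and the boolean inputs are hypothetical and are not part of the original analysis.

```python
def classify_mediation(c_significant, a_significant, b_significant,
                       c_prime_significant):
    """Classify mediation from the significance of the four paths.

    c: predictor -> outcome; a: predictor -> mediator;
    b: mediator -> outcome; c': predictor -> outcome, controlling for the mediator.
    """
    if not (c_significant and a_significant and b_significant):
        return "no mediation established"
    if c_prime_significant:
        return "partial mediation"
    return "full mediation"


# Pattern reported in the text: paths c, a and b significant, path c' not.
print(classify_mediation(True, True, True, False))  # full mediation
```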

3.3 Objective 2

The second goal of this study was to describe the acceptance of persuasive robots using a proposed model called the Persuasive Robots Acceptance Model (PRAM), which incorporates the technology acceptance models (original TAM [20] and TAM3 [23]) and social response factors. The PRAM consisted of nine latent variables. Five latent variables stemmed from the TAM: Usefulness, Ease, Enjoy, Attitude and Intentions. To these we added four latent variables representing social responses (Beliefs, Compliance, Reactance, and Liking).

3.3.1 Psychometric Properties of the PRAM

Similar steps as in the earlier section were taken in running the confirmatory factor analysis.

3.3.1.1 Average Variance Extracted (AVE)

Almost all latent variables, except Liking, presented an AVE higher than 0.50. To ensure convergent validity, two observed variables of Liking with factorial loads below 0.50 (confident and informed) were removed from the analysis. As a result, the AVE for Liking increased to 0.48, which was still below the satisfactory threshold. Eliminating two more observed variables of Liking with factorial loads below 0.60 (interesting and competent) raised the AVE to 0.54. After these removals, the AVE of every latent variable was higher than 0.50.

3.3.1.2 Reliability

The internal consistency values (Cronbach’s α) of all latent variables ranged from 0.72 to 0.94 and were satisfactory (above the 0.70 threshold). Results indicated that composite reliability was not an issue.

3.3.1.3 Discriminant Validity (DV)

Using the Fornell–Larcker criterion [89], we observed that the observed variables of Beliefs correlated more highly with Liking than with Beliefs. Therefore, the two observed variables of Liking (interesting and honest) with the smallest differences in cross-loadings were removed from the analysis, which confirmed discriminant validity.

3.3.1.4 Collinearity Statistics (VIF)

The PRAM did not have any multicollinearity issue: the inner VIF values ranged from 1.00 to 3.20.

3.3.2 Evaluation of the PRAM

Similar to the earlier model testing, the hypotheses for the PRAM were tested by examining the path coefficients and the significance level (t test) of the model [93] using bootstrapping [94] with 1000 subsamples (recommended by Hair et al. [95]). Results demonstrated that Compliance had no effect in predicting Attitude, Reactance had no effect in predicting Attitude, and Usefulness had no effect in predicting Intentions (from original TAM) with f2 smaller than 0.02. Other predictors had significant effects in predicting the respective predicted variables.

To design the final version of the PRAM for the acceptance of persuasive robots, the non-significant paths from the hypothesis testing were eliminated one by one, starting with the path that showed no effect. The changes in the p values of the other paths were observed after each path elimination. The non-significant paths from the TAM [20, 21] (as shown in Fig. 3) were retained in the final model to preserve the prediction by the original TAM [20, 21]. As a result, only three hypothesised paths for social responses were removed: prediction of Attitude by Compliance (H1a), prediction of Intentions by Compliance (H1b), and prediction of Attitude by Reactance (H4). The rest of the paths were statistically significant.

Predictions based on TAM showed that Usefulness was a predictor of Attitude (f2 = 0.08) with a small effect size but not a predictor of Intentions (f2 = 0.00). Ease was a predictor, with small effects, of Usefulness (f2 = 0.06) and Attitude (f2 = 0.10), and Enjoy predicted Ease with a medium effect (f2 = 0.27). Importantly, Attitude had a large effect in predicting Intentions (f2 = 0.75).

Hypothesis 1 was rejected: neither Attitude (f2 = 0.00) nor Intentions (f2 = 0.01) was predicted by Compliance. Reactance (f2 = 0.09), Usefulness (f2 = 1.12), Attitude (f2 = 0.02) and Compliance (f2 = 0.19) were significantly determined by Beliefs; therefore, Hypothesis 2 was accepted. Importantly, Beliefs had a large effect in predicting Usefulness, a medium effect in predicting Compliance, and a small effect in predicting Reactance and Attitude. Hypothesis 3 predicted that higher Liking leads to higher Beliefs and Intentions and to lower Reactance. All significant paths confirmed this hypothesis: Liking had a large effect in predicting Beliefs (f2 = 1.26) and small effects in the other predictions (f2 = 0.07 for Intentions and f2 = 0.03 for Reactance). Hypothesis 4 was rejected, as Reactance was not a predictor of Attitude (f2 = 0.01). Hypothesis 5 was accepted, with Ease predicting Liking with a medium effect size (f2 = 0.15). Hypothesis 6 was accepted: Enjoy was a predictor of Liking (f2 = 0.20) and Attitude (f2 = 0.15) with medium effect sizes, and of Intentions (f2 = 0.03) with a small effect size.

We also observed the path coefficient for each prediction in this analysis (refer to Fig. 4). More importantly, the PRAM showed an increase in the R2 values for Attitude and Intentions compared to the earlier TAM (the model without the social responses shown in Fig. 3). That is, the PRAM yielded a large R2 for Attitude (0.61) and a large R2 for Intentions (0.76) [42].

3.4 Objective 3

This study aimed to test whether the social responses add predictive power to the TAM specifically for the persuasive robot. Using the same method as in [97], we compared the R2 for Attitude and Intentions from the TAM (presented in Objective 1) and the PRAM (which includes the social responses, presented in Objective 2) by calculating the F-ratio and its significance. Results of these analyses confirmed that adding social responses as determinants to the TAM significantly increased the predictive power in explaining the acceptance of the persuasive robot (as demonstrated by the PRAM).

In evaluating the goodness of fit of the partial least squares (PLS) method for SEM, Henseler and Sarstedt [93] claimed that the global goodness of fit for PLS proposed by Tenenhaus et al. [98] did not represent a fit measure. Later on, Hair et al. [95] highlighted that there was no global goodness of fit in PLS. Thus, in this paper, we chose the R2 value (also known as the coefficient of determination) as the measure of the model’s predictive power in judging the quality of the PRAM [93]. The R2 for each endogenous variable was evaluated since it reflects the fit of the model in the context of regression analysis. If the model fits the data 100%, or in other words, the model explains all of the variation in the endogenous variable, then the R2 for that variable is equal to 1.00. The R2 value was used in an earlier study [99], and especially in human–robot interaction applications [97, 100], as a model-fit measure [101]. According to Cohen [96], R2 values of 0.02, 0.13 and 0.26 are considered small, medium and large effects, respectively, in the field of social and behavioural science.

As a result, the R2 for Attitude and Intentions increased with the inclusion of social responses, based on the overall prediction using SmartPLS. That is, the R2 for Attitude increased by 9.1% (TAM: 0.52; PRAM: 0.61). Likewise, the inclusion of social responses in the PRAM (R2 of 0.76) compared to the TAM (R2 of 0.73) increased the explained variance for Intentions by 2.8%. To examine the significance of these increments in R2, we conducted hierarchical multiple regressions using SPSS, since SmartPLS does not offer such a test. Hierarchical multiple regression analysis is a framework for model comparison rather than a statistical method. This analysis is effective in comparing multiple regression models by evaluating the changes in R2 and their significance. It determines whether the increment (or decrement) of the R2 value for the dependent variable (e.g., Attitude) is statistically significant after including a new set of independent variables (which we call model 2) in addition to the original set of independent variables (which we call model 1) [102, 103]. This analysis was conducted in earlier research by extending TAM [20] with the evaluation of robot characteristics in predicting robot acceptance [104] and by extending TRA [63] with a key determinant from TPB [105] in determining the intention to work with a social robot [106].

To compare the changes in Attitude between the TAM and the PRAM, we ran a hierarchical multiple regression with Attitude as the dependent variable, Usefulness and Ease as independent variables for the first model (based on TAM), and Beliefs and Enjoy as additional independent variables for the second model (based on PRAM). Results demonstrated that the addition of Beliefs and Enjoy (model 2) led to a statistically significant increment in R2 of 9.5%, F(2, 73) = 8.02, p < 0.001. The full model of Usefulness, Ease, Beliefs and Enjoy to predict Attitude was statistically significant, R2 = 0.58, F(4, 73) = 25.18, p < 0.001.

We ran another hierarchical multiple regression to determine whether the addition of Enjoy and Liking improved the prediction of Intentions (model 2) over and above Attitude and Usefulness alone (model 1). The addition of Enjoy and Liking to the prediction of Intentions (model 2) led to a statistically significant increment in R2 of 2.9%, F(2, 73) = 4.20, p < 0.05. The full model of Enjoy, Liking, Attitude and Usefulness to predict Intentions was statistically significant, R2 = 0.75, F(4, 73) = 54.81, p < 0.001.
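The F-ratio for an increment in R2 between two nested regression models is conventionally computed as F = ((R2 model 2 − R2 model 1) / df1) / ((1 − R2 model 2) / df2), with df1 equal to the number of added predictors and df2 = n − k2 − 1. The sketch below applies this textbook formula; the function name is hypothetical, n = 78 is inferred from the reported degrees of freedom, and because the inputs are the rounded values reported above, the outputs only approximate the reported F statistics.

```python
def r2_change_f(r2_model1, r2_model2, n, k1, k2):
    """F-ratio for the R2 increment when going from k1 to k2 predictors.

    n is the sample size; returns (F, df1, df2).
    """
    df1 = k2 - k1
    df2 = n - k2 - 1
    f_ratio = ((r2_model2 - r2_model1) / df1) / ((1.0 - r2_model2) / df2)
    return f_ratio, df1, df2


# Attitude: full-model R2 of 0.58 with an increment of 0.095 (rounded inputs).
print(r2_change_f(0.58 - 0.095, 0.58, n=78, k1=2, k2=4))
# Intentions: full-model R2 of 0.75 with an increment of 0.029 (rounded inputs).
print(r2_change_f(0.75 - 0.029, 0.75, n=78, k1=2, k2=4))
```

The degrees of freedom returned in both cases are (2, 73), matching those reported for the two hierarchical regressions.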

In summary, these hierarchical multiple regressions demonstrated clearly that social responses, namely Beliefs in predicting Attitude and Liking in predicting Intentions, enhanced the predictive power in explaining the acceptance of the persuasive robot, as demonstrated by the PRAM.

4 Discussion

This work enriches the body of research on TAM from the standpoint of user acceptance of social robots, which matters for ensuring that people are willing to interact with these robots and accept using them in everyday life. The first goal of the study was to empirically test the TAM in explaining the acceptance of persuasive robots. To achieve this goal, we employed five latent variables originating from the original TAM [20] and TAM3 [23], namely perceived usefulness, perceived ease of use, perceived enjoyment, attitude towards using and behavioural intentions. Based on self-reported measures, our results suggested that TAM demonstrates good predictive power in understanding the acceptance of persuasive robots, with a satisfactory R2 for attitude towards using and a high R2 for behavioural intentions [42]. Earlier research showed comparable R2 values for attitude towards using (R2 = 0.61 [107]) and for behavioural intentions (R2 = 0.63 [108], R2 = 0.53 [107]) in measuring the acceptance of social robots in separate studies. Among the constructs, perceived usefulness was the strongest predictor of attitude towards using (stronger than perceived ease of use) [109], whereas attitude towards using was the only predictor of behavioural intentions. While some earlier works in social robotics found perceived usefulness to be a significant predictor of behavioural intentions [39, 42], our results showed that perceived usefulness had no direct causal effect in predicting behavioural intentions. Further analysis showed that attitude towards using was a full mediator between perceived usefulness and behavioural intentions, as expected in the original conceptualisation of TAM [110]. This might be due to the large effect of attitude towards using in predicting behavioural intentions, which in turn diminished the power of perceived usefulness in predicting behavioural intentions. Mediation in TAM constructs was commonly found in earlier studies [111, 112] (e.g., [112] showed that beliefs about ease of use were a full mediator of the relationship between the level of education and beliefs about usefulness). Applied to our study, the mediation analysis suggests that when people perceived the persuasive robot as a useful advisor in selecting the charity organisations, they had a favourable attitude towards using the robot, which in turn influenced their intention to use the robot again in the future.

The questionnaire-based evaluation of social responses towards the persuasive robot shows promise for a better understanding of the acceptance of persuasive robots. By extending the TAM constructs used in the first objective, we expected to increase the power of the acceptance model by adding social responses in the PRAM (second objective). The social responses include trusting behaviour, trusting beliefs, psychological reactance and liking. As expected, trusting beliefs and liking fitted in the PRAM through their contributions to the increment of R2 for attitude towards using and behavioural intentions, respectively. Earlier research pointed out the role of trust in enhancing users’ acceptance of and intention to use technologies in general [65, 66], and social robots in particular [113, 114]. Importantly, trusting beliefs was the strongest predictor of perceived usefulness. People who trust the robot are likely to find it a useful advisor in selecting the best charity organisations for the donation task.

Additionally, Cialdini [70] highlighted liking as one of the principles (‘weapons’) of persuasion. As a part of intrinsic motivation, as suggested by Cognitive Evaluation Theory [68], liking can be increased by interacting with someone who pays us compliments and has some similarity to us [115]. Since the persuasive robot in this study used social cues that people like (as identified by previous research [14]), this helped the robot to persuade people while they experienced less reactance towards the persuasive attempts. Indirectly, liking added predictive power in explaining the behavioural intentions to use the robot if the participants had access to the robotic advisor again.

On the other hand, compliance did not determine the attitude towards using or the behavioural intentions to use the persuasive robot again in the future. This result was in line with earlier research [21, 23], which associated compliance with social influences and found no evidence that higher compliance led to a more favourable attitude towards using the technology [116]. This finding may be due to the task designed in this experiment. We asked the participants to donate the money to ambiguous charity organisations, which caused them to comply with the advice given by the robot even when they had less favourable attitudes towards using the robot. Similar to compliance, psychological reactance was not a predictor of attitude towards using the robot, even though psychological reactance was predicted by liking and trusting beliefs. The likeable social cues implemented on the persuasive robot could be the reason why the psychological reactance score was low. In this study, psychological reactance was skewed to the right (positive skewness) with a very low mean value, M = 1.02 (neutral = 3). This indicates that the persuasive attempts in this experiment did not trigger any significant feelings of anger or negative cognitions towards the robot.

One of the most important results from this study was the significant increment in the explained variance of attitude towards using and behavioural intentions through the inclusion of social responses, particularly trusting beliefs and liking, in the PRAM. By including perceived enjoyment and trusting beliefs as predictors of attitude towards using, the coefficient of determination for attitude towards using increased by 9.1% in the overall PRAM compared to the TAM. In turn, the additional prediction by perceived enjoyment and liking increased the coefficient of determination for behavioural intentions by 2.8% in the overall PRAM compared to the TAM.

4.1 Limitations and Future Work

This study provides insights into the significant role of social responses in predicting the acceptance of persuasive robots through the PRAM. In line with earlier research [65, 114], our model suggests that trusting beliefs should be a factor that predicts acceptance in any case where social interaction plays a role. However, we could not fully validate the proposed model, since the experimental task did not lead to sufficient variation in compliance and psychological reactance among participants. Replicating our design with an experimental task that evokes varying levels of compliance and psychological reactance would increase confidence in this model. This improvement might be made by changing the task; for example, instead of using ambiguous names for the charity organisations as in the current study, a future study might use the clear, real names of charity organisations. We expect that participants who have clear information about the organisations will have their own preferences about which charity to donate to, which may increase the variation in compliance and reactance scores. In addition, the experimental design and questionnaire measures employed in this study might be limited in their ability to establish causal effects. Changing the experimental design and using control checks (for example, adding questions as manipulation checks and covariates) might help to avoid uncontrolled influences on the latent variables. Further research may also consider enhancing the PRAM by including other social responses such as engagement and social attraction.

Notes

Main effect of age on (a) Usefulness, F(22, 55) = 1.11, p = 0.37, (b) Enjoy, F(22, 55) = 1.06, p = 0.41, (c) Intentions, F(22, 55) = 1.72, p = 0.054, (d) Beliefs, F(22, 55) = 1.22, p = 0.27 (e) Liking, F(22, 55) = 1.04, p = 0.43, (f) Compliance, F(22, 55) = 1.13, p = 0.35.

Main effect of gender on (a) Usefulness, F(22, 55) = 0.40, p = 0.53, (b) Enjoy, F(22, 55) = 0.32, p = 0.57, (c) Intentions, F(22, 55) = 0.32, p = 0.57, (d) Beliefs, F(22, 55) = 0.01, p = 0.93 (e) Liking, F(22, 55) = 0.72, p = 0.40, (f) Compliance, F(22, 55) = 0.02, p = 0.88.

Main effect of age on (a) Ease, χ2(2) = 21.68, p = 0.48 (b) Attitude, χ2(2) = 25.01, p = 0.30.

Main effect of gender on (a) Ease, χ2(2) = 0.24, p = 0.62 (b) Attitude, χ2(2) = 0.12, p = 0.89.

References

Dautenhahn K (1994) Trying to imitate-a step towards releasing robots from social isolation. In: From perception to action conference, 1994, Proceedings. IEEE, pp 290–301

Breazeal C (2003) Toward sociable robots. Robot Auton Syst 42(3–4):167–175

Eguchi A, Okada H (2018) Learning with social robots—the World Robot Summit’s approach. In: IEEE integrated STEM education conference (ISEC). IEEE, pp 53–56

Share P, Pender J (2018) Preparing for a robot future? Social professions, social robotics and the challenges ahead. Irish J Appl Soc Stud 18(1):4

Herse S, Vitale J, Ebrahimian D, Tonkin M, Ojha S, Sidra S, Johnston B, Phillips S, Gudi SLKC, Clark J (2018) Bon Appetit! Robot persuasion for food recommendation. In: Companion of the 2018 ACM/IEEE international conference on human–robot interaction. ACM, pp 125–126

Rincon JA, Costa A, Novais P, Julian V, Carrascosa C (2018) A new emotional robot assistant that facilitates human interaction and persuasion. Knowl Inf Syst 60(1):363–383

Salomons N, van der Linden M, Strohkorb Sebo S, Scassellati B (2018) Humans conform to robots: disambiguating trust, truth, and conformity. In: Proceedings of the 2018 ACM/IEEE international conference on human-robot interaction. ACM, pp 187–195

Rossi S, Staffa M, Tamburro A (2018) Socially assistive robot for providing recommendations: comparing a humanoid robot with a mobile application. Int J Soc Robot 10(2):265–278

Martin CD (1997) The media equation: how people treat computers, television and new media like real people and places. Spectrum 34(3):9–10

Heerink M, Krose B, Evers V, Wielinga B (2007) Observing conversational expressiveness of elderly users interacting with a robot and screen agent. In: IEEE international conference on rehabilitation robotics (ICORR 2007). IEEE, pp 751–756

Barakova E, De Haas M, Kuijpers W, Irigoyen N, Betancourt A (2018) Socially grounded game strategy enhances bonding and perceived smartness of a humanoid robot. Connect Sci 30(1):81–98

Siegel M, Breazeal C, Norton MI (2009) Persuasive robotics: the influence of robot gender on human behavior. In: IEEE/RSJ international conference on intelligent robots and systems (IROS 2009). IEEE, pp 2563–2568

Roubroeks M, Midden C, Ham J (2009) Does it make a difference who tells you what to do?: exploring the effect of social agency on psychological reactance. In: Proceedings of the 4th international conference on persuasive technology. ACM, p 15

Ghazali AS, Ham J, Barakova EI, Markopoulos P (2018) Effects of robot facial characteristics and gender in persuasive human-robot interaction. Front Robot AI 5:73

Lee KM, Park N, Song H (2005) Can a robot be perceived as a developing creature? Effects of a robot’s long-term cognitive developments on its social presence and people’s social responses toward it. Hum Commun Res 31(4):538–563

Young JE, Hawkins R, Sharlin E, Igarashi T (2009) Toward acceptable domestic robots: applying insights from social psychology. Int J Social Robot 1(1):95

Bartneck C, Nomura T, Kanda T, Suzuki T, Kennsuke K (2005) A cross-cultural study on attitudes towards robots. In: HCI international

Louho R, Kallioja M, Oittinen P (2006) Factors affecting the use of hybrid media applications. Graph Arts Finl 35(3):11–21

Davis FD (1989) Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q 13:319–340

Davis FD, Bagozzi RP, Warshaw PR (1989) User acceptance of computer technology: a comparison of two theoretical models. Manag Sci 35(8):982–1003

Venkatesh V, Davis FD (2000) A theoretical extension of the technology acceptance model: four longitudinal field studies. Manag Sci 46(2):186–204

Venkatesh V, Morris MG, Davis GB, Davis FD (2003) User acceptance of information technology: toward a unified view. MIS Q 27(3):425–478

Venkatesh V, Bala H (2008) Technology acceptance model 3 and a research agenda on interventions. Decis Sci 39(2):273–315

Venkatesh V, Thong JY, Xu X (2012) Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q 36:157–178

Ghazali AS, Ham J, Barakova E, Markopoulos P (2018) The influence of social cues in persuasive social robots on psychological reactance and compliance. Comput Hum Behav 87:58–65

Ghazali AS, Ham J, Barakova EI, Markopoulos P (2018) Poker face influence: persuasive robot with minimal social cues triggers less psychological reactance. In: The 27th IEEE international symposium on robot and human interactive communication (RO-MAN) 2018. IEEE

Ham J, Midden CJ (2014) A persuasive robot to stimulate energy conservation: the influence of positive and negative social feedback and task similarity on energy-consumption behavior. Int J Soc Robot 6(2):163–171

Yousafzai SY, Foxall GR, Pallister JG (2007) Technology acceptance: a meta-analysis of the TAM: part 1. J Model Manag 2(3):251–280

de Graaf MM, Ben Allouch S, van Dijk JA (2017) Why would I use this in my home? A model of domestic social robot acceptance. Hum Comput Interact 34(2):115–173

Bagozzi RP (2007) The legacy of the technology acceptance model and a proposal for a paradigm shift. J Assoc Inf Syst 8(4):3

Van Raaij EM, Schepers JJ (2008) The acceptance and use of a virtual learning environment in China. Comput Educ 50(3):838–852

Bhattacherjee A (2001) Understanding information systems continuance: an expectation-confirmation model. MIS Q 25(3):351–370

Ruggiero TE (2000) Uses and gratifications theory in the 21st century. Mass Commun Soc 3(1):3–37

Davis FD, Bagozzi RP, Warshaw PR (1992) Extrinsic and intrinsic motivation to use computers in the workplace. J Appl Soc Psychol 22(14):1111–1132

Tarhini A, Hone K, Liu X, Tarhini T (2017) Examining the moderating effect of individual-level cultural values on users’ acceptance of E-learning in developing countries: a structural equation modeling of an extended technology acceptance model. Interact Learn Environ 25(3):306–328

Kim H-Y, Lee JY, Mun JM, Johnson KK (2017) Consumer adoption of smart in-store technology: assessing the predictive value of attitude versus beliefs in the technology acceptance model. Int J Fash Design Technol Educ 10(1):26–36

Marakarkandy B, Yajnik N, Dasgupta C (2017) Enabling internet banking adoption: an empirical examination with an augmented technology acceptance model (TAM). J Enterp Inf Manag 30(2):263–294

Stock RM, Merkle M (2017) A service robot acceptance model: user acceptance of humanoid robots during service encounters. In: IEEE international conference on pervasive computing and communications workshops (PerCom Workshops 2017). IEEE, pp 339–344

Conti D, Di Nuovo S, Buono S, Di Nuovo A (2017) Robots in education and care of children with developmental disabilities: a study on acceptance by experienced and future professionals. Int J Soc Robot 9(1):51–62

Chen TL, Bhattacharjee T, Beer JM, Ting LH, Hackney ME, Rogers WA, Kemp CC (2017) Older adults’ acceptance of a robot for partner dance-based exercise. PLoS ONE 12(10):e0182736

Lombard M, Ditton T (1997) At the heart of it all: the concept of presence. J Comput Mediat Commun 3(2):JCM321

Heerink M, Kröse B, Evers V, Wielinga B (2010) Assessing acceptance of assistive social agent technology by older adults: the Almere model. Int J Soc Robot 2(4):361–375

McKnight DH, Cummings LL, Chervany NL (1998) Initial trust formation in new organizational relationships. Acad Manag Rev 23(3):473–490

Vidotto G, Massidda D, Noventa S, Vicentini M (2012) Trusting beliefs: a functional measurement study. Psicol Int J Methodol Exp Psychol 33(3):575–590

Mileounis A, Cuijpers RH, Barakova EI (2015) Creating robots with personality: the effect of personality on social intelligence. In: International work-conference on the interplay between natural and artificial computation. Springer, Cham, pp 119–132

Brehm JW (1966) A theory of psychological reactance. Academic Press

Dillard JP, Shen L (2005) On the nature of reactance and its role in persuasive health communication. Commun Monogr 72(2):144–168

Hockenberry SL, Billingham RE (1993) Psychological reactance and violence within dating relationships. Psychol Rep 73(3f):1203–1208

Quick BL, Stephenson MT (2007) The Reactance Restoration Scale (RRS): a measure of direct and indirect restoration. Commun Res Rep 24(2):131–138

Quick BL, Stephenson MT (2008) Examining the role of trait reactance and sensation seeking on perceived threat, state reactance, and reactance restoration. Hum Commun Res 34(3):448–476

Quick BL, Stephenson MT (2007) Further evidence that psychological reactance can be modeled as a combination of anger and negative cognitions. Commun Res 34(3):255–276

Miller CH, Lane LT, Deatrick LM, Young AM, Potts KA (2007) Psychological reactance and promotional health messages: the effects of controlling language, lexical concreteness, and the restoration of freedom. Hum Commun Res 33(2):219–240

Ghazali AS, Ham J, Barakova EI, Markopoulos P (2018) Poker face influence: persuasive robot with minimal social cues triggers less psychological reactance. In: 2018 27th IEEE international symposium on robot and human interactive communication (RO-MAN). IEEE, pp 940–946

Breazeal C, Faridi F, Adalgeirsson SO, Donahue TJ, Raghavan S, Shonkoff A (2018) Embodied dialog and embodied speech authoring tools for use with an expressive social robot. US Patent App. 15/812,223

Goble H, Edwards C (2018) A robot that communicates with vocal fillers has… Uhhh… greater social presence. Commun Res Rep 35(3):256–260

Admoni H, Scassellati B (2017) Social eye gaze in human-robot interaction: a review. J Hum Robot Interact 6(1):25–63

Ghazali AS, Ham J, Barakova EI, Markopoulos P (2017) Pardon the rude robot: social cues diminish reactance to high controlling language. In: 26th IEEE international symposium on robot and human interactive communication (RO-MAN), 2017. IEEE, pp 411–417

Todorov A, Oosterhof NN (2011) Modeling social perception of faces [social sciences]. IEEE Signal Process Mag 28(2):117–122

Kaptein M, Markopoulos P, de Ruyter B, Aarts E (2011) Two acts of social intelligence: the effects of mimicry and social praise on the evaluation of an artificial agent. AI & Soc 26(3):261–273

Marangunić N, Granić A (2015) Technology acceptance model: a literature review from 1986 to 2013. Univ Access Inf Soc 14(1):81–95

De Graaf MM, Allouch SB, Klamer T (2015) Sharing a life with Harvey: exploring the acceptance of and relationship-building with a social robot. Comput Hum Behav 43:1–14

Kelman HC (1958) Compliance, identification, and internalization: three processes of attitude change. J Confl Resolut 2(1):51–60

Fishbein M, Ajzen I (1975) Belief, attitude, intention, and behavior: an introduction to theory and research. Addison-Wesley, Reading

Warkentin M, Johnston AC, Shropshire J (2011) The influence of the informal social learning environment on information privacy policy compliance efficacy and intention. Eur J Inf Syst 20(3):267–284

Chauhan S (2015) Acceptance of mobile money by poor citizens of India: integrating trust into the technology acceptance model. Info 17(3):58–68

Pavlou PA (2003) Consumer acceptance of electronic commerce: integrating trust and risk with the technology acceptance model. Int J Electr Commer 7(3):101–134

Ha S, Stoel L (2009) Consumer e-shopping acceptance: antecedents in a technology acceptance model. J Bus Res 62(5):565–571

Deci EL, Ryan RM (1985) Cognitive evaluation theory. Intrinsic motivation and self-determination in human behavior. Springer, Berlin, pp 43–85

Kim H-W, Chan HC, Gupta S (2007) Value-based adoption of mobile internet: an empirical investigation. Decis Support Syst 43(1):111–126

Cialdini RB (2007) Influence: the psychology of persuasion. Collins, New York

Rains SA, Turner MM (2007) Psychological reactance and persuasive health communication: a test and extension of the intertwined model. Hum Commun Res 33(2):241–269

Huijts NM, Molin EJ, Steg L (2012) Psychological factors influencing sustainable energy technology acceptance: a review-based comprehensive framework. Renew Sustain Energy Rev 16(1):525–531

Bruner GC II, Kumar A (2005) Explaining consumer acceptance of handheld Internet devices. J Bus Res 58(5):553–558

Ghani JA (1995) Flow in human computer interactions: test of a model. Human factors in information systems: emerging theoretical bases. Ablex, Norwood, pp 291–311

Lu Y, Zhou T, Wang B (2009) Exploring Chinese users’ acceptance of instant messaging using the theory of planned behavior, the technology acceptance model, and the flow theory. Comput Hum Behav 25(1):29–39

Ajzen I (1991) The theory of planned behavior. Organ Behav Hum Decis Process 50(2):179–211

Kennedy A, Wilkes A, Elder L, Murray WS (1988) Dialogue with machines. Cognition 30(1):37–72

Grosz BJ (1977) The representation and use of focus in dialogue understanding. SRI International Menlo Park, Menlo Park, CA, United States

Dahlbäck N, Jönsson A, Ahrenberg L (1993) Wizard of Oz studies—why and how. Knowl Based Syst 6(4):258–266

Oreg S, Sverdlik N (2014) Source personality and persuasiveness: big five predispositions to being persuasive and the role of message involvement. J Pers 82(3):250–264

Ringle CM, Wende S, Becker J-M (2015) SmartPLS 3. Boenningstedt: SmartPLS GmbH, http://www.smartpls.com

Bagozzi RP, Yi Y (2012) Specification, evaluation, and interpretation of structural equation models. J Acad Mark Sci 40(1):8–34

Henseler J, Ringle CM, Sinkovics RR (2009) The use of partial least squares path modeling in international marketing. New challenges to international marketing. Emerald Group Publishing Limited, Bingley, pp 277–319

Hair JF, Sarstedt M, Ringle CM, Mena JA (2012) An assessment of the use of partial least squares structural equation modeling in marketing research. J Acad Mark Sci 40(3):414–433

George D (2011) SPSS for windows step by step: a simple study guide and reference, 17.0 update, 10/e. Pearson Education, India

Hair JF, Black WC, Babin BJ, Anderson RE, Tatham RL (1998) Multivariate data analysis, vol 3. Prentice Hall, Upper Saddle River

Bilgihan A (2016) Gen Y customer loyalty in online shopping: an integrated model of trust, user experience and branding. Comput Hum Behav 61:103–113

Da Silva D, Bido D, Ringle C (2014) Structural equation modeling with the SmartPLS. Brazilian Journal Of Marketing 13(2)

Fornell C, Larcker DF (1981) Evaluating structural equation models with unobservable variables and measurement error. J Mark Res 18(1):39–50

Bacon DR, Sauer PL, Young M (1995) Composite reliability in structural equations modeling. Educ Psychol Measur 55(3):394–406

Nunnally JC, Bernstein IH, Berge JM (1967) Psychometric theory, vol 226. McGraw-Hill, New York

Henseler J, Ringle CM, Sarstedt M (2015) A new criterion for assessing discriminant validity in variance-based structural equation modeling. J Acad Mark Sci 43(1):115–135

Henseler J, Sarstedt M (2013) Goodness-of-fit indices for partial least squares path modeling. Comput Stat 28(2):565–580

Chin WW (1998) The partial least squares approach to structural equation modeling. Modern Methods Bus Res 295(2):295–336

Hair JF Jr, Hult GTM, Ringle C, Sarstedt M (2016) A primer on partial least squares structural equation modeling (PLS-SEM). Sage Publications, Thousand Oaks

Cohen J (1988) Statistical power analysis for the behavioral sciences, 2nd edn. Erlbaum Associates, Hillsdale

Piçarra N, Giger J-C (2018) Predicting intention to work with social robots at anticipation stage: assessing the role of behavioral desire and anticipated emotions. Comput Hum Behav 86:129–146

Tenenhaus M, Amato S, Esposito Vinzi V (2004) A global goodness-of-fit index for PLS structural equation modelling. In: Proceedings of the XLII SIS scientific meeting, pp 739–742

Pal D, Triyason T, Funilkul S, Chutimaskul W (2018) Smart homes and quality of life for the elderly: perspective of competing models. IEEE Access 6:8109–8122

You S, Robert Jr LP (2018) Human–robot similarity and willingness to work with a robotic co-worker. In: Proceedings of the 2018 ACM/IEEE international conference on human-robot interaction. ACM, pp 251–260

Bollen KA, Long JS (1992) Tests for structural equation models: introduction. Sociol Methods Res 21(2):123–131

Teeroovengadum V, Heeraman N, Jugurnath B (2017) Examining the antecedents of ICT adoption in education using an Extended Technology Acceptance Model (TAM). Int J Educ Dev Inf Commun Technol 13(3):4–23

Ahmed F, Qin Y, Aduamoah M (2018) Employee readiness for acceptance of decision support systems as a new technology in E-business environments; A proposed research agenda. In: International conference on industrial technology and management (ICITM). IEEE, pp 209–212

Ezer N (2008) Is a robot an appliance, teammate, or friend? Age-related differences in expectations of and attitudes toward personal home-based robots. Georgia Institute of Technology

Ajzen I (1985) From intentions to actions: a theory of planned behavior. Action control. Springer, Berlin, pp 11–39

Giger J-C, Piçarra N (2017) Who wants to work with social robots? Using the theory of reasoned action and the theory of planned behavior to predict intention to work with social robots. In: Book of Proceedings, p 515

Park E, Del Pobil AP (2013) Users’ attitudes toward service robots in South Korea. Industr Robot Int J 40(1):77–87

Heerink M, Kröse B, Evers V, Wielinga B (2009) Measuring acceptance of an assistive social robot: a suggested toolkit. In: IEEE international symposium on robot and human interactive communication (RO-MAN 2009). IEEE, pp 528–533

Yu J, Ha I, Choi M, Rho J (2005) Extending the TAM for a t-commerce. Inf Manag 42(7):965–976

Venkatesh V (1999) Creation of favorable user perceptions: exploring the role of intrinsic motivation. MIS Q 23(2):239–260

Agarwal R, Prasad J (1999) Are individual differences germane to the acceptance of new information technologies? Decis Sci 30(2):361–391

Burton-Jones A, Hubona GS (2006) The mediation of external variables in the technology acceptance model. Inf Manag 43(6):706–717

McMurray J, Strudwick G, Forchuk C, Morse A, Lachance J, Baskaran A, Allison L, Booth R (2017) The importance of trust in the adoption and use of intelligent assistive technology by older adults to support aging in place: scoping review protocol. JMIR Res Protoc 6(11):e218

de Boer W, Åström J-M (2017) Robots of the future are coming, are you ready: a study investigating consumers’ acceptance of robotics. http://www.diva-portal.org/smash/record.jsf?pid=diva2%3A1134108&dswid=3697

Cialdini RB (2009) Influence: science and practice, vol 4. Pearson education, Boston

Wu B, Chen X (2017) Continuance intention to use MOOCs: integrating the technology acceptance model (TAM) and task technology fit (TTF) model. Comput Hum Behav 67:221–232

Acknowledgements

We wish to express our gratitude to the participants who took part in this study.

Funding

This study was funded by the Ministry of Higher Education Malaysia (Grant No. 10021053), International Islamic University Malaysia (Grant No. 10021053) and European Union's H2020 Research and Innovation program (Grant No. 644780).

Ethics declarations

Conflict of interest